Proxmox virtualization manager

Cloudless

A strange trend has surfaced in which everyone suddenly wants to use OpenStack, even if it is only to manage a few virtual machines (VMs). OpenStack projects that are launched without any other motivation typically disappear again very quickly, especially when the company realizes the overhead it is taking on with OpenStack.

Without a doubt, if you only want to manage a few VMs, you are significantly better off with a typical virtualization manager than with a tool designed to support the operation of a public cloud platform. Although classic VM managers are wallflowers compared with the popular cloud solutions, they still exist and are very successful. Red Hat Enterprise Virtualization (RHEV) enjoys a popularity similar to SUSE Linux Enterprise Server (SLES) 12, to which you can add extensions for high availability (HA) and which supports alternative storage solutions.

Another solution has been around for years: Proxmox Virtual Environment (VE) by Vienna-based Proxmox Server Solutions GmbH. Recently, Proxmox VE reached version 5.0. In this article, I look at what Proxmox can do, what applications it serves, and what you might pay for support.

KVM and LXC

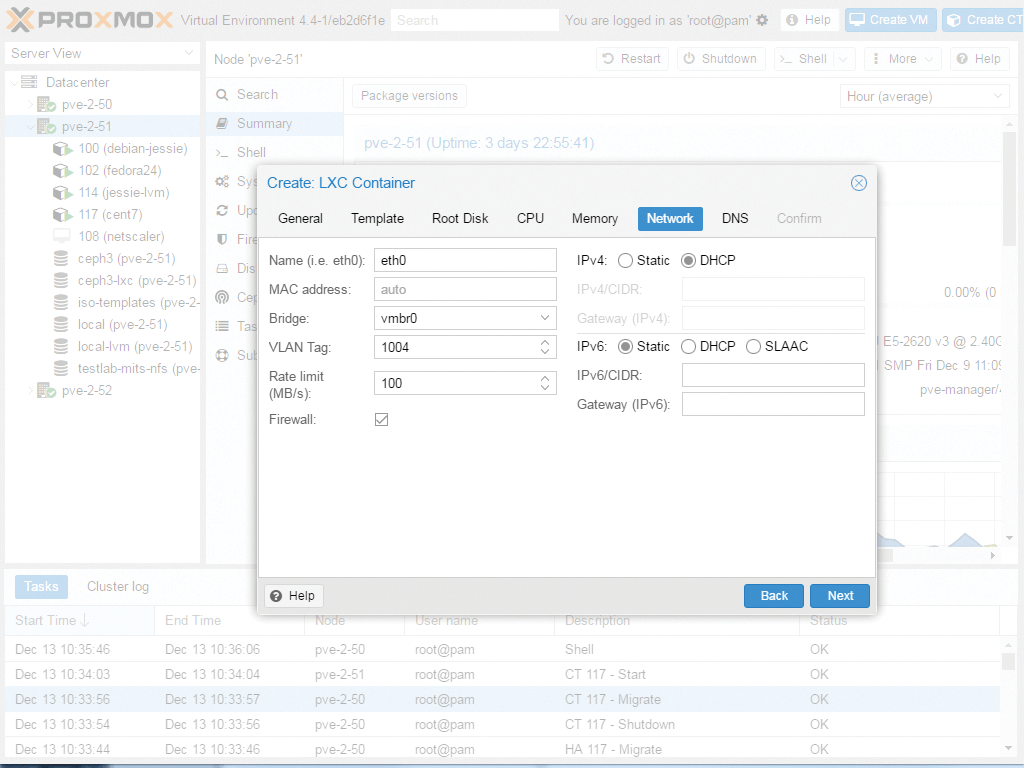

Proxmox VE sees itself as a genuine virtualization manager and not as a cloud in disguise. At the heart of the product, Proxmox combines two virtualization technologies from which you can choose: KVM, which is now the virtualization standard for Linux, and LXC, for the operation of lightweight containers. Proxmox thus gives you the choice of virtualizing the whole computer or relying on containers in which to run individual applications (Figure 1).

On the system level, a Proxmox-managed server does not initially differ from a system on which you manually roll out KVM or LXC instances. What sets Proxmox apart is an intermediate layer displayed to the user that supports the management of VMs and any attached storage from the top down and offers standardized interfaces with which you can determine your desired configuration from the bottom up.

This intermediate layer in Proxmox is very powerful: On request, it sets up redundancy for virtual systems or persistent storage, creates new VMs on demand, and always keeps you up to date with the current load. To achieve this goal, the developers have combined different tools.

The Proxmox API

The most important of these tools is the API, which goes by the slightly clumsy name pvedaemon in the Proxmox universe. It forms the central switching point in the entire setup. If you want to run an operation within the platform (e.g., start a VM or create a new volume on connected storage), this command ultimately ends up with one of the running API instances.

At this point, Proxmox is not much different from a cloud computing solution, where a central API is likewise the norm. However, unlike OpenStack, in which central API services answer for all hosts in the setup, each host in Proxmox VE runs its own API instance.

Nevertheless, you do not have to address a specific API when running commands. Because Proxmox has the ability to send the target host as part of a command, the pveproxy helper service, which runs on every host, is all you need. No matter to which proxy instance in the cluster the user sends a command, the command always initially ends up in the proxy of the respective host, and the host forwards it to the target, if it is not the target host itself.
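How the target host travels with the command can be illustrated with a few lines of Python. This is only a sketch: The hostnames are invented, and the path layout (`/api2/json/nodes/<node>/qemu/<vmid>/status/<action>` on port 8006) reflects my reading of the Proxmox API documentation, so check it against your installed version before relying on it.

```python
from urllib.parse import quote

API_PORT = 8006  # assumed default port of the Proxmox proxy service


def api_url(entry_host: str, target_node: str, vmid: int, action: str) -> str:
    """Build the URL for a VM power action.

    entry_host  - any cluster member whose proxy receives the request
    target_node - the node that actually runs the VM (part of the path)
    """
    return (
        f"https://{entry_host}:{API_PORT}/api2/json"
        f"/nodes/{quote(target_node)}/qemu/{vmid}/status/{quote(action)}"
    )


# The same VM on node "pve2", addressed through two different entry points
# (hypothetical hostnames):
print(api_url("pve1.example.com", "pve2", 100, "start"))
print(api_url("pve3.example.com", "pve2", 100, "start"))
```

Both URLs name the same target node in the path; only the host that initially accepts the request differs.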

The PVE status daemon, pvestatd, supports the Proxmox tools as they work. It regularly collects the running and available resources for each host and shares this information with all other nodes in the cluster. Each node thus has a complete picture of the resource situation at any point in time, which makes it far easier to, for example, discover the host on which an additional VM should be launched.
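Why a cluster-wide resource picture helps with placement is easy to show in a toy model. The following sketch is not Proxmox code; the `NodeStats` class, its fields, and the "most free memory wins" rule are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class NodeStats:
    """Hypothetical per-node record, as a status daemon might publish it."""
    name: str
    mem_total_mb: int
    mem_used_mb: int
    online: bool = True

    @property
    def mem_free_mb(self) -> int:
        return self.mem_total_mb - self.mem_used_mb


def pick_node(stats: Iterable[NodeStats], needed_mb: int) -> Optional[str]:
    """Return the online node with the most free memory that fits the VM."""
    candidates = [n for n in stats if n.online and n.mem_free_mb >= needed_mb]
    if not candidates:
        return None  # no node can take the VM
    return max(candidates, key=lambda n: n.mem_free_mb).name


cluster = [
    NodeStats("pve1", 65536, 60000),
    NodeStats("pve2", 65536, 20000),
    NodeStats("pve3", 65536, 10000, online=False),
]
print(pick_node(cluster, needed_mb=8192))
```

Because every node holds the same data, any node can answer the placement question without first polling its peers.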

High Availability

A significant difference between classic virtualization and the "anything can break at any time" mantra that was propagated for clouds is the way the setup responds to failures. In the cloud, either the software author or the administrator who operates the software is responsible. Together, they need to design and roll out the software so that the failure of an individual server, or any other infrastructure component important for operations, does not result in service failures.

Classical setups try to field failures by introducing measures at the infrastructure level. To do this, for example, the VM has to reside on redundant storage so that its data remains available, even if the previous computing nodes fail. Additionally, a cluster resource manager (CRM) has to ensure that the VM is restarted automatically on another node if the previous home node has crashed.

Because Proxmox sees itself as a classical virtualization solution, the developers have taken precisely this approach: The pve-ha-lrm, pve-ha-crm, and pve-cluster services establish a complete HA cluster that fields crashes of individual nodes. The local resource manager, pve-ha-lrm, executes on its own system the commands it receives from pve-ha-crm, the cluster-wide resource manager that builds on pve-cluster. If a node crashes, the CRM service first decides on which hosts to relaunch the VMs previously running on the crashed node; then, it sends the corresponding commands to the local resource managers (LRMs) there.
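The division of labor between the cluster-wide and the local resource managers can be sketched as a simple planning step. The function below is a toy model under my own assumptions (VMs are spread over the surviving nodes by current VM count); it does not reproduce the actual decision logic of the Proxmox HA stack.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def plan_recovery(
    placement: Dict[int, str], failed_node: str, online_nodes: Iterable[str]
) -> Dict[str, List[int]]:
    """Cluster-wide decision: map each surviving node to the VM IDs it should
    restart. A local resource manager would then execute its own list."""
    survivors = sorted(n for n in online_nodes if n != failed_node)
    if not survivors:
        raise RuntimeError("no surviving node to recover onto")

    # Current number of VMs per surviving node
    load = {n: sum(1 for host in placement.values() if host == n) for n in survivors}

    commands: Dict[str, List[int]] = defaultdict(list)
    orphaned = sorted(vmid for vmid, host in placement.items() if host == failed_node)
    for vmid in orphaned:
        target = min(survivors, key=lambda n: (load[n], n))  # least-loaded node
        commands[target].append(vmid)
        load[target] += 1
    return dict(commands)


placement = {100: "pve1", 101: "pve1", 102: "pve1", 103: "pve2", 104: "pve3"}
print(plan_recovery(placement, "pve1", ["pve1", "pve2", "pve3"]))
```

The returned dictionary corresponds to the commands the CRM hands to the individual LRMs: one list of VMs to start per surviving node.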

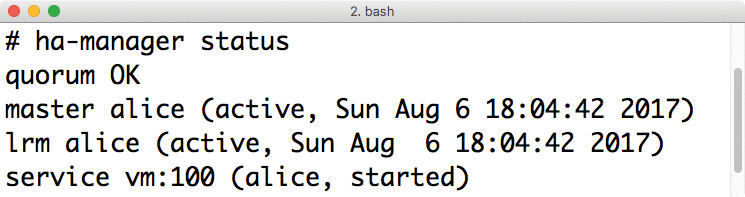

Although Proxmox relies on Corosync to enable communication between the cluster nodes, it does not use Pacemaker as the CRM, which is the norm in this combination. Instead, the developers have written their own cluster manager, ha-manager, which they have been using since Proxmox VE v4 (Figure 2).

The pve-cluster service includes even more: The team has also developed a database-based filesystem known as pmxcfs, which takes care of keeping configuration files in sync across the hosts of a setup. That was not always so easy in the cluster history of Linux. Solutions such as csync2 have regularly proved very inflexible in the past.

Classical automation tools don't help, either: Some of the files kept in sync across hosts change during operation, so regularly replacing them with a static template does not work. From today's perspective, it is questionable whether you really need to tackle this problem with your own, partially POSIX-compatible, FUSE-based filesystem. Today, both Consul and its competitor etcd offer comparable functionality without using their own filesystems. However, it is possible that the two services simply didn't exist when Proxmox started the development of pmxcfs.

Virtualization Functions

From the user's point of view, the comprehensive management framework underpinning Proxmox VE pays off: VMs – whether KVM or container based – can be launched using a standardized REST-like API defined in JSON. Proxmox VE supports different approaches on the network side: Basically, the network concept of the solution is based on network bridges, but you can also integrate software-defined networking (SDN) solutions such as Open vSwitch. IPv4 and IPv6 support for VMs is included.
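To give an impression of what such an API call carries, the following sketch assembles (but does not send) a request that creates a KVM guest attached to a network bridge. The parameter names (`vmid`, `name`, `memory`, `cores`, `net0`) and the path follow my understanding of the Proxmox API documentation; the node name, VM ID, and bridge name are invented, and authentication is left out entirely.

```python
import json
from typing import Any, Dict


def create_vm_request(
    node: str,
    vmid: int,
    name: str,
    memory_mb: int = 2048,
    cores: int = 2,
    bridge: str = "vmbr0",
) -> Dict[str, Any]:
    """Describe the API call for a new KVM guest as plain data."""
    return {
        "method": "POST",
        "path": f"/api2/json/nodes/{node}/qemu",
        "params": {
            "vmid": vmid,
            "name": name,
            "memory": memory_mb,
            "cores": cores,
            # virtio NIC plugged into a Linux bridge on the host
            "net0": f"virtio,bridge={bridge}",
        },
    }


print(json.dumps(create_vm_request("pve1", 120, "web01"), indent=2))
```

Keeping the request as data makes it easy to inspect before handing it to whatever HTTP client you prefer.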

On the storage side, Proxmox can handle typical cloud solutions. Local storage can be connected through logical volume management (LVM), the classical Linux filesystems (ext4 or XFS on top of LVM), or ZFS. If you want network storage instead, you will find many options: Fibre Channel, iSCSI, and NFS are all supported, as are distributed storage solutions such as Ceph and GlusterFS. (Proxmox can even install Ceph itself, if so desired.)

If you frequently have to juggle your VMs around, you will be happy to hear that live migration in Proxmox VE works. However, the VM should be on redundant storage, preferably on object storage such as Ceph.

If something goes wrong, the Proxmox developers have fortunately prepared for it: Backup and restore tools integrated directly into the solution let you restore VMs in an emergency, either from regular backups or from snapshots taken on the fly. You can also create a completely new backup by point and click in the GUI.

Of Templates and Cloning

Proxmox VE allows you to create and clone VM templates. Both functions are particularly popular in cloud computing: If a VM is based on a template, it can be rolled out far more quickly than would be possible for a manual installation. Essentially, a VM template is a prebuilt and generalized hard disk image that launches as a new VM at the push of a button. Parameters come from outside.

Templates are cloned at rollout; the vendor distinguishes between "linked clones" and "full clones." A linked clone remains tied to its template and does not work without it. Although this restricts flexibility, it also means that the VM does not require much storage space. With a full clone, Proxmox copies the entire template when the clone is created, and the VM then runs independently of its original template (Figure 3).
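The trade-off between the two clone types becomes clearer in a small model. The classes below are purely illustrative and say nothing about how Proxmox or the underlying storage implements clones: A disk is reduced to a dictionary of numbered blocks, and the linked clone stores only the blocks that differ from the template.

```python
class Template:
    """Read-only base image: block number -> content."""

    def __init__(self, blocks):
        self.blocks = dict(blocks)


class LinkedClone:
    """Stores only its own changes; unchanged blocks are read from the template."""

    def __init__(self, template):
        self.template = template  # the clone cannot work without it
        self.delta = {}

    def write(self, block, data):
        self.delta[block] = data

    def read(self, block):
        if block in self.delta:
            return self.delta[block]
        return self.template.blocks[block]

    def stored_blocks(self):
        return len(self.delta)


class FullClone:
    """Copies every block up front and is independent afterward."""

    def __init__(self, template):
        self.blocks = dict(template.blocks)

    def write(self, block, data):
        self.blocks[block] = data

    def read(self, block):
        return self.blocks[block]

    def stored_blocks(self):
        return len(self.blocks)


tpl = Template({0: "boot", 1: "os", 2: "data"})
linked, full = LinkedClone(tpl), FullClone(tpl)
linked.write(2, "my data")
full.write(2, "my data")
print(linked.stored_blocks(), full.stored_blocks())
```

After one write, the linked clone occupies a single block of its own, whereas the full clone occupies as many blocks as the template; in exchange, only the full clone survives the removal of the template.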

Access Options

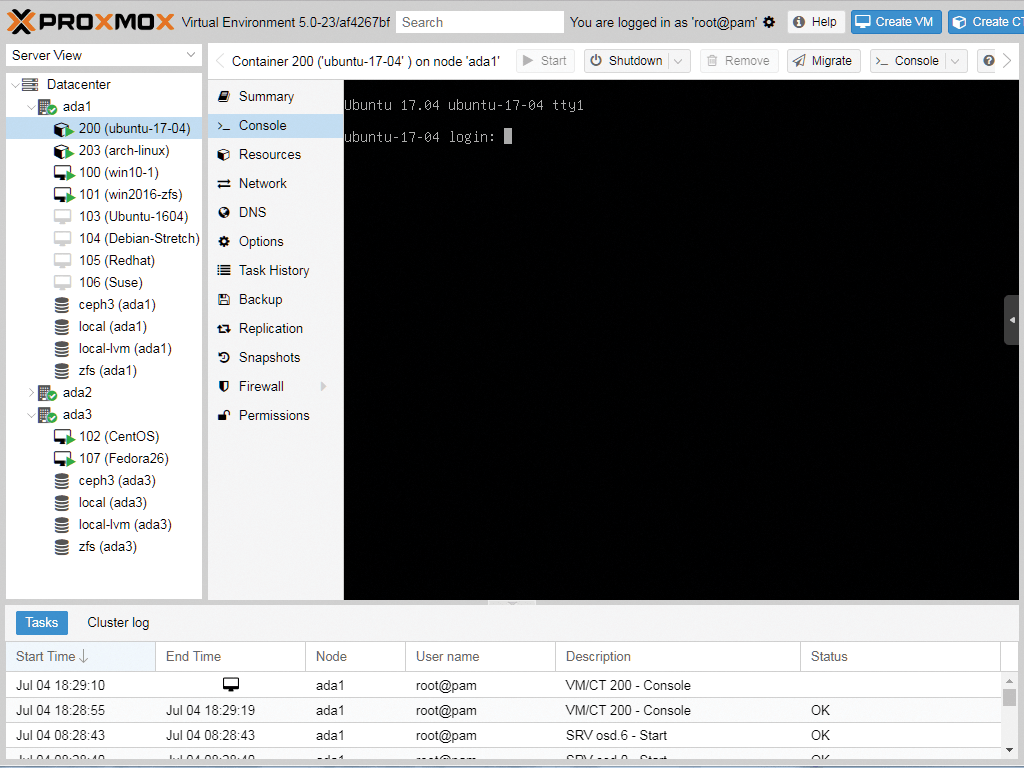

I already mentioned the extremely well-designed web GUI that comes with Proxmox VE. It can be reached via the web browser and can run on any host in the cluster, meaning that a separate management server is not needed. In the past few years, Proxmox has successively altered the visual appearance of the graphical interface, without losing sight of user friendliness.

Nor has functionality fallen by the wayside. In the left pane, you find a list of available hosts. If you click on one of these hosts, a list of its properties, including the option to edit them, appears in the right part of the window.

Rudimentary monitoring of system values such as CPU load and RAM utilization is also integrated. If so desired, you can look at the central logfiles (e.g., the Syslog). Proxmox VE also supports a virtual console login in the web GUI, which makes the GUI a key management tool that is in no way inferior to its counterparts from SUSE or Red Hat (Figure 4).

If you are experienced on the command line and can't really come to grips with the web GUI, Proxmox offers a truckload of command-line interface (CLI) tools as an alternative. Here, Proxmox VE fully leverages its open and standardized API: The CLI commands ultimately produce the same API calls as the web interface. Maintaining the CLI tools therefore means little overhead, and Proxmox is obviously keen to keep them to avoid annoying power users.

Comprehensive User Management

User management is a very important issue when it comes to virtualization solutions. Usually, more than one person needs access to the central management tool. For example, the accounting department wants to know regularly which clients make use of which service, and not every administrator should have all authorizations: If you need to create and manage VMs, you do not necessarily have to have the ability to create new users.

Proxmox takes this into account with comprehensive, role-based authorization management. A role is a collection of specific authorizations that can be combined at will. Rights management in Proxmox is granular: You can stipulate what each role can do right down to the level of individual commands.
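The principle of roles as bundles of authorizations can be expressed in a few lines. The following sketch is a simplified model, not the Proxmox implementation: The role names and users are invented, the privilege strings merely imitate the style Proxmox uses, and the rule that a grant on a path also covers everything below it is an assumption of this example.

```python
# Role -> set of privileges (role names are hypothetical)
ROLES = {
    "VMOperator": {"VM.Allocate", "VM.PowerMgmt", "VM.Console"},
    "Accounting": {"VM.Audit"},
    "UserAdmin": {"User.Modify"},
}

# Access control list: (object path, user, role)
ACL = [
    ("/vms", "alice", "VMOperator"),
    ("/vms", "bob", "Accounting"),
    ("/access", "carol", "UserAdmin"),
]


def is_allowed(user: str, path: str, privilege: str) -> bool:
    """True if some role granted to the user on the path (or a parent
    path) contains the requested privilege."""
    for acl_path, acl_user, role in ACL:
        if acl_user != user:
            continue
        covers = path == acl_path or path.startswith(acl_path.rstrip("/") + "/")
        if covers and privilege in ROLES[role]:
            return True
    return False


print(is_allowed("alice", "/vms/100", "VM.PowerMgmt"))  # VM admin may power-cycle
print(is_allowed("alice", "/access", "User.Modify"))    # but may not edit users
print(is_allowed("bob", "/vms/100", "VM.Audit"))        # accounting may only look
```

This mirrors the scenario from the text: The administrator who manages VMs cannot create users, and the accounting department can read but not change anything.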

Additionally, Proxmox VE can be connected to multiple sources to obtain user data. Pluggable authentication modules (PAM) are the classic choice on Linux; Proxmox also has its own user management, if needed. If you have a working LDAP directory or an Active Directory (AD) domain, either can be used as a source for accounts without changing anything in rights management.

Users from these sources can also be assigned roles. To make sure that nothing goes wrong at login, Proxmox also supports two-factor authentication: In addition to time-based one-time passwords, a key-fob-style YubiKey can also be used.
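For readers who have never looked inside a time-based one-time password, the generic algorithm from RFC 6238 fits in a dozen lines. This is a sketch of the standard itself with its common defaults (HMAC-SHA1, 30-second steps), not of the code Proxmox uses to verify such passwords.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    counter = unix_time // step                    # number of elapsed time steps
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                        # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# Test vector from RFC 6238, Appendix B: yields 94287082
print(totp(b"12345678901234567890", 59, digits=8))
```

Server and token compute the same value independently from a shared secret and the current time, which is why a stolen password alone is not enough to log in.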

Through its API, Proxmox also offers the ability to equip servers that are part of the cluster with firewall rules. The technology is based on Netfilter (i.e., iptables) and lets you enforce access rules on the individual hosts. Although Proxmox comes with a predefined set of rules, you can adapt and modify them at will.

Ceph Integration

One of the biggest challenges in VM operations has always been providing redundant storage; without it, high availability is unattainable. Different solutions are on the market, such as distributed replicated block device (DRBD), which is also manufactured by the Vienna-based Linbit, and which has made a name for itself in connection with two-node clusters in particular.

Proxmox has supported DRBD as a plugin for a while, but now the developers have shifted their attention to another solution: Ceph. This distributed object store, which has become well known in the OpenStack world, now has many fans outside the cloud, too. Proxmox fully integrates Ceph into Proxmox VE, which means more than just being able to use an existing Ceph cluster as external storage.

Proxmox VE also installs its own Ceph cluster, which can be managed with the usual Proxmox tools. Proxmox covers all stages of Ceph deployment: The monitoring servers, which enforce the cluster quorum in Ceph and provide all clients with information about the state of the cluster, can be rolled out on individual servers at the push of a button, and hard drives can just as easily be declared object storage devices (OSDs). The OSDs are where Ceph ultimately stores data, broken down into small segments.
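What "enforcing the quorum" means in practice comes down to simple majority arithmetic: The monitors can only make decisions as long as more than half of them can reach each other. The helper functions below are my own illustration of that rule, not part of Ceph or Proxmox.

```python
def has_quorum(total_monitors: int, reachable: int) -> bool:
    """A strict majority of all configured monitors must be reachable."""
    return reachable > total_monitors // 2


def tolerated_failures(total_monitors: int) -> int:
    """Number of monitors that may fail without losing the majority."""
    return (total_monitors - 1) // 2


for mons in (3, 4, 5):
    print(mons, "monitors tolerate", tolerated_failures(mons), "failure(s)")
```

The loop shows why odd monitor counts are the usual recommendation: A fourth monitor does not let the cluster survive more failures than three do.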

The type, scope, and quality that Proxmox offers with Ceph integration into the VE environment is remarkable: The cluster setup in the Proxmox GUI is easy to use and works well. If necessary, you can even adjust the individual parameters of the cluster (e.g., to improve performance).

Because the best storage is useless if the VMs don't actually store anything on it, Proxmox integrates Ceph RADOS block devices (RBDs). If you launch a VM, you can tell Proxmox that it has to be based on a new RBD volume. RBD can be selected as a storage volume, just like other storage types.

The simple fact that Proxmox suggests operating a hyperconverged setup causes frowns among users in smaller environments. "Hyperconverged" means that VMs run at the same time on the machines that provide hard drive space for Ceph.

Inktank, the company behind Ceph (and part of Red Hat since 2014), regularly advises against relying on such a setup: Ceph's controlled replication under scalable hashing (CRUSH) algorithm is resource hungry. If one node in a Ceph setup fails, restoring missing replicas generates heavy load on the other Ceph nodes and can have a negative effect on the virtual systems running on the same servers.

What's New in Version 5.0

Version 5.0 contains several important new features. If you want to import VMs from other hypervisor types, the process is now far easier than previously. The developers have also genuinely overhauled the base system: Proxmox VE is based on Debian GNU/Linux, and the new version 5.0 builds on the only recently released Debian 9. In contrast to stock Debian, however, Proxmox delivers its product with a modified 4.10 kernel.

As of Proxmox 5.0, the developers deliver their own Debian packages for Ceph. Until now, they had relied on the packages officially provided by Inktank.

The developers' main goal is to provide Proxmox customers with bug fixes more quickly than the official packages offered by Inktank. Only time will tell if they manage to do so: Ceph is not a small solution anymore, but a complex beast. It will undoubtedly be interesting to see how an external service provider without Ceph core developers manages to be faster than the manufacturer.

Either way, Ceph RBD will be the de facto standard for distributed storage in Proxmox VE 5.0. Plans for the near future include taking advantage of the upcoming Ceph LTS version 12.2, aka Luminous: The BlueStore back end for OSDs, which this release includes, is said to be significantly faster than the previous approach based on XFS. However, Inktank has not yet released the new version.

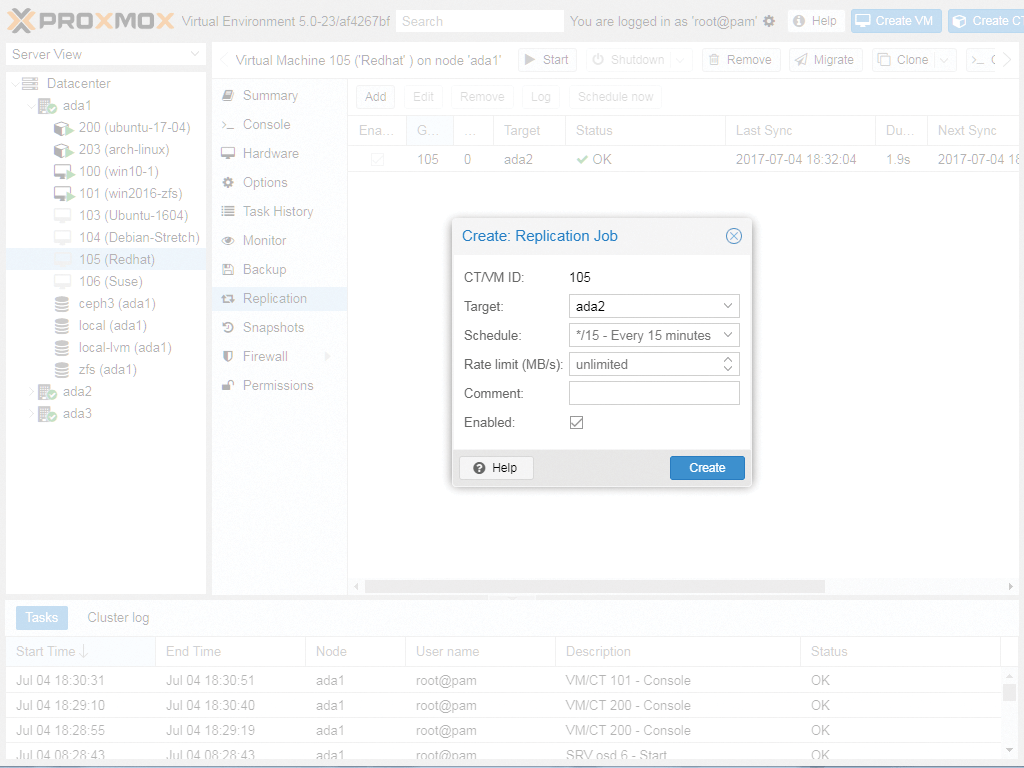

According to the developers, the most important new feature in Proxmox VE 5.0 by far is the new replication stack based on ZFS, which lets you create asynchronous replicas of storage volumes in Proxmox. In this context, Proxmox states that this procedure "minimizes" data loss in the event of malfunctions. The careful wording is warranted: As is usual with asynchronous replication, data written since the last replication run does not survive a failure of the source. If you want to use the feature, you can configure volume-based replication using the Proxmox GUI or at the command line (Figure 5).
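The size of that loss window is easy to reason about with a toy timeline. The sketch below assumes, purely for illustration, that replication runs complete instantly at fixed multiples of an interval (15 minutes here); real runs take time, and the interval on your system is whatever you configure.

```python
from typing import List


def last_sync_before(failure_time: int, interval: int) -> int:
    """Time of the most recent replication run at or before the failure."""
    return (failure_time // interval) * interval


def lost_writes(write_times: List[int], failure_time: int, interval: int) -> List[int]:
    """Writes made after the last replication run are missing on the replica."""
    synced_until = last_sync_before(failure_time, interval)
    return [t for t in write_times if synced_until < t <= failure_time]


INTERVAL = 15 * 60               # assumed replication interval in seconds
writes = [100, 850, 950, 1700]   # timestamps of writes on the source volume

# Source node fails 1750 seconds in; the last run happened at 900 seconds.
print(lost_writes(writes, failure_time=1750, interval=INTERVAL))
```

In the worst case, the failure hits just before the next run, so the replica lags by almost a full interval; shortening the interval shrinks the window but never closes it.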

How Much Does It Cost?

Proxmox calculates the price of its support products on the basis of CPU sockets. The Standard subscription, which includes access to the Proxmox Enterprise repository, 10 support tickets to the provider, a maximum response time of one business day, and remote support via SSH, costs about EUR33 (~$39) per CPU per month. For double that, you get unlimited support tickets; for EUR20 (~$24), you get three support tickets per year, but no SSH support. All prices are exclusive of VAT.
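Because prices are quoted per CPU socket and month, the annual bill for a cluster is best worked out explicitly. The short calculation below uses the rounded figures from the text; the three-node, dual-socket cluster is a made-up example.

```python
# Rounded monthly prices per CPU socket in EUR, as quoted above (excl. VAT)
PRICE_PER_CPU_MONTH = {
    "three tickets per year": 20,
    "Standard": 33,
    "unlimited tickets": 2 * 33,  # "double that"
}


def annual_cost(nodes: int, sockets_per_node: int, eur_per_cpu_month: int) -> int:
    """Yearly subscription cost for a cluster, excluding VAT."""
    return nodes * sockets_per_node * eur_per_cpu_month * 12


# Hypothetical cluster: three nodes with two sockets each
for level, price in PRICE_PER_CPU_MONTH.items():
    print(f"{level}: EUR{annual_cost(3, 2, price)} per year")
```

For this example cluster, the Standard subscription comes to 3 x 2 x 33 x 12 = EUR2,376 per year before VAT.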

If you don't want support from Proxmox, you can download the individual components from the project's GitHub repository and install them manually [1]. Proxmox offers a trial license [2] that includes all functions and is valid for 30 days, so you know what you are getting yourself into.

Conclusions

Proxmox VE 5.0 is a solid virtualization solution that does not need to hide behind Red Hat and SUSE. If you want to run a small setup, Proxmox VE is far better suited than a classic cloud solution such as OpenStack. The process used for HA of VMs is just as impressive as the attention to detail in many individual Proxmox features. Before resorting to RHEV or SLES, I strongly recommend a test with Proxmox.