Monitoring container clusters with Prometheus

Perfect Fit

Kubernetes [1] makes it much easier for admins to distribute container-based infrastructures. In principle, you no longer have to worry about where applications run or whether sufficient resources are available. However, if you want to ensure the best performance, you usually cannot avoid monitoring the applications, the containers in which they run, and Kubernetes itself.

You can read how Prometheus works in a previous ADMIN article [2]; here, I shed light on the collaboration between Prometheus and Kubernetes. Because of its service discovery, Prometheus independently retrieves information about the container platform, the running containers, services, and applications via the Kubernetes API. You do not have to change the Prometheus configuration when pods launch or die or when new nodes join the cluster: Prometheus detects all of this on its own.

Uplifting

In addition to the usual information, such as CPU usage, memory usage, and hard disk performance, the metrics of containers, pods, deployments, and running applications are of interest in a Kubernetes environment. In this article, I show you how to collect and visualize information about your Kubernetes installation with Prometheus and Grafana. A demo environment gives an impression of the insights Prometheus provides into a Kubernetes installation.

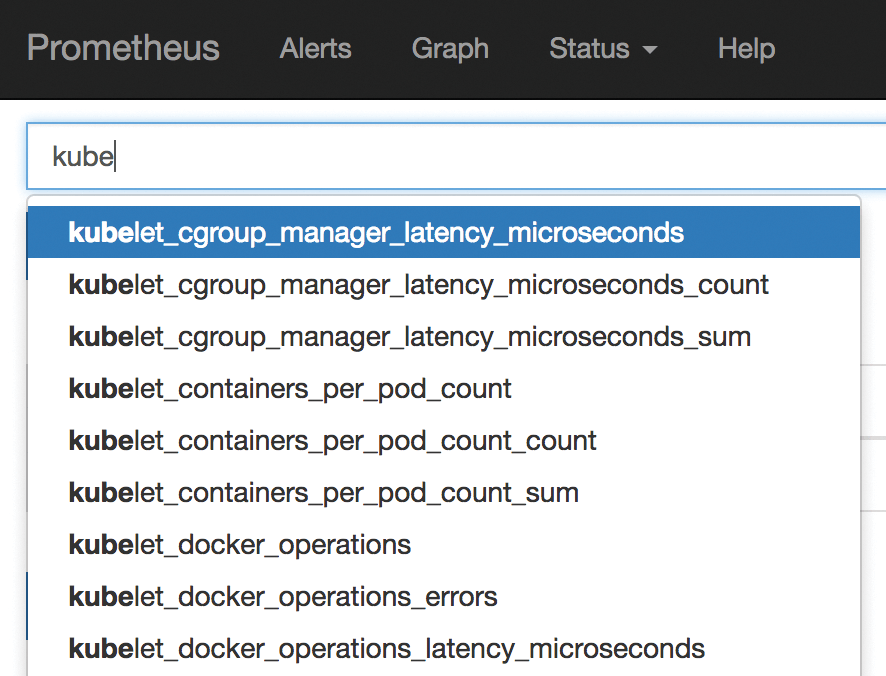

The Prometheus configuration is based on the official example [3]. For querying metrics from the Kubernetes API, the excerpt in Listing 1 is sufficient. Thanks to service discovery in Prometheus, many metrics can be retrieved this way, as shown in Figure 1.

Listing 1: 02-prometheus-configmap.yml (Extract 1)

```yaml
[...]
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/[...]/ca.crt
    insecure_skip_verify: true
  bearer_token_file: /var/run/[...]/token
[...]
```

Labeled

The biggest advantage of the interaction between Prometheus and Kubernetes has to be the support for labels. In Kubernetes, labels are the primary way to select and identify specific pods, services, and other objects. An important task for Prometheus, therefore, is to pick up and maintain these labels. Its service discovery stores this information temporarily in meta labels. With the use of relabeling rules, Prometheus converts the meta labels into regular Prometheus labels and discards the meta labels as soon as it has generated the monitoring targets.

A blog post [4] describes the relabeling process in detail. The rules could look something like Listing 2. In the end, Prometheus knows the labels that Kubernetes assigns to its nodes, applications, and services.

Listing 2: 02-prometheus-configmap.yml (Extract 2)

```yaml
[...]
- action: labelmap
  regex: __meta_kubernetes_service_label_(.+)
- source_labels: [__meta_kubernetes_namespace]
  action: replace
  target_label: kubernetes_namespace
- source_labels: [__meta_kubernetes_service_name]
  action: replace
  target_label: kubernetes_name
[...]
```
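To see what the rules in Listing 2 do with a single discovered target, the following Python sketch mimics the labelmap and replace actions on a dictionary of meta labels. It is a simplified model, not Prometheus's own code. It assumes fully anchored regexes (as in Prometheus) and the replace defaults, regex (.*) and replacement $1 (written \1 in Python). The target's label values are made up.

```python
import re


def labelmap(labels, regex, replacement=r"\1"):
    """action: labelmap - copy each label whose *name* matches regex to a new name."""
    result = dict(labels)
    for name, value in labels.items():
        match = re.fullmatch(regex, name)  # Prometheus anchors regexes on both ends
        if match:
            result[match.expand(replacement)] = value
    return result


def replace(labels, source_labels, target_label,
            regex="(.*)", replacement=r"\1", separator=";"):
    """action: replace - join the source values, match regex, write target_label."""
    joined = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, joined)
    result = dict(labels)
    if match:
        result[target_label] = match.expand(replacement)
    return result


def drop_meta_labels(labels):
    """Labels starting with __ are not kept on the stored series."""
    return {name: value for name, value in labels.items() if not name.startswith("__")}


# Hypothetical target as produced by Kubernetes service discovery
target = {
    "__address__": "172.17.0.5:9100",
    "__meta_kubernetes_namespace": "monitoring",
    "__meta_kubernetes_service_name": "node-exporter",
    "__meta_kubernetes_service_label_app": "node-exporter",
}

# The three rules from Listing 2, applied in order
labels = labelmap(target, r"__meta_kubernetes_service_label_(.+)")
labels = replace(labels, ["__meta_kubernetes_namespace"], "kubernetes_namespace")
labels = replace(labels, ["__meta_kubernetes_service_name"], "kubernetes_name")
result = drop_meta_labels(labels)
print(result)
# {'app': 'node-exporter', 'kubernetes_namespace': 'monitoring', 'kubernetes_name': 'node-exporter'}
```

In the real pipeline, Prometheus also adds the job label and derives the instance label from __address__, which this sketch leaves out.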

Prom Night

With the powerful PromQL [5] query language, you can define graphs or alerts based on these labels. If you define labels in Kubernetes as shown in Listing 3, a resulting Prometheus metric more or less inherits them:

```
my_app_metric{app="<myapp>",mylabel="<myvalue>",[...]}
```

Listing 3: Label Example

```yaml
metadata:
  labels:
    app: <myapp>
    mylabel: <myvalue>
```
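The following Python sketch illustrates how the Kubernetes labels from Listing 3 end up in a series identifier such as the one above. It assumes the labelmap rule from Listing 2 and models, in simplified form, one detail of the service discovery: Characters that are not allowed in Prometheus label names (anything other than letters, digits, and underscores) are replaced with underscores, so a Kubernetes label like app.kubernetes.io/name becomes app_kubernetes_io_name. The function names are hypothetical.

```python
import re

# Prometheus label names may only contain [a-zA-Z0-9_]
INVALID_LABEL_CHARS = re.compile(r"[^a-zA-Z0-9_]")


def sanitize_label_name(name):
    """Replace every character that is invalid in a Prometheus label name with _."""
    return INVALID_LABEL_CHARS.sub("_", name)


def escape_label_value(value):
    """Escape backslashes, double quotes, and newlines as in the text format."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def series_identifier(metric, k8s_labels):
    """Render metric{label="value",...} from a Kubernetes metadata.labels mapping."""
    pairs = sorted(
        (sanitize_label_name(k), escape_label_value(v)) for k, v in k8s_labels.items()
    )
    body = ",".join(f'{name}="{value}"' for name, value in pairs)
    return f"{metric}{{{body}}}"


print(series_identifier("my_app_metric", {"app": "myapp", "mylabel": "myvalue"}))
# my_app_metric{app="myapp",mylabel="myvalue"}
```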

Prometheus creates a separate time series for each unique combination of label values: Each additional label adds another dimension to my_app_metric, and each new value of that label results in another time series that Prometheus stores separately. The software can already cope with millions of time series, and version 2.0 [6] is intended to handle even more extreme Kubernetes environments with thousands of nodes.
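A quick way to see the effect of labels on storage is to count series. In the Python sketch below, each distinct label set in a hypothetical sample counts as one time series, and the product of the distinct values per label gives an upper bound on how many series the metric could produce. The pod and label names are invented for illustration.

```python
from math import prod


def actual_series(samples):
    """Each distinct combination of label values is one time series."""
    return len({frozenset(labels.items()) for labels in samples})


def max_series(samples):
    """Upper bound: multiply the number of distinct values of every label."""
    distinct = {}
    for labels in samples:
        for name, value in labels.items():
            distinct.setdefault(name, set()).add(value)
    return prod(len(values) for values in distinct.values())


# Hypothetical metric: three pods of two apps, each reporting three CPU modes
pods = {"web-1": "web", "web-2": "web", "db-1": "db"}
samples = [
    {"app": app, "pod": pod, "mode": mode}
    for pod, app in pods.items()
    for mode in ("idle", "user", "system")
]
print(actual_series(samples), max_series(samples))  # 9 18

# A further label with two values can double the number of series
samples_with_node = [dict(s, node=node) for s in samples for node in ("node-a", "node-b")]
print(actual_series(samples_with_node))  # 18
```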

Permanent or Volatile?

Before installing Prometheus, you should consider whether you want to install the software inside or outside the Kubernetes environment. An installation outside the cluster opens up more options for permanent data storage, and monitoring then also works independently of the monitored system.

However, an installation inside Kubernetes is far easier to integrate, in terms of both networking and authentication. Thanks to persistent volumes [7] or stateful sets [8], Kubernetes can also keep data permanently. If you operate additional external monitoring anyway, you will likely run Prometheus inside Kubernetes.

Tested

To illustrate the concepts outlined above, I will demonstrate how you can run your own small Kubernetes cluster with Prometheus monitoring based on Minikube [9]. Minikube offers the easiest way to test Kubernetes on your own computer, whether it runs Linux, OS X, or Windows (Table 1). If you want to follow the steps, you will find installation instructions online [10]. The minikube start command creates a new Kubernetes cluster; depending on the host system, Minikube also requires a hypervisor such as VirtualBox and the kubectl client to be in place.

Table 1: Useful Minikube Commands

| Command | Effect |
|---|---|
| [...] | Opens the Kubernetes dashboard in the browser. |
| [...] | Calls up the [...] |
| [...] | Outputs the URL for the [...] |

Complete listings of the extracts shown in the article are available online [11], in particular the YAML files with the Kubernetes definitions (*.yml). Unpack them in a working directory so that you can later send them to Kubernetes with kubectl. Kubernetes stores the content of the YAML files internally and creates the corresponding objects, such as namespaces, deployments, or services.

Because the following steps target Minikube, I omit advanced topics that can also be used with Prometheus, such as persistent storage and role-based access control (RBAC) [12], introduced in Kubernetes 1.6.

Name Tag

Kubernetes uses namespaces to isolate the resources of individual users or groups of users from one another on a physical cluster. For the sample project, create the monitoring namespace:

kubectl create -f 01-monitoring-namespace.yml

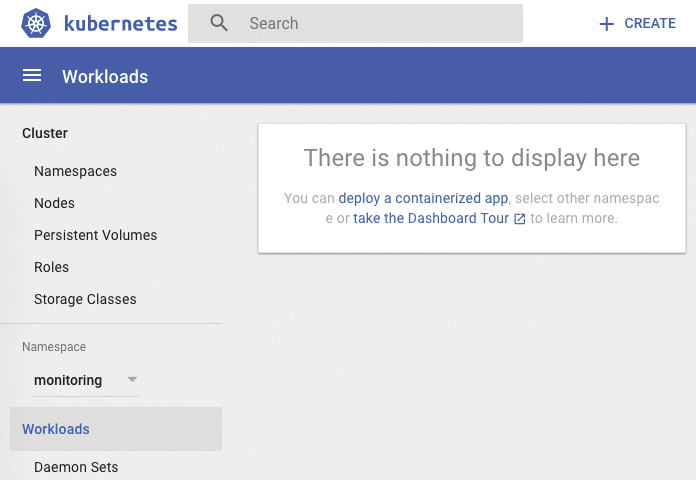

If you simply want to understand what is happening in the small Kubernetes cluster, launch the administration interface with

minikube dashboard

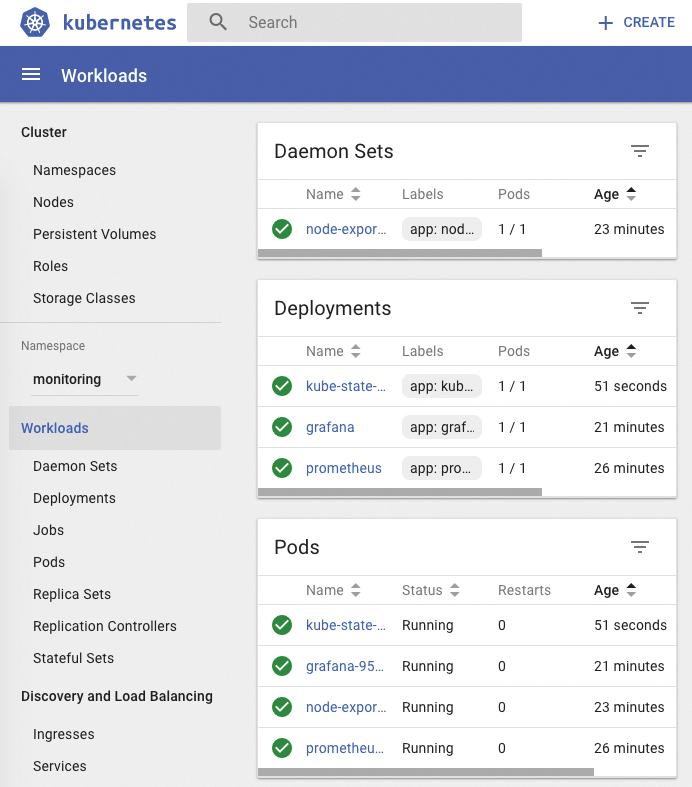

then select the monitoring namespace as shown in Figure 2.

The next step is to deploy Prometheus itself. The software is available as an official Docker image [13], but without a configuration for Kubernetes. To avoid having to build a new image for each change, pack the configuration as a prometheus.yml data entry in a ConfigMap [14] named prometheus-configmap. You can then modify, delete, or re-create the ConfigMap independently of the image:

kubectl create -f 02-prometheus-configmap.yml
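If you prefer to generate the ConfigMap rather than write it by hand, its structure is simple: The data key prometheus.yml holds the whole configuration file as a string, and it becomes a file of that name when the ConfigMap is mounted as a volume. The Python sketch below builds such a manifest as JSON, which kubectl create -f accepts just like YAML. The minimal configuration string and the script name are placeholders, not the article's files. Alternatively, kubectl create configmap prometheus-configmap --from-file=prometheus.yml --namespace=monitoring builds the same object from an existing file.

```python
import json


def prometheus_configmap(config_text, name="prometheus-configmap", namespace="monitoring"):
    """Wrap a Prometheus configuration in a Kubernetes ConfigMap manifest (as a dict)."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        # Mounted as a volume, each key under data becomes a file with that name
        "data": {"prometheus.yml": config_text},
    }


# Placeholder configuration; the real one is in 02-prometheus-configmap.yml
config = "global:\n  scrape_interval: 15s\n"
manifest = prometheus_configmap(config)

# Write JSON to stdout, e.g., python3 make_configmap.py > configmap.json (hypothetical names)
print(json.dumps(manifest, indent=2))
```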

Deployments provide declarative updates [15] for pods and replica sets. The Kubernetes deployment for Prometheus (Listing 4) integrates the ConfigMap you just created as a volume named prometheus-volume-config and mounts it at /etc/prometheus, so the configuration appears as /etc/prometheus/prometheus.yml. This establishes the connection between Prometheus and its configuration:

kubectl create -f 03-prometheus-deployment.yml

Listing 4: 03-prometheus-deployment.yml

```yaml
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: monitoring
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - image: prom/prometheus:v1.7.1
        name: prometheus
        args:
        - -config.file=/etc/prometheus/prometheus.yml
        - -storage.local.path=/prometheus
        ports:
        - containerPort: 9090
        volumeMounts:
        - mountPath: /etc/prometheus
          name: prometheus-volume-config
        - mountPath: /prometheus
          name: prometheus-volume-data
      volumes:
      - name: prometheus-volume-config
        configMap:
          name: prometheus-configmap
      - emptyDir: {}
        name: prometheus-volume-data
```

The directory in which Prometheus stores its database is also configured as a volume, in this case as an emptyDir. Kubernetes discards its contents when the Prometheus pod is deleted and re-created; for a production setup, you will want to use persistent volumes here.

You still need a matching service to access the running Prometheus instance:

kubectl create -f 04-prometheus-service.yml

You can then display the service with kubectl (Listing 5). Note that Minikube sometimes shows a service's external IP as pending. Do not worry; the service still works.

Listing 5: Services in the monitoring Namespace

```
# kubectl get service --namespace=monitoring
NAME         CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
prometheus   10.0.0.221   <pending>     9090:31244/TCP   1m
```
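The PORT(S) column in Listing 5 reads 9090:31244/TCP: The service port 9090 is additionally exposed as node port 31244, which is how you reach Prometheus from outside the Minikube VM. The Python sketch below extracts that node port from the output line and builds a URL. The IP address is a placeholder for whatever minikube ip prints on your system.

```python
import re

# "port:nodePort/protocol", e.g. 9090:31244/TCP
PORT_COLUMN = re.compile(r"(\d+):(\d+)/([A-Z]+)")


def node_port_url(port_column, node_ip):
    """Build a URL from a PORT(S) entry that contains a node port."""
    match = PORT_COLUMN.fullmatch(port_column)
    if match is None:
        raise ValueError(f"no node port in {port_column!r}")
    return f"http://{node_ip}:{match.group(2)}"


line = "prometheus   10.0.0.221   <pending>     9090:31244/TCP   1m"
name, cluster_ip, external_ip, ports, age = line.split()
print(node_port_url(ports, "192.168.99.100"))  # placeholder for the output of minikube ip
```

A service with several ports lists them comma-separated; this sketch handles only a single entry.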

On the Lookout

Prometheus automatically detects applications that provide metrics. For this to work, you first need to add specific annotations in key-value format to their Kubernetes objects, as in the example in Listing 6 [3].

Listing 6: Annotations Example

```yaml
[...]
metadata:
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '9100'
[...]
```
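Turning these annotations into scrape targets is again a job for relabeling. For pods, the official example configuration [3] uses rules along these lines: Keep only targets whose prometheus.io/scrape annotation is true, then replace the port in the target address with the one from prometheus.io/port. Service discovery exposes the annotations under sanitized names, such as __meta_kubernetes_pod_annotation_prometheus_io_scrape. The Python sketch below mimics the two steps; the pod addresses are invented.

```python
import re

SCRAPE = "__meta_kubernetes_pod_annotation_prometheus_io_scrape"
PORT = "__meta_kubernetes_pod_annotation_prometheus_io_port"
# Matches "host[:port];annotationPort" after joining with ";"
ADDRESS = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_pod(target):
    """Return the relabeled target, or None if it would be dropped."""
    # action: keep, regex: true -> only annotated pods survive
    if target.get(SCRAPE, "") != "true":
        return None
    result = dict(target)
    # action: replace, replacement $1:$2, target_label: __address__
    joined = ";".join([target.get("__address__", ""), target.get(PORT, "")])
    match = ADDRESS.fullmatch(joined)
    if match:
        result["__address__"] = f"{match.group(1)}:{match.group(2)}"
    return result


pods = [
    {"__address__": "172.17.0.5:8080", SCRAPE: "true", PORT: "9100"},
    {"__address__": "172.17.0.6:80"},                     # no annotations: dropped
    {"__address__": "172.17.0.7:9102", SCRAPE: "true"},  # no port annotation: unchanged
]
targets = [t["__address__"] for t in map(relabel_pod, pods) if t is not None]
print(targets)  # ['172.17.0.5:9100', '172.17.0.7:9102']
```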

The next component, node_exporter, relies on these annotations [16] and collects data about the cluster nodes, such as storage usage, network throughput, and CPU usage. To make sure the software runs on every single node, launch node_exporter as a DaemonSet [17]. A DaemonSet ensures that a separate instance of node_exporter runs on each node: If a new node is added, Kubernetes automatically starts a new instance there.

Adding the annotation mentioned above to node_exporter lets Prometheus find all of its instances without further configuration, which noticeably reduces your manual configuration work:

kubectl create -f 05-node-exporter.yml

For node_exporter to have access to the information of the host system, you must give it extended privileges by adding the following to the YAML file:

```yaml
securityContext:
  privileged: true
```

Together with the host namespaces and the hostPath volume in Listing 7, this privilege gives the node_exporter instance access to the host's resources; for example, it can read the host's /proc filesystem.

Listing 7: 05-node-exporter.yml

```yaml
[...]
hostPID: true
hostIPC: true
hostNetwork: true
[...]
  volumeMounts:
  - name: proc
    mountPath: /host/proc
[...]
volumes:
- name: proc
  hostPath:
    path: /proc
[...]
```
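With the hostPath volume, the host's /proc appears inside the container under /host/proc, and node_exporter is told to read from there with a command-line flag that the extract does not show. The principle is simply reading kernel statistics from a configurable procfs root. This Python sketch, a hypothetical helper rather than part of node_exporter, reads the load averages that way; the demo uses a temporary directory with a sample loadavg file so that it also runs outside a Linux host.

```python
import tempfile
from pathlib import Path


def read_loadavg(procfs_root="/host/proc"):
    """Return the 1-, 5-, and 15-minute load averages from <procfs_root>/loadavg.

    The default matches the mountPath in Listing 7; on a plain Linux host,
    pass "/proc" instead.
    """
    fields = (Path(procfs_root) / "loadavg").read_text().split()
    return tuple(float(field) for field in fields[:3])


# Demo with a fake procfs root; the file format follows /proc/loadavg
with tempfile.TemporaryDirectory() as root:
    (Path(root) / "loadavg").write_text("0.52 0.58 0.59 1/467 12345\n")
    print(read_loadavg(root))  # (0.52, 0.58, 0.59)
```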

The Aim

After these few steps, Prometheus is ready for use. The following call opens its web interface in the browser:

minikube service prometheus --namespace=monitoring

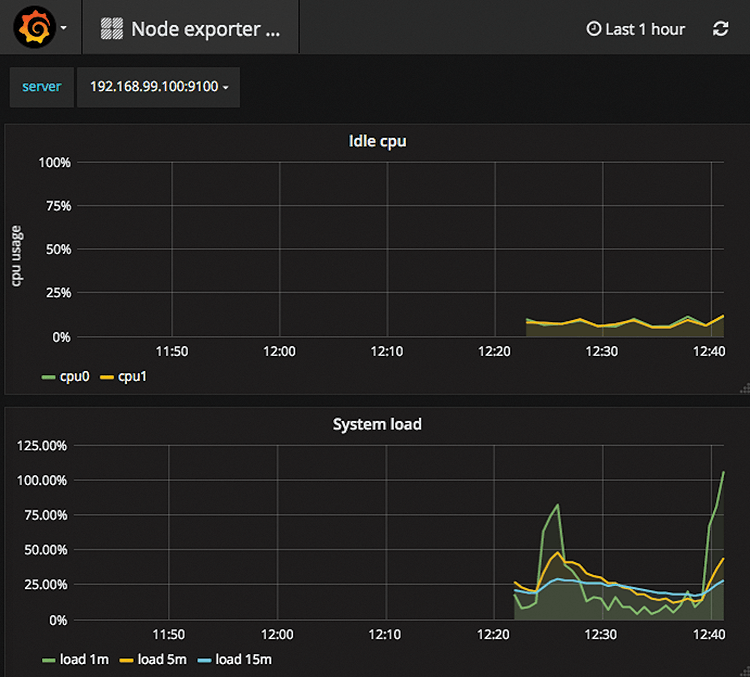

Prometheus delivers the metrics, and Grafana [18] provides a nice graphical overview of the Kubernetes cluster:

```
kubectl create -f 06-grafana-deployment.yml
kubectl create -f 07-grafana-service.yml
minikube service grafana --namespace=monitoring
```

A script I wrote [11] helps set up Grafana: It creates a data source for Prometheus and imports two useful dashboards for Kubernetes [19] [20]:

./configure_grafana.sh
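The script itself is part of the online material [11] and is not reproduced here. To give an idea of what creating a data source involves, the following Python sketch prepares a request to Grafana's HTTP API (POST /api/datasources) with basic authentication. It is not the author's script; the Grafana address, the in-cluster Prometheus URL, and the data source name are assumptions.

```python
import base64
import json
import urllib.request


def datasource_request(grafana_url, prometheus_url, user="admin", password="admin"):
    """Build (without sending) a Grafana API request that adds a Prometheus data source."""
    payload = {
        "name": "prometheus",      # hypothetical data source name
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",         # Grafana's backend forwards the queries
        "isDefault": True,
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{grafana_url}/api/datasources",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


# Placeholder addresses: Grafana via a node port, Prometheus via its service name
request = datasource_request("http://192.168.99.100:30000", "http://prometheus:9090")
print(request.get_method(), request.full_url)
# Sending it requires a running Grafana: urllib.request.urlopen(request)
```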

If you log in with admin as both the username and the password, you can select the newly created dashboards from the drop-down menu and browse the information that the Minikube cluster reveals (Figure 3).

A series of dashboards for Kubernetes [21] is available from the Grafana website [18]; however, some trial and error is in order: Some dashboard authors apparently use different relabeling rules, in which case all fields remain empty. Because adjusting the queries can be quite complex, these dashboards are best used as a starting point for your own.
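When you adapt a dashboard, it helps to test its queries directly against Prometheus first, because they only return data if their label names match your relabeling rules. Prometheus answers PromQL queries on its HTTP API at /api/v1/query. This Python sketch builds such a request for a query that uses the kubernetes_namespace label from Listing 2; the base URL is a placeholder for the address that minikube service prometheus --namespace=monitoring opens, and the query is only an example.

```python
import json
import urllib.parse
import urllib.request


def query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def run_query(base_url, promql):
    """Send the query and return the result list (needs a reachable Prometheus)."""
    with urllib.request.urlopen(query_url(base_url, promql)) as response:
        body = json.load(response)
    if body.get("status") != "success":
        raise RuntimeError(body.get("error", "query failed"))
    return body["data"]["result"]


# Targets that are up, summed per namespace label from Listing 2 (example query)
promql = "sum by (kubernetes_namespace) (up)"
print(query_url("http://192.168.99.100:31244", promql))  # placeholder address
```

For values over a time range, the API offers /api/v1/query_range with start, end, and step parameters.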

What Else?

So far, you have gathered a lot of information about the Kubernetes cluster, but there is more. An interesting Kubernetes subproject named kube-state-metrics [22] retrieves information about existing objects from the Kubernetes API and generates new metrics from it:

```
kubectl create -f 08-kube-state-metrics-deployment.yml
kubectl create -f 09-kube-state-metrics-service.yml
```

It provides these metrics in a format compatible with Prometheus [23]. You can thus alert administrators, for example, if nodes are not accepting any new pods (unschedulable) or if pods are on the kill list. Figure 4 shows the complete monitoring setup in the Kubernetes dashboard.
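kube-state-metrics publishes its data in the Prometheus text format, one sample per line. Among others, it exposes a metric named kube_node_spec_unschedulable, which is 1 for nodes that do not accept new pods. The following Python sketch parses such lines and lists the affected nodes. The parser is deliberately simple (it ignores timestamps and leaves escaped label values as they are), and the sample text is invented rather than captured from a cluster.

```python
import re

# metric_name{labels} value [timestamp]
SAMPLE = re.compile(r"([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)")
# name="value", where the value may contain escaped characters such as \"
LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_samples(text):
    """Yield (name, labels, value) per sample line; label values stay escaped."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines, HELP and TYPE
            continue
        match = SAMPLE.match(line)
        if match is None:
            continue
        name, label_text, value = match.groups()
        yield name, dict(LABEL.findall(label_text or "")), float(value)


def unschedulable_nodes(text):
    """Names of nodes whose kube_node_spec_unschedulable sample is 1."""
    return [
        labels["node"]
        for name, labels, value in parse_samples(text)
        if name == "kube_node_spec_unschedulable" and value == 1
    ]


# Invented sample in the text format, not captured from a real cluster
metrics = """\
# TYPE kube_node_spec_unschedulable gauge
kube_node_spec_unschedulable{node="minikube"} 0
kube_node_spec_unschedulable{node="worker-2"} 1
"""
print(unschedulable_nodes(metrics))  # ['worker-2']
```

In practice, you would express the same check as a PromQL alerting rule; the sketch only shows what the raw data looks like.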

Conclusions

The demo environment in this article shows how you can monitor your Kubernetes cluster, with various metrics informing you about what is currently happening in the cluster. A production system needs some fine-tuning: You can extend the monitoring setup with the Alertmanager, and you need to think about persistent data storage. The CoreOS Prometheus Operator [24] [25] takes an interesting approach; it installs production-ready Kubernetes monitoring with very little effort.