Combining containers and OpenStack

Interaction

Most IT users rely on containers à la Docker [1], rkt [2], and LXD [3] as platforms for processing data. Sooner or later, cloud solutions such as OpenStack [4] also have to contend with containers, and several approaches currently exist. The most obvious is to anchor the container technology within OpenStack through services such as Magnum [5] and Zun [6]. The less obvious approach turns this around: The container technology runs OpenStack itself and therefore sits outside the cloud. The Kolla project [7] follows this approach.

Incidentally: The idea of using containers as a basic infrastructure can also be found in Google's Infrastructure for Everyone Else (GIFEE) project [8]. This community has been the impetus for recent developments in the field of OpenStack in containers. Depending on your preference, you can operate containers above OpenStack, below it, or even in combinations of the two. In this article, I briefly introduce container-related OpenStack projects and explain their goals and interactions.

Zun

The Zun project has only been around for about a year. Its goal is to provide an interface for managing containers as native OpenStack components (like Cinder, Neutron, or Nova). It is thus the successor to Nova Docker [9], which has now been discontinued.

Nova Docker was a simple way to integrate containers in OpenStack. Managing containers followed the same principles as managing virtual machines (VMs), but this integration shortcut meant that the benefits of container technology were largely lost. At the end of the day, Docker and the like are not VMs.

Zun now fills the gap, supporting container management while remaining part of the OpenStack universe. As a result, you get seamless integration with other components such as Keystone and Glance, as well as abstraction from the underlying container technology.

You do not have to deal with the complexity of Docker versus rkt versus LXD. This abstraction is undoubtedly ambitious. Zun thus starts with the basics – the simple acts of creating, updating, and deleting containers. In this context, the documentation occasionally references CRUD (Create, Read, Update, Delete).

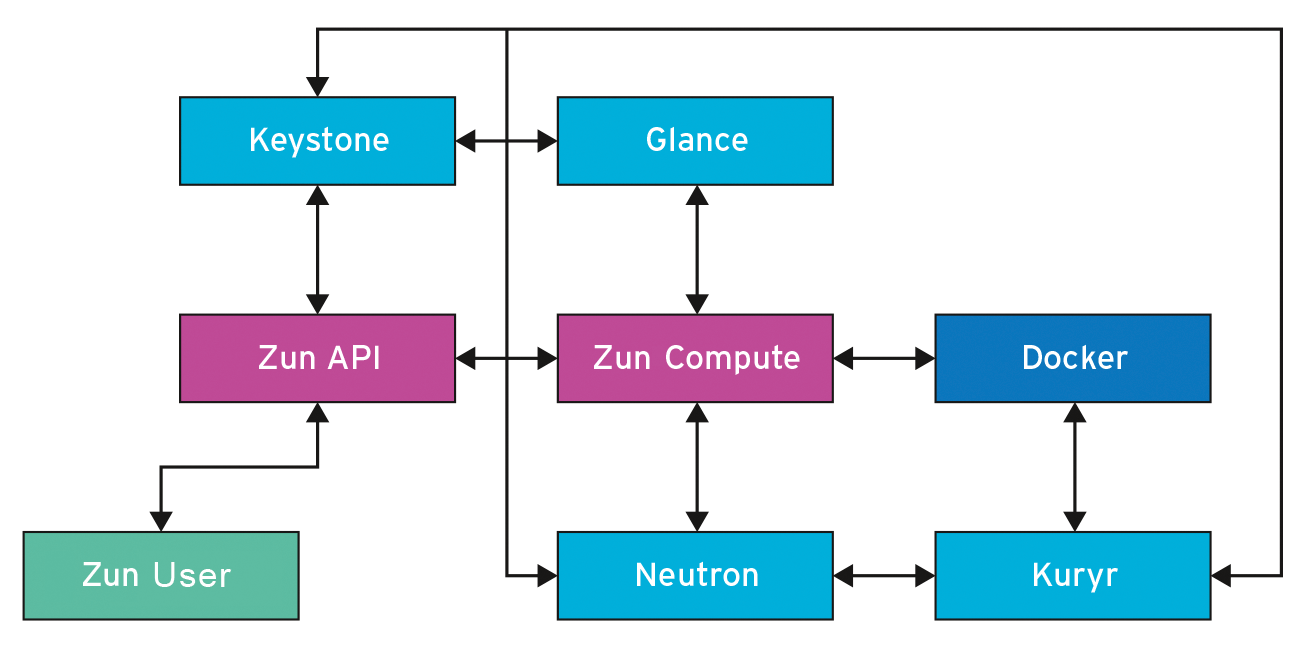

Figure 1 shows the Zun architecture and its integration with OpenStack. Zun comprises two components. The Zun API communicates and interacts with the user. In the background, Zun Compute manages the resources for containers and interacts with the other OpenStack components via drivers; for example, it communicates with Glance to provide the necessary images and with Neutron for the network. Another project plays an important role here: Kuryr [10] forms the bridge between the network worlds, with OpenStack on one side and containers on the other.

Zun's target group is OpenStack users who want to use containers in addition to bare metal and VMs. The requirements are quite low: You do not need any special management software for the containers themselves or for the underlying hosts. The aforementioned CRUD approach is sufficient (Listing 1).

Listing 1: Creating and Deleting a Container

    $ zun run --name pingtest alpine ping -c 4 8.8.8.8
    $ zun list
    +--------------------------------------+----------+--------+---------+------------+------------+-----+
    | uuid                                 | name     | image  | status  | task_state | address    | prt |
    +--------------------------------------+----------+--------+---------+------------+------------+-----+
    | 36adtb1a-6371-521a-0fa4-a8c204a9e7df | pingtest | alpine | Stopped | None       | 172.17.5.8 | []  |
    +--------------------------------------+----------+--------+---------+------------+------------+-----+
    $ zun logs pingtest
    PING 8.8.8.8 (8.8.8.8): 56 data bytes
    64 bytes from 8.8.8.8: seq=0 ttl=40 time=31.113 ms
    64 bytes from 8.8.8.8: seq=1 ttl=40 time=31.584 ms
    [...]
    $ zun delete pingtest
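
To make the division of labor more tangible, the CRUD life cycle and the driver abstraction can be modeled in a few lines of Python. This is only a minimal sketch and not Zun code: The names `Container`, `ContainerDriver`, `FakeDockerDriver`, and `ComputeService` are hypothetical, and the fake driver merely changes a status field instead of talking to a real runtime.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Container:
    """Minimal container record (hypothetical, not Zun's data model)."""
    name: str
    image: str
    command: str
    status: str = "Created"


class ContainerDriver(ABC):
    """Hypothetical driver interface: one subclass per container runtime."""

    @abstractmethod
    def start(self, container: Container) -> None:
        ...

    @abstractmethod
    def remove(self, container: Container) -> None:
        ...


class FakeDockerDriver(ContainerDriver):
    """Stand-in for a real runtime driver; it only updates the status."""

    def start(self, container: Container) -> None:
        container.status = "Running"

    def remove(self, container: Container) -> None:
        container.status = "Deleted"


class ComputeService:
    """Toy counterpart of a compute component: CRUD on top of a driver."""

    def __init__(self, driver: ContainerDriver) -> None:
        self.driver = driver
        self.containers = {}  # maps container name -> Container

    def create(self, name: str, image: str, command: str) -> Container:
        container = Container(name, image, command)
        self.driver.start(container)
        self.containers[name] = container
        return container

    def read(self, name: str) -> Container:
        return self.containers[name]

    def update(self, name: str, new_name: str) -> Container:
        # Renaming serves as the simplest possible "update" operation.
        container = self.containers.pop(name)
        container.name = new_name
        self.containers[new_name] = container
        return container

    def delete(self, name: str) -> None:
        container = self.containers.pop(name)
        self.driver.remove(container)


if __name__ == "__main__":
    service = ComputeService(FakeDockerDriver())
    service.create("pingtest", "alpine", "ping -c 4 8.8.8.8")
    print(service.read("pingtest"))
    service.delete("pingtest")
```

Because the service only ever calls the driver interface, swapping Docker for rkt or LXD would mean adding another driver class while the CRUD layer stays untouched, which is the kind of abstraction the project is after.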

Magnum

According to the Git repository, the roots of Magnum go back to 2014, with the first release in 2015. Magnum's original mission was split between providing Container as a Service (CaaS) and Container Orchestration as a Service (COaaS), with its focus on COaaS.

Magnum's objective now is to provide a management platform for containers with the help of OpenStack [11]. Magnum aims to make Container Orchestration Engines (COEs; e.g., Kubernetes [12], Docker Swarm [13], and Apache Mesos [14]) available as resources in OpenStack.

Compared with older versions of the Magnum architecture, many components have been dropped. The container, pod, and service constructs are no longer of any interest. Now, clusters (formerly bays) and cluster templates (formerly bay models) are the central components. To build the clusters, Magnum relies on Heat, the OpenStack orchestration project, and its templates (Listing 2).

Listing 2: Integrating Heat Templates

    $ magnum-template-manage list-templates --details
    +------------------------+---------+-------------+---------------+------------+
    | Name                   | Enabled | Server_Type | OS            | COE        |
    +------------------------+---------+-------------+---------------+------------+
    | magnum_vm_atomic_k8s   | True    | vm          | fedora-atomic | kubernetes |
    | magnum_vm_atomic_swarm | True    | vm          | fedora-atomic | swarm      |
    | magnum_vm_coreos_k8s   | True    | vm          | coreos        | kubernetes |
    | magnum_vm_ubuntu_mesos | True    | vm          | ubuntu        | mesos      |
    +------------------------+---------+-------------+---------------+------------+
    $

If you are starting from scratch, the first step is to create the cluster template. The key pieces of information are the orchestration software to be used and the images from which the servers are built. However, the devil is in the details. If necessary, Magnum can also use a private registry to store the container images; in that case, you have to provide additional information about the size of the data storage and the driver needed to access it.

Other specifications relate to the network addresses of the DNS server to be used or details of how the container data can be stored in a non-volatile manner. For the first step, it is worth turning to the templates provided.
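
The kind of information a cluster template bundles can be sketched as a small data structure. The following is an illustration under stated assumptions, not Magnum's actual schema: The class `ClusterTemplate` and all of its field names are hypothetical, and the validation rules simply restate the requirements described above.

```python
from dataclasses import dataclass
from typing import List, Optional

# The COEs named in this article; the set itself is an assumption of the sketch.
SUPPORTED_COES = {"kubernetes", "swarm", "mesos"}


@dataclass(frozen=True)
class ClusterTemplate:
    """Hypothetical stand-in for the data a cluster template carries."""
    name: str
    coe: str                      # orchestration software to be used
    image: str                    # image from which the servers are built
    dns_nameserver: str = "8.8.8.8"
    use_private_registry: bool = False
    registry_volume_size_gb: Optional[int] = None
    registry_storage_driver: Optional[str] = None

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the template is usable."""
        problems = []
        if self.coe not in SUPPORTED_COES:
            problems.append(f"unsupported COE: {self.coe}")
        if self.use_private_registry:
            # A private registry needs both a storage size and an access driver.
            if not self.registry_volume_size_gb:
                problems.append("private registry needs a storage size")
            if not self.registry_storage_driver:
                problems.append("private registry needs a storage driver")
        return problems


if __name__ == "__main__":
    template = ClusterTemplate(name="k8s-test", coe="kubernetes",
                               image="fedora-atomic")
    print(template.validate())
```

Checking a template before handing it to the orchestration layer catches the "devil in the details" early, for example a private registry that was enabled without a storage size.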

The template then lets you create the cluster on the assigned infrastructure using OpenStack tools. Two programs work behind the scenes. The first is the Magnum API server, which organizes the external interface by accepting requests and providing the corresponding information. For reliability, or simply scaling, it is possible to run multiple API servers simultaneously.

The API server forwards incoming requests to the second Magnum component, the Conductor, which in turn interacts with the corresponding orchestration instances (Listing 3).

Listing 3: Magnum API and Conductor

    $ ps auxww | grep -i magnu
    stack 17987 0.1 1.2 224984 49332 pts/35 S+ 15:45 0:19 /usr/bin/python /usr/bin/magnum-api
    stack 18984 0.0 1.4 228088 57308 pts/36 S+ 15:45 0:06 /usr/bin/python /usr/bin/magnum-conductor
    $
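
The interplay of several API servers and one Conductor can be illustrated with a toy model. This sketch is not Magnum code: The classes `ApiServer` and `Conductor` are hypothetical, an in-process queue stands in for whatever transport connects the two processes, and the status string is purely illustrative.

```python
import queue


class Conductor:
    """Toy conductor: takes requests off a shared queue and acts on clusters."""

    def __init__(self, requests: queue.Queue) -> None:
        self.requests = requests
        self.clusters = {}  # maps cluster name -> illustrative status string

    def process_pending(self) -> int:
        """Handle everything currently queued; return the number of requests."""
        handled = 0
        while True:
            try:
                action, name = self.requests.get_nowait()
            except queue.Empty:
                return handled
            if action == "create":
                self.clusters[name] = "CREATE_COMPLETE"
            elif action == "delete":
                self.clusters.pop(name, None)
            handled += 1


class ApiServer:
    """Toy API server: accepts a request and forwards it, nothing more."""

    def __init__(self, server_id: str, requests: queue.Queue) -> None:
        self.server_id = server_id
        self.requests = requests

    def submit(self, action: str, name: str) -> str:
        self.requests.put((action, name))
        return f"{self.server_id}: accepted {action} {name}"


if __name__ == "__main__":
    shared = queue.Queue()
    conductor = Conductor(shared)
    # Two API servers share one queue, mirroring the scale-out option.
    api_a = ApiServer("api-a", shared)
    api_b = ApiServer("api-b", shared)
    print(api_a.submit("create", "k8s-cluster"))
    print(api_b.submit("create", "swarm-cluster"))
    conductor.process_pending()
    print(sorted(conductor.clusters))
```

The API servers hold no cluster state of their own, which is why running several of them side by side is unproblematic in this model.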

Kubernetes, Mesos, and Docker Swarm can be operated without OpenStack, so what value is gained from Magnum? Magnum is aimed at those who want to manage their containers within OpenStack.

OpenStack can provide genuine added value beyond pure infrastructure: Multitenancy and other security mechanisms result quite naturally from Keystone integration. By design, it is not possible to have containers of different OpenStack tenants running on the same host. Discussions about whether the hypervisor or the Docker host can be trusted are thus almost meaningless.

With Zun and Magnum, containers can be managed individually or as part of a larger whole with standard OpenStack tools. However, the question remains as to whether the two can be combined. The answer is: Yes, but ...

Interim Conclusions

Strictly speaking, Magnum and Zun have no direct connection. The intermediaries between the two OpenStack projects are the COEs (i.e., the container management layer). Besides talking to Docker directly, a COE can also drive containers through the API provided by Zun. The possible operations are, of course, limited to the lowest common denominator of the container implementations, but containers as an OpenStack service cannot deliver more, anyway.

Mixing Zun and Magnum is not recommended. The two projects serve different target groups, and combining them is of more interest academically than practically. When it comes to the use of individual containers as native OpenStack resources, Zun is the right choice (e.g., to conduct isolated tests or for installations that need no more than a handful of Docker instances). However, if you want OpenStack to serve as the basis for more complex applications, including life cycle and infrastructure management for containers, then Magnum is the better choice.

Kolla

OpenStack users separate naturally into two camps. Up to this point, the target group has been users who want access to containers, in addition to classical physical and virtual machines. Their focus of attention is managing Docker, rkt, LXD, and the like, thus bringing Kubernetes, Mesos, and Docker Swarm into the limelight.

However, more recent interest has been in containers as the basic framework for overall management of OpenStack, wherein OpenStack resides on top of the containers. One then refers, for example, to OpenStack on Kubernetes. Stackanetes [15] is a well-known project to deploy and manage OpenStack on Kubernetes that came out of a collaboration between CoreOS and Google. The Kolla project also fits in this camp very nicely. Because it is an OpenStack project, Kolla naturally is aimed specifically at this application.

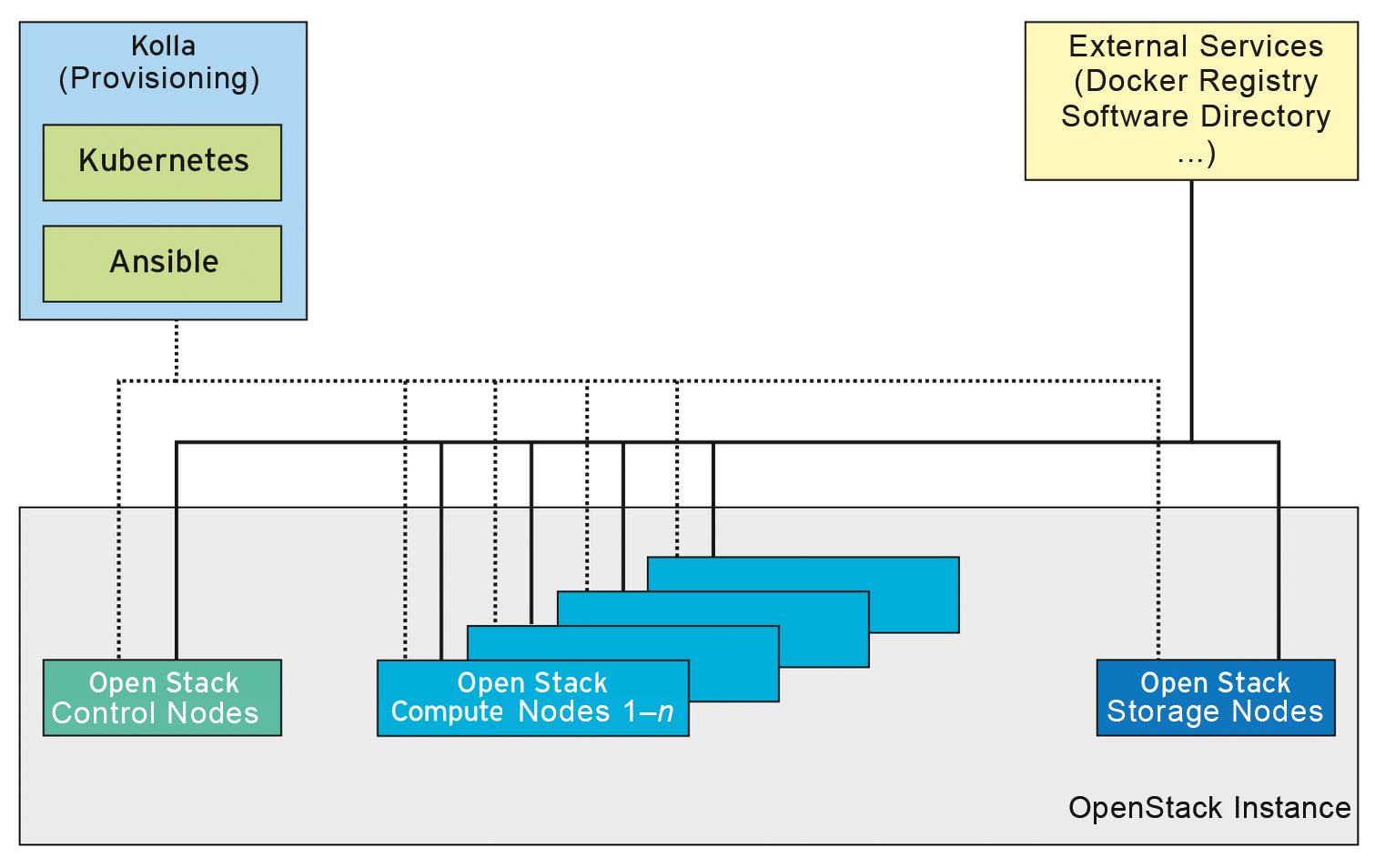

Figure 2 shows the architecture of the container substructure for OpenStack. The project itself is not so new. The first entries in the Git repository date back to 2014; therefore, Kolla is even older than Magnum. The motivation for the project was to address the inadequacies in the previously used methods for updating OpenStack without downtime for the user. The GitHub page describes three use cases [16].

The first case is to update the overall OpenStack construct, which is equivalent to changing from one version to the next. When Kolla emerged, this approach was standard practice and the only way to update OpenStack. The other two cases are based on updating or rolling back individual OpenStack components.
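
The three use cases can be expressed compactly if each service is thought of as a container started from a tagged image. The following is a minimal sketch under that assumption: The class `Deployment` and the release names `v1` to `v3` are hypothetical and do not reflect Kolla's implementation.

```python
class Deployment:
    """Toy model: every service remembers the image tags it has run from."""

    def __init__(self, services, release):
        # history[service] is a list of tags; the last entry is the active one.
        self.history = {service: [release] for service in services}

    def current(self, service):
        return self.history[service][-1]

    def upgrade_all(self, release):
        """Use case 1: move the whole installation to the next release."""
        for tags in self.history.values():
            tags.append(release)

    def upgrade(self, service, release):
        """Use case 2: update a single component."""
        self.history[service].append(release)

    def rollback(self, service):
        """Use case 3: return a single component to its previous release."""
        tags = self.history[service]
        if len(tags) < 2:
            raise ValueError(f"{service} has no earlier release to roll back to")
        tags.pop()


if __name__ == "__main__":
    cloud = Deployment(["keystone", "glance", "nova"], "v1")
    cloud.upgrade_all("v2")
    cloud.upgrade("nova", "v3")
    cloud.rollback("nova")
    print({name: cloud.current(name) for name in cloud.history})
```

Because each service has its own history, replacing or rolling back one component leaves the others untouched, which is what makes updates without a full version change possible in this model.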

The design of Kolla is based on two components: individual containers and container sets. Both must meet specific, well-documented requirements [16]. Kolla top-level containers correspond to OpenStack's basic services.

All controlling instances, such as the Neutron server or the Glance Registry, are part of the OpenStack Control group, whereas Cinder and Swift fall under OpenStack Storage. Table 1 provides a more precise accounting.

Table 1: Kolla Container Sets

| Container Set | Components |
|---|---|
| Messaging Control | RabbitMQ |
| High-Availability Control | HAProxy, Keepalived |
| OpenStack Instance | Keystone; Glance, Nova, Ceilometer, and Heat APIs |
| OpenStack Control | Glance, Neutron, Cinder, Nova, Ceilometer, and Heat controllers |
| OpenStack Compute | nova-compute, nova-libvirt, Neutron agents |
| OpenStack Storage | Cinder, Swift |
| OpenStack Network | DHCP, L3, Load Balancer as a Service (LBaaS), Firewall as a Service (FWaaS) |

In operation, you can use the prefabricated container images provided by the project or build them yourself [17]. At this point in time, Docker is the only container technology that has been tested and is supported. The images themselves can reside either on the public Docker Hub or in a private registry.

In principle, two approaches are conceivable for the installation of OpenStack. The first is deploying, starting, and stopping the container instances manually, which certainly is not desirable in these times of automation. The alternative is to use management software. Kolla offers two possibilities: Kubernetes [12] [18] and Ansible [19] [20]. First, you need to set up the required infrastructure.

Setting up Ansible is significantly faster than setting up a Kubernetes cluster; however, Ansible comes from the configuration management field and covers many application cases outside of containers, whereas Kubernetes considers mastering Docker and containers to be its main task. What you should use at the end of the day strongly depends on the nature of the IT landscape in which you want to run Kolla. I tend to choose Kubernetes in many cases.

What Remains

If you want to connect containers and OpenStack, you cannot ignore the Zun, Magnum, and Kolla projects. Whereas Zun and Magnum are aimed at OpenStack users, Kolla is intended more for OpenStack administrators. In any case, container fans certainly get their money's worth. Those interested in OpenStack on containers also should look outside OpenStack – think Stackanetes.

The leap to using containers is no longer so huge. However, containerizing OpenStack is not trivial and requires prior consideration. What services are fundamental in order for other services or the basic framework to function? In which order should the individual services be instantiated? Is it advisable to hardwire certain components to simplify installation and operation? Is container management software recommended or even necessary?
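
The question of ordering, at least, lends itself to a mechanical answer once the dependencies are written down. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to derive a valid start sequence. The dependency map is an assumption made purely for illustration, including the generic `database` entry; a real installation has to supply its own.

```python
from graphlib import TopologicalSorter

# Illustrative dependencies only: service -> services that must run first.
# These relationships are assumptions for the sketch, not a reference.
DEPENDS_ON = {
    "database": set(),
    "rabbitmq": set(),
    "keystone": {"database"},
    "glance": {"keystone", "database"},
    "neutron": {"keystone", "database", "rabbitmq"},
    "nova": {"keystone", "glance", "neutron", "rabbitmq", "database"},
}


def start_order(depends_on):
    """Return one valid start sequence in which dependencies come first."""
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    for position, service in enumerate(start_order(DEPENDS_ON), start=1):
        print(position, service)
```

If the map contains a circular dependency, `static_order()` raises `graphlib.CycleError`, which is a useful early warning that some component needs to be hardwired or split before the installation can be automated.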