Correctly integrating containers

Let's Talk

Kubernetes supports several ways for containers and microservices to communicate with each other, ranging from connections to the hardware in the data center to the configuration of load balancers. The Kubernetes [1] network model does without Network Address Translation (NAT) for this communication: Every container (strictly speaking, every pod) receives its own IP address, through which it communicates with the nodes and with other containers directly.

Therefore, you cannot simply set up two Docker hosts with Kubernetes: The network is a separate layer that you need to configure for Kubernetes. Several solutions are candidates for this job, and, like Kubernetes itself, they are currently under rapid development. In addition to bandwidth and latency, integration with existing solutions and security also play a central role. Kubernetes pulls out all the stops and draws on the protocols and solutions implemented in Linux.

The Docker container runtime takes care of bridges, and Linux contributes IP-in-IP tunnels, iptables rules, Berkeley Packet Filters (BPFs), virtual network interfaces, and even the Border Gateway Protocol (BGP), among other things.

The network solutions for Kubernetes work partly as overlays and partly as software-defined networks. All of these approaches have advantages and disadvantages when it comes to functionality, performance, latency, and ease of use.

In this article, I limit myself to the solutions I am familiar with from my own work. That doesn't mean that other solutions are not good; however, even just introducing the projects listed at [2] would probably fill an entire ADMIN magazine special.

Before version 1.7, Kubernetes only implemented IPv4 throughout; its own IPv6 support was limited to services. Thanks to Calico [3], pods can also use IPv6 [4]. The Kubernetes network proxy (kube-proxy) was slated to become IPv6-capable with version 1.7, released in June 2017 [5].

The abstraction Kubernetes provides enables completely new concepts. Network policy [6] regulates how groups of pods communicate with each other and with other network endpoints. With this feature, you can, for example, set up partitioned zones in the network. Network policy relies on Kubernetes namespaces (not to be confused with kernel namespaces) and labels. Abstraction of this kind is complex to implement in a Linux configuration, and not every Kubernetes network solution supports it.

Flannel

Flannel (Figure 1) [7] by CoreOS is the oldest and simplest Kubernetes network. It implements two basic principles: It connects containers with each other, and it ensures that every node can reach every container.

![A Flannel overlay network manages a class C network; the nodes typically use parts of a private network from the 10/8 class A network [8].](images/F01-packet-01.png)

Flannel creates a class C network on each node and connects it internally with the Docker bridge docker0 via the Flannel interface flannel0. The Flannel daemon flanneld connects to the other nodes through an external interface. Depending on the back end, Flannel transports packets between pods on different nodes via VXLAN, via host routes, or encapsulated in UDP packets. One node sends the packets, and its counterpart accepts them and forwards them to the addressed pod.

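Each node is given a class C network (/24) with 254 usable addresses, carved out of a class B network (/16) that spans the entire cluster. Toward the outside, the pod addresses therefore belong to the class B network; in terms of network technology, flanneld only changes the network mask from B to C and back. Listing 1 shows a simple Flannel configuration that departs from this default layout: It takes the 10.0.0.0/8 network and gives each node a /20 subnet (4,096 addresses) in the range from 10.10.0.0 to 10.99.0.0, using the udp back end on port 7890.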

Listing 1: Flannel Configuration

```json
[...]
{
  "Network": "10.0.0.0/8",
  "SubnetLen": 20,
  "SubnetMin": "10.10.0.0",
  "SubnetMax": "10.99.0.0",
  "Backend": {
    "Type": "udp",
    "Port": 7890
  }
}
[...]
```
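To see what the values in Listing 1 mean in terms of address space, the following Python sketch enumerates the /20 blocks that a node could lease. It only uses the standard ipaddress module and does not reproduce how flanneld actually hands out leases (flanneld records them in its datastore); it also assumes that SubnetMax denotes the start address of the last allocatable block.

```python
import ipaddress

# Values copied from Listing 1
NETWORK = ipaddress.ip_network("10.0.0.0/8")
SUBNET_LEN = 20
SUBNET_MIN = ipaddress.ip_address("10.10.0.0")
SUBNET_MAX = ipaddress.ip_address("10.99.0.0")


def node_subnets(network, subnet_len, subnet_min, subnet_max):
    """List every /subnet_len block of `network` whose start address lies
    between subnet_min and subnet_max, both inclusive (assumed semantics)."""
    return [
        block
        for block in network.subnets(new_prefix=subnet_len)
        if subnet_min <= block.network_address <= subnet_max
    ]


subnets = node_subnets(NETWORK, SUBNET_LEN, SUBNET_MIN, SUBNET_MAX)
print(len(subnets), "node subnets, first:", subnets[0], "last:", subnets[-1])
# Network and broadcast address are excluded from the usable count
print(subnets[0].num_addresses - 2, "usable addresses per node")
```

With the values from Listing 1, this yields 1,425 possible node subnets from 10.10.0.0/20 to 10.99.0.0/20, each with 4,094 usable addresses.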

At first glance, this concept looks robust and simple, but it reaches its limits quite quickly: With a class B network split into class C networks, the model supports a maximum of 256 nodes. Although 256 nodes make a rather small cluster for a project with the ambitions of Kubernetes, such a layout can easily be integrated into the virtual private cloud (VPC) networks of some cloud providers. For more complex networks, Kubernetes supports a concept called the Container Network Interface (CNI), which allows you to configure a variety of network environments.
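The 256-node limit follows directly from the prefix lengths: Each bit between the cluster prefix and the per-node prefix doubles the number of node subnets. The small Python function below is a hypothetical helper for illustration (IPv4 only) that computes both the node limit and the usable addresses per node.

```python
import ipaddress


def flannel_capacity(cluster_cidr, subnet_len):
    """Return (max_nodes, usable_addresses_per_node) for a layout in which
    every node receives one /subnet_len block of an IPv4 cluster network."""
    network = ipaddress.ip_network(cluster_cidr)
    if not network.prefixlen <= subnet_len <= 30:
        raise ValueError("subnet_len must lie between the cluster prefix and /30")
    max_nodes = 2 ** (subnet_len - network.prefixlen)
    # Subtract the network and broadcast addresses of each node subnet
    usable_per_node = 2 ** (32 - subnet_len) - 2
    return max_nodes, usable_per_node


print(flannel_capacity("10.1.0.0/16", 24))  # class B split into class C networks
print(flannel_capacity("10.0.0.0/8", 20))   # the prefixes from Listing 1
```

The first call returns (256, 254). Without the SubnetMin and SubnetMax restriction, the /8 network with /20 blocks from Listing 1 would allow 4,096 nodes with 4,094 addresses each.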

Kubernetes with CNI

Listing 2 shows a sample configuration for CNI. In addition to the version number, it sets a unique name for the network (dbnet), determines the plugin type (bridge), and names the Linux bridge (cni0). Otherwise, it lets the admin define the usual parameters: The ipam section configures IP address management (IPAM), here with the host-local plugin, the subnet, and the gateway, and the dns section defines a list of name servers. Besides host-local, CNI offers other IPAM plugins, including one for DHCP.

Listing 2: CNI Configuration

```json
[...]
{
  "cniVersion": "0.3.1",
  "name": "dbnet",
  "type": "bridge",
  "bridge": "cni0",
  "ipam": {
    "type": "host-local",
    "subnet": "10.1.0.0/16",
    "gateway": "10.1.0.1"
  },
  "dns": {
    "nameservers": [ "10.1.0.1" ]
  }
}
[...]
```
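The host-local plugin referenced in Listing 2 hands out addresses from the configured subnet and leaves the gateway address alone. The following Python class is a hypothetical, in-memory simplification for illustration only; the real plugin persists its reservations on the node and supports further options.

```python
import ipaddress


class SimpleIPAM:
    """Hypothetical in-memory allocator for one subnet, modeled loosely on the
    ipam section of Listing 2 (not the real host-local plugin)."""

    def __init__(self, subnet, gateway):
        self.subnet = ipaddress.ip_network(subnet)
        self.gateway = ipaddress.ip_address(gateway)
        self.allocated = {}  # container ID -> assigned address

    def allocate(self, container_id):
        """Return the container's address, assigning the lowest free one."""
        if container_id not in self.allocated:
            in_use = set(self.allocated.values())
            for address in self.subnet.hosts():
                if address != self.gateway and address not in in_use:
                    self.allocated[container_id] = address
                    break
            else:
                raise RuntimeError("no free addresses left in " + str(self.subnet))
        return f"{self.allocated[container_id]}/{self.subnet.prefixlen}"

    def release(self, container_id):
        """Free the container's address; unknown IDs are ignored."""
        self.allocated.pop(container_id, None)


ipam = SimpleIPAM("10.1.0.0/16", "10.1.0.1")
print(ipam.allocate("container-a"))
```

The linear "lowest free address" scan keeps the sketch short; it also means a released address is handed out again immediately, which a production allocator might deliberately avoid.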

When a container starts, it can run through a number of plugins in succession. CNI defines this chain precisely: Kubernetes passes the JSON output of one plugin to the next plugin as input. The CNI GitHub repository [9] describes this procedure in detail.
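The chaining mechanism can be sketched in a few lines of Python. Real CNI plugins are executables that receive their configuration on stdin and parameters such as CNI_COMMAND and CNI_NETNS as environment variables; here, hypothetical Python functions stand in for them. The sketch follows the configuration-list format, in which the runtime copies the list's name and cniVersion into each plugin configuration and injects the previous plugin's result as prevResult.

```python
import copy
import json

seen_by_portmap = []  # records the configuration the second plugin received


def fake_bridge(conf):
    """Hypothetical stand-in for an interface-creating plugin like bridge."""
    return {
        "cniVersion": conf["cniVersion"],
        "interfaces": [{"name": "eth0"}],
        "ips": [{"version": "4", "address": "10.1.0.2/16", "gateway": "10.1.0.1"}],
    }


def fake_portmap(conf):
    """Hypothetical chained plugin: it acts on prevResult and passes it on."""
    seen_by_portmap.append(conf)
    return conf["prevResult"]


def run_chain(conflist, plugins):
    """Run the plugins of a CNI-style configuration list in order (ADD only)."""
    result = None
    for plugin_conf in conflist["plugins"]:
        conf = copy.deepcopy(plugin_conf)
        conf["name"] = conflist["name"]
        conf["cniVersion"] = conflist["cniVersion"]
        if result is not None:
            conf["prevResult"] = result
        # Round-trip through JSON, as the stdin/stdout exchange would
        result = plugins[conf["type"]](json.loads(json.dumps(conf)))
    return result


conflist = {
    "cniVersion": "0.3.1",
    "name": "dbnet",
    "plugins": [{"type": "bridge", "bridge": "cni0"}, {"type": "portmap"}],
}
final = run_chain(conflist, {"bridge": fake_bridge, "portmap": fake_portmap})
print(final["ips"][0]["address"])
```

The second plugin never computes an address itself; it only sees the first plugin's result in prevResult, which is exactly what makes the chain composable.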

Calico Project

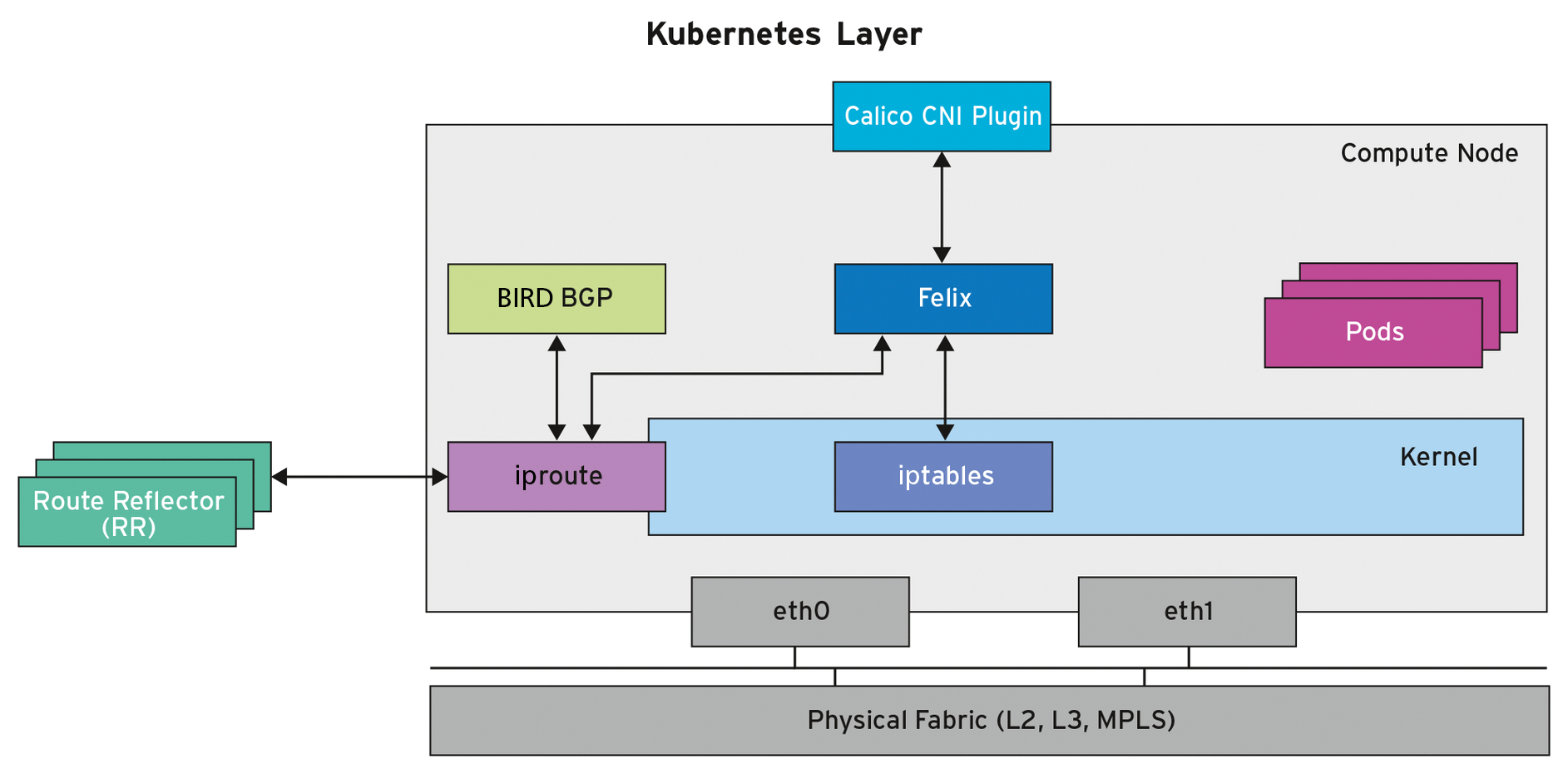

Calico [3] takes a close look at the relationship between nodes and containers. The idea is to carry over the concepts and relationships between a data center and its hosts: A node is to a pod what a data center is to a host. However, containers outpace hardware by many orders of magnitude in terms of dynamics and life cycle.

For Calico, you have two possible installation approaches. A custom installation launches Calico in the containers themselves, whereas a host installation uses systemd to launch all services. In this article, I describe the custom installation, which is more flexible but can result in tricky bootstrapping problems, depending on the environment and versions involved.

Calico uses CNI to set itself up as a network layer in Kubernetes. You can find a trial version with Vagrant at the Calico GitHub site [10]. In addition to configuration by CoreOS [11], you can configure and operate Calico in a custom installation using on-board tools provided entirely by Kubernetes. The actual configuration resides in a ConfigMap [12].

A DaemonSet [13] guarantees that exactly one Calico node pod, which contains Felix [14], runs on each node (Figure 2). Because Calico implements the Kubernetes network policy [6] with the per-host Felix daemon, Kubernetes additionally launches a single replica of the Calico policy controller [15].

BGP, Routing, and iptables Rules

When a pod is created, it is assigned an IP address, and Felix configures iptables rules for all allowed and prohibited connections. If the cluster needs to adjust the routing, Felix also generates the necessary routes and programs them into the kernel. Unlike the iptables rules, however, routing is a concept that extends beyond a single node.

This is where BGP comes into play: The protocol that configures Internet-wide routes also helps connect containers on different nodes. You need to limit the scope of Calico's BGP to the Kubernetes cluster. Most administrators are a little in awe of BGP, an almost all-powerful routing protocol that can redirect the traffic of entire countries.
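What BGP achieves in the end is that every node knows which neighboring node is responsible for which pod address block. The following Python sketch models this with hypothetical advertisements (all addresses are made up for illustration) and a longest-prefix lookup, the rule the kernel applies to its routing table. It does not speak BGP; it only shows the resulting forwarding decision.

```python
import ipaddress


def build_routes(advertisements):
    """Turn (pod_cidr, next_hop) pairs into a routing table. The pairs stand
    for what a node might learn from its BGP peers (hypothetical data)."""
    return [
        (ipaddress.ip_network(cidr), ipaddress.ip_address(hop))
        for cidr, hop in advertisements
    ]


def next_hop(routes, destination):
    """Return the next hop for destination using longest-prefix matching."""
    dst = ipaddress.ip_address(destination)
    candidates = [(net, hop) for net, hop in routes if dst in net]
    if not candidates:
        return None
    _, hop = max(candidates, key=lambda route: route[0].prefixlen)
    return str(hop)


routes = build_routes([
    ("192.168.1.0/26", "172.16.0.11"),   # pod block on node 1
    ("192.168.1.64/26", "172.16.0.12"),  # pod block on node 2
    ("0.0.0.0/0", "172.16.0.1"),         # default route
])
print(next_hop(routes, "192.168.1.70"))
```

A packet for 192.168.1.70 matches both the default route and node 2's block; the longer prefix wins, so it goes to 172.16.0.12.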

At first glance, the options are rather frightening, especially in terms of their effect and security. However, Calico does not need a connection to the carrier back end, the very heavily protected part of the Internet routing infrastructure, so the security risk is only apparent.

Rules for the Network

One important aspect of Kubernetes is the ability to share clusters, that is, to set up a common cluster for several teams and possibly even for the development, testing, and production stages. Listings 3 and 4 show typical configurations. Listing 3 adds an annotation to a namespace: It sets net.beta.kubernetes.io/network-policy to a JSON object that sets ingress isolation to DefaultDeny. As a result, pods in this namespace accept no incoming connections unless a network policy explicitly allows them; in particular, pods in other namespaces can no longer reach them. If you want to restrict access further, isolate not only the namespaces but also the pods within a namespace.

Listing 3: Annotation of a Namespace

```yaml
[...]
kind: Namespace
apiVersion: v1
metadata:
  annotations:
    net.beta.kubernetes.io/network-policy: |
      {
        "ingress": {
          "isolation": "DefaultDeny"
        }
      }
[...]
```
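How a network layer might read the annotation from Listing 3 can be sketched in Python. The function name is hypothetical, and the namespace is represented as a plain dictionary of the kind a YAML parser would return; note that the annotation value is itself a JSON string that has to be parsed a second time.

```python
import json

ANNOTATION = "net.beta.kubernetes.io/network-policy"


def ingress_isolated(namespace):
    """True if the namespace carries the annotation from Listing 3 with
    ingress isolation set to DefaultDeny."""
    annotations = namespace.get("metadata", {}).get("annotations") or {}
    raw = annotations.get(ANNOTATION)
    if raw is None:
        return False
    policy = json.loads(raw)  # the annotation value is a JSON document
    return policy.get("ingress", {}).get("isolation") == "DefaultDeny"


listing3 = {
    "kind": "Namespace",
    "apiVersion": "v1",
    "metadata": {
        "annotations": {ANNOTATION: '{"ingress": {"isolation": "DefaultDeny"}}'}
    },
}
print(ingress_isolated(listing3))
```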

Listing 4 describes the policy for pods with the db role, in which a Redis database runs. It only allows incoming (ingress) TCP traffic on port 6379, and only from two sources: pods in namespaces labeled project: myproject, and pods labeled role: frontend in the policy's own namespace (default). Because the two entries under from are separate list items, either one suffices. All other pods are denied access. If you take a closer look at the definition, you will see significant similarities with familiar packet filters such as iptables. Unlike a classic packet filter, however, these rules have to keep pace with pods and containers that come and go far more frequently than hosts.

Listing 4: Network Policy

```yaml
[...]
apiVersion: extensions/v1beta1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      role: db
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          project: myproject
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
[...]
```
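The rules of Listing 4 become clearer if you evaluate them by hand. The following Python sketch is a deliberately simplified model, not the code of any network layer: Pods are plain dictionaries with hypothetical namespace and labels keys, and only a single policy is considered. It shows the matchLabels semantics and the fact that the entries under from are alternatives.

```python
def matches(selector, labels):
    """matchLabels semantics: every key/value pair of the selector must be
    present in labels; an empty selector matches everything."""
    return all(labels.get(key) == value for key, value in selector.items())


def peer_matches(peer, src, policy_ns, namespace_labels):
    """Check one entry of a `from` list against the source pod."""
    if "namespaceSelector" in peer:
        # Selects by the labels of the source pod's namespace, not its name
        ns_labels = namespace_labels.get(src["namespace"], {})
        return matches(peer["namespaceSelector"].get("matchLabels", {}), ns_labels)
    if "podSelector" in peer:
        # A podSelector on its own only covers pods in the policy's namespace
        return src["namespace"] == policy_ns and matches(
            peer["podSelector"].get("matchLabels", {}), src["labels"])
    return False


def ingress_allowed(policy, src, dst, protocol, port, namespace_labels):
    """True if this single policy admits the connection from src to dst."""
    policy_ns = policy["metadata"]["namespace"]
    spec = policy["spec"]
    if dst["namespace"] != policy_ns or not matches(
            spec["podSelector"].get("matchLabels", {}), dst["labels"]):
        return False  # the policy does not select the destination pod
    for rule in spec.get("ingress", []):
        peers, ports = rule.get("from"), rule.get("ports")
        # A missing or empty list means "any source" or "any port"
        peer_ok = not peers or any(
            peer_matches(p, src, policy_ns, namespace_labels) for p in peers)
        port_ok = not ports or any(
            p.get("protocol", "TCP") == protocol and p.get("port") in (None, port)
            for p in ports)
        if peer_ok and port_ok:
            return True
    return False


# Listing 4 as a Python dictionary
policy = {
    "metadata": {"name": "test-network-policy", "namespace": "default"},
    "spec": {
        "podSelector": {"matchLabels": {"role": "db"}},
        "ingress": [{
            "from": [
                {"namespaceSelector": {"matchLabels": {"project": "myproject"}}},
                {"podSelector": {"matchLabels": {"role": "frontend"}}},
            ],
            "ports": [{"protocol": "TCP", "port": 6379}],
        }],
    },
}
namespace_labels = {"default": {}, "team-a": {"project": "myproject"}, "team-b": {}}
db = {"namespace": "default", "labels": {"role": "db"}}
frontend = {"namespace": "default", "labels": {"role": "frontend"}}
print(ingress_allowed(policy, frontend, db, "TCP", 6379, namespace_labels))
```

In this model, any pod in the hypothetical namespace team-a (labeled project: myproject) gets through regardless of its own labels, whereas a frontend pod in the unlabeled namespace team-b does not.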

When a network layer such as the previously mentioned Calico learns of a change in the container life cycle through the Container Network Interface, it generates the corresponding iptables rules and routes and applies them. The separation of responsibilities (separation of concerns) is important here: Kubernetes merely provides the abstraction; the network layer handles the implementation.

Ingress

Here, too, Kubernetes offers only the framework, whereas an ingress controller does the actual work. Controllers based on HAProxy [16], the Nginx reverse proxy [17], and F5 hardware [18] are available. In an earlier article [19], I describe the general concepts behind Kubernetes services.

Ingress extends these concepts significantly. Whereas the built-in service only allows simple round-robin load balancing, an external controller pulls out all the stops and can conjure up external public hosts with arbitrary reachable URLs, arbitrary load balancer algorithms, SSL termination, or virtual hosting out of thin air.

Listing 5 shows a simple example of a path-based rule. For requests to the host foo.bar.com, it routes everything under /foo to the service s1 and everything under /bar to the service s2. Listing 6 shows host-based rules, which evaluate the HTTP Host header: Requests for foo.bar.com go to s1, and requests for bar.foo.com go to s2.

Listing 5: A Path-Based Rule

```yaml
[...]
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test
spec:
  rules:
  - host: foo.bar.com
    http:
      paths:
      - path: /foo
        backend:
          serviceName: s1
          servicePort: 80
      - path: /bar
        backend:
          serviceName: s2
          servicePort: 80
[...]
```

Listing 6: A Host-Based Rule

```yaml
[...]
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test
spec:
  rules:
  - host: foo.bar.com
    http:
      paths:
      - backend:
          serviceName: s1
          servicePort: 80
  - host: bar.foo.com
    http:
      paths:
      - backend:
          serviceName: s2
          servicePort: 80
[...]
```
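How a controller might map a request onto the rules of Listings 5 and 6 can be sketched in Python. The matching logic here is an assumption for illustration: exact comparison of the Host header (assumed to arrive without a port) and path-prefix matching on segment boundaries. Real ingress controllers differ in these details.

```python
def route(rules, host, path):
    """Return (serviceName, servicePort) for a request, or None."""
    for rule in rules:
        # A rule without a host applies to every host
        if rule.get("host") not in (None, host):
            continue
        for entry in rule["http"]["paths"]:
            prefix = entry.get("path", "/")
            # Match whole path segments: /foo matches /foo and /foo/x, not /foobar
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                backend = entry["backend"]
                return backend["serviceName"], backend["servicePort"]
    return None


# Listings 5 and 6 as Python data
listing5 = [{"host": "foo.bar.com", "http": {"paths": [
    {"path": "/foo", "backend": {"serviceName": "s1", "servicePort": 80}},
    {"path": "/bar", "backend": {"serviceName": "s2", "servicePort": 80}},
]}}]
listing6 = [
    {"host": "foo.bar.com", "http": {"paths": [
        {"backend": {"serviceName": "s1", "servicePort": 80}}]}},
    {"host": "bar.foo.com", "http": {"paths": [
        {"backend": {"serviceName": "s2", "servicePort": 80}}]}},
]
print(route(listing5, "foo.bar.com", "/foo/index.html"))
print(route(listing6, "bar.foo.com", "/"))
```

A rule without a path, as in Listing 6, is treated as the prefix /, so it catches every request for its host.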

If you want to provide a service with SSL/TLS support, you can only do so on port 443. Listing 7 shows how to provide an SSL certificate, which ends up in a Kubernetes secret; the certificate and the key have to be base64-encoded. To use the certificate, reference the secret in an ingress resource by entering its name in the .spec.tls[0].secretName field (Listing 8).

Listing 7: Setting up an SSL Certificate for a Service

```yaml
[...]
apiVersion: v1
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
kind: Secret
metadata:
  name: mysecret
  namespace: default
type: Opaque
[...]
```
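The data fields in Listing 7 expect base64-encoded values. A short Python sketch shows how to build such a secret from PEM data; the function name and the placeholder bytes are hypothetical. As a ready-made alternative, kubectl create secret tls creates a secret of type kubernetes.io/tls from a certificate and key file.

```python
import base64
import json


def tls_secret(name, namespace, cert_pem, key_pem):
    """Build a Secret shaped like Listing 7; data values must be base64."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


# Placeholder bytes; in practice, read the PEM files from disk
secret = tls_secret("mysecret", "default", b"<PEM certificate>", b"<PEM key>")
print(json.dumps(secret, indent=2))  # JSON is valid input for kubectl apply -f
```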

Listing 8: Using Certificate Services

```yaml
[...]
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: no-rules-map
spec:
  tls:
  - secretName: mysecret
  backend:
    serviceName: s1
    servicePort: 80
[...]
```

Pitfalls

Some of the pitfalls on the way to useful Kubernetes clusters also need to be mentioned here. With Docker, for example, Kubernetes needs to launch a pause container for each pod to create the network namespace; the process in this container does nothing else. Bootstrapping with Docker does not always work smoothly. On Red Hat systems with Calico, for example, inconsistencies with the firewalld daemon caused a number of solvable yet irritating problems.

Conclusions

Kubernetes is a rapidly evolving ecosystem that pursues many very good strategies the project has not yet fully implemented. If you are aware of the three-month release cycle and are ready to join the development work on Kubernetes, you can look forward to a system that makes developing, updating, and customizing distributed applications easier than any other distributed system.

If you do not need multitenancy (see the "Multitenancy in Kubernetes" box) and do not work with highly security-sensitive data, you can use Kubernetes now for large, distributed production applications. However, if you have other requirements in mind, you should wait one or two versions.

Listing 9: Pod with Security Context

```yaml
[...]
apiVersion: v1
kind: Pod
metadata:
  name: hello-world
spec:
  containers:
  # Specification of the Pod's containers
  - [...]
    # readOnlyRootFilesystem is a per-container setting,
    # so the security context belongs inside the container entry
    securityContext:
      readOnlyRootFilesystem: true
      runAsNonRoot: true
[...]
```