OpenStack Trove for users, sys admins, and database admins

Semiautomatic

In the wake of cloud computing and under the leadership of Amazon, a number of as-a-service resources have for several years been cornering the market previously owned by traditional IT setups. The idea is quite simple: Many infrastructure components, such as databases, VPNs, and load balancers, are only a means to an end for the enterprise.

If your web application needs a place to store its metadata, a database is usually used. However, the company that runs the application has no interest in dealing with a database. A separate server, or at least a separate virtual machine (VM) together with an operating system, would need to be set up and configured for the database. Issues such as high availability increase the complexity. A database with a known login and address that the application can connect to would work just as well, which is where Database as a Service (DBaaS) comes in.

The advantage of DBaaS is that it radically simplifies the deployment and maintenance of the relevant infrastructure. The customer simply clicks in the web interface on the button for a new database, which is configured and available shortly thereafter. The supplier ensures that redundancy and monitoring are included, as well.

A DBaaS component for OpenStack named Trove [1] has existed for about three years. Although you can integrate it into an existing OpenStack platform, Trove alone is unlikely to make you happy. If you look into the topic in any depth, you will notice that vendors, users, and database administrators have to work hand in hand to create a useful service in OpenStack on the basis of Trove.

In this article, I tackle the biggest challenges that operating Trove can cause for all stakeholders. OpenStack vendors can discover more about the major obstacles in working with Trove, and cloud users can look forward to tips for handling Trove correctly in everyday life.

Performance

Database performance, in particular, causes headaches for cloud providers for obvious reasons: Whereas databases in conventional setups are regularly hosted on their own hardware, in the cloud, they share the same hardware with many other VMs.

Storage presents an even greater challenge. A database, such as MySQL, running on real metal can connect to its local storage – usually a hard disk or fast SSD on the same computer – without a performance hit. However, VMs that run in clouds usually do not have local storage; instead, they use volumes that access network storage in the background.

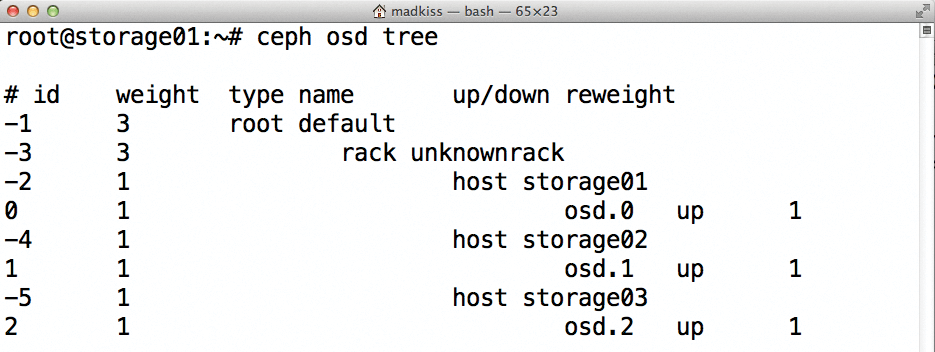

A typical example is Ceph used as a storage back end for OpenStack. Each write operation on a VM results in multiple network reads and writes: The Ceph client on the virtualization server receives the write action and passes it to the primary storage device in the Ceph cluster – that is, in Ceph-speak, its primary Object Storage Device (OSD). This primary OSD then sends the same data in a second step to as many other OSDs as defined by its replication policy (Figure 1).

Only when sufficient replicas are created in the Ceph cluster does the VM's Ceph client send confirmation that the write access was successful. The database client, which originally only wanted to change a single entry in MySQL, thus waits through several network round trips for the operation to complete successfully.
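
The write path described above can be put into rough numbers. The following Python sketch is a simplified model, not a measurement: It assumes that the primary OSD forwards the data to all secondaries in parallel and that the client is acknowledged only after every replica has committed. The function names and the microsecond values are illustrative assumptions.

```python
def replicated_write_latency_us(client_rtt_us, replica_rtt_us, commit_us, replicas=3):
    """Estimate the acknowledged latency of one write with primary-copy replication.

    Simplifying assumptions: the primary commits locally while it forwards
    the data to all secondaries in parallel, and every OSD needs the same
    time (commit_us) to persist the write.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # Time the primary needs until all copies are safely stored.
    if replicas == 1:
        cluster_us = commit_us
    else:
        cluster_us = max(commit_us, replica_rtt_us + commit_us)
    # The client additionally pays one round trip to the primary OSD.
    return client_rtt_us + cluster_us


def local_write_latency_us(commit_us):
    """A local disk only pays for the commit itself."""
    return commit_us


# Placeholder values: 200 us network round trip, 100 us device commit.
networked = replicated_write_latency_us(200, 200, 100, replicas=3)
local = local_write_latency_us(100)
print(f"network storage: {networked} us, local storage: {local} us")
```

With these placeholder values, the replicated write takes five times as long as the local one, and the difference consists entirely of network round trips.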

This problem is by no means specific to Ceph: Virtually all solutions for distributed storage in clouds have similar problems. Ceph nevertheless stands out as a bad example, because the Controlled Replication Under Scalable Hashing (CRUSH) algorithm, which calculates the primary OSD and the secondary OSDs, is particularly prone to latency.

From the provider's point of view, the problem is difficult to manage because the network imposes a hard lower limit. Ethernet has an inherent latency that can only be undercut with latency-optimized transport technologies (e.g., InfiniBand), which means the provider would have to choose a different network technology that has its own challenges.

Paths and Dead Ends

Which approaches are open to a provider to get latency under control for DBaaS? The obvious approach is not to store VMs for databases from Trove on network storage, but to run them with local storage. In the OpenStack context, this means that the VM and its hard disk do not reside in Ceph or on any other network storage medium, but directly on the local storage medium of the compute node. In such a scenario, it is advisable to start the VM on a node with SSDs, because they offer noticeable performance gains with regard to throughput and latency.

The provider would have to configure their OpenStack to do this: Typically, they would set up a separate availability zone with fast local storage and then give customers the opportunity to place Trove databases there.

However, what looks like a good idea at first glance turns out to be a horror scenario on closer inspection. A VM that has been started in this way has no redundancy at all. If the hypervisor node with the VM fails, the VM is simply not accessible. If the disk on which the VM and the database are located fails, the data is lost, and the user or provider has to resort to a backup (which, one hopes, they have created).

Even if you do not assume the horror scenario of a hardware failure, this type of setup harbors more dangers than benefits for the vendor: If a VM only exists locally, it cannot be moved to another host without downtime. Yet moving VMs between hosts is an everyday requirement in a cloud with hundreds of nodes, because the individual servers would otherwise be virtually impossible to maintain. No matter how you look at it, VMs located on local storage of the individual hypervisor nodes are definitely not a good idea.

Evaluation Is Everything

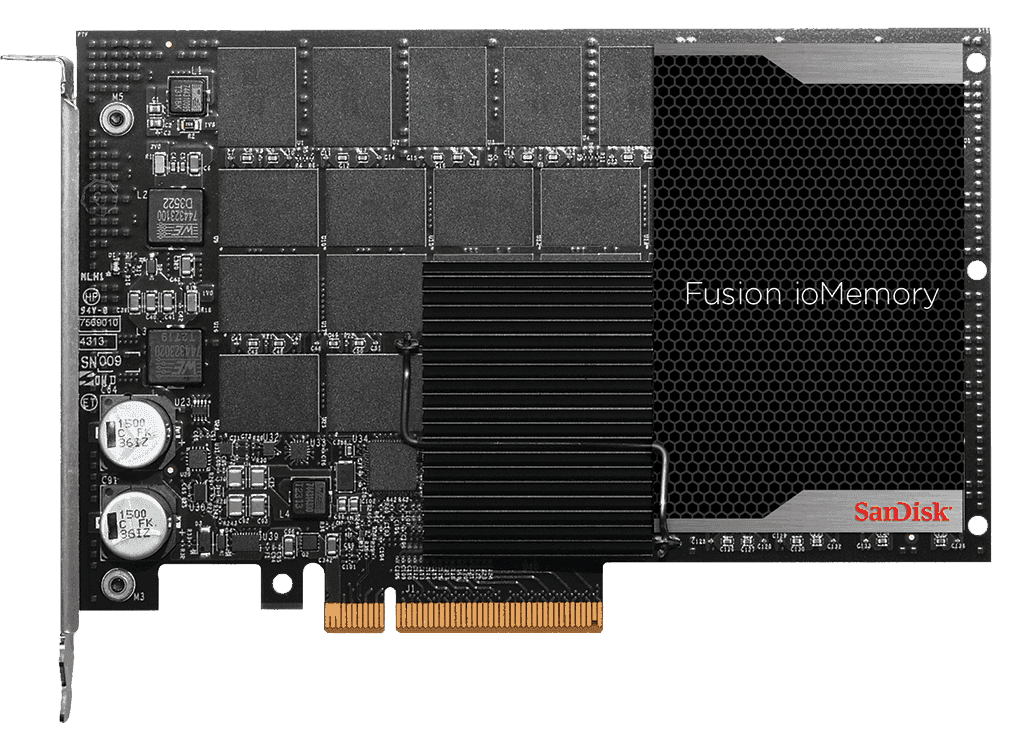

Despite all the disadvantages of local storage, it is also clear that the latency of local storage can never be achieved with network-based storage, especially in the case of sequential writing. Anyone used to using MySQL on Fusion ioMemory (Figure 2) will almost always experience an unpleasant surprise when switching to a DBaaS database in the cloud.

Cloud providers are practically always caught in one area of conflict: What does the setup need to cover? Until that question has a robust answer, it is virtually impossible to find a suitable storage solution for databases in the cloud.

Many setups for cloud customers, especially small ones, impose minimal requirements on the database, so network-based storage would be perfectly fine. However, anyone who wants to run large setups with thousands of simultaneous database requests is in trouble. As a first step, the supplier therefore has to analyze the customer's needs to provide the basis for further planning.

Storage Alternatives

If you use Ceph and are dissatisfied with its performance for databases, you should look for alternatives. Solutions such as Quobyte [2] and StorPool [3] advertise significantly lower latencies, without having to give up on Ethernet and without having to replace the existing storage. The previously described solution, with its own availability zone for the nodes with other storage, can be integrated into an existing environment. For the provider, however, this means a good deal of effort, because they need to evaluate, test, and develop up front.

The Ceph developers are aware of their high latency problems and are already working on a solution: BlueStore is set to do everything better by trying to solve the problem at the physical storage level. Thus far, Ceph has relied on storing the binary objects on an XFS filesystem.

Originally Inktank, the company behind Ceph, promoted Btrfs, but because it is still not up to full speed, they had to make do with XFS. XFS is hardly suitable for the typical Ceph use case, because OSDs virtually never need large parts of the POSIX specification; POSIX compliance comes at the expense of major performance hits.

BlueStore [4] is accordingly a new storage back end or on-disk format for data that resides directly on the individual block storage devices and should perform significantly better than XFS. The next long-term support version of Ceph is supposed to support BlueStore for production use, but users will have to wait.

If Worst Comes to Worst

If you do not have an alternative network storage solution, you will have to come back to local storage, but you definitely will want to mitigate the worst side effects. For example, a single-node setup could be used, with individual nodes running VMs with local storage that is replicated in the background, so that it can be switched over to another host during operations.

DRBD 9, with its n-node replication, would be a good candidate. However, the DRBD driver for OpenStack Cinder does not currently offer the ability to switch a Cinder volume to the primary node on which the VM actually runs. The result would be that, although access to the storage device works, the data ultimately would be returned via the network.

Other variants exist in the form of locally connected Fibre Channel devices. Although they can handle the replication themselves, a tailor-made setup for the individual use case is absolutely essential.

Replication and Multimaster Mode

In principle, replication in MySQL is a useful way of distributing load across multiple nodes and ensuring redundancy. However, if database-level replication takes place between three MySQL instances that write to Ceph volumes in the background, the previously described latency effect multiplies – not a very useful construct.

On the other hand, it would be possible to organize replication at the MySQL level if it takes place between three VMs that have local storage, because then the maximum latency would be almost identical to Ethernet latency between the physical hosts – and definitely much lower than when accessing distributed network storage.

Multinode setups in MySQL are relevant in the context of load distribution. The typical master-slave setup, as used in databases on real metal, can also be used in a DBaaS environment, and, fittingly, Trove supports this type of setup out of the box (see the "Handling Your Own Images" box).

Clustered

When you start a database with Trove, you can determine whether the database should run on a single VM or in a cluster. This works for all database drivers in Trove that support replication of the respective database (i.e., currently, MongoDB, MySQL, Redis, Cassandra, and others, but not PostgreSQL).

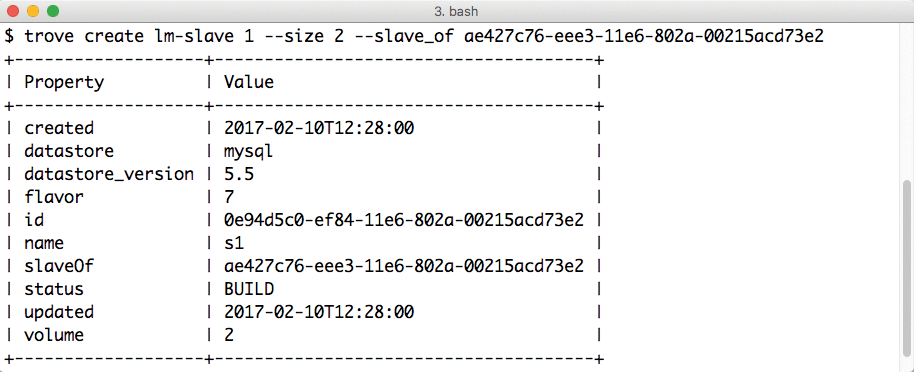

With MySQL, the approach from the admin's point of view is simple: First, start a single MySQL database, and then add a slave database to it. The Trove agent running on the VM started by Trove then automatically configures the database. In this way, the cluster is created without any further intervention by the user. The command:

trove create lm-slave 1 --size 2 --slave_of ae427c76-eee3-11e6-802a-00215acd73e2

would add a slave node named lm-slave of flavor 1 to the database with the ID ae427c76-eee3-11e6-802a-00215acd73e2 (Figure 3).
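
If you want to script this step, one option is to assemble the call in Python and hand it to the command-line client. The sketch below only builds and prints the argument list from the example above. The helper name is hypothetical, and because option names can differ between client releases, it is worth checking the output of trove help create before relying on --slave_of.

```python
import shlex


def trove_create_slave_cmd(name, flavor_id, size_gb, master_id):
    """Build the argument list for adding a slave to an existing instance."""
    return [
        "trove", "create", name, str(flavor_id),
        "--size", str(size_gb),
        "--slave_of", master_id,
    ]


cmd = trove_create_slave_cmd(
    "lm-slave", 1, 2, "ae427c76-eee3-11e6-802a-00215acd73e2"
)
# Print the command for review; pass cmd to subprocess.run() to execute it.
print(shlex.join(cmd))
```

Keeping the arguments in a list rather than a single string avoids quoting problems if instance names ever contain spaces.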

If you are using a fairly recent cloud with OpenStack Mitaka, you could even use Galera for clustering with MySQL (see also the article on MySQL group replication [6]). Trove then starts several MySQL instances and prepares them in such a way that Galera creates a multimaster database.

Such a setup can easily be combined with local storage on hypervisor nodes: Because the data then exists several times on different hosts, it does not matter if a single host fails or temporarily disappears from the cluster for maintenance. Galera replicates synchronously, however, so at least one network latency is incurred and passed on to the client. Even so, this latency is significantly lower than for Ceph.
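
The trade-off between the variants discussed in this section can be illustrated with a back-of-the-envelope comparison. The sketch assumes that a synchronously replicated commit costs the local storage write plus one round trip to the peers; all microsecond values are placeholders rather than measurements.

```python
def commit_latency_us(storage_write_us, peer_rtt_us=0):
    """Storage write plus, for synchronous replication, one peer round trip."""
    return storage_write_us + peer_rtt_us


LOCAL_SSD_WRITE_US = 100  # placeholder: write to a local SSD
CEPH_WRITE_US = 500       # placeholder: acknowledged write to a Ceph volume
PEER_RTT_US = 200         # placeholder: Ethernet round trip between hosts

variants = {
    "single instance on Ceph": commit_latency_us(CEPH_WRITE_US),
    "replicated cluster on Ceph": commit_latency_us(CEPH_WRITE_US, PEER_RTT_US),
    "replicated cluster on local SSD": commit_latency_us(LOCAL_SSD_WRITE_US, PEER_RTT_US),
}

# List the variants from fastest to slowest commit.
for name, latency in sorted(variants.items(), key=lambda item: item[1]):
    print(f"{name}: {latency} us")
```

Under these assumptions, the replicated cluster on local SSDs commits faster than even a single instance on network storage, while replication on top of Ceph volumes pays both penalties.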

Backups and Snapshots

Creating snapshots or backups is part of everyday operations when you work with databases. A running database is a good thing to have, but the gap between being happy and losing all your data is narrow. Data is often destroyed by overly eager delete commands, and sometimes the content of the database breaks down completely, even without fat fingering. For example, imagine a software update that also changes the database schema, goes wrong, and leaves only garbage in the database. In such cases, a good solution is necessary, and a backup is critical to the admin's survival.

The number of tools that can be used to back up and restore different databases is almost unmanageable. Because a user running Trove on a VM cannot actually get to the database, some of the usual candidates are already ruled out. Therefore, Trove developers have given their software its own backup feature: Backups can be launched directly from the VM via the OpenStack API and stored centrally. The restore follows the same path. The following example demonstrates the MySQL-based approach.

Backup Database

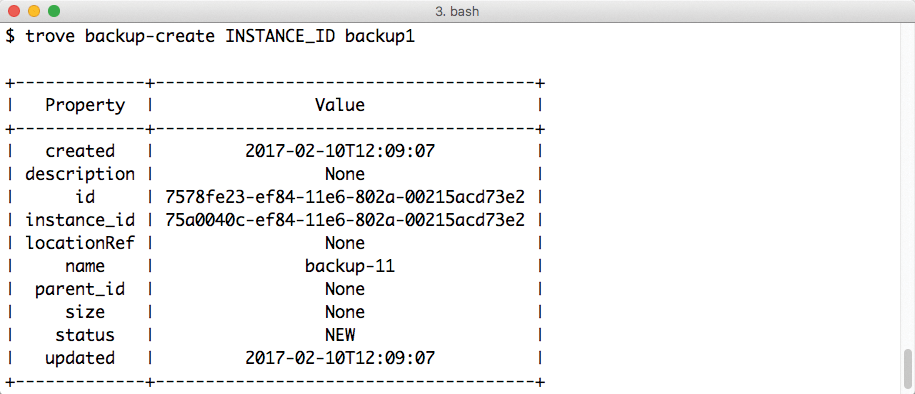

First, the user needs the ID of the Trove instance from which a backup is to be created. At the command line, the trove list command displays all running Trove instances belonging to the respective user. The entries in the id field are important. As soon as the ID of the database from which a backup is to be created is known, you then run the

trove backup-create <ID> backup-1

command to achieve the desired results (Figure 4). This command then displays the backup it created. If the entry in the status field reads COMPLETED, everything worked.
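
Larger backups do not reach COMPLETED immediately, so scripts typically poll the status field. The following sketch shows one way to do so in Python. The get_status callable is hypothetical: It stands for whatever you use to read the status (e.g., a wrapper around the trove client). The FAILED state and the intermediate states in the demonstration are assumptions as well.

```python
import time


def wait_for_backup(get_status, timeout_s=600, interval_s=5, sleep=time.sleep):
    """Poll get_status() until the backup reports COMPLETED.

    get_status is a caller-supplied function (hypothetical here) that
    returns the current content of the backup's status field as a string.
    """
    waited = 0
    while True:
        status = get_status()
        if status == "COMPLETED":
            return status
        if status == "FAILED":  # assumed name of the error state
            raise RuntimeError("backup failed")
        if waited >= timeout_s:
            raise TimeoutError(f"backup still {status} after {waited} s")
        sleep(interval_s)
        waited += interval_s


# Demonstration with canned states instead of a real cloud.
states = iter(["NEW", "BUILDING", "COMPLETED"])
result = wait_for_backup(lambda: next(states), sleep=lambda s: None)
print(result)
```

Passing the sleep function as a parameter keeps the loop testable without real waiting.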

For successful backups, the Trove Guest Agent must be configured properly on the VM. More specifically, /etc/trove/trove-guestagent.conf needs to state where the Guest Agent should store the backups. The default is in OpenStack Swift, the cloud environment's object store.
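
To check where a given image will send its backups, you can read the relevant settings out of the Guest Agent configuration. The following sketch parses an inline sample with Python's configparser module. The option names storage_strategy and backup_swift_container are assumed from the sample configuration shipped with Trove and may differ in your release.

```python
import configparser

# Inline stand-in for /etc/trove/trove-guestagent.conf (assumed option names).
SAMPLE_CONF = """
[DEFAULT]
storage_strategy = SwiftStorage
backup_swift_container = database_backups
"""


def backup_target(conf_text):
    """Return (storage strategy, Swift container) from Guest Agent settings."""
    # Disable interpolation so that '%' characters in other options are harmless.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    defaults = parser.defaults()
    return defaults.get("storage_strategy"), defaults.get("backup_swift_container")


strategy, container = backup_target(SAMPLE_CONF)
print(f"strategy={strategy}, container={container}")
```

On a real guest, you would read the file contents instead of the inline sample and pass them to the same function.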

If you want the backups to be placed elsewhere, as a customer, you will either need to give your VM a modified Guest Agent configuration via the Nova metadata or ask the provider to provide a Trove image. The provider should do the groundwork, anyway, and offer a Trove image in Glance that is adapted to the respective environment.

Restoring the Backup

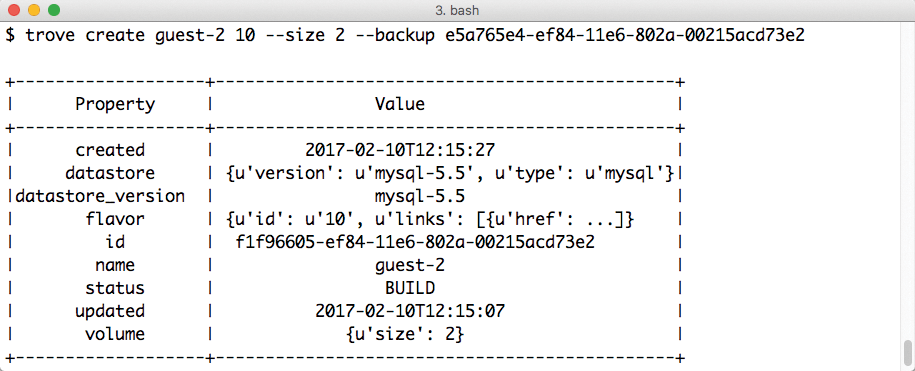

"Nobody needs backups; everyone needs restores" is a platitude – both obvious and inevitable: The best of all backups is worthless if it cannot be restored properly. Luckily, the Trove developers have considered the restore issue and offer the option of restoring a database directly via the Trove API (Figure 5). This works differently, however, than users may be accustomed to from classical setups.

In the first step, the user starts a completely new database and tells Trove to restore the backup. In the second step, the server automatically assigns the (public) IP address used previously by the old database to the new database. In the last step, the admin deletes the old database so that it completely disappears from Trove. Clients then reconnect to a database with valid data, without the user having to change anything in terms of the configuration of their components.

The command for starting a database from an existing backup would look like this:

trove create guest-2 10 --size 5 --backup <ID>

You can use trove delete <ID> to delete the old database after completing the migration.

Although backups in Trove are neatly implemented, things do not look as friendly in the case of snapshots: Support for snapshots is currently completely absent in Trove, but admins can at least resort to the snapshot feature in Nova, which creates a snapshot of the entire VM, not just the database.

Conclusions

Although the individual Trove components can be integrated relatively easily into an existing OpenStack platform, admins still need to be prepared for a huge amount of work: As a platform operator, even creating the required images for Trove is not as easy as one would like. Storage is of particular importance: Operators would do well to define their own needs very precisely. However, from the user's point of view, Trove is fun, if the platform operator does their work well. Trove significantly facilitates the task of managing your VMs for databases.