Professional virtualization with RHV4

Polished

Red Hat Virtualization (RHV) originally set out to make professional virtualization at the enterprise level more affordable, in particular for small enterprises, because the monetary barriers to entry are pretty high if you look at the market leader VMware. RHV4 is available both as a standalone product and as part of Red Hat Cloud Suite, which comprises OpenStack Platform, OpenShift, CloudForms, Satellite, and Ceph storage.

In conjunction with GlusterFS and Ceph storage solutions and integration of OpenShift containers and OpenStack Platform, RHV is a strategic product that can play an important role in transforming IT in many companies. Although still lagging well behind Microsoft, VMware, and Citrix, Red Hat likes to emphasize that its own virtualization solution is finding its way into many businesses via the OpenStack detour.

However, this fact was misunderstood by many companies. Not infrequently, small and medium-sized enterprises switched from VMware to OpenStack for classic virtual server workloads, seeing it as a cost-effective alternative to vSphere. OpenStack, though, is a private cloud solution and far too complex for this purpose. According to Red Hat, many such companies are now coming back to RHV after some painful experiences.

In this article, I describe the setup of a small test scenario that investigates whether the elaborate setups required in earlier versions of RHV have become easier, and I take a look at the most important new features, including faster performance, a new programming API, support for OpenStack and containers, the new dashboard for Red Hat Virtualization Manager (RHV-M), and the Cockpit-based option for running the Machine Manager more or less automatically as a hosted engine.

RHV4 Architecture

Setting aside the underlying technical data of the hypervisor, in which KVM, Xen, vSphere, and Hyper-V traditionally do not differ a great deal, integrated virtualization solutions are more about the trappings: additional software features (live migration, clustering, etc.), external interfaces, and the ecosystem of partner products. What RHV4 offers all told can be gathered from the Feature Guide [1].

In terms of architecture, the solution remains unchanged, with the web-based RHV-M, which is based on the in-house JBoss Middleware as an application server, and the hypervisor nodes based either on Red Hat Virtualization Host (RHVH) or Red Hat Enterprise Linux (RHEL). Added to this are components such as the Simple Protocol for Independent Computing Environments (SPICE), which plays a central role in RHV, in particular for virtual desktop infrastructure (VDI) use, and the Virtual Desktop Server Manager (VDSM) broker component.

All hypervisor nodes communicate with the management engine (ovirt-engine) using a stack from Libvirt and VDSM, also known as the "oVirt host agent" in the context of oVirt. The guest systems use the "oVirt guest agents" written in Python to tell ovirt-engine, for example, which applications they are running, how much memory the applications need, or what IP address they have. In RHV, the hypervisor operation-optimized kernel with its minimal footprint is RHVH, and the management system, including the resource manager and management interface, is RHV-M. Currently RHV-M only runs on RHEL 7.2 and requires JBoss EAP 7, Java OpenJDK 8, and PostgreSQL 9.2.

Simplified Deployment

Rolling out RHV is far easier in version 4. As a minimum, you need one hypervisor node (but preferably three or more for cluster functions), which can be implemented on either the minimal-footprint RHVH or RHEL. The former option was less popular with RHV insiders up to and including version 3.6 because of its heavily restricted shell. In RHV4, RHVH is more convenient in this regard, although its footprint has again shrunk compared with the previous version. After downloading the ISO image, you can complete the basic install for RHVH with Anaconda, which is a matter of a few mouse clicks. Next, register with the subscription manager on the Red Hat Content Delivery Network (CDN). An RHVH always automatically subscribes to a freely available RHVH entitlement. Now, you just need to enable the rhel-7-server-rhvh-4-rpms repository:

$ subscription-manager repos --enable=rhel-7-server-rhvh-4-rpms

Registering the installation against the CDN is now a far more convenient experience than in the previous version; you can also do this in the new Cockpit user interface. To register the host, simply click on Tools | Subscriptions | Register System.

Installing RHV-M

You also need a Manager (RHV-M) that is based on RHEL and runs either on a physical machine or as a virtual machine (VM). To install RHV (i.e., to "soup up" a standard RHEL machine and create an RHV-M host), you can use the comprehensive engine-setup script. If you have bought an RHV subscription, you only need to subscribe to the corresponding channels with your RHEL VM (or a physical machine) to install the required RHV packages. If you set up a fresh RHEL machine, first register as usual on the CDN and choose the appropriate subscription. The

subscription-manager list --available

command lists the available subscriptions. Now, attach the desired subscription via the pool ID by typing

subscription-manager attach --pool=pool_id

and subscribe to the required channels as follows:

$ subscription-manager repos --enable=rhel-7-server-rpms
$ subscription-manager repos --enable=rhel-7-server-supplementary-rpms
$ subscription-manager repos --enable=rhel-7-server-rhv-4.0-rpms
$ subscription-manager repos --enable=jb-eap-7-for-rhel-7-server-rpms
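The four channels can also be enabled in one call, because subscription-manager repos accepts the --enable option more than once. The following is a minimal sketch rather than part of the original procedure: print_enable_command is a hypothetical helper that only prints the combined command so that you can review it before running it yourself.

```shell
# Hypothetical helper: build one subscription-manager call that enables
# every repository passed as an argument, and print it for review.
print_enable_command() {
  cmd="subscription-manager repos"
  for repo in "$@"; do
    cmd="$cmd --enable=$repo"
  done
  printf '%s\n' "$cmd"
}

# The repository IDs are the ones listed in the article.
print_enable_command \
  rhel-7-server-rpms \
  rhel-7-server-supplementary-rpms \
  rhel-7-server-rhv-4.0-rpms \
  jb-eap-7-for-rhel-7-server-rpms
```

Printing instead of executing keeps the sketch harmless on machines that are not registered with the CDN.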

Finally, install the Manager with yum install rhevm.

Cockpit for the Manager

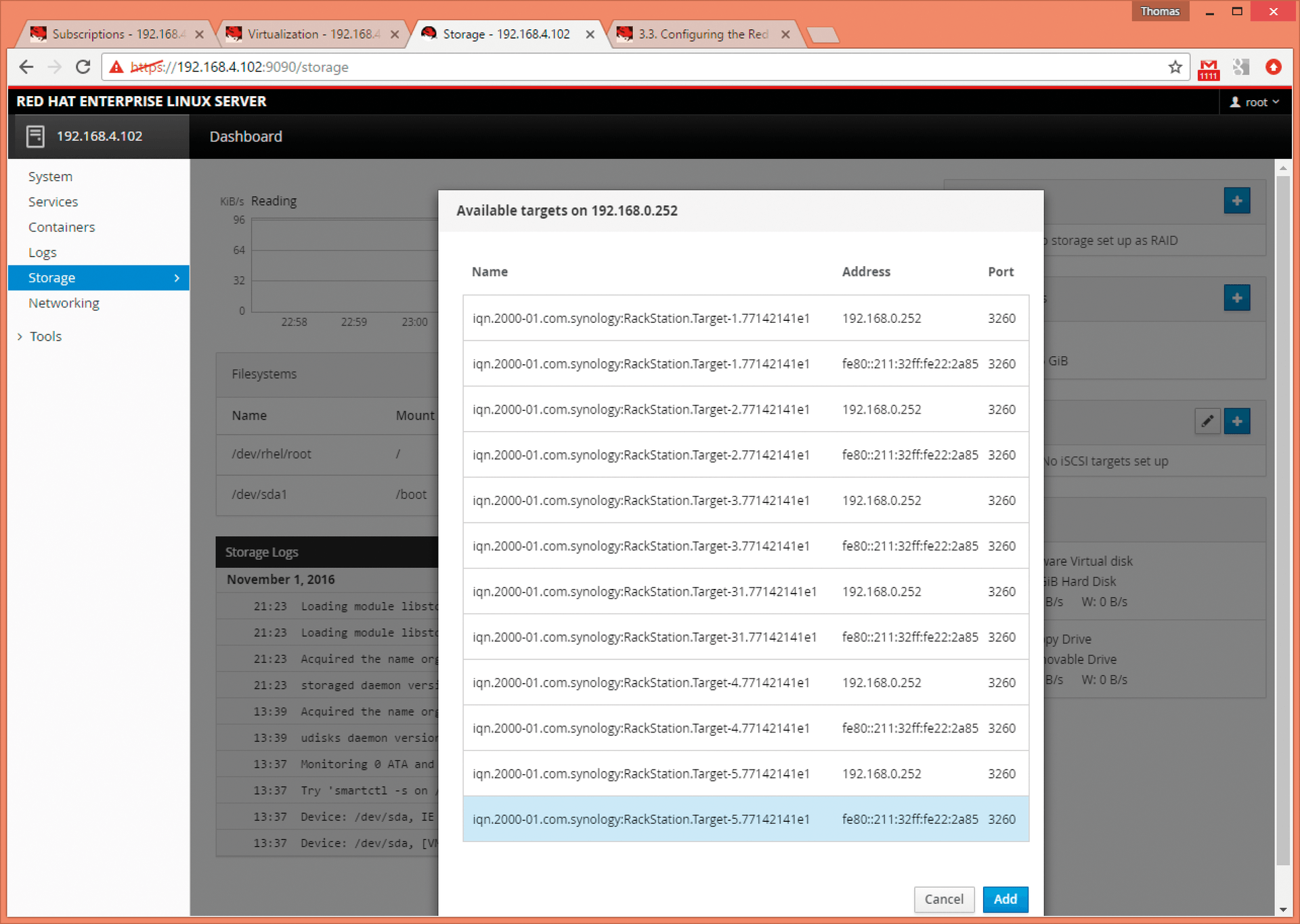

The Cockpit interface installed by default on the RHVH nodes is an interesting option for the Machine Manager, which you can use to set up additional storage devices such as iSCSI targets or additional network devices such as bridges, bonds, or VLANs. In Figure 1, I gave the Manager an extra disk in the form of an iSCSI logical unit number (LUN).

To install the Cockpit web interface, you just need to enable two repos, rhel-7-server-extras-rpms and rhel-7-server-optional-rpms, and then install the software by typing yum install cockpit. Cockpit listens on port 9090, which you open as follows unless you have disabled firewalld:

$ firewall-cmd --add-port=9090/tcp
$ firewall-cmd --permanent --add-port=9090/tcp

Now log in to the Cockpit web interface as root.

Setting Up oVirt Engine

To install and configure the oVirt Engine, enter:

$ yum install ovirt-engine-setup
$ engine-setup

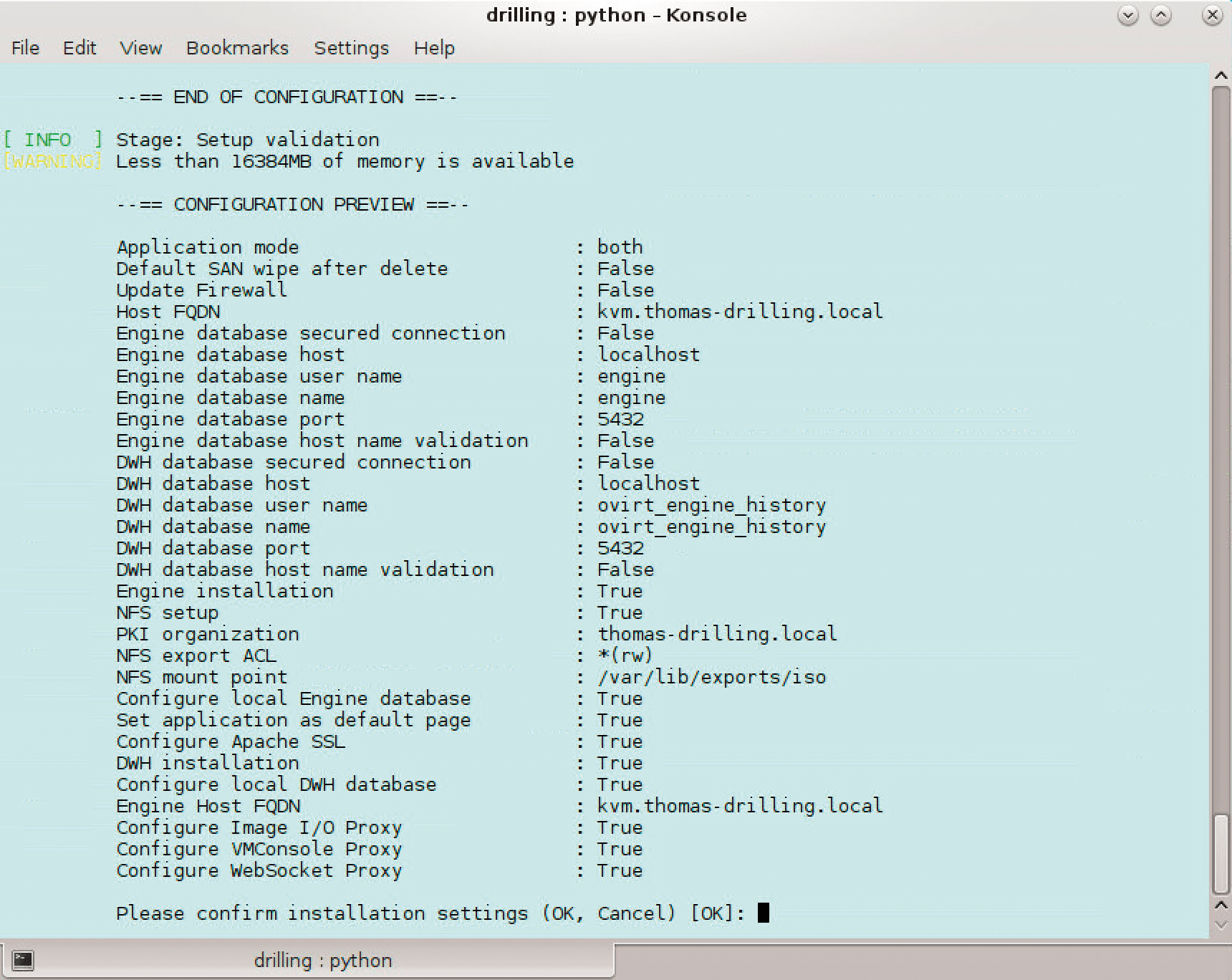

The engine-setup command takes you to a comprehensive command-line interface (CLI)-based wizard that guides you through the configuration of the virtualization environment and should be no problem for experienced Red Hat, vSphere, or Hyper-V admins, because the wizard suggests functional and practical defaults (in parentheses). Nevertheless, some steps differ from the previous version. For example, step 3 lets you set up an Image I/O Proxy and expands the Manager with a convenient option for uploading VM disk images to the desired storage domains.

Subsequently setting up a WebSocket proxy server is also recommended if you want your users to connect with their VMs via the noVNC or HTML5 console. The optional setup of a VMConsole Proxy requires further configuration on client machines. In step 12 Application Mode, you can decide between Virt, Gluster, and Both, where the latter offers the greatest possible flexibility. Virt application mode only allows the operation of VMs in this environment, whereas Gluster application mode also lets you manage GlusterFS via the administrator portal. The public key infrastructure (PKI) configuration wizard continues after the engine configuration and guides you through the setup of an NFS-based ISO domain under /var/lib/export/iso on the Machine Manager.
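If you expect to repeat the installation in a test environment, the answers given to the wizard can be recorded and replayed. The sketch below assumes the --generate-answer and --config-append options of engine-setup; the file path, the DRY_RUN switch, and the function names are illustrative and not taken from the article.

```shell
# Hypothetical location for the recorded answers; adjust as needed.
ANSWER_FILE="${ANSWER_FILE:-/root/rhvm-answers.conf}"

# Print the command when DRY_RUN=1; otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# First run: walk through the wizard interactively and save the answers.
record_setup() {
  run engine-setup --generate-answer="$ANSWER_FILE"
}

# Later runs: feed the saved answers back into the wizard.
replay_setup() {
  run engine-setup --config-append="$ANSWER_FILE"
}
```

Setting DRY_RUN=1 before calling record_setup or replay_setup shows the command without touching the system. The answer file may contain passwords, so it should be readable by root only.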

After displaying the inevitable summary page (Figure 2), the oVirt Engine setup completes; after a short while, you will be able to access the Manager splash page at https://rhvm-machine/ovirt-engine/ using the account name admin and a password, which you hopefully configured in the setup. From here, the other portals, such as the admin portal, the user portal, and the documentation, are accessible.

You now need to configure a working DNS, because access to all portals relies on the fully qualified domain name (FQDN). Because a "default" data center object exists, the next step is to set up the required network and storage domains, add RHVH nodes, and, if necessary, build failover clusters.
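Because portal access depends on the FQDN, a quick check of name resolution on the Manager and on each host can save some troubleshooting later. The following is a minimal sketch: is_fqdn and check_host are hypothetical helpers, and rhvm.example.com in the usage note is a placeholder name.

```shell
# Return success if the name contains at least one dot, a rough test
# for "fully qualified" rather than a full syntax check.
is_fqdn() {
  case "$1" in
    ?*.?*) return 0 ;;
    *)     return 1 ;;
  esac
}

# Report whether a name is fully qualified and resolves through the
# system resolver (getent consults /etc/hosts and DNS per nsswitch.conf).
check_host() {
  name=$1
  if ! is_fqdn "$name"; then
    echo "$name: not fully qualified"
    return 1
  fi
  if ! getent hosts "$name" >/dev/null; then
    echo "$name: does not resolve"
    return 1
  fi
  echo "$name: ok"
}
```

Calling check_host rhvm.example.com on every machine involved shows whether they all agree on the Manager's name.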

Host, Storage, and Network Setup

For the small nested setup in the example here, I first used iSCSI and NFS as shared storage; however, later I will look at how to access Gluster or Ceph storage. For now, it makes sense to set up two shared storage repositories based on iSCSI disks or NFS shares. A 100GB LUN can accommodate, for example, the VM Manager if you are using the Hosted Engine setup described below. Because I am hosting the VM Manager on ESXi, I avoided a doubly-nested VM, even if it is technically possible. As master storage for the VMs, I opted for a 500GB thin-provisioned iSCSI LUN.

Rolling Out RHV-M with Cockpit

The Machine Manager can now be rolled out in RHV as an appliance directly from the dashboard of an RHVH node; this is known as the Hosted Engine setup. Simply click on Virtualization | Hosted Engine | Hosted Engine Setup Start, which automatically deploys RHV-M as a VM on the hypervisor.

Red Hat provides the appliance as a download; you can accelerate the deployment if you install the Open Virtual Appliance (OVA) package on the RHVH host beforehand. The Hosted Engine setup automatically uses a locally available OVA and downloads it from the Internet only if it does not find one. The Hosted Engine setup also supports a hyperconverged setup on the basis of GlusterFS.

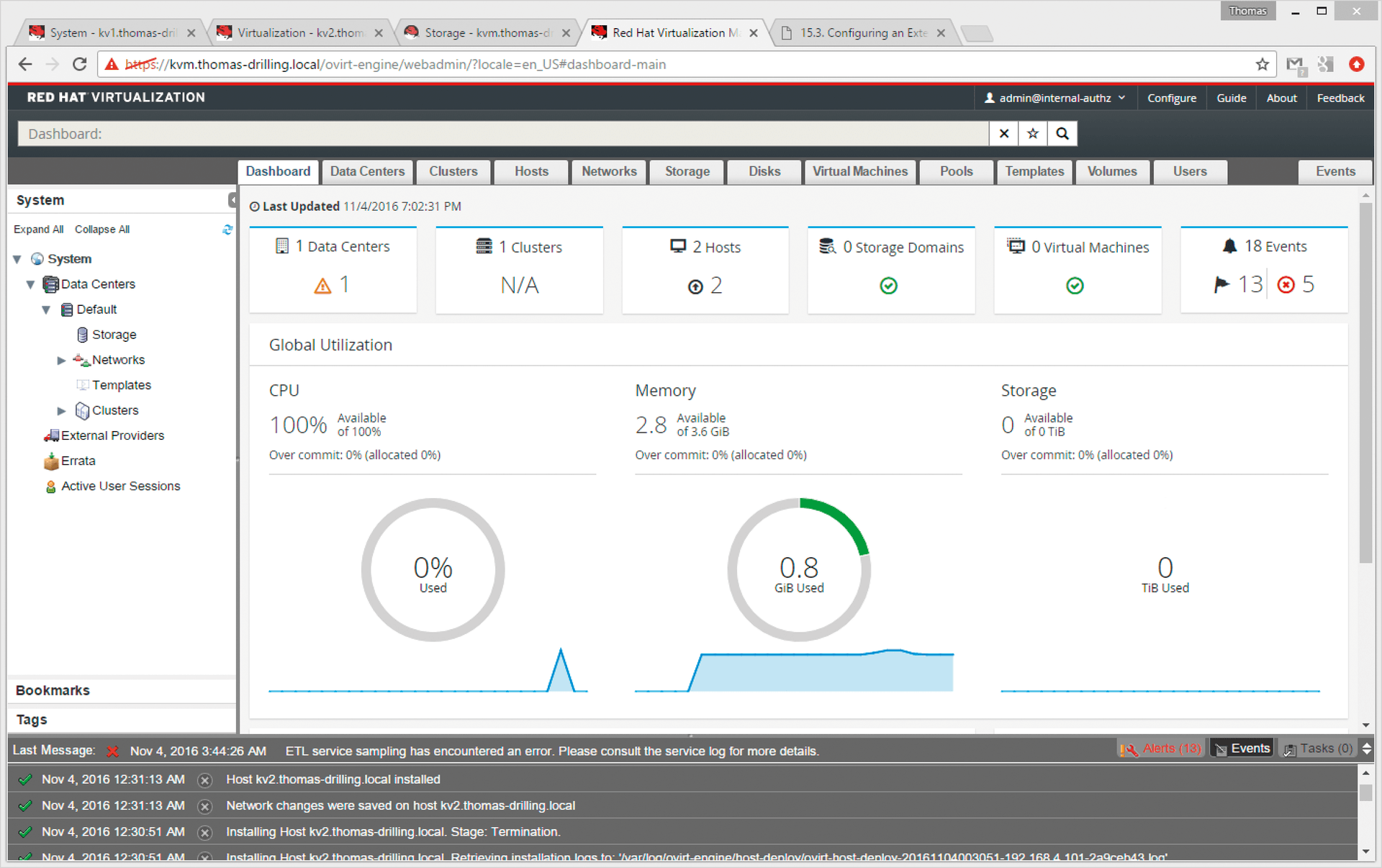

Administrator Portal

Once the Manager and host are set up, the next step is to access the Administrator Portal with the admin account to complete the setup, including configuring clusters, hosts, storage, and networks. The first thing you will notice is that Red Hat has expanded the system dashboard – not to be confused with the dashboard in Cockpit – which provides a quick overview of the system health state. You can access it in the first tab on the left (Figure 3).

By clicking New in the Hosts tab, you can add hosts. Alternatively, you can use Foreman/Satellite very conveniently to fetch the required SSH fingerprint by pressing Fetch in Enter host fingerprint or fetch manually from host, assuming that the DNS/FQDN name is correct. The new host then appears in the Status column with the status Installing, and you can track there how the missing packages (e.g., VDSM) are installed and launched. The status then changes to Up.

Identity Management

In the course of the installation, the Virtualization Manager creates the admin user in the internal domain. The admin@internal account is primarily used for the initial configuration of the environment and for troubleshooting. RHV supports all major directory services, in addition to Red Hat Identity Management (IdM) – that is, FreeIPA, Active Directory (AD), OpenLDAP, Red Hat Directory Server (RHDS), and a few others.

After setting up the preferred directory service, you can retire the admin user. However, the configuration of the directory services, like many other aspects in RHV/RHEL and unlike VMware vSphere, takes place outside of the GUI. The LDAP extension needed for OpenLDAP, for example, is installed on the Virtualization Manager by typing:

yum install ovirt-engine-extension-aaa-ldap-setup

Setting up IdM on the same machine is not possible, however, because IdM is not compatible with the mod_ssl package required by RHV-M. The related IdM client is named ipa-client, by the way, and is included in the rhel-7-server-rpms channel but conflicts with the IPA client/server packages of the same name from free sources (FreeIPA).

An Identity Manager should be run externally, anyway. Getting IPA or AD to cooperate with the role model implemented by RHV is easy. In RHV-M, define which AD/IPA groups are given what level of access in RHV. For IPA integration, the simplest case is to give the ipausers group admin rights. This means that all IPA users can manage RHV.

Connecting Storage

Apart from a couple of exceptions, storage is connected from the GUI. Nevertheless, the concept of storage domains in Libvirt/Qemu (and therefore also in RHV), with its storage pools and volumes, is different from that of logical data stores with a separate VMFS filesystem in VMware or CSV shared storage in Hyper-V. In RHV, a storage domain is nothing more than a collection of images that are served by the same interface (i.e., images of VMs, including snapshots, or ISO files). Each storage domain (backing) comprises either block devices (iSCSI or FCP), a filesystem like NFS (NAS) or GlusterFS, or a POSIX-compliant filesystem.

For a network share (NFS), all vDisks, templates, or snapshots are files that reside on the underlying filesystem. For iSCSI or FCP, however, each vDisk, template, or snapshot forms a logical volume. Block devices are thus aggregated to create a logical entity and are divided by the Logical Volume Manager (LVM) into logical volumes, which are in turn more or less virtual hard drives. The vDisks of the VMs use either the QCOW2 or RAW format. QCOW2 can be thin or thick provisioned (sparse or pre-allocated). To create an iSCSI-based storage domain, you just need to enter the IP address of the iSCSI server and then click Discover (dynamic discovery). Manual addition of iSCSI software initiators is not required. By default, the result of discovery is a list of available targets. For details of the individual LUNs, press the arrow on the far right.
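To check from an RHVH node what the storage server offers before creating the storage domain, a sendtargets discovery with iscsiadm (from the iscsi-initiator-utils package) returns roughly the same target list as the Discover button. This is a sketch under assumptions: the portal address 192.0.2.10 is a placeholder, and list_targets is a hypothetical helper that relies on the usual "portal,tag target-name" layout of the discovery output.

```shell
# Read `iscsiadm -m discovery` output on stdin and print the unique
# target names (the second whitespace-separated field of each line).
list_targets() {
  awk 'NF >= 2 { print $2 }' | sort -u
}

# Usage on a host (placeholder portal address):
#   iscsiadm --mode discovery --type sendtargets --portal 192.0.2.10 | list_targets
```

The Manager does not need this step; it is only a way to narrow down whether a missing target is a problem on the storage side or in RHV.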

OpenStack Integration

RHV supports NFS, POSIX, iSCSI/Fibre Channel block storage, and GlusterFS, where Gluster storage is addressed through shares (bricks) from the RHV perspective, just like NFS. However, embedding GlusterFS-based storage requires a functional cluster that must be configured with a server-side and client-side quorum to ensure the integrity of the data. In terms of underpinnings, RHV is traditionally very close to OpenStack, and Red Hat is therefore steadily expanding the OpenStack APIs supported in RHV. In RHV4, Red Hat points to API integration with Glance (images), Cinder (block storage), and Neutron (software-defined networking), although tests show that some manual labor is still required. However, the handling of storage domains is one of the aspects that nicely demonstrates integration capabilities.

RHV can use, but not manage, Ceph storage with workarounds (OpenStack Cinder). In OpenStack, this only works with Red Hat CloudForms, for example. Ceph integration via Cinder still has a preview status in RHV and, like many other features in RHV, must be configured at the command line. Glance integration for the use of ISO images and VM templates stored in OpenStack is slightly more advanced. RHV4 lets you use, export, and share images with an existing Red Hat OpenStack Platform; however, the required subscription is not included in RHV4.

Neutron Support

Also new in RHV4 is a free API that allows superior support for external third-party networks and thus ultimately supports centralization and simplification of network management. Red Hat Virtualization Manager uses the API to communicate with external systems (e.g., to retrieve network settings, which can then be applied to VMs).

With regard to integration with OpenStack, RHV4 includes a technology preview of the upcoming integration of Open vSwitch. RHV4 currently works with ordinary Linux bridge devices for network communication between VMs and the physical network layer in the RHV nodes. Creating bridges, VLANs, or bonds is now more convenient on the Manager side, at least, thanks to Cockpit integration. In the future, RHV IP address management (IPAM) will be fully based on Neutron subsets. Neutron is already listed as a network provider in the GUI and supports convenient importing of software-defined networks via Neutron.

Containers and VMs

In addition to OpenStack, RHV4 also supports container-based workloads, as exemplified by its support of RHEL Atomic Host as a guest system. The extended configurability of live migration policies is also new, offering benefits in particular for the live migration of very large ("monster") VMs. For example, RHV4 allows fine tuning of live migration settings, such as the maximum bandwidth to use, at the VM or cluster level. Another new feature is that RHV can now deal with tags and labels that you can assign as a characteristic property to a VM or network.

RHV4 and Competitors

RHV leaves you with a mixed impression. In 2017, you are hardly likely to find companies that have not already gained experience with some kind of virtualization solution. RHV is thus mainly a strategic product both for Red Hat and for users; using it leaves the doors open in many directions, although the dice seem to have fallen in favor of OpenStack for the private cloud. Looking specifically at functions, RHV tends to focus on integration as a whole rather than maximizing virtualization functionalities. For example, the upcoming modeling of the Open vSwitch-based virtual networking stack is long overdue. Competitor VMware has for years offered fully modeled, software-defined Layer 2 switches that operate on par with Cisco in terms of APIs and features.

As another example, consider the ability of RHV4 to add tags and labels to objects – a function that competitors have also had for a long time. VMware also has more to offer in terms of resource management and prioritization, or performance monitoring. Whether the feature overkill in vSphere actually reflects the needs of enterprises in everyday life is a totally different question. However, what is irritating about RHV is that, in principle, no clear separation exists between the functions provided by the Linux kernel and other layers in RHEL that can typically only be configured at the command line. For open source specialists, this might be the norm, but in the context of Hyper-V and vSphere, it becomes difficult to compare capabilities, especially when some features advertised in RHV4 have received only a tech preview status.

Don't forget that the competitors (Hyper-V and vSphere) offer a powerful PowerShell-based Automation API. Die-hard Red Hat aficionados might turn up their noses at the mention of PowerShell, but it has developed into a reliable tool as an automation and management interface outside of the Linux world. If Red Hat is targeting VMware and Microsoft migrants with RHV4, things like this – when taking the fairly jaded look of the GUI and its missing functions into account – will be quite important. Of course, you have to remember that Ansible has a chic oVirt module. Dozens of VMs can be very easily cloned, preconfigured, and booted thanks to playbooks (Cloud-init).
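For readers coming from PowerShell-based automation, it may help to see that the Manager can also be queried with nothing more than curl. The sketch below assumes the REST API entry point under /ovirt-engine/api and HTTP basic authentication with the admin@internal account; the host name follows the example URL used earlier, and the environment variables RHVM_HOST, RHVM_CA_FILE, and RHVM_PASSWORD are hypothetical names chosen for this example.

```shell
# Host name taken from the article's example URL; override as needed.
RHVM_HOST="${RHVM_HOST:-rhvm-machine}"

# Build the URL of an API collection such as "vms" or "hosts".
api_url() {
  printf 'https://%s/ovirt-engine/api/%s\n' "$RHVM_HOST" "$1"
}

# Fetch a collection as JSON. RHVM_CA_FILE must point to the Manager's
# CA certificate and RHVM_PASSWORD must hold the admin password; the
# function aborts with a message if either variable is unset.
api_get() {
  curl --silent --fail \
       --cacert "${RHVM_CA_FILE:?set RHVM_CA_FILE}" \
       --user "admin@internal:${RHVM_PASSWORD:?set RHVM_PASSWORD}" \
       --header 'Accept: application/json' \
       "$(api_url "$1")"
}
```

With both variables exported, api_get vms returns the list of VMs, which can then be processed with any JSON tool. For anything beyond simple queries, the Ansible module mentioned above is likely the more comfortable route.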

Conclusions

In terms of costs, RHV4 is still a recommended product, especially because stability, enterprise capability, and performance leave little to complain about. Last but not least, RHV is possibly a better container platform than, for example, Windows Server 2016 Container Host or VMware because of its proximity to Linux and Docker.