PostgreSQL Replication Update

Data Duplication

If you have anything to do professionally with the topic of replication as a PostgreSQL consultant [1], you might easily gain the impression that colleagues and clients see only distributed, synchronous, multimaster replication as true replication. But are they right?

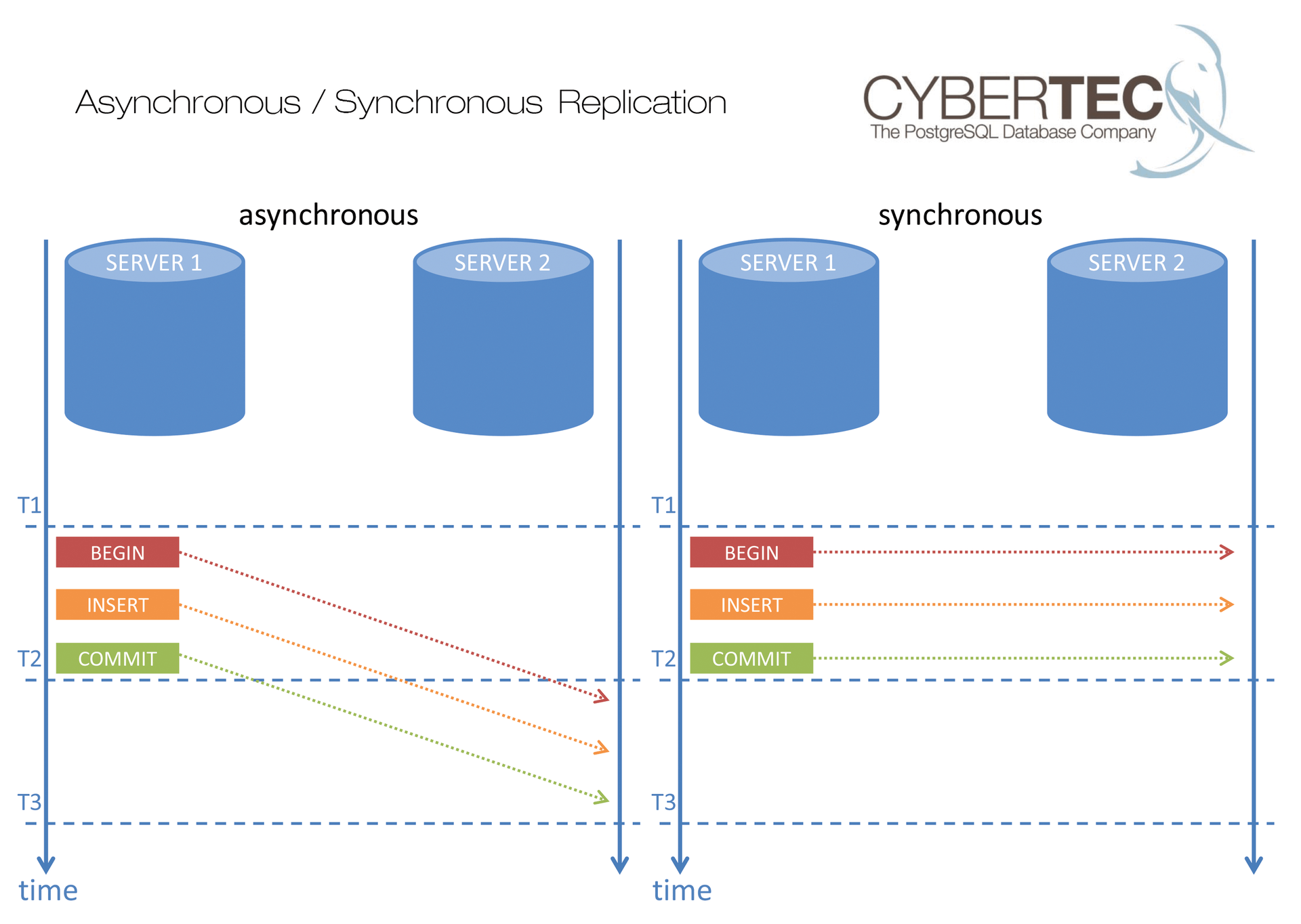

Synchronous vs. Asynchronous

Generally speaking, replication can be classified in different ways (e.g., synchronous or asynchronous) (Figure 1). In the case of synchronous replication, a transaction is not completed until it is acknowledged by the service. The advantage, particularly in the case of a crash, is that the data must have arrived on at least two systems. The drawback is that the data must be written to at least two systems. The advantage is therefore a disadvantage because the double write operations cost time.

Single- and Multimaster

The distinction between single-master and multimaster is important. Many users choose multimaster because they can use it to distribute the read load, although it does not make writing faster because both machines need to write all of the data. A different and often cited argument to choose multimaster is high availability.

Two asynchronous machines can have different data at any given time, in which case conflicts can arise that need to be resolved. Usually, you can only accomplish this by rolling back one of the two transactions ex post (i.e., after the commit). For example, suppose you have $100 in a joint bank account and two people want to withdraw $90. Their transactions end up on different machines in the asynchronous cluster. Both computers debit $90 and commit the transactions before synchronizing. Only then does it become clear that the money has been dispensed twice.

The possibility of conflicts always needs to be considered in asynchronous multimaster replication. The servers might "eventually" be consistent, but not always or at any given time. In some scenarios, this asynchronous condition is not a problem because it does not cause a conflict.

Look at global aircraft sales, for example. Say someone wants to buy a plane in Japan. How likely is it that a second customer in Germany decides to buy the same aircraft at the same second? The probability is close to zero. Asynchronous multimaster replication makes sense when the partners involved are geographically distributed and a conflict effectively cannot occur.

Synchronous multimaster replication is not useful under any circumstances in these cases, however, because it drags the performance down such that only a few transactions per second are possible. The problem is the need to ensure the delivery order of network packets to avoid deadlocks – a detrimental state in terms of latency.

When it comes to availability, keep it simple. When it comes to read performance, synchronous or asynchronous single-master replication is the choice. When an admin wants to scale write performance, it is essential to distribute the data in the cluster (sharding, horizontal partitioning). If availability is the sole focus at a site, then multimaster replication is not suitable, because you would need to accept unnecessary compromises. The simpler the setup, the easier the administration and ability to maintain the sites.

Transaction Log

If you are not familiar with the database transaction log, you will not understand PostgreSQL replication. Generally, PostgreSQL, in contrast to a database that uses the index sequential access method (ISAM), is not allowed to write directly to a data file. Every change first needs to be logged in the transaction log. The reason is obvious: A computer can crash at any time. If a crash occurs precisely when the database is writing to its data file, you will get a corrupt file with incomplete data.

The PostgreSQL solution for this problem is write-ahead logging (WAL). The idea is to log all changes before they reach the data file, which is similar in principle to how a modern journaling filesystem works. In PostgreSQL, the transaction log describes the binary changes to be made in case of a recovery.

The transaction log does not contain any SQL – it's all about changes in the data files, which is why it is also suitable for things like replication. When a slave is created on the basis of the transaction logs, it is almost binary identical.

Streaming Replication

The simplest and easiest form of data duplication to maintain is thus streaming replication. First a backup of the database instance is created; then the admin replicates the changes using the transaction log. If you want to create this kind of setup, edit the postgresql.conf file on the master so that it contains the following entries:

wal_level = replica max_wal_senders = 3 hot_standby = on

Next, you need to add the following line to the pg_hab.conf file

host replication postgres <slave IP address> md5

and restart the master. Now, it's the slave's turn. Install PostgreSQL on the slave and create space for the data:

mkdir /data chmod 700 data

You can then launch the initial backup on the slave:

pg_basebackup -D /data -h <Master_IP> --checkpoint=fast --xlog-method=stream -R

As soon as the data has been copied, the slave should start. The -R option generates the configuration file for the slave. Once it's running, the slave can be used as a read-only machine. Of course, you can create as many slaves as you like; star-shaped replication is possible. In the default case, this replication is an asynchronous single-master type.

Synchronous Replication

If you need synchronous replication, you can also configure it very easily. In the master's postgresql.conf file, set the synchronous_standby_names option; for example,

synchronous_standby_names = node1, node2, node3

and add the following to the primary_conninfo section of the recovery.conf file on the slave:

application_name=node1

node1 then becomes a synchronously replicated node. In this example, it is important that synchronous is the default setting for node1; however, PostgreSQL lets you decide how to replicate each individual transaction, which allows you to avoid the overhead of a synchronous commit for each transaction.

To determine the quality (durability) of a transaction, the synchronous_commit option can be adjusted to one of these five values at the session level:

-

remote_apply: A transaction is valid if it is written on both systems and visible on the slave, which is important for load balancing. -

on: A transaction is flushed on both systems but might not be visible for other reads yet. You can thus replicate more or less synchronously, although the slave displays hours-old data. -

remote_write: A transaction is flushed on the master but only propagated to the kernel on the standby system (without flushing), making the transaction faster and assuming that the two storage systems do not die at the same time. -

local: A transaction is flushed on the master, but no feedback is required from the slave (asynchronous). -

off: Flushing is delayed on the master, and replication is generated asynchronously. The database guarantees integrity at any time but can lose data for some milliseconds in the event of a fault. The idea is to speed up short transactions.

The following example shows how to influence the durability of a transaction:

BEGIN; SET synchronous_commit TO remote_write; INSERT INTO tab VALUES <[...]> COMMIT;

The durability can be set for each transaction individually.

PostgreSQL 9.6 and 10.0

In many cases, a cluster will not be limited to two nodes; therefore, PostgreSQL lets you manage as many slaves as needed and set the number of synchronous slaves. For example, if you have 10 slaves, you can tell PostgreSQL in simple syntax to keep seven of them in sync. A commit only occurs if a sufficient number of machines have given their okay and the data is therefore safe.

A single line in the configuration lets you keep machines on several continents in sync, but keep in mind that the limits of physics continue to apply: no one can replicate data synchronously to half the globe and assume that it has no effect on the speed of write transactions. You always will see a trade-off between durability and performance.

Bidirectional Replication

In some cases, you can't avoid bidirectional replication. A few years ago, the PostgreSQL developers implemented logical decoding, which made it possible to decode the transaction log using a plugin and reuse the content in SQL statements.

For example, suppose a user issues the following SQL statement:

DELETE FROM data WHERE id < 4;

In this case, decoding the transaction logs would deliver:

DELETE FROM data WHERE id = 1; DELETE FROM data WHERE id = 2; DELETE FROM data WHERE id = 3;

The resulting stream could then be replayed on a subscriber.

In the future, it should be possible to use this stream to synchronize slaves and deliver almost downtime-free updates. In upcoming versions of PostgreSQL, bidirectional replication will also be feasible. Bidirectional, asynchronous replication is currently only possible with a tool named BDR, which you would need to install as a package.

In this next example, bidirectional replication makes sense. Assume that an insurance company operates branches in the US and Europe. In this case, although the data line could conceivably fail, it's very unlikely that someone would try to insure the same car in the US and Europe at the same time (i.e., the conflict probability is low).

Premier Class: Automatic Failover

PostgreSQL is a modern database that offers numerous features specifically for replication. However, PostgreSQL is not cluster software – things like automatic failover and the like are not implemented, so external tools are needed.

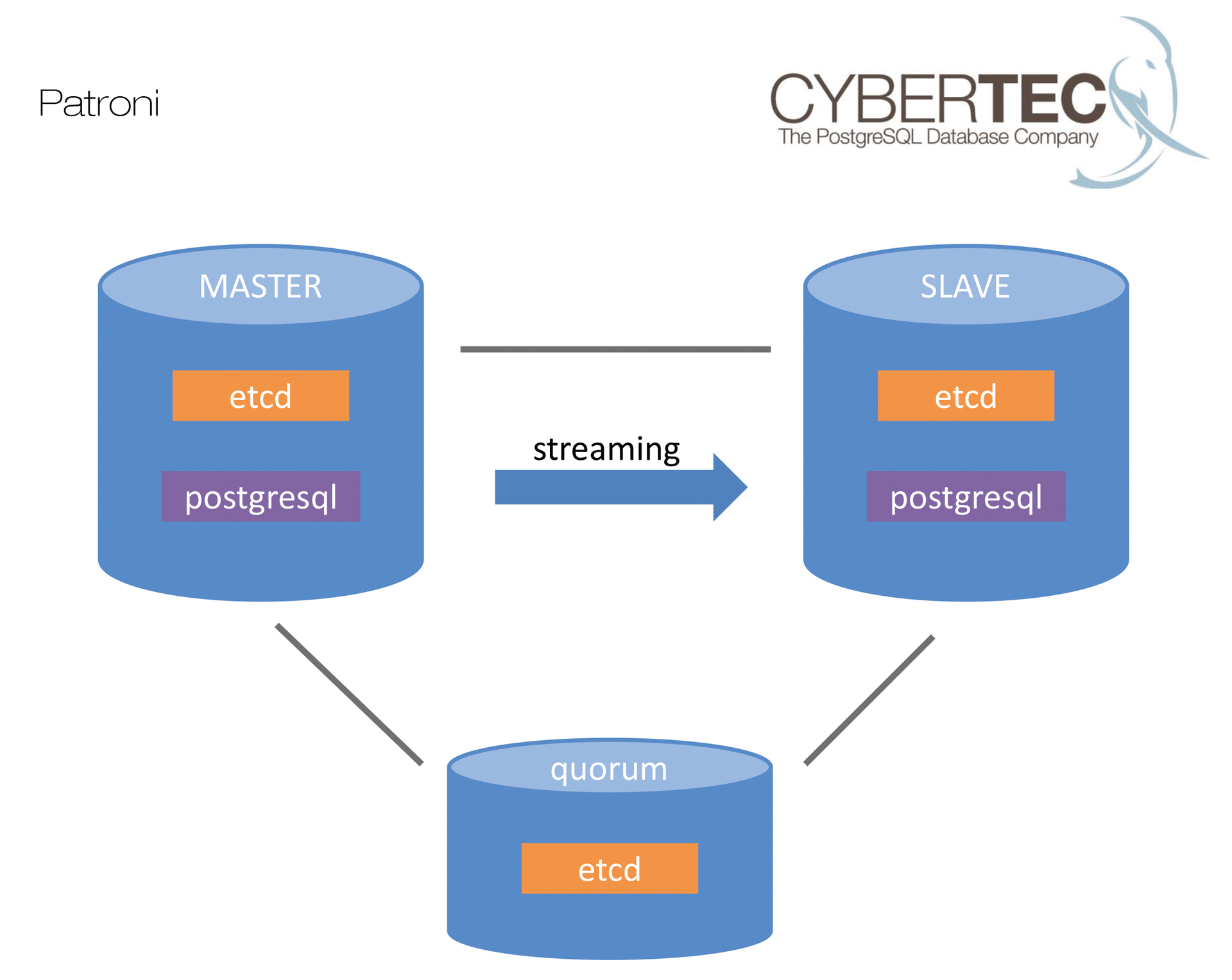

One of the best known tools is Zalandos Patroni [2], which uses the Paxos algorithm to determine who should have what role in the cluster. If Patroni, on the basis of the consensus algorithm, detects that a failover must occur, it triggers the necessary operations. The configuration is relatively simple (Figure 2); note that Patroni starts and stops the services but does not manage the IP addresses. An application that accesses the cluster from the outside thus needs to connect a different IP address in the event of a fault.

One solution is to combine PostgreSQL and Patroni with Consul [3] to modify the DNS records directly in the case of a failover. Cybertec [4], a PostgreSQL database company, will be offering a freely available solution for automated failover and replication in the near future.

Decision-Making Aids

If you are facing a situation in which you need to find a solution for an existing problem, you first need to clarify what you really want to achieve: Is it about availability? Is it about scaling? Do you want to create a geographically distributed system? If availability is the focus, you should rely on simple master-slave replication. If read scaling is most important, you will first want to check the workloads on missing indexes and so forth and then rethink your options.

In many cases, missing indexes can make the workload appear greater than it really is. A modern database server can handle hundreds of thousands of queries per second – it is always useful to check whether the infrastructure is just being used in an inefficient way before scaling your servers. If the read load is still too high, you can rely on streaming replication. If the system is globally distributed, an asynchronous multimaster solution can be useful, but the chances of conflict must be small.

The present PostgreSQL environment is in flux. Hardly a month goes by without the addition of new features. Substantial improvements can be expected in all areas that are unforeseeable today. It is definitely worth your while to see what will happen in the future.