Take your pick from a variety of AWS databases

Choose Carefully

For quick and easy access to databases in the cloud, the most popular database types are available as database-as-a-service (DBaaS) offerings. In addition to relational databases, NoSQL alternatives are growing in popularity. Each database type weighs aspects such as flexibility, read and write speed, resilience, license costs, scalability, and maintainability differently.

Amazon RDS: Managed SQL in the Cloud

Although relational database products differ widely in terms of details, similarities in properties and management processes can be abstracted. The Amazon Relational Database Service (RDS) provides a common interface (API) that encapsulates vendor-specific aspects. I look at the shared features and benefits of six RDS engines – Amazon Aurora, Oracle, Microsoft SQL Server, PostgreSQL, MySQL, and MariaDB – before going into the specifics of Amazon Aurora.

The RDS API simplifies administrative processes by orchestrating the necessary procedures at the database (DB) and infrastructure levels. For example, to create a DB instance, you do not have to deploy a server explicitly or run the product-specific installation; instead, a new DB instance is available within minutes. The RDS API is accessed either from the AWS Management Console or, in automation scripts, through a Software Development Kit (SDK), which lets you implement approaches such as infrastructure-as-code or respond automatically to events.

In RDS, the database can consist of a single DB instance, which makes economic sense for testing and development. For production use, you can operate the database with two DB instances in a primary-standby configuration. The instances are distributed across different availability zones (AZs); within a region, AZs are isolated from one another like separate data centers and thus cover different risk profiles. This configuration is known as an RDS Multi-AZ deployment.

Changes to the primary instance are replicated synchronously to the standby, so clients can switch over without data loss in the event of a failover. To do this, RDS changes the DNS canonical name (CNAME) record to point to the standby instance, which then acts as the new primary instance. Clients should therefore not cache the DNS entry for longer than 60 seconds. RDS provides further features that improve reliability for critical production databases, including automated backups and database snapshots. In Multi-AZ mode, snapshots can be created from the standby instance without interrupting operation.
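Failover works only if clients actually pick up the changed CNAME. The following Python sketch assumes an application that keeps its own DNS cache, and it caps every cached entry at 60 seconds. The resolver and clock are injectable so the logic can be tested without a real RDS endpoint. The class name and parameters are hypothetical.

```python
import socket
import time
from typing import Callable, Dict, Tuple

MAX_TTL_SECONDS = 60.0  # upper bound for caching RDS endpoint lookups


class CappedDnsCache:
    """Caches hostname -> IPv4 lookups, never for longer than MAX_TTL_SECONDS."""

    def __init__(
        self,
        resolve: Callable[[str], str] = socket.gethostbyname,
        clock: Callable[[], float] = time.monotonic,
        ttl: float = MAX_TTL_SECONDS,
    ) -> None:
        self._resolve = resolve
        self._clock = clock
        self._ttl = min(ttl, MAX_TTL_SECONDS)  # refuse to cache longer than the cap
        self._entries: Dict[str, Tuple[str, float]] = {}

    def lookup(self, hostname: str) -> str:
        now = self._clock()
        cached = self._entries.get(hostname)
        if cached is not None and now < cached[1]:
            return cached[0]  # entry is still fresh
        # Re-resolve: after a failover this returns the new primary's address.
        address = self._resolve(hostname)
        self._entries[hostname] = (address, now + self._ttl)
        return address
```

Even if a caller asks for a longer TTL, the cache re-resolves at least once a minute, so a CNAME switch is noticed within roughly the window RDS expects.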

Vertical and Horizontal Scalability

Several kinds of scalability are supported: RDS currently scales vertically through a change of instance type, from one virtual CPU (vCPU) and 1GB of RAM up to 40 vCPUs and 244GB of RAM. If the database is operated in Multi-AZ mode, it is unavailable only during the automatic failover. The storage for the DB instance can be scaled in terms of storage type (e.g., General Purpose SSD or Provisioned IOPS SSD, where IOPS stands for input/output operations per second) and storage size. Storage can grow to up to 6TB (64TB for Aurora), and scaling it does not affect availability.

Many Amazon RDS engines scale horizontally by distributing read traffic across multiple Read Replica instances [1]. You can create up to five Read Replicas with a simple call. RDS takes care of the operations for creating the new DB instance and replicating the data. The Read Replicas are addressed by clients through their individual connection endpoints.
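Because each Read Replica has its own endpoint, the application decides where each query goes. The following Python sketch shows one simple client-side approach. Writes go to the primary, and plain `SELECT` statements rotate round-robin over the replicas. The endpoint names and the naive SQL classification are assumptions for illustration.

```python
import itertools
from typing import List


class EndpointRouter:
    """Sends writes to the primary and spreads reads round-robin over replicas."""

    def __init__(self, primary: str, replicas: List[str]) -> None:
        self.primary = primary
        # Fall back to the primary if no Read Replicas exist yet.
        self._readers = itertools.cycle(replicas or [primary])

    def endpoint_for(self, sql: str) -> str:
        # Naive classification: only statements starting with SELECT count as reads.
        is_read = sql.lstrip().upper().startswith("SELECT")
        return next(self._readers) if is_read else self.primary
```

Replication to Read Replicas is asynchronous. A read routed this way may therefore briefly miss a write that just went to the primary. Queries that must see their own writes are better sent to the primary.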

To restrict access at the network level, locating the instances in an Amazon Virtual Private Cloud (VPC) is recommended. Thanks to network access control lists, DB security groups (stateful firewalls), IPsec VPNs, and routing tables, the traffic can be controlled in a very granular manner.

As with most AWS services, the region in which the DB instance is created is specified when calling the API. The data thus remains within the selected region, which helps meet regulatory requirements or reduce latency for database clients. Additionally, many Amazon RDS engines offer encryption of data in transit and at rest. For encryption at rest, in the simplest case, ticking a checkbox when creating a new DB instance is sufficient. Billing is based on hourly usage; if your usage is predictable in the long term, reserved instances may be worth a look.
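Whether a reservation pays off comes down to simple arithmetic. The following Python sketch computes after how many months of continuous operation an upfront payment plus a lower hourly rate beats on-demand pricing. All prices in the example are hypothetical, not actual AWS rates.

```python
import math

HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def break_even_months(on_demand_hourly: float, reserved_upfront: float,
                      reserved_hourly: float) -> float:
    """Months of 24/7 operation after which the reservation is cheaper than on-demand."""
    saving_per_month = (on_demand_hourly - reserved_hourly) * HOURS_PER_MONTH
    if saving_per_month <= 0:
        return math.inf  # the reserved hourly rate is not lower: no break-even point
    return reserved_upfront / saving_per_month


# Hypothetical prices: 0.25/h on-demand vs. 912.50 upfront plus 0.125/h reserved.
print(break_even_months(0.25, 912.5, 0.125))
```

If the break-even point lies well within the reservation term and the instance really runs around the clock, the reservation is likely worth considering.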

Individual Features for RDS

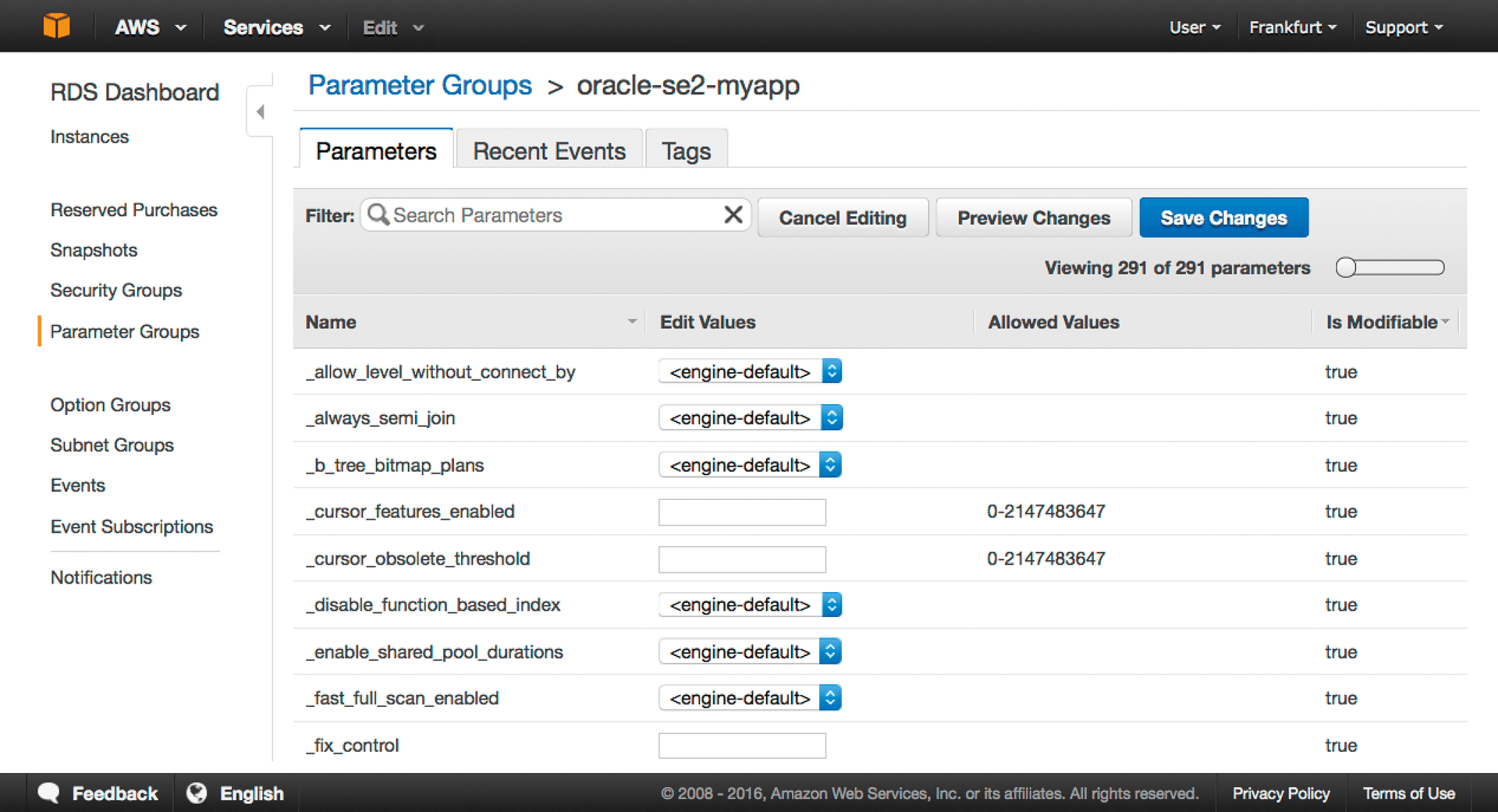

The unified approach for setting up and managing the different RDS engines does not prevent you from making individual settings: Defaults give you a quick start, and DB parameter groups allow finer tuning. Depending on the RDS engine and version, up to 300 parameters can be tweaked and applied to DB instances (Figure 1). Similarly, individual product features (e.g., transparent data encryption) can be defined in DB option groups and applied to DB instances.

Launching a DB Instance

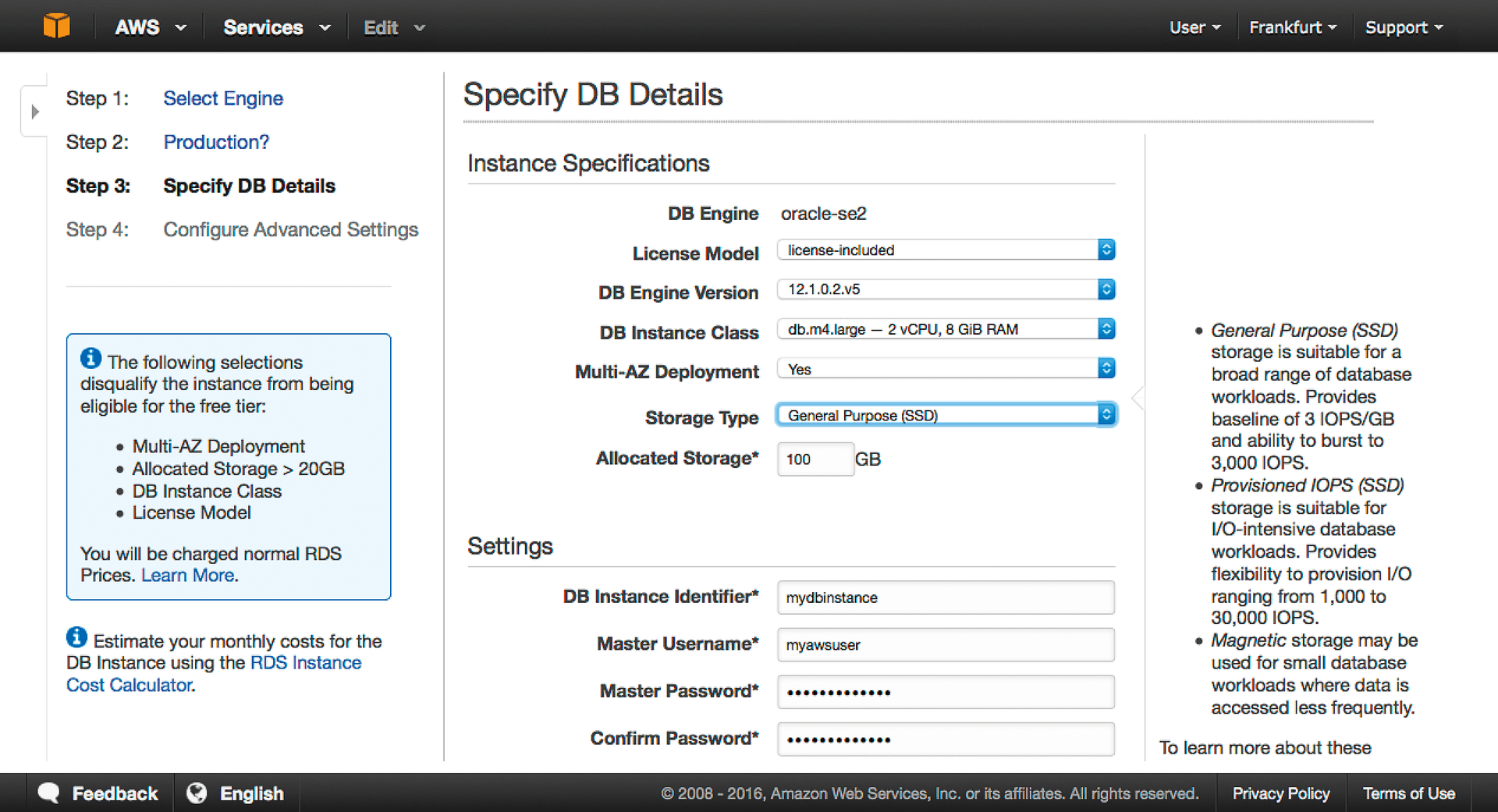

In the AWS Management Console, RDS is found in the Database section. Creating a new DB instance (Figure 2) is handled by a wizard, which prompts you for the following information:

- Database vendor and product (e.g., Oracle Database Standard Edition Two)

- Provisioning in one or more availability zones

- Licensing model: Bring your own license (BYOL) or a license included with the product

- Desired database version

- Storage size and type

- DB instance name

- Master access data

The RDS API provides an overview of the possible combinations of these values by region. For example, you can query the options for Oracle SE2 in the Frankfurt, Germany, region using the AWS command-line interface (CLI) [2] as follows:

$ aws rds describe-orderable-db-instance-options --output table --region eu-central-1 --engine oracle-se2
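With `--output json` instead of `--output table`, the same response can be filtered in a script. The following Python sketch assumes the response shape of the DescribeOrderableDBInstanceOptions API: a list under `OrderableDBInstanceOptions` with fields such as `DBInstanceClass`, `EngineVersion`, `LicenseModel`, and `MultiAZCapable`. It returns the instance classes that support Multi-AZ for one version and license model.

```python
from typing import Any, Dict, List


def multi_az_instance_classes(response: Dict[str, Any], engine_version: str,
                              license_model: str) -> List[str]:
    """Instance classes offered with Multi-AZ for one engine version and license model."""
    classes = {
        option["DBInstanceClass"]
        for option in response.get("OrderableDBInstanceOptions", [])
        if option.get("EngineVersion") == engine_version
        and option.get("LicenseModel") == license_model
        and option.get("MultiAZCapable", False)
    }
    # A class may appear several times (e.g., once per storage type), so deduplicate.
    return sorted(classes)
```

In practice, the CLI output could be saved to a file and loaded with `json.load`, then passed in together with values such as `"12.1.0.2.v5"` and `"license-included"`.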

Launching the DB instance with the AWS CLI looks something like this:

aws rds create-db-instance --engine oracle-se2 --multi-az --db-instance-class db.m4.large --engine-version 12.1.0.2.v5 --license-model license-included --allocated-storage 100 --master-username myawsuser --master-user-password myawspassword --db-instance-identifier mydbinstance --region eu-central-1

Securely Managing Access Data

In AWS Identity and Access Management (IAM), you can define in a highly granular way which roles and users have access to the RDS API (e.g., to create a DB instance or initiate actions such as backups). API calls are authenticated with IAM credentials (optionally with multifactor authentication). Scripts running on EC2 automatically have the permissions of the IAM role assigned to the EC2 instance; the same applies to AWS Lambda functions and their execution roles. The IAM permissions complement the permissions within the database itself, which are managed in the traditional way by the master user.

The master access data created when setting up the DB instance can be changed later. Which other resources and users are set up is the responsibility of the administrator. In the interest of a least-privilege approach, additional database users with restricted permissions should be created according to their roles in administration, deployment, and use of the application.

Additionally, the credentials should be secured and not stored in the clear in the application's source code. One possible approach is to store them encrypted in the Amazon Simple Storage Service (S3), with the key created and managed in the AWS Key Management Service (KMS). Only the EC2 instances that need them gain access to the key and the S3 bucket via IAM roles for EC2. The procedure is described in detail online [3].
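The following Python sketch generates per-role database users in MySQL syntax, which also applies to MariaDB and Aurora. The role names and privilege sets are hypothetical. Passwords are generated randomly and returned separately so they can be stored securely instead of in source code. The statements would be run once by the master user.

```python
import secrets
from typing import Dict, List, Tuple

# Hypothetical role-to-privilege mapping for one application schema.
ROLE_PRIVILEGES: Dict[str, List[str]] = {
    "app_reader": ["SELECT"],
    "app_writer": ["SELECT", "INSERT", "UPDATE", "DELETE"],
    "app_deployer": ["SELECT", "CREATE", "ALTER", "DROP", "INDEX"],
}


def provisioning_sql(schema: str, host: str = "%") -> Tuple[List[str], Dict[str, str]]:
    """Return MySQL CREATE USER/GRANT statements and the generated passwords."""
    statements: List[str] = []
    passwords: Dict[str, str] = {}
    for user, privileges in ROLE_PRIVILEGES.items():
        # token_urlsafe uses only [A-Za-z0-9_-], so no quoting issues in SQL.
        password = secrets.token_urlsafe(24)
        passwords[user] = password
        statements.append(f"CREATE USER '{user}'@'{host}' IDENTIFIED BY '{password}';")
        statements.append(
            f"GRANT {', '.join(privileges)} ON `{schema}`.* TO '{user}'@'{host}';"
        )
    return statements, passwords
```

The schema name is interpolated directly, so it must come from trusted configuration rather than user input.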

Performance with Amazon Aurora

Amazon Aurora is one of the six RDS engines. Because of its specific performance characteristics, I will look at it separately. Aurora combines the speed and reliability of a high-quality commercial database with the efficiency of an open source database. It offers up to five times the throughput of MySQL running on the same hardware, according to AWS benchmarks. Aurora is compatible with MySQL 5.6, so existing MySQL applications and tools can be executed without modification.

In Aurora, two components are operated redundantly to improve availability: storage and the DB instance. Storage is automatically distributed across three availability zones and replicated six times. Redundant data storage automatically detects a disk error and fixes it without affecting availability. Also, the database can be operated redundantly. Unlike other RDS engines, there is no dedicated standby instance. Aurora can be used as a cluster with up to 15 Read Replicas. If the primary instance fails, one of the replicas is automatically appointed the new primary instance. If no Read Replica is configured, a new instance of the DB is created. To improve availability, users should create at least one Read Replica. In contrast to the other RDS engines, the Read Replicas are also available via a shared cluster connection endpoint.
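How six copies keep the database available can be illustrated with the quorum scheme AWS has published for Aurora storage. Each AZ holds two copies, a write needs acknowledgement from four of the six, and a read needs three. The following Python sketch, with hypothetical AZ names, checks which operations remain possible for a given set of failures. It models only copy counts, not Aurora's actual repair process.

```python
from typing import Iterable, Set, Tuple

Copy = Tuple[str, int]

AZS = ("az-a", "az-b", "az-c")                     # hypothetical AZ names
COPIES = [(az, n) for az in AZS for n in (1, 2)]   # six copies, two per AZ
WRITE_QUORUM = 4                                   # 4 of 6 copies must acknowledge a write
READ_QUORUM = 3                                    # 3 of 6 copies suffice for a read


def quorum_status(failed_azs: Iterable[str] = (),
                  failed_copies: Iterable[Copy] = ()) -> Tuple[bool, bool]:
    """Return (writes_possible, reads_possible) for a given set of failures."""
    down_azs: Set[str] = set(failed_azs)
    down_copies: Set[Copy] = set(failed_copies)
    healthy = [c for c in COPIES if c[0] not in down_azs and c not in down_copies]
    return len(healthy) >= WRITE_QUORUM, len(healthy) >= READ_QUORUM
```

Losing an entire AZ still leaves four copies, so both reads and writes continue. Because 4 + 3 > 6, every read quorum overlaps every write quorum in at least one copy.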

DynamoDB: Scalable NoSQL Database Service

In addition to RDS, AWS offers further databases for specific use cases. Amazon DynamoDB, for example, is a fast, flexible NoSQL database service for all applications that require consistent, single-digit millisecond latency at any scale. This fully managed cloud database supports both document and key-value storage models.

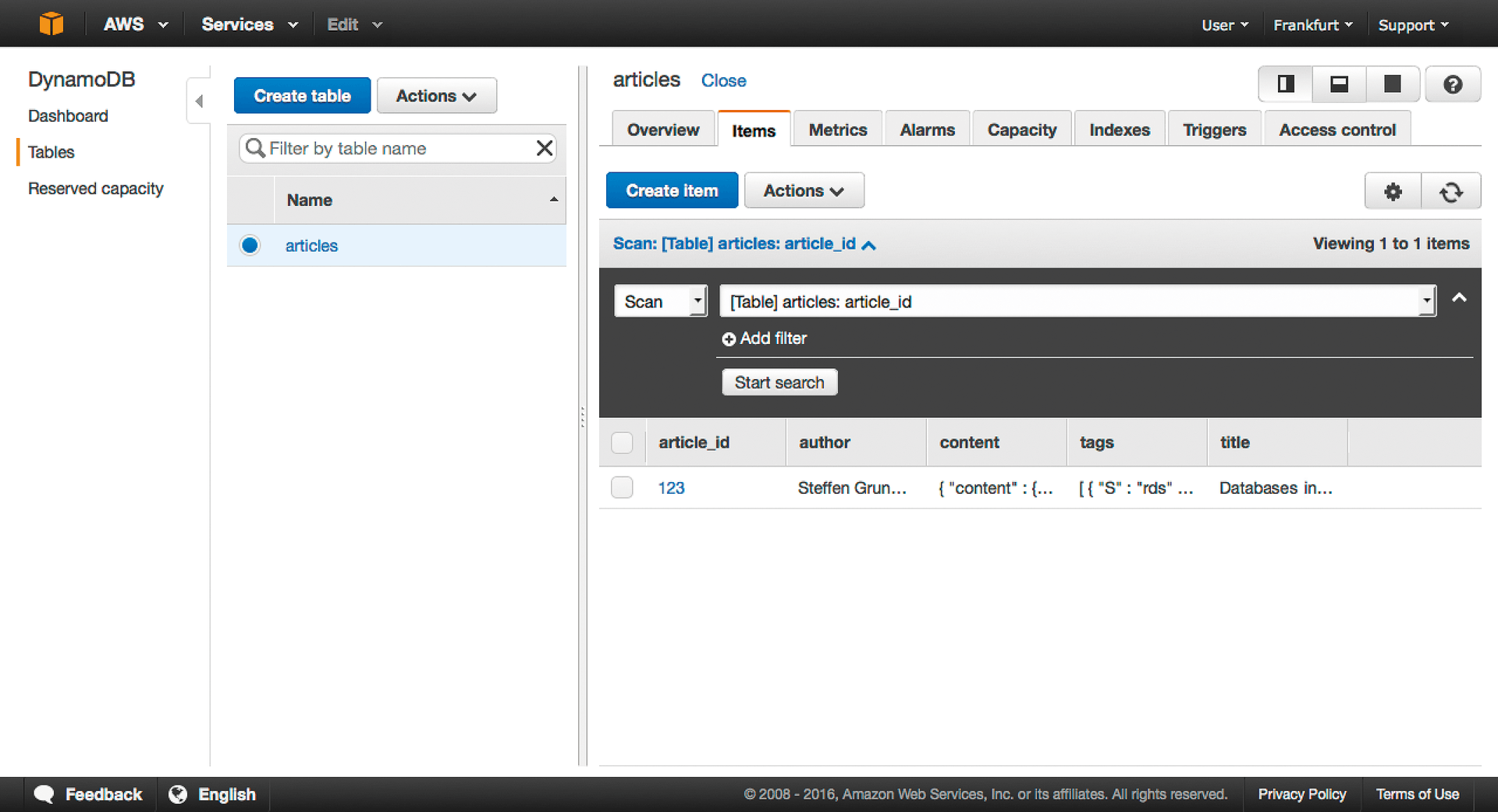

The data is stored in tables without a fixed schema. Tables contain items, which consist of attributes (key-value pairs). One attribute is defined as the partition key, which determines the physical storage location; this lets tables scale without limits while offering consistently fast performance at any size.

Indexes allow quick searches on other attributes. Attributes can also be lists or maps (Listing 1), so arbitrarily complex, deeply nested object structures can be created. This structure is very flexible and therefore suitable for many applications. In Listing 1, "content" is a map and "tags" is a list. The "article_id" attribute is the partition key, and "author" serves as the key of a global secondary index for finding articles by author.
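The idea of a partition key deciding the storage location can be sketched as hash partitioning. DynamoDB's internal hash function and partition management are not public. This Python sketch assumes MD5 and a fixed number of partitions purely for illustration. A real system splits partitions as data grows instead of using a simple modulo.

```python
import hashlib
from typing import Any, Dict, List


def partition_for(partition_key: Any, num_partitions: int) -> int:
    """Map a partition key value to a partition index via a stable hash."""
    digest = hashlib.md5(str(partition_key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def distribute(items: List[Dict[str, Any]], key: str,
               num_partitions: int) -> Dict[int, List[Dict[str, Any]]]:
    """Group items by the partition their key hashes to."""
    partitions: Dict[int, List[Dict[str, Any]]] = {i: [] for i in range(num_partitions)}
    for item in items:
        partitions[partition_for(item[key], num_partitions)].append(item)
    return partitions
```

Because a lookup by key only has to hash the key to find the right partition, its cost does not grow with the size of the table.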

Listing 1: Article Item in DynamoDB

{
  "article_id": 123,
  "title": "Databases in AWS",
  "author": "Steffen Grunwald",
  "content": {
    "description": "...",
    "header": "...",
    "content": "..."
  },
  "tags": ["rds", "aurora", "dynamodb"]
}
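The low-level DynamoDB API and the `aws dynamodb put-item --item` option expect typed attribute values rather than plain JSON. Numbers, for example, become `{"N": "123"}`, maps `{"M": ...}`, and lists `{"L": ...}`. The following Python sketch converts an item like the one in Listing 1 into that form. In practice, boto3 ships `boto3.dynamodb.types.TypeSerializer` for this purpose.

```python
from decimal import Decimal
from typing import Any, Dict


def to_attribute_value(value: Any) -> Dict[str, Any]:
    """Convert a Python value into DynamoDB's typed attribute-value representation."""
    if value is None:
        return {"NULL": True}
    if isinstance(value, bool):  # check before int: bool is a subclass of int
        return {"BOOL": value}
    if isinstance(value, (int, float, Decimal)):
        return {"N": str(value)}  # numbers are transmitted as strings
    if isinstance(value, str):
        return {"S": value}
    if isinstance(value, dict):
        return {"M": {k: to_attribute_value(v) for k, v in value.items()}}
    if isinstance(value, (list, tuple)):
        return {"L": [to_attribute_value(v) for v in value]}
    raise TypeError(f"unsupported type: {type(value).__name__}")


def to_item(document: Dict[str, Any]) -> Dict[str, Any]:
    """Convert a whole document (top-level attributes) into a typed item."""
    return {name: to_attribute_value(v) for name, v in document.items()}
```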

When a table is created, its throughput is specified as a number of read and write capacity units, which determines how many operations per second can be performed against the table. This value can be changed later, either manually or through an automatic mechanism that uses metrics to decide whether more or fewer capacity units are needed. An example is presented online [4].
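Capacity units translate into concrete numbers. One read capacity unit covers one strongly consistent read per second of an item up to 4KB, or two eventually consistent reads. One write capacity unit covers one write per second of an item up to 1KB. The following Python sketch applies those rules. The `scaled_capacity` helper is a deliberately simplified target-utilization rule with an assumed 70% target, not the actual algorithm of any AWS scaling mechanism.

```python
import math

READ_UNIT_BYTES = 4 * 1024   # one RCU: one strongly consistent 4KB read per second
WRITE_UNIT_BYTES = 1 * 1024  # one WCU: one 1KB write per second


def read_capacity_units(item_bytes: int, reads_per_second: int,
                        strongly_consistent: bool = True) -> int:
    units_per_read = math.ceil(item_bytes / READ_UNIT_BYTES)  # sizes round up
    total = units_per_read * reads_per_second
    # Eventually consistent reads cost half as much.
    return total if strongly_consistent else math.ceil(total / 2)


def write_capacity_units(item_bytes: int, writes_per_second: int) -> int:
    return math.ceil(item_bytes / WRITE_UNIT_BYTES) * writes_per_second


def scaled_capacity(consumed: float, target_utilization: float = 0.7,
                    minimum: int = 1) -> int:
    """Provisioned units that would bring utilization back to the (assumed) target."""
    return max(minimum, math.ceil(consumed / target_utilization))
```

For example, 10 strongly consistent reads per second of 6KB items need 20 read capacity units, because each 6KB read is rounded up to two 4KB units.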

Events let users respond to data changes (e.g., to replicate the data or create derived statistics). Using the AWS Management Console, performance metrics also can be visualized, and items and tables can be edited conveniently (Figure 3).
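Such change events are delivered as DynamoDB Streams records, for example to an AWS Lambda function. The following Python sketch maintains a derived statistic: the number of articles per tag, based on the `tags` list from Listing 1. It assumes the stream is configured to include both old and new item images. The global counter is for illustration only, because separate Lambda instances do not share memory and a real solution would persist the counts.

```python
from collections import Counter
from typing import Any, Dict, List

tag_counts: Counter = Counter()  # derived statistic (in memory for illustration only)


def _tags(image: Dict[str, Any]) -> List[str]:
    # "tags" is a DynamoDB list ("L") of string ("S") values, as in Listing 1.
    return [entry["S"] for entry in image.get("tags", {}).get("L", [])]


def handle_stream_event(event: Dict[str, Any], context: Any = None) -> None:
    """Lambda-style handler that keeps per-tag article counts up to date."""
    for record in event.get("Records", []):
        change = record.get("dynamodb", {})
        # REMOVE and MODIFY records carry the old image: take its tags away ...
        tag_counts.subtract(_tags(change.get("OldImage", {})))
        # ... INSERT and MODIFY records carry the new image: add its tags.
        tag_counts.update(_tags(change.get("NewImage", {})))
```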

In contrast to RDS, IAM access control for Amazon DynamoDB covers all actions, down to the attribute level. Combined with the large number of SDKs, this allows a browser application to read and write table data in Amazon DynamoDB directly, without going through an application-side data access layer. The advantage is that no such access layer has to be scaled for load-intensive read and write operations.
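Attribute-level access is expressed through IAM policy conditions. The following Python sketch builds such a policy with the `dynamodb:Attributes` and `dynamodb:Select` condition keys, so that a principal may only touch the listed attributes. The account ID, table name, and attribute list are hypothetical. In a browser scenario, the policy would be attached to a role that the browser assumes with temporary credentials, for example through Amazon Cognito.

```python
import json
from typing import Any, Dict, List


def attribute_level_policy(table_arn: str, allowed_attributes: List[str]) -> Dict[str, Any]:
    """IAM policy letting a principal read and write only the listed attributes."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query",
                           "dynamodb:PutItem", "dynamodb:UpdateItem"],
                "Resource": table_arn,
                "Condition": {
                    # Every attribute named in a request must be on the list.
                    "ForAllValues:StringEquals": {"dynamodb:Attributes": allowed_attributes},
                    # Reads must project specific attributes rather than all of them.
                    "StringEqualsIfExists": {"dynamodb:Select": "SPECIFIC_ATTRIBUTES"},
                },
            }
        ],
    }


# Hypothetical account ID and table name.
policy = attribute_level_policy(
    "arn:aws:dynamodb:eu-central-1:123456789012:table/Articles",
    ["article_id", "title", "author", "tags"],
)
print(json.dumps(policy, indent=2))
```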

Redshift: Data Warehouse for Analytical Processes

Amazon Redshift is a fast, fully managed data warehouse for data volumes from 100GB to the petabyte range that, together with existing business intelligence tools, enables simple and cost-effective analysis of all your data. As a SQL data warehouse, Redshift supports the industry-standard Open Database Connectivity (ODBC) and Java Database Connectivity (JDBC) interfaces.

Column-based storage, data compression, and zone maps reduce the I/O overhead when executing queries. Amazon Redshift has a massively parallel processing (MPP) data warehouse architecture that parallelizes and distributes SQL operations to make optimum use of all available resources. The underlying hardware is geared to high-performance computing: Locally attached storage maximizes throughput between the CPUs and the drives, and a 10Gbps Ethernet mesh network maximizes throughput between the nodes.

Amazon Redshift scales with the number and size of compute nodes; each data warehouse cluster comprises up to 128 compute nodes, across which data and computing tasks are distributed. If more than one compute node is used, a leader node serves as the endpoint for client requests and coordinates the execution of queries on all compute nodes. A single node type is set for the entire cluster. Two groups of node types are available, optimized either for performance (dense compute, DC) or for storage (dense storage, DS).

A single node can have up to 36 vCPUs and 16TB of storage. The node type can be changed even after the cluster has been created. When an existing cluster is resized, Amazon Redshift switches it to read-only mode, provisions a new cluster of the desired size, and copies the data from the old cluster to the new one in parallel. While the new cluster is being deployed, the old cluster remains available for read queries. After the data has been copied, Amazon Redshift automatically redirects queries to the new cluster and removes the old one. As with RDS, the switch is handled by changing the DNS CNAME record.
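Zone maps work by recording the minimum and maximum value of each storage block for a column. A query can then skip blocks whose range cannot contain matching rows. The following Python sketch models one column split into fixed-size blocks. Block sizes are counted in values rather than bytes, which is a simplification.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Block:
    values: List[int]
    minimum: int
    maximum: int


def build_column(values: Sequence[int], block_size: int) -> List[Block]:
    """Split one column into blocks and record a zone map (min/max) per block."""
    blocks = []
    for start in range(0, len(values), block_size):
        chunk = list(values[start:start + block_size])
        blocks.append(Block(chunk, min(chunk), max(chunk)))
    return blocks


def range_scan(blocks: List[Block], low: int, high: int) -> Tuple[List[int], int]:
    """Return matching values and the number of blocks actually read."""
    matches: List[int] = []
    blocks_read = 0
    for block in blocks:
        if block.maximum < low or block.minimum > high:
            continue  # zone map proves no value here can match: skip the I/O
        blocks_read += 1
        matches.extend(v for v in block.values if low <= v <= high)
    return matches, blocks_read
```

The effect depends on the data order. On a sorted column, a narrow range touches only a few blocks. On unsorted data, almost every block's min/max range overlaps the query and little can be skipped.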
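The division of labor between leader and compute nodes follows a scatter-gather pattern. In the following Python sketch, rows of a hypothetical sales table are hash-distributed on a key column, which resembles Redshift's KEY distribution style. Each "compute node" computes a partial `SUM ... GROUP BY`, and the "leader node" runs those steps in parallel and merges the results. Real Redshift nodes are further divided into slices, which the sketch ignores.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, int]  # (customer_id, amount): a hypothetical sales table


def distribute_rows(rows: Iterable[Row], node_count: int) -> List[List[Row]]:
    """Hash-distribute rows on the key column so equal keys share a compute node."""
    nodes: List[List[Row]] = [[] for _ in range(node_count)]
    for row in rows:
        nodes[zlib.crc32(row[0].encode("utf-8")) % node_count].append(row)
    return nodes


def compute_node_sum(node_rows: List[Row]) -> Dict[str, int]:
    """Work done on one compute node: a partial SUM(amount) GROUP BY customer_id."""
    partial: Dict[str, int] = defaultdict(int)
    for customer_id, amount in node_rows:
        partial[customer_id] += amount
    return dict(partial)


def leader_node_query(nodes: List[List[Row]]) -> Dict[str, int]:
    """Leader node: run the step on all compute nodes in parallel, then merge."""
    result: Dict[str, int] = defaultdict(int)
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        for partial in pool.map(compute_node_sum, nodes):
            for customer_id, subtotal in partial.items():
                result[customer_id] += subtotal
    return dict(result)
```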

Choosing the Correct Tool

When choosing among the AWS database services, a few questions can help. First, investigate whether the data will be processed analytically or transactionally; for analytical processing, Amazon Redshift is a potential candidate. You also need to consider whether the data has a relational or non-relational structure and whether the database can scale with the traffic in the long term. Finally, of course, price plays a role: The Simple Monthly Calculator [5] helps you estimate monthly costs.

Relational or non-relational databases often form the basis of an application, and additional functions may be needed depending on the requirements, such as data life cycle management or aggregation and analysis. Further AWS services are available for these purposes. For example, Amazon ElastiCache offers a caching solution for which you can choose between a Redis engine and a Memcached-compatible engine.
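A common way to put a cache such as ElastiCache in front of a database is the cache-aside pattern. The application checks the cache first, queries the database on a miss, and stores the result with an expiry. In the following Python sketch, a dictionary stands in for Redis or Memcached. The loader function and TTL are hypothetical. With Redis, the store would typically be replaced by `GET` and `SET` with an expiry.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """Cache-aside reads: try the cache, fall back to the database, then fill the cache."""

    def __init__(self, load_from_db: Callable[[str], Any], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[Any, float]] = {}  # stands in for Redis/Memcached

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is not None and self._clock() < entry[1]:
            return entry[0]                   # cache hit
        value = self._load(key)               # cache miss: query the database
        self._store[key] = (value, self._clock() + self._ttl)
        return value

    def invalidate(self, key: str) -> None:
        self._store.pop(key, None)            # call after writing to the database
```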

If the data to be stored consists of events that will be processed and analyzed in large quantities as a stream, Amazon Kinesis Streams and Amazon Kinesis Analytics are the right choices. You can quickly perform experiments and feasibility studies, without getting lost in the details of the underlying technology.
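Stream analytics typically aggregates events over time windows. The following plain-Python sketch illustrates the concept behind such windowed queries rather than the Kinesis Analytics API. It counts events per type in fixed, non-overlapping (tumbling) windows. The event schema of a timestamp plus an event type is an assumption.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, event type): hypothetical schema


def tumbling_window_counts(events: Iterable[Event], window_seconds: int) -> Dict[int, Counter]:
    """Count events per type in fixed, non-overlapping time windows."""
    windows: Dict[int, Counter] = defaultdict(Counter)
    for timestamp, event_type in events:
        window_start = int(timestamp // window_seconds) * window_seconds
        windows[window_start][event_type] += 1
    return dict(windows)
```

A streaming engine computes the same result incrementally and emits each window once it closes, instead of waiting for the complete input.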

Installing Databases on EC2

In addition to the featured AWS services that provide different types of databases, you still have the option of installing a database on EC2 yourself, which gives you greater freedom in terms of products, installation, and maintenance processes. To do this, you can run the installation on an existing Amazon Machine Image (AMI) or import existing virtual machine images into your account.

The account holder has responsibility for the required licenses in a DIY database installation. EC2 provides two options – dedicated hosts and dedicated instances – for using dedicated hardware and optimizing license costs (e.g., through the use of MSDN licenses or licensing of physical cores).

Finally, the AWS Marketplace Databases & Caching category offers more than 200 listed products that are available as preinstalled versions. The offers in the Marketplace are either AMIs that launch with an EC2 instance or groups of resources that are created from a template. Depending on the offer, the license costs are billed along with the infrastructure costs, or the user contributes the licenses via a BYOL model.

Conclusions

While RDS offers a wide range of relational databases, Amazon DynamoDB gives you virtually unlimited scalability in the NoSQL field. Amazon Redshift is the tool of choice for massively parallel analyses of petabyte-scale data volumes. Last but not least, EC2 provides the freedom to install and operate almost any other database yourself. When making a choice, give priority to managed options that scale well with the amount of data, the number of users, and the number of test environments. These services also allow a fast start and provide early feedback on a solution. As part of the AWS Free Tier [6], interested parties can try out Amazon RDS, Amazon DynamoDB, Amazon Redshift, and other services and gain practical experience.