Operating systems for the cloud and containers

Young Heroes

The role of the operating system in the IT universe is at a turning point. In the pre-cloud era, the operating system was the decisive and determining component. The cloud, virtualization, and the container have rocked and permanently changed the model that has the operating system at its heart, and a number of new approaches have emerged in its wake. A lean and secure infrastructure takes care of some of the tasks; the containers built on top of this infrastructure do the rest of the work.

More or Less

Grossly simplified, the value of the service provided by an operating system is measured with reference to the software it supplies. If the expectations of the existing core software drop, then the operating system needs to have less ballast in terms of packages. Distributors offering lean Linux systems follow this principle. In the simplest case, they reduce the amount of software supplied, but if they want to do a better job, they need to look in even more depth at processes, procedures, and other design principles.

The most popular distributions that follow the lean approach are CirrOS [1], Alpine [2], JeOS (just enough operating system) [3], and the operating system formerly known as CoreOS [4], now called Container Linux, which better matches the type of operating system discussed in this article.

Scrawny Ubuntu

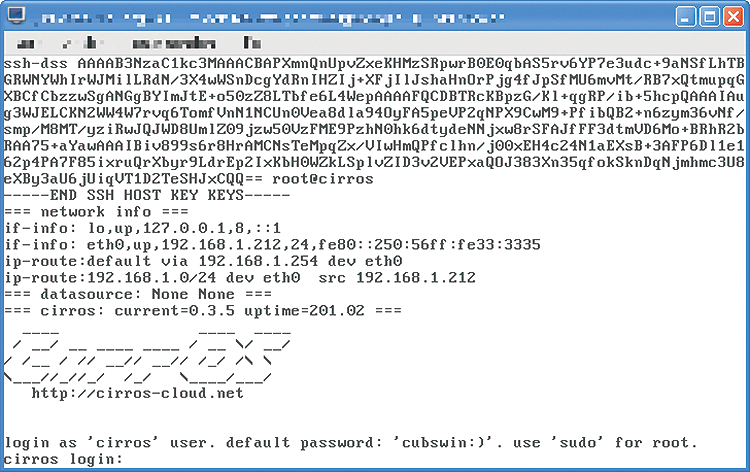

CirrOS (Figure 1) is a good illustration of the principle of "less is more." The project is now more than five years old. The first public version 0.3.0 was released in October 2011; version 0.3.5 is the most recent. The source code is released under the GNU General Public License version 2 (GPLv2).

This tiny cloud operating system comprises two components, an Ubuntu kernel and Buildroot [5], which was designed to install Linux systems for embedded applications where space is usually at a premium – also true of the cloud and container environment. The space occupied by the operating system needs to be as small as possible. The Ubuntu kernel is used because Scott Moser [6] is the guy backing CirrOS; his day job is as Ubuntu Server Technical Lead at Canonical.

At the present time, CirrOS has pre-built images for 32- and 64-bit architectures from the Intel and AMD worlds. It also supports ARM and PowerPC, but not 64-bit systems [7]. The developers primarily see their cloud operating system as a tool for testing or troubleshooting. OpenStack, for example, officially designates CirrOS as a testing image in its documentation [8].

CirrOS comes with a number of helper commands, which all start with cirros- (Table 1). All commands are shell scripts and thus allow a glance behind the scenes; however, they are not very well documented – either in the code or on the project website.

Table 1: CirrOS Helpers

| Command | Description |
|---|---|
| | Calls appropriate data source |
| | Searches for cloud-init data source |
| | Queries data from the cloud-init data source |
| | Processes a file with user data |
| | Specifies options for |
| | Runs a command at the desired frequency |
| | Outputs important status information from CirrOS |

At bootup, the traditional SysVinit system comes into play. Toward the end of the boot process, CirrOS-specific scripts designed to work with the cloud-init framework [9] then step in; the matching configuration files are found in /etc/cirros-init/. The scope of supply includes templates for Amazon EC2, for a configuration drive (configdrive), and for local starts without a cloud [10]. Thus, you can test the complete process of creating and starting up a cloud instance – including postinstall customization.

No surprises await under the hood. Data storage is handled by the almost venerable ext3. The use of BusyBox [11] for most executable programs and of Dropbear [12] as the SSH daemon is the logical consequence of the Buildroot underpinnings. Seasoned Linux admins should find their way around easily.

Container Service

CirrOS, JeOS, and – with some restrictions – Alpine are stripped-down operating systems; yet, they can be used universally. In principle, they can serve as a basis for any kind of task.

A new variation on the leaner, open source operating system started to enter the scene as containers proliferated. In contrast to the systems already discussed, the new systems are highly specialized: Their main task is to provide a foundation for the container.

Whoever had this idea first, the former CoreOS definitely cast the idea into a product. Purists might argue that Project Atomic [13] (and the commercial variant, Red Hat Atomic Host [14]) and the original Snappy Ubuntu Core [15] showed higher degrees of specialization.

Although the first versions of these lean systems saw the light of day at the end of 2014, their ecosystem is still evolving dynamically. For example, SUSE presented a kind of micro-operating system for containers at SUSECON in November 2016 [16] known as SLE MicroOS [17]. A few months ago, CoreOS renamed its own distribution to Container Linux [18]. The name is designed to make the operating system's orientation and objective more evident.

From the Captain to the Dockers

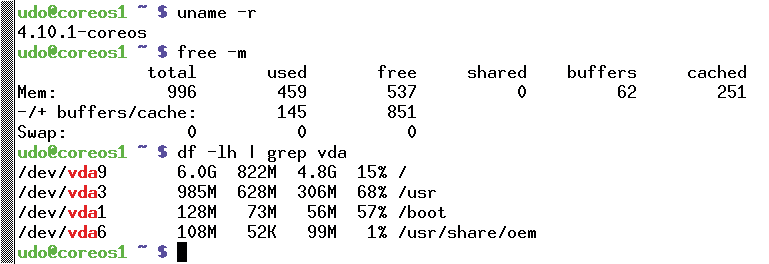

Google's operating system Chrome OS [19] inspired the design of Container Linux. The launch took place in fall 2013 under version 2 of the Apache license. Container Linux builds on components such as the kernel and systemd, so the respective licenses apply. The resource consumption proves to be quite low: around 30 and 140MB (Figure 2) for the (virtual) hard disk and in memory.

Container Linux dispenses with a package manager for several reasons. Because the operating system only provides a few basic functions, the number of installed applications was decreased, so the need for a tool to manage them disappeared.

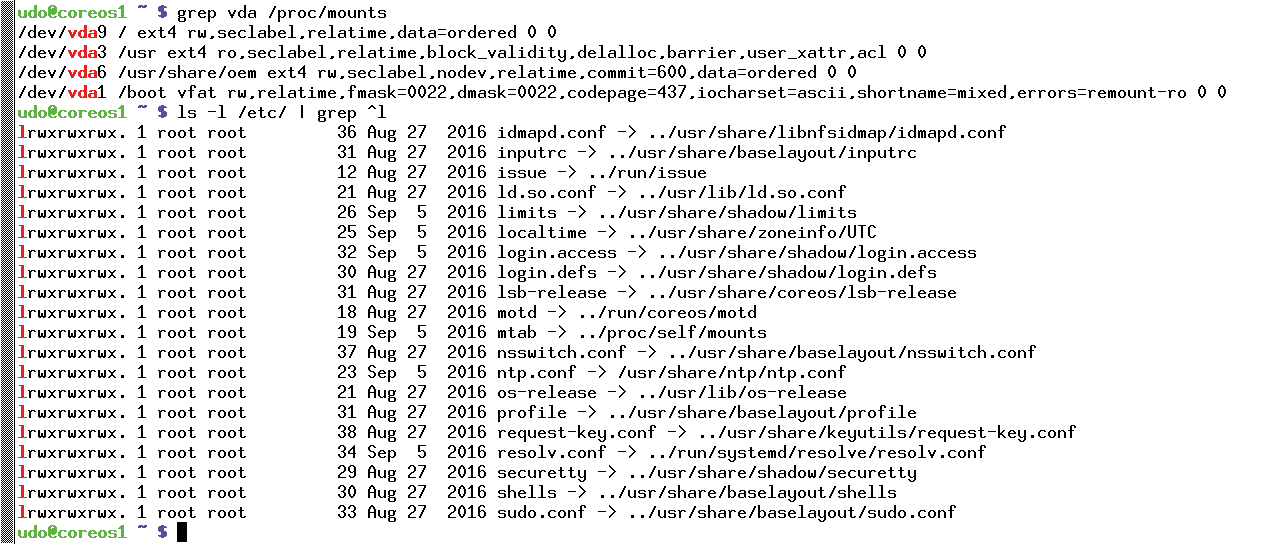

When it updates, Container Linux ruthlessly replaces the entire /usr directory instead of individual files (Figure 3); this even applies to some files in /etc. The main work is handled by Linux containers à la Docker [20] or Rkt [21], together with etcd [22] and Kubernetes [23]. In earlier versions, Fleet was also on board, but CoreOS recently dropped it [24]. The name change for the Linux distribution was accompanied by a complete focus on Kubernetes as the orchestration software, completing its transformation into a system for managing containers.

Channel Workers

A little logistical information can help get you started working with Container Linux. The developers manage three channels: stable, beta, and alpha. Seasoned Linux veterans are already familiar with this idea from Debian. The developers implement bug fixes or new features for Container Linux in the alpha channel first. Once no (more) errors occur, the changes move to the beta branch, and promotion to the stable channel follows the same principle.
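The promotion path between the three channels can be sketched in a few lines of Python. This is only an illustration of the flow described above: the function name `next_channel` and the "no open errors" rule are assumptions made for the example, not part of any Container Linux tooling.

```python
from typing import Optional

# Channel order as described in the text: changes land in alpha first.
CHANNELS = ["alpha", "beta", "stable"]


def next_channel(current: str, open_errors: int) -> Optional[str]:
    """Return the channel a change is promoted to, or None if it stays put.

    Hypothetical rule for illustration: a change moves on only when
    no errors are open, and stable is the end of the line.
    """
    if current not in CHANNELS:
        raise ValueError(f"unknown channel: {current}")
    if open_errors > 0 or current == "stable":
        return None
    return CHANNELS[CHANNELS.index(current) + 1]


if __name__ == "__main__":
    print(next_channel("alpha", 0))  # promoted to the next channel
    print(next_channel("beta", 2))   # stays in beta while errors are open
```

A real release process involves more than an error count, but the sketch shows the one-way direction of travel: nothing reaches stable without passing through alpha and beta first.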

One obvious way of versioning Container Linux would be to derive the version from components such as the kernel, etcd, or Docker, but the project behind the technology has opted for a different, somewhat obscure approach. The version number is the number of days that have elapsed since the epoch of Container Linux – July 1, 2013. The first version was released October 3, 2013, and was thus numbered 94 [25]. Because the developers also follow a semantic versioning scheme, it was dubbed 94.0.0. New features or bug fixes that are backward compatible thus do not lead to an increase in the number of days.
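The arithmetic behind the version number is easy to check. The following Python sketch uses only the epoch and release date given above; the function names `major_version` and `build_date_of` are made up for this example and do not come from the project.

```python
from datetime import date, timedelta

# Epoch named in the text; the helper names below are illustrative only.
EPOCH = date(2013, 7, 1)


def major_version(build_date: date) -> int:
    """Days elapsed between the Container Linux epoch and the build date."""
    return (build_date - EPOCH).days


def build_date_of(version: str) -> date:
    """Recover the build date from the first component of a version string."""
    major = int(version.split(".")[0])
    return EPOCH + timedelta(days=major)


if __name__ == "__main__":
    print(major_version(date(2013, 10, 3)))  # 94
    print(build_date_of("94.0.0"))           # 2013-10-03
```

Read the other way around, the first component of any version string tells you the day on which that build was cut, which is arguably the main benefit of the scheme.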

Bookcase OS

CirrOS and Container Linux still quite clearly show their descent from classic Linux distributions. The server or desktop versions of Linux were the starting point for these leaner, customized versions.

The question of how much operating system is still needed in the cloud can be viewed from a different perspective. MirageOS [26], according to the developers, traces its beginnings back to 2009. The project first offered it to the general public as version 1.0 in December 2013. However, MirageOS is less an operating system and more of a library.

The library operating system harks back to the 1990s [27] [28]. The concept is radical: The application is at the same time the operating system core to be booted – that is, more or less the complete virtual server. Many tasks of a traditional kernel fall away or are reduced significantly, such as managing the network or memory, opening up new options with respect to the choice of programming language.

MirageOS delegates many tasks to the underlying layer. Normally this is a hypervisor, but it can also be a traditional Linux. In the current version 3, MirageOS supports Xen [29] and KVM [30]. On the hardware side, the available architectures are x86 and ARM. MirageOS is released under the Internet Systems Consortium (ISC) license [31]. Organizationally, the cloud operating system is listed as one of the incubator projects within Xen [32].

Camel Master

If you want to use MirageOS as your cloud operating system, you need to master the OCaml [33] functional programming language. Because the kernel does not need to perform many tasks handled by a traditional kernel, C or C++ are not necessarily the first choice. For kernels acting as a single-application kernel – also known as a unikernel [34] – the tendency is to use languages that are higher level, which offers a few advantages.

In the case of OCaml, the developers cite automatic memory management, type checking at build time, and a modular structure [34]. Also, the generated machine code is reputed to be fast and efficient. For hard-core C programmers, OCaml probably represents a big hurdle when switching over to MirageOS. One important tool is the OPAM [35] package manager, which the cloud operating system uses to install the required libraries when generating the unikernel (Listing 1).

Listing 1: Building MirageOS with OPAM Support

    $ mirage configure --unix
    Mirage Using scanned config file: config.ml
    Mirage Processing: /home/user/mirage-skeleton/console/config.ml
    Mirage => rm -rf /home/user/mirage-skeleton/console/_build/config.*
    Mirage => cd /home/user/mirage-skeleton/console && ocamlbuild -use-ocamlfind -tags annot,bin_annot -pkg mirage config.cmxs
    console Using configuration: /home/user/mirage-skeleton/console/config.ml
    console 1 job [Unikernel.Main]
    console Installing OPAM packages.
    console => opam install --yes mirage-console mirage-unix
    The following actions will be performed:
     - install mirage-unix.2.0.1
     - install mirage-console.2.0.0
    === 2 to install ===
    =-=- Synchronizing package archives -=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=
    =-=- Installing packages =-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=
    Building mirage-unix.2.0.1:
      make unix-build
      make unix-install PREFIX=/home/user/.opam/4.01.0
    Installing mirage-unix.2.0.1.
    Building mirage-console.2.0.0:
      make
      make install
    Installing mirage-console.2.0.0.
    console Generating: main.ml
    $

If OCaml and OPAM are already running in your development system, you can get started without delay. If not, you should refer to the installation guide [36].

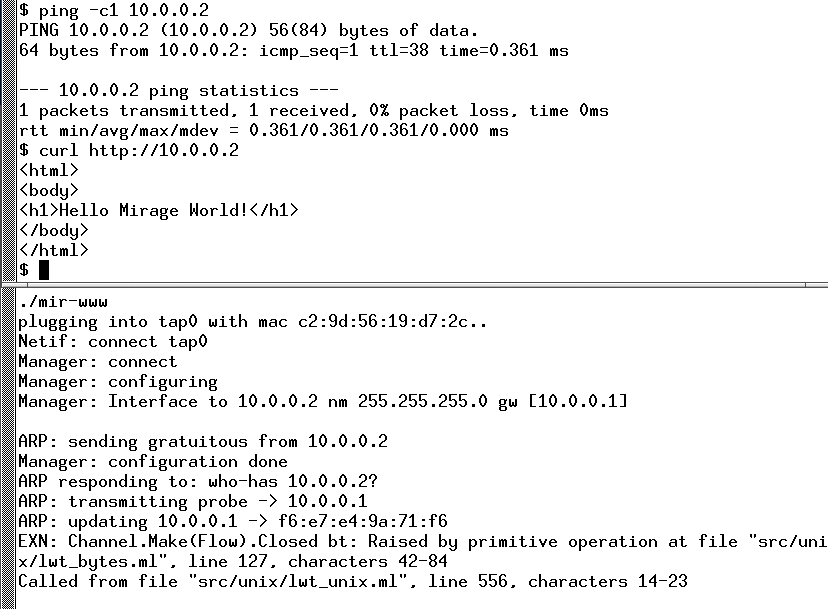

Of course, a "Hello World" example is obligatory [37]; it is also very educational, because it explains how essential aspects of MirageOS work and how different this operating system is. To begin, the guide explains how the application handles output to a console. Because the application is the kernel, managing the devices it uses cannot simply be outsourced; the application needs to handle these tasks itself. The second lesson relates to basic disk access through block devices, which are popular in the cloud environment. The tricky stuff then starts with the network stack, including a simple web server (Figure 4).

MirageOS lets you generate the application binary in two different formats. The mirage configure --unix command produces a compiled file that traditional Linux environments run as a normal process (Listing 2). With mirage configure --xen, the application is booted as a Xen kernel; in this case, you end up with a configuration file that you can process with the familiar xl commands. The MirageOS tooling also produces the corresponding file in XML format.

Listing 2: Building the MirageOS Unikernel

    $ mirage configure --unix
    [...]
    $
    $ make depend
    [...]
    $
    $ make
    ocamlbuild -classic-display -use-ocamlfind -pkgs lwt.syntax,mirage-console.unix,mirage-types.lwt -tags "syntax(camlp4o),annot,bin_annot,strict_sequence,principal" -cflag -g -lflags -g,-linkpkg main.native
    [...]
    ln -nfs _build/main.native mir-console
    $
    $ file _build/main.native
    _build/main.native: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked (uses shared libs), for GNU/Linux 2.6.32, BuildID[sha1]=734e3fc7a56cd83bc5ac13d124e4a994ce39084f, not stripped

The examples on the MirageOS project website are smart and work well. Installing an existing application on this cloud operating system always requires a new implementation. In addition to writing the actual application, developers also have to worry about accessing block devices and the network, which – combined with a new programming language – is not a small price to pay.

This and That

The MirageOS approach is quite dramatic and may go somewhat beyond what many cloud operators are prepared to do. However, there is a less painful alternative: OSv [38] is another well-known representative of the unikernel camp, which includes other candidates [39].

The new lean systems (including CirrOS and Container Linux) are attracting interest among security experts. The stripped-down userspace reduces the number of potential vectors for attackers. Lean software management reduces the risk of a security hole from procedural red tape or simple technical problems during an upgrade.

Anything that works in userspace can be transferred easily to the operating system core. The easiest way would be to create a customized kernel that contains only the necessary software and configuration.

However, unikernels take it one step further by questioning even the ingredients and the recipe for building a kernel. Some people point out that exotic systems are less vulnerable because they have fewer tools and because people lack the necessary knowledge to wreak havoc, but it's a double-edged sword: With fewer users and experts, the risk of an undiscovered vulnerability is also greater. The relevant points are the reduced attack surface, fewer active functions, and simplified software management.

Conclusions

Clouds and containers have given rise to demand for new open source operating systems. Some are quite close to the classic Linux, whereas others started life in a green field and openly ignore any historical ballast. CirrOS and Container Linux are two candidates from the first camp. MirageOS and OSv are representatives of the green field, "start from scratch" generation.

No general recommendations can be made for IT environments, but the more recent operating systems clearly show that the playing field has changed. Studying implementations of CirrOS, Container Linux, or MirageOS can deliver ideas and new approaches.