Troubleshooting Kubernetes and Docker with a SuperContainer

Super Powers

We're often advised that containers should be as small as possible. Containers are designed for microservices – the decoupling of components into small, manageable applications. And by applications I mean processes. In other words, your containers should run one main process with a few supporting child processes to assist the parent on its travels.

A smaller container means better security, with less code, fewer packages, and a smaller attack surface. From a performance perspective, large, bloated, and unwieldy images lead to slower execution times. Another issue is image registry costs. Image layering is designed to save only the differences (diffs) between the last saved version of an image and the newly pushed image. If developers frequently alter chunky binaries within their builds, however, those changes are not absorbed by the layering process, and each subsequent tag update can easily increase an image's size by another couple of hundred megabytes per push. That might sound trivial, but what about hundreds of developers pushing to a registry several times a day? Cloud storage costs soon add up.

But what happens when you try to troubleshoot the container using your favorite admin tools? In this article I'm going to help you create what I'll call a SuperContainer. The following approach suits both Kubernetes pods running the Docker run time and, indeed, straightforward Docker containers.

The idea for SuperContainers comes from a well-written blog post by Justin Garrison [1], who in turn attributes the mechanics to the frighteningly clever Justin Cormack [2] of Docker and LinuxKit fame. In this case, I'm using Docker as an example container run time for a SuperContainer. If you use this approach with Kubernetes, a good starting point is to run it directly on the minion (or node) that is running the troublesome container.

The SuperContainer described in this article will be able to access the filesystem, query the process table, and use the network stack of a neighboring container without tainting the target container.

Hopefully you agree that sounds intriguing and highly useful. A SuperContainer can give you full access to your everyday admin tools, without the need to install them inside your troubled container and without affecting your precious services. Bear in mind that SuperContainers are extremely powerful, and you have to use them carefully. You should definitely test them in a sandbox before using them in production (you've been warned!), and you shouldn't leave them lying around when you are finished with them, because hackers may take advantage of the admin tools.

Super User

When you are running the smallest containers that you can create, especially in enterprise environments, you're sometimes left in a difficult position if you need to troubleshoot a container without affecting its packages and codebase. Conventional wisdom says you shouldn't edit or add to running containers because they're ephemeral and short-lived. However, it is often necessary to debug an issue directly from inside a container to view a problem from the container's perspective.

The docker exec command lets you access a running container, but once you're inside, you might find that your favorite admin tools are missing because of a desire to keep the container's footprint as small as possible. To compound the issue, a command like

$ docker exec -it chrisbinnie-nginx sh

sometimes opens up an old-fashioned shell, not even a Bash shell. Sometimes I don't even find the ps command present to query the process table and check which processes are running.
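To get a quick idea of how bare a target container is, a small loop can report which tools are absent. The following is only a sketch: the helper name missing_tools is hypothetical, and it assumes the target image at least ships a POSIX sh that supports command -v.

```shell
#!/bin/sh
# missing_tools CONTAINER TOOL...
# Prints the name of each TOOL that cannot be found inside CONTAINER.
# Hypothetical helper; assumes the container provides a POSIX sh.
missing_tools() {
    container=$1
    shift
    for tool in "$@"; do
        # command -v exits non-zero when the tool is not on the PATH
        if ! docker exec "$container" sh -c "command -v $tool" >/dev/null 2>&1; then
            echo "$tool"
        fi
    done
}

# Example: missing_tools chrisbinnie-nginx ps bash curl strace
```

Every name printed is a tool you would otherwise have to install inside the container, which is exactly what the SuperContainer approach avoids.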

Don the Cape

To make sure I have the tools I need, I constructed the Dockerfile shown in Listing 1.

Listing 1: SuperContainer Dockerfile

FROM debian:stable
LABEL author=ChrisBinnie
LABEL e-mail=chris@binnie.tld
RUN apt update && \
    apt install -y netcat telnet traceroute libcap-ng-utils curl \
    wget tcpdump ssldump rsync procps fping lsof nmap htop \
    strace net-tools && apt clean
CMD bash

If you look at Listing 1, I'm sure you'll spot all the usual suspects. Look out for a couple of details, too, such as my preference for Debian (even though Alpine would be sleeker) and the procps package, which provides access to the often-taken-for-granted ps command.

Also, if you look at the RUN command in the Dockerfile in Listing 1, you'll see that I've kept the image's layers to a minimum by chaining "apt update", the package installation, and "apt clean" with double ampersands (&&) in a single instruction. This avoids creating a separate layer for each command or package.

It should go without saying that you can add a heap of stuff into that Dockerfile if you feel the need; once built, the image in Listing 1 sits at a whopping 276MB the last time I checked. Simply prune it to your needs for quicker pull access and build times.

Save the Day

From inside the directory that contains your Dockerfile, you can build the image from Listing 1 with the following command:

$ docker build -t supercontainer .
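Once the build finishes, the image size mentioned above can be checked before deciding what to prune. This is a minimal sketch; the helper name image_size_mb is hypothetical, and it relies on docker image inspect reporting the Size field in bytes.

```shell
#!/bin/sh
# image_size_mb IMAGE
# Prints the size of a local image in whole (decimal) megabytes, rounded down.
# Hypothetical helper, shown for illustration only.
image_size_mb() {
    # .Size is reported in bytes
    bytes=$(docker image inspect --format '{{.Size}}' "$1") || return 1
    echo $((bytes / 1000000))
}

# Example: image_size_mb supercontainer
# Per-layer sizes can be listed with: docker history supercontainer
```

The docker history output is usually the quicker way to spot which instruction contributes most to the total.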

Next, tag and push the newly created image to a registry, so that it is accessible later. My remote registry's repository is called chrisbinnie, so I use the following commands:

$ docker tag supercontainer chrisbinnie/supercontainer:latest
$ docker login
username: chrisbinnie
password: not-telling-you
$ docker push chrisbinnie/supercontainer:latest

You now have a troubleshooting SuperContainer (as long as you have network access to run a docker pull from the registry). The next step is to put that SuperContainer to good use.

The Target

The final step is identifying a problematic container to troubleshoot and then firing up a SuperContainer on the same host as that misbehaving container. Without further ado, here's the clever part.

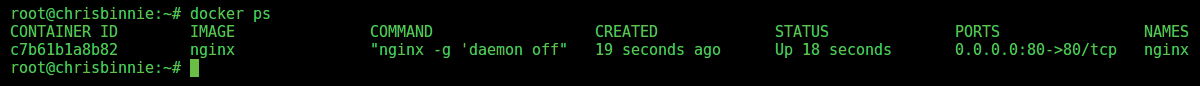

The target container is shown in Figure 1 using the docker ps command. Note that the container is an Nginx container, which I have explicitly named nginx. In Kubernetes, the name would be a pod name such as nginx-kv152x. You should first find out which minion (or node) your pod is running on; the NODE column in the output of a command such as the following shows it:

$ kubectl get pods -o wide
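For a single pod, the node name can also be read directly from the pod's spec, which is handy in scripts. The sketch below assumes kubectl is already configured for the cluster; the helper name pod_node is hypothetical, and the namespace falls back to default when none is given.

```shell
#!/bin/sh
# pod_node POD [NAMESPACE]
# Prints the name of the node that the given pod is scheduled on.
# Hypothetical helper; NAMESPACE defaults to "default".
pod_node() {
    kubectl get pod "$1" --namespace "${2:-default}" \
        -o jsonpath='{.spec.nodeName}'
}

# Example: pod_node nginx-kv152x
```

With the node name in hand, you can log in to that host and start the SuperContainer next to the troublesome container.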

In Figure 1, you can see that the target container has the name nginx, on the right-hand side, and that TCP port 80 on the host is mapped to the HTTP port (TCP port 80) in the container, as shown under PORTS.

The run-time parameters, which make the SuperContainer so useful, are based on launching the SuperContainer within the same process namespace and network namespace as the target container.

To achieve this kernel trickery, you need to fire up the SuperContainer with a handful of command-line switches and also provide the CAP_SYS_ADMIN capability. If you're not familiar with the CAP_SYS_ADMIN capability, it is a powerful mechanism for opening up system permissions. However, it is not quite as daunting as running a container in privileged mode, which also circumvents a kernel's cgroup resource limitations. Look online for more information on kernel capabilities [3].

The capabilities man page warns about the power of SYS_ADMIN access by saying "Don't choose CAP_SYS_ADMIN if you can possibly avoid it!" A long overloaded list shows the complications associated with running a process at this capability level.

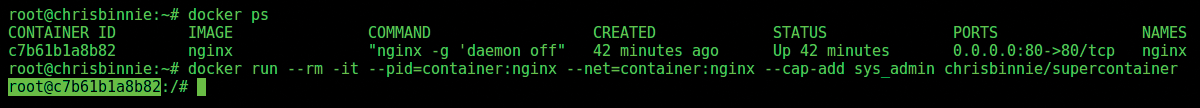

Back to the all-important command line, I run the SuperContainer as follows:

$ docker run --rm -it --pid=container:nginx --net=container:nginx --cap-add sys_admin chrisbinnie/supercontainer

Take a closer look at this clever command line. I am running the container with --rm, so it is destroyed afterwards (so attackers can't take advantage of it), and I'd also recommend deleting the image after using it on a host. I use the --pid switch to enter the same process namespace as the container named nginx. In exactly the same way, I use --net to access its network stack. Finally, I add the tricksy SYS_ADMIN capability.
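If you find yourself typing those switches often, they can be wrapped in a small shell function. This is just a sketch: the function name supercontainer is hypothetical, and the image name defaults to the one pushed earlier in this article.

```shell
#!/bin/sh
# supercontainer TARGET [IMAGE]
# Starts a throwaway SuperContainer inside TARGET's PID and network namespaces.
# Hypothetical wrapper; IMAGE defaults to chrisbinnie/supercontainer.
supercontainer() {
    target=$1
    image=${2:-chrisbinnie/supercontainer}
    docker run --rm -it \
        --pid="container:$target" \
        --net="container:$target" \
        --cap-add sys_admin \
        "$image"
}

# Example: supercontainer nginx
# When you have finished, remove the image from the host:
#   docker rmi chrisbinnie/supercontainer
```

Keeping the target name as the only required argument makes it harder to attach to the wrong container by mistyping one of the two namespace switches.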

If you struggle to get access to your applications from inside the target container, you can add other capabilities and, as a last resort, use --privileged mode. For example, to run strace as a syscall debugging tool (syscalls are made whenever a process needs to request a service from the kernel), you could change the CMD line in your custom Dockerfile as follows:

CMD ["strace", "-p", "1"]

You would also need to add the CAP_SYS_PTRACE capability.
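As an alternative to rebuilding the image, the command can be overridden at run time, because anything placed after the image name in docker run replaces the Dockerfile's CMD. The following sketch assumes the image from Listing 1; the function name strace_target is hypothetical, and depending on the host's seccomp or AppArmor settings, further permissions might still be needed.

```shell
#!/bin/sh
# strace_target TARGET [PID]
# Attaches strace to a process in TARGET's PID namespace (PID 1 by default).
# Hypothetical wrapper around the image built from Listing 1.
strace_target() {
    target=$1
    pid=${2:-1}
    docker run --rm -it \
        --pid="container:$target" \
        --cap-add sys_ptrace \
        chrisbinnie/supercontainer \
        strace -p "$pid"
}

# Example: strace_target nginx
```

Because only the PID namespace is needed to attach to a process, this sketch leaves out --net and SYS_ADMIN and grants just the one capability that strace requires.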

If you're interested in more detail about CAP_SYS_PTRACE, the capabilities man page lists the following system resource access:

- Use ptrace(2) to trace arbitrary processes.

- Apply get_robust_list(2) to arbitrary processes.

- Use process_vm_readv(2) and process_vm_writev(2) to transfer data to or from the memory of arbitrary processes.

- Use kcmp(2) to inspect processes.

Super Power

Having executed the run command, you have spawned an interactive terminal offering access to your SuperContainer.

Figure 2 shows the SuperContainer running with an arbitrary hashed name (c7b61b1a8b82).

Super Process Tables

Be warned that what follows might take a moment to get used to; it is clever, simple, and yet a little surprising. If you have ever used a chroot in the past, it isn't quite as discombobulating.

Remember that the plan is to access another container from within the SuperContainer by using the same kernel namespaces as the target.

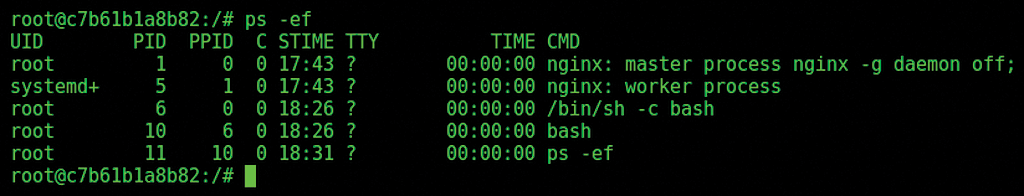

Figure 3 shows a standard command to check the process table. But… look! The Nginx web server is definitely not running inside the SuperContainer, so I know for certain that I'm accessing the target container, named nginx.

I can identify which processes are running within the container, under which user, and with which PID and parameters, as shown in Figure 3.

Super Filesystems

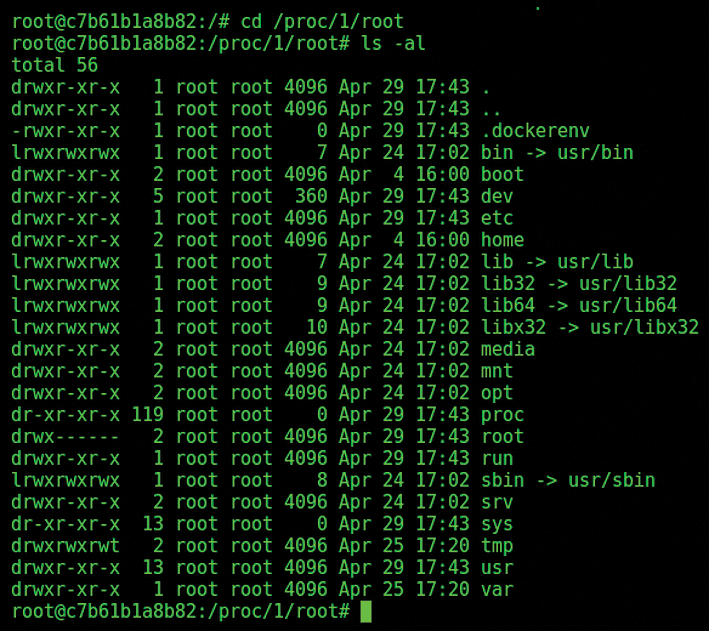

In order to access the target container's filesystem, I need to enter the local SuperContainer pseudo filesystem, which resides under /proc.

In Figure 4, you can see a directory listing for the path /proc/1/root.

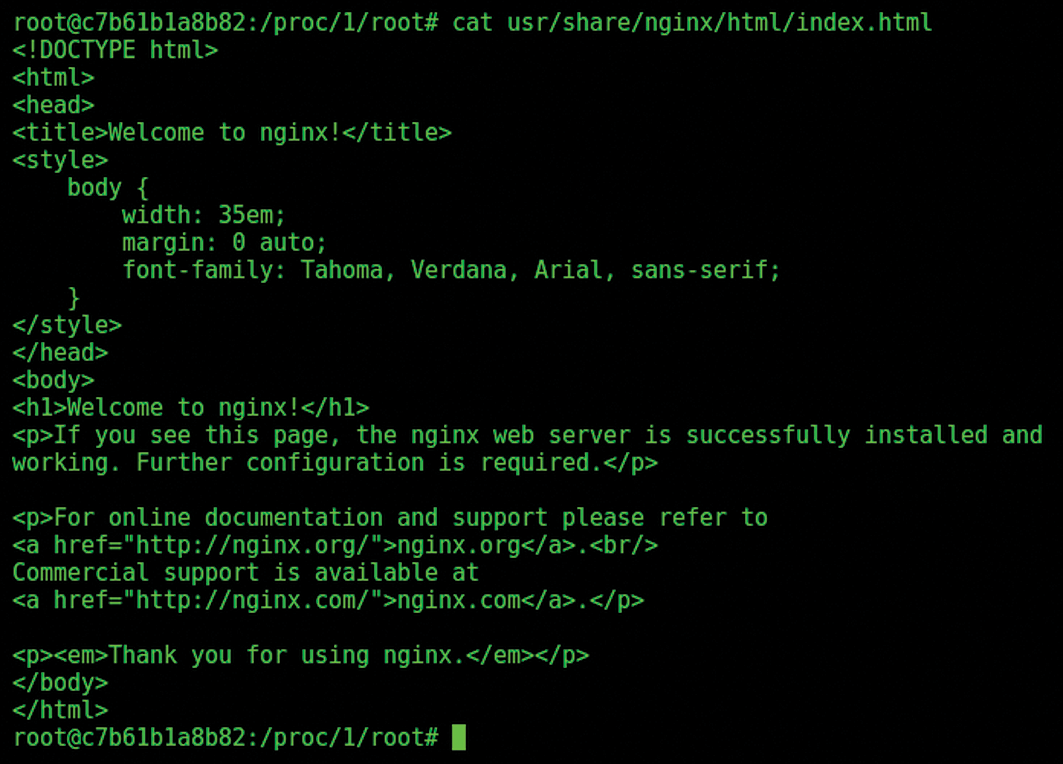

Admittedly, the file listing in Figure 4 could potentially be from the local container and not the target container. In Figure 5, you can see the proof of the pudding. The figure shows the index.html file, which is reached from inside the SuperContainer at the full path /proc/1/root/usr/share/nginx/html/index.html.
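The path translation used here is mechanical: a file in the target container appears under /proc/PID/root, where PID is a process belonging to the target in the shared process namespace. A tiny helper makes that explicit. It is a sketch with a hypothetical name (target_path), and it assumes, as in the figures, that PID 1 is the target's main process.

```shell
#!/bin/sh
# target_path ABSOLUTE_PATH [PID]
# Maps a path inside the target container to the matching path under /proc,
# as seen from the SuperContainer. Hypothetical helper; PID defaults to 1.
target_path() {
    echo "/proc/${2:-1}/root$1"
}

# Example, run inside the SuperContainer:
#   cat "$(target_path /usr/share/nginx/html/index.html)"
```

Reading files this way leaves the target untouched, whereas writing through the same path would modify the target container's filesystem, so take care with anything other than read-only commands.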

Super Network Stacks

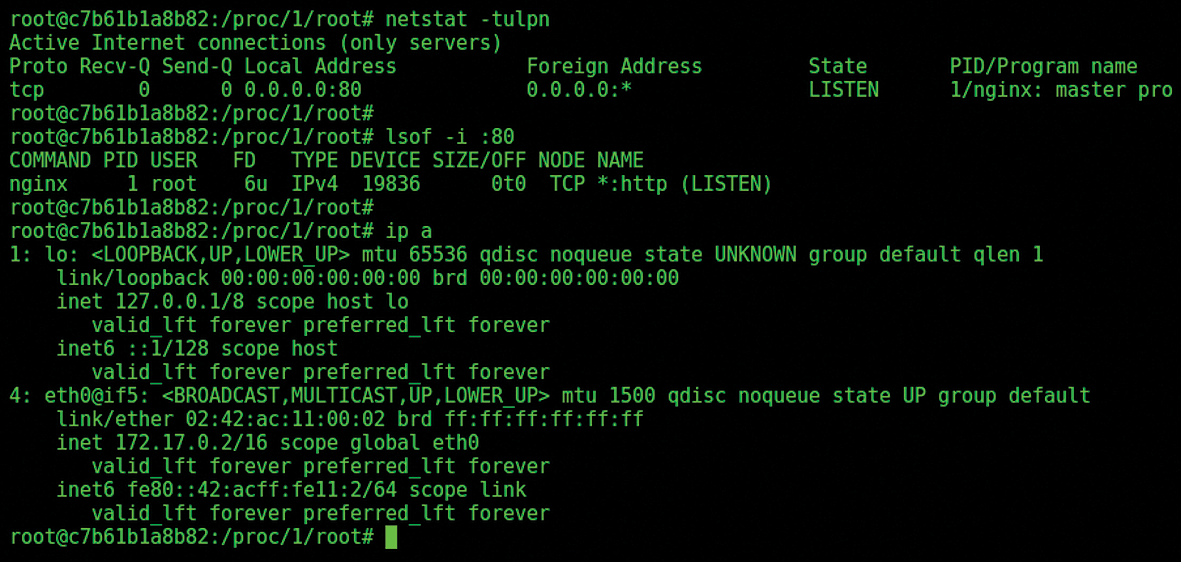

Next I'll check to see if I can access the target container's network. I'll run a couple of the networking tools I chucked into the Dockerfile earlier (see Figure 6).

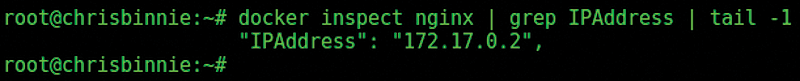

I used the networking tools in Figure 6 to check the internal IP address. I could see TCP port 80, which HTTP runs on, but I need to confirm that I am actually seeing the correct network stack from outside the target container. This time I work from the host with the following command:

$ docker inspect nginx | grep IPAddress | tail -1

Figure 7 shows the output from the docker inspect command after querying the container, which is named nginx.
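If you prefer not to rely on grep and tail, docker inspect can print just the address with a format template. The sketch below uses a hypothetical helper name, container_ip, and prints the address of each network the container is attached to (normally a single one).

```shell
#!/bin/sh
# container_ip CONTAINER
# Prints the IP address(es) Docker has assigned to CONTAINER.
# Hypothetical helper; run it on the host, not inside the SuperContainer.
container_ip() {
    docker inspect \
        --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' \
        "$1"
}

# Example: container_ip nginx
# Compare the result with the address that ifconfig (from the net-tools
# package in Listing 1) reports inside the SuperContainer.
```

If the two addresses match, the SuperContainer is indeed sharing the target container's network stack.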

Step Away

With a smattering of lateral thinking, I am certain that this tool can be extremely useful in a number of differing scenarios. Using debugging tools like strace (carefully) on production services is just one suggestion.

If you use the concept creatively, a SuperContainer might just save the day sometime in the future.

I for one will enjoy experimenting with SuperContainers across different use cases. You don't necessarily need access to a remote registry to pull the prebuilt image. Once you've memorized the command-line switches, you can create that simple Dockerfile with ease.