Time-series-based monitoring with Prometheus

Lighting Up the Dark

Wherever container-based microservices spread, classic monitoring tools such as Nagios [1] and Icinga [2] quickly reach their limits. They are simply not designed to monitor short-lived objects such as containers. In cloud-native environments, Prometheus [3], with its time series database approach, has therefore blossomed into an indispensable tool. The software is related to the Kubernetes [4] container orchestrator: Whereas Kubernetes comes from Google's Borg cluster system, Prometheus is rooted in Borgmon, the monitoring tool for Borg.

Matt Proud and Julius Volz, two former site reliability engineers (SREs) at Google, started the project at SoundCloud in 2012. Starting in 2014, other companies began taking advantage of it. In 2015, the creators published it as an open source project with an official announcement [5], although it had already been available as open source on GitHub [6]. Today, Prometheus is developed under the umbrella of the Cloud Native Computing Foundation (CNCF) [7], along with other prominent projects such as Containerd, rkt, Kubernetes, and gRPC.

What's Going On

Thanks to its minimalist architecture and easy installation, Prometheus, written in Go, is easy to try out. To install the software, first download Prometheus [8] and then unpack and launch:

tar xzvf prometheus-1.5.2.linux-*.tar.gz
cd prometheus-1.5.2.linux-amd64/
./prometheus

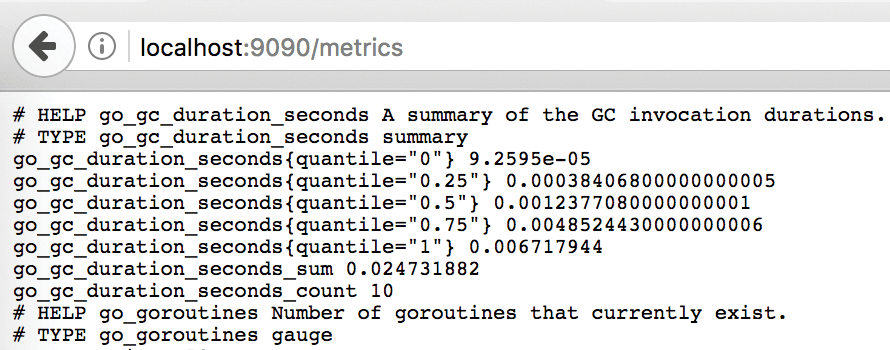

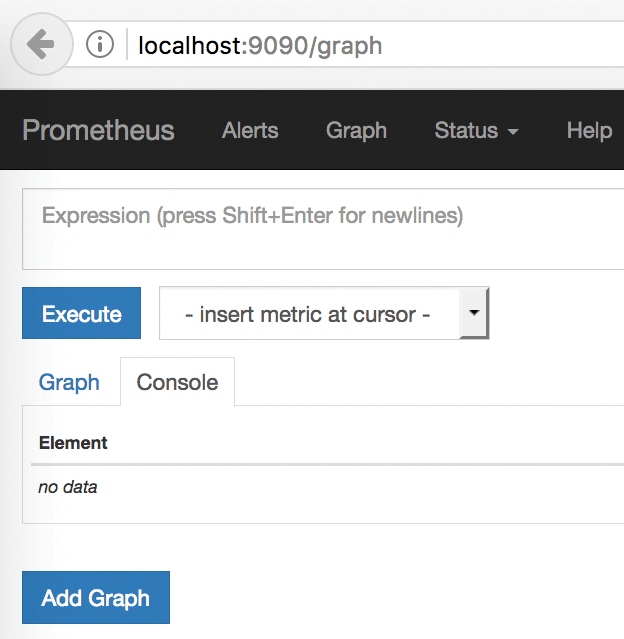

If you then call http://localhost:9090/metrics in your web browser, you will see the internal metrics for Prometheus (Figure 1). You can reach the Prometheus web interface shown in Figure 2, which is intended more for debugging purposes, from http://localhost:9090/graph. Many articles, blog posts, and conference keynotes [9]-[11] can help you delve into the depths of Prometheus. In this article, I focus on retrieving metrics.

Collecting Metrics

Classic monitoring tools like Icinga and Nagios monitor components or applications with the help of small programs (plugins). This approach is known as "blackbox monitoring." Prometheus, however, is a representative of the "whitebox monitoring" camp, wherein systems and applications voluntarily provide metrics in the Prometheus format. Applications that do this are known as "instrumented applications"; their number is growing steadily and already includes Docker, Kubernetes, Etcd, and recently GitLab.

Supported by exporters, Prometheus watches more than just instrumented systems and applications. As independent programs, exporters extract metrics from the monitored systems and convert them to a Prometheus-readable format. The most famous of these is node_exporter [12], which reads and provides operating system metrics such as memory usage and network load. Meanwhile, a number of exporters [13] exist for a wide range of protocols and services, such as Apache, MySQL, SNMP, and vSphere.

Making Nodes Transparent

To test node_exporter (like Prometheus, also written in Go), enter

tar xzvf node_exporter-0.14.0.*.tar.gz
cd node_exporter-0.14.0.linux-amd64
./node_exporter

to find a list of metrics for your system, which you can view at http://localhost:9100/metrics. You are now in a position to set up quite meaningful basic monitoring.

Node Exporter is confined to machine metrics by definition, whereas information about running processes is typically found at the application level, which requires other exporters. If you pass a directory into node_exporter with the --collector.textfile.directory option, Node Exporter reads the *.prom text files stored in the directory and evaluates the metrics they contain. A cron job can pass its completion time to Prometheus like this, writing to a temporary file first and then renaming it so that Node Exporter never reads a half-written file:

echo my_batch_completion_time $(date +%s) > </path/to/directory/my_batch_job>.prom.$$
mv </path/to/directory/my_batch_job>.prom.$$ </path/to/directory/my_batch_job>.prom
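The same write-then-rename pattern can be sketched in Python for batch jobs that are not shell scripts. This is only a minimal sketch: the function name `write_prom_file` and its parameters are hypothetical and not part of Node Exporter or any Prometheus library.

```python
import os
import tempfile


def write_prom_file(directory, job_name, metric_name, value):
    """Atomically write one metric to <directory>/<job_name>.prom."""
    final_path = os.path.join(directory, job_name + ".prom")
    # The temporary file lives in the same directory so that the rename
    # stays on one filesystem. Its name does not end in .prom, so the
    # textfile collector ignores it until the rename has happened.
    fd, tmp_path = tempfile.mkstemp(
        dir=directory, prefix=job_name + ".", suffix=".tmp"
    )
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(f"{metric_name} {value}\n")
        # mkstemp creates the file readable by its owner only; widen the
        # permissions so an exporter running as another user can read it.
        os.chmod(tmp_path, 0o644)
        os.replace(tmp_path, final_path)
    except BaseException:
        # Clean up the temporary file if anything failed before the rename.
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
    return final_path
```

A cron job written in Python could then finish with a call such as `write_prom_file(directory, "my_batch_job", "my_batch_completion_time", int(time.time()))`, where `directory` is the path passed to the textfile collector.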

Prometheus queries the configured monitoring targets at definable intervals. The software distinguishes between jobs and instances [14]: An instance is a single monitoring target, whereas a job is a collection of similar instances. The polling intervals are usually between 5 and 60 seconds; the default is 15 seconds. The metrics are always transmitted via HTTP: A browser receives them as plain text, whereas Prometheus itself uses the more efficient protocol buffer format. This two-pronged approach makes it easy to see exactly what metrics an application or an exporter provides.
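Because the text format is line oriented, a few lines of Python are enough to read it. The following sketch is a simplified reader, not the official parser: the names `parse_metrics`, `SAMPLE_RE`, and `LABEL_RE` are my own, escape sequences in label values are left as they are, and malformed lines are silently skipped.

```python
import re

SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'  # metric name
    r'(?:\{(?P<labels>.*)\})?'               # optional {label="value",...}
    r'\s+(?P<value>\S+)'                     # sample value
    r'(?:\s+(?P<timestamp>-?\d+))?\s*$'      # optional timestamp
)
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text):
    """Return a list of (name, labels, value) tuples from exposition text."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        # Lines starting with '#' carry HELP and TYPE information or comments.
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # simplified: ignore anything that does not parse
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        samples.append(
            (match.group("name"), labels, float(match.group("value")))
        )
    return samples
```

The input text could come from any metrics endpoint, for example the body returned by `urllib.request.urlopen("http://localhost:9100/metrics")` once node_exporter is running.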

Target Practice

A monitoring system that monitors only itself doesn't make much sense. Targets for Prometheus are stored in the prometheus.yml file. If the file is not in the program directory, you can pass its path to Prometheus with the -config.file option. To query the metrics of a node_exporter running locally, you would add the following definition to the scrape_configs section:

- job_name: 'node'
  static_configs:
    - targets: ['localhost:9100']

You can add more instances by expanding the list of targets (e.g., ['localhost:9100', '10.1.2.3:9100', …]). You can stipulate other types of jobs, such as instrumented applications or other exporters, as separate job_name sections.

Discovery

In environments with short-lived instances, such as Docker or Kubernetes, you would not copy the instances manually to a configuration file. Another Prometheus feature, service discovery, can help. The monitoring software independently discovers monitoring targets for Azure, the Google Compute Engine (GCE), Amazon Web Services (AWS), or Kubernetes. The entire list, configuration parameters, and formats are available online [15].

File-based service discovery plays a special role: Prometheus reads its targets from all files that match the patterns listed in prometheus.yml and automatically detects changes to these files. With this mechanism, you can tap into configuration tools such as Ansible; only your imagination sets the limits.
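As a rough illustration, a small script can generate such a target file from whatever inventory you have at hand. The sketch below writes the JSON form of a target group (a list of objects with `targets` and `labels` keys); the function name `write_targets_file`, the file name, and the label values are assumptions made for this example.

```python
import json
import os
import tempfile


def write_targets_file(path, targets, labels=None):
    """Write one target group as JSON for file-based service discovery."""
    target_groups = [{"targets": sorted(targets), "labels": labels or {}}]
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file and rename it afterward, so Prometheus
    # never picks up a partially written file.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(target_groups, handle, indent=2)
    os.replace(tmp_path, path)
    return target_groups
```

A call such as `write_targets_file("targets/nodes.json", ["localhost:9100", "10.1.2.3:9100"], {"env": "test"})` would produce a file that a `file_sd_configs` entry with a matching `files` pattern in prometheus.yml could pick up.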

Architecture

As a diagram of the project architecture shows [16], Prometheus takes care of querying the targets and saving the metrics in a (local) time series database. Furthermore, it comes with the intelligent PromQL query language that lets you query stored metrics for visualization, aggregation, and alerting.

Prometheus forwards alerts to the Alertmanager, which can deduplicate and mute the alerts (e.g., during maintenance windows). The Alertmanager then uses email, Slack, or a generic webhook to forward the alerts to the admin.

The Pushgateway is another component that fields and caches results from short-lived programs. In this way, Prometheus can also collect metrics from batch jobs, which push their results to the Pushgateway instead of waiting to be queried.

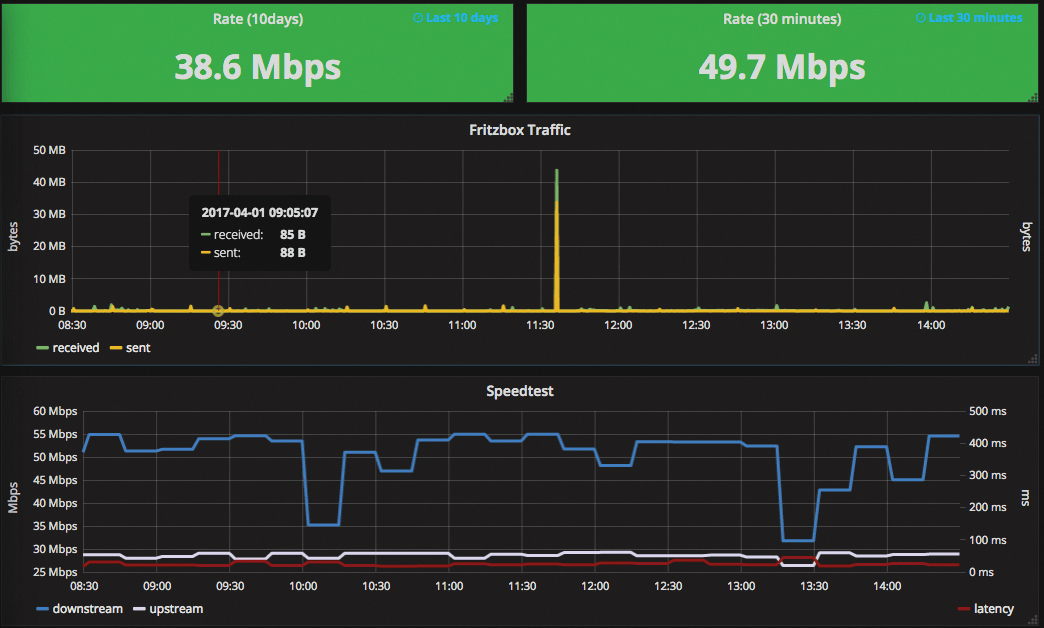

To replace the built-in web interface referred to earlier, Grafana [17], which is not associated with the Prometheus project, can be used for more elaborate dashboards (see also the article on Grafana in this issue). Grafana is now regarded as the standard tool for visualization and dashboards and already offers a wide variety of prebuilt dashboards for Prometheus that you can import into your own Grafana installation [18]. Figure 3 shows one that I use to monitor my VDSL Internet connection [19].

Prometheus Metrics

Prometheus basically supports counters and gauges as metrics; they are stored as entries in the time series database. A counter metric,

http_requests_total{status="200",method="GET"}@1434317560938 = 94355

comprises an intuitive name, a 64-bit time stamp, and a float64 value. A metric can also include labels, stored as key-value pairs, that offer a very easy approach to making a multidimensional metric. The above example extends the number of HTTP requests by adding the status and method properties. In this way, you can specifically look for clusters of HTTP 500 errors.

Take care: Each unique combination of label values generates its own time series, which dramatically increases the space requirements of the time series database. The Prometheus authors therefore advise against using labels to store properties with high cardinality. As a rule of thumb, the number of possible values for a label should not exceed 10. For data of high cardinality, such as customer numbers, the developers recommend analyzing the logfiles instead, or you could operate several specialized instances of Prometheus.
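A quick back-of-the-envelope calculation shows why. The sketch below multiplies the number of possible values per label to get an upper bound on the time series a single metric name can generate; the function name `max_series` and the example labels are made up for illustration.

```python
from math import prod


def max_series(label_values):
    """Upper bound on the time series created by one metric name.

    label_values maps each label name to the collection of values
    that the label can take.
    """
    return prod(len(values) for values in label_values.values())


# Two labels with few values stay manageable ...
http_labels = {
    "status": ["200", "301", "404", "500", "503"],
    "method": ["GET", "POST", "PUT", "DELETE"],
}
small = max_series(http_labels)

# ... but one high-cardinality label multiplies the total.
http_labels["customer"] = range(10000)
large = max_series(http_labels)
```

Here `small` is 20, whereas adding a hypothetical `customer` label with 10,000 values pushes `large` to 200,000 potential series for a single metric.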

At this point, note that for a Prometheus server with a high number of metrics, you should plan a bare-metal server with plenty of memory and fast hard drives. For smaller projects, the monitoring software can also run on less powerful hardware: Prometheus monitors my VDSL connection at home on a Raspberry Pi 2 [19].

Prometheus has limits for storing metrics. The -storage.local.retention command-line option states how long it waits before deleting the acquired data. The default is 15 days. If you want to store data for a longer period of time, you can forward individual data series via federation to a Prometheus server with a longer retention period.

The options for storing data in other time series databases like InfluxDB or OpenTSDB are in a state of flux. The developers also are currently replacing the existing write functions with a generic approach that will enable read access. For example, a utility with read and write functionality has been announced for InfluxDB.

The official best practices by the Prometheus authors [20] are very useful reading that will help you avoid some problems up front. If you provide Prometheus metrics yourself, make sure you specify time values in seconds and data throughput in bytes.

Data Retrieval with PromQL

The PromQL query language retrieves data from the Prometheus time series database. The easiest way to experiment with PromQL is in the web interface (Figure 2). In the Graph tab you can test PromQL queries and look at the results. A simple query for a metric provided by node_exporter, such as

node_network_receive_bytes

returns time series with several labels, including, among other things, the name of the network interface. If you only want the values for eth0, you can change the query to:

node_network_receive_bytes{device='eth0'}

The following query returns all acquired values for a metric for the last five minutes:

node_network_receive_bytes{device='eth0'}[5m]

At first glance, the query

rate(node_network_receive_bytes{device='eth0'}[5m])

looks similar, but far more is going on here: Prometheus applies the rate() function to the data for the last five minutes, which determines the data rate per second for the period.
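To make the idea concrete, the following Python sketch computes a per-second rate from raw counter samples. It is a deliberate simplification and not Prometheus' actual implementation: The real rate() function also extrapolates to the edges of the time window, which is omitted here. The function name `simple_rate` and the sample values are assumptions.

```python
def simple_rate(samples):
    """Per-second increase of a counter.

    samples is a list of (timestamp_in_seconds, counter_value) tuples,
    ordered by time, such as the values returned for a [5m] range.
    """
    if len(samples) < 2:
        return None  # a rate needs at least two samples
    increase = 0.0
    previous = samples[0][1]
    for _, value in samples[1:]:
        if value < previous:
            # The counter went down, so the process restarted and the
            # counter began again at zero: count the new value in full.
            increase += value
        else:
            increase += value - previous
        previous = value
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else None
```

For three samples taken 15 seconds apart with the values 1000, 2500, and 4000, the function returns 100.0 bytes per second. The handling of counter resets is the reason why a rate should be computed from counters rather than precomputed by the application.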

This kind of data aggregation is one of Prometheus' main tasks. The monitoring software assumes that instrumented applications and exporters only return counters or measured values. Prometheus then handles all aggregations.

The following query sums the data rates across all monitored targets and groups the result by network interface:

sum(rate(node_network_receive_bytes[5m])) by (device)
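Conceptually, sum ... by (device) discards all labels except device and adds up the values of the series that end up in the same group. A minimal Python sketch of this grouping, with the hypothetical helper `sum_by` and invented sample data, might look like this:

```python
from collections import defaultdict


def sum_by(series, label):
    """Sum values of (labels, value) pairs, grouped by a single label."""
    totals = defaultdict(float)
    for labels, value in series:
        # Series without the label end up in a group with an empty value.
        totals[labels.get(label, "")] += value
    return dict(totals)


# Per-second receive rates of two hosts (invented numbers).
rates = [
    ({"instance": "host-a:9100", "device": "eth0"}, 100.0),
    ({"instance": "host-b:9100", "device": "eth0"}, 50.0),
    ({"instance": "host-a:9100", "device": "lo"}, 1.5),
]
per_device = sum_by(rates, "device")
```

In this example, `per_device` contains 150.0 for eth0 and 1.5 for lo; the instance label is gone, because it was not listed in the grouping.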

These examples only scratch the surface of PromQL's possibilities [21]. You can apply a wide variety of functions to metrics, including statistical functions such as predict_linear() and holt_winters().

Sound the Alarm

Prometheus uses alerting rules to determine alert states. Such a rule contains a PromQL expression and an optional indication of the duration, as well as labels and annotations, which the Alertmanager picks up for downstream processing. Listing 1 shows an alert rule that returns true if the PromQL expression up == 0 is true for a monitoring target for more than five minutes. As the example shows, you can also use variables in the annotations.

Listing 1: Alerting Rule

# Monitoring target down for more than five minutes
ALERT InstanceDown
  IF up == 0
  FOR 5m
  LABELS { severity = "page" }
  ANNOTATIONS {
    summary = "Instance {{ $labels.instance }} down",
    description = "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 5 minutes.",
  }

If an alert rule is true, Prometheus triggers an alert (the alert is firing). If you specified an Alertmanager, Prometheus forwards the details of the alert to it via HTTP. Prometheus repeats this for as long as the alert condition persists, sending the alert again each time the rule is evaluated; letting Prometheus notify you directly would therefore not be a good idea.
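The effect of the FOR clause can be pictured as a small state machine: An alert whose condition has just become true is only pending, and it starts firing once the condition has held for the configured duration. The Python sketch below models this behavior under simplifying assumptions; the class name `AlertRule` and its methods are hypothetical and do not mirror Prometheus' internal code.

```python
class AlertRule:
    """Tracks the state of one alert for one monitoring target."""

    def __init__(self, for_seconds):
        self.for_seconds = for_seconds
        self.active_since = None  # time at which the condition became true

    def evaluate(self, condition_true, now):
        """Return 'inactive', 'pending', or 'firing' for this evaluation."""
        if not condition_true:
            # The condition no longer holds: forget the start time.
            self.active_since = None
            return "inactive"
        if self.active_since is None:
            self.active_since = now
        if now - self.active_since >= self.for_seconds:
            return "firing"
        return "pending"
```

With `AlertRule(300)`, corresponding to FOR 5m, a target that goes down at second 0 yields pending at seconds 0 and 150 and firing from second 300 onward. A single successful evaluation in between resets the timer, which keeps short glitches from paging anyone.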

Instrumenting Your Own Application

The Prometheus project offers ready-made libraries for some programming languages (e.g., Go, Java, Ruby, Python, and others) to simplify the instrumentation of your own applications with metrics. Listing 2 shows an example of how to instrument a Python application for Prometheus [22]. After you launch the demo application, you can check out the generated metrics at http://localhost:8000.

Listing 2: Instrumenting a Python Application

from prometheus_client import start_http_server, Summary
import random
import time

# Create a metric to track time spent and requests made.
REQUEST_TIME = Summary('request_processing_seconds', 'Time spent processing request')

# Decorate function with metric.
@REQUEST_TIME.time()
def process_request(t):
    """A dummy function that takes some time."""
    time.sleep(t)

if __name__ == '__main__':
    # Start up the server to expose the metrics.
    start_http_server(8000)
    # Generate some requests.
    while True:
        process_request(random.random())

Sending metrics from batch jobs to Prometheus also is very easy. An example is provided by speedtest_exporter [23]. The simple Perl script processes the output of another program (e.g., speedtest_cli) and uses curl to send the results to the Pushgateway; Prometheus picks up the collected data from there. To do so, the script uses an HTTP POST to send a string with one or more metrics, one per line, to the Pushgateway. The metrics sent by speedtest_exporter, for example, look like this:

speedtest_latency_ms 22.632
speedtest_bits_per_second{direction="downstream"} 52650000
speedtest_bits_per_second{direction="upstream"} 29170000

The URL specifies a unique job name, such as http://localhost:9091/metrics/job/speedtest_exporter.
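If you would rather stay in Python than call curl, the standard library is sufficient for such a push. The following sketch builds the payload and the POST request; the helper names `build_payload` and `build_push_request` are my own, and label values are not escaped, so the sketch assumes they contain no quotes, backslashes, or line breaks.

```python
import urllib.request


def build_payload(metrics):
    """Render (name, labels, value) tuples as one metric per line."""
    lines = []
    for name, labels, value in metrics:
        label_text = ",".join(
            f'{key}="{val}"' for key, val in sorted(labels.items())
        )
        series = f"{name}{{{label_text}}}" if label_text else name
        lines.append(f"{series} {value}")
    # The Pushgateway expects the body to end with a line break.
    return "\n".join(lines) + "\n"


def build_push_request(base_url, job, metrics):
    """Create the HTTP POST request for a Pushgateway at base_url."""
    url = f"{base_url}/metrics/job/{job}"
    data = build_payload(metrics).encode("utf-8")
    return urllib.request.Request(url, data=data, method="POST")
```

A batch job would create the request with, for example, `build_push_request("http://localhost:9091", "speedtest_exporter", metrics)` and send it with `urllib.request.urlopen()`. Keeping the request construction separate from the sending makes the payload easy to inspect before anything goes over the wire.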

Conclusions

Prometheus offers simplicity and the versatility of PromQL. For developers who want to monitor their applications or container environment, Prometheus is certainly a very good choice. For example, it can monitor a complete Kubernetes cluster easily and simply [24].

In business use, however, you need to put up with a few quirks: Long-term archiving of metrics is still a work in progress, and you need to build any required encryption or access controls around Prometheus yourself. If you can live with this, what you get is a powerful and effective tool that will almost certainly address these shortcomings in the next few years.