Best practices for secure script programming

Small Wonders

Holistic system security means paying attention to even the smallest detail to avoid it becoming an attack vector. One example of these small, barely noticed, but still potentially very dangerous parts of IT that repeatedly cause serious security issues is shell scripts. Employing best practices can ensure secure script programming.

Letting Off Steam

Impressive proof of the potential harmfulness of shell scripts was provided by US software vendor Valve. The Linux-based version of the Steam game service included a script that was normally only responsible for minor setup tasks [1]. Unfortunately, it contained the following line:

rm -rf "$STEAMROOT/"*

This command, which is supposed to delete the contents of the $STEAMROOT directory, gets into trouble if the variable is not set. Bash does not throw an error but simply expands the unset variable to an empty string. The result is the command

rm -rf /*

which works its way recursively through the entire filesystem and destroys all information.

Some users escaped total ruin by running their Steam execution environment under an SELinux chroot jail. Others were not so lucky, so it is time to take a closer look at defensive programming measures for shell scripts.
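One defensive option is the ${VAR:?} parameter expansion, which makes the shell stop with an error if a variable is unset or empty. The following is a minimal sketch, not Valve's actual fix; the safe_clean function name is hypothetical and introduced only for illustration.

```shell
#!/bin/sh
# Hypothetical helper: empties a directory only if its name is really set.
safe_clean() {
    # ${1:?...} stops a non-interactive shell with an error if the argument
    # is unset or empty, so the glob below can never degrade to "/*".
    target="${1:?safe_clean: target directory must not be empty}"
    # Refuse anything that is not an existing directory.
    [ -d "$target" ] || { echo "safe_clean: not a directory: $target" >&2; return 1; }
    # "--" keeps a directory name that starts with a dash from being read as an option.
    rm -rf -- "${target:?}"/*
}
```

Because the failed expansion ends the calling shell, a script that wants to survive an empty value has to run the function in a subshell, as in ( safe_clean "$STEAMROOT" ).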

Defining the Shell Variant

On Unix-style operating systems, dozens of shells are available – similar only in their support of the POSIX standard – that come with various proprietary functions. If shell-specific code from one shell is used in other shells, the result is often undefined behavior, which might not be a problem in a controlled VM environment, but deployment in a Docker or other cluster changes the situation.

The most common problem is the use of the #!/bin/sh sequence, or "shebang," which on Unix is the first line of a script. The shebang determines which interpreter processes the script; #!/bin/sh selects whichever shell the system provides under that name. The terms sha-bang, hash-bang, pound-bang, or hash-pling can also be found in the literature.

Bash, which is widely used among script programmers, is rarely the default shell. On a workstation based on Ubuntu 18.04, the which command is of little help in checking this: which sh returns /bin/sh, and which bash returns /bin/bash. Only a look at the target of /bin/sh (e.g., with ls -l /bin/sh) reveals that it is a symbolic link to dash on Ubuntu.

Several methods help you work around this problem. The simplest is to stipulate explicitly the use of Bash with #!/bin/bash in the shebang. Especially in cloud environments, however, it is not a good idea to assume the presence of the Bash shell. If you write your script without shell-specific constructs from the outset, you can save yourself some extra work during deployment.

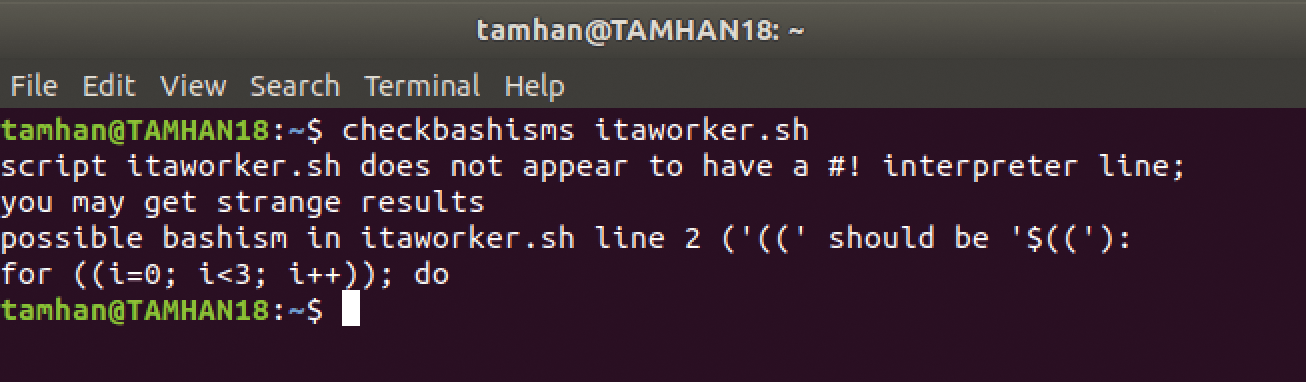

The checkbashisms command helps administrators find and remove Bash-specific program elements. To install the tool, you need to install the devscripts package and then create a small shell script (here, itaworker.sh) that contains a Bash-specific for loop:

#!/bin/sh

for ((i=0; i<3; i++)); do

    echo "$i"

done

If you parse this script with checkbashisms, it complains about the loop (Figure 1). Note that the tool only warns you of Bash-specific code if the shebang does not reference Bash. The following version of the script,

#!/bin/bash

for ((i=0; i<3; i++)); do

    echo "$i"

done

passes the checkbashisms check without problems.

Figure 1: The checkbashisms command identifies Bash-specific code.
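For comparison, the same loop can be written with POSIX constructs only, so that it runs under any sh. This is a sketch of one possible rewrite; the count_to function name is hypothetical.

```shell
#!/bin/sh
# POSIX-compatible replacement for the Bash-only "for ((...))" loop.
count_to() {
    i=0
    while [ "$i" -lt "$1" ]; do
        echo "$i"
        i=$((i + 1))    # arithmetic expansion is part of POSIX sh
    done
}

count_to 3
```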

Saving Passwords Securely

Administrators like to use shell scripts to automate system tasks, such as copying files or updating information located on servers, which requires a password or username or both that should not be shared with the general public.

The script has to obtain these credentials from somewhere, however, unless you want to enter them manually every time you run it. Attackers like to capture all the shell scripts they can get on hijacked systems. If they find a group of passwords in the process, the damage done is multiplied.

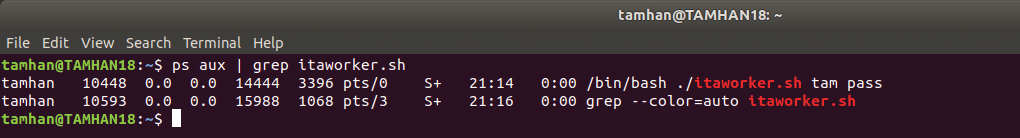

The first way to supply the credentials is to pass them to the script as parameters. The following procedure would be suitable:

#!/bin/bash

a="$1"

b="$2"

while true; do sleep 1; done

The variables $1 and $2 stand for the first and second parameters. This script is run by typing ./itaworker.sh tam pass, which is problematic from a security point of view. The Linux command-line tool ps for listing running processes can also output the parameters entered in the call. Figure 2 shows how the credentials are delivered directly to the attacker's doorstep; entering ps is one of an attacker's first steps.

If dynamic parameterization of the shell script is absolutely necessary, the recommended approach is to store the credentials in environment variables. These cannot be found by an ordinary ps scan, because reading the /proc/<PID>/environ file of another user's process requires elevated rights if the configuration is correct. However, this approach is not universally accepted: The Linux Documentation Project [2] recommends providing the information through a pipe or through redirection.
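The pipe or redirection approach could look roughly like the following sketch, in which the secret arrives on standard input and therefore never appears in the argument list. The read_secret function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads one line from standard input and prints it back,
# so the secret never has to appear among the command-line arguments.
read_secret() {
    # -r keeps backslashes literal; the empty IFS preserves surrounding blanks.
    # read returns non-zero if the final newline is missing, although the
    # value has still been read, hence the "|| :".
    IFS= read -r secret || :
    printf '%s' "$secret"
}
```

A script built on this helper would be called with redirection, for example ./itaworker.sh < credentials.txt, where credentials.txt is an assumed file that only the script's account can read.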

The consensus across the literature is that it is definitely better if the password does not appear in plain text in the script file. One way to achieve this is to store the password only in a reversibly encoded form and let a separate program convert it back to the original value. Strictly speaking, this is obfuscation rather than hashing: A cryptographic hash cannot be reversed, and a salt is an input to a hash function, not its output.

If you use a conversion program that the attacker does not catch in a scan, you can make life more difficult for them. If the attacker wants to have the credentials in plain text, they have to log in again and run the program. Ideally, the binary file will belong to an account that is used exclusively for shell script execution.

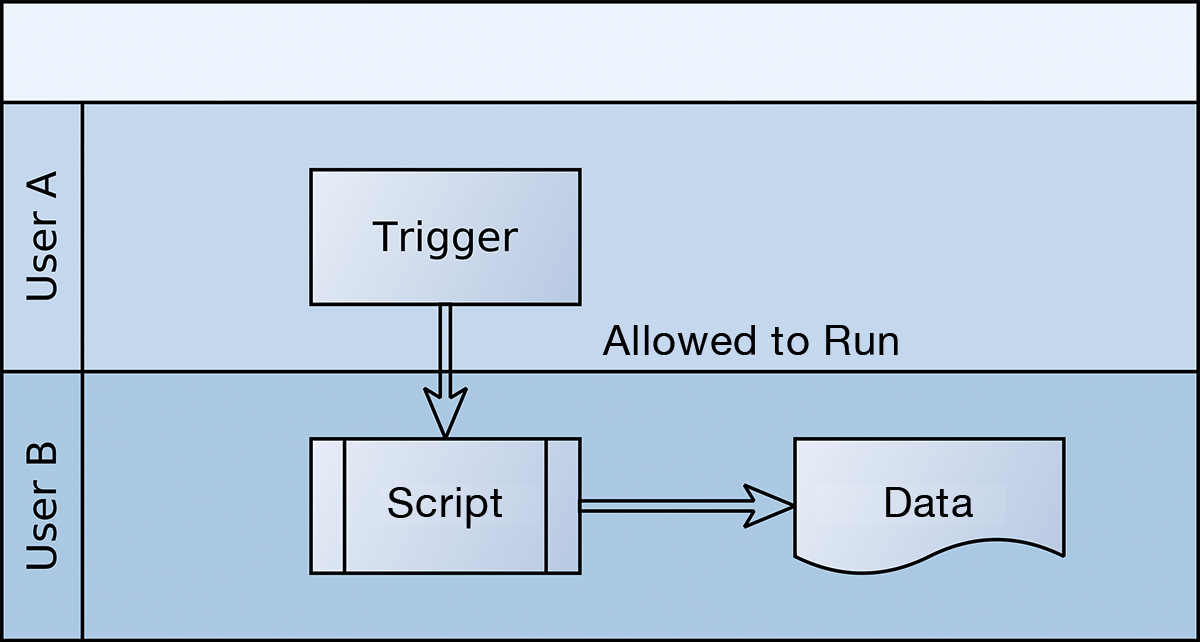

Option number two (Figure 3) relies on a separate configuration file that has different read permissions. As long as the user responsible for executing the scripts is the only one who is allowed to read this file, an attack on the other user accounts is not critical in terms of credentials.
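A script can also verify the permissions of such a configuration file before trusting it. The following sketch assumes GNU stat (as found on typical Linux systems) and a file that holds the password on its first line; the load_password function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical loader: prints the password stored in a credentials file,
# but only if group and others have no access to that file.
load_password() {
    file="$1"
    # Assumption: GNU stat, which prints the octal mode (e.g., 600) with -c '%a'.
    mode="$(stat -c '%a' "$file")" || return 1
    case "$mode" in
        600|400) ;;   # owner-only access is acceptable
        *) echo "refusing $file: mode $mode is too permissive" >&2; return 1 ;;
    esac
    # The password is expected on the first line of the file.
    IFS= read -r password < "$file"
    printf '%s\n' "$password"
}
```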

To hide the structure of the script, you can use shc [3], developed by Francisco Rosales. The package can be installed by the package manager on Ubuntu. The call expects the -f parameter to specify the source file:

shc -f itaworker.sh

ls itaworker.sh

After processing, you will find two additional files: In addition to the executable file with the extension .x is the file with the extension .x.c, which contains the C code generated for the script.

shc wraps the shell script in a C wrapper, which is then converted into a binary file by the C compiler. If you delete the itaworker.sh and itaworker.sh.x.c files, it is more difficult for the attacker to capture the code of the script later on. Note, however, that the wide distribution of shc has led to the existence of decompilation tools [4].

Vulnerable Temporary Files

Assume a script needs to make the credentials available to other applications. Temporary files are often used for this purpose. If you create the file in the /tmp/ folder, it should disappear after the script is processed. A classic example of this is the following script, which creates a file with a password:

#!/bin/sh

SECRETDATA="ITA says hello"

echo > /tmp/mydata

chmod og-rwx /tmp/mydata

echo "$SECRETDATA" > /tmp/mydata

Problems arise if an attacker monitors the /tmp directory with a very fast-acting filesystem watcher API (inotify on Linux). In the present script, the file briefly exists with default permissions before chmod runs. An attacker who opens the file in this window keeps a valid file handle and can harvest the information deposited there immediately or later.

As before with plaintext passwords, the safest way is not to write the credentials to a temporary file. However, if this is essential to perform a function, the following approach is recommended:

#!/bin/sh

SECRETDATA="ITA says hello"

umask 0177

FILENAME="$(mktemp /tmp/mytempfile.XXXXXX)"

echo "$SECRETDATA" > "$FILENAME"

First, the umask command defines which permission bits are withheld from newly created files; with 0177, a new file is readable and writable by its owner only. Second, the mktemp command creates the file under a random name each time, which makes configuring a filesystem watcher more difficult. Numerous sources are critical of the use of temporary files in shell scripts in general [5].
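The text above says the temporary file should disappear after the script is processed. One way to make sure of that is an exit trap, shown here as a sketch rather than a complete solution; the TMPFILE variable name is an assumption.

```shell
#!/bin/sh
# Sketch: private temporary file that is removed again when the script ends.
umask 077                                    # withhold all access from group and others
TMPFILE="$(mktemp /tmp/mytempfile.XXXXXX)" || exit 1
trap 'rm -f "$TMPFILE"' EXIT                 # runs when the shell exits
printf '%s\n' "ITA says hello" > "$TMPFILE"
```

Whether the trap also fires when the script is killed by a signal depends on the shell, so additional traps for INT and TERM may be needed in some environments.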

Securing Against Incorrect Parsing

The most frequent point of criticism levied against the shell execution environment is that it lacks methods for string processing. In combination with passing in variables directly to the shell, serious vulnerabilities arise. A classic example is a script that reads in a parameter from STDIN and executes it with an eval command:

#!/bin/bash

read BAR

eval $BAR

The eval command is normally intended to perform calculations and harmless commands. One legitimate use case would be to deliver an echo command (with a pipe redirect if needed):

./itaworker.sh

echo hello

A malicious user would not pass an echo call with redirection at this point but a command such as rm, as mentioned earlier. As a matter of principle, the shell does not recognize this, which is why the script destroys data.

Because some tasks are not feasible without eval, shell-checking tools like ShellCheck do not complain about the use of the command as such. For the script above, the tool merely reports the unquoted variable with the message Double quote to prevent globbing and word splitting. The following version of the script satisfies this check:

#!/bin/bash

read BAR

eval "$BAR"

However, because this change does not verify the values contained in BAR, the vulnerability still exists. From a security perspective, the only correct way to handle the eval command is not to use it at all. If this is not an option, be sure to check the commands entered for strings like rm and for non-alphanumeric characters.
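Where the set of permitted commands is known in advance, an allowlist can replace eval entirely: The input merely selects one of several fixed actions and is never executed itself. The following is a sketch of that idea; the run_action function and its action names are hypothetical.

```shell
#!/bin/sh
# Sketch: map user input to a fixed set of actions instead of evaluating it.
run_action() {
    case "$1" in
        hello) echo "hello" ;;
        date)  date ;;
        # Everything else, including input with shell metacharacters, is rejected.
        *)     echo "rejected: unknown action" >&2; return 1 ;;
    esac
}
```

A script using this helper would read the input with read -r and pass it on as run_action "$BAR".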

Queries are another way to provoke problems. In the next example, I'll look at a script that checks to see whether the user enters the string foo. The payload, which here consists only of a call to echo, should only be executed if foo is entered:

#!/bin/bash

read FOO

if [ x$FOO = xfoo ] ; then

    echo $FOO

fi

In a superficial check of the script behavior, the input (e.g., itahello) would not lead to the execution of the payload. However, if you pass a string following the pattern foo = xfoo <any>, the payload is processed:

./itaworker.sh

foo = xfoo -o sdjs

foo = xfoo -o sdjs

Damage occurs if something more sensitive, such as an eval call, needs to be secured instead of the echo payload. In the worst case, the payload then contains a command that causes damage because of invalid values.

To work around this problem, you can use quoting, as in other shell application scenarios:

if [ "$FOO" = "foo" ] ; then

    echo "$FOO"

fi

The "new" version of the script evaluates the content of the variable completely, which results in the rejection of invalid input:

./itaworker.sh

foo

foo

./itaworker.sh

foo = xfoo

Quoting is even more important when working with older shells. A program built in line with the following pattern could be prompted to execute arbitrary code by entering a semicolon-separated string:

#!/bin/sh

read VAL

echo $VAL

The cause of this problem is that older shells do not parse before substituting the variable: The semicolon would split the command and cause the section that followed to be executed.

Ruling Out Parsing Errors

The rather archaic language syntax sometimes makes for strange behavior, as in the following example, which is supposed to call the file /bin/tool_VAR:

ENV_VAR="wrongval"

ENV="tool"

echo /bin/$ENV_VAR

If you run this at the command line, you will see that it uses the value stored in the variable ENV_VAR. Because the underscore is a valid character in variable names, the shell does not end the name at the underscore in echo /bin/$ENV_VAR. To work around this problem, it is advisable to enclose all variable names in curly braces as a matter of principle. A corrected version of the script would be:

ENV_VAR="wrongval"

ENV="tool"

echo /bin/${ENV}_VAR

Running the corrected script shows that the string output is now correct:

./itaworker.sh

/bin/tool_VAR

Problem number two concerns the analysis of the shell user permissions. The $UID and $USER variables can be used to analyze the permissions of the currently active user:

if [ $UID = 100 -a $USER = "myusername" ] ; then

    cd $HOME

fi

Unfortunately, the two variables are not guaranteed to contain the values corresponding to the active user at runtime. Although many shells now protect the $UID variable against changes, this is usually not the case for $USER; in older shells, it is even possible to assign arbitrary values to both variables. To circumvent this problem, it is a good idea to determine the username and user ID by calling operating system commands such as id. The values returned in this way can only be influenced by manipulating the PATH variable, which complicates the attack.
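A minimal sketch of this lookup follows. It assumes that the id command is installed as /usr/bin/id, which is common on Linux but should be verified on the target system; the variable names are hypothetical.

```shell
#!/bin/sh
# Ask the operating system instead of trusting $UID and $USER.
# Assumption: id lives at /usr/bin/id; the full path sidesteps PATH manipulation.
current_uid="$(/usr/bin/id -u)"
current_user="$(/usr/bin/id -un)"

if [ "$current_uid" -eq 0 ]; then
    echo "running as root ($current_user)"
else
    echo "running as $current_user (UID $current_uid)"
fi
```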

In particularly critical execution environments, it is always advisable to write out paths in full. For example, if you call echo by its path /bin/echo, the activated program is independent of the path variable.

Finally, I should point out that the Bash shell supports a special version of the shebang: #!/bin/bash -p. This variant instructs the shell not to execute the .bashrc and .profile files as part of the startup. The SHELLOPTS variable and the startup scripts named in ENV and BASH_ENV are also ignored in this operating mode. The purpose is to keep manipulations of the environment carried out by an attacker from taking effect whenever a script happens to run.

Stricter Execution Verification

Shell scripts are intended for non-programmers to speed up common tasks in their daily lives on Unix operating systems. As a courtesy to this group of users, virtually all shells are set to be "permissive": In case of an error, they try to continue executing the script. This approach, which is quite friendly for a less technically experienced user, is risky because ambiguities that cause security problems do not stop the execution of the script.

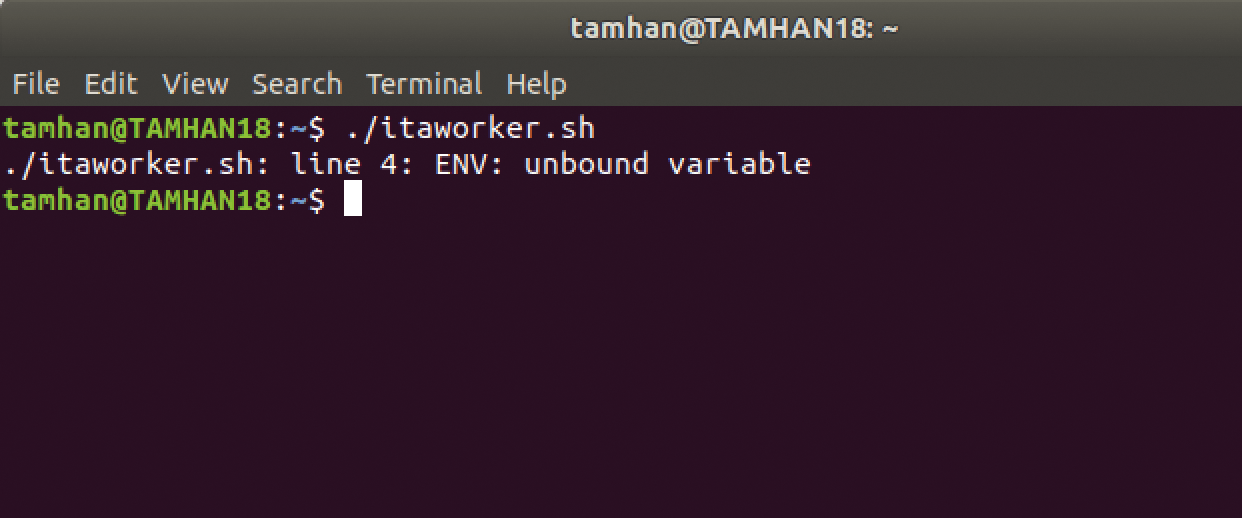

The previously mentioned problem of destroying files would not have occurred with the following shell setting:

#!/bin/bash

set -o nounset

TAMS_VAR="wrongval"

echo /bin/${ENV}_VAR

When called with the -o parameter, the set command activates execution options that influence the runtime behavior of the interpreter. The nounset option used here considers unset variables to be errors. Execution of the shell script shown before now fails with the error shown in Figure 4.

Figure 4: The nounset option terminates program execution if it encounters missing variables.

The set -o errexit command terminates a script if a command it calls does not return a value of zero. You can check its effect by trying to copy a non-existent file:

#!/bin/bash

set -o errexit

cp "doesnotexist" "tree.txt"

echo "Successfully copied"

The cp command does not return zero if it cannot find the source file, which is why the status message passed to echo does not appear at the command line.

In scripts, you will always run into situations in which some of the code to be executed is non-critical, and the commands specified by -o can be disabled by calling +o. In the following script, the errexit option is no longer active when the copy command is processed:

#!/bin/bash

set -o errexit

set +o errexit

cp "doesnotexist" "tree.txt"

echo "Successfully copied"

A problem can occur when processing commands connected by a pipe. Normally, a pipeline only fails if the last command in the sequence does not return a value of zero. If you want an error anywhere in the pipeline to count as a failure (and, in combination with errexit, to terminate the script), enable the pipefail option with the command set -o pipefail.
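The difference is easy to observe with a pipeline in which the first command fails and the last one succeeds. The following Bash sketch uses false and cat as stand-ins for real commands; the report function name is hypothetical.

```shell
#!/bin/bash
# Compare how a pipeline with a failing first command is judged.
# false always fails; cat succeeds and is the last command in the pipeline.
report() {
    if false | cat; then
        echo "pipeline reported success"
    else
        echo "pipeline reported failure"
    fi
}

( report )                        # default: only the status of cat counts
( set -o pipefail; report )       # pipefail: the failure of false is reported
```

The subshells keep the option from leaking into the rest of the script; in practice, set -o pipefail usually sits at the top of the file next to errexit and nounset.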

Changes to Global Variables

Another unintended result of lax language syntax is that variables defined in subfunctions develop unexpected side effects. As an example, look at a script that uses the variable o both inside and outside a function:

#!/bin/bash

hello_world () {

o=2

echo 'hello, world'

}

o=1

echo $o

hello_world

echo $o

Developers who grew up with other scripting languages would expect both calls to echo $o to return the value 1. This is not the case: Changes made in the function remain in effect even after the function has returned. To fix the problem, you just need to tag the variables in the function with the local keyword:

#!/bin/bash

hello_world () {

local o=2

echo 'hello, world'

}

o=1

echo $o

hello_world

echo $o

Although longer shell scripts are not necessarily recommended for maintenance reasons, you should always protect variables used in functions with the local keyword. A colleague who recycles the shell code at some point will be better protected from unexpected side effects.

ShellCheck

C and C++ programmers have used static analysis to check the results of their work for a long time. A static analysis tool has a knowledge base in which it stores programming errors that it checks against the code at hand. A similar tool for shell scripts is available with ShellCheck [6]. The tool, licensed under the GPLv3, behaves much like lint in terms of syntax: It expects the name of the shell file as a parameter, which it then checks for dodgy elements.

ShellCheck doesn't only look for security-relevant errors. In a list of ShellCheck checks available online [7], four dozen test criteria also identify and complain about general programming errors. Last but not least, a web version of the tool [8] checks scripts for correctness and security without a local installation.

Conclusions

The close integration between scripts and the shell designed to execute commands, accompanied by lax syntax verification, guarantees that a lack of attention in shell programming is quickly punished by dangerous security vulnerabilities.

The criteria presented here can help you work around most of the problems. Unlike complicated security vulnerabilities, in the case of shell scripts, the cause is usually a simple lack of understanding that a certain construct can have side effects.