Kubernetes clusters within AWS EKS

Cloud Clusters

When a Kubernetes laboratory environment is required, Minikube, which runs locally, and the tiny, production-ready k3s distribution are both excellent choices. They provide a level of interaction with Kubernetes that lets developers prove that workloads will behave as expected in staging and production environments.

However, as cloud-native technology stacks lean more heavily on the managed Kubernetes services offered by the popular cloud platforms, it has become easier to test more thoroughly on one of those services. These managed services include the Google Kubernetes Engine (GKE) [1], Azure Kubernetes Service (AKS) [2], and Amazon Elastic Kubernetes Service (EKS) [3].

In this article, I walk through the automated deployment of Amazon Elastic Kubernetes Service (EKS), a mature, battle-hardened offering that household names all over the world trust with their production workloads. For those who would rather not run their own clusters in full, EKS abstracts the Kubernetes control plane away from users completely and provides direct access to the worker nodes, which run on AWS Elastic Compute Cloud (EC2) [4] server instances.

In the Box

By concealing the Kubernetes control plane, EKS users are given the luxury of leaving the complexity of running a cluster almost entirely to AWS. You still need to understand the key concepts, however.

When it comes to redundancy, each EKS control plane runs on its own set of resources that are not shared with other customers (referred to as single tenancy), and those resources are EC2 instances that are abstracted from the user. A Kubernetes control plane typically includes the API server, etcd [5] for configuration storage, the scheduler (which works out which nodes have spare capacity and can accept pods), and the Controller Manager (which, among other tasks, keeps an eye on misbehaving worker nodes). For more information about these components, along with a graphical representation, visit the official website [6].

For resilience, EKS hosts worker nodes on EC2 across multiple availability zones (AZs), which are distinct data centers run by AWS at least a few miles away from one another, and the abstracted EKS control plane is spread across AZs in the same way. Positioned in front of the worker nodes, which serve your applications from containers, is an Elastic Network Load Balancer that ensures traffic is evenly distributed among the worker nodes in their Node group.

In basic terms, EKS provides an endpoint over which kubectl communicates with the API server, just as with other Kubernetes distributions. A unique certificate proves that the endpoint is who it claims to be and, of course, encrypts the traffic. By default, the API server endpoint is public, and AWS documentation [7] explains that access to it is controlled by a combination of identity and access management (IAM) permissions and Kubernetes role-based access control (RBAC). If you make the API endpoint private, only resources inside the virtual private cloud (VPC) into which you installed your EKS cluster can reach it. Alternatively, with a slightly more complex configuration, you can keep the API server reachable from the Internet but restrict access to a specific set of IP addresses.
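As a minimal sketch of that last option, the shell functions below wrap the AWS CLI v2 command aws eks update-cluster-config to keep the public endpoint, limit it to a single CIDR block, and switch on the private endpoint as well. The cluster name, region, and CIDR are placeholders you supply, and the small run helper is hypothetical: with DRY_RUN=1 it prints each command instead of executing it, so you can review the change first.

```bash
#!/usr/bin/env bash
# Sketch only: cluster name, region and CIDR are placeholders.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Keep the public endpoint but allow it only from one CIDR block,
# and also enable the private endpoint for traffic from inside the VPC.
restrict_api_endpoint() {
  local cluster="$1" region="$2" cidr="$3"
  run aws eks update-cluster-config \
    --name "$cluster" \
    --region "$region" \
    --resources-vpc-config "endpointPublicAccess=true,publicAccessCidrs=${cidr},endpointPrivateAccess=true"
}

# Show the current endpoint settings so you can confirm the change.
show_endpoint_access() {
  run aws eks describe-cluster --name "$1" --region "$2" \
    --query 'cluster.resourcesVpcConfig' --output json
}
```

For example, DRY_RUN=1 restrict_api_endpoint chrisbinnie-eks-cluster eu-west-1 203.0.113.5/32 only prints the command. Without DRY_RUN, the update is applied asynchronously and can take a few minutes to complete.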

Although it is not the norm, you can also have transparent visibility of the cluster's worker nodes. With the correct settings, you can log in over SSH to tweak the Linux configuration if necessary. However, such changes should really be made programmatically, so SSH access is usually just for testing. In this cluster creation example, I show the setting that gets you started, plus how to create a key pair; you can then experiment with security groups and other configurations if you want to pursue the SSH scenario.

The marginal downside of using a platform as a service (PaaS) Kubernetes offering is that, every now and again, a subtle version upgrade by the service provider might affect the cluster's compatibility with your workloads. In reality, such issues are relatively uncommon, but bear in mind that relying on a third party for production service provision gives you much less control over upgrades. This is far from unique to EKS, where you are encouraged to keep up with the latest Kubernetes versions as best you can; many of today's software applications suffer from chase-the-leader syndrome.
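One way to stay ahead of such changes is to check regularly which Kubernetes version a cluster runs and what an upgrade would involve. The sketch below assumes eksctl's documented behavior that eksctl upgrade cluster only prints a plan unless you add --approve. The cluster name, region, and target version are placeholders, and run is the same hypothetical print-or-execute helper.

```bash
#!/usr/bin/env bash
# Sketch only: names and versions are placeholders.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Print the control plane's Kubernetes version, e.g. 1.18.
cluster_version() {
  run aws eks describe-cluster --name "$1" --region "$2" \
    --query 'cluster.version' --output text
}

# Ask eksctl what an upgrade to version $3 would do. Without --approve,
# eksctl reports the plan instead of applying it.
plan_upgrade() {
  run eksctl upgrade cluster --name "$1" --region "$2" --version "$3"
}
```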

From a thousand-foot view, EKS is designed to integrate easily with other AWS services, such as Elastic Container Registry (ECR), which can store all of your container images. As you'd expect, access to such services can be granted in a granular way to bolster security.
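To make the ECR integration concrete, here is a minimal sketch of getting a locally built image into ECR so that worker nodes can pull it. It uses the AWS CLI commands ecr create-repository and ecr get-login-password together with docker login, tag, and push. The account ID, region, repository name, and tag are placeholders, and run is a hypothetical helper that only prints commands when DRY_RUN=1.

```bash
#!/usr/bin/env bash
# Sketch only: account ID, region, repository and tag are placeholders.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# ECR registry host names follow <account>.dkr.ecr.<region>.amazonaws.com
ecr_registry() {
  echo "$1.dkr.ecr.$2.amazonaws.com"
}

push_to_ecr() {
  local account="$1" region="$2" repo="$3" tag="$4" registry
  registry="$(ecr_registry "$account" "$region")"
  # Returns RepositoryAlreadyExistsException if the repo exists;
  # the function carries on regardless.
  run aws ecr create-repository --repository-name "$repo" --region "$region"
  # Pipe a temporary registry password straight into docker login.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "aws ecr get-login-password --region $region | docker login --username AWS --password-stdin $registry"
  else
    aws ecr get-login-password --region "$region" |
      docker login --username AWS --password-stdin "$registry"
  fi
  run docker tag "$repo:$tag" "$registry/$repo:$tag"
  run docker push "$registry/$repo:$tag"
}
```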

Now that you know which core components of the cluster EKS hides from you, take a look at what you can control through configuration.

Automation, Circulation

Although you could use a tool such as Terraform to set up EKS with infrastructure as code (IaC), I will illustrate how simple it is to use the native AWS command-line interface (CLI). Note that it can take up to 20 minutes to create the cluster fully. Also, be aware that running a full-blown EKS cluster incurs costs. You can minimize them by reducing the number of worker nodes.

The billing documentation states (at the time of writing, at least, so your mileage might vary): "You pay $0.10 per hour for each Amazon EKS cluster that you create. You can use a single Amazon EKS cluster to run multiple applications by taking advantage of Kubernetes namespaces and IAM security policies."
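To put the quoted rate in perspective, a quick arithmetic sketch: one cluster left running for a 30-day month is 720 hours x $0.10 = $72 in control plane fees alone. The function below assumes the $0.10 rate quoted above (check current pricing) and deliberately excludes EC2 worker nodes, NAT gateways, load balancers, and data transfer, which are billed separately.

```bash
#!/usr/bin/env bash
# Back-of-envelope sketch of the EKS control plane fee only.
EKS_HOURLY_RATE="0.10"   # rate quoted in the billing text; may change

# $1 = number of clusters, $2 = hours each cluster runs
control_plane_cost() {
  awk -v n="$1" -v h="$2" -v r="$EKS_HOURLY_RATE" \
    'BEGIN { printf "%.2f\n", n * h * r }'
}
```

For example, control_plane_cost 1 72 prints 7.20, the control plane fee for keeping one test cluster up for three days.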

I use version 2 of the AWS CLI to stay current, as reflected in the v2 path of the EKS reference URL [8].
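Before following along, it is worth confirming which major version of the CLI is installed. A minimal sketch, assuming the usual banner format in which aws --version prints a line beginning with aws-cli/ followed by the version number:

```bash
#!/usr/bin/env bash
# Sketch: succeed only if a version banner belongs to AWS CLI v2.
is_cli_v2() {
  case "$1" in
    aws-cli/2.*) return 0 ;;
    *) return 1 ;;
  esac
}

check_aws_cli() {
  local banner
  # Some v1 installs print the banner on stderr, so capture both streams.
  banner="$(aws --version 2>&1)" || return 1
  if is_cli_v2 "$banner"; then
    echo "OK: $banner"
  else
    echo "AWS CLI v2 required, found: $banner" >&2
    return 1
  fi
}
```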

From the parent page of the EKS instructions, I focus on the page for the create-cluster command [9], where you can become familiar with the options available when creating a cluster.

The eksctl tool [10] from Weaveworks has been named the official AWS EKS tool for "creating and managing clusters on EKS – Amazon's managed Kubernetes service for EC2. It is written in Go and uses CloudFormation …." Although you could write a Kubernetes-style YAML file to configure a cluster, for the purposes here you can create your EKS cluster with a set of command-line options instead. Start by downloading eksctl from the website and then move it into your path:

$ curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp
$ ls /tmp/eksctl
$ mv /tmp/eksctl /usr/local/bin
$ eksctl

The final command produces lots of help output if you have the tool in your path.
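If you prefer a quieter check than the full help output, a small sketch can confirm that the binary is on your path and print its version with the eksctl version subcommand. The require_tool function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: fail clearly if a tool is missing from PATH.
require_tool() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "$1 not found in PATH" >&2
    return 1
  fi
}

# Confirm eksctl is installed, then print the installed version.
check_eksctl() {
  require_tool eksctl || return 1
  eksctl version
}
```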

As mentioned, SSHing into your worker nodes takes a little more effort because security groups must permit the access, but the cluster creation command in Listing 2 includes the relevant settings. First, create a key pair that you can use for SSH (Listing 1).

Listing 1: Creating a Key Pair for SSH

01 $ ssh-keygen -b4096
02
03 Generating public/private rsa key pair.
04 Enter file in which to save the key (/root/.ssh/id_rsa): eks-ssh
05 Enter passphrase (empty for no passphrase):
06 Enter same passphrase again:
07 Your identification has been saved in eks-ssh.
08 Your public key has been saved in eks-ssh.pub.
09 The key fingerprint is:
10 SHA256:Pidrw9+MRSPqU7vvIB7Ed6Al1U1Hts1u7xjVEfiM1uI
11 The key's randomart image is:
12 +---[RSA 4096]----+
13 | .. ooo+|
14 | . ...++|
15 | . o =oo|
16 | . + . + =o|
17 | S o * . =|
18 | o o.+ E o.|
19 | .B.o.. . .|
20 | o=B.* + |
21 | .++++= . .|
22 +----[SHA256]-----+

When prompted for a file name, don't accept the default location in ~/.ssh; instead, type eks-ssh (line 4), which saves the key pair in the current working directory (/root in this example). Line 8 confirms that the public key was saved as eks-ssh.pub, so its full path is /root/eks-ssh.pub, which is passed to the --ssh-public-key option in Listing 2 (line 11).
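For scripted runs, the same key pair can be generated without prompts. In this sketch, ssh-keygen's -f option sets the output path, -N '' sets an empty passphrase (tolerable only for a throwaway lab key), and -q suppresses the randomart output. The make_eks_key function name is hypothetical, and it refuses to overwrite an existing key.

```bash
#!/usr/bin/env bash
# Sketch: non-interactive equivalent of Listing 1.
# $1 = private key path (defaults to /root/eks-ssh); prints the .pub path.
make_eks_key() {
  local keyfile="${1:-/root/eks-ssh}"
  if [ -e "$keyfile" ]; then
    echo "refusing to overwrite $keyfile" >&2
    return 1
  fi
  ssh-keygen -t rsa -b 4096 -f "$keyfile" -N '' -q || return 1
  echo "${keyfile}.pub"
}
```

The printed path is what you would hand to eksctl's --ssh-public-key option.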

Listing 2: Creating a Cluster

01 eksctl create cluster \
02 --version 1.18 \
03 --name chrisbinnie-eks-cluster \
04 --region eu-west-1 \
05 --nodegroup-name chrisbinnie-eks-nodes \
06 --node-type t3.medium \
07 --nodes 2 \
08 --nodes-min 1 \
09 --nodes-max 2 \
10 --ssh-access \
11 --ssh-public-key /root/eks-ssh.pub \
12 --managed \
13 --auto-kubeconfig \
14 --node-private-networking \
15 --verbose 3
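For comparison, the YAML route mentioned earlier might look like the following sketch. It writes an eksctl ClusterConfig that mirrors most of Listing 2 and would then be used with eksctl create cluster -f. The field names follow the eksctl.io/v1alpha5 schema as I understand it, so double-check them against the eksctl documentation for your version. The write_cluster_config function name is hypothetical, and the logging verbosity is a command-line concern that has no equivalent here.

```bash
#!/usr/bin/env bash
# Sketch: write an eksctl ClusterConfig mirroring Listing 2 to file $1.
write_cluster_config() {
  local out="$1"
  cat > "$out" <<'EOF'
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: chrisbinnie-eks-cluster
  region: eu-west-1
  version: "1.18"
managedNodeGroups:
  - name: chrisbinnie-eks-nodes
    instanceType: t3.medium
    desiredCapacity: 2
    minSize: 1
    maxSize: 2
    privateNetworking: true
    ssh:
      allow: true
      publicKeyPath: /root/eks-ssh.pub
EOF
}

# Usage (not run here):
#   write_cluster_config cluster.yaml
#   eksctl create cluster -f cluster.yaml
```

A config file has the advantage that it can be version-controlled alongside your workloads.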

Now you're ready to create a cluster with the command in Listing 2. At this stage, I'm assuming that you have already set up an AWS access key ID and secret access key [11] for the CLI to use when calling the AWS API.
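Because the build takes a while, a quick credentials check beforehand can save a wasted wait. In this sketch, aws configure (run interactively) stores the key ID, secret key, default region, and output format, whereas aws sts get-caller-identity shows which IAM identity those credentials map to. By default, the identity that creates an EKS cluster is the one granted administrator access inside Kubernetes, so it is worth knowing which one that is. The run helper is hypothetical and only prints commands when DRY_RUN=1.

```bash
#!/usr/bin/env bash
# Sketch: confirm which AWS identity the CLI will use.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Prints the ARN of the IAM user or role behind the current credentials.
whoami_aws() {
  run aws sts get-caller-identity --query 'Arn' --output text
}

# Prints the default region from the CLI configuration, if one is set.
default_region() {
  run aws configure get region
}
```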

Put the Kettle On

The command in Listing 2 will take a while, so water the plants, wash the car, and put the kettle on while you patiently wait for the cluster to be created.

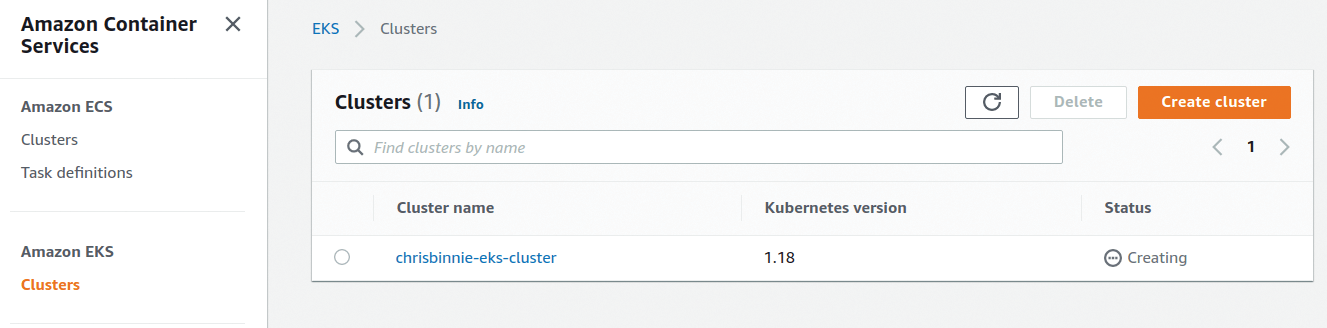

A word to the wise: Make certain that you are looking at the correct region (eu-west-1 is Dublin, Ireland, in my example). As you wait for your cluster, check that region in the AWS Management Console for progress. In Figure 1 you can see the first piece of good news: the creation of your control plane in the AWS Console (which is still in the Creating phase).
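You can also follow progress from the command line, passing the region explicitly so you cannot accidentally query the wrong one. This sketch reads the cluster status (for example, CREATING or ACTIVE) with aws eks describe-cluster and uses the CLI's built-in waiter, aws eks wait cluster-active, which polls until the cluster is active or the waiter times out. The cluster name and region are placeholders, and run is a hypothetical print-or-execute helper.

```bash
#!/usr/bin/env bash
# Sketch: check and wait on cluster creation from the CLI.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Print the current status string for cluster $1 in region $2.
cluster_status() {
  run aws eks describe-cluster --name "$1" --region "$2" \
    --query 'cluster.status' --output text
}

# Block until the cluster reports ACTIVE (or the waiter gives up).
wait_for_cluster() {
  run aws eks wait cluster-active --name "$1" --region "$2"
}
```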

As the CLI output grows, note that eksctl is using AWS CloudFormation (the native AWS IaC service) to create some of the services in the background. Incidentally, EKS v1.19 is offered as the cluster comes online, even though v1.18 is marked as the default version at the time of writing.
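To see those CloudFormation stacks yourself, the following sketch filters aws cloudformation describe-stacks with a JMESPath query. It assumes eksctl's naming convention of prefixing stacks with eksctl-<cluster>- (for example, eksctl-<cluster>-cluster and eksctl-<cluster>-nodegroup-<nodegroup>); eksctl utils describe-stacks is an eksctl-native alternative. The run helper is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list the CloudFormation stacks eksctl created for a cluster.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# $1 = cluster name, $2 = region; prints stack names and statuses.
eksctl_stacks() {
  local cluster="$1" region="$2"
  run aws cloudformation describe-stacks --region "$region" \
    --query "Stacks[?starts_with(StackName, 'eksctl-${cluster}-')].[StackName,StackStatus]" \
    --output table
}
```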

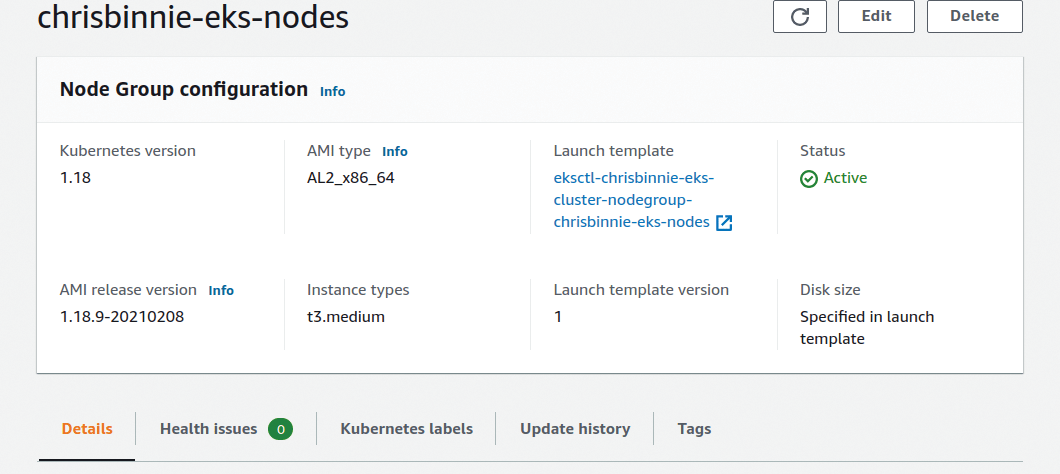

In Figure 2, after the control plane has been created, you can see that the Node group has been set up and its worker nodes are up and running; you can scrutinize their running configuration, too.
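The same Node group details are available from the command line. This sketch uses aws eks describe-nodegroup to print the node group's status (ACTIVE once the nodes are ready) and eksctl get nodegroup for a summary table. The cluster name, node group name, and region are placeholders, and run is the hypothetical print-or-execute helper.

```bash
#!/usr/bin/env bash
# Sketch: inspect the managed node group from the CLI.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# $1 = cluster, $2 = node group, $3 = region
nodegroup_status() {
  run aws eks describe-nodegroup --cluster-name "$1" --nodegroup-name "$2" \
    --region "$3" --query 'nodegroup.status' --output text
}

# $1 = cluster, $2 = region
list_nodegroups() {
  run eksctl get nodegroup --cluster "$1" --region "$2"
}
```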

All Areas Access

Now you need to install the AWS IAM Authenticator so that you can authenticate with your cluster:

$ curl -o aws-iam-authenticator https://amazon-eks.s3.us-west-2.amazonaws.com/1.19.6/2021-01-05/bin/linux/amd64/aws-iam-authenticator
$ mv aws-iam-authenticator /usr/local/bin
$ chmod +x /usr/local/bin/aws-iam-authenticator
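Both tools can mint the bearer token that kubectl presents to the EKS API server, which makes for a handy sanity check. As far as I know, the kubeconfig written by a recent aws eks update-kubeconfig calls aws eks get-token rather than aws-iam-authenticator, so with AWS CLI v2 the separate authenticator binary is largely a fallback. This sketch prints a token either way. The cluster name and region are placeholders, and run is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: fetch an EKS authentication token two ways.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# AWS CLI v2: extract the token from the ExecCredential JSON it returns.
eks_token() {
  run aws eks get-token --cluster-name "$1" --region "$2" \
    --query 'status.token' --output text
}

# aws-iam-authenticator: -i takes the cluster name (cluster ID).
authenticator_token() {
  run aws-iam-authenticator token -i "$1"
}
```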

Next, run the command that saves the cluster configuration locally, substituting your own cluster name after --name:

$ aws eks update-kubeconfig --name chrisbinnie-eks-cluster --region eu-west-1
Updated context arn:aws:eks:eu-west-1:XXXX:cluster/chrisbinnie-eks-cluster in /root/.kube/config

With the local configuration in place, you can authenticate with the cluster. If you don't already have kubectl, download it so that you can talk to the Kubernetes API server, and then save it to your path:

$ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
$ mv kubectl /usr/local/bin
$ chmod +x /usr/local/bin/kubectl
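The Kubernetes project publishes a .sha256 file next to each kubectl binary, so you can verify the download before moving it into your path. This sketch uses GNU sha256sum in check mode. One caveat: if stable.txt changes between your two downloads, the versions will differ and the check will fail, so pin the version if that matters to you. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: verify a downloaded file against an expected SHA-256 digest.
# $1 = file, $2 = expected hex digest; returns 0 only on a match.
verify_sha256() {
  echo "$2  $1" | sha256sum --check --status
}

# Fetch the published checksum for the current stable kubectl (linux/amd64).
fetch_kubectl_checksum() {
  local version
  version="$(curl -L -s https://dl.k8s.io/release/stable.txt)"
  curl -L -s "https://dl.k8s.io/release/${version}/bin/linux/amd64/kubectl.sha256"
}

# Usage (in the download directory, before the mv):
#   verify_sha256 kubectl "$(fetch_kubectl_checksum)" && echo "kubectl OK"
```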

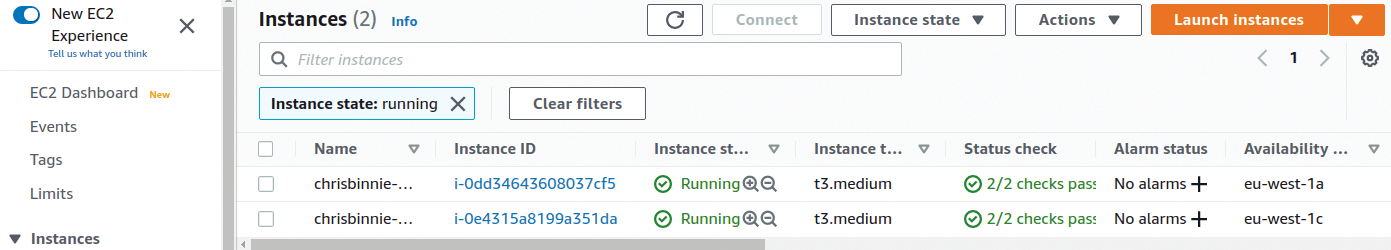

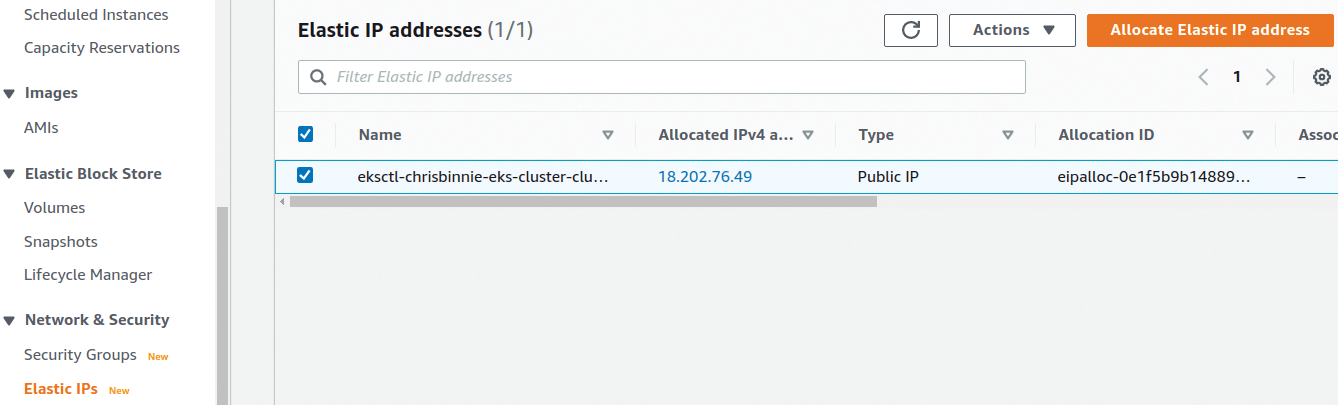

The update-kubeconfig command lets you connect to the cluster with a local Kubernetes config file. Now for the moment of truth: Use kubectl to connect to the cluster and see which pods are running across all namespaces. Listing 3 shows a fully functional Kubernetes cluster, and in Figure 3 you can see the worker nodes showing up in AWS EC2. Finally, the EC2 section of the AWS Management Console (Figure 4; bottom left, under Elastic IPs) displays the Elastic IP address of the cluster.

Listing 3: Running Pods

$ kubectl get pods -A
NAMESPACE     NAME                       READY   STATUS    RESTARTS   AGE
kube-system   aws-node-96zqh             1/1     Running   0          19m
kube-system   aws-node-dzshl             1/1     Running   0          20m
kube-system   coredns-6d97dc4b59-hrpjp   1/1     Running   0          25m
kube-system   coredns-6d97dc4b59-mdd8x   1/1     Running   0          25m
kube-system   kube-proxy-bkjbw           1/1     Running   0          20m
kube-system   kube-proxy-ctc6l           1/1     Running   0          19m
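Beyond the pod list, you can check the worker nodes directly. In this sketch, kubectl get nodes -o wide shows each node with its internal IP, OS image, and kubelet version, and kubectl wait blocks until every node reports the Ready condition, giving up after five minutes. The run helper is hypothetical and only prints the commands when DRY_RUN=1.

```bash
#!/usr/bin/env bash
# Sketch: confirm the worker nodes have joined and are Ready.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

check_nodes() {
  run kubectl get nodes -o wide
  run kubectl wait --for=condition=Ready nodes --all --timeout=300s
}
```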

Destruction

To destroy your cluster, first delete the Node group in the AWS Console and wait for its worker nodes to drain (see the "Node groups" box); then click the Delete Cluster button; finally, make sure you delete the Elastic IP address. Be warned that deleting the Node group and the cluster each takes a little while, so patience is required. (See the "Word to the Wise" box.)
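As a command-line alternative to the console steps, eksctl can tear down what it built: eksctl delete cluster removes the node group and then the control plane by deleting the CloudFormation stacks it created, and --wait blocks until that finishes. Whichever route you take, an Elastic IP left behind keeps costing money, so this sketch also lists unassociated addresses and releases one by allocation ID. Names, region, and allocation IDs are placeholders, and run is a hypothetical print-or-execute helper.

```bash
#!/usr/bin/env bash
# Sketch: delete an eksctl-built cluster and clean up stray Elastic IPs.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# $1 = cluster, $2 = region
delete_cluster() {
  run eksctl delete cluster --name "$1" --region "$2" --wait
}

# List Elastic IPs in region $1 that are not associated with anything.
list_unassociated_eips() {
  run aws ec2 describe-addresses --region "$1" \
    --query 'Addresses[?AssociationId==`null`].[AllocationId,PublicIp]' \
    --output text
}

# Release one address; double-check the allocation ID first.
release_eip() {
  run aws ec2 release-address --allocation-id "$1" --region "$2"
}
```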

The End Is Nigh

As you can see, standing up a Kubernetes cluster within AWS EKS is a slick process that is easily automated. If you can afford to pay some fees for a few days, you can repeat the process covered here, and creating and destroying a cluster each time is an affordable way to test your applications in a reliable, production-like environment.
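One way to make that create-test-destroy cycle hard to get wrong is to tie the teardown to a shell EXIT trap, so the cluster is deleted even if your test command fails. In this minimal sketch, the function name with_temp_cluster, the cluster name, and the test command are all hypothetical; the create flags are a trimmed-down version of Listing 2, and run is a helper that only prints commands when DRY_RUN=1.

```bash
#!/usr/bin/env bash
# Sketch: create a throwaway cluster, run a test, always delete the cluster.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# $1 = cluster name, $2 = region, remaining args = test command to run
with_temp_cluster() {
  local name="$1" region="$2"
  shift 2
  run eksctl create cluster --name "$name" --region "$region" \
    --nodes 2 --node-type t3.medium --managed || return 1
  # The subshell's EXIT trap deletes the cluster however the test ends.
  (
    trap 'run eksctl delete cluster --name "$name" --region "$region" --wait' EXIT
    run "$@"
  )
}
```

For example, with_temp_cluster test-eks eu-west-1 kubectl get nodes would build a cluster, list its nodes, and then tear everything down again.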

Remember that once you have mastered the process, you can scale your cluster to handle exceptionally busy production workloads, thanks to the flexibility of the Amazon cloud. If you also automate the deletion of clusters, you can start and stop them in multiple AWS regions to your heart's content.
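As a starting point for both ideas, the sketch below resizes a managed node group with eksctl scale nodegroup and lists the EKS clusters in several regions with aws eks list-clusters. Note that changing the node count by hand is not autoscaling; automatic scaling typically also needs something like the Kubernetes Cluster Autoscaler, which is outside this sketch. All names, regions, and counts are placeholders, and run is a hypothetical print-or-execute helper.

```bash
#!/usr/bin/env bash
# Sketch: resize a node group and survey clusters across regions.

# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# $1 = cluster, $2 = node group, $3 = region, $4 = desired nodes, $5 = max nodes
scale_nodes() {
  run eksctl scale nodegroup --cluster "$1" --name "$2" --region "$3" \
    --nodes "$4" --nodes-max "$5"
}

# Print the EKS clusters in each region given as an argument.
list_clusters_in_regions() {
  local region
  for region in "$@"; do
    echo "== $region"
    run aws eks list-clusters --region "$region" --output text
  done
}
```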