Tinkerbell life-cycle management

Magical Management

The subject of bare metal life-cycle management is a huge topic for providers today (see the "Early Efforts" box). Red Hat, Canonical, and SUSE all have powerful tools on board for this task. Third-party vendors are also trying to grab a piece of the pie, one of them being Foreman, which enjoys huge popularity.

A vendor you might not expect is now also getting into the mix, with Equinix launching its Tinkerbell tool. Primarily a provider of data center and network infrastructure, Equinix is looking to manage a kind of balancing act with Tinkerbell. The tool is intended to enable customers to provision bare metal nodes in Equinix data centers just as easily as virtual instances in cloud environments.

Open Source Tinkerbell

With its Metal service, Equinix has been hunting for customers for several years. Customers used the company almost exclusively as a hoster. If you were looking for colocation space for your own setup, Equinix was the right choice. In this arrangement, however, the customer has a number of different tasks ahead of them: Procuring the hardware, mounting it in the rack, and cabling it correctly are just a few.

Equinix Metal instead offers servers in the form of bare metal at the push of a button: Servers that Equinix keeps on hand are automatically configured to be available exclusively to a customer. Tinkerbell makes it possible to provide the systems with exactly the kind of basic equipment admins need for their environments. In the meantime, Equinix has put Tinkerbell under a free license and published it on GitHub. The service can therefore also be used outside of Equinix Metal. In this article, I show in more detail what distinguishes this solution from other systems for bare metal management.

They Already Have That?

Although bare metal life-cycle management sounds very much like marketing hype, in essence, it's all about the ability to (re)install automatically any infrastructure (e.g., servers) at any time. Moreover, the automatic removal of a machine from a setup, known as decommissioning, plays a role – albeit a noticeably subordinate one. A system that has to be reinstalled during operation because of a misconfiguration is a more common occurrence than the final shutdown of a component.

In fact, bare metal life-cycle management is a concise term for a principle that has been around for decades. The protocols that are still in use today – in Tinkerbell, too, by the way – can all look back on more than 30 years of existence. Combining them to achieve a fully automated installation environment is not new either. As an admin, you will always encounter the same old acquaintances: DHCP, PXE, TFTP, HTTP or FTP, NTP – that's it. This begs the question: What does Tinkerbell do differently than Foreman or an environment you create [1]?

A Bit of History

A detailed answer to this question can be found in a blog post by Nathan Goulding [2], who belongs to the inner core of the Tinkerbell developer team. A few years ago, he cofounded Packet, the company that launched Tinkerbell and now goes by the name Equinix Metal. Packet was originally independent and offered a kind of global service that could roll out systems to any location. After its acquisition by Equinix, the focus is now on Equinix's data centers, but Tinkerbell can be used entirely without an Equinix connection.

The developers' original motivation, according to Goulding, was to create a generic tool for bare metal deployments that would be as versatile as possible. However, it was by no means intended to mutate into a multifunctional juggernaut – unlike Foreman, for example, which has long since ceased to be all about bare metal deployment and, instead, also integrates automators and performs various additional tasks. One of the motivations behind Tinkerbell, claimed Goulding, was that existing solutions had made too many compromises and were therefore unable to complete the task at hand in a satisfactory way.

Bogged Down in Detail

The basics were not the big problem, said Goulding. Taking a server out of the box, mounting it in a rack, and then booting it into an installer in a preboot execution environment (PXE) is not the challenge. In most cases, however, this is only a small part of the work that needs to be done.

Saying that commodity hardware always behaves in the same way is simply not true. Anyone who has ever had to deal with different server models from the same manufacturer can confirm this. Bare metal life-cycle management therefore also includes updating the firmware, observing different hardware requirements for specific servers, and implementing specific features on specific systems – not to mention the special hardware that needs to be taken into account during deployment.

Imagine a scenario in which a provider uses special hardware such as network interface controllers (NICs) by Mellanox, for which the driver is also integrated into its own bare metal environment. If you need to buy a successor model for a batch of additional servers because the original model is no longer available, you face a problem that quite often requires a complete rebuild. Tinkerbell has looked to make precisely these tasks more manageable right from the outset.

What the Tinkerbell community misses most in other solutions is the ability to intervene flexibly in individual parts of the deployment process. Indeed, the installers from Red Hat, Debian, and SUSE offer virtually no controls once they are running. Moreover, modifying an installer to extend its functionality turns out to be far from trivial.

One Solution, Five Components

To achieve these goals, the Tinkerbell developers adhere to virtually all the specifications of a modern software architecture. Under the hood, Tinkerbell comprises five components that follow the principle of a microservices architecture; it thus has a separate service on board for each specific task (Figure 1).

![Tinkerbell comprises various individual components such as Boots and Hegel, each of which provides only one function. This design is typical of a microservices architecture (Tinkerbell docs [3]).](images/F01-b02.png)

Tinkerbell does not rely on existing components; rather, it is a construct written from scratch. Consequently, the developers implemented the services for basic protocols such as DHCP or TFTP from scratch, too. Experienced administrators automatically react to this with some skepticism – after all, new wheels are rarely, if ever, rounder than their predecessors. Is Tinkerbell the big exception?

An answer to this question requires a closer examination of Tinkerbell's architecture. The authors of the solution distinguish between two instances: the Provisioner and the Worker. The Provisioner contains all the logic for controlling Tinkerbell. The Worker converts this logic in batches into logic tailored for individual machines, which it then executes locally.

Tink as a Centralized Tool

Anyone who has ever dealt with microservices-based applications will most likely be familiar with what "workflow engine" means in this context. Many recent programs rely on workflows, which define individual work steps and specify the order in which the work steps need to be completed.

In a bare metal context, for example, a workflow might consist of a fresh server first booting into a PXE environment over DHCP, and then receiving the kernel and RAM disk for a system inventory and performing the installation. During the transition from one phase to the next (i.e., from one element of the workflow to the next), the server reports where it is in the process directly to the workflow engine, which enables it to take corrective action if necessary and to cancel or extend processes.
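The idea of ordered steps that report their state back to an engine can be illustrated with a minimal Python sketch. It is not Tinkerbell code: the class names, step names, and event strings are hypothetical, and the only corrective action modeled here is canceling the workflow after a failed step.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Step:
    """One element of a workflow: a name plus the work to perform."""
    name: str
    run: Callable[[], bool]  # returns True on success


@dataclass
class Workflow:
    """Runs steps strictly in order and records a state report per step."""
    steps: List[Step]
    events: List[str] = field(default_factory=list)

    def execute(self) -> bool:
        for step in self.steps:
            self.events.append(f"{step.name}: running")
            ok = step.run()
            self.events.append(f"{step.name}: {'success' if ok else 'failed'}")
            if not ok:
                # Corrective action in this sketch: cancel the remaining steps.
                return False
        return True


# Hypothetical steps mirroring the phases named in the text.
workflow = Workflow([
    Step("pxe-boot", lambda: True),
    Step("inventory", lambda: True),
    Step("install", lambda: True),
])
finished = workflow.execute()
print(finished, workflow.events)
```

Because every transition is recorded, the engine always knows which phase a machine has reached, which is what makes canceling or extending a run possible.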

Tink, one of the five core components in Tinkerbell, follows exactly this approach. As Tinkerbell's workflow engine, it acts as the solution's control center. You communicate with Tink over the command-line interface (CLI) and inject templates into it in this way. A template contains the instructions to be applied to a specific piece of hardware (e.g., a server) or the workflow that the server runs through in Tinkerbell, if you prefer. Moreover, Tink contains the database listing the machines Tinkerbell can handle.

Furthermore, Tink includes a container registry, which will become important later on. All the work that Tink does on the target systems takes the form of containers. On the one hand, this allows you to define your own work steps and store them in the form of generic containers. On the other hand, it makes the standard container images of the major distributors usable, even if Tink requires a small detour to get there.

DHCP and iPXE with Boots

Tink is surrounded by various components that do the real work on the target systems. These include Boots, a DHCP and iPXE server written especially for Tinkerbell. As a reminder, the PXE extension iPXE offers various additional features such as chain loading (i.e., the ability to execute several boot commands one after the other).

The only task Boots has is to field the incoming DHCP requests from starting servers and to match the requesting MAC address with the hardware stored in Tink. When it identifies a machine, it assigns it an IP address and then sends the machine an iPXE image, ensuring that the server boots into the third Tinkerbell component, the operating system installation environment (OSIE). This mini-distribution, which is based on Alpine Linux, processes the various steps defined in the template for the respective server, one after the other. OSIE uses Docker for this purpose, which lets you use simple containers of your own that OSIE calls in the sequence you define. Alternatively, you can rely on standard containers from the major vendors.
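The matching step can be pictured with a short Python sketch. It only models the lookup logic; the inventory contents, the function names, and the decision to ignore unknown machines are assumptions made for illustration and do not reflect how Boots is implemented.

```python
from typing import Dict, Optional

# Hypothetical inventory keyed by MAC address; in Tinkerbell this data lives in Tink.
INVENTORY: Dict[str, Dict[str, str]] = {
    "08:00:27:00:00:01": {"ip": "192.168.1.5", "hostname": "worker-1"},
}


def normalize_mac(mac: str) -> str:
    """Lower-case a MAC address and use colons as separators."""
    return mac.strip().lower().replace("-", ":")


def handle_dhcp_request(mac: str) -> Optional[Dict[str, str]]:
    """Return the lease and boot target for a known machine, or None otherwise."""
    record = INVENTORY.get(normalize_mac(mac))
    if record is None:
        return None  # assumption: unknown hardware is simply not answered
    return {"ip": record["ip"], "next_boot": "osie"}


offer = handle_dhcp_request("08-00-27-00-00-01")
print(offer)
```

Normalizing the address before the lookup avoids mismatches caused by different notations for the same MAC.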

OSIE is supported by a metadata service named Hegel, the fourth component, which stores the configuration parameters you specify in a template for the respective server so that they can be retrieved directly from OSIE. In principle, this process works like cloud-init in various cloud environments: At system startup, a script talks to a defined API over HTTP and obtains all the parameters defined for the machine (e.g., a special script that the virtual instance or, in the Tinkerbell example, the physical server calls at system startup).
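The request pattern behind such a metadata service can be tried out locally with the Python standard library. The sketch below starts a stand-in server and queries it once; the `/metadata` path, the parameter names, and the returned values are hypothetical and are not Hegel's actual API.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical per-machine parameters; a real service would look these up per client.
METADATA = {"hostname": "worker-1", "userdata": "#!/bin/sh\necho ready\n"}


class MetadataHandler(BaseHTTPRequestHandler):
    """Stand-in metadata endpoint that answers every GET with JSON."""

    def do_GET(self):
        body = json.dumps(METADATA).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_metadata(url: str) -> dict:
    """Client side: what a boot-time script does to learn its parameters."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # no proxy
    with opener.open(url, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))


server = HTTPServer(("127.0.0.1", 0), MetadataHandler)  # port 0 picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
try:
    params = fetch_metadata(f"http://127.0.0.1:{server.server_port}/metadata")
finally:
    server.shutdown()
    server.server_close()
print(params["hostname"])
```

The client needs nothing but an HTTP request and a JSON parser, which is why this pattern works equally well for virtual instances and physical servers.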

Hegel imposes virtually no limits on your imagination, although a reminder is in order: Especially in the context of virtual instances, many administrators get carried away with re-implementing half an Ansible setup in the boot script that the VM receives from its metadata. However, this is not exactly what the scripts are designed to do. In fact, they are only supposed to do the tasks that are immediately necessary. If any additional work is to be done later, you will want it done by components that are made precisely for those purposes.

Bare Metal Is Not the Focus

The fifth and most recent Tinkerbell component is the power and boot service, which can control the machine by out-of-band management. This function was not originally part of the Tinkerbell design; however, it quickly became clear that reinstalling a system on the fly is also part of life-cycle management and only works conveniently if the life-cycle manager controls the hardware. Otherwise, you would have to use IPMItool or the respective management tool for the out-of-band interface to change the boot order on the system to PXE first and then trigger a reboot locally.

However, you can only get this to work if the affected system still lets you log in over SSH. If this does not work, you have to use the BMC interface. Tinkerbell's power and boot service allows all of this to be done automatically and conveniently from the existing management interface.
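For comparison, the manual route over the BMC boils down to two `ipmitool` calls: one that sets PXE as the next boot device and one that power-cycles the machine. The Python sketch below only assembles those command lines; the BMC address and credentials are hypothetical, and it does not show how Tinkerbell's power and boot service works internally.

```python
from typing import List


def ipmi_base(host: str, user: str, password: str) -> List[str]:
    """Common ipmitool arguments for a BMC reached over the network (lanplus)."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password]


def reinstall_commands(host: str, user: str, password: str) -> List[List[str]]:
    """Commands that set PXE as the next boot device, then power-cycle the machine."""
    base = ipmi_base(host, user, password)
    return [
        base + ["chassis", "bootdev", "pxe"],
        base + ["chassis", "power", "cycle"],
    ]


# Hypothetical BMC address and credentials, for illustration only.
commands = reinstall_commands("10.0.0.50", "admin", "secret")
for command in commands:
    print(" ".join(command))  # pass each list to subprocess.run() to execute it
```

Keeping the commands as argument lists rather than strings means they can be handed to `subprocess.run()` without shell quoting issues.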

The developers clearly focus on IPMI. All BMC vendor implementations offer IPMI support, even though you might have to enable it separately. In any case, the only alternative to IPMI would be to fall back on Dell's remote access admin tool, Racadm. Tinkerbell does not implement these protocols itself but relies on existing tools in the background, which undoubtedly saves a lot of work.

Communication by gRPC

By now, you know the components of the Tinkerbell stack. As befits a solution in the year 2021, the individual services do not communicate with each other in just any way; rather, they use the gRPC protocol, which was originally developed by Google. Tink, as the central component, therefore ultimately controls the other services remotely. gRPC is designed to be both robust and stable, so the developers' decision in favor of the protocol is understandable.

Practical Tinkerbell

If you want to try out Tinkerbell, you can use the developers' Vagrant setup. The objective of this exercise is not to install an operating system on a server. Instead, the Vagrant environment is intended to show the basic workings of Tinkerbell and the opportunities it offers.

The Vagrant files and detailed instructions [4] supplied build a Tinkerbell environment in a very short time without disabling any features. The essence of the process is not complicated. First, you roll out the Provisioner with Vagrant that contains all the services described above: Tink, Boots, and Hegel, including their sub-services and everything else an admin needs.

Inside the Vagrant machine, Tinkerbell then runs in Ubuntu containers, which you roll out with a Docker Compose file. This setup also gives you the Tink CLI. You also need an image to get things started: In this example, I used the hello-world image provided by the Docker developers themselves. Tinkerbell runs a local container registry. You first need to run the pull command to retrieve the image from Docker Hub and then push it into the local registry with a push command.
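The pull and push steps need one more command in between, because Docker decides where to push from the image name: The image has to be tagged with the registry address first. The following Python sketch assembles the three commands; the registry address is a hypothetical value that you would replace with the one your Provisioner uses.

```python
from typing import List


def mirror_commands(image: str, registry: str) -> List[List[str]]:
    """Docker commands that copy an image from Docker Hub into a local registry."""
    local_ref = f"{registry}/{image}"
    return [
        ["docker", "pull", image],
        ["docker", "tag", image, local_ref],
        ["docker", "push", local_ref],
    ]


# Hypothetical registry address, for illustration only.
steps = mirror_commands("hello-world", "192.168.1.1")
for step in steps:
    print(" ".join(step))
```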

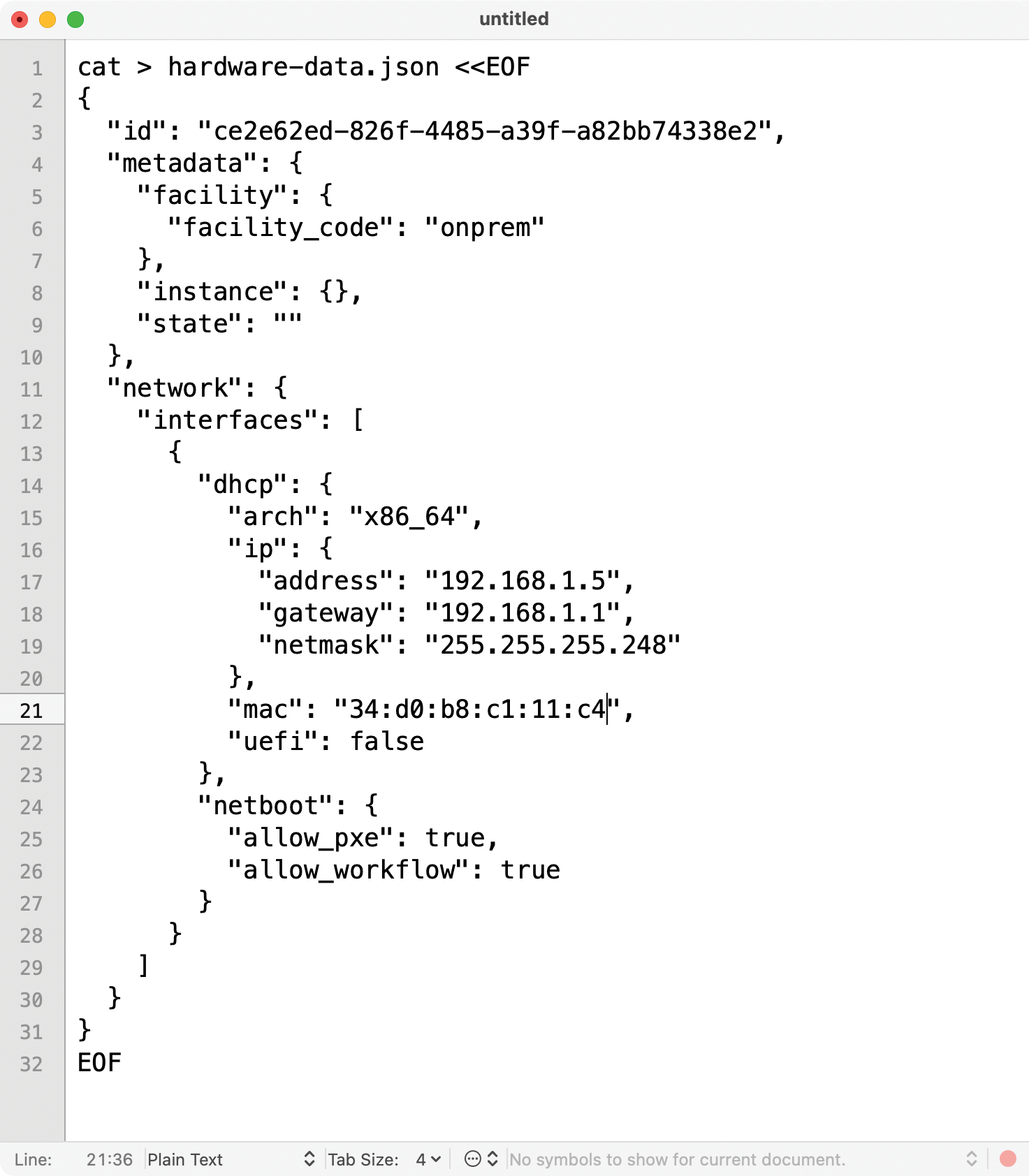

Next, you inject the physical server configuration into Tinkerbell with the help of a file in JSON format (Figure 2) that gives the machine an ID, a few configuration details, and – very importantly – its network settings. This is where you specify which IP address the system will get from Boots later on and whether it uses UEFI or a conventional BIOS.
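What such a file might contain can be sketched in Python. The field names below are assumptions loosely modeled on the elements named above (an ID, a few configuration details, network settings, and the UEFI flag) and are not a verified schema, so check them against the documentation for your Tinkerbell version. The addresses are hypothetical as well.

```python
import json
import uuid


def hardware_record(mac: str, ip: str, netmask: str, gateway: str, uefi: bool) -> dict:
    """Build a machine description; field names are assumptions, not a verified schema."""
    return {
        "id": str(uuid.uuid4()),  # unique ID for the machine
        "metadata": {"facility": {"facility_code": "onprem"}},
        "network": {
            "interfaces": [
                {
                    "dhcp": {
                        "mac": mac,
                        "ip": {"address": ip, "netmask": netmask, "gateway": gateway},
                        "uefi": uefi,  # False means conventional BIOS
                    },
                    "netboot": {"allow_pxe": True, "allow_workflow": True},
                }
            ]
        },
    }


record = hardware_record(
    "08:00:27:00:00:01", "192.168.1.5", "255.255.255.248", "192.168.1.1", uefi=False
)
print(json.dumps(record, indent=2))
```

Generating the file from a function rather than writing it by hand pays off as soon as more than a handful of machines need to be registered.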

Server Template

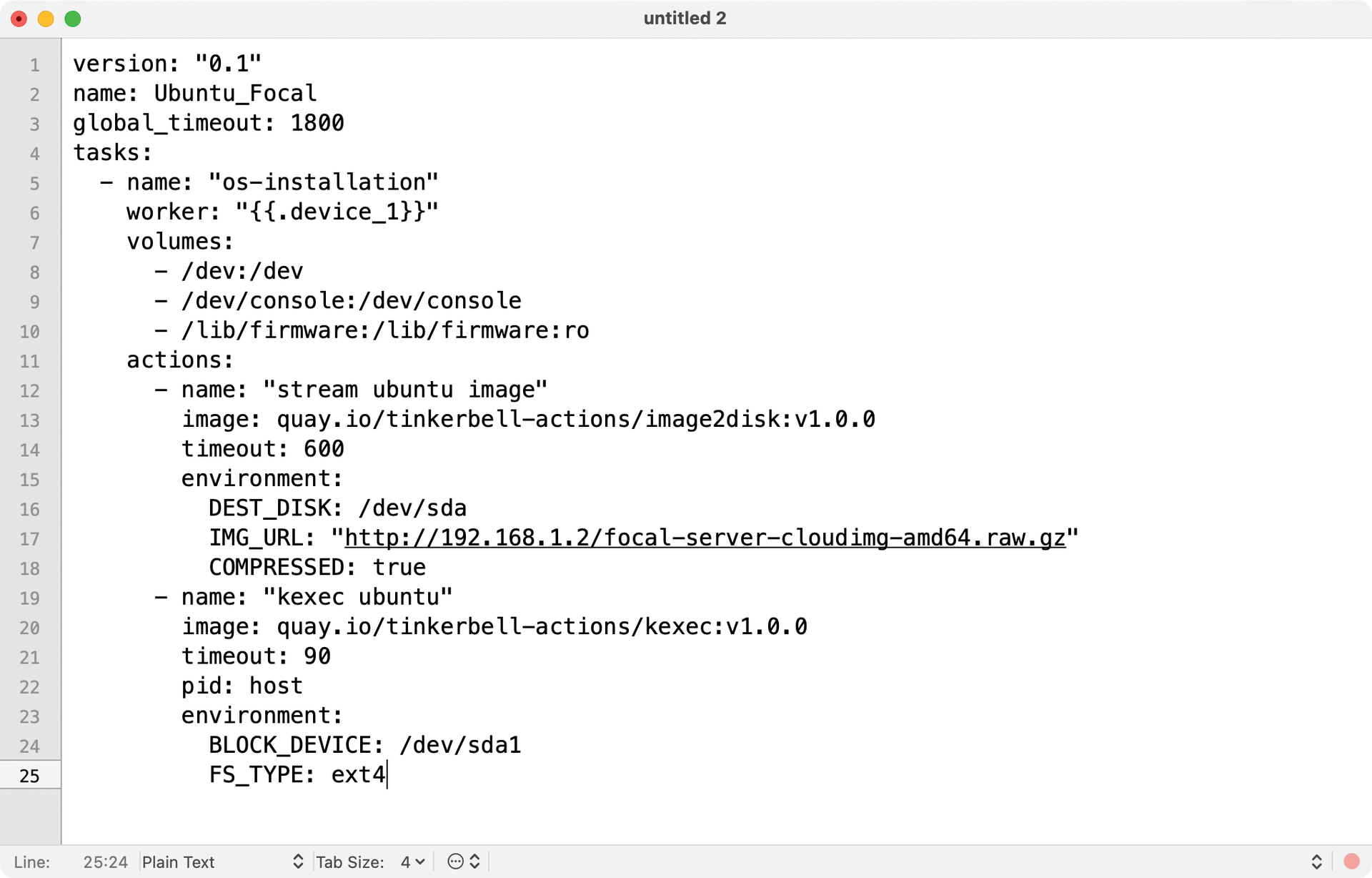

The Tinkerbell services are running and the server configuration is where it belongs. However, Tinkerbell would not yet know what to do with the server when it phones home. It would start OSIE on the machine, but it would not install an operating system for a lack of instructions. Therefore, the template in Listing 1 is used in the next step.

Listing 1: Tinkerbell Template

cat > hello-world.yml <<EOF
version: "0.1"
name: hello_world_workflow
global_timeout: 600
tasks:
  - name: "hello world"
    worker: "{{.device_1}}"
    actions:
      - name: "hello_world"
        image: hello-world
        timeout: 60
EOF

In Tinkerbell, a template associates a particular machine with a set of instructions (Figure 3), which is strongly reminiscent of Kubernetes pod descriptions in style and form, even though it does not ultimately implement the Kubernetes standard. The most important thing in the template is the tasks section, which ensures that Tinkerbell executes the named image on the system after the OSIE startup.
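The `{{.device_1}}` placeholder in the worker field follows Go template syntax and is filled in with the address of the target machine when the workflow is created. The Python sketch below imitates only this substitution with a regular expression to show the effect; it is not the rendering code Tinkerbell uses, and the MAC address is a hypothetical value.

```python
import re
from typing import Dict

TEMPLATE = """\
version: "0.1"
name: hello_world_workflow
global_timeout: 600
tasks:
  - name: "hello world"
    worker: "{{.device_1}}"
    actions:
      - name: "hello_world"
        image: hello-world
        timeout: 60
"""

# Matches placeholders of the form {{.name}}, with optional inner whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*\.(\w+)\s*\}\}")


def render(template: str, devices: Dict[str, str]) -> str:
    """Replace each {{.name}} placeholder; raises KeyError if a device is missing."""
    return PLACEHOLDER.sub(lambda match: devices[match.group(1)], template)


rendered = render(TEMPLATE, {"device_1": "08:00:27:00:00:01"})
print(rendered)
```

Because the machine is only bound at this point, the same template can be reused for any number of servers.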

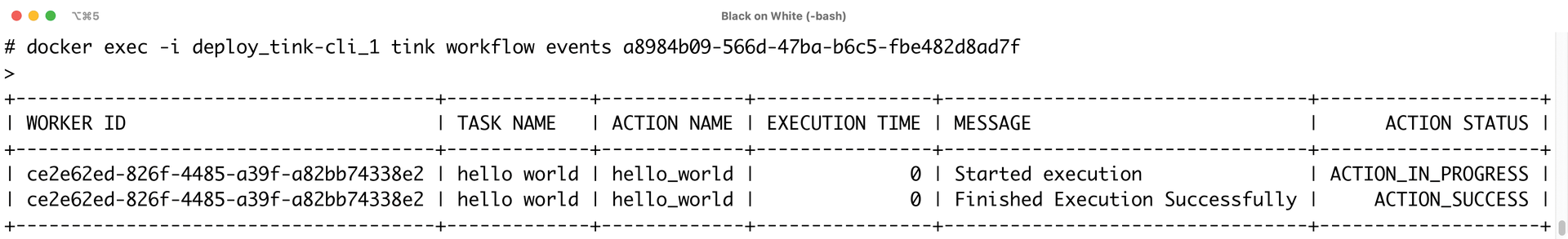

In the next step, if you start a Tinkerbell Worker and give it the ID of the template and the MAC address of the target machine, the process starts up. As soon as the corresponding server boots into a PXE environment, Tink kits it out with the OSIE image and then calls the container in the image, which outputs Hello World.

The individual steps of the process can be displayed directly in Tinkerbell by the workflow engine (Figure 4). To help with debugging, you can also see which parts of the workflow were executed and whether the execution worked.

A Practical Example

In the daily grind, the rudimentary Vagrant example is admittedly unlikely to make an administrator happy. However, it shows basically what is possible. Tinkerbell's documentation also comes with concrete examples of templates that ultimately install Ubuntu or RHEL from official container images.

If you're now thinking, "Wait a minute, they don't have kernels and they don't install GRUB out of the box, either," you're absolutely right: You have to specify these steps individually in the respective templates – and in the way you want them to be executed. As with other solutions, the greater flexibility in Tinkerbell comes at a price of additional overhead in terms of needing to specify what you want to accomplish.

Conclusions

Tinkerbell may seem overly hip to some die-hard admins – after all, it has been possible to provide servers with an operating system automatically without gRPC, Docker, and all the other stuff for quite some time now. But if you dismiss Tinkerbell because of this, you are doing the solution an injustice. It shows its strengths not only in its interaction with Equinix Metal, but also in real-world setups. A deployment system that allows such elementary intervention with the process in every phase of the setup is unparalleled on the market. It can't hurt to take a closer look at Tinkerbell and try it out.