Managing network connections in container environments

Switchboard

Traefik wants to make "networking boring … with a cloud-native networking stack that just works" [1]. The program aims to make working in DevOps environments easy and enjoyable and ultimately ensure that admins who are not totally network-savvy are happy to deal with DevOps and related areas. Traditionally, this has not been the case. Many administrators who grew up in the classic silo thinking of IT in the early 2000s see networks (and often storage as well) as the devil's work.

Traefik counters this: It aims to make software-defined networking (SDN) approaches in container environments obsolete by replacing them with simple technology. Traefik acts as a reverse proxy and load balancer, but also as a mesh network. I put the entire construct to the test and look into applications where Traefik might be of interest.

Complexity

No matter how much the container apologists of modern IT try to convince admins, virtualized systems based on Kubernetes and the like are naturally significantly more complex than their less modern predecessors. This complexity becomes completely clear the moment you compare the number of components in a conventional environment with that in a Kubernetes installation.

Web server setups follow a simple structure: a load balancer and a database, each redundant in some way, and then several application servers to which the load balancers point. The job is done. Virtualized container environments with Kubernetes and others also contain these components but additionally have virtualization and orchestration components weighing them down.

Many virtualized services follow the design of a cloud-ready architecture: dozens of fragments and microservices that need to communicate with each other in the background, even if they run on different nodes in far-flung server farms and rely on SDN to do so.

DevOps also takes on a new meaning in such environments. Even if you want to implement the typical segregation into specialized teams that take care of network, applications, and operations, it would simply be impossible. The individual levels are too interlocked, and it is too difficult to disentangle them now.

Therefore, a category of programs has emerged that aims to make this complexity easier to shoulder for admins and developers alike. These programs set up a dynamic load balancer environment that encapsulates the traffic between the individual microcomponents and retrofits secure sockets layer (SSL) encryption and endpoint monitoring on the fly.

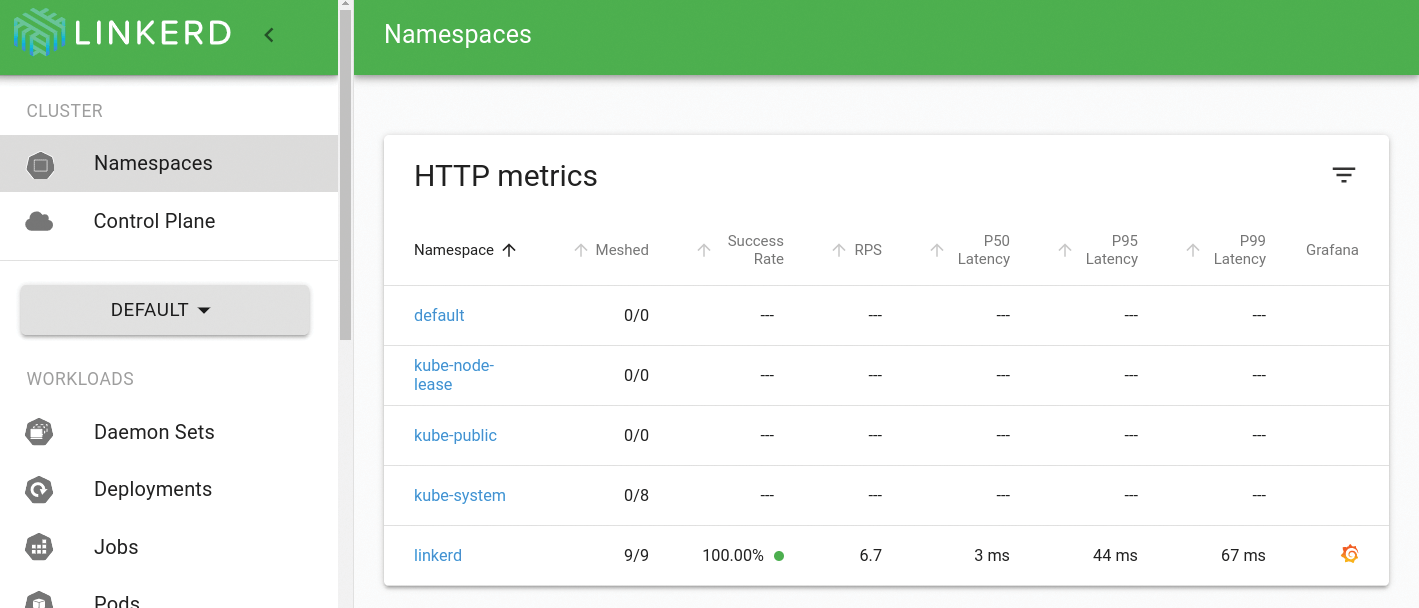

In addition to the Istio service mesh, various alternatives can be found: Consul, originally a tool for service discovery and consensus finding in distributed environments, now offers mesh functionality, as does Linkerd (Figure 1). Mesh networks, then, are a cake from which many companies want a slice (and maybe even a large one). Before continuing, however, it is helpful to answer a few very basic questions, especially about terminology.

Clarifying Terms

The component that is now known as Traefik Proxy [2] did not always go by that name. Initially, it was the only component by the Traefik project; the project and product were both simply named Traefik. However, when the feature set was expanded to include a mesh solution along the lines of Istio, Traefik became Traefik Proxy, and the mesh solution was redubbed Traefik Mesh [3].

Today, the vendor has two more products in its lineup: Traefik Pilot is a graphical dashboard that displays connections from Traefik Mesh and Traefik Proxy in a single application, and Traefik Enterprise – not a technical product in the strict sense – is a combination of the three software products with additional features and support. In this article, I focus on Traefik Proxy and Traefik Mesh, but the Traefik dashboard is also discussed.

If you think you now know your way around Traefik terms, you haven't reckoned with the creativity of the Traefik developers, because the proxy, according to Traefik's authors, is far more than a mere proxy. Although the company does not want to rename the product, Traefik is more of an edge router that can be used as a reverse proxy or load balancer. What the differences between an edge router, a reverse proxy, and a load balancer are supposed to be is something the developers let you guess with the help of colorful pictures. It's high time, then, to get to the bottom of the issue.

Traefik and Kubernetes

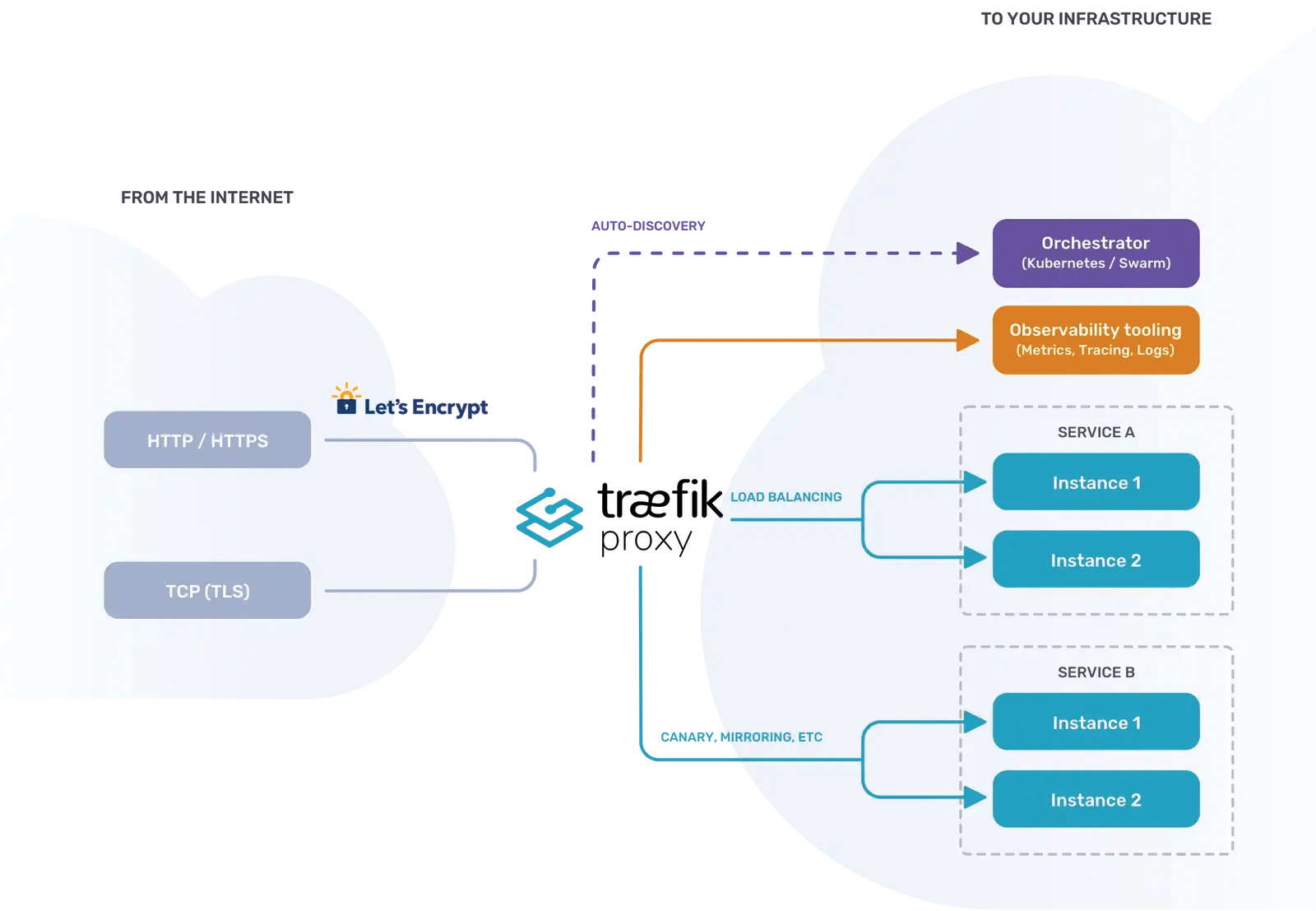

The original idea behind Traefik (Figure 2) was to develop a reverse proxy server that would work particularly well in tandem with container orchestrators like Kubernetes. In this specific case, "particularly well" means that the proxy server at the Kubernetes level should be a first-class citizen (i.e., a resource that can be operated by the familiar Kubernetes APIs).

Although a given now, the Kubernetes API was not as functional in the early Kubernetes years as it is today. Also, the entire ecosystem surrounding Kubernetes was not so well developed. Admins often relied on DIY solutions. If you needed HAProxy, for example, you built a pod with an HAProxy container that had the appropriate configuration installed by some kind of automation mechanism. This arrangement worked in many cases but was unsatisfactory in terms of controllability.

Therefore, the Traefik developers took a different approach to their work right from the outset. The idea was for Traefik to be a native Kubernetes resource and, like the other resources in Kubernetes, be manageable through its APIs. Even before the release of a formal version 1.0, the developers achieved this goal, and Traefik was one of the first reverse proxies on the market with this feature set.

Traefik as Reverse Proxy

The term "reverse proxy" is probably familiar to administrators of conventional setups from the Nginx context. Nginx often acts as a tool in reverse proxy mode. Where an internal system is not allowed to have a foothold on the Internet, a reverse proxy is a good way of making it accessible from the outside. The reverse proxy can be located in a demilitarized zone (DMZ, perimeter network, screened subnet), for example, and expose a public IP address including an open port to the Internet. It forwards connections to this DMZ IP to the server in the background, giving you several options that would not be available without a reverse proxy.

With nftables (the successor of iptables), you can restrict access to certain hosts. Reverse proxies are also regularly used as SSL terminators: They expose an HTTPS interface to the outside world without necessarily having to talk SSL with their back end. The same applies to the ability to control access to a web resource via HTTP authentication. Both features are often used in web applications that do not offer these functions themselves.

Traefik as a Load Balancer

Mentally, the jump from a reverse proxy to a load balancer is not far. The load balancer differs from the reverse proxy in that it can distribute incoming requests to more than one back end and has logic on board to do this in a meaningful way, including different forwarding modes and the algorithms for forwarding.
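The difference between one back end and several can be made concrete with a small sketch. The two functions below show plain and weighted round-robin selection, two common forwarding algorithms. The function names and back-end labels are hypothetical, and the code illustrates the general technique rather than Traefik's own implementation.

```python
import itertools
from typing import Dict, Iterator, Sequence


def round_robin(backends: Sequence[str]) -> Iterator[str]:
    """Return an endless iterator that hands out back ends in fixed rotation."""
    if not backends:
        raise ValueError("at least one back end is required")
    return itertools.cycle(backends)


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Hand out back ends in proportion to their integer weights."""
    # A back end with weight 2 appears twice in the rotation, and so on.
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return round_robin(expanded)


# Usage: each call to next() stands for one incoming request.
picker = weighted_round_robin({"app-large": 2, "app-small": 1})
for _ in range(6):
    print(next(picker))
```

A reverse proxy in the strict sense corresponds to the special case of a rotation with a single entry.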

Originally, Traefik was launched as a reverse proxy; it was therefore foreseeable that the tool would eventually evolve into a load balancer, and now Traefik Proxy can be operated not only as a proxy with a back end, but also as a load balancer with multiple target systems.

Adding Value in the Kubernetes Context

The functional scope of Traefik Proxy described up to this point matches that of a classic reverse proxy and load balancer: The service accepts connections and forwards them to the configured back end, either in a protocol-agnostic way at OSI Layer 4 or specifically for HTTP/HTTPS at Layer 7.
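What Layer 7 awareness adds over Layer 4 is the ability to decide on the basis of the request itself. The following sketch selects a back end from the Host header and the request path, with the longest matching path prefix winning. The `Route` class, the host names, and the back-end names are hypothetical; this is not Traefik's rule syntax or its matching logic.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Route:
    host: str         # value the Host header must match exactly
    path_prefix: str  # the request path must start with this prefix
    backend: str      # hypothetical name of the target service


def pick_backend(routes: List[Route], host: str, path: str) -> Optional[str]:
    """Return the back end of the most specific matching route, or None."""
    matches = [
        r for r in routes if r.host == host and path.startswith(r.path_prefix)
    ]
    if not matches:
        return None
    # The longest prefix wins, so /api takes precedence over /.
    return max(matches, key=lambda r: len(r.path_prefix)).backend


routes = [
    Route("shop.example.com", "/", "frontend"),
    Route("shop.example.com", "/api", "api"),
]
print(pick_backend(routes, "shop.example.com", "/api/orders"))
```

A Layer 4 forwarder has no access to either value and can only decide by address and port.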

Traefik's original killer feature was always its deep integration with Kubernetes: Anyone running their workload in Kubernetes did not need to worry about the proxy configuration – it would just come along as part of the pod definitions from Kubernetes. Moreover, the Traefik developers have added several features that make a lot of sense, especially in the Kubernetes context.

One is that Traefik can act as an API gateway. Although the term is not defined in great detail, admins typically expect a few basic functions from an API gateway. At its core, it is always a load balancer, but one that is protocol aware (hence OSI Layer 7). It not only understands the passing traffic but also intervenes in it at the admin's request, for example, by adding upstream authentication or transport encryption by SSL, as already discussed.

Add to that Traefik's ability to function as an ingress controller in Kubernetes. Ingress controllers are a special prearrangement in the Kubernetes API for external components that are to handle incoming traffic. By making a service an ingress controller in its pod definitions, admins tell Kubernetes to push specific types of traffic or all traffic through that controller. This special feature is what characterizes the Traefik implementation as a first-class citizen, as discussed in more detail earlier.
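To give an idea of what such a definition looks like, the sketch below builds a standard Kubernetes Ingress resource (API group `networking.k8s.io/v1`) as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The resource name, host, and service are hypothetical, and the ingress class name `traefik` is an assumption that depends on how the controller was installed.

```python
import json


def ingress_manifest(name: str, host: str, service: str, port: int) -> dict:
    """Build a minimal Ingress that sends all paths of one host to one service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Assumption: the class name is defined by the local installation.
            "ingressClassName": "traefik",
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service,
                                        "port": {"number": port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


manifest = ingress_manifest("shop", "shop.example.com", "shop-frontend", 80)
print(json.dumps(manifest, indent=2))
```

The controller watches resources of this kind through the Kubernetes API and derives its routing configuration from them, so no separate proxy configuration file has to be maintained.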

Certificate Management

Traefik Proxy implements what many admins might consider a killer feature: It has a client for the ACME protocol and therefore handles communication with services like Let's Encrypt.

Certificate handling is usually very unpopular with admins, because running your own certificate management setup is tedious and time consuming. Even creating a certificate signing request (CSR) regularly forces admins to delve into the depths of the OpenSSL command-line parameters. Automatically requesting SSL certificates from Let's Encrypt not only relieves the admin of this tedious work but also ensures that expired certificates are no longer a source of problems. Traefik Proxy talks to Let's Encrypt on demand and fetches official certificates for public endpoints from services in pods.
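The reason expired certificates stop being a problem is that an automated client can check the remaining lifetime on every run and renew in good time. The sketch below shows that decision in isolation. The 30-day threshold and the function name are assumptions chosen for illustration, not a description of how Traefik Proxy schedules its renewals.

```python
from datetime import datetime, timedelta, timezone

# Assumption: renew once fewer than 30 days of validity remain.
RENEW_BEFORE = timedelta(days=30)


def needs_renewal(
    not_after: datetime,
    now: datetime,
    renew_before: timedelta = RENEW_BEFORE,
) -> bool:
    """Return True if the certificate expires within the renewal window."""
    return not_after - now <= renew_before


expiry = datetime(2021, 6, 30, tzinfo=timezone.utc)
print(needs_renewal(expiry, now=datetime(2021, 5, 1, tzinfo=timezone.utc)))
print(needs_renewal(expiry, now=datetime(2021, 6, 15, tzinfo=timezone.utc)))
```

An ACME client that runs a check like this regularly requests a fresh certificate well before the old one lapses, which is the part admins otherwise have to remember by hand.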

A robust reverse proxy and load balancer that can be controlled from within Kubernetes may be considered valuable assets per se, but the world has become more complex in recent years, especially in the container context, and microservices play a crucial role in ensuring that plain vanilla reverse proxies and load balancers are no longer all you need. Microservices make an application highly flexible on the one hand, but difficult to operate on the other. Depending on the load and its own configuration, Kubernetes launches an arbitrary number of instances of certain services or causes them to disappear ad hoc into a black hole (e.g., if the load is light at the moment and the resources are needed to handle other tasks).

Therefore, it is enormously difficult, and in practice impossible, to keep track of all the paths for inter-instance communication or to configure those paths. Hand-coding all instances of service A to talk to all known instances of service B is not feasible with any reasonable amount of effort. On top of that, most components of microarchitecture applications today communicate with each other by REST or gRPC, which are based on HTTP at their core or at least make heavy use of it. However, HTTP does not provide for specifying multiple possible destinations for a connection.

Meshes as Brokers

On the application level, communication between the different components of an application can hardly be implemented in a meaningful way. In the wake of Kubernetes and its ilk, mesh solutions have therefore enjoyed great popularity for some time. They interpose themselves between the instances of all services of a microservices application and act as a kind of broker. They automatically register outgoing and incoming instances of the individual services, forward new connections to them, and thus establish an end-to-end communication network.
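The broker role can be sketched as a small registry: instances announce themselves when they start, are removed when they disappear, and callers ask for a service by name instead of an address. The class and the addresses below are hypothetical and only illustrate the principle, not the internals of any particular mesh.

```python
from collections import defaultdict
from typing import Dict, List


class MeshRegistry:
    """Track the live instances of each service and resolve names to them."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = defaultdict(list)
        self._counters: Dict[str, int] = defaultdict(int)

    def register(self, service: str, address: str) -> None:
        """Add a newly started instance (ignored if already known)."""
        if address not in self._instances[service]:
            self._instances[service].append(address)

    def deregister(self, service: str, address: str) -> None:
        """Remove an instance that has been shut down."""
        if address in self._instances[service]:
            self._instances[service].remove(address)

    def resolve(self, service: str) -> str:
        """Return the next live instance of a service in rotation."""
        instances = self._instances.get(service)
        if not instances:
            raise LookupError(f"no live instance of {service!r}")
        index = self._counters[service] % len(instances)
        self._counters[service] += 1
        return instances[index]


registry = MeshRegistry()
registry.register("cart", "10.0.0.5:8080")
registry.register("cart", "10.0.0.6:8080")
print(registry.resolve("cart"))
```

The calling service only ever needs to know the name `cart`; which instance answers is the broker's decision.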

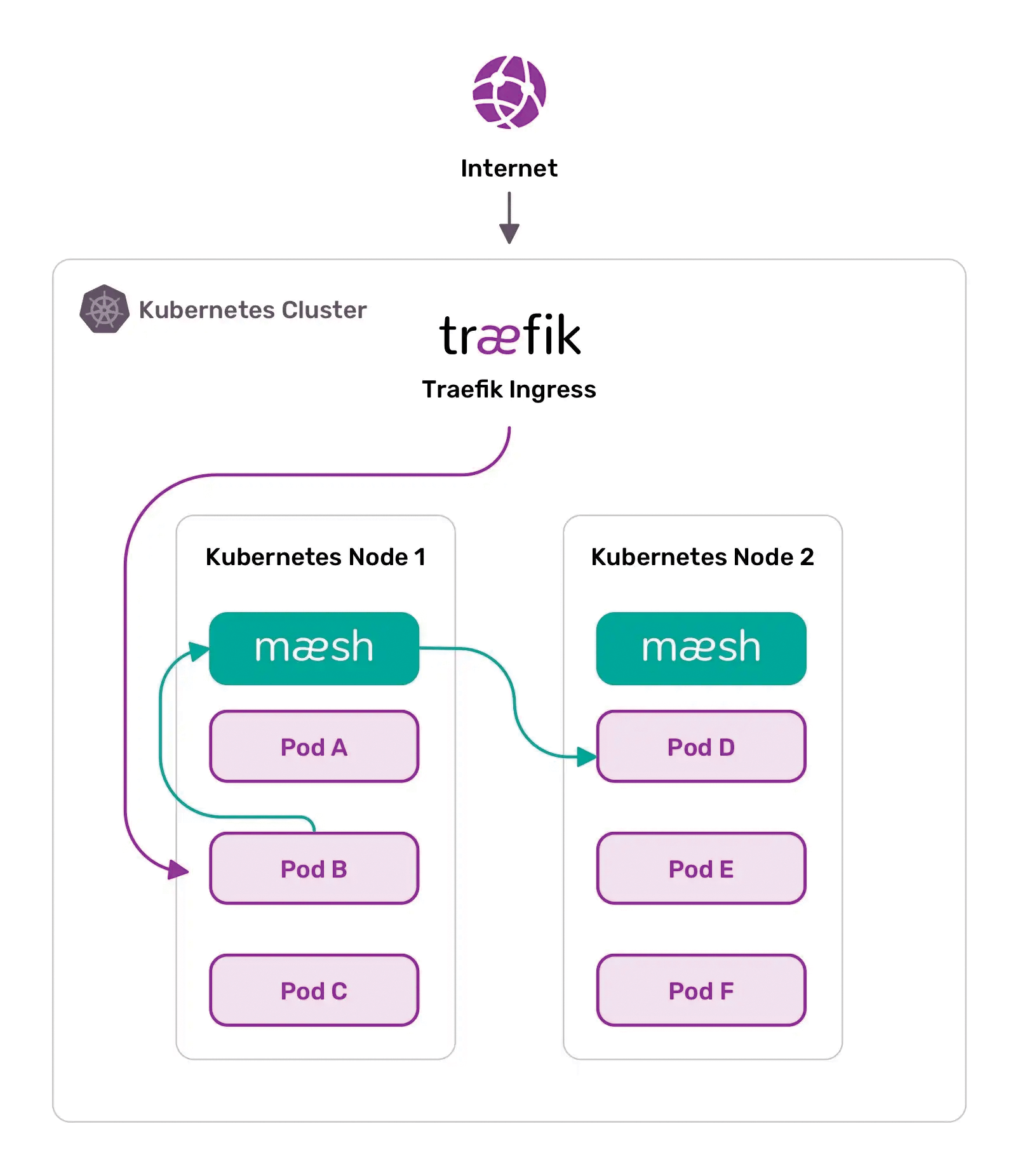

Not wanting to miss out on market developments, Traefik developers expanded the functionality of their solution and put together a product named Traefik Mesh (Figure 3). The core of Traefik Mesh is still Traefik Proxy, but the technical foundation of this solution is fundamentally different from the approach that some admins may be familiar with from Istio and others.

Resource-Intensive Sidecars

The architecture of solutions like Istio is often known as a sidecar architecture, because it sets up a proxy server alongside each service in an environment directly in the pod; therefore, it requires the proxy component to be part of the pod definition. For example, to use Istio in this way, the admin or developer has to make Istio part of a pod at the Kubernetes level.

In such a construct, the various instances of microservices no longer talk to each other directly; instead, each instance has its own proxy that can dynamically forward incoming connections to its own or another instance. Traefik calls this design "invasive" precisely because it requires every definition at the pod level to contain the components necessary for Istio.

This approach undoubtedly has technical advantages, such as comprehensive mutual transport layer security (mTLS) encryption between all instances and all apps. Nevertheless, Traefik fixes another problem: the resource consumption of the proxy servers that act as sidecars in the pod. Twenty sidecar instances of the proxy with 20 instances of different apps in a microservice environment logically cause significant overhead.

Without a Sidecar

Traefik Mesh works differently. Because the proxy was there from the beginning as a service with native Kubernetes support, extending it into a mesh was the obvious step. If you want to use Traefik as a mesh, you do not roll out one sidecar per application pod in Kubernetes, but one proxy per host. When the proxy is rolled out as a native Kubernetes resource, the admin has no direct influence on the number of instances per host anyway.
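A rough back-of-the-envelope comparison shows where the savings come from. In the sketch below, the number of hosts (four) and the memory footprint per proxy (50MiB) are purely hypothetical figures chosen for the arithmetic; only the 20 application instances are taken from the example in the text.

```python
def proxies_with_sidecars(app_instances: int) -> int:
    """Sidecar model: every application instance brings its own proxy."""
    return app_instances


def proxies_per_host(hosts: int) -> int:
    """Per-host model: one proxy on each host, regardless of the pod count."""
    return hosts


def proxy_memory_mib(proxies: int, mib_per_proxy: int) -> int:
    """Total memory consumed by all proxies."""
    return proxies * mib_per_proxy


APP_INSTANCES = 20   # from the example in the text
HOSTS = 4            # hypothetical cluster size
MIB_PER_PROXY = 50   # hypothetical footprint of one proxy

sidecar_total = proxy_memory_mib(proxies_with_sidecars(APP_INSTANCES), MIB_PER_PROXY)
per_host_total = proxy_memory_mib(proxies_per_host(HOSTS), MIB_PER_PROXY)
print(f"sidecars: {sidecar_total}MiB, per host: {per_host_total}MiB")
```

With these assumed numbers, the sidecar model runs 20 proxies and the per-host model four. The gap widens as more application instances are packed onto the same hosts.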

In practice, this setup leads to a changed communication matrix as soon as the developer of an application configures it to use the proxy server. Each application then communicates with other services in other pods and on other hosts through the centralized Traefik Proxy on each host. The overhead caused by multiple proxy servers per server is eliminated, as is the additional configuration overhead caused by modified pod definitions. Another practical feature, especially for debugging purposes, is that the original communication paths to the individual apps are retained in the various pods. The address on which apps and users contact a service essentially decides whether or not communication is routed by way of Traefik Proxy.
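How the address decides the path can be sketched with two naming schemes. The code assumes that services are reached through the mesh under a name ending in `traefik.mesh` and directly under the usual Kubernetes cluster domain `svc.cluster.local`. Both suffixes are assumptions that should be checked against the Traefik Mesh documentation and the local cluster configuration, and the helper names are hypothetical.

```python
# Assumption: suffix under which Traefik Mesh exposes services.
MESH_SUFFIX = "traefik.mesh"
# Assumption: default Kubernetes cluster domain for direct access.
CLUSTER_SUFFIX = "svc.cluster.local"


def service_address(service: str, namespace: str, via_mesh: bool) -> str:
    """Build the hostname a client uses to reach a service."""
    suffix = MESH_SUFFIX if via_mesh else CLUSTER_SUFFIX
    return f"{service}.{namespace}.{suffix}"


def goes_through_mesh(hostname: str) -> bool:
    """Return True if a hostname would be routed by way of the mesh proxy."""
    return hostname.endswith("." + MESH_SUFFIX)


direct = service_address("cart", "shop", via_mesh=False)
meshed = service_address("cart", "shop", via_mesh=True)
print(direct, goes_through_mesh(direct))
print(meshed, goes_through_mesh(meshed))
```

Because the direct name keeps working, a developer can compare the behavior of both paths when debugging without touching the pod definition.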

In Traefik, the developers' attention to detail is noticeable in many places. You will regularly find admins and developers cursing because it is difficult to search for or even find errors in dynamic, interwoven mesh environments. In the meantime, therefore, a separate class of tools has established itself on the market to facilitate this task: tracing tools such as Jaeger.

However, for Jaeger to work as a sniffer dog, it needs a communication interface to plug into the ongoing exchange between all the components. Traefik Proxy provides such an interface, making debugging much easier for developers. Additionally, Traefik Proxy integrates easily with a range of monitoring applications focused on modern infrastructure. Interfaces for Prometheus (Figure 4) or InfluxDB are also available out of the box. Even for the open source version of Traefik, this results in comprehensive monitoring capability along with the admin's good feeling of knowing what is going on in their environment.

![Traefik Proxy provides interfaces for metrics data to various solutions such as Prometheus or InfluxDB, where the data can then be displayed graphically in Grafana [4]. © Nick Babcock](images/b04_traefik_4.png)

Mesh environments also benefit from the other features available in Traefik Proxy, including dynamic detection of new instances of individual services, instances that have been dropped in the meantime, and configurable rate limiting so as not to overuse individual instances of individual services.
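Rate limiting of this kind is commonly built on a token bucket: Each request consumes a token, tokens are refilled at a steady rate, and a burst size caps how many can accumulate. The class below is a generic sketch of that algorithm with hypothetical parameter names; it is not taken from Traefik's code. Time is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int, now: float = 0.0) -> None:
        self.rate = rate            # tokens added per second
        self.burst = burst          # maximum number of stored tokens
        self.tokens = float(burst)  # the bucket starts full
        self.last = now             # time of the previous refill

    def allow(self, now: float) -> bool:
        """Refill according to elapsed time, then try to consume one token."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(float(self.burst), self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(rate=1.0, burst=2)
for t in (0.0, 0.0, 0.0, 1.0):
    print(t, bucket.allow(t))
```

A proxy that keeps one such bucket per service instance can reject or delay excess requests before they reach an instance that is already busy.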

Traefik Pilot

Traefik turns out to be a proxy that can be used in a mesh context, with the versatile solution significantly reducing complexity (e.g., compared with Istio). Nevertheless, the developers attach great importance to keeping the construct transparent and understandable for the admin. Even without additional components like Jaeger, admins and developers always need to be aware of the state of Traefik.

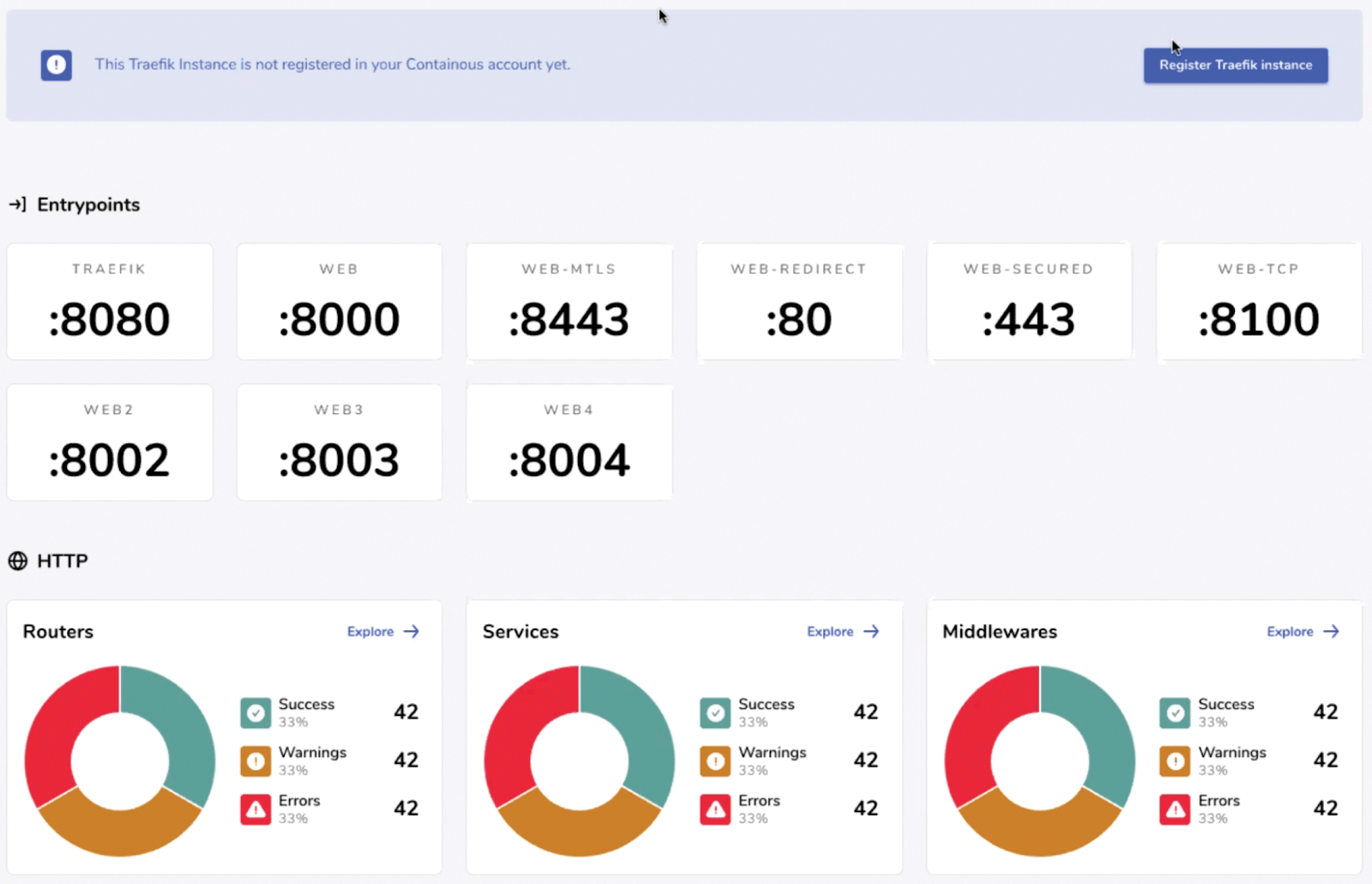

For this purpose, the developers launched Traefik Pilot (Figure 5): in essence, a GUI for monitoring and supervising Traefik. It uses its interfaces for data acquisition, draws monitoring and trending information from the acquired metrics data, and visualizes the results. Moreover, Traefik instances can be controlled directly in Pilot (e.g., to use Traefik's plugin interface). Traefik Pilot lets you extend Traefik with additional features that are not included by the vendor.

Traefik Enterprise

Finally, I'll look at the commercial version of Traefik, Traefik Enterprise, which is aimed at enterprises that want to use Traefik on a large scale and for whom the feature set of the open source variant is not sufficient.

For developers, this is always like riding on a cannonball: On the one hand, many companies from the container environment attach importance to offering open source software and belonging to the open source community. On the other hand, increasing numbers of solutions are becoming established on the market in which even very basic functions are only available in the commercial version. Traefik manages to find a sensible middle ground. Traefik Proxy itself as well as the mesh solution based on it are open source software and can be used without a bill from Traefik. The situation is different with Traefik Pilot, which is only available for cash and not as an open source application.

For some features that Traefik reserves exclusively for the Enterprise variant, it can also be argued that they are actually necessary for regular operation in the year 2021. If you want to connect Traefik to any form of external identity data management (e.g., LDAP or OAuth 2), you will need the commercial version of the software. The factory-installed functions for backup and restore as well as a compatibility interface to conventional environments are also reserved for the commercial variant. However, at least backups can be handled in some other way.

All in all, Traefik does not yet fall into the category of open source pretender. However, the manufacturer follows the bad habit of not clearly naming horse and rider in terms of pricing on its website. What Traefik charges enterprise customers depends on the number of proxies and the level of critical infrastructure – in other words, how subjectively important the particular proxy is to the admin, according to the vendor's statement.

Conclusions

Reverse proxies and load balancers face completely different challenges in container environments than in conventional setups. On the one hand, the dynamics with which existing services disappear and new instances of applications spring up play a major role. On the other, it is of great importance that the connections between all these instances turn out to be far more flexible than was the case in previous environments. The container circus even invented its own software genre for this – mesh networks for Kubernetes.

Traefik turns out to be an exciting alternative to Istio, Linkerd, and the like, because it achieves very similar effects with far less technical effort and considerably lower overhead. Although a few features fall by the wayside, Traefik is well worth a look for admins of average container solutions.

In the open source version, the feature set of the solution is quite sufficient for everyday tasks, even if you as a developer or administrator would certainly want some of the features from the enterprise edition. However, Traefik gets full marks for the most important points: Traefik resources can be easily managed from within Kubernetes and are true first-class citizens. If you don't like Istio and the sidecar principle, you will probably find a good and efficient alternative in Traefik.