HTTP/1.1 versus HTTP/2 and HTTP/3

Turboboost

The Hypertext Transfer Protocol (HTTP) no longer serves only as the basis for calling up web pages; it is also the basis for a wide range of applications. The best known version, which is still used on most servers, is HTTP/1.1, even though HTTP/3 has already been defined. In this article, I look at the history of the protocol and the practical differences between versions 1.1, 2, and 3.

The foundation for today's Internet was laid by British scientist Tim Berners-Lee in 1989 while working at CERN in Geneva, Switzerland. The web was originally conceived and developed to meet the demand for an automated exchange of information between scientists at universities and institutes around the world. As a starting point, scientists relied on hypertext, which refers to text with a web-like, dynamic structure. It differs from the typical linear text found in books in that it is not written so that readers consume it from beginning to end in the published order. Especially in scientific works, authors work with references and footnotes that point and link to other text passages.

Technically, hypertext is written in what is known as a markup language, which in addition to design instructions invisible to the viewer also contains hyperlinks (i.e., cross-references to further text passages and other documents in the network). Hypertext Markup Language (HTML) has become established on the Internet.

Starting with hypertext, scientists created HTTP, which is now used countless times every day. The goal was to exchange hypertext written in HTML with other computers, for which a generally valid protocol was required. The web as we know it today rests on four building blocks:

- The text format for displaying hypertext documents (HTML)

- Software that displays HTML documents (the web browser)

- A simple protocol for exchanging HTML documents (HTTP)

- A server that grants access to the document (the HTTP daemon, HTTPD)

Single-Line Protocol HTTP/0.9

The original version of HTTP had no version number. To distinguish it from later versions, it was subsequently referred to as 0.9. HTTP/0.9 is quite simple: Each request is a single line and starts with the only possible method, GET, followed by the path to the resource (not the URL, because naming the protocol, the server, and the port is not required):

GET /it-administrator.html

The response to the GET command also was quite simple and contained only the content of the one file:

<HTML> IT Administrator -- practical knowledge for system and network administration </HTML>

This forerunner of today's protocols did not yet support HTTP headers, which means that only HTML files could be transmitted and no other document types. Status and error codes did not exist either. Instead, in the event of a problem, the server sent back an HTML file that it generated with a description of the error that occurred.
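The single-line exchange described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `handle_http09` and the in-memory `DOCUMENTS` store are hypothetical and stand in for a real server reading files from disk.

```python
def handle_http09(request_line: str, documents: dict[str, str]) -> str:
    """Answer a single-line HTTP/0.9-style request with a bare HTML body."""
    parts = request_line.strip().split()
    # HTTP/0.9 knows only one method and no version token: "GET <path>"
    if len(parts) != 2 or parts[0] != "GET":
        return "<HTML>Error: malformed request</HTML>"
    path = parts[1]
    if path not in documents:
        # No status codes exist, so the error is itself an HTML document
        return f"<HTML>Error: {path} not found</HTML>"
    return documents[path]


# Hypothetical document store standing in for files on disk
DOCUMENTS = {
    "/it-administrator.html": "<HTML> IT Administrator </HTML>",
}

print(handle_http09("GET /it-administrator.html", DOCUMENTS))
print(handle_http09("GET /missing.html", DOCUMENTS))
```

Note that the client can only tell success from failure by reading the returned HTML, which is exactly the limitation that status codes later removed.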

First Standard: HTTP/1.1

In 1996, a consortium started working on the standardization of HTTP in version 1.1 and published the first standard in early 1997 (RFC 2068), which can still be read in today's extended version from June 1999 (RFC 2616) [1]. In the meantime, the Internet Engineering Task Force (IETF) had also taken over centralized work on the definition of HTTP. HTTP/1.1 cleared up ambiguities and introduced numerous improvements:

- A connection can be reused, which saves time when further resources are loaded.

- Pipelining allows a second request to be sent, even before the server has fully transmitted the response for the first, which can reduce latency in communication.

- Chunked transfer encoding is the transfer of data with additional information that allows the client to determine whether the information it receives is complete.

- Additional cache control mechanisms were added.

- For the sender and receiver to achieve the best compatibility, they perform content negotiation, in which the client and server agree on properties such as language, encoding, and content type to establish the best basis for communication.

- The Host header introduced here is groundbreaking and still indispensable today, for the first time allowing a server to serve different domains under the same IP address.
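The chunked transfer encoding from the list above can be illustrated with a minimal sketch. It assumes the basic wire format only (hexadecimal length, CRLF, data, CRLF, and a terminating zero-length chunk) and ignores chunk extensions and trailers; the function names are hypothetical.

```python
def encode_chunked(parts: list[bytes]) -> bytes:
    """Frame each part as <hex length>CRLF<data>CRLF and end with a zero-length chunk."""
    out = bytearray()
    for part in parts:
        if not part:
            continue  # a zero-length chunk would signal the end prematurely
        out += f"{len(part):x}\r\n".encode("ascii") + part + b"\r\n"
    out += b"0\r\n\r\n"  # terminating chunk: tells the client the body is complete
    return bytes(out)


def decode_chunked(data: bytes) -> bytes:
    """Reassemble the body; raise ValueError if the terminating chunk is missing."""
    body = bytearray()
    pos = 0
    while True:
        line_end = data.find(b"\r\n", pos)
        if line_end == -1:
            raise ValueError("incomplete chunked body")
        size = int(data[pos:line_end].decode("ascii"), 16)
        if size == 0:
            return bytes(body)  # zero-length chunk reached: body is complete
        start = line_end + 2
        chunk = data[start:start + size]
        if len(chunk) < size:
            raise ValueError("incomplete chunked body")
        body += chunk
        pos = start + size + 2  # skip the CRLF after the chunk data


wire = encode_chunked([b"Hello, ", b"world"])
print(wire)
print(decode_chunked(wire))
```

Because the receiver only accepts the body once it has seen the zero-length chunk, a connection that breaks off early is detected instead of being mistaken for a complete response.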

The host header is absolutely crucial for today's Internet. Without this definition, set out in RFC 7230 section 5.4 [2], it would be impossible today to provide a large number of Internet sites on a single server. Each Internet site would need its own HTTP daemon and its own server. For a server to manage multiple domains under the same IP address, HTTP/1.1 makes it mandatory to send the desired host with the GET request, as well. For example, a GET request for http://www.it-administrator.de/pub/WWW/ would be:

GET /pub/WWW/ HTTP/1.1
Host: www.it-administrator.de

If this header is missing, the server must respond with the status 400 Bad Request by definition. To open the page https://www.it-administrator.de/trainings/, the browser sends the header, as shown in Listing 1. The server first responds with a status 200, which signals to the client that its request is being processed without errors, and then adds further information (Listing 2) followed by the content of the requested page. When requesting an image, the request header looks slightly different (Listing 3), and the server responds as in Listing 4, followed by the image with the previously announced size of 32,768 bytes.
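How a server might use the Host header to select one of several sites on the same IP address can be sketched as follows. The `VHOSTS` table, the document roots, and the fallback to a default site are assumptions made for illustration; real servers such as Apache implement this through their virtual host configuration.

```python
def route_request(raw_request: str, vhosts: dict[str, str]) -> tuple[int, str]:
    """Return (status code, document root) for a raw HTTP/1.1 request head."""
    lines = raw_request.split("\r\n")
    headers = {}
    for line in lines[1:]:
        if not line:
            break  # blank line ends the header section
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    host = headers.get("host")
    if host is None:
        return 400, ""  # HTTP/1.1 request without Host header: Bad Request
    # Unknown names fall back to a default site (an assumption of this sketch)
    return 200, vhosts.get(host.lower(), vhosts["default"])


# Hypothetical sites sharing one IP address
VHOSTS = {
    "www.it-administrator.de": "/var/www/ita",
    "www.example.org": "/var/www/example",
    "default": "/var/www/default",
}

print(route_request("GET /pub/WWW/ HTTP/1.1\r\nHost: www.it-administrator.de\r\n\r\n", VHOSTS))
print(route_request("GET /pub/WWW/ HTTP/1.1\r\n\r\n", VHOSTS))
```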

Listing 1: Opening a Page

GET /trainings HTTP/1.1
Host: www.it-administrator.de
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8
Accept-Language: de,en-US;q=0.7,en;q=0.3
Accept-Encoding: gzip, deflate, br
Referer: https://www.it-administrator.de/trainings/

Listing 2: Adding Chunked Data

200 OK
Connection: Keep-Alive
Content-Encoding: gzip
Content-Type: text/html; charset=utf-8
Date: Wed, 10 Jul 2020 10:55:30 GMT
Etag: "547fa7e369ef56031dd3bff2ace9fc0832eb251a"
Keep-Alive: timeout=5, max=1000
Last-Modified: Tue, 19 Jul 2020 11:59:33 GMT
Server: Apache
Transfer-Encoding: chunked
Vary: Cookie, Accept-Encoding

Listing 3: Host Header

GET /img/intern/ita-header-2020-1.png HTTP/1.1
Host: www.it-administrator.de
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0
Accept: */*
Accept-Language: de,en-US;q=0.7,en;q=0.3
Accept-Encoding: gzip, deflate, br
Referer: https://www.it-administrator.de/trainings/

Listing 4: Server Response

200 OK
Age: 9578461
Cache-Control: public, max-age=315360000
Connection: keep-alive
Content-Length: 32768
Content-Type: image/png
Date: Wed, 8 Jul 2020 10:55:34 GMT
Last-Modified: Tue, 19 Jul 2020 11:55:18 GMT
Server: Apache

In contrast to the text/html content type, the browser receives two additional pieces of information: Age tells the client how long the object has been in the proxy cache, and Cache-Control tells all the caching mechanisms whether and how long the object may be stored. Armed with this information, servers can ensure that files such as images, which usually do not change, are loaded from the local cache of the browser and not retrieved from the server.
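How a cache could combine the two headers can be shown with a small calculation. The sketch below is deliberately simplified: It only evaluates `max-age` and `Age` and ignores other directives such as `no-store` or `s-maxage`; the function name is hypothetical, and the values are taken from Listing 4.

```python
from typing import Optional


def remaining_freshness(headers: dict[str, str]) -> Optional[int]:
    """Seconds a cached response may still be reused, or None if no max-age is given."""
    max_age = None
    for directive in headers.get("Cache-Control", "").split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            max_age = int(value)
    if max_age is None:
        return None
    age = int(headers.get("Age", "0"))  # time already spent in a shared cache
    return max(max_age - age, 0)


# Header values from Listing 4
image_headers = {"Age": "9578461", "Cache-Control": "public, max-age=315360000"}
print(remaining_freshness(image_headers))  # 305781539
```

With a lifetime of 315,360,000 seconds (about 10 years) and roughly 110 days already spent in the cache, the image stays reusable for years without another request to the server.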

Faster Transmission with HTTP/2

Thanks to the flexibility and extensibility of HTTP/1.1 and the fact that the IETF extended the protocol with two revisions (RFC 2616 was published in June 1999 and RFCs 7230-7235 in June 2014), it took 16 years until the IETF presented the new standard HTTP/2 to the public as RFC 7540 [3] in May 2015.

The main purpose of HTTP/2 was to speed up the Internet and the transmission of data. Compared with the early days, web pages have become complex and are no longer pure representations of information, but interactive applications. The number of files required per page and their size have increased. As a result, significantly more HTTP requests are necessary. On an HTTP/1.1 connection, requests must be sent and answered in the correct order. Even though several parallel connections can be opened, managing the requests and responses remains considerably complex.

Google and Microsoft

As early as the beginning of the 2010s, Google presented an alternative method for exchanging data between client and server with the SPDY protocol, which aimed to improve responsiveness and overcome limits on the amount of data to be transferred. In addition to SPDY, Microsoft's HTTP Speed+Mobility proposal also served as the basis for today's HTTP/2 protocol.

One major change from HTTP/1.1 is that HTTP/2 is a binary protocol rather than a text-based one. Therefore, HTTP/2 messages can no longer be read or created manually. The protocol also compresses headers: Because the headers are similar for most requests, it avoids transmitting the same data repeatedly. Furthermore, HTTP/2 allows the server to send data to the client cache in advance, before the client requests it. For example, assume an index.html file references the style.css and script.js files. When the browser connects to the server, the server first delivers the content of the HTML file. The browser analyzes the content and finds the two instructions in the code to load the style.css and script.js files.

Only now can the browser request the files. With HTTP/2, it is possible for web developers to set rules so that the server takes the initiative and delivers the two files (style.css and script.js) directly with index.html, without waiting for the client to request them first. Therefore, the files are already in the browser cache when index.html is parsed, eliminating the wait time that requesting the files would otherwise entail. This mechanism is known as server push.
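The discovery step that costs the browser an extra round trip can be mimicked with Python's standard `html.parser` module. The sketch below is an illustration only: The class name, the function name, and the sample markup are assumptions, and real browsers discover many more resource types than stylesheets and scripts.

```python
from html.parser import HTMLParser


class SubresourceFinder(HTMLParser):
    """Collect stylesheet and script URLs referenced by an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.resources: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link" and attributes.get("rel") == "stylesheet" and attributes.get("href"):
            self.resources.append(attributes["href"])
        elif tag == "script" and attributes.get("src"):
            self.resources.append(attributes["src"])


def find_subresources(html: str) -> list[str]:
    """Return the referenced files in document order."""
    finder = SubresourceFinder()
    finder.feed(html)
    return finder.resources


# Hypothetical index.html matching the example in the text
INDEX_HTML = """<html><head>
<link rel="stylesheet" href="style.css">
<script src="script.js"></script>
</head><body>Hello</body></html>"""

print(find_subresources(INDEX_HTML))  # ['style.css', 'script.js']
```

A list produced this way could serve as a starting point for deciding which files are worth pushing together with index.html.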

Server Push with Apache and Nginx

Because a server cannot know which files depend on which others, it is up to the web developers or administrators to set up these relationships manually. On Apache 2, the instruction can be stored in httpd.conf or .htaccess. For example, to push a CSS file whenever the browser requests an HTML file, the instruction for the httpd.conf or .htaccess file is:

<FilesMatch "\.html$">
    Header set Link "</css/styles.css>; rel=preload; as=style"
</FilesMatch>

To add multiple files, you can use add Link instead of set Link:

<FilesMatch "\.html$">
    Header add Link "</css/styles.css>; rel=preload; as=style"
    Header add Link "</js/scripts.js>; rel=preload; as=script"
</FilesMatch>

Here, you could also imitate pushing files from foreign sites by adding crossorigin to the tag:

<FilesMatch "\.html$">
    Header set Link "<https://www.googletagservices.com/tag/js/gpt.js>; rel=preload; as=script; crossorigin"
</FilesMatch>

If you cannot or do not want to change the Apache files, you could still imitate pushing files with the header function of PHP or other server-side scripting languages:

header("Link: </css/styles.css>; rel=preload; as=style");

To push multiple resources, you separate each push directive with a comma:

header("Link: </css/styles.css>; rel=preload;as=style, </js/scripts.js>;rel=preload;as=script, </img/logo.png>;rel=preload; as=image");
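For applications written in Python rather than PHP, the same comma-separated header value could be assembled with a small helper. This is a sketch under assumptions: The function name `build_preload_link` is hypothetical, and how the resulting header is attached to a response depends on the web framework in use.

```python
def build_preload_link(resources: list[tuple[str, str]]) -> str:
    """Join (path, type) pairs into one comma-separated Link header value."""
    return ", ".join(f"<{path}>; rel=preload; as={kind}" for path, kind in resources)


link_value = build_preload_link([
    ("/css/styles.css", "style"),
    ("/js/scripts.js", "script"),
    ("/img/logo.png", "image"),
])
print(f"Link: {link_value}")
```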

You can also combine this directive with other rules included with the Link tag. For example, simultaneously issuing a push directive with a preconnect hint,

header("Link: </css/styles.css>; rel=preload; as=style,<https://fonts.gstatic.com>;rel=preconnect");

tells the server to push the styles.css file to the client and tells the browser to establish a connection to fonts.gstatic.com early. Because Nginx does not process .htaccess files or the like, the instruction can be implemented either with PHP, as described earlier, or directly in the server configuration by extending the location block with the http2_push directive (Listing 5).

Listing 5: location Block

location / {
    root /usr/share/nginx/html;
    index index.html index.htm;
    http2_push /style.css;
    http2_push /logo.png;
}

Many Small Files vs. One Big File

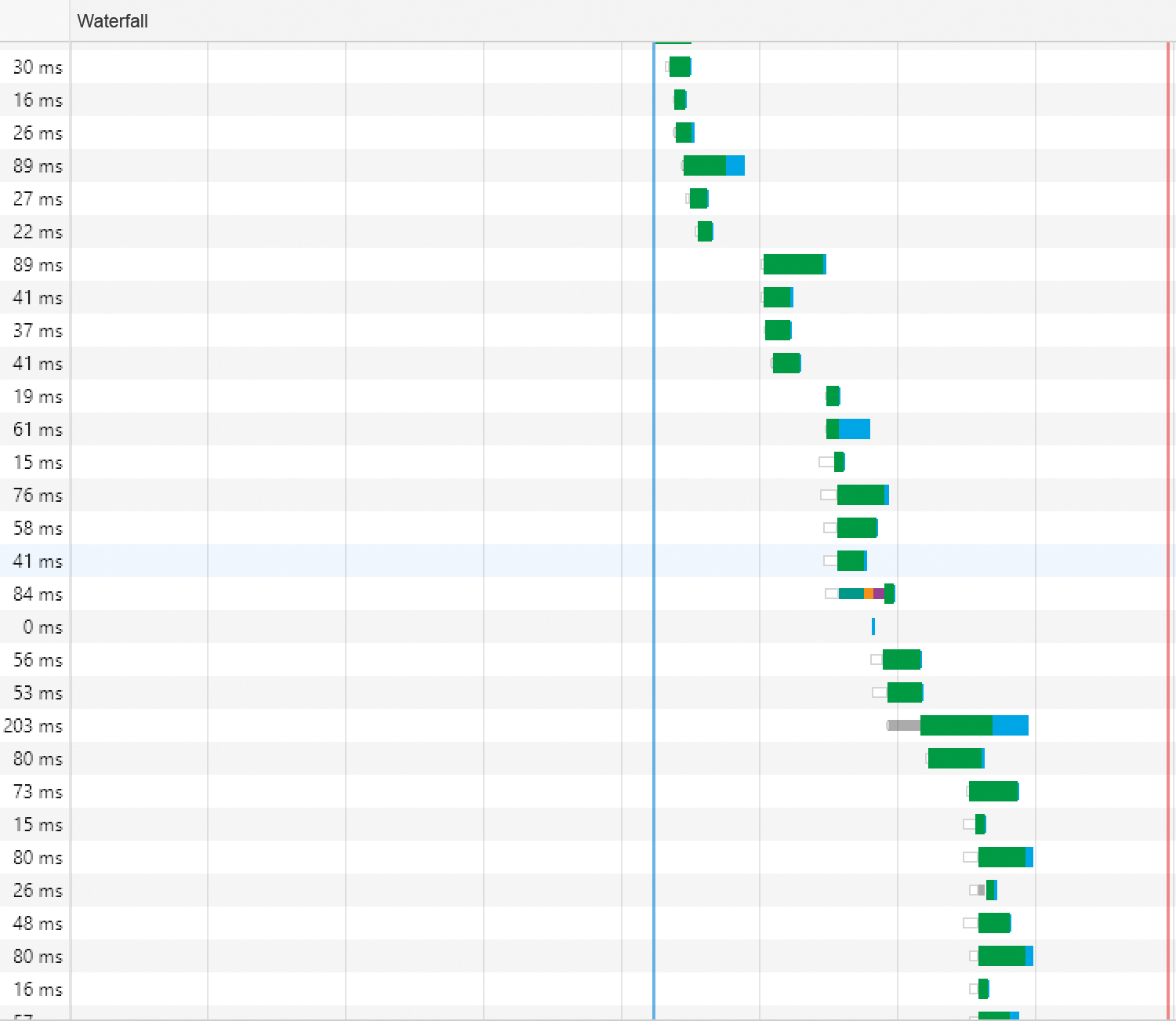

Over the years, HTTP/1.1 has shown that HTTP pipelining places a heavy load on a server's resources. From a purely technical point of view, this means that the browser puts the requests for the required files into a pipeline, and the server processes them one by one (Figure 1). For web developers, this previously meant that their goal had to be to reload as few individual files as possible. Therefore, combining individual CSS or JavaScript files, for example, has proven to be very helpful. Loading a single 80KB CSS file is faster than loading eight 10KB files, because each request for a new file again takes time to establish the TCP connection.

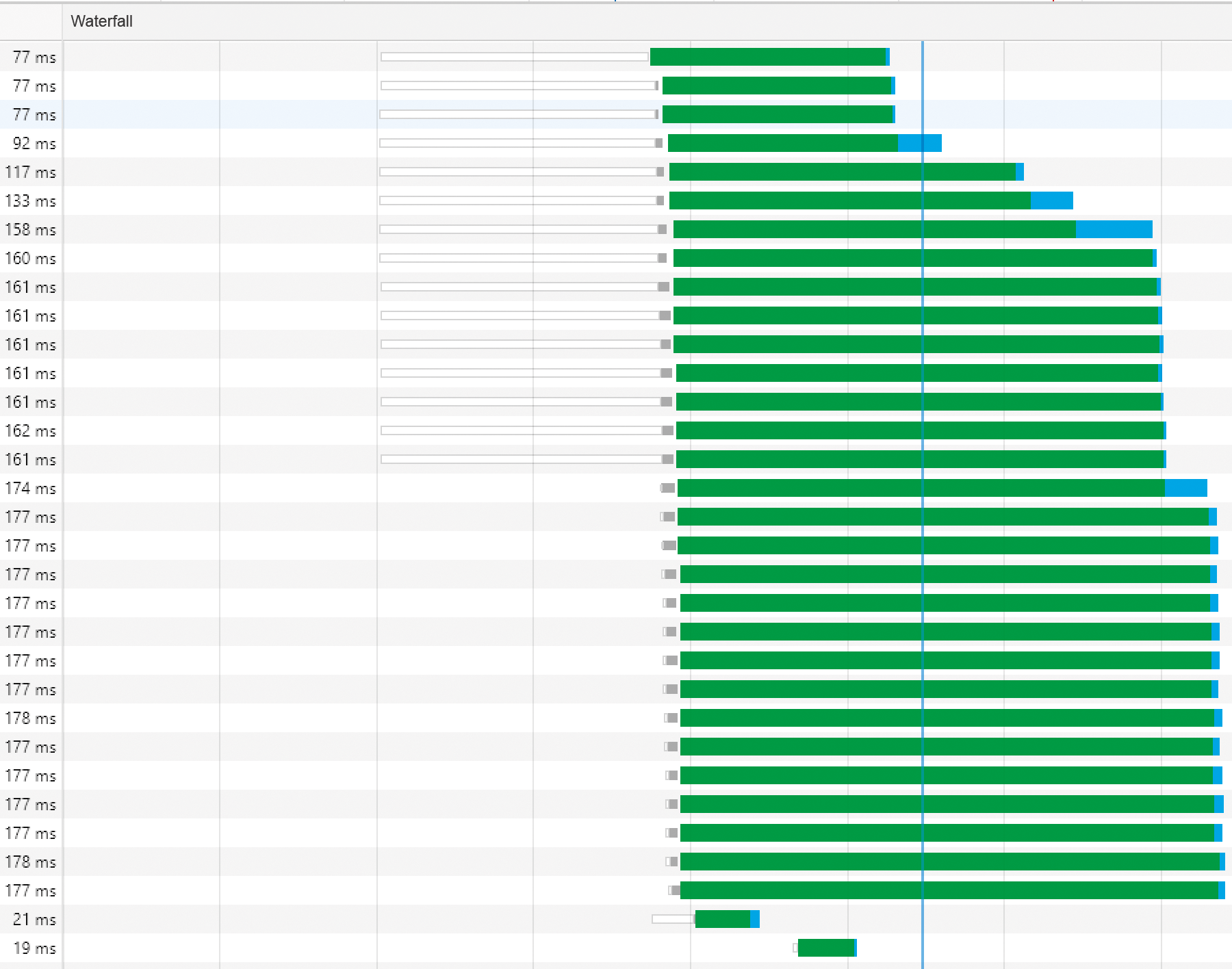

Not all files can be combined and downloaded as a single file, especially images, so HTTP/2 has become a multiplex protocol. One of the two core changes is that a client opens only one connection to the server, over which all files can be transferred as separate streams, without opening a new connection for each new request. The existing TCP connections are thus more durable, which in turn means they can achieve full data transfer speeds more often.

The second change is that this adaptation allows the server to send files in parallel rather than serially. Instead of one file at a time, the server now delivers many files at once (Figure 2). As a result, the previously mentioned rule of combining the eight CSS files no longer applies. On the contrary, applying this rule slows down the website unnecessarily, because ideally the transfer time for all files together is the same as the transfer time for the largest file. For example, if transferring an 80KB file takes 347ms, transferring eight 10KB files takes only about 48ms, because the server delivers them at the same time. With HTTP/2, browsers typically use only one TCP connection to each host, instead of the up to six connections with HTTP/1.1.
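The idea of sending several files over one connection at the same time can be pictured as cutting each file into frames and interleaving them. The following sketch assumes a plain round-robin order and hypothetical function names; real HTTP/2 implementations additionally apply prioritization and flow control.

```python
def multiplex(streams: dict[int, bytes], frame_size: int) -> list[tuple[int, bytes]]:
    """Cut each stream into frames and interleave them round-robin on one connection."""
    offsets = {stream_id: 0 for stream_id in streams}
    frames = []
    while offsets:
        for stream_id in list(offsets):
            start = offsets[stream_id]
            frames.append((stream_id, streams[stream_id][start:start + frame_size]))
            offsets[stream_id] = start + frame_size
            if offsets[stream_id] >= len(streams[stream_id]):
                del offsets[stream_id]  # this stream has been sent completely
    return frames


def demultiplex(frames: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Reassemble the original data per stream from the interleaved frames."""
    result: dict[int, bytes] = {}
    for stream_id, payload in frames:
        result[stream_id] = result.get(stream_id, b"") + payload
    return result


# Three hypothetical responses; client-initiated HTTP/2 streams use odd IDs
STREAMS = {1: b"AAAAAA", 3: b"BB", 5: b"CCCC"}
FRAMES = multiplex(STREAMS, frame_size=2)
print(FRAMES)
print(demultiplex(FRAMES) == STREAMS)
```

The small file on stream 3 is complete after the first round instead of waiting behind the larger file on stream 1, which is the effect the text describes.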

Activation Under Apache 2

On Apache 2, HTTP/2 is not enabled by default; you have to do this manually by installing http2_module and enabling it,

LoadModule http2_module modules/mod_http2.so

in the configuration file. The second directive to be entered is,

Protocols h2 h2c http/1.1

which makes HTTP/2 the preferred protocol if the client also supports that version; otherwise, the fallback is to HTTP/1.1.
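The preference-with-fallback behavior can be modeled in a few lines. This is a simplified model, not Apache's actual selection code: The function name is hypothetical, and it assumes the server simply picks the first entry of its own list that the client also offers.

```python
from typing import Optional


def select_protocol(server_preferences: list[str], client_offers: list[str]) -> Optional[str]:
    """Pick the first protocol in the server's order that the client also supports."""
    for protocol in server_preferences:
        if protocol in client_offers:
            return protocol
    return None  # no common protocol


SERVER_PROTOCOLS = ["h2", "h2c", "http/1.1"]  # order from the Protocols directive

print(select_protocol(SERVER_PROTOCOLS, ["h2", "http/1.1"]))  # modern browser over TLS
print(select_protocol(SERVER_PROTOCOLS, ["http/1.1"]))        # older client
```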

Backward Compatibility

According to statistics from w3techs.com [4], a good year after the HTTP/2 specification was published, less than 10 percent of the websites at the time were using the new protocol. More importantly, however, these sites handled about two thirds of all page views. This rapid acceptance was helped by the fact that HTTP/2 is fully backward compatible, so a change in technology does not initially require any adjustments to websites and applications.

Only if a company wants to leverage HTTP/2 fully with its website or application do the web developers have to step in and break down the individual files to the extent possible, as described earlier, and define the server push rules.

From TCP and TLS to QUIC

As mentioned earlier, the main focus of the change from HTTP/1.1 to HTTP/2 was multiplexing and the resulting superior bandwidth utilization. However, if a packet is lost during transmission, the entire TCP connection stalls until the sender has retransmitted the packet and it has reached the receiver. As a result, depending on the loss rate, HTTP/2 can be slower than HTTP/1.1: Because all data is sent over a single TCP connection, every transfer is affected by the packet loss.
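The effect of a single lost packet can be made visible with a simplified model. In the sketch below, each packet is a tuple of connection sequence number, file name, and position within that file; all names and values are hypothetical. The first function delivers data strictly in connection order, as TCP does, whereas the second orders data per stream only, which is the approach taken by the UDP-based protocol described next.

```python
from collections import defaultdict

# (connection sequence number, stream name, position within the stream)
Packet = tuple[int, str, int]


def tcp_like_delivery(arrived: list[Packet]) -> list[Packet]:
    """Deliver packets only up to the first gap in the connection-wide sequence."""
    by_seq = {packet[0]: packet for packet in arrived}
    delivered, next_seq = [], 0
    while next_seq in by_seq:
        delivered.append(by_seq[next_seq])
        next_seq += 1
    return delivered


def per_stream_delivery(arrived: list[Packet]) -> list[Packet]:
    """Deliver each stream up to its own first gap, independent of other streams."""
    by_stream: dict[str, dict[int, Packet]] = defaultdict(dict)
    for packet in arrived:
        by_stream[packet[1]][packet[2]] = packet
    delivered = []
    for parts in by_stream.values():
        position = 0
        while position in parts:
            delivered.append(parts[position])
            position += 1
    return delivered


# Packet 1 (second part of style.css) was lost on the way
ARRIVED = [(0, "style.css", 0), (2, "script.js", 0), (3, "logo.png", 0)]

print(tcp_like_delivery(ARRIVED))
print(per_stream_delivery(ARRIVED))
```

With connection-wide ordering, script.js and logo.png are held back even though their data has arrived in full; with per-stream ordering, only style.css has to wait for the retransmission.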

To solve this problem, a working group at Google specified the Quick UDP Internet Connections (QUIC) network protocol in July 2016, which removes the restrictions imposed by TCP and is based on UDP. One requirement is that data is always encrypted with TLS 1.3, which increases security. A split header creates further advantages. The first part is only used to establish the encrypted connection and does not contain any other data. Only when the connection is established does the system transmit the header to transfer the data. When a new connection is established to the same host, QUIC does not have to renegotiate the encryption but can start transmission directly over the secured connection. Another advantage is that QUIC is not limited to HTTP transmissions.

Because it is difficult to establish completely new protocols on the Internet, Google presented QUIC to the IETF. The latter has adopted QUIC as the basis for HTTP/3 and has been working on standardization since the beginning of 2017. The latest draft is from December 14, 2020 [5]. HTTP/3 completely breaks with TCP and will use QUIC in the future. For HTTP/3 to replace HTTP/2 in the future, web servers will need to support QUIC. Apache, still the most widely used HTTP daemon, has not yet made an announcement in this direction. Nginx unveiled a first version in June 2020 that is based on the IETF QUIC document. LiteSpeed is currently the only server that has fully implemented QUIC and, as a consequence, HTTP/3.

Long Path to the Browsers

It is not enough for servers to understand QUIC; the clients also need to support HTTP/3. Because QUIC originates from Google, it is no surprise that Chrome already supports QUIC. Firefox also started offering experimental support in version 72 according to the definition published by the IETF. Microsoft Edge, which is now based on the open source Chromium project, has started the implementation work, which is currently available through the Microsoft Edge Insider Canary Channel [6].

Conclusions

Today's standard for delivering web pages and applications is clearly HTTP/2. It solves the most serious problem of HTTP/1.1 – the HTTP head-of-line blocking – which required a client to wait until a request it had made was completed before it could make the next one. This bottleneck in turn caused massive delays, because today's web pages no longer comprise just a handful of files but load a huge volume of additional data. Administrators don't have to go to great lengths to get HTTP/2 up and running. Meanwhile, HTTP/3 is now in the starting blocks and brings with it the change from TCP to QUIC.