Keeping it simple: the Ansible automator

The Easiest Way

The Ansible IT automation tool makes it easy to introduce automation into your environment. In this article, I introduce Ansible, its basic capabilities, and how to use them, and I discuss which components in the Ansible ecosystem make an admin's life easier. Finally, I venture a look at Ansible's future under its current owner, Red Hat.

Early Steps

In 2014, the world of automation on Linux was very different from today. I had just started a job in Berlin that involved building an OpenStack platform. Puppet fans set the tone in the company, so the plan was to automate the OpenStack cloud with Puppet as well. However, OpenStack was far from its current level of maturity at the time, and the Puppet modules offered by the developers were more of a big mess than a solution that could be used in production. What this meant for the day-to-day development of the platform soon became clear in an extremely unpleasant way.

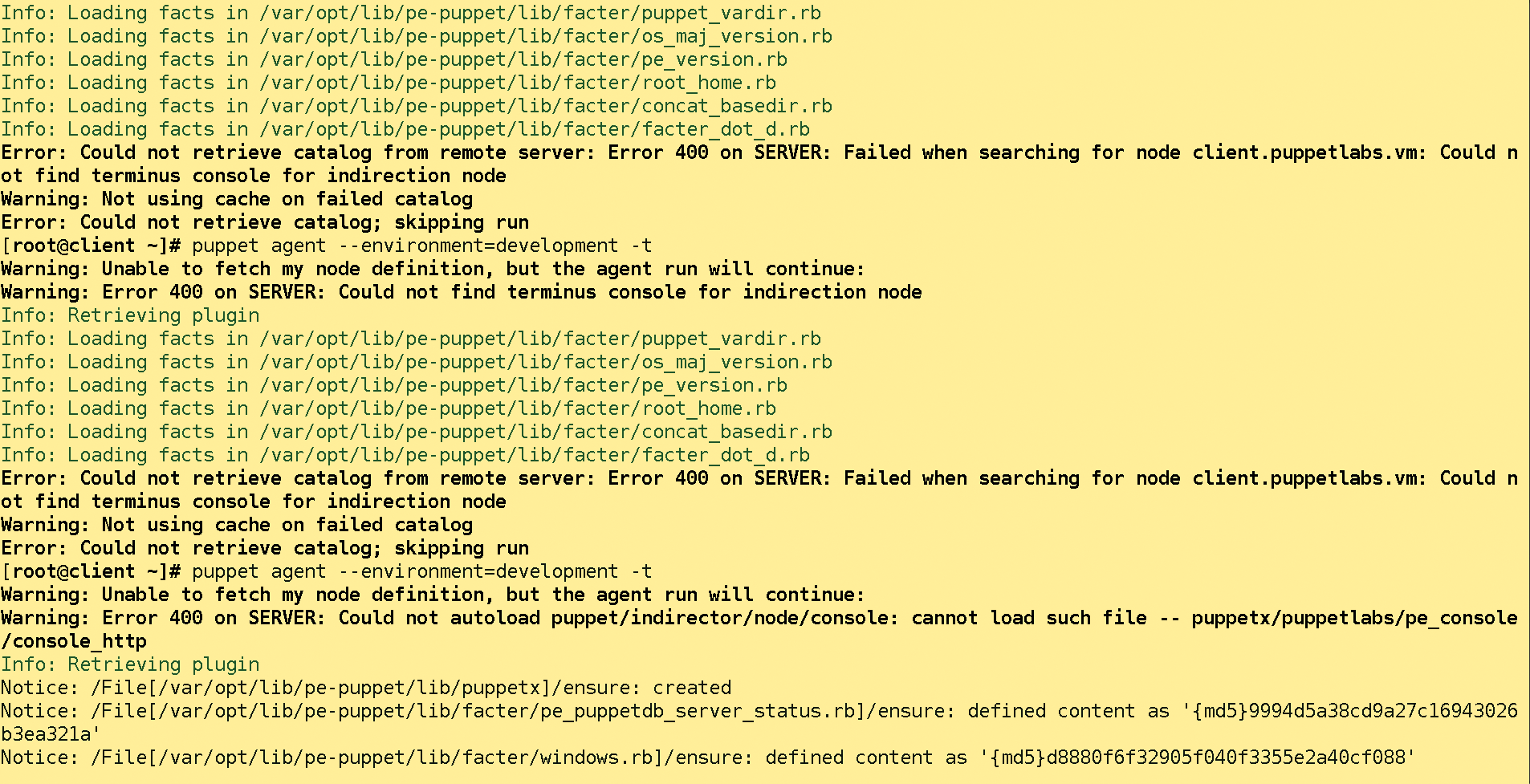

The way the OpenStack developers used Puppet was inefficient and slow. Several minutes could pass before Puppet even started to change anything on the systems. In the worst case, the process ended with an error that wasted an entire hour of Puppet runtime (Figure 1). Puppet offered little comfort, because the OpenStack developers did not have a handle on versioning their modules and allowed the individual modules to reference each other wildly.

Moreover, preparing configurations for services proved to be extremely tedious: Puppet obtains its configuration data from its built-in key-value component, Hiera. The developers endeavored to map every configurable OpenStack parameter in Puppet and thus also in Hiera. As a result, they sooner or later translated entire OpenStack configuration files from INI format to YAML for Hiera. The occasionally used term "YAML scoops" was born at that time.

Puppet's configuration was not the only thing in need of improvement; so was its feature set with regard to OpenStack. A procedure such as "Do this on Host A, then that on Host B, and finally the next on Host C" was impossible to implement with Puppet, yet it was central to OpenStack deployment (e.g., with Galera).

A colleague of mine brought Ansible into play. At that time, it was just two years old and far from its current feature set. Whereas Puppet had been pretty much a shock, Ansible impressed right from the outset with its ease of use, the way it discouraged deep nesting, and the way it more or less documented itself. Instead of a huge YAML bucket, Ansible simply let you store a template of a configuration file with all the desired parameters. Only the host-specific entries (e.g., the IP addresses) needed to be filled in dynamically.

Terminology

As with any automator, Ansible has by now developed its own jargon, which admins need to know before embarking on the Ansible adventure. Because the rest of this article would be difficult to follow without these terms, I explain them here.

The central term is the inventory. It captures the target systems on which Ansible executes commands once the admin instructs it to do so with a playbook. A playbook lists the host groups from the inventory to which it applies, as well as the roles to be invoked on them. A role, in turn, consists of a list of tasks to be processed, the configuration files and templates associated with these tasks, and handlers (i.e., tasks that run only when another task notifies them, typically to restart a service after a configuration change or to react to other events).
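To make the relationships between these terms concrete, the following Python sketch models them as plain data structures. It is a hypothetical illustration, not Ansible's internal object model: the classes Role and Playbook and the function target_hosts are invented for this example, and real Ansible objects carry far more attributes.

```python
from dataclasses import dataclass, field


@dataclass
class Role:
    name: str
    tasks: list[dict] = field(default_factory=list)      # processed in order
    handlers: list[dict] = field(default_factory=list)   # run only when notified
    templates: list[str] = field(default_factory=list)   # e.g., config file templates


@dataclass
class Playbook:
    hosts: str                                            # a host group from the inventory
    roles: list[Role] = field(default_factory=list)


# Inventory: group name -> hosts that belong to the group
Inventory = dict[str, list[str]]


def target_hosts(playbook: Playbook, inventory: Inventory) -> list[str]:
    """Return the hosts on which the playbook's roles would run."""
    if playbook.hosts == "all":
        # 'all' is Ansible's implicit group containing every inventory host.
        hosts: list[str] = []
        for members in inventory.values():
            for host in members:
                if host not in hosts:
                    hosts.append(host)
        return hosts
    return list(inventory.get(playbook.hosts, []))
```

For a playbook that targets lmtest and an inventory in which lmtest contains server1.local and server2.local, target_hosts returns exactly those two hosts; a group that does not exist yields an empty list.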

This triple of inventory, playbook, and role is almost enough to let Ansible do work on a specific host. Under the hood, Ansible relies on modules, each of which takes one specific, deliberately granular step; the administrator calls these modules in the tasks of roles and playbooks. If required, you can also write your own modules, but thanks to the enormous range of modules Ansible ships out of the box, this is not normally necessary.

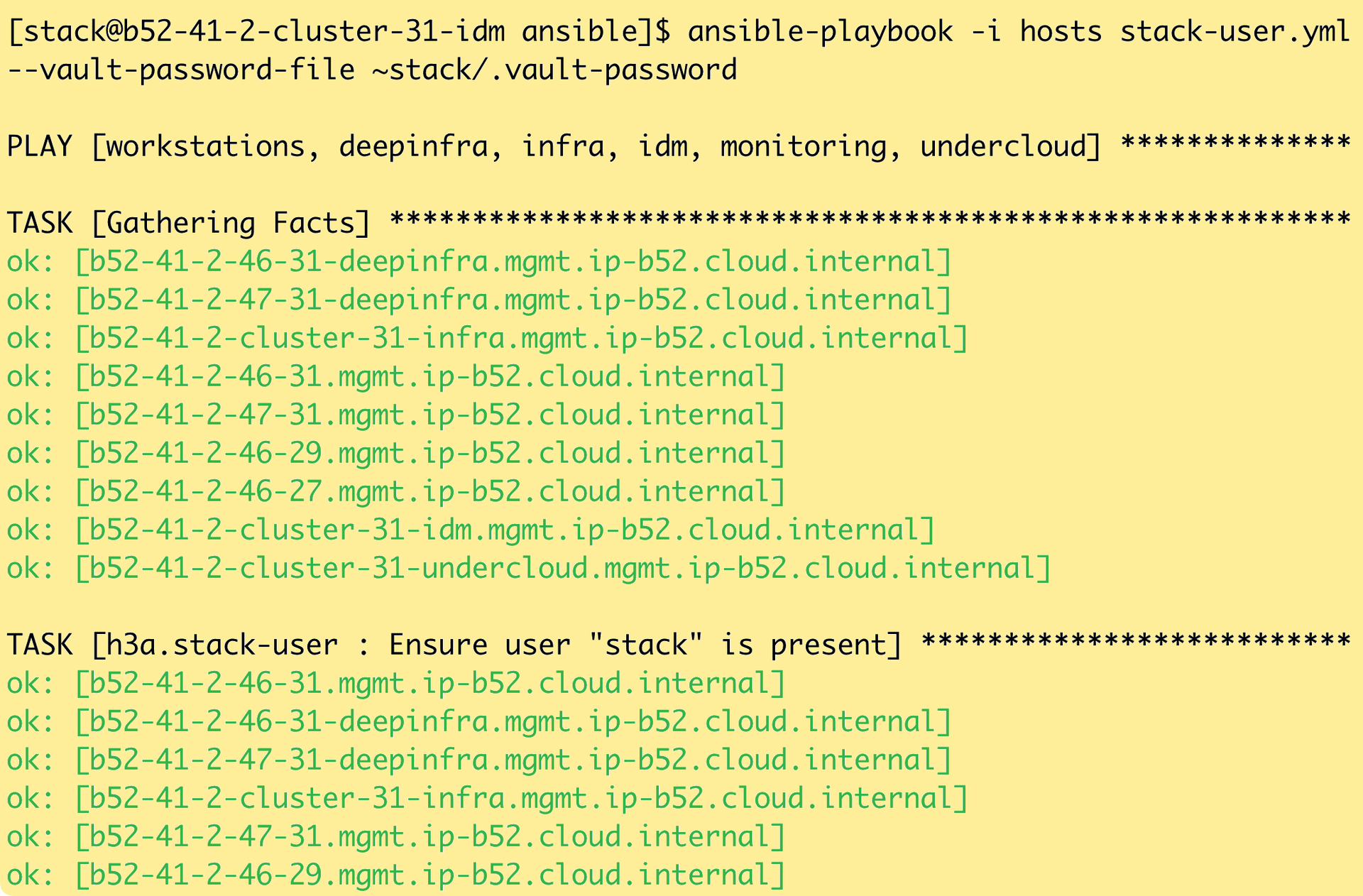

Simple SSH Instead of Client-Server

The requirements to get Ansible up and running are simple, and so is the architecture of the solution. Many other automators rely on client-server protocols and require matching services on both the central management server and the target systems. Ansible was designed differently from the beginning: It uses the established and high-performing SSH protocol (Figure 2) to execute commands on other systems.

This setup has many inherent advantages, but it also comes with some prerequisites. Users can execute commands on a system over SSH only if they can log in there, for example, because their own SSH key is stored on the target or is made available through SSH agent forwarding. Additionally, they must be able to use sudo to assume sys admin privileges on the affected system. Before execution, Ansible copies the modules it needs to the target systems (e.g., with scp) and runs them there. Whereas in Puppet's classic setup the server compiles the configurations for all target computers centrally, Ansible executes its modules on the systems themselves, which spreads the load and helps it scale.

Getting Started with Ansible

From an admin's point of view, the lack of a client-server architecture makes getting started with Ansible quick, provided you have a user with an SSH-based login on all systems and permission to run sudo without a password. On any system that can reach the other systems over SSH, you then install the Ansible package for your distribution. Because Ansible is now very widespread, packages are available for all common Linux distributions. After that, calling ansible-playbook without arguments should display its usage information.

The next step is to create an inventory, which you can write in either YAML or INI format. The inventory is divided into host groups; in the INI format, the group names appear in square brackets as section headers. The code in Listing 1 creates an inventory with two host entries that belong to the lmtest group. The ansible_user variable instructs Ansible to log in to each host as the mloschwitz user. The

ansible -i <file> all -m ping

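command then uses Ansible's ping module to check all hosts from the inventory. Unlike an ICMP ping, it verifies that Ansible can log in over SSH and find a usable Python interpreter. The <file> argument specifies the name of the inventory file, which is normally hosts.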

Listing 1: Inventory (excerpt)

<pre>
[lmtest]
server1.local ansible_user=mloschwitz
server2.local ansible_user=mloschwitz
</pre>
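The following minimal Python parser shows how the INI format maps groups to hosts and per-host variables, using inventories like Listing 1 as input. It is a sketch under simplifying assumptions: it handles only [group] headers and host lines with key=value pairs, whereas Ansible's own INI inventory plugin also understands :vars and :children sections, host ranges, and quoting. The function name parse_ini_inventory is hypothetical.

```python
def parse_ini_inventory(text: str) -> dict[str, dict[str, dict[str, str]]]:
    """Parse a simple INI-style inventory into {group: {host: {var: value}}}."""
    groups: dict[str, dict[str, dict[str, str]]] = {}
    current = "ungrouped"  # hosts listed before any [group] header land here
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]  # a new group section begins
            groups.setdefault(current, {})
            continue
        # First token is the host; the rest are key=value host variables.
        host, *assignments = line.split()
        hostvars = dict(a.split("=", 1) for a in assignments if "=" in a)
        groups.setdefault(current, {})[host] = hostvars
    return groups
```

Fed the text of Listing 1, the parser returns one lmtest group containing server1.local and server2.local, each with ansible_user set to mloschwitz.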

Directory Structure

The Ansible documentation [1] offers useful guidance on how to lay out the Ansible directory. In the example, the top-level folder contains a file named hosts (the inventory). The first playbook (lmtest.yml in the example) sits at the same directory level, as does an Ansible Vault file (more on that later). Two additional folders are particularly important: host_vars/ and group_vars/. In group_vars/, you create files whose names match the groups defined in the inventory.

The group in the example is named lmtest, so variables for this group go into the group_vars/lmtest file. Files for the individual hosts (e.g., server1.local.yml) are stored in host_vars/. Always use the full hostname exactly as it appears in the inventory; otherwise, Ansible cannot associate the file with the host, and any task that relies on the variables defined there fails with an error message because those variables are undefined.
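The following Python sketch illustrates, in strongly simplified form, how variables from group_vars/ and host_vars/ are combined for one host. Values for the implicit group all are overridden by those of the host's groups, which are in turn overridden by host-specific values. Ansible's real precedence list has many more levels. The function resolve_vars and the sample variable names are hypothetical. The second host entry deliberately uses a short name to reproduce the pitfall just described.

```python
def resolve_vars(host: str, host_groups: list[str],
                 group_vars: dict[str, dict], host_vars: dict[str, dict]) -> dict:
    """Merge variables for one host, from lowest to highest precedence."""
    merged = dict(group_vars.get("all", {}))
    for group in host_groups:
        merged.update(group_vars.get(group, {}))
    # host_vars are matched against the exact inventory hostname only.
    merged.update(host_vars.get(host, {}))
    return merged


GROUP_VARS = {
    "all": {"ntp_server": "pool.ntp.org"},
    "lmtest": {"http_port": 8080},
}
HOST_VARS = {
    "server1.local": {"http_port": 8443},
    "server2": {"http_port": 9000},  # wrong key: the inventory says server2.local
}
```

For server1.local, the host-specific port 8443 wins. For server2.local, the entry keyed server2 is never matched, so the host silently falls back to the group value 8080. A variable that is defined only in such a mismatched file would therefore be undefined for the host.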

The last folder needed is also the most important one: roles/, in which you create the roles that perform individual steps on the target systems. Although you could add the tasks directly to your playbooks, doing so would become confusing over time. It has therefore become common practice to write roles that each handle a logically related set of tasks on the setup's servers. The roles/ folder contains one subfolder per role, named after the role (e.g., lmtestrole), and these subfolders have a specific structure of their own.
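You can create a role's layout by hand or with ansible-galaxy role init, which also adds folders such as defaults/, meta/, and vars/. The following stdlib-only Python sketch creates a reduced role skeleton to illustrate the structure discussed here. The function scaffold_role and the chosen subset of folders are assumptions for this example.

```python
import tempfile
from pathlib import Path

# Reduced, hypothetical subset of the role folders discussed in the text.
ROLE_DIRS = ("tasks", "handlers", "templates", "files")


def scaffold_role(base: Path, role: str) -> Path:
    """Create roles/<role>/ with the subfolders above and empty main.yml files."""
    role_dir = base / "roles" / role
    for sub in ROLE_DIRS:
        (role_dir / sub).mkdir(parents=True, exist_ok=True)
    # Ansible reads tasks/main.yml (and handlers/main.yml) of a role by default.
    for sub in ("tasks", "handlers"):
        main = role_dir / sub / "main.yml"
        if not main.exists():
            main.write_text("---\n")  # YAML document marker; no tasks yet
    return role_dir


def demo() -> list[str]:
    """Scaffold 'lmtestrole' in a throwaway directory and list its folders."""
    with tempfile.TemporaryDirectory() as tmp:
        role_dir = scaffold_role(Path(tmp), "lmtestrole")
        return sorted(p.name for p in role_dir.iterdir())
```

Calling scaffold_role a second time leaves existing main.yml files untouched. This makes the sketch safe to rerun, in the same spirit as Ansible itself.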

The most important folder in a role, tasks/, contains at least a file named main.yml, which holds either the desired tasks directly or includes of other files in the same directory. In lmtestrole/tasks/main.yml, a task that installs a package would look something like Listing 2.

Listing 2: Package Installation

<pre>
- name: Install helloworld
  package:
    name: hello-world
    state: present
</pre>

Task entries, like Ansible entries in general, follow a similar pattern: a name with a short description, then the module to be called, and finally its parameters. Because a role's tasks run from top to bottom, a role reads much like a shell script, which significantly lowers the barrier to entry for automation novices. The name entry, which is technically optional but should always be set, also makes Ansible roles at least partially self-documenting, so people other than the authors can understand them.
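The following Python sketch makes the name/module/parameters pattern explicit. It takes a task in the form it would have after the YAML from Listing 2 is loaded and separates the three parts. The set TASK_KEYWORDS is a hypothetical, heavily reduced subset of the task keywords Ansible actually recognizes, and split_task is an invented helper.

```python
# Hypothetical subset of task-level keywords; Ansible knows many more.
TASK_KEYWORDS = {"name", "when", "notify", "become", "register", "loop", "tags"}


def split_task(task: dict) -> tuple[str, str, dict]:
    """Split a task into (description, module name, module parameters)."""
    # Whatever key is not a task keyword is taken to be the module call.
    modules = [key for key in task if key not in TASK_KEYWORDS]
    if len(modules) != 1:
        raise ValueError(f"expected exactly one module, found {modules}")
    module = modules[0]
    return task.get("name", ""), module, task[module]


# Listing 2 after YAML loading, as a Python dict
LISTING_2 = {
    "name": "Install helloworld",
    "package": {"name": "hello-world", "state": "present"},
}
```

Applied to LISTING_2, split_task yields the description "Install helloworld", the module package, and its two parameters.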

The package example in Listing 2 covers only a fraction of Ansible's functionality. For example, in the handlers/ folder within the role, you can define handlers that start, stop, or restart services. A task triggers such a handler with its notify parameter, and the handler runs only if that task actually changed something. The templates/ and files/ folders are equally important, if you need them. Files that need to find their way onto the systems unchanged end up in files/. In contrast, the files in templates/ are Jinja2 templates with placeholders in various places, which Ansible replaces with the values that apply to each host before rolling the results out. In this way, you need only one template to roll out a configuration file to multiple hosts, each with its own IP address.
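The following Python sketch shows the per-host template mechanism. Ansible itself renders the files in templates/ with Jinja2, using placeholders such as {{ ip_address }}. To stay within the standard library, this sketch swaps in string.Template with $-placeholders, so it mirrors the idea rather than Ansible's syntax. The template text, variable names, and addresses are hypothetical (192.0.2.0/24 is a documentation range).

```python
from string import Template

# Stand-in for a file in templates/; Ansible would use Jinja2 syntax instead.
APP_CONF = Template("# managed by Ansible\nbind_address = $ip_address\nport = $port\n")

# Hypothetical per-host values, as they might come from facts and host_vars.
HOSTS = {
    "server1.local": {"ip_address": "192.0.2.11", "port": "8080"},
    "server2.local": {"ip_address": "192.0.2.12", "port": "8080"},
}


def render_for_hosts(template: Template, hosts: dict[str, dict[str, str]]) -> dict[str, str]:
    """Render one template once per host, using that host's values."""
    # substitute() raises KeyError for a missing value, much as Ansible
    # aborts when a template references an undefined variable.
    return {host: template.substitute(values) for host, values in hosts.items()}
```

One template thus produces a separate configuration file for each host, and only the host-specific lines differ.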

Predefined Variables

To help the administrator do just that, Ansible collects a variety of variables about each host. In Ansible jargon, these are known as facts, but you access them just like normal variables.

For example, one often-used fact is ansible_distribution, which tells the admin whether a host runs Debian, Ubuntu, CentOS, or SUSE. With the ansible_distribution_version fact, you can additionally check the specific version. Such facts are typically used in when conditions: Ansible executes a task that carries a when condition only if the condition evaluates to true.

Using the facts described here, an admin could design the role such that Ansible performs certain tasks on specific distributions only, which makes working with Ansible in a heterogeneous environment far easier.
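The following Python sketch illustrates how when conditions filter tasks per host by evaluating each task's condition against that host's facts. In Ansible, the condition is a Jinja2 expression such as ansible_distribution == "Debian". Here, Python lambdas stand in for those expressions, and the fact values and task names are hypothetical.

```python
# Hypothetical facts; in Ansible, fact gathering (the setup module) provides these.
FACTS = {
    "server1.local": {"ansible_distribution": "Debian", "ansible_distribution_version": "10"},
    "server2.local": {"ansible_distribution": "CentOS", "ansible_distribution_version": "8.3"},
}


def major(facts: dict) -> int:
    """Major release from ansible_distribution_version, e.g. "8.3" -> 8."""
    return int(facts["ansible_distribution_version"].split(".")[0])


# Each task may carry a "when" predicate standing in for a Jinja2 condition.
TASKS = [
    {"name": "Install packages with apt",
     "when": lambda f: f["ansible_distribution"] in ("Debian", "Ubuntu")},
    {"name": "Install packages with dnf",
     "when": lambda f: f["ansible_distribution"] == "CentOS" and major(f) >= 8},
    {"name": "Deploy motd"},  # no condition: runs on every host
]


def tasks_to_run(host: str) -> list[str]:
    """Names of the tasks whose condition holds for this host's facts."""
    facts = FACTS[host]
    return [t["name"] for t in TASKS if t.get("when", lambda f: True)(facts)]
```

Under these assumptions, server1.local gets the apt task and the motd task, and server2.local gets the dnf task and the motd task. A single role can therefore serve both distributions.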

Compact Playbook

If you use roles as recommended, the playbook itself stays very short: It defines only the host group it targets and the roles to apply to it (Listing 3). You can then run the playbook with:

ansible-playbook -i hosts playbook.yml

Listing 3: A Playbook

<pre>
- hosts: infra
  become: yes
  roles:
    - lmtestrole
</pre>

The task from this example would result in hello-world being installed on all target systems.

Dynamic Inventory

Critics and supporters alike say that one of Ansible's greatest strengths lies in the way it compiles its inventory. The easiest way to build an inventory is a good old plain text file in the main Ansible folder. However, this approach is not very flexible, and in dynamic environments such as clouds, you are likely to reach its limits soon. Ansible therefore offers a dynamic inventory option, in which ansible or ansible-playbook compiles the inventory from various sources at runtime.

Setups with a central single source of truth that holds information about all systems benefit from this principle in particular. For example, if you use Cobbler for bare-metal deployment, you can use a custom Python script [2] to generate the inventory from the list of systems stored in Cobbler. If you use OpenStack, either as a tool for managing bare-metal systems or for running virtual machines, you can retrieve the list of target instances directly from the OpenStack API with the openstack_inventory script [3].

Connecting Ansible directly to a data center inventory management (DCIM) tool like NetBox is a tad more elegant, because a DCIM tool by definition lists all existing servers. NetBox in particular offers a fully machine-readable API, which makes establishing a connection especially easy. A sample NetBox script [4] for Ansible's dynamic inventory illustrates this functionality.
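Whatever the data source, a dynamic inventory script follows the same contract. Ansible executes it with --list and expects JSON on standard output that describes the groups and their hosts. An optional _meta.hostvars section saves Ansible from calling the script again with --host for every single host. The following Python sketch implements that contract. The function fetch_hosts is a hypothetical stand-in for a query against NetBox, Cobbler, or the OpenStack API, and the host data is invented. Newer Ansible versions favor inventory plugins over scripts, but script-based inventories are still supported.

```python
import argparse
import json
import sys


def fetch_hosts() -> list[dict]:
    """Hypothetical stand-in for an API query against the source of truth."""
    return [
        {"name": "server1.local", "group": "lmtest", "ip": "192.0.2.11"},
        {"name": "server2.local", "group": "lmtest", "ip": "192.0.2.12"},
    ]


def build_inventory(hosts: list[dict]) -> dict:
    """Build the JSON structure Ansible expects from a --list call."""
    inventory: dict = {"_meta": {"hostvars": {}}}
    for h in hosts:
        group = inventory.setdefault(h["group"], {"hosts": []})
        group["hosts"].append(h["name"])
        # Host variables in _meta spare Ansible one --host call per host.
        inventory["_meta"]["hostvars"][h["name"]] = {"ansible_host": h["ip"]}
    return inventory


def main(argv=None) -> None:
    parser = argparse.ArgumentParser(description="Dynamic inventory sketch")
    parser.add_argument("--list", action="store_true", help="print all groups")
    parser.add_argument("--host", help="print variables for one host")
    args = parser.parse_args(argv)
    inventory = build_inventory(fetch_hosts())
    if args.host:
        out = inventory["_meta"]["hostvars"].get(args.host, {})
    else:
        out = inventory
    json.dump(out, sys.stdout, indent=2)


if __name__ == "__main__":
    main()
```

Once made executable, such a script can be passed with -i just like a static inventory file. ansible-inventory -i <script> --list shows what Ansible makes of it.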

Infrastructure as Code

If you just write a few Ansible roles for your automation or combine a few ready-made roles off the web, you will get a reasonably clear, but still very powerful, Ansible directory. In the spirit of infrastructure as code, managing the entire Ansible directory in a Git repository offers a number of benefits.

On the one hand, you can establish a single source of truth for the code in use by stipulating that HEAD in this Git repository must always be the production version currently rolled out. On the other hand, managing an Ansible directory in Git makes it easy to work on it as a team and track changes.

Ansible playbooks and roles should always be idempotent. If you run Ansible against your systems 10 times, you must end up with a working system each time that has successfully completed the steps anchored in Ansible, and every run after the first should leave alone anything already in the desired state. If you make a mistake while working with Ansible and the directory is managed in Git, you can easily revert the change and run Ansible again. This normally restores the previous state and solves problems caused by a (temporary) misconfiguration.
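The idempotence principle is to check the current state first and change it only if it differs from the desired state. The following Python sketch illustrates it. The function ensure_line is loosely modeled on what Ansible's lineinfile module does with state=present. It is a hypothetical simplification, not the module's actual code, and the file name and setting used in demo are illustrative.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is in the file; return True only if the file changed."""
    lines = path.read_text().splitlines() if path.exists() else []
    if line in lines:
        return False  # desired state already reached: nothing to do ("ok")
    lines.append(line)
    path.write_text("\n".join(lines) + "\n")
    return True  # state was changed ("changed" in Ansible's output)


def demo(runs: int = 3) -> list[bool]:
    """Apply the same step several times, as repeated Ansible runs would."""
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "sshd_config"
        return [ensure_line(target, "PermitRootLogin no") for _ in range(runs)]
```

demo() returns [True, False, False]: only the first run changes the file, and every further run leaves it untouched.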

Dealing with Passwords

Sooner or later, every administrator has to deal with passwords as part of their daily work. Although passwords should not slow down automation, storing them in plaintext in an Ansible playbook is not an option. This is even more true if, as suggested, the Ansible playbooks reside in a central Git repository to which many people have access. In many companies, the compliance policy rules out such a situation anyway: Under segregation of duties, teams in many places may not access any data beyond what they absolutely need for their own work. If a team commits passwords for its own infrastructure to a Git repository that the entire company can access, this protection no longer exists.

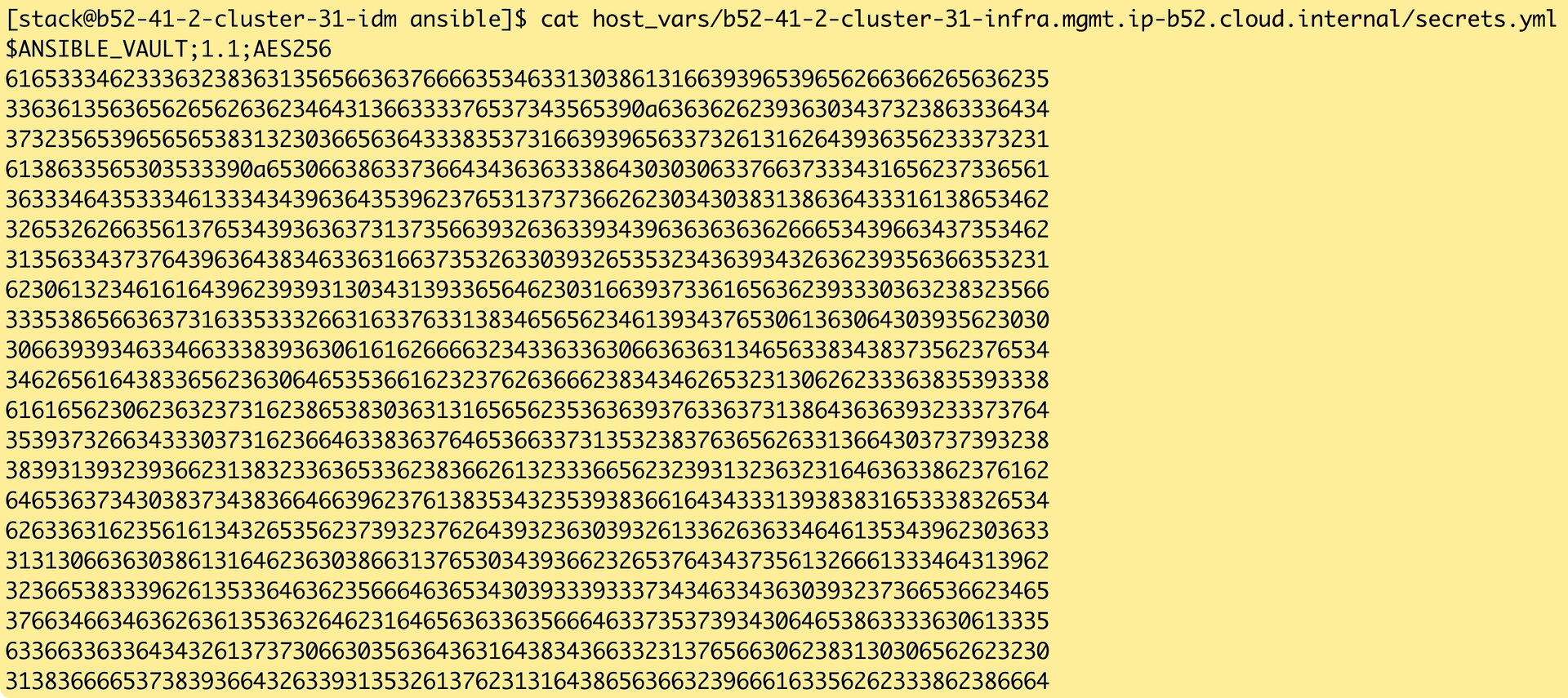

The Ansible developers are aware of the problem and address it with Ansible Vault. The name is somewhat unfortunate because it clashes with HashiCorp Vault: Although an Ansible community module ties Ansible directly to HashiCorp Vault, that module has nothing to do with Ansible Vault. The small Ansible Vault tool encrypts a text file created by the admin (Figure 3), which Ansible decrypts at runtime and then reads like any other variable file.

Once the admin has installed Ansible, ansible-vault is also available as a command in the shell. You can use

ansible-vault create passwords.yml

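to create a vault for passwords. In it, you define your passwords as variables (e.g., ldap_admin_password: verysecret). Once the file is loaded (e.g., with vars_files in the playbook or by placing it in group_vars/), you can access these variables in your roles and playbooks like any others. The only difference is that when you invoke ansible-playbook, you add the --vault-id option (e.g., --vault-id @prompt) so that Ansible can obtain the vault password and decrypt the file.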

Also for Certificates

The Vault mechanism is suitable not only for passwords but also for other confidential content, such as the private keys that belong to SSL certificates. If you roll out a web server with SSL enabled but without automatic Let's Encrypt certificates, it usually needs the key matching the certificate, stored without a passphrase. Vault lets you encrypt this key easily:

ansible-vault encrypt key.pem

The encrypted file is stored in the files/ subfolder of the role that rolls out the certificate. You can then access the encrypted file in the playbook (e.g., with a content directive). If you pass the vault password to ansible-playbook with --vault-id, Ansible decrypts the file automatically during the run and uses its contents.

Ansible Tower

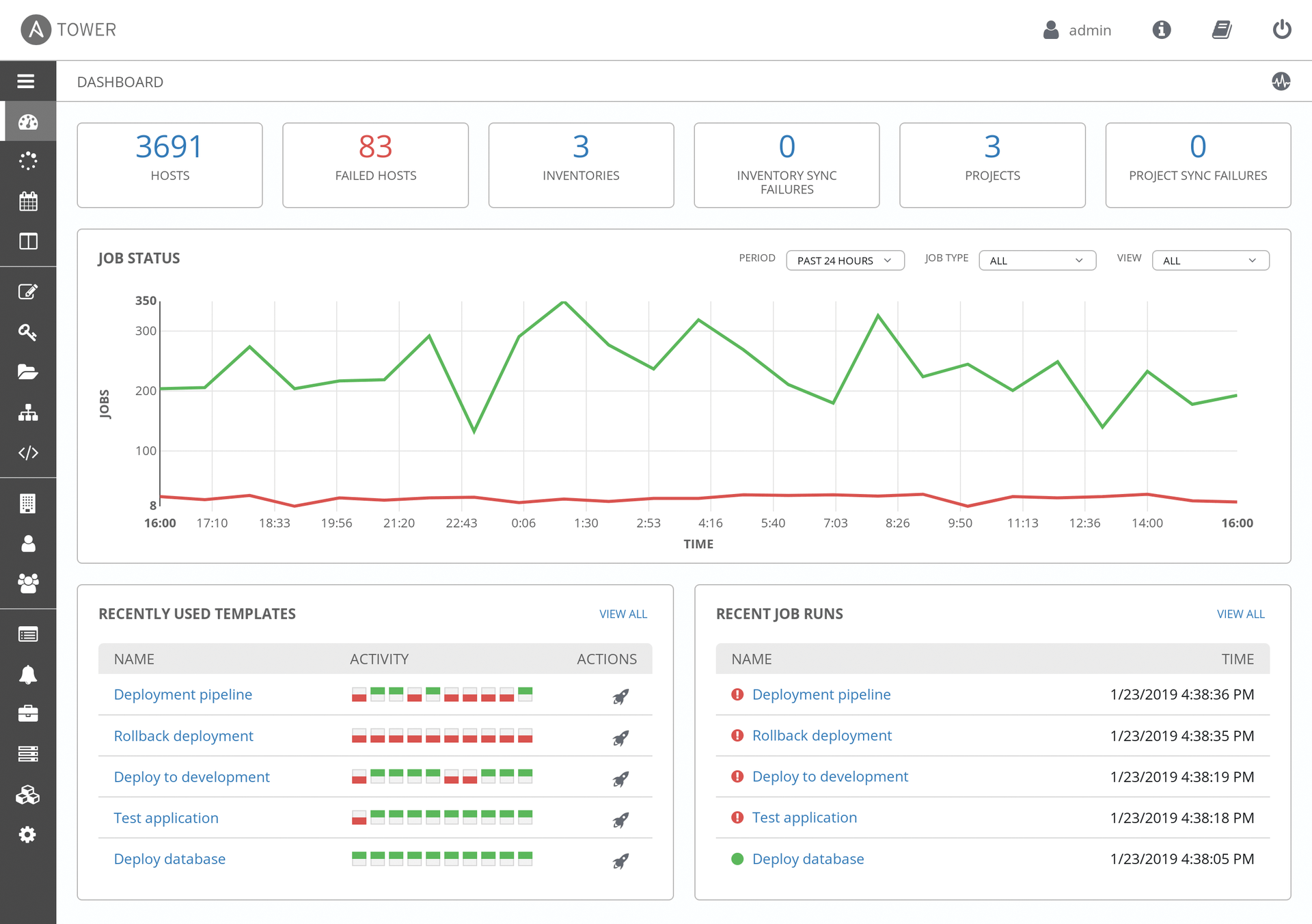

There was always a risk that Ansible would not remain the small, no-frills automation tool of its early days forever. However, Red Hat, as the new owner, is doing its best to introduce changes carefully and, moreover, to design them so that administrators are not forced to use them. In parallel, the vendor is building a portfolio around Ansible that provides additional functionality for its own customers. Ansible Tower, which is based on the open source AWX project, is a good example of this effort.

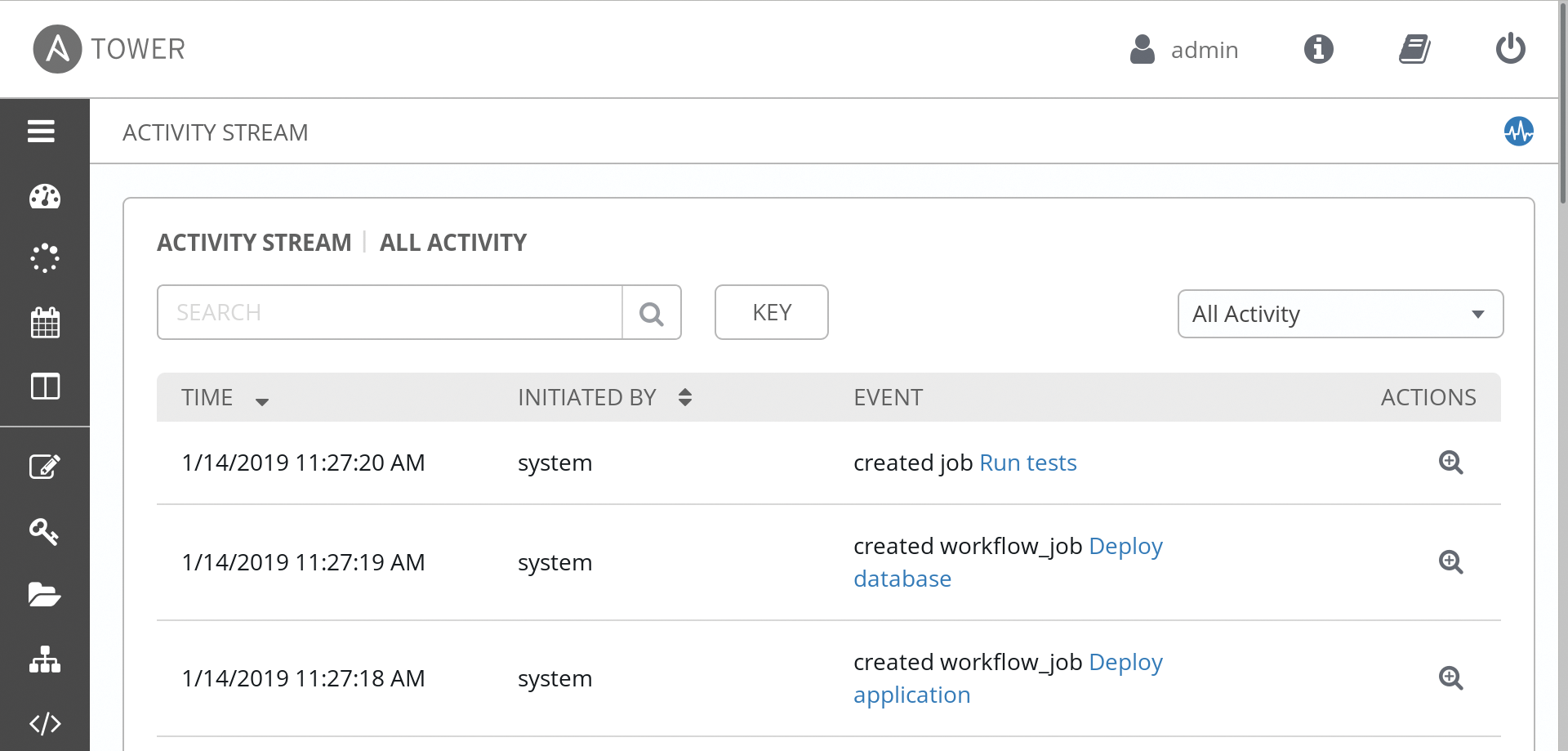

At its core, Tower consists of a REST API on the one hand and a graphical user interface (Figure 4) on the other. It includes its own user and permissions management, through which you can conveniently control Ansible on a variety of target systems. Tower can also be linked to existing user directories such as LDAP, making it a front end for quick access to an organization's entire Ansible setup. In Tower, you can add new playbooks and roles to the setup, run them on hosts, and integrate them into continuous integration/continuous deployment (CI/CD) toolchains. You can also define recurring jobs, which Tower then executes automatically at the defined intervals.
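To give an idea of what driving Tower through its REST API looks like, the following Python sketch prepares a request that launches a job template through the AWX/Tower API v2 endpoint /api/v2/job_templates/<id>/launch/. It authenticates with an OAuth2 bearer token. The server URL, template ID, token, and variables are placeholders. Whether extra_vars supplied at launch are honored depends on the job template's prompt-on-launch settings. The sketch only builds the request; passing it to urllib.request.urlopen would send it.

```python
import json
import urllib.request
from typing import Optional


def build_launch_request(base_url: str, template_id: int, token: str,
                         extra_vars: Optional[dict] = None) -> urllib.request.Request:
    """Prepare a POST request that launches a Tower/AWX job template."""
    url = f"{base_url.rstrip('/')}/api/v2/job_templates/{template_id}/launch/"
    body = json.dumps({"extra_vars": extra_vars or {}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # OAuth2 token issued by Tower
            "Content-Type": "application/json",
        },
    )
```

In a CI/CD pipeline, a step like this can trigger a Tower job without anyone touching the graphical interface.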

One special feature of newer Tower versions is their seamless integration with various public cloud providers; Ansible itself comes with modules for quite a few clouds, including Amazon EC2 and Microsoft Azure. Tower can then visualize the cloud workloads rolled out from it and monitor them within the scope of its capabilities (Figure 5).

However, only death is free of charge, and that costs you your life. Tower can be seen as Red Hat's first major attempt to generate additional revenue from the Ansible acquisition. As with Red Hat's other management tools, you need a separate subscription for Tower. According to various sources on the web, the premium version costs around $14,000 per year. Companies would do well to evaluate Ansible Tower extensively before making a purchase.

Quo Vadis, Ansible?

It's hard to believe that five years have already passed since Red Hat bought Ansible, especially because, so far, Red Hat hasn't changed Ansible as much as you might have expected on the basis of its other acquisitions. In some places, it is noticeable that Red Hat's goals dominate the automator's to-do list, but all in all, its developers still seem largely free to do as they please.

Accordingly, the Ansible team has also kept its practice of limiting feature planning to the period between two major releases. The current major release of Ansible at the time of going to press was 2.10, but by the time this issue is published, version 3.0 will probably have seen the light of day. In this respect, there is no long-term vision for Ansible's development over the coming years. Instead, the developers are happy to respond to feature requests, whether they come directly from Red Hat or from the ranks of users.

The only thing that can be predicted with some certainty for Ansible is that Red Hat will continue to view and treat it as an important asset in the context of its own products. For Ansible fans, this means that, for now, you should not expect too much in the way of profound changes in the design of the software or in the way Ansible development proceeds.

Conclusions

Ansible does not try to impress admins with a huge feature set, fancy technology, or daring automation features. Instead, the tool sees itself first and foremost as an honest automator: If you come from a world of Bash and shell scripts, you will find it easy to get started. The fact that Ansible roles almost inevitably document themselves makes working with the tool even easier.

The software scores bonus points in other areas, too: It integrates with a number of external tools, the inventory can be maintained as a text file or generated dynamically, and extending Ansible poses no problems for admins. Even less experienced admins can use Ansible to introduce automation into their environments in no time at all. Ansible is therefore recommended to anyone looking for a jumping-off point into the world of automation or simply anyone who prefers an automator without all the bells and whistles.