Software instead of appliances: load balancers compared

Balancing Act

For a long time now, load balancers have supported any number of back-end servers. If the load in the setup becomes too high, you just add more servers. In this way, almost any resource bottleneck can be prevented. Understandably, the load balancer is a very important part of almost every web setup – if it doesn't work, the website is down. Appliances from F5, Citrix, Radware, and others were long considered the de facto standard for load balancing, even though powerful software solutions that run as services on normal Linux servers (e.g., HAProxy or Nginx) were available.

The cloud-native trend is a major factor accelerating the shift toward software-defined load balancing these days, not least because typical appliances are difficult to integrate into cloud-native applications – reason enough for a brief market overview. What tools for software-based load balancing are available to Linux admins? What are their specific strengths and weaknesses? Which software is best suited for which scenario?

Before I get started, however, note that not all of the load balancers presented here offer the same features.

OSI Rears Its Head

As with hardware load balancers, different balancing techniques exist for their software counterparts. In a nutshell, many load balancers primarily differ in terms of the Open Systems Interconnection (OSI) layer on which they operate, and some load balancers offer support for multiple OSI layers. What sounds dull in theory has significant implications in practice.

Most software load balancers for Linux operate on OSI Layer 4, the transport layer. They accept incoming connections for certain protocols (usually TCP) on one side and forward them to their back ends on the other. Load balancing at this layer is agnostic with respect to the application protocol being used. Therefore, a Layer 4 load balancer can distribute HTTP connections just as easily as MySQL connections or, indeed, connections for any other protocol.

On the other hand, operating on Layer 4 also means that protocol-specific options are not available for balancing decisions. For classic load balancing of web servers, for example, many applications expect their sessions to be sticky: The same client always ends up on the same web server for multiple successive requests, identified by various parameters (e.g., session IDs).

However, the load balancer can only guarantee this stickiness if it does not just forward packets at the protocol level but also analyzes and interprets the data flow. Many load balancers support this behavior, which is then referred to as Layer 7 (i.e., application layer) load balancing.

When choosing a load balancer, the question of whether it supports Layer 7 load balancing is therefore highly relevant. Some balancers in this comparison explicitly support Layer 4 only; others also offer protocol support. For each solution tested, I discuss which OSI layers it supports.

High Availability

When admins consider software load balancers, they need to keep in mind that the load balancer itself is a potential source of problems, not just the website behind it. Appliance vendors see this as a welcome opportunity to cash in once again: Load balancer appliances from all manufacturers can be operated in high-availability (HA) setups, but you need at least two of the devices. The fact that the firmware already includes this functionality does not change that. In addition to the second device, a license extension is usually required to run the two balancers as an HA cluster.

With self-built load balancers, admins solve the HA problem themselves by designing for hardware redundancy. Now that the infamous pizza boxes come with 256GB of RAM and multicore CPUs, the financial outlay is manageable, and the software load balancers presented here are not particularly greedy when it comes to resources anyway. The software side is a bit less comfortable: IP addresses that switch over to another node together with certain services are typically implemented on Linux as a classic HA cluster with Pacemaker.
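Conceptually, the decision such an HA cluster has to make is simple. The following Python sketch models it under simplified assumptions: Each load balancer node sends periodic heartbeats, and the highest-priority node that is still alive holds the virtual IP. Node names, priorities, and the three-second timeout are hypothetical; Pacemaker's real resource management (quorum, fencing, and so on) is far more involved.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    priority: int          # higher value wins the virtual IP
    last_heartbeat: float  # time (in seconds) of the last heartbeat seen from this node


def vip_owner(nodes: List[Node], now: float, dead_after: float = 3.0) -> Optional[str]:
    # A node counts as alive if its last heartbeat is recent enough
    alive = [n for n in nodes if now - n.last_heartbeat <= dead_after]
    if not alive:
        return None  # no live node: nobody holds the VIP
    # The alive node with the highest priority holds the virtual IP
    return max(alive, key=lambda n: n.priority).name


lb1 = Node("lb1", priority=200, last_heartbeat=100.0)
lb2 = Node("lb2", priority=100, last_heartbeat=100.0)
print(vip_owner([lb1, lb2], now=101.0))  # → lb1
lb2.last_heartbeat = 105.0               # lb1 has gone silent, lb2 has not
print(vip_owner([lb1, lb2], now=105.5))  # → lb2
```

On a real system, the node taking over would then configure the address locally and typically announce it with a gratuitous ARP, so that switches and neighbors update their tables.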

Classic Choice: HAProxy

The first test candidate in the comparison is a true veteran that most admins will have encountered. HAProxy [1] is one of the oldest solutions in the test field; the first version saw the light of day at the end of 2001. With regard to the OSI model, HAProxy always builds on Layer 4: It implements balancing for any TCP connection, which makes it suitable as a load balancer for MySQL or other databases. Complementary Layer 7 functionality is available, but only for HTTP; other protocols are excluded.

Because HAProxy has been on the scene for such a long time, it offers many features that are not needed in many use cases. However, HAProxy has no weaknesses in terms of basic functions. It supports HTTP/2, Gzip-compressed connections, HTTP URL rewrites, and rate limiting to counter distributed denial of service (DDoS) attacks. Because the program has been capable of multithreading for several years, it benefits from modern multicore CPUs, especially those that also support hyperthreading. In any case, HAProxy is unlikely to run out of steam on a reasonably up-to-date multicore processor.
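Rate limiting of this kind boils down to counting requests per client over a time window. HAProxy does this with its stick tables; the Python sketch below is not HAProxy's implementation but a generic sliding-window limiter that shows the principle. The limit of three requests per 10 seconds and the client addresses are arbitrary example values.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client IP within `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        hits = self._hits[client_ip]
        # Forget requests that have dropped out of the window
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: reject, e.g., with HTTP 429
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(limit=3, window=10.0)
print([limiter.allow("198.51.100.7", now=t) for t in (0, 1, 2, 3)])
# → [True, True, True, False]
```

A production limiter would also have to expire idle clients to keep memory bounded, which this sketch omits.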

Secure sockets layer (SSL) and load balancing is a complex issue, because a middleman like HAProxy cannot look inside a connection that is encrypted end to end. An established practice is therefore to let the load balancer handle SSL termination instead of the HTTP server. In such cases, the admin can additionally set up SSL encryption between the load balancer and the individual web servers. HAProxy can handle both: The service terminates SSL connections on demand and also talks to the back ends in encrypted form (e.g., when checking their states). HAProxy has extensive options for checking the functionality of its own back ends. If a back end goes offline or the web server running there does not deliver the desired results, HAProxy automatically removes it from the rotation.
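How a back end leaves and rejoins the rotation can be sketched in a few lines. HAProxy's server options `rise` and `fall` set how many consecutive successful or failed checks change a server's state; the Python model below borrows that idea (with HAProxy's default values of 2 and 3) but leaves out the actual probing, so check results are simply fed in. It is an illustration, not HAProxy's code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ServerState:
    up: bool = True
    streak: int = 0  # consecutive check results that contradict the current state


class HealthChecker:
    """Remove a server after `fall` failed checks in a row; re-add it after `rise` good ones."""

    def __init__(self, servers: List[str], rise: int = 2, fall: int = 3) -> None:
        self.rise, self.fall = rise, fall
        self.state: Dict[str, ServerState] = {s: ServerState() for s in servers}

    def record(self, server: str, ok: bool) -> None:
        st = self.state[server]
        if ok == st.up:
            st.streak = 0  # the result confirms the current state
            return
        st.streak += 1
        if st.up and st.streak >= self.fall:
            st.up, st.streak = False, 0  # take the server out of rotation
        elif not st.up and st.streak >= self.rise:
            st.up, st.streak = True, 0   # put it back into rotation

    def in_rotation(self) -> List[str]:
        return [s for s, st in self.state.items() if st.up]


hc = HealthChecker(["web1", "web2"])
for ok in (False, False, False):  # web2 fails three checks in a row
    hc.record("web2", ok)
print(hc.in_rotation())  # → ['web1']
```

The thresholds prevent a single lost probe from flapping a server in and out of the pool.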

All told, HAProxy is a reliable load balancer with a huge range of functions, which, however, makes the program's configuration file difficult to understand. That said, admins in a state-of-the-art data center will probably generate the configuration from their automation setup anyway, so the complexity is not a real obstacle.

Integrations for HAProxy are available for all common automation systems, either from the vendor or from third-party providers. The commercial version of HAProxy also deserves a mention: In addition to support, it comes with a few plugins for additional functions, such as a single sign-on (SSO) module and a real-time dashboard that displays various HAProxy metrics.

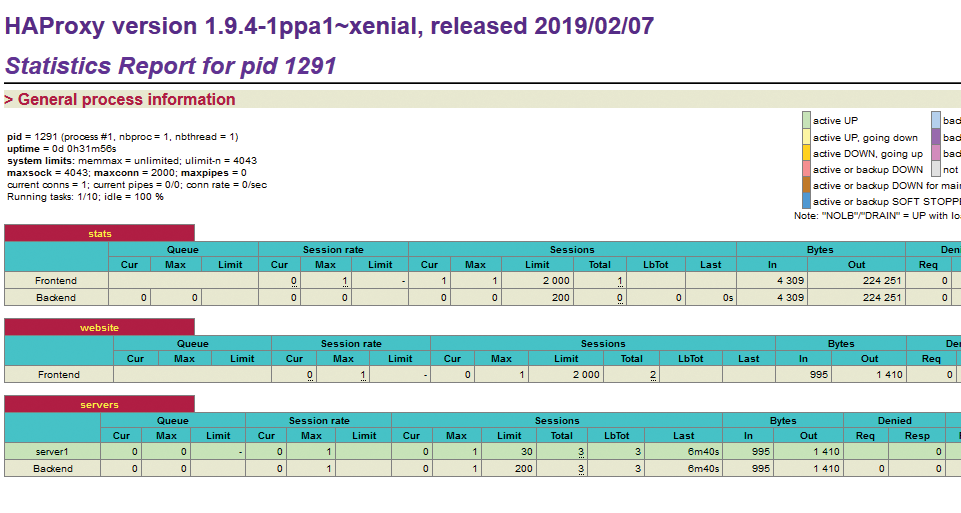

Speaking of metrics data, integrating HAProxy into conventional and state-of-the-art monitoring systems is very simple thanks to previous work by many users and developers. The tool comes with its own status page, which provides a basic overview (Figure 1). All told, HAProxy can be considered a safe choice when it comes to load balancers.

Unexpected: Nginx

Most admins will not have the second candidate in this comparison on their radar as a potential load balancer, although they know the program well: Nginx [2] is used in many corporate environments, usually as a high-performance web server. However, Nginx comes with a module named Upstream that turns the web server into a load balancer. Keeping to what it does best, Nginx uses it for the HTTP protocol on Layer 7 of the OSI model; balancing plain TCP and UDP connections is the job of the separate stream module.

Although Nginx is primarily a web server and not a load balancer, it can compete with HAProxy in terms of features. The program's modular structure is an advantage: Nginx can handle SSL and the more modern HTTP/2 protocol. Compressed connections and metrics data in real time are also part of the feature set.

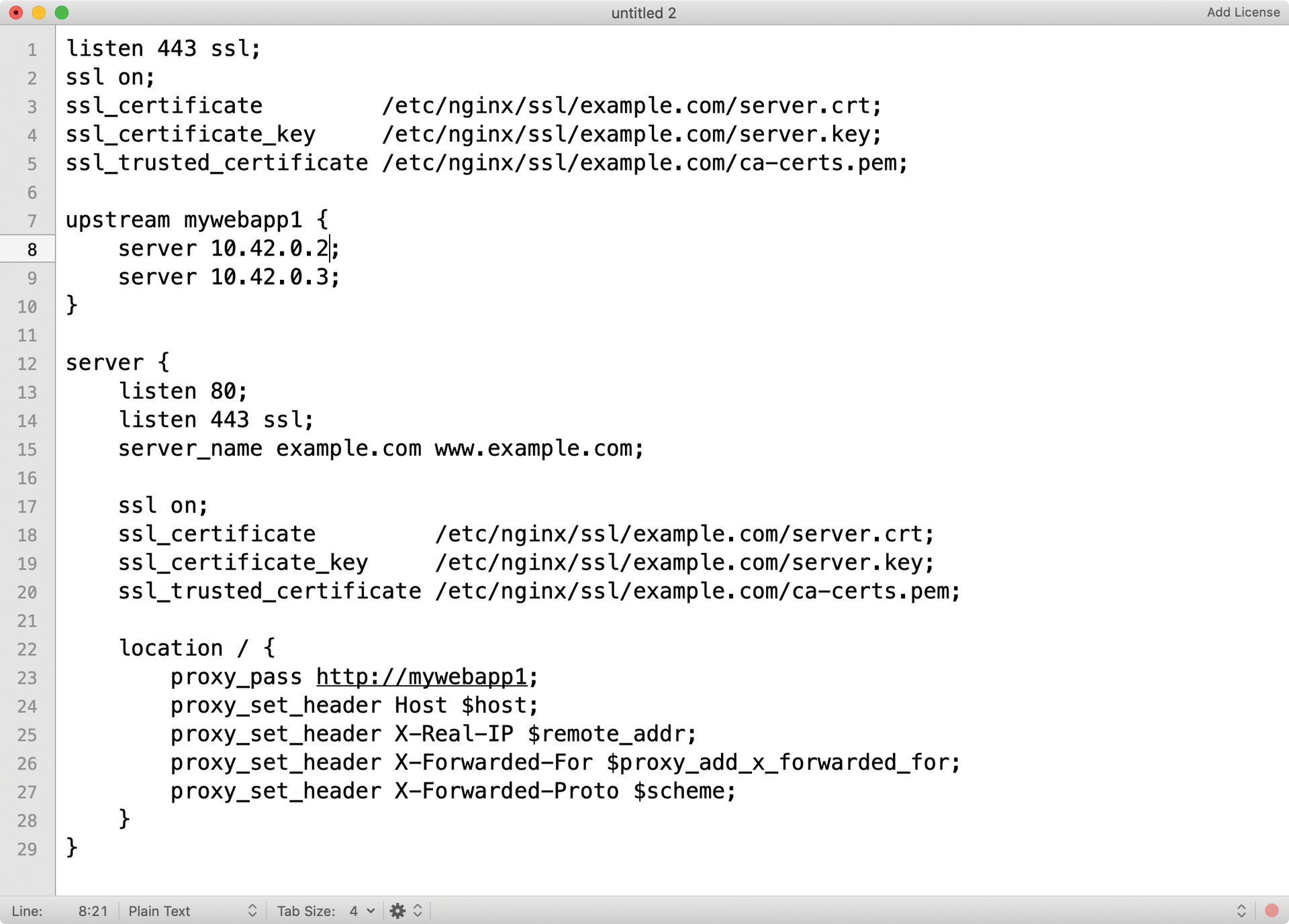

Thanks to the Upstream module (Figure 2), admins can simply define another hop in the route taken by incoming requests. If SSL is used, Nginx handles SSL termination without a problem. Like HAProxy, Nginx supports several operating modes for forwarding clients to the back-end servers. In addition to the typical Round Robin mode, Least Connections is available, which selects the back end with the fewest active connections. The IP Hash algorithm determines the target back end from a hash value calculated from the client's IP address.
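The three modes can be sketched in Python as follows. This is a simplified model, not Nginx's implementation: The back-end names are placeholders, and the hash function is an arbitrary choice. Note that Nginx's `ip_hash` uses only the first three octets of an IPv4 address as the key, whereas this sketch hashes the whole address.

```python
import hashlib
import itertools
from typing import Dict, List


class RoundRobin:
    def __init__(self, backends: List[str]) -> None:
        self._cycle = itertools.cycle(backends)

    def pick(self) -> str:
        # Hand out back ends strictly in turn
        return next(self._cycle)


class LeastConnections:
    def __init__(self, backends: List[str]) -> None:
        self.active: Dict[str, int] = {b: 0 for b in backends}

    def pick(self) -> str:
        # Back end with the fewest open connections; ties go to the first listed
        backend = min(self.active, key=lambda b: self.active[b])
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        # Call when a connection to `backend` closes
        self.active[backend] -= 1


def ip_hash(client_ip: str, backends: List[str]) -> str:
    # Same client address -> same back end, as long as the list does not change
    digest = hashlib.sha256(client_ip.encode()).digest()
    return backends[int.from_bytes(digest[:4], "big") % len(backends)]


rr = RoundRobin(["web1", "web2", "web3"])
print([rr.pick() for _ in range(4)])  # → ['web1', 'web2', 'web3', 'web1']
```

Round Robin needs no state about the back ends at all, Least Connections needs to hear about every closed connection, and IP Hash needs no bookkeeping but ties each client to a fixed back end.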

The commercial version, Nginx Plus, additionally offers a Least Time mode, in which the back end that currently has the lowest latency from Nginx's point of view receives the connection. Session persistence, on the other hand, is also available in the free version of Nginx. Whether the IP Hash algorithm or a random mode with persistent sessions is the better option depends in part on the setup and can ultimately only be determined by testing.
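A latency-based mode like Least Time can be approximated by tracking a moving average of response times per back end. The following Python sketch uses an exponentially weighted moving average (EWMA); the smoothing factor `alpha` and the back-end names are assumptions, and the actual Nginx Plus algorithm, which also takes active connections and server weights into account, is not reproduced here.

```python
from typing import Dict, List


class LeastTime:
    """Pick the back end with the lowest smoothed response time."""

    def __init__(self, backends: List[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight of the newest sample (0 < alpha <= 1)
        # Unmeasured back ends start at 0.0, so they are preferred until measured
        self.avg: Dict[str, float] = {b: 0.0 for b in backends}

    def pick(self) -> str:
        return min(self.avg, key=lambda b: self.avg[b])

    def observe(self, backend: str, seconds: float) -> None:
        # Exponentially weighted moving average: recent samples count more
        self.avg[backend] = (1 - self.alpha) * self.avg[backend] + self.alpha * seconds


lt = LeastTime(["web1", "web2"], alpha=0.5)
lt.observe("web1", 0.200)  # web1 average: 0.1
lt.observe("web2", 0.050)  # web2 average: 0.025
print(lt.pick())           # → web2
lt.observe("web2", 0.500)  # web2 average: about 0.26, now slower than web1
print(lt.pick())           # → web1
```

A larger `alpha` reacts faster to a back end slowing down but also makes the choice jumpier.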

Nginx admins also can solve the HA problem described at the beginning in a very elegant way by buying the product's commercial Nginx Plus distribution, because it has a built-in HA mode and can be run in active-passive mode or even active-active mode. Potentially unwelcome tools like Pacemaker can be left out in this scenario.

Robust Solution: Seesaw

Not surprisingly, Google, one of the most active companies in the IT environment, has its own opinion on the subject of load balancing. The company is heavily involved in the development of a load balancer, even if it is not officially a Google product. The name of this program is Seesaw [3], which describes the core aspect of a load balancer quite well.

Seesaw is based on the Linux Virtual Server (LVS) functions and is therefore similar to Keepalived in terms of functionality. It is aimed exclusively at OSI Layer 4, which makes it the first load balancer in this test that explicitly offers no special functions for HTTP. However, the World Wide Web protocol was probably not the focus of the Google developers when they started working on Seesaw a few years ago, which is clear from the basic operating concept of the solution.

Hacks that use Pacemaker and the like to achieve high availability do not exist in Seesaw. Instead, it always needs to be operated as a cluster of at least two instances that communicate with each other. In this way, Seesaw practically avoids the HA problem. On the Seesaw level, the admin configures the virtual IPs (VIPs) to be published to the outside world, as well as the IPs of the internal target systems.

Because Seesaw supports Anycast and load balancing for Anycast, the setup can become a little more complex. If you bind the Quagga Border Gateway Protocol (BGP) daemon to Seesaw, it will announce Anycast VIPs as soon as you enable them on one of the Seesaw systems. Anycast load balancing is something that Nginx and HAProxy only support to a limited extent, so this is where Seesaw provides innovative features.

In exchange, however, Seesaw has strict requirements: at least two network ports per machine on the same Layer 2 network. One is used for the system's own IP, and the other announces the VIPs for running services. However, the developers note that Seesaw can easily be run as a group of multiple virtual machines, which takes some of the stress out of the setup.

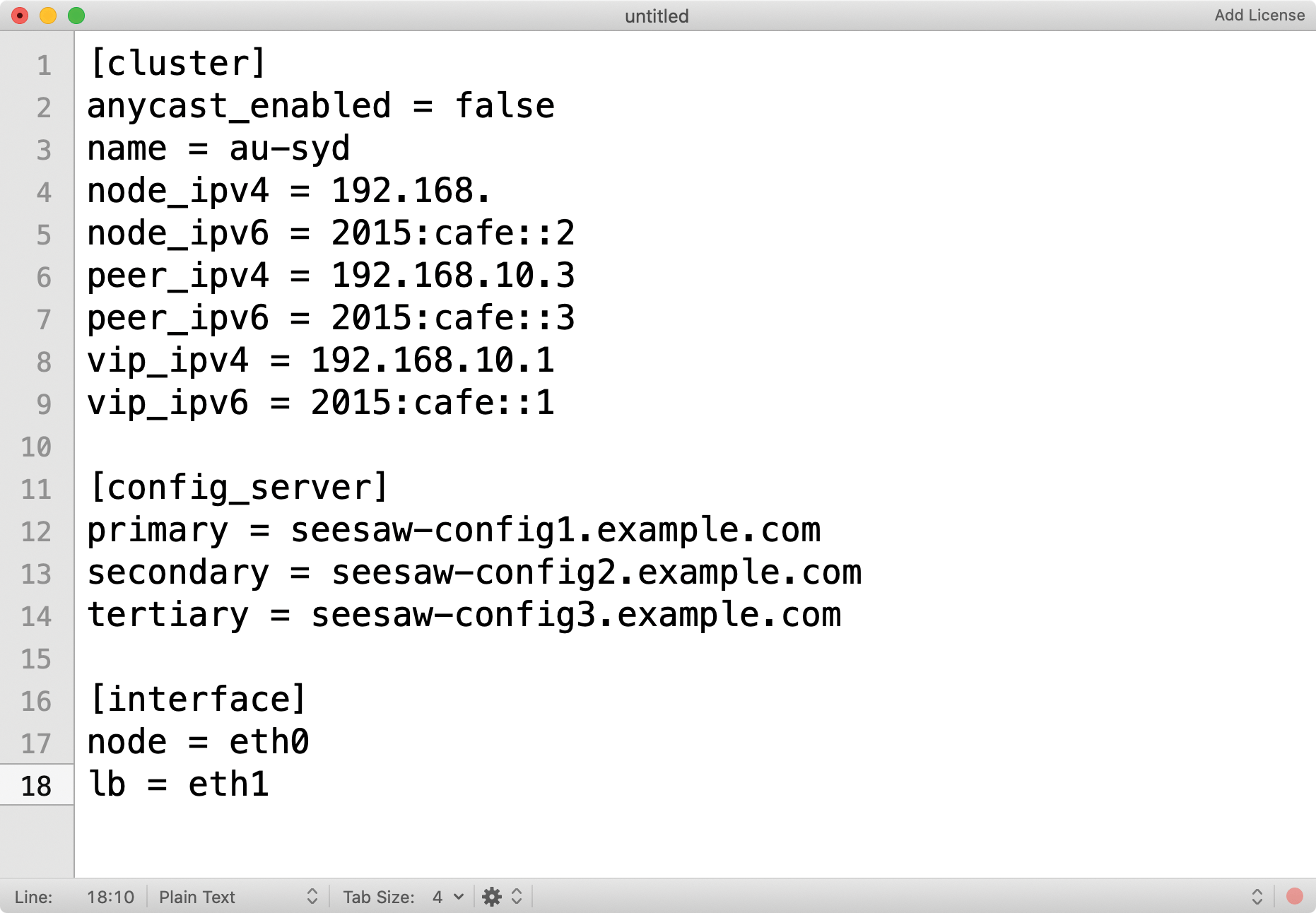

Written in Go, Seesaw ideally should not cause too much grief after the initial setup. The clearly laid out configuration file uses the INI format and usually comprises no more than 20 lines, although it only plays a minor role in the Seesaw context anyway. It was important to Google that the service could be managed easily from a central point. Seesaw therefore also includes a config server service, where the admin dynamically configures the back-end servers and which then passes the information on to Seesaw (Figure 3).

This setup might sound a bit like overkill at first, and it only pays off to a limited extent if you are running a single load balancer pair. However, if you think in Google terms and are confronted with quite a few Seesaw instances, you will quickly understand the elegance behind this approach, all the more so because Seesaw keeps its configuration simple and does not try to replicate one of the common automation tools. If you are looking for a versatile load balancer for OSI Layer 4, you might want to take a closer look at Seesaw – it's worth it.

Commercial: Zevenet

The last candidate in the comparison is Zevenet [4], which proves that load balancers can be of commercial origin without being appliances. If you want, you can also get the service with hardware from Zevenet, but it is not mandatory. Zevenet also describes itself as cloud ready, and the manufacturer even delivers its product as a ready-to-use appliance for operation in various clouds.

In terms of content, Zevenet is not that spectacular. It is a standard load balancer, with community and professional versions available. The community version has basic balancing support for OSI Layers 4 and 7; the focus in Layer 7 is on HTTP. However, the strategy behind this is clearly do-it-yourself; support from the manufacturer is only available in the form of best-effort requests in their forum.

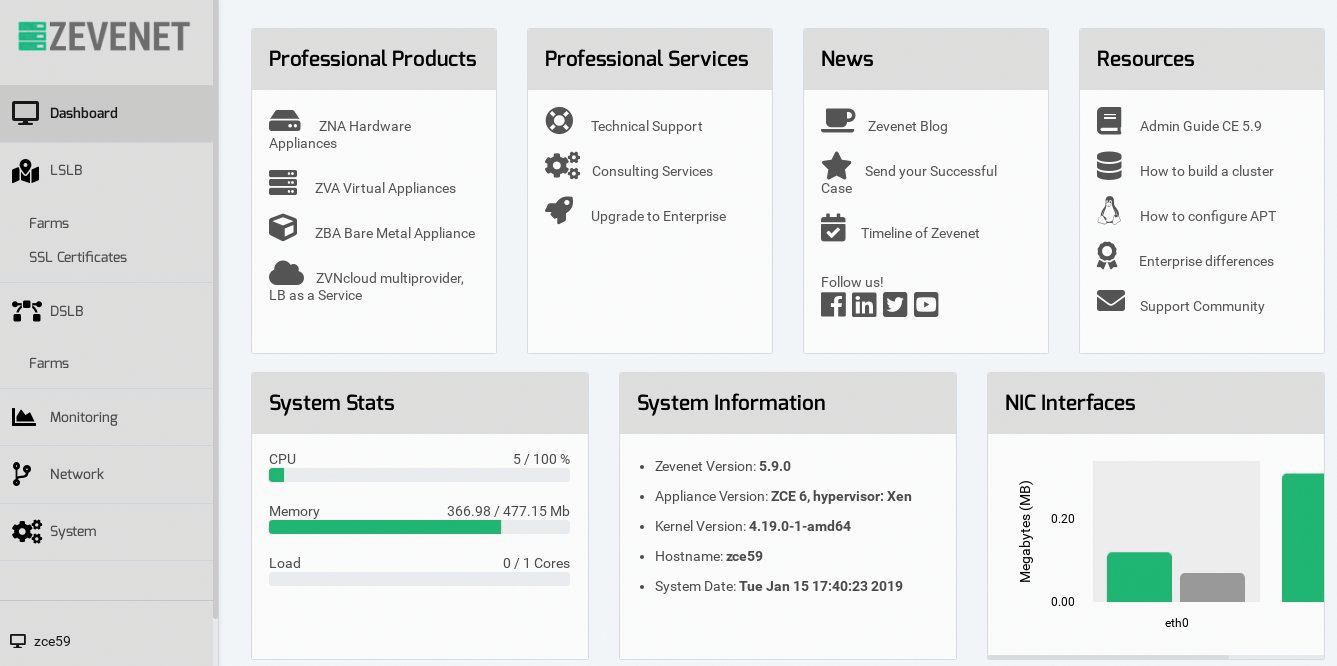

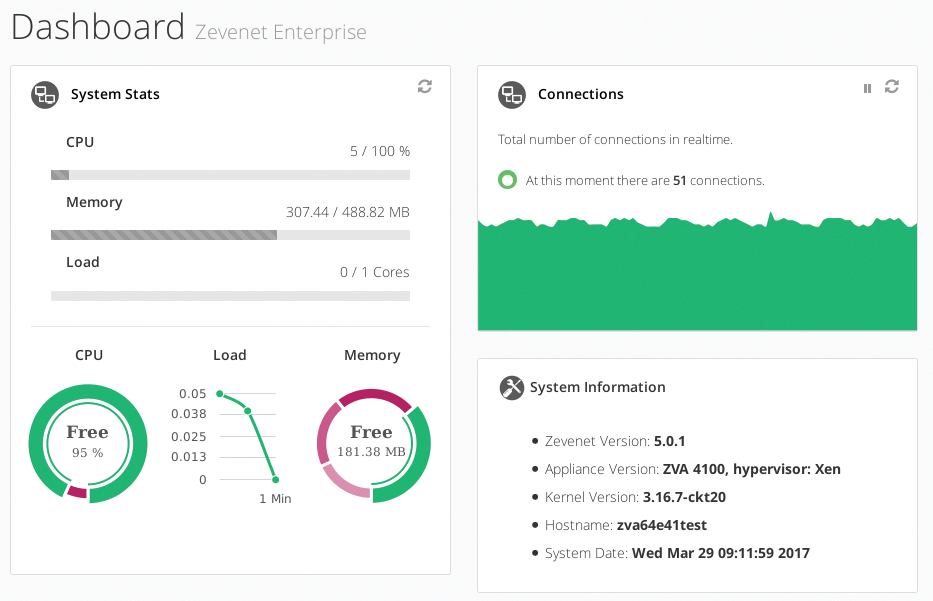

The Enterprise Edition from Zevenet is far more fun. Like Nginx Plus and Seesaw, it offers built-in high availability for cluster operation, delivers its own monitoring messages over SNMP, and offers its own API for access. Unlike the tools presented so far, Zevenet Enterprise also has an extended GUI that admins can use to configure Zevenet.

A basic version of the GUI is also available for the Community Edition (Figure 4), but the Enterprise variant offers significantly more functions (Figure 5). What may be a thorn in the side of experienced admins can save the day in meetings with management. In the GUI, Zevenet can be connected to an external user administration tool or, alternatively, provide its own, complete with an audit trail. Permissions to change the load balancer configuration can therefore be defined in Zevenet at the service level.

The Layer 4 and 7 capabilities of the Community version are significantly expanded in the Enterprise environment. For example, different log types can be monitored and logged separately, with the ability to specify the destination for the log messages. On OSI Layer 7 for HTTP, Zevenet offers features such as support for SSL wildcard certificates, cookie injection, and support for OpenSSL 1.1.

Not all of these features are equally relevant for all use cases, but all told, Zevenet's range of functions is impressive. Attention to detail can be seen in many places. For example, Zevenet transfers connections from one node of a cluster to another without losing state. Zevenet takes security seriously by integrating various external DNS-based blacklist (DNSBL) or real-time blackhole list (RBL) services, as well as services for DDoS prevention and the ability to deny access to arbitrary clients when needed. At this point, Zevenet leaves the sphere of load balancing and becomes something more like a small firewall.
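DNSBL lookups piggyback on ordinary DNS: The client's IPv4 octets are reversed, the blacklist's zone is appended, and an A record in the answer (conventionally an address in 127.0.0.0/8) means the address is listed. Here is a minimal Python sketch, assuming a hypothetical zone `dnsbl.example.org` and IPv4 only; how Zevenet itself performs these lookups is not shown here.

```python
import ipaddress
import socket
from typing import Callable, Optional


def dnsbl_query_name(client_ip: str, zone: str) -> str:
    # IPv4 only: 192.0.2.99 -> 99.2.0.192.<zone>
    addr = ipaddress.IPv4Address(client_ip)
    return ".".join(reversed(str(addr).split("."))) + "." + zone


def _system_resolve(name: str) -> Optional[str]:
    try:
        return socket.gethostbyname(name)
    except socket.gaierror:
        return None  # NXDOMAIN or lookup failure: treated as "not listed"


def is_listed(client_ip: str, zone: str,
              resolve: Callable[[str], Optional[str]] = _system_resolve) -> bool:
    answer = resolve(dnsbl_query_name(client_ip, zone))
    if answer is None:
        return False
    # Listed entries conventionally resolve to an address in 127.0.0.0/8
    return ipaddress.IPv4Address(answer) in ipaddress.IPv4Network("127.0.0.0/8")


print(dnsbl_query_name("192.0.2.99", "dnsbl.example.org"))
# → 99.2.0.192.dnsbl.example.org
```

Treating a failed lookup as "not listed" is a fail-open choice that keeps clients flowing if the blacklist service is unreachable; a stricter setup might decide otherwise.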

Load Balancing for Containers

What all the load balancers presented so far have in common is that admins often run them on separate hardware and integrate them into an HA setup, which is of great relevance for classic applications, conventional web server environments, and other standard software. However, this approach does not apply to cloud-native computing, because in this case, everything is software-defined to the extent possible and is managed by the users themselves. The centralized load balancer that handles forwarding for a website or several installations does not exist in this scenario.

Instead, the admin has Kubernetes (or one of the distributions based on it), which rolls out the app in pods that the container orchestrator distributes arbitrarily across physical systems. The network is purely virtual and cannot be tied to a specific host, so the typical load balancer architecture no longer works. If a setup requires load balancing (e.g., to allow various components of a microservice application to communicate with each other in a distributed manner), the legacy approach fails.

Don't Do It Yourself

As a developer or administrator, you could create a container with a load balancer yourself and roll it out as part of the pod design. Technically, nothing prevents an appropriately preconfigured Nginx from acting reliably as a balancer. However, setups that run in container orchestrators are usually very dynamic: The number of potential source and target servers on both sides changes regularly, and classic software load balancers do not cope with that well.
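One concrete consequence shows up with hash-based stickiness such as the IP Hash mode described earlier, assuming a simple hash-modulo-N mapping: Whenever the number of back ends changes, most clients are remapped to a different back end. The Python sketch below measures this for a pool growing from three to four targets, using synthetic client addresses.

```python
import hashlib
from typing import List


def bucket(client_ip: str, n_backends: int) -> int:
    # Simple hash-modulo-N mapping of a client to one of n back ends
    digest = hashlib.sha256(client_ip.encode()).digest()
    return int.from_bytes(digest[:8], "big") % n_backends


def moved_fraction(clients: List[str], before: int, after: int) -> float:
    # Share of clients whose back end changes when the pool is resized
    moved = sum(bucket(c, before) != bucket(c, after) for c in clients)
    return moved / len(clients)


# 10,000 synthetic client addresses
clients = [f"10.0.{i // 256}.{i % 256}" for i in range(10_000)]
print(f"{moved_fraction(clients, 3, 4):.0%} of clients change back end")  # roughly 75%
```

With a modulo mapping, a client keeps its back end only if its hash leaves the same remainder for both pool sizes, which is true for 3 of every 12 residues when going from three to four back ends, so about three quarters of all clients move. In a pod landscape where targets come and go constantly, that churn repeats with every change.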

Sidecar Solution

Fortunately, developers have already come up with a solution to this problem: software-based load balancers designed specifically for such container architectures. Istio [5] is something of a shooting star in this software segment. First of all, the software implements plain vanilla load balancing with integrated termination for SSL connections. If desired, Istio can also create Let's Encrypt SSL certificates with an ACME script.

For this purpose, Istio provides the containers in pods with sidecars, which act as a kind of proxy for the individual containers. In addition to simple balancing, Istio implements security rules and can make load balancing dependent on various dynamic parameters (e.g., on the load to which the individual services on the back end are currently exposed). Moreover, Istio comes preconfigured as a container and can be integrated with Kubernetes without too much trouble.
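Load-dependent balancing in a sidecar does not require a global view of the cluster. Istio's sidecars are Envoy proxies, and for its least-request mode with equal weights, Envoy's documentation describes a "power of two choices" approach: Pick two hosts at random and send the request to the one with fewer active requests. Here is a minimal Python sketch of that idea, with hypothetical pod names and load figures; it is not Envoy's code.

```python
import random
from collections import Counter
from typing import Dict, Optional


def p2c_pick(active: Dict[str, int], rng: Optional[random.Random] = None) -> str:
    """Power of two choices: sample two distinct hosts, take the one with fewer active requests."""
    if rng is None:
        rng = random.Random()
    hosts = list(active)
    if len(hosts) == 1:
        return hosts[0]
    a, b = rng.sample(hosts, 2)
    return a if active[a] <= active[b] else b


# Hypothetical pods and their current number of in-flight requests
load = {"pod-a": 5, "pod-b": 0, "pod-c": 9}
rng = random.Random(42)
print(Counter(p2c_pick(load, rng) for _ in range(1000)))
# pod-c, the busiest pod, never wins a pairwise comparison and is never picked
```

The usual argument for this design is that sampling just two candidates keeps each decision cheap while avoiding the herding effect that occurs when many proxies all send traffic to the single least loaded host based on the same, slightly stale information.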

For applications that follow microservice principles, solutions like Istio that are designed specifically for this purpose are the better approach. They should always be given preference over homegrown, statically rolled out load balancer containers, because they offer a significantly larger feature set and are usually backed by a large community, which takes a lot of work off the admin's shoulders.

Conclusions

Load balancers come as ready-to-use appliances or as software for Linux servers, and a number of capable, feature-rich software load balancers are available for practically any environment. HAProxy, Nginx, Seesaw, and Zevenet all have very different backgrounds and therefore focus on slightly different scenarios. However, they do their job so well that you can deploy any of the programs discussed in this article in standard use cases without hesitation. At the end of the day, your personal preferences are also a factor to take into consideration.