Automate CentOS and RHEL installation with PXE

Pushbutton

Many setups rely on extensive frameworks such as Puppet, Chef, Ansible, and Salt for automation and configuration management. These frameworks clearly define what happens once a system is installed and reachable over SSH, but how do you get a computer into that state in the first place? Several tool suites tackle bare metal deployment in different ways. In these suites, installing the operating system is just one part of a larger package of tasks that the software handles as if by magic.

Not everyone wants the overhead of such solutions, but that doesn't mean you have to sacrifice convenience. Linux systems can usually be installed automatically with onboard tools, without a framework or a complex abstraction layer. In this article, I show how Red Hat Enterprise Linux (RHEL) and, by analogy, CentOS can be installed automatically with the standard tools of a Linux distribution and a preboot execution environment (PXE). I address every task in the deployment chain.

Initial State

To install other systems automatically, you first need a manually installed nucleus. Such systems are often referred to as bootstrap nodes or cluster workstations. Essentially, this nucleus provides the infrastructure that enables the automatic installation of RHEL or CentOS, and it requires fewer services than you might expect. All you really need is working name resolution, DHCP, trivial FTP (TFTP), and an HTTP server that provides the required files. If desired, you can add Chrony or ntpd so that the freshly rolled out servers have the correct time.

In this article, I assume that all services for the automatic installation framework run on a single system. Ideally, this is a virtual machine (VM) that runs on a high-availability cluster for redundancy. On Linux, such a setup is easy to build with onboard tools: the Distributed Replicated Block Device (DRBD) and Pacemaker, both of which are available for CentOS. DRBD and Pacemaker are discussed elsewhere [1] [2], and high availability is not strictly necessary anyway, so I leave this part of the setup out here. If you can live with the fact that machines cannot be deployed while the infrastructure VM is down, you do not need to worry about high availability at this point.

The bootstrap system that provides services for newly installed servers runs the latest CentOS release from the 8.x branch, but everything shown here works with RHEL as well.

Installing Basic Services

As the first step, install CentOS 8 and set it up to suit your requirements. Among other things, store an SSH key for your user account and add the account to the wheel group so that it can use sudo. Ansible also needs to be executable on the infrastructure node. Although the operating system of the bootstrap system itself cannot be installed automatically, nothing prevents you from using Ansible to roll out the most important services on this system for reproducibility.

The starting point, then, is a CentOS 8 system with a working network configuration, a user who can log in over SSH and use sudo, and Ansible, so that the ansible-playbook command can be executed. Ansible expects a specific structure in your working directory, so the next step is to create a folder named ansible/ in your home directory, with the roles/, group_vars/, and host_vars/ subfolders below it.

You also need to create an inventory for Ansible in an editor. To do so, create a file named hosts with the following content:

[infra]
<full_hostname_of_the_Ansible_system> ansible_host=<Primary_IP_of_the_system> ansible_user=<login_name_of_admin_user> ansible_ssh_extra_args='-o StrictHostKeyChecking=no'

The entire entry after [infra] must be one long line. You can check whether this works by calling

ansible -i hosts infra -m ping

If Ansible reports SUCCESS and answers with "pong", the connection works and everything is set up correctly.
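The directory layout and the inventory entry described above can also be created by a short script, which makes the setup easy to reproduce. The following is a minimal Python sketch. The function name is hypothetical, and the values you pass in are placeholders for your own hostname, IP address, and admin user.

```python
from pathlib import Path


def write_ansible_skeleton(base: Path, fqdn: str, ip: str, user: str) -> Path:
    """Create base/ with roles/, group_vars/, host_vars/ and an INI inventory 'hosts'."""
    for sub in ("roles", "group_vars", "host_vars"):
        (base / sub).mkdir(parents=True, exist_ok=True)

    # The group header stands on its own line; the host entry with all of
    # its connection variables must be one single line below it.
    entry = (
        f"{fqdn} ansible_host={ip} ansible_user={user} "
        "ansible_ssh_extra_args='-o StrictHostKeyChecking=no'"
    )
    hosts = base / "hosts"
    hosts.write_text(f"[infra]\n{entry}\n", encoding="utf-8")
    return hosts
```

A call such as write_ansible_skeleton(Path.home() / "ansible", "infrastructure.cloud.internal", "10.42.0.12", "admin") (with admin as a hypothetical user name) produces the same result as the manual steps. Afterward, run the ping test from inside ~/ansible.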

Configure DHCP and TFTP

Your next step is to get the required services running on the infrastructure VM. DHCP, TFTP, and Nginx are all it takes to deliver the required files to requesting clients.

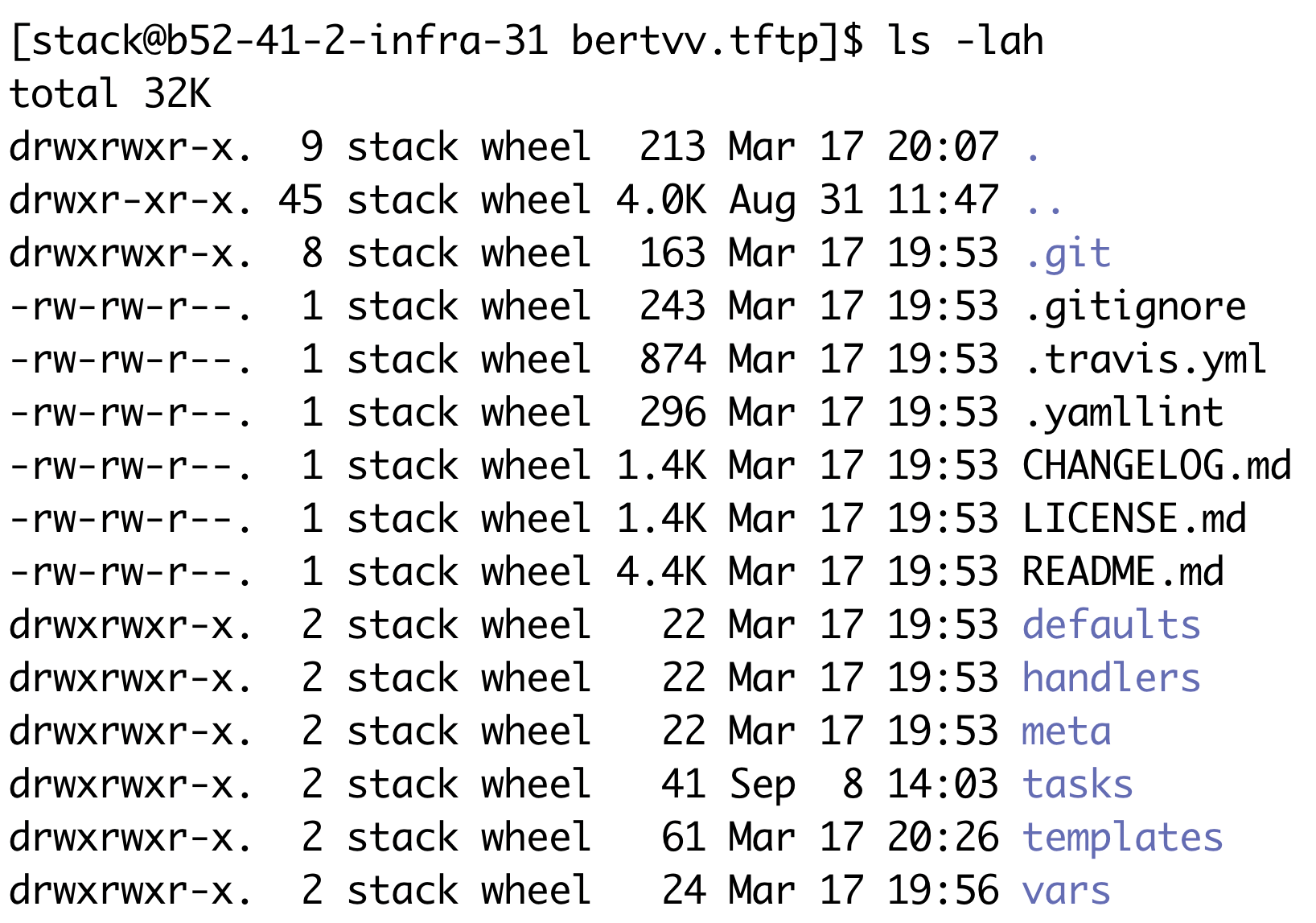

Of course, you can find ready-made Ansible roles for CentOS- and RHEL-based systems that set up DHCP and TFTP. I use two roles from Dutch developer Bert van Vreckem, because they work well and can be launched with very little effort (Figure 1). In the roles/ subfolder, clone the two Ansible roles with Git (install Git first with dnf -y install git, if necessary):

VM> cd roles
VM> git clone https://github.com/bertvv/ansible-role-tftp bertvv.tftp
VM> git clone https://github.com/bertvv/ansible-role-dhcp bertvv.dhcp

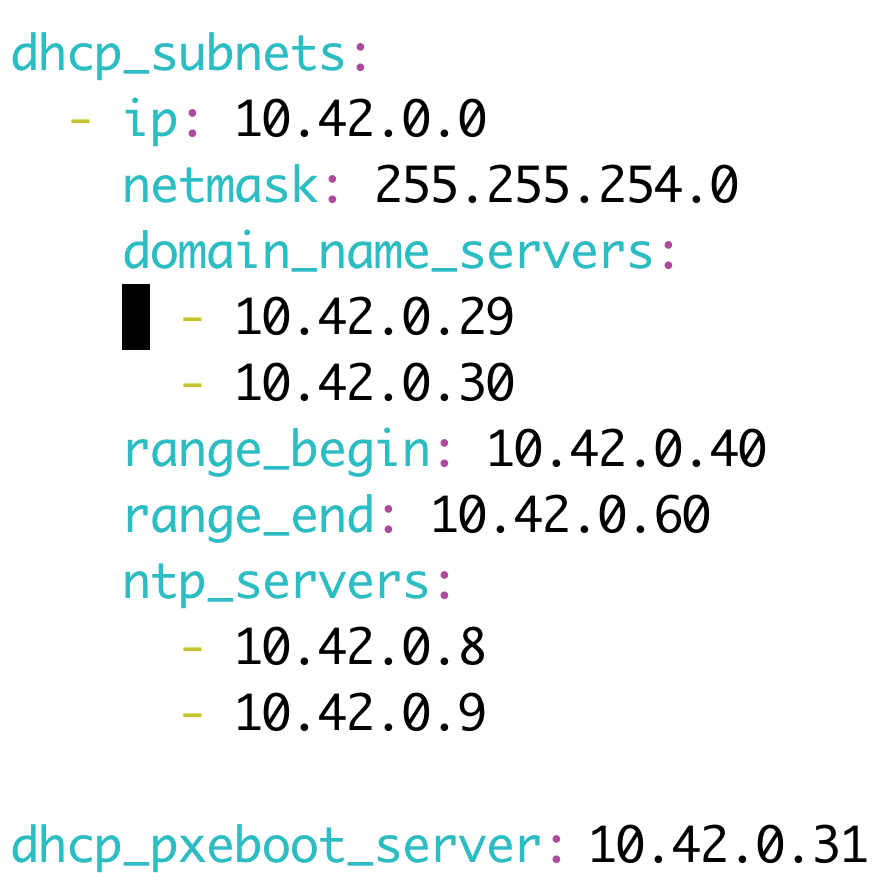

In the file host_vars/<Hostname_of_infrastructure_system>.yml, save the configuration of the DHCP server as shown in Listing 1. It is important to get the hostname exactly right, because it must match the name used in the inventory. If the full hostname of the system is infrastructure.cloud.internal, the file must be named infrastructure.cloud.internal.yml. Additionally, you need to adapt the subnet configured in the file, the global broadcast address, and the address of the PXE boot server to your local conditions.

Listing 1: Host Variables

dhcp_global_domain_name: cloud.internal
dhcp_global_broadcast_address: 10.42.0.255
dhcp_subnets:
  - ip: 10.42.0.0
    netmask: 255.255.255.0
    domain_name_servers:
      - 10.42.0.10
      - 10.42.0.11
    range_begin: 10.42.0.200
    range_end: 10.42.0.254
    ntp_servers:
      - 10.42.0.10
      - 10.42.0.11
dhcp_pxeboot_server: 10.42.0.12

The configured subnet must lie within the network in which the infrastructure VM itself is located. When configuring the range, make sure that the addresses the DHCP server assigns to requesting clients do not collide with IPs that are already in use locally. It is also important that dhcp_pxeboot_server contains the IP address of the infrastructure VM (Figure 2); otherwise, downloading the required files over TFTP will fail later.
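Before you run the playbook, you might want to check these values mechanically. The following minimal Python sketch uses the standard ipaddress module to run the checks just described: the broadcast address must fit the subnet, the dynamic range and PXE boot server must lie inside it, and the range must not contain addresses that are already in use. The function name and the list of addresses in use are hypothetical inputs that you would fill from your own network documentation.

```python
import ipaddress
from typing import Iterable, List


def check_dhcp_settings(subnet: str, netmask: str, range_begin: str, range_end: str,
                        broadcast: str, pxeboot_server: str,
                        in_use: Iterable[str] = ()) -> List[str]:
    """Return the problems found in the DHCP host variables (empty list if OK)."""
    net = ipaddress.IPv4Network(f"{subnet}/{netmask}")
    begin = ipaddress.IPv4Address(range_begin)
    end = ipaddress.IPv4Address(range_end)
    problems = []

    if net.broadcast_address != ipaddress.IPv4Address(broadcast):
        problems.append(f"broadcast should be {net.broadcast_address}")
    if begin not in net or end not in net:
        problems.append("range lies outside the subnet")
    elif begin > end:
        problems.append("range_begin is greater than range_end")
    elif begin == net.network_address or end == net.broadcast_address:
        problems.append("range includes the network or broadcast address")
    if ipaddress.IPv4Address(pxeboot_server) not in net:
        problems.append("PXE boot server is not in the subnet")

    # Addresses already used locally must not be handed out dynamically.
    for addr in map(ipaddress.IPv4Address, in_use):
        if begin <= addr <= end:
            problems.append(f"{addr} collides with the dynamic range")
    return problems
```

With the values from Listing 1 and the two name servers passed as in_use, the function returns an empty list.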

TFTP is far less demanding than DHCP in terms of configuration. Bert van Vreckem's role comes with sensible defaults for CentOS and sets up the TFTP server so that its root directory resides in /var/lib/tftpboot/, in line with the usual Linux convention.

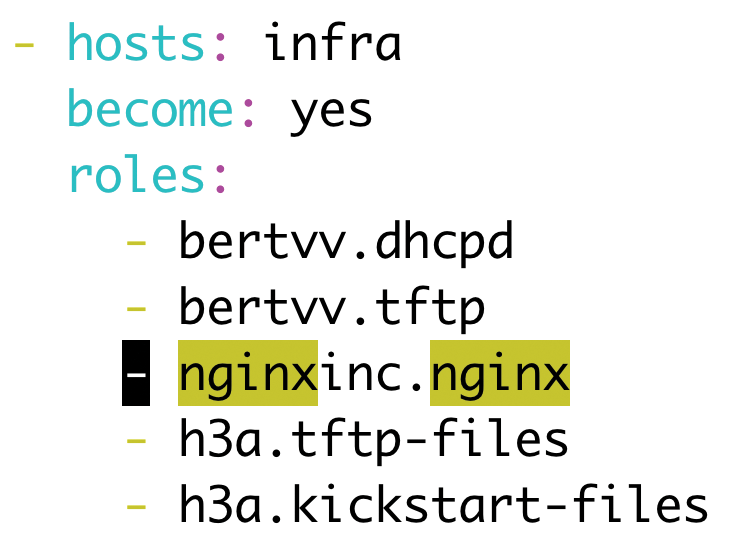

As soon as both roles are available locally and you have stored a DHCP configuration in the host variables, create an Ansible playbook that applies both roles to the infrastructure host. In the example, the playbook is named infra.yml and reads:

- hosts: infra
  become: yes
  roles:
    - bertvv.dhcp
    - bertvv.tftp

For every further role added in this article, simply add a new line with the role's name to the roles list. The role's name must match the name of its folder in the roles/ directory (Figure 3).

DHCP and TFTP Rollout

Now it's time to get down to business. Ansible is now prepared to roll out the TFTP and DHCP servers. Trigger the rollout with the command:

ansible-playbook -i hosts infra.yml

The individual steps of the two roles then flash by on your screen.

If you are wondering why I do not go into more detail about configuring the firewall (i.e., firewalld), it is because the chosen roles handle this automatically and open the required ports for their respective services. Using Ansible and prebuilt roles definitely saves you some work.

Move Nginx into Position

TFTP cannot deliver all of the files needed for the automated RHEL or CentOS installation to the installer, because the protocol was not designed for that. You need to complement your TFTP service with an Nginx server that hosts, for example, the Kickstart files for Anaconda.

A prebuilt Ansible role for this job, maintained by the Nginx developers themselves (NGINX, Inc.), works wonderfully on CentOS systems. Just clone the role into the roles/ folder:

$ cd roles
$ git clone https://github.com/nginxinc/ansible-role-nginx nginxinc.nginx

After that, open the host_vars/<Hostname_of_infrastructure_system>.yml file again and add the configuration for Nginx (Listing 2). The value of server_name needs to match the full hostname of the infrastructure system.

Listing 2: Nginx Configuration

nginx_http_template_enable: true
nginx_http_template:
  default:
    template_file: http/default.conf.j2
    conf_file_name: default.conf
    conf_file_location: /etc/nginx/conf.d/
    servers:
      server1:
        listen:
          listen_localhost:
            port: 80
        server_name: infrastructure.cloud.internal
        autoindex: true
        web_server:
          locations:
            default:
              location: /
              html_file_location: /srv/data
              autoindex: true
          http_demo_conf: false

Now add the nginxinc.nginx role you cloned to the infra.yml playbook and run the ansible-playbook command again. Most important here is that the /srv/data/ directory exists on the infrastructure node, whether you create it manually or through the playbook. The folder needs to belong to the nginx user and the nginx group so that Nginx can access it.
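If you prefer to script this step, the following minimal Python sketch is the equivalent of mkdir -p plus chown. In a playbook, Ansible's file module with state: directory, owner, group, and mode would do the same. The sketch assumes that it runs as root on the infrastructure node. It also creates the kickstart/ subfolder that will later hold the Kickstart files. Passing owner=None and group=None skips the ownership change, for example, during a test run.

```python
import os
import shutil
from pathlib import Path
from typing import Optional


def prepare_docroot(root: Path, owner: Optional[str] = "nginx",
                    group: Optional[str] = "nginx") -> Path:
    """Create the Nginx document root (e.g., /srv/data) and its kickstart/ subfolder."""
    kickstart = root / "kickstart"
    kickstart.mkdir(parents=True, exist_ok=True)
    for path in (root, kickstart):
        # rwxr-xr-x: Nginx needs read and execute (traverse) rights.
        os.chmod(path, 0o755)
        if owner is not None or group is not None:
            # Changing ownership normally requires root privileges.
            shutil.chown(path, user=owner, group=group)
    return kickstart
```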

Enabling PXE Boot

A specific sequence of steps enables PXE booting of the systems. Here, I assume that the Unified Extensible Firmware Interface (UEFI) with Secure Boot is enabled on the target systems.

The boot process will later proceed as follows: You configure the system for a network or PXE boot, either through the Intelligent Platform Management Interface (IPMI) or manually. The system then sends a DHCP request, and the DHCP server's response also contains the file name of the bootloader. The PXE firmware of the network card retrieves this file over TFTP and executes the bootloader once it has been loaded. The bootloader then loads its configuration file.

The bootloader configuration can be varied depending on the requesting MAC address, which is very important for the configuration of the finished system. First, however, you need to set up the basics of the whole process. Conveniently, the chosen DHCP role has already configured the DHCP server to always deliver pxelinux/shimx64.efi as the bootloader file name to UEFI systems.
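The decision the DHCP server makes at this point can be sketched in a few lines of Python. This sketch illustrates the principle only. It is not the code or configuration of the bertvv.dhcp role. It assumes that the server identifies UEFI clients by the system architecture code that PXE firmware sends in DHCP option 93 (RFC 4578). Legacy BIOS clients are deliberately left unanswered, because this article covers only UEFI.

```python
from typing import Optional

# DHCP option 93 carries the client's system architecture (RFC 4578).
# The IANA registry lists 7 as x64 UEFI; many configurations also accept 9,
# because the original RFC 4578 table listed EFI x86-64 under that value.
X64_UEFI_ARCHES = {7, 9}
UEFI_BOOTLOADER = "pxelinux/shimx64.efi"


def parse_client_arch(option_93: bytes) -> int:
    """Decode option 93: a list of 16-bit big-endian codes; use the first one."""
    if len(option_93) < 2 or len(option_93) % 2:
        raise ValueError("option 93 must contain whole 16-bit values")
    return int.from_bytes(option_93[:2], "big")


def boot_filename(client_arch: int) -> Optional[str]:
    """Return the bootloader to offer, or None for clients this setup ignores."""
    if client_arch in X64_UEFI_ARCHES:
        return UEFI_BOOTLOADER
    return None  # e.g., legacy BIOS (0), not covered here
```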

In the first step, create the pxelinux/ subdirectory under /var/lib/tftpboot/, and then create the centos8/ and pxelinux.cfg/ subdirectories below it. Next, copy the /boot/efi/EFI/centos/shimx64.efi file to the pxelinux/ folder. If the file does not exist, install the shim-x64 package first.

The pxelinux/ folder also needs to contain the grubx64.efi file, which you will find online [3]. While you are on the CentOS mirror, you can also download the two files in the pxeboot directory (initrd.img and vmlinuz) [4] and store them in pxelinux/centos8/.
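The resulting TFTP tree can also be set up by a small script. The following minimal Python sketch creates the directories and copies the four boot files to the places the text and the GRUB configuration expect. The function name is hypothetical, and the sketch assumes that you have already downloaded grubx64.efi, vmlinuz, and initrd.img to a location of your choice.

```python
import shutil
from pathlib import Path


def populate_tftp_root(tftp_root: Path, shim: Path, grub: Path,
                       vmlinuz: Path, initrd: Path) -> Path:
    """Create pxelinux/ with centos8/ and pxelinux.cfg/ and copy the boot files."""
    pxe = tftp_root / "pxelinux"
    (pxe / "centos8").mkdir(parents=True, exist_ok=True)
    (pxe / "pxelinux.cfg").mkdir(exist_ok=True)

    # Shim and GRUB go directly into pxelinux/ (the DHCP role hands out
    # pxelinux/shimx64.efi as the bootloader file name).
    shutil.copy2(shim, pxe / "shimx64.efi")
    shutil.copy2(grub, pxe / "grubx64.efi")

    # Installer kernel and initramfs, as referenced in grub.cfg.
    shutil.copy2(vmlinuz, pxe / "centos8" / "vmlinuz")
    shutil.copy2(initrd, pxe / "centos8" / "initrd.img")
    return pxe
```

On the infrastructure VM, you would call the function with Path("/var/lib/tftpboot") as the TFTP root and Path("/boot/efi/EFI/centos/shimx64.efi") as the shim source.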

Still missing is a configuration file for GRUB that tells it which kernel to use. In the pxelinux/ folder, store a grub.cfg file that follows the pattern shown in Listing 3.

Listing 3: Generic GRUB Config

set default="0"
function load_video {
  insmod efi_gop
  insmod efi_uga
  insmod video_bochs
  insmod video_cirrus
  insmod all_video
}
load_video
set gfxpayload=keep
insmod gzio
insmod part_gpt
insmod ext2
set timeout=10
menuentry 'Install CentOS 8' --class centos --class gnu-linux --class gnu --class os {
  linuxefi pxelinux/centos8/vmlinuz devfs=nomount method=http://mirror.centos.org/centos/8/BaseOS/x86_64/os inst.ks=http://172.23.48.31/kickstart/ks.cfg
  initrdefi pxelinux/centos8/initrd.img
}

If you were to run a server through the PXE boot process, it would get a bootloader, a kernel, and an initramfs. However, the Kickstart file (Listing 4) is still missing.

Listing 4: Sample Kickstart Config

01 ignoredisk --only-use=sda
02 # Use text install
03 text
04 # Keyboard layouts
05 keyboard --vckeymap=at-nodeadkeys --xlayouts='de (nodeadkeys)','us'
06 # System language
07 lang en_US.UTF-8
08 # Network information
09 network --device=bond0 --bondslaves=ens1f0,ens1f1 --bondopts=mode=802.3ad,miimon=100 --bootproto=dhcp --activate
10 network --hostname=server.cloud.internal
11 network --nameserver=10.42.0.10,10.42.0.11
12 # Root password
13 rootpw --iscrypted <Password>
14 # Run the Setup Agent on first boot
15 firstboot --enable
16 # Do not configure the X Window System
17 skipx
18 # System timezone
19 timezone Europe/Vienna --isUtc --ntpservers 172.23.48.8,172.23.48.9
20 # user setup
21 user --name=example-user --password=<Password> --iscrypted --gecos="example-user"
22 # Disk partitioning information
23 zerombr
24 bootloader --location=mbr --boot-drive=sda --driveorder=sda
25 clearpart --all --initlabel --drives=sda
26 part pv.470 --fstype="lvmpv" --ondisk=sda --size 1 --grow
27 part /boot --size 512 --asprimary --fstype=ext4 --ondisk=sda
28 volgroup cl --pesize=4096 pv.470
29 logvol /var --fstype="xfs" --size=10240 --name=var --vgname=cl
30 logvol / --fstype="xfs" --size=10240 --name=root --vgname=cl
31 logvol swap --fstype="swap" --size=4096 --name=swap --vgname=cl
32 reboot
33
34 %packages
35 @^server-product-environment
36 kexec-tools
37 %end
38
39 %addon com_redhat_kdump --enable --reserve-mb='auto'
40 %end
41
42 %anaconda
43 pwpolicy root --minlen=6 --minquality=1 --notstrict --nochanges --notempty
44 pwpolicy user --minlen=6 --minquality=1 --notstrict --nochanges --emptyok
45 pwpolicy luks --minlen=6 --minquality=1 --notstrict --nochanges --notempty
46 %end

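If you want to know which format the passwords in the user (line 21) and rootpw (line 13) lines must have, you can find the corresponding information online [5]. Save the file as ks.cfg in the /srv/data/kickstart/ directory on the infrastructure host. Then make sure that Nginx can read it (e.g., mode 0644). Because the target systems boot with UEFI, Anaconda also expects an EFI system partition mounted at /boot/efi. If the installer complains about a missing bootloader target, add a part /boot/efi --fstype=efi line or the reqpart directive to the partitioning section.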
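Before booting the first server, you might want to confirm that Nginx actually delivers the file under the URL given in inst.ks. The following minimal Python sketch fetches the file over HTTP and lists any expected directives it cannot find. The function names and the set of required directives are assumptions, so adjust the tuple to whatever your templates must always contain.

```python
import urllib.request
from typing import Iterable, List

# Directives this example expects in every Kickstart file (an assumption).
REQUIRED_DIRECTIVES = ("rootpw", "bootloader", "%packages")


def fetch_kickstart(url: str, timeout: float = 5.0) -> str:
    """Fetch a Kickstart file over plain HTTP, as Anaconda does with inst.ks."""
    # urlopen raises urllib.error.HTTPError on 403/404, e.g., when Nginx
    # cannot read the file or it sits in the wrong directory.
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def missing_directives(text: str,
                       required: Iterable[str] = REQUIRED_DIRECTIVES) -> List[str]:
    """List required directives that start no non-comment line of the file."""
    seen = set()
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            seen.add(line.split()[0])
    return [d for d in required if d not in seen]
```

For example, missing_directives(fetch_kickstart("http://172.23.48.31/kickstart/ks.cfg")) should return an empty list for the file from Listing 4, saved without line numbers.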

After completing this step, you will be able to PXE boot a 64-bit system with UEFI and Secure Boot enabled. For now, however, there is a catch: The installed systems obtain their IP addresses over DHCP, and the generic Kickstart file assumes that all servers share the same hardware layout.

MAC Trick

You can approach this problem in several ways. Networking in particular is tricky, because there are several possible solutions. For example, you could place newly configured systems in their own VLAN at the switch level and have the Anaconda autoinstaller configure them accordingly. A separate DHCP server on the network could then assign suitable IP addresses in this VLAN.

Another option is to store a dedicated Kickstart configuration with the desired network settings for each host. A trick comes in handy in this case: When the system launches, the GRUB bootloader does not look for grub.cfg first, but for a file named grub.cfg-01-<MAC_for_requesting_NIC> (e.g., grub.cfg-01-0c-42-a1-06-ab-ef). In this host-specific file, the inst.ks parameter defines which Kickstart file the client retrieves after the installer starts.

In the example here, the GRUB configuration could contain the parameter:

inst.ks=http://172.23.48.31/kickstart/ks01-0c-42-a1-06-ab-ef.cfg

In this case, Anaconda will no longer download the generic ks.cfg, but the variant specifically geared for the host. You can then follow the Anaconda manual if you want to configure static IP addresses.
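A typo in a MAC-based file name quietly sends a host back to the generic grub.cfg, so generating these names is safer than typing them by hand. The following minimal Python sketch derives both names from a MAC address written in any common notation. The lowercase, hyphen-separated format follows the example in the text, and the function names are hypothetical.

```python
import re

_MAC_HEX = re.compile(r"[0-9a-f]{12}")


def normalize_mac(mac: str) -> str:
    """Accept 0C:42:A1:06:AB:EF, 0c-42-..., or 0c42.a106.abef; return 0c-42-a1-06-ab-ef."""
    digits = re.sub(r"[:.\-]", "", mac).lower()
    if not _MAC_HEX.fullmatch(digits):
        raise ValueError(f"not a MAC address: {mac!r}")
    return "-".join(digits[i:i + 2] for i in range(0, 12, 2))


def grub_cfg_name(mac: str) -> str:
    # "01" is the ARP hardware type for Ethernet, as in the example in the text.
    return f"grub.cfg-01-{normalize_mac(mac)}"


def kickstart_url(base_url: str, mac: str) -> str:
    # Follows the ks01-<MAC>.cfg naming of the inst.ks example in the text.
    return f"{base_url.rstrip('/')}/ks01-{normalize_mac(mac)}.cfg"
```

The resulting grub.cfg-01-... file belongs in the same directory as the generic grub.cfg.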

In this context, it helps to have a mechanism that puts both the required grub.cfg files and the Kickstart files in place. Ansible's template functions help enormously here. You can build your own role containing templates for grub.cfg and ks.cfg and store the parameters for each host in your Ansible configuration (e.g., in host_vars/). Ansible's template module then writes the populated files to the right place on the filesystem. As a result, every host stored in the Ansible configuration always has a functional, permanently stored autoinstallation configuration. You will find more details in the Anaconda documentation [6].
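Ansible's template module renders Jinja2 templates, but the underlying mechanism is easy to illustrate without Ansible. The following Python sketch uses string.Template from the standard library as a stand-in for Jinja2. This is a deliberate substitution, not how an Ansible role would be written. The host data (including the gateway), the simplified network line without bonding, and the function name are hypothetical examples. In practice, the Kickstart template would also contain the rest of Listing 4.

```python
from pathlib import Path
from string import Template
from typing import Dict, List

# Hypothetical per-host parameters; with Ansible they would live in host_vars/.
HOSTS: Dict[str, Dict[str, str]] = {
    "server.cloud.internal": {
        "mac": "0c-42-a1-06-ab-ef",  # already lowercase and hyphen-separated
        "ip": "10.42.0.20",
        "netmask": "255.255.255.0",
        "gateway": "10.42.0.1",
    },
}

GRUB_TEMPLATE = Template("""\
set timeout=10
menuentry 'Install CentOS 8 on $hostname' {
  linuxefi pxelinux/centos8/vmlinuz method=$repo inst.ks=$ks_url
  initrdefi pxelinux/centos8/initrd.img
}
""")

# Only the host-specific part of a Kickstart file.
KS_TEMPLATE = Template("""\
network --bootproto=static --ip=$ip --netmask=$netmask --gateway=$gateway --activate
network --hostname=$hostname
""")


def render_host_files(hosts: Dict[str, Dict[str, str]], tftp_dir: Path,
                      ks_dir: Path, ks_base_url: str, repo: str) -> List[Path]:
    """Write grub.cfg-01-<MAC> and ks01-<MAC>.cfg for every host; return the paths."""
    written: List[Path] = []
    for hostname, params in hosts.items():
        ks_name = f"ks01-{params['mac']}.cfg"
        grub = GRUB_TEMPLATE.substitute(
            hostname=hostname, repo=repo,
            ks_url=f"{ks_base_url.rstrip('/')}/{ks_name}")
        ks = KS_TEMPLATE.substitute(params, hostname=hostname)
        grub_path = tftp_dir / f"grub.cfg-01-{params['mac']}"
        ks_path = ks_dir / ks_name
        grub_path.write_text(grub, encoding="utf-8")
        ks_path.write_text(ks, encoding="utf-8")
        written += [grub_path, ks_path]
    return written
```

On the infrastructure VM, tftp_dir would be /var/lib/tftpboot/pxelinux/, and ks_dir would be /srv/data/kickstart/.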

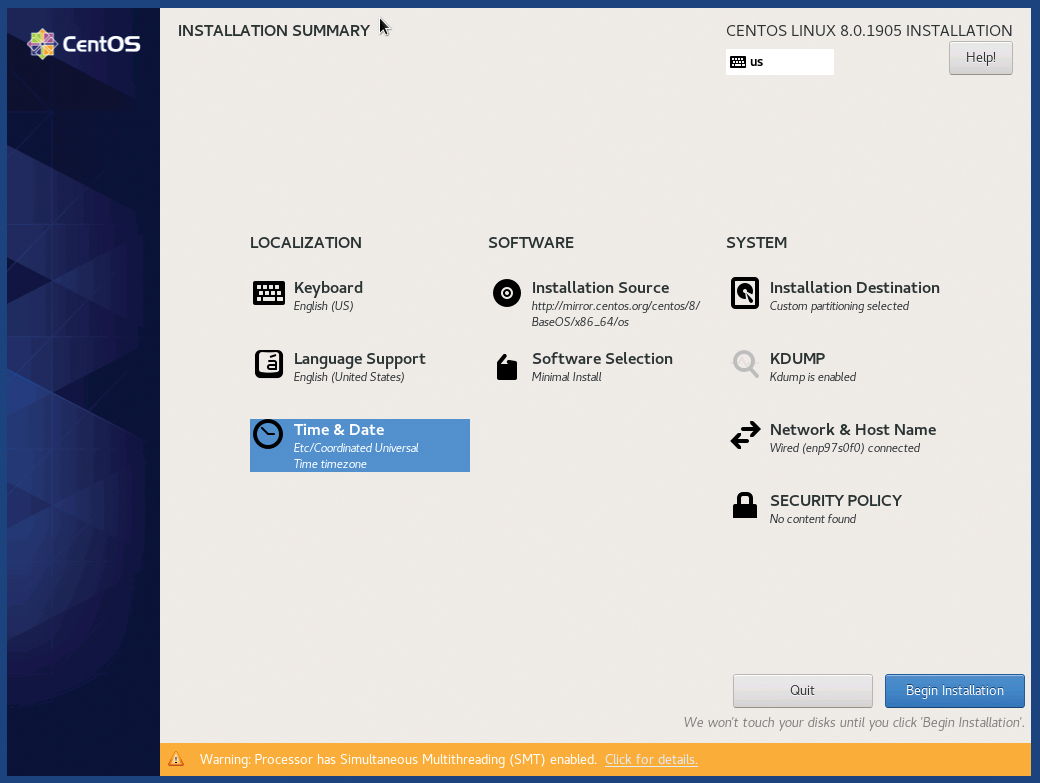

Postinstallation Process

So far, the main actor in the process has only been mentioned implicitly. The credit goes to Anaconda, which is part of the CentOS and RHEL initramfs files and starts automatically as soon as you specify an Anaconda parameter such as method or inst.ks on the kernel command line (Figure 4).

If you have prepared a Kickstart configuration, you can look forward to a system set up with the appropriate parameters afterward. The template I looked at here by no means exhausts all of Anaconda's possibilities. You could also configure sudo in the Kickstart file or store an SSH key for users so that they can log in without a password. However, I advise you to keep your Kickstart templates as clear-cut and simple as possible and leave the rest to your automation tool.

Extending the Setup Retroactively

Up to this point, this article has shown how to turn bare metal into an installed base system. Automation is good, but more automation is even better. What is still missing is the last small step: The freshly installed base system should automatically become part of your automation. In Ansible-speak, this objective is met once a host automatically appears in the Ansible inventory after the basic installation.

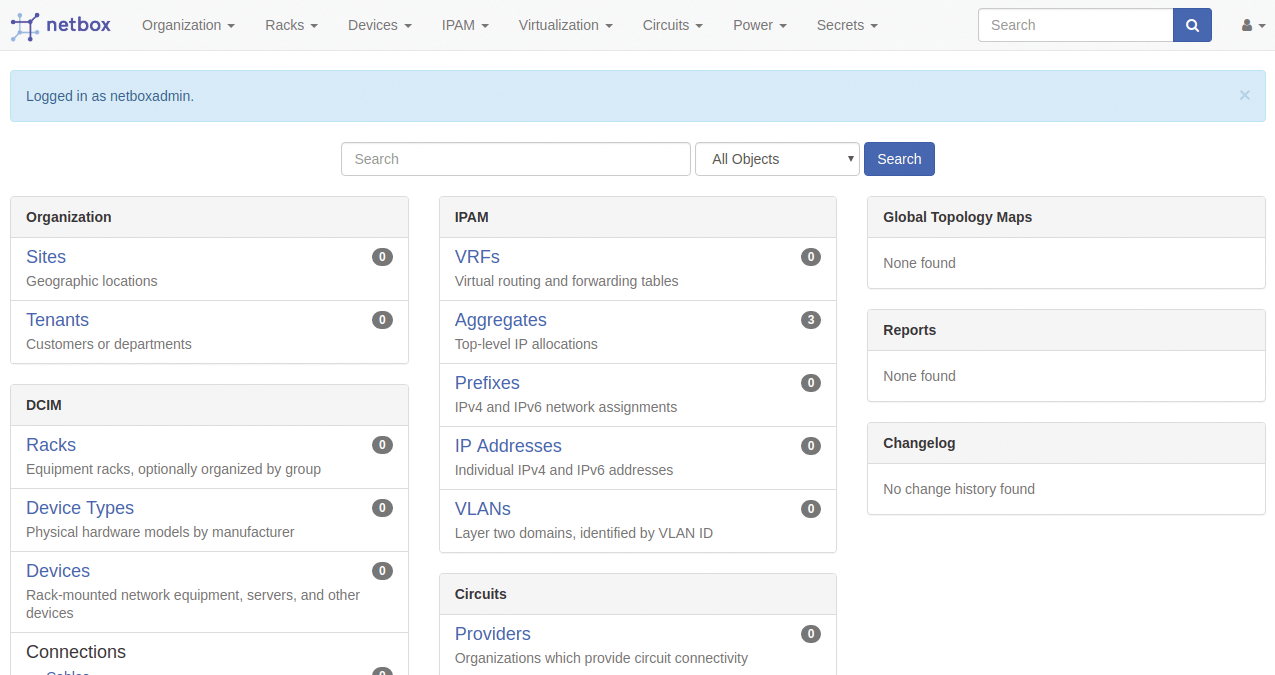

Netbox, a tool that has already been discussed several times in ADMIN [7], is a combined data center infrastructure management (DCIM) and IP address management (IPAM) system. Netbox (Figure 5) lists the servers in your data center, as well as the IP addresses of their network interface cards (NICs). Taken to its logical conclusion, this means you can generate the configuration file for the DHCP server from Netbox, as well as the Kickstart files for individual systems.
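To make the DHCP idea concrete, the following minimal Python sketch turns device records into ISC dhcpd host declarations that pin a MAC address to a fixed IP. It assumes that the records have already been flattened into simple dictionaries with name, mac, and address keys. In the real Netbox data model, MAC addresses are attached to interfaces and IP addresses are separate objects, so a real script would first have to join them.

```python
import ipaddress
from typing import Iterable, Mapping


def dhcpd_host_entries(devices: Iterable[Mapping[str, str]]) -> str:
    """Render ISC dhcpd host declarations that pin a MAC address to an IP."""
    blocks = []
    for dev in devices:
        # Netbox stores IP addresses in CIDR notation, e.g., "10.42.0.20/24".
        ip = ipaddress.ip_interface(dev["address"]).ip
        mac = dev["mac"].lower().replace("-", ":")
        blocks.append(
            f"host {dev['name']} {{\n"
            f"  hardware ethernet {mac};\n"
            f"  fixed-address {ip};\n"
            "}\n"
        )
    return "\n".join(blocks)
```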

Extracting individual values from Netbox is not at all complicated, because the service comes with a REST API that can be queried automatically. What's more, a Python client library lets you query values from Netbox and process them directly in a Python script. Anyone planning a really big leap in automation will move in this direction and ultimately use tags in Netbox to assign specific tasks to certain systems.
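The following sketch queries the REST API using only the Python standard library instead of the client library. It assumes Netbox's classic token authentication (an Authorization: Token header), the /api/dcim/devices/ endpoint with a tag filter, and Netbox's paginated responses with results and next fields. The URL, token, and tag slug are placeholders. The opener parameter exists only so that the function can be tested without a live server.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Callable, Dict, Iterator, Optional


def devices_request(base_url: str, token: str, tag: Optional[str] = None,
                    url: Optional[str] = None) -> urllib.request.Request:
    """Build a GET request for /api/dcim/devices/, optionally filtered by tag slug."""
    if url is None:
        url = f"{base_url.rstrip('/')}/api/dcim/devices/"
        if tag:
            url += "?" + urllib.parse.urlencode({"tag": tag})
    return urllib.request.Request(url, headers={
        "Authorization": f"Token {token}",
        "Accept": "application/json",
    })


def iter_devices(base_url: str, token: str, tag: Optional[str] = None,
                 opener: Callable = urllib.request.urlopen) -> Iterator[Dict[str, Any]]:
    """Yield every device, following the 'next' links of paginated responses."""
    url: Optional[str] = None
    while True:
        with opener(devices_request(base_url, token, tag, url)) as resp:
            page = json.load(resp)
        yield from page["results"]
        url = page.get("next")
        if not url:
            return
```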

A Netbox client that regularly queries the parameters of the servers in Netbox can then generate specific Kickstart files from these details and ensure, for example, that a special set of packages is rolled out on one system but not on another. The effort pays off with a workflow in which you simply bolt a computer into the rack, enter it in Netbox, and tag it appropriately. The installation then completes fully automatically.
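Mapping tags to packages is one way such a client could vary the Kickstart files. The following minimal Python sketch builds the %packages section from the base set in Listing 4 plus extra packages for each tag. The tag slugs and package lists are hypothetical. Depending on the Netbox release, the API returns tags either as objects with a slug or as plain strings, and the helper accepts both.

```python
from typing import Any, Dict, Iterable, List, Mapping

# Hypothetical tag slugs and packages; define your own in Netbox.
TAG_PACKAGES: Dict[str, List[str]] = {
    "webserver": ["nginx"],
    "database": ["postgresql-server"],
}
# Base set taken from the %packages section of Listing 4.
BASE_PACKAGES = ["@^server-product-environment", "kexec-tools"]


def tag_slugs(device: Mapping[str, Any]) -> List[str]:
    """Return tag slugs from a Netbox device (tag objects or plain strings)."""
    return [t["slug"] if isinstance(t, dict) else t for t in device.get("tags", [])]


def packages_section(tags: Iterable[str],
                     mapping: Mapping[str, List[str]] = TAG_PACKAGES,
                     base: Iterable[str] = BASE_PACKAGES) -> str:
    """Build a Kickstart %packages section: base set plus tag-specific extras."""
    packages = list(base)
    for tag in sorted(set(tags)):
        for pkg in mapping.get(tag, []):
            if pkg not in packages:
                packages.append(pkg)
    return "\n".join(["%packages", *packages, "%end"]) + "\n"
```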

If you want things to be a little less complicated, you might be satisfied with an intermediate stage. Many automation tools, including Ansible, can now generate their inventory directly from Netbox. As soon as a system appears in Netbox, it is also present in the inventory of an environment, and the next Ansible run includes the new server.
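For Ansible, the ready-made route is the nb_inventory plugin from the netbox.netbox collection. To show what such an inventory boils down to, the following hand-rolled Python sketch converts device objects, as returned by the Netbox API, into the JSON structure Ansible expects from inventory scripts: groups with hosts lists plus _meta.hostvars. Grouping by tag and using primary_ip4 as ansible_host are assumptions of this sketch.

```python
import ipaddress
import json
from typing import Any, Dict, Iterable, Mapping


def to_ansible_inventory(devices: Iterable[Mapping[str, Any]]) -> Dict[str, Any]:
    """Convert Netbox device objects into Ansible's JSON inventory format.

    Each tag slug becomes a group; ansible_host is the primary IPv4 address.
    """
    inventory: Dict[str, Any] = {"_meta": {"hostvars": {}}}
    for dev in devices:
        primary = dev.get("primary_ip4")
        if not primary:
            continue  # without an address, Ansible cannot reach the host
        name = dev["name"]
        address = ipaddress.ip_interface(primary["address"]).ip
        inventory["_meta"]["hostvars"][name] = {"ansible_host": str(address)}
        for tag in dev.get("tags", []):
            slug = tag["slug"] if isinstance(tag, dict) else tag
            inventory.setdefault(slug, {"hosts": []})["hosts"].append(name)
    return inventory


def inventory_json(devices: Iterable[Mapping[str, Any]]) -> str:
    """What an inventory script would print when Ansible calls it with --list."""
    return json.dumps(to_ansible_inventory(devices), indent=2)
```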

Conclusions

Installing Red Hat or CentOS automatically with standard tools is neither rocket science nor a task that requires complex frameworks. If you are confident working at the console, you will have no problems whatsoever setting up the necessary components.

It is also worthwhile to link Anaconda with the automation in your own setup so that newly installed systems automatically become part of it. Kickstart files provide sections for arbitrary shell commands to run before, during, or after the installation, so in theory you could even roll out configuration files for arbitrary programs this way. In practice, however, it is a good idea to leave this work to specialized automation tools. Inventory systems such as Netbox, with an API that can be queried, prove extremely useful, even if their initial setup is often difficult and tedious.