Processing streaming events with Apache Kafka

The Streamer

Event streaming is a modern concept that aims at continuously processing large volumes of data. The open source Apache Kafka [1] has established itself as a leader in the field. Kafka was originally developed by the career platform LinkedIn to process massive volumes of data in real time. Today, Kafka is used by more than 80 percent of the Fortune 100 companies, according to the project's own information.

Apache Kafka captures, processes, stores, and integrates data on a large scale. The software supports numerous applications, including distributed logging, stream processing, data integration, and pub/sub messaging. Kafka continuously processes the data almost in real time, without first writing to a database.

Kafka can connect to virtually any other data source in traditional enterprise information systems, modern databases, or the cloud. Together with the connectors available for Kafka Connect, Kafka forms an efficient integration point without hiding the logic or routing within the centralized infrastructure.

Many organizations use Kafka to monitor operating data. Kafka collects statistics from distributed applications to create centralized feeds with real-time metrics. Kafka also serves as a central source of truth for bundling data generated by various components of a distributed system. Kafka fields, stores, and processes data streams of any size (both real-time streams and from other interfaces, such as files or databases). As a result, companies entrust Kafka with technical tasks such as transforming, filtering, and aggregating data, and they also use it for critical business applications, such as payment transactions.

Kafka also works well as a modernized version of the traditional message broker, efficiently decoupling a process that generates events from one or more other processes that receive events.

What is Event Streaming

In the parlance of the event-streaming community, an event is any type of action, incident, or change that a piece of software or an application identifies or records. An event could be a payment, a mouse click on a website, or a temperature point recorded by a sensor, along with a description of what happened in each case.

An event connects a notification (a temporal element on the basis of which the system can trigger another action) with a state. In most cases the message is quite small – usually a few bytes or kilobytes. It is usually in a structured format, such as JSON, or is included in an object serialized with Apache Avro or Protocol Buffers (protobuf).

Architecture and Concepts

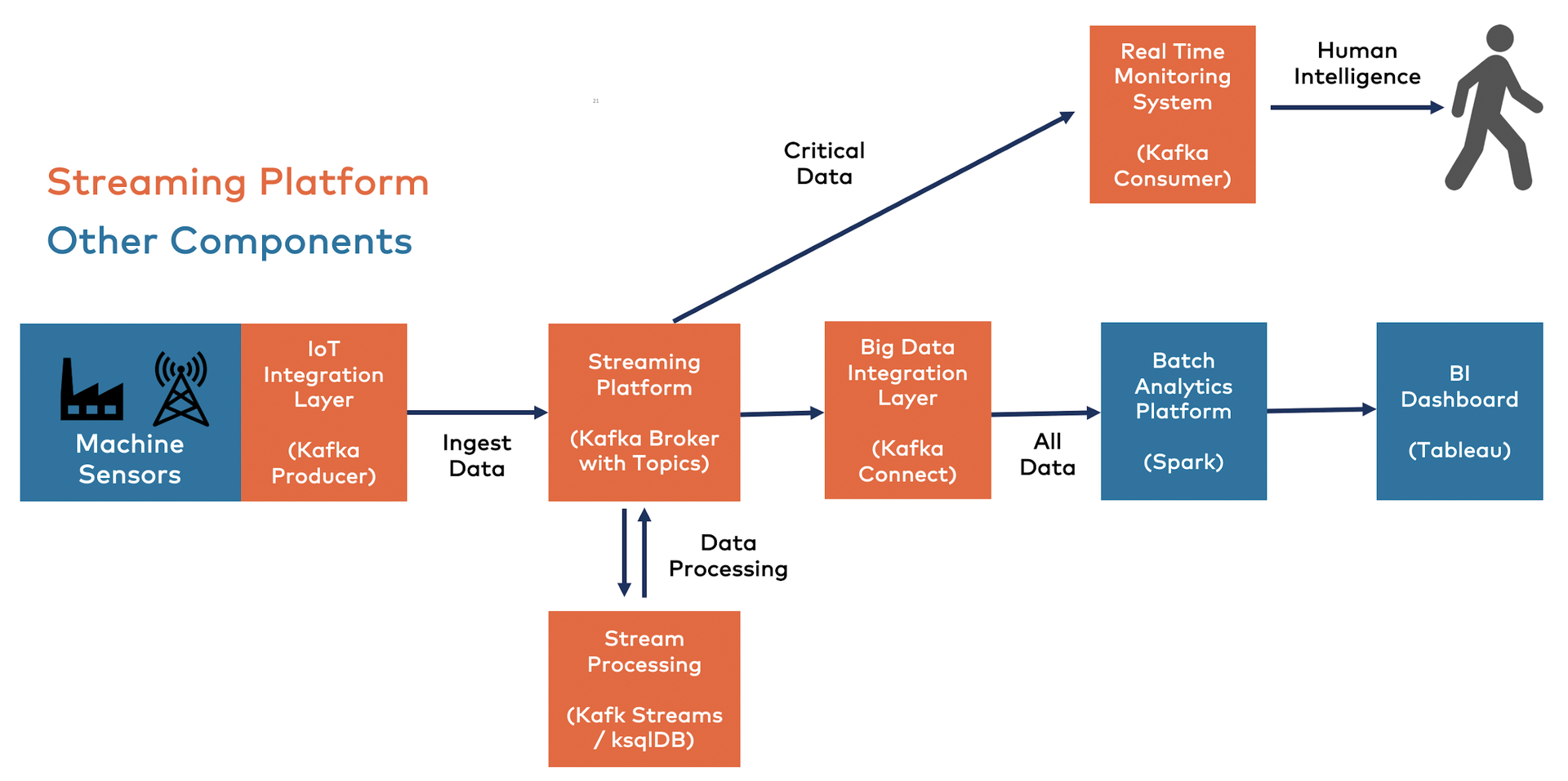

Figure 1 shows a sensor analysis use case in the Internet of Things (IoT) environment with Kafka. This scenario provides a good overview of the individual Kafka components and their interaction with other technologies.

Kafka's architecture is based on the abstract idea of a distributed commit log. By dividing the log into partitions, Kafka is able to scale systems. Kafka models events as key-value pairs.

Internally these keys and values consist only of byte sequences. However, in your preferred programming language they can often be represented as structured objects in that language's type system. Conversion between language types and internal bytes is known as (de-)serialization in Kafka-speak. As mentioned earlier, serialized formats are mostly JSON, JSON Schema, Avro, or protobuf.
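As a rough illustration of (de-)serialization, the following sketch converts a string key and a hand-written JSON value to bytes and back with nothing but the Java standard library. The `SerdeSketch` class, its method names, and the sample sensor data are assumptions made for this example; a real application would normally configure a serializer class on the Kafka client instead.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the Kafka API: it only shows that keys
// and values travel as plain byte arrays.
final class SerdeSketch {

    // Serialize: language-level String -> UTF-8 bytes.
    static byte[] serialize(String data) {
        return data.getBytes(StandardCharsets.UTF_8);
    }

    // Deserialize: UTF-8 bytes -> language-level String.
    static String deserialize(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String key = "sensor-42";                 // assumed device ID
        String value = "{\"temperature\":21.5}";  // assumed JSON payload

        byte[] keyBytes = serialize(key);
        byte[] valueBytes = serialize(value);

        System.out.println(keyBytes.length + " key bytes, " + valueBytes.length + " value bytes");
        System.out.println(deserialize(keyBytes) + " -> " + deserialize(valueBytes));
    }
}
```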

What exactly do the keys and values represent? Values typically represent an application domain object or some form of raw message input in serialized form – such as the output of a sensor.

Although complex domain objects can be used as keys, they usually consist of primitive types like strings or integers. The key part of a Kafka event does not necessarily uniquely identify an event, as would the primary key of a row in a relational database. Instead, it is used to determine an identifiable variable in the system (e.g., a user, a job, or a specific connected device).

Although it might not sound that significant at first, keys determine how Kafka deals with things like parallelization and data localization, as you will see.

Kafka Topics

Events tend to accumulate. That is why the IT world needs a system to organize them. Kafka's most basic organizational unit is the "topic," which roughly corresponds to a table in a relational database. For developers working with Kafka, the topic is the abstraction they think about most. You create topics to store different types of events. Topics can also comprise filtered and transformed versions of existing topics.

A topic is a logical construct. Kafka stores the events for a topic in a log. These logs are easy to understand because they are simple data structures with known semantics.

You should keep three things in mind: (1) Kafka always appends events to the end of a log. When the software writes a new message to a log, it always ends up at the last position. (2) You can only read events by seeking to an arbitrary position (offset) in the log and then reading the log entries sequentially. Kafka does not support queries of the kind known from ANSI SQL, which let you search for a certain value. (3) The events in the log are immutable; past events are very difficult to undo.
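The three properties can be sketched with a small in-memory log. This is only a teaching model under simplifying assumptions (one log, everything in memory); the `AppendOnlyLog` class and its methods are hypothetical and do not reflect how Kafka implements its storage.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-in for the log behind one topic partition.
final class AppendOnlyLog {
    private final List<String> entries = new ArrayList<>();

    // (1) New events always land at the end; the returned offset is their position.
    long append(String event) {
        entries.add(event);
        return entries.size() - 1;
    }

    // (2) Reading means seeking to an offset and scanning forward sequentially.
    List<String> readFrom(long offset, int maxEvents) {
        int start = (int) Math.min(Math.max(offset, 0), entries.size());
        int end = Math.min(start + maxEvents, entries.size());
        // (3) Hand out a copy so callers cannot modify events already written.
        return new ArrayList<>(entries.subList(start, end));
    }

    public static void main(String[] args) {
        AppendOnlyLog log = new AppendOnlyLog();
        log.append("payment-created");
        log.append("payment-authorized");
        long offset = log.append("payment-settled");
        System.out.println("last offset: " + offset);
        System.out.println(log.readFrom(1, 10));
    }
}
```

Note that the class offers no method for updating or deleting an entry in place, and no lookup by value, which mirrors the restrictions described above.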

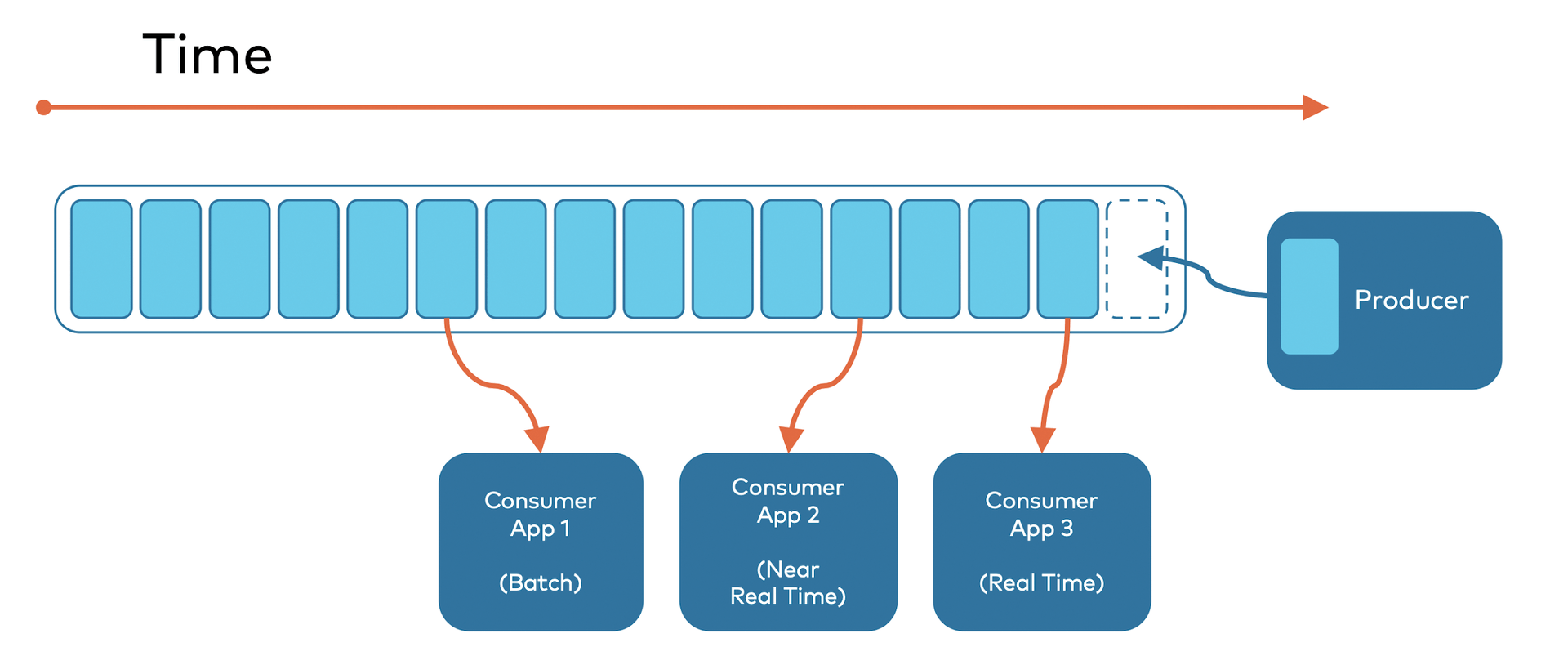

The logs themselves are basically perpetual. Traditional enterprise messaging systems use queues as well as topics. These queues buffer messages on their way from source to destination, but they usually delete the messages after consumption. They are not designed to retain messages so that the same or another application can read them again later (Figure 2).

Because Kafka topics are available as logfiles, the data they contain is by nature not temporarily available, as in traditional messaging systems, but permanently available. You can configure each topic so that the data expires either after a certain age (retention time) or as soon as the topic reaches a certain size. The time span can range from seconds to years to indefinitely.
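As a minimal sketch, the two expiry criteria correspond to the topic-level settings `retention.ms` and `retention.bytes`. The helper class below and the seven-day value are assumptions for illustration; the sketch only assembles the settings and does not talk to a cluster.

```java
import java.time.Duration;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper that assembles topic-level retention settings.
final class RetentionConfigSketch {

    static Map<String, String> retentionSettings(Duration maxAge, long maxBytes) {
        Map<String, String> config = new LinkedHashMap<>();
        // Time-based retention: data older than maxAge becomes eligible for deletion.
        config.put("retention.ms", Long.toString(maxAge.toMillis()));
        // Size-based retention, applied per partition; -1 means no size limit.
        config.put("retention.bytes", Long.toString(maxBytes));
        return config;
    }

    public static void main(String[] args) {
        // Example assumption: keep events for seven days, regardless of size.
        System.out.println(retentionSettings(Duration.ofDays(7), -1L));
    }
}
```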

The logs underlying Kafka topics are stored as files on a disk. When Kafka writes an event to a topic, it is as permanent as the data in a classical relational database.

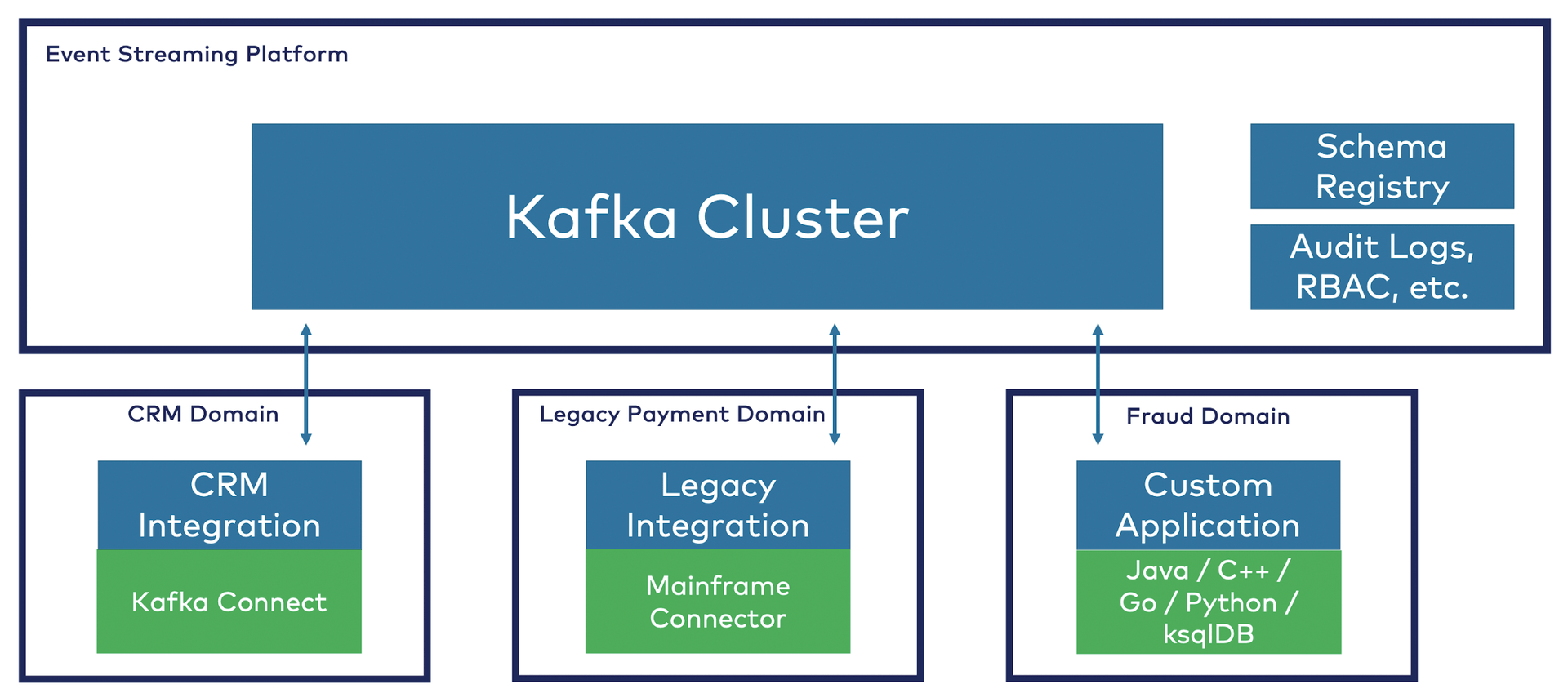

The simplicity of the log and the immutability of the content it contains are the key to Kafka's success as a critical component in modern data infrastructures. A (real) decoupling of the systems therefore works far better than with traditional middleware (Figure 3), which relies on the extract, transform, load (ETL) mechanism or an enterprise service bus (ESB) and which is based either on web services or message queues. Kafka simplifies domain-driven design (DDD) but also allows communication with outdated interfaces [2].

Kafka Partitions

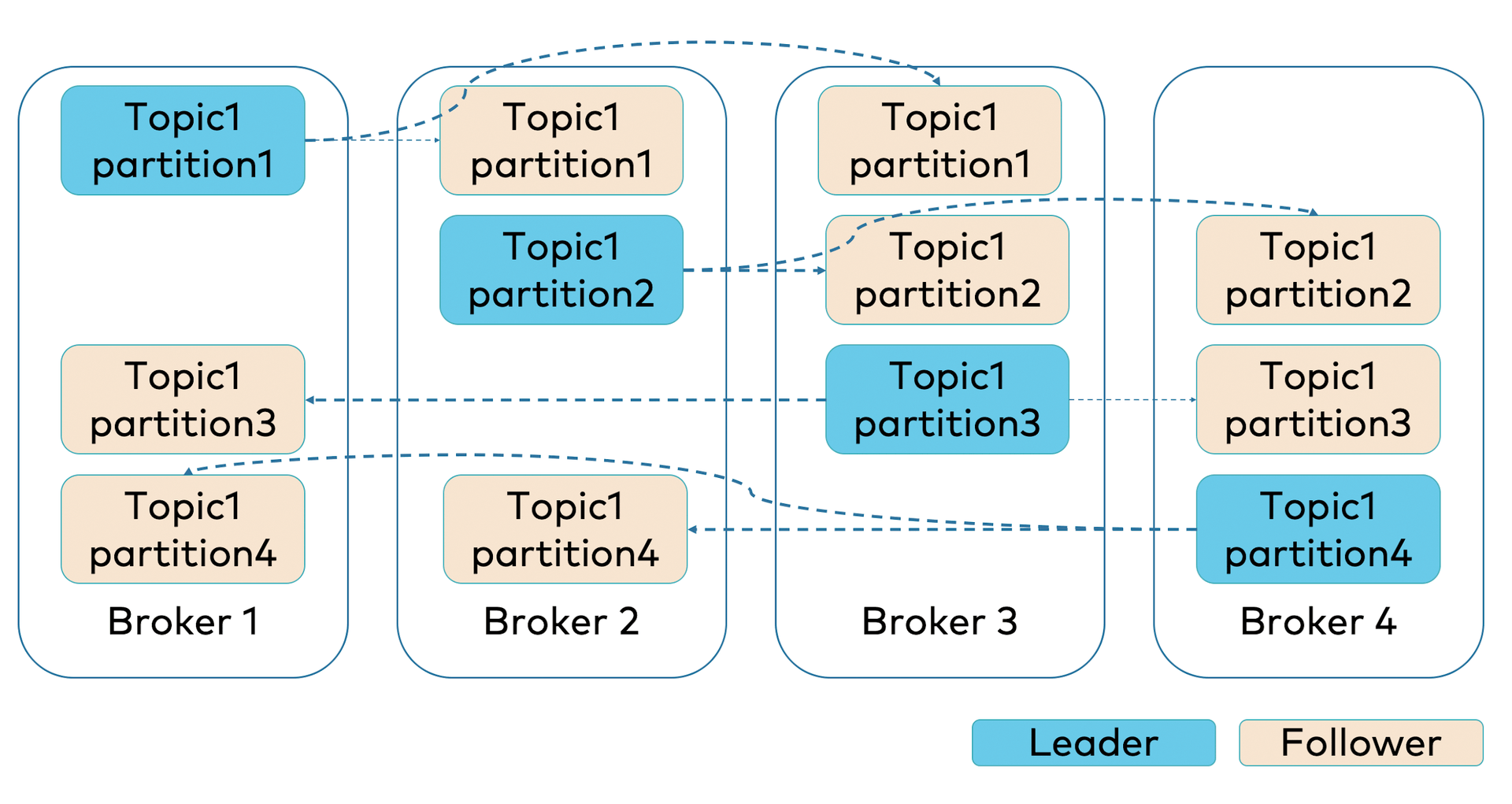

If a topic had to live exclusively on one machine, Kafka's scalability would be limited quite radically. Therefore, the software (in contrast to classical messaging systems) divides a logical topic into partitions, which it distributes to different machines (Figure 4), allowing individual topics to be scaled as desired in terms of data throughput and read and write access.

Partitioning takes the single topic and splits it (without redundancy) into several logs, each of which exists on a separate node in the Kafka cluster. This approach distributes the work of storing messages, writing new messages, and processing existing messages across many nodes in the cluster.

Once Kafka has partitioned a topic, you need a way to decide which messages Kafka should write to which partitions. If messages arrive without a key, Kafka usually distributes them across all partitions of the topic in a round-robin process. In this case, all partitions receive an equal amount of data, but the incoming messages do not adhere to a specific order.

If the message includes a key, Kafka calculates the target partition from a hash of the key. In this way, the software guarantees that messages with the same key always end up in the same partition and therefore always remain in the correct order.

Keys are not unique, though, but reference an identifiable value in the system. For example, if the events all belong to the same customer, the customer ID used as a key guarantees that all events for a particular customer always arrive in the correct order. This guaranteed order is one of the great advantages of the append-only commit log in Kafka.
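The idea of key-based partitioning can be sketched as "hash the key bytes, then take the result modulo the number of partitions." The class below is a simplified illustration: `Arrays.hashCode` is only a stand-in for the murmur2 hash that Kafka's default partitioner applies, so the partition numbers it produces will not match those of a real cluster. The customer IDs are made up.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical partitioner sketch; not Kafka's actual hash function.
final class PartitionSketch {

    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // floorMod keeps the result in [0, numPartitions) even for negative hashes.
        return Math.floorMod(Arrays.hashCode(keyBytes), numPartitions);
    }

    public static void main(String[] args) {
        int partitions = 6;
        // The same key always maps to the same partition, which preserves
        // the per-customer ordering described in the text.
        System.out.println(partitionFor("customer-17", partitions));
        System.out.println(partitionFor("customer-17", partitions));
        System.out.println(partitionFor("customer-99", partitions));
    }
}
```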

Kafka Brokers

Thus far, I have explained events, topics, and partitions, but I have not yet addressed the actual computers too explicitly. From a physical infrastructure perspective, Kafka comprises a network of machines known as brokers. Today, these are probably not physical servers, but more typically containers running on pods, which in turn run on virtualized servers running on actual processors in a physical data center somewhere in the world.

Whatever form they take, they are independent machines, each running the Kafka broker process. Each broker hosts a certain set of partitions and handles incoming requests to write new events to the partitions or read events from them. Brokers also replicate the partitions among themselves.

Replication

Storing each partition on just one broker is not enough: Regardless of whether the brokers are bare-metal servers or managed containers, they and the storage space on which they are based are vulnerable to failure. For this reason, Kafka copies the partition data to several other brokers to keep it safe.

These copies are known as "follower replicas," whereas the main partition is known as the "leader replica" in Kafka-speak. In general, producers write to the leader and consumers read from it; the leader and the followers then work together to replicate new writes to the followers automatically. If one node in the cluster dies, developers can be confident that the data is safe because another node automatically takes over its role.
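The interplay of leader and followers can be modeled in a few lines. The sketch below is deliberately simplified and rests on several assumptions: replication happens synchronously inside `write`, there is no notion of in-sync replicas or acknowledgments, and the first surviving replica always becomes the new leader. All class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of one replicated partition; not Kafka's protocol.
final class ReplicationSketch {

    // One replica: the ID of the hosting broker plus its copy of the log.
    static final class Replica {
        final int brokerId;
        final List<String> log = new ArrayList<>();
        Replica(int brokerId) { this.brokerId = brokerId; }
    }

    private final List<Replica> replicas = new ArrayList<>();

    // The first broker ID hosts the leader replica, the others host followers.
    ReplicationSketch(int... brokerIds) {
        for (int id : brokerIds) replicas.add(new Replica(id));
    }

    Replica leader() { return replicas.get(0); }

    // A write is accepted by the leader and then copied to every follower.
    void write(String event) {
        for (Replica replica : replicas) replica.log.add(event);
    }

    // Simulates the loss of the leader's broker: the next replica takes over.
    void failLeader() { replicas.remove(0); }

    public static void main(String[] args) {
        ReplicationSketch partition = new ReplicationSketch(1, 2, 3);
        partition.write("e1");
        partition.write("e2");
        partition.failLeader();
        System.out.println("new leader on broker " + partition.leader().brokerId
                + " with log " + partition.leader().log);
    }
}
```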

Client Applications

Now I will leave the Kafka cluster itself and turn to the applications that Kafka either populates or taps into: the producers and consumers. These client applications contain code developed by Kafka users to insert messages into and read messages from topics. Every component of the Kafka platform that is not a Kafka broker is basically a producer or consumer – or both. Producers and consumers form the interfaces to the Kafka cluster.

Kafka Producers

The API of the producer library is quite lightweight: A Java class named KafkaProducer connects the client to the cluster. This class has some configuration parameters, including the address of some brokers in the cluster, a suitable security configuration, and other settings that influence the network behavior of the producer.

Transparently for the developer, the library manages connection pools, network buffers, and the processes of waiting for confirmation of messages by brokers and possibly retransmitting messages. It also handles a multitude of other details that the application programmer does not need to worry about. The excerpt in Listing 1 shows the use of the KafkaProducer API to generate and send 10 payment messages.

Listing 1: KafkaProducer API

```java
// [...]
try (KafkaProducer<String, Payment> producer = new KafkaProducer<>(props)) {

    for (long i = 0; i < 10; i++) {
        final String orderId = "id" + Long.toString(i);
        final Payment payment = new Payment(orderId, 1000.00d);
        // Use the payment ID as the record key and write to the "transactions" topic.
        final ProducerRecord<String, Payment> record =
            new ProducerRecord<>("transactions",
                                 payment.getId().toString(),
                                 payment);
        // send() is asynchronous: it queues the record and returns immediately.
        producer.send(record);
    }
} catch (final KafkaException e) {
    // send() declares no InterruptedException; client errors surface as KafkaException.
    e.printStackTrace();
}
// [...]
```
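Listing 1 assumes a `props` object that is created in the elided part. A minimal sketch of such a configuration might look as follows. The broker and Schema Registry addresses are placeholders, and the choice of the Confluent Avro serializer assumes that `Payment` is an Avro-generated class, which the excerpt does not state.

```java
import java.util.Properties;

// Hypothetical helper that assembles a producer configuration.
final class ProducerPropsSketch {

    static Properties producerProps(String bootstrapServers, String schemaRegistryUrl) {
        Properties props = new Properties();
        // Initial brokers used to discover the rest of the cluster.
        props.put("bootstrap.servers", bootstrapServers);
        // Keys are plain strings (the payment ID).
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Assumption: Payment values are serialized as Avro through Schema Registry.
        props.put("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        props.put("schema.registry.url", schemaRegistryUrl);
        // Wait for all in-sync replicas to acknowledge each write.
        props.put("acks", "all");
        return props;
    }

    public static void main(String[] args) {
        // Placeholder addresses for a local test setup.
        Properties props = producerProps("localhost:9092", "http://localhost:8081");
        props.forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```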

Kafka Consumers

Where there are producers, there are usually also consumers. The use of the KafkaConsumer API is similar in principle to that of the KafkaProducer API. The client connects to the cluster via the KafkaConsumer class. The class also has configuration options, which determine the address of the cluster, security options, and other parameters. On the basis of the connection, the consumer then subscribes to one or more topics.

Kafka scales consumer groups more or less automatically. Just like KafkaProducer, KafkaConsumer also manages connection pooling and the network protocol. However, the functionality on the consumer side goes far beyond network handling.

When a consumer reads a message, it does not delete it, which is what distinguishes Kafka from traditional message queues. The message is still there, and any interested consumer can read it.
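A small model makes this difference tangible: Reading only advances a position that belongs to the reader, while the log itself stays untouched. The sketch below tracks one offset per consumer group over a shared list. The class, the group names, and the single-partition setup are assumptions for illustration.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model: one shared log, one read position per consumer group.
final class ConsumerOffsetsSketch {
    private final List<String> log;                               // never modified by reads
    private final Map<String, Integer> offsets = new HashMap<>(); // next position per group

    ConsumerOffsetsSketch(List<String> log) { this.log = log; }

    // Returns the next unread event for the group, or null if the group has caught up.
    String poll(String groupId) {
        int offset = offsets.getOrDefault(groupId, 0);
        if (offset >= log.size()) return null;
        offsets.put(groupId, offset + 1); // only this group's position advances
        return log.get(offset);
    }

    public static void main(String[] args) {
        ConsumerOffsetsSketch topic = new ConsumerOffsetsSketch(List.of("payment-1", "payment-2"));
        System.out.println("billing reads   " + topic.poll("billing"));
        System.out.println("billing reads   " + topic.poll("billing"));
        // A second group still sees every message from the beginning.
        System.out.println("analytics reads " + topic.poll("analytics"));
    }
}
```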

In fact, it is quite normal in Kafka for many consumers to access a topic. This seemingly minor fact has a disproportionately large influence on the types of software architectures that are emerging around Kafka, because Kafka is suitable not just for real-time data processing. In many cases, other systems also consume the data, including batch processes, file processing, request-response web services (representational state transfer, REST/simple object access protocol, SOAP), data warehouses, and machine learning infrastructures.

The excerpt in Listing 2 shows how the KafkaConsumer API continuously consumes and processes payment messages.

Listing 2: KafkaConsumer API

```java
// [...]
try (final KafkaConsumer<String, Payment> consumer = new KafkaConsumer<>(props)) {
    consumer.subscribe(Collections.singletonList(TOPIC));

    while (true) {
        // Wait up to 10 ms for new records; poll(long) is deprecated in favor of
        // poll(java.time.Duration).
        ConsumerRecords<String, Payment> records = consumer.poll(Duration.ofMillis(10));
        for (ConsumerRecord<String, Payment> record : records) {
            String key = record.key();
            Payment value = record.value();
            System.out.printf("key = %s, value = %s%n", key, value);
        }
    }
}
// [...]
```

Kafka Ecosystem

If there were only brokers managing partitioned, replicated topics with an ever-growing collection of producers and consumers, who in turn write and read events, this would already be quite a useful system.

However, the Kafka developer community has learned that further application scenarios soon emerge among users in practice. To implement these, users then usually develop similar functions around the Kafka core. To do so, they build layers of application functionality to handle certain recurring tasks.

The code developed by Kafka users may do important work, but it is usually not relevant to the actual business field in which their activities lie. At most, it indirectly generates value for the users. Ideally, the Kafka community or infrastructure providers should provide such code.

And they do: Kafka Connect [3], the Confluent Schema Registry [4], Kafka Streams [5], and ksqlDB [6] are examples of this kind of infrastructure code. Here, I look at each of these examples in turn.

Data Integration with Kafka Connect

Information often resides in systems other than Kafka. Sometimes you want to convert data from these systems into Kafka topics; sometimes you want to store data from Kafka topics on these systems. The Kafka Connect integration API is the right tool for the job.

Kafka Connect comprises an ecosystem of connectors on the one hand and a client application on the other. The client application runs as a server process on hardware separate from the Kafka brokers, and not just as a single Connect worker, but as a cluster of Connect workers that share the load of transferring data into and out of Kafka from and to external systems. This arrangement makes the service scalable and fault tolerant.

Kafka Connect also relieves the user of the need to write complicated code: A JSON configuration is all that is required. The excerpt in Listing 3 shows how to stream data from Kafka into an Elasticsearch installation.

Listing 3: JSON for Kafka Connect

```json
[...]
{
  "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
  "topics": "my_topic",
  "connection.url": "http://elasticsearch:9200",
  "type.name": "_doc",
  "key.ignore": "true",
  "schema.ignore": "true"
}
[...]
```
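A configuration like the one in Listing 3 is typically submitted to a Connect worker over its REST interface with a `POST` to `/connectors`, wrapped in an envelope that carries the connector name. The following sketch only builds such a request with the JDK HTTP client and does not send it. The worker address `http://localhost:8083`, the connector name `elasticsearch-sink`, and the helper class are assumptions for this example, and the naive string concatenation does no JSON escaping.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper that prepares a "create connector" request.
final class ConnectRequestSketch {

    // Wraps a connector configuration in the {"name": ..., "config": ...} envelope.
    static String envelope(String name, String configJson) {
        return "{\"name\":\"" + name + "\",\"config\":" + configJson + "}";
    }

    static HttpRequest createConnectorRequest(String connectUrl, String name, String configJson) {
        return HttpRequest.newBuilder(URI.create(connectUrl + "/connectors"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(envelope(name, configJson)))
                .build();
    }

    public static void main(String[] args) {
        String config = "{\"connector.class\":"
                + "\"io.confluent.connect.elasticsearch.ElasticsearchSinkConnector\","
                + "\"topics\":\"my_topic\","
                + "\"connection.url\":\"http://elasticsearch:9200\"}";
        HttpRequest request =
                createConnectorRequest("http://localhost:8083", "elasticsearch-sink", config);
        // Sending requires a running Connect worker, for example:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(request.method() + " " + request.uri());
    }
}
```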

Kafka Streams and ksqlDB

In a very large Kafka-based application, consumers tend to increase complexity. For example, you might start with a simple stateless transformation (e.g., obfuscating personal information or changing the format of a message to meet internal schema requirements). Kafka users soon end up with complex aggregations, enrichments, and more.

The KafkaConsumer API does not provide much support for such operations. Developers therefore have to write a fair amount of framework code to deal with time windows, late-arriving messages, lookup tables, aggregations by key, and more.

When programming, it is also important to remember that operations such as aggregation and enrichment are typically stateful. The application must not lose this state, yet it must also remain highly available: If the state lives only in the memory of the application and the application fails, the state is lost as well.

You could try to develop a scheme to maintain this state somewhere, but such code is devilishly complicated to write and debug at scale, and it would not directly improve the lives of Kafka users. Therefore, Apache Kafka offers an API for stream processing: Kafka Streams.
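To give an impression of the kind of code that would otherwise have to be written by hand, the following sketch counts events in fixed-size (tumbling) time windows. It is a simplified illustration with hypothetical names that keeps everything in memory; it is not how Kafka Streams implements windowing.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical hand-rolled tumbling-window counter.
final class TumblingWindowSketch {
    private final long windowSizeMs;
    // Window start timestamp -> number of events that fall into that window.
    private final Map<Long, Long> counts = new TreeMap<>();

    TumblingWindowSketch(long windowSizeMs) { this.windowSizeMs = windowSizeMs; }

    // Assigns the event to the window that contains its event timestamp.
    // A late-arriving event therefore still lands in its original window.
    void add(long eventTimestampMs) {
        long windowStart = eventTimestampMs - Math.floorMod(eventTimestampMs, windowSizeMs);
        counts.merge(windowStart, 1L, Long::sum);
    }

    Map<Long, Long> counts() { return counts; }

    public static void main(String[] args) {
        TumblingWindowSketch windows = new TumblingWindowSketch(1000);
        windows.add(100);
        windows.add(900);
        windows.add(1500);
        windows.add(200); // arrives late, but belongs to the first window
        System.out.println(windows.counts());
    }
}
```

Even this small example leaves open questions such as how long to wait for late events and where to keep the counts if the process restarts, which is the gap a stream-processing library is meant to close.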

Kafka Streams

The Kafka Streams Java API allows easy access to all computational primitives of stateless and stateful stream processing (actions such as filtering, grouping, aggregating, merging, etc.), removing the need to write framework code against the consumer API to do all these things. Kafka Streams also supports the potentially large amount of state that results from the computations of stream processing. It keeps the data collections and enrichments either in memory or in a local key-value store (based on RocksDB).

Combining stateful data processing and high scalability turns out to be a big challenge. The Streams API solves both problems: On the one hand, it maintains the state on the local hard disk and in internal topics in the Kafka cluster; on the other hand, the client application (i.e., the Kafka Streams cluster) automatically rebalances its work as client instances are added or removed.
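The idea of keeping state locally while backing it up in a topic can be sketched as follows. A `HashMap` stands in for the local key-value store and a list stands in for an internal changelog topic; both, along with the class and method names, are assumptions for illustration rather than the Kafka Streams implementation.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical state store that records every update in a changelog.
final class ChangelogStoreSketch {
    // Stand-in for the local key-value store.
    private final Map<String, Long> localStore = new HashMap<>();
    // Stand-in for an internal changelog topic that survives instance failures.
    private final List<Map.Entry<String, Long>> changelog;

    ChangelogStoreSketch(List<Map.Entry<String, Long>> changelog) {
        this.changelog = changelog;
        // Restore: replay the changelog to rebuild local state after a restart.
        for (Map.Entry<String, Long> change : changelog) {
            localStore.put(change.getKey(), change.getValue());
        }
    }

    // Counts one occurrence of the key and records the new value in the changelog.
    long increment(String key) {
        long updated = localStore.merge(key, 1L, Long::sum);
        changelog.add(Map.entry(key, updated));
        return updated;
    }

    Long get(String key) { return localStore.get(key); }

    public static void main(String[] args) {
        List<Map.Entry<String, Long>> changelog = new ArrayList<>();
        ChangelogStoreSketch first = new ChangelogStoreSketch(changelog);
        first.increment("customer-17");
        first.increment("customer-17");
        // Simulate a crash: a new instance rebuilds its state from the changelog.
        ChangelogStoreSketch second = new ChangelogStoreSketch(changelog);
        System.out.println("restored count: " + second.get("customer-17"));
    }
}
```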

In a typical microservice, the application performs stream processing in addition to other functions. For example, a mail order company combines shipment events with events in a product information change log, which contains customer records to create shipment notification objects that other services then convert into email and text messages. However, the shipment notification service might also be required to provide a REST API for synchronous key queries by mobile applications once the apps render views that show the status of a particular shipment.

The service reacts to events. In this case, it first merges three data streams with each other and may then perform further stateful computations (e.g., windowed aggregations) on the merged result. Nevertheless, the service also serves HTTP requests at its REST endpoint.

Because Kafka Streams is a Java library and not a set of dedicated infrastructure components, it is trivial to integrate directly into other applications and develop sophisticated, scalable, fault-tolerant stream processing. This feature is one of the key differences from other stream-processing frameworks like ksqlDB, Apache Storm, or Apache Flink.

ksqlDB

Kafka Streams, as a Java-based stream-processing API, is very well suited for creating scalable, standalone stream-processing applications. It is equally suited for adding stream-processing functions to existing Java applications.

What if applications are not in Java or the developers are looking for a simpler solution? What if it seems advantageous from an architectural or operational point of view to implement a pure stream-processing job without a web interface or API to provide the results to the frontend? In this case, ksqlDB comes into play.

The highly specialized database is optimized for applications that process data streams. It runs on its own scalable, fault-tolerant cluster and provides a REST interface for applications that then submit new stream-processing jobs to execute and retrieve results.

The stream-processing jobs and queries are written in SQL. Because ksqlDB is accessible over REST and from the command line, it does not matter which programming language the applications use. It is a good idea to start in development mode, either with Docker or a single node running natively on a development machine, or directly in a supported service.
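As a sketch of the REST route, the following code prepares a request that submits a SQL statement to the `/ksql` endpoint of a ksqlDB server. The request is only built, not sent. The server address `http://localhost:8088`, the stream name `input_stream`, the table name `counts`, and the helper class are assumptions for this example, and the SQL text is inserted without JSON escaping.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper that prepares a ksqlDB statement request.
final class KsqlRequestSketch {

    // Builds the JSON body; the SQL must not contain characters that need escaping.
    static String statementBody(String sql) {
        return "{\"ksql\":\"" + sql + "\",\"streamsProperties\":{}}";
    }

    static HttpRequest statementRequest(String serverUrl, String sql) {
        return HttpRequest.newBuilder(URI.create(serverUrl + "/ksql"))
                .header("Content-Type", "application/vnd.ksql.v1+json")
                .POST(HttpRequest.BodyPublishers.ofString(statementBody(sql)))
                .build();
    }

    public static void main(String[] args) {
        // Assumed names: a stream called input_stream with a column x.
        String sql = "CREATE TABLE counts AS SELECT x, COUNT(*) AS total "
                + "FROM input_stream GROUP BY x EMIT CHANGES;";
        HttpRequest request = statementRequest("http://localhost:8088", sql);
        // Sending requires a running ksqlDB server, for example:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(request.method() + " " + request.uri());
        System.out.println(statementBody(sql));
    }
}
```

Because the exchange is plain HTTP with a JSON body, the same request could be issued from any language with an HTTP client.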

In summary, ksqlDB is a standalone, SQL-based, stream-processing engine that continuously processes event streams and makes the results available to applications in a database-like manner. It aims to provide one conceptual model for most Kafka-based stream-processing workloads. For comparison, Listing 4 shows an example of logic that continuously collects and counts the values of a message attribute. The first part of the listing shows the Kafka Streams version; the second part shows the version written in ksqlDB.

Listing 4: Kafka Streams vs ksqlDB

```java
// [...]
// Kafka Streams (Java):
builder
    .stream("input-stream",
            Consumed.with(Serdes.String(), Serdes.String()))
    .groupBy((key, value) -> value)
    .count()
    .toStream()
    .to("counts", Produced.with(Serdes.String(), Serdes.Long()));
```

```sql
-- ksqlDB (SQL):
SELECT x, count(*) FROM stream GROUP BY x EMIT CHANGES;
-- [...]
```

Conclusions and Outlook

Kafka has established itself on the market as the de facto standard for event streaming; many companies use it in production in various projects. Meanwhile, Kafka continues to develop.

With all the advantages of Apache Kafka, it is important not to ignore the disadvantages: Event streaming is a fundamentally new concept. Development, testing, and operation are therefore completely different from using known infrastructures. For example, Apache Kafka uses rolling upgrades instead of active-passive deployments.

That Kafka is a distributed system also has an effect on production operations. Kafka is more complex than plain vanilla messaging systems, and the hardware requirements are also completely different. For example, Apache ZooKeeper requires stable and low latencies. In return, the software processes large amounts of data (or small but critical business transactions) in real time and is highly available. This process not only involves sending from A to B, but also loosely coupled and scalable data integration of source and target systems with Kafka Connect and continuous event processing (stream processing) with Kafka Streams or ksqlDB.

In this article, I explained the basic concepts of Apache Kafka, but there are other helpful components as well:

- The REST proxy [7] takes care of communication over HTTP(S) with Kafka (producer, consumer, administration).

- The Schema Registry [4] regulates data governance; it manages and versions schemas and enforces certain data structures.

- Cloud services simplify the operation of fully managed serverless infrastructures, in particular, but also of platform-as-a-service offerings.

Apache Kafka version 3.0 will remove the dependency on ZooKeeper (for easier operation and even better scalability and performance), offer fully managed (serverless) Kafka Cloud services, and allow hybrid deployments (edge, data center, and multicloud).

Two videos from this year's Kafka Summit [8] [9], an annual conference of the Kafka community, also offer an outlook on the future and a review of the history of Apache Kafka. The conference took place online for the first time in 2020 and counted more than 30,000 registered developers.