MinIO: Amazon S3 competition

Premium Storage

The Amazon Simple Storage Service (S3) protocol has developed astonishingly in recent years. Originally, Amazon regarded the service merely as a way to store arbitrary files online with a standardized protocol, but today S3 is a central service in the Amazon Web Services ecosystem. Little wonder that many look-alikes have cropped up. Ceph, for example, has offered a free alternative for years in the form of the Ceph Object Gateway, which can handle both the OpenStack Swift protocol and Amazon S3.

MinIO is now following suit: It promises a local S3 instance that is largely compatible with the Amazon S3 implementation and even claims to offer functions not found in the original. The provider, MinIO Inc. [1], is not shy about superlatives such as "world-leading" or even "industry standard." Moreover, MinIO is completely open source, which is reason enough to investigate the product. How does it work under the hood? What functions does it offer? How does it position itself compared with similar solutions? What does Amazon have to say?

How MinIO Works

MinIO is available under a free license, the GNU Affero General Public License v3 (AGPLv3). You can download the product directly from the vendor's GitHub repository [2]. MinIO is written entirely in Go, which keeps the number of dependencies to be resolved to a minimum.

MinIO Inc. itself also offers several other options to help admins install MinIO on their systems [3]. The ready-to-use Docker container, for example, is particularly practical for getting started in very little time. However, if you want to do without containers, you will also find an installation script on the provider's website that downloads and launches the required programs.

What looks simple and clear-cut from the outside comprises several layers under the hood. You can't see them because MinIO comes as a single big Go binary, but it makes sense to dig down into the individual layers.

Three Layers

Internally, a MinIO installation consists of an arbitrary number of nodes running MinIO services, which are divided into three layers. The lowest layer is the storage layer: Even an object store needs physical disk space, so MinIO requires block storage devices for its data, and it is the admin's job to provide them. Like other solutions (e.g., Ceph), MinIO takes care of its redundancy setup itself (Figure 1).

![Figure 1: The MinIO architecture is distributed and implicitly redundant. It also supports encryption at rest. ©MinIO [4]](images/F01-b02_minio-2.png)

The object layer sits on top of the storage layer. MinIO views every file uploaded to the store as a binary object. Incoming binary objects, whether from clients or from other MinIO instances in the same cluster, first end up in a cache, where MinIO decides what happens to them. It then passes them on to the storage layer in compressed or encrypted form.

Implicit Redundancy

Redundancy at the object store level is a good thing these days in a distributed solution like MinIO; hard drives are by far the most fragile components in IT. SSDs are hardly better: Contrary to popular opinion, they break down more often than hard drives, but at least they do it more predictably.

To cope with dying block storage devices, MinIO implements internal redundancy between the instances of a MinIO installation; the object layers communicate through their own protocol over a RESTful API. Notably, MinIO uses erasure coding instead of the classic one-to-one replication that Ceph uses by default. This approach has advantages and disadvantages: With one-to-one replication, the disk space required for the replicas of an object is dictated by the object size. If you operate a cluster with a total of three replicas, each object exists three times, and the net capacity of the system is reduced by two thirds. This principle has basically remained the same since RAID 1, which keeps just one additional copy of the data.

Erasure coding works differently: It splits each object into data fragments, calculates additional parity fragments, and distributes all fragments across the block devices. No single device holds a complete object, but the object can be reconstructed at any time from a sufficiently large subset of the fragments, which reduces the storage space required in the system. With a typical ratio of data to parity fragments, 100TB of user data requires almost 40TB of parity data (the exact amount depends on the configuration), whereas classic threefold replication would need 300TB of disk space.
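To make the capacity comparison concrete, the following Python calculation computes raw disk space for both approaches. It assumes a maximum distance separable code such as Reed-Solomon, in which an object split into k data fragments plus m parity fragments survives the loss of any m fragments. The 5+2, 8+3, and 12+4 layouts are illustrative choices, not MinIO defaults.

```python
def raw_capacity_replication(user_tb: float, copies: int) -> float:
    """Raw disk space when every object is stored `copies` times."""
    return user_tb * copies


def raw_capacity_erasure(user_tb: float, data_shards: int, parity_shards: int) -> float:
    """Raw disk space when each object is split into `data_shards` fragments
    plus `parity_shards` parity fragments of the same size."""
    return user_tb * (data_shards + parity_shards) / data_shards


if __name__ == "__main__":
    user_tb = 100.0  # net user data in TB
    print(f"3x replication: {raw_capacity_replication(user_tb, 3):.1f} TB raw")
    for k, m in [(5, 2), (8, 3), (12, 4)]:
        raw = raw_capacity_erasure(user_tb, k, m)
        print(f"EC {k}+{m}: {raw:.1f} TB raw, {raw - user_tb:.1f} TB parity, "
              f"tolerates {m} lost fragments")
```

A 5+2 layout matches the "almost 40TB" figure exactly, with 40TB of parity for 100TB of data. Wider stripes such as 12+4 need even less overhead, but every object is then spread across more drives.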

The downside appears when resynchronization becomes necessary, that is, when single block storage devices or whole nodes fail. Restoring the objects from the remaining data and parity fragments then takes considerable computational effort. Classic full replication only copies objects back and forth during resyncing, whereas with erasure coding the individual MinIO nodes need a while to catch up. If you use MinIO, make sure the environment has correspondingly powerful CPUs.
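The toy example below shows why a rebuild costs computation instead of simply copying data. It uses a single XOR parity fragment, the simplest possible erasure code and comparable to RAID 5. MinIO itself uses Reed-Solomon coding, which tolerates several lost fragments, but the principle is the same: Missing data must be recalculated from the surviving pieces. All function names here are hypothetical.

```python
from __future__ import annotations

from functools import reduce


def split_into_shards(data: bytes, k: int) -> list[bytes]:
    """Split data into k equally sized data shards, zero-padding the last one."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(shards: list[bytes]) -> bytes:
    """Single parity shard: the byte-wise XOR of all data shards."""
    return reduce(xor_bytes, shards)


def rebuild(shards: list[bytes | None], parity: bytes) -> list[bytes]:
    """Recalculate at most one lost data shard (marked as None).

    Every byte of the lost shard is computed from all surviving shards
    plus the parity shard; nothing can simply be copied back."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("a single XOR parity shard can repair only one loss")
    result = list(shards)
    if missing:
        survivors = [s for s in shards if s is not None]
        result[missing[0]] = reduce(xor_bytes, survivors, parity)
    return result


if __name__ == "__main__":
    original = b"MinIO erasure coding demo"
    shards = split_into_shards(original, 4)
    parity = make_parity(shards)
    damaged: list[bytes | None] = list(shards)
    damaged[2] = None  # simulate a failed drive
    restored = rebuild(damaged, parity)
    print(b"".join(restored)[: len(original)] == original)  # → True
```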

Eradicating Bit Rot

MinIO comes with a built-in data integrity checking mechanism. While the cluster is in use, the software checks the integrity of all stored objects in the background, transparently for both users and administrators. If it detects that an object stored somewhere is no longer in its original state, for whatever reason, it resynchronizes the object from an undamaged source in the cluster.
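Conceptually, bit-rot protection relies on checksums that are recorded when data is written and verified again later. The following Python sketch simplifies this to whole copies of an object. In MinIO, the checksums are applied to the erasure-coded fragments, and a damaged fragment is rebuilt from the remaining ones. `StoredCopy` and `scrub` are hypothetical names, and BLAKE2b from the standard library stands in for the HighwayHash algorithm that MinIO uses.

```python
from __future__ import annotations

import hashlib


def checksum(data: bytes) -> str:
    # Stand-in for MinIO's HighwayHash: the standard library has no
    # HighwayHash, so BLAKE2b plays that role in this sketch.
    return hashlib.blake2b(data, digest_size=32).hexdigest()


class StoredCopy:
    """One stored copy of an object plus the checksum recorded at write time."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.expected = checksum(data)

    def is_intact(self) -> bool:
        return checksum(self.data) == self.expected


def scrub(copies: list[StoredCopy]) -> int:
    """Verify every copy and overwrite damaged ones with an intact copy.

    Returns the number of copies that had to be repaired."""
    healthy = next((c for c in copies if c.is_intact()), None)
    if healthy is None:
        raise RuntimeError("no intact copy left; the object cannot be healed")
    repaired = 0
    for c in copies:
        if not c.is_intact():
            c.data = healthy.data
            c.expected = healthy.expected
            repaired += 1
    return repaired


if __name__ == "__main__":
    copies = [StoredCopy(b"quarterly report") for _ in range(3)]
    flipped = bytearray(copies[1].data)
    flipped[0] ^= 0x01  # a single flipped bit, as caused by bit rot
    copies[1].data = bytes(flipped)
    print(scrub(copies))  # → 1
```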

Cross-Site Replication

MinIO can also replicate data to another location. Object stores like Ceph do not support complete mirroring, for various reasons. MinIO, by contrast, officially supports this use case with built-in functions, and no additional external components are required.

With the MinIO client, which I discuss in detail later, admins simply define the source and target and issue the instructions for replication. The replication target does not even have to be another MinIO instance: Basically, any storage that can be connected to MinIO can be considered, including Amazon S3 itself or devices that speak the S3 protocol, such as some network-attached storage (NAS) appliances.
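Whatever tool performs it, a one-way mirror from source to target boils down to comparing two bucket listings. The Python sketch below shows only that decision step and rests on simplifying assumptions. The function `plan_mirror` and the sample keys are hypothetical. Each listing is assumed to be a dictionary of object keys and ETags that has already been fetched from the two stores. The actual data transfer and edge cases such as multipart ETags are left out. This is not how MinIO implements replication internally.

```python
from __future__ import annotations


def plan_mirror(
    source: dict[str, str],
    target: dict[str, str],
    remove_extra: bool = False,
) -> tuple[list[str], list[str]]:
    """Decide which objects a one-way mirror has to copy or delete.

    `source` and `target` map object keys to ETags (content fingerprints)."""
    # Copy anything that is missing on the target or whose content differs.
    to_copy = sorted(key for key, etag in source.items() if target.get(key) != etag)
    # Optionally delete objects on the target that no longer exist at the source.
    to_delete = sorted(key for key in target if key not in source) if remove_extra else []
    return to_copy, to_delete


if __name__ == "__main__":
    src = {"reports/q1.pdf": "a1", "reports/q2.pdf": "b2", "logo.png": "c3"}
    dst = {"reports/q1.pdf": "a1", "reports/q2.pdf": "old", "tmp.txt": "d4"}
    print(plan_mirror(src, dst, remove_extra=True))
    # → (['logo.png', 'reports/q2.pdf'], ['tmp.txt'])
```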

Encryption Included

Encryption is becoming increasingly important in light of data protection requirements and the growing desire for data sovereignty. In the past, the ability to enable transport encryption was considered sufficient, but today, the demand for encryption of stored data (encryption at rest) is growing. MinIO has heard admins calling for this function and provides a module for on-the-fly encryption as part of the object layer. On state-of-the-art CPUs, it uses hardware-accelerated encryption instructions to avoid the performance problems that computationally intensive encryption could otherwise cause.

Archive Storage

Various regulations today require, for example, government agencies to use write once, read many (WORM) devices, which means that data records can no longer be modified once they have been sent to the archive. MinIO can act as WORM storage. If so desired, all functions that enable write operations can be deactivated in the MinIO API.
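The rules a WORM archive enforces are easy to express in code. The following Python class is a toy model and not MinIO's API. `WormBucket`, `WormViolation`, and the retention periods are hypothetical. MinIO itself exposes this behavior through S3-compatible object locking on buckets.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


class WormViolation(Exception):
    """Raised when an operation would modify or delete protected data."""


class WormBucket:
    """Toy WORM store: objects can be written once and read many times,
    and cannot be deleted before their retention period has expired."""

    def __init__(self, retention: timedelta) -> None:
        self.retention = retention
        self._objects: dict[str, tuple[bytes, datetime]] = {}

    def put(self, key: str, data: bytes, now: datetime) -> None:
        if key in self._objects:
            raise WormViolation(f"{key} already exists and cannot be overwritten")
        self._objects[key] = (data, now + self.retention)

    def get(self, key: str) -> bytes:
        return self._objects[key][0]

    def delete(self, key: str, now: datetime) -> None:
        _, retain_until = self._objects[key]
        if now < retain_until:
            raise WormViolation(f"{key} is retained until {retain_until.isoformat()}")
        del self._objects[key]


if __name__ == "__main__":
    t0 = datetime(2021, 1, 1, tzinfo=timezone.utc)
    archive = WormBucket(retention=timedelta(days=3650))
    archive.put("records/2021-001.pdf", b"...", now=t0)
    try:
        archive.put("records/2021-001.pdf", b"tampered", now=t0)
    except WormViolation as err:
        print("refused:", err)
```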

However, don't get too euphoric at this point: Many authorities also require that systems be certified and legally approved for the task. The MinIO website shows no evidence that MinIO has any such certification, so in the worst case, policy reasons would rule out this solution in many countries.

S3 Only

MinIO makes redundant storage with built-in encryption, anti-bit-rot functions, and the ability to replicate to three locations easy to implement. S3 is the client protocol used – a major difference compared with other solutions, especially industry leader Ceph. Just as OpenStack Swift is designed for Swift only, MinIO is designed to operate as an S3-compatible object store only.

MinIO therefore lacks the versatility of Ceph: It cannot simply be used as a storage backend for virtual instance hard drives. Also, the features that Ceph implements as part of CephFS in terms of a POSIX-compatible filesystem are not part of the MinIO strategy, but if you're looking for S3-compatible storage only, MinIO is a good choice.

If you also need backend storage for virtual machines or Kubernetes workloads, you will have to run a second storage solution alongside MinIO, such as a NAS or storage area network system. Running two systems is not ideal from an administrative point of view. On the other hand, MinIO implements a significantly larger S3 feature set than the Ceph Object Gateway. In this respect, specialization is definitely a good thing.

Jack of All Trades

At this point, the MinIO client deserves a closer look. It is far more than a simple S3 client, and its features are not limited to MinIO: If you specify another S3-compatible store rather than a MinIO instance as the target, you can leverage the same features.

For example, the client emulates a kind of POSIX filesystem at the command line, except that the filesystem resides remotely in S3-compatible storage. The tool, mc, is already well known in the open source world and offers commands modeled on familiar UNIX tools, such as ls and cp. If you set up cp as an alias for mc cp and ls as an alias for mc ls, you don't even need to type more than the standard commands, which then always act on the S3 store. Additionally, mc provides auto-completion for various commands.

What is really important from the user's point of view, however, is that mc can also change the metadata of objects in the S3 store. To replicate a bucket from one S3 instance to another, you need the mirror command: You first register the S3 storage backends in mc and then specify which buckets must be kept in sync between the two locations. Replication between two MinIO instances is also quickly set up with mc replicate. To share a file in S3 temporarily through a time-limited link, you can use the mc share command, which supports various parameters.
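The temporary links that mc share hands out are presigned URLs. The expiry time and an HMAC signature derived from the secret key travel in the query string, so the server can verify the link without storing any session state. The Python sketch below builds such a URL by following the AWS Signature Version 4 query-string scheme that S3-compatible servers accept. The host, bucket, keys, and function name are made-up assumptions, as are path-style addressing and the region us-east-1. In production, an S3 SDK would do this job.

```python
from __future__ import annotations

import hashlib
import hmac
from datetime import datetime, timezone
from urllib.parse import quote


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def presign_get(host: str, bucket: str, key: str, access_key: str,
                secret_key: str, expires: int, now: datetime,
                region: str = "us-east-1") -> str:
    """Build a path-style, time-limited GET URL (AWS Signature Version 4).

    `now` must be a UTC timestamp."""
    if not 1 <= expires <= 604800:  # SigV4 presigned URLs last at most 7 days
        raise ValueError("expires must be between 1 second and 7 days")
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    scope = f"{date_stamp}/{region}/s3/aws4_request"
    path = "/" + quote(bucket, safe="") + "/" + quote(key, safe="/")
    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    # Query parameters are URI-encoded and sorted by name.
    query = "&".join(f"{quote(k, safe='')}={quote(v, safe='')}"
                     for k, v in sorted(params.items()))
    canonical_request = "\n".join([
        "GET", path, query,
        f"host:{host}\n",    # canonical headers, each terminated by a newline
        "host",              # list of signed headers
        "UNSIGNED-PAYLOAD",  # the body is not part of the signature
    ])
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])
    # Derive the signing key: date -> region -> service -> "aws4_request".
    signing_key = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, "s3", "aws4_request"):
        signing_key = _hmac_sha256(signing_key, part)
    signature = hmac.new(signing_key, string_to_sign.encode("utf-8"),
                         hashlib.sha256).hexdigest()
    return f"https://{host}{path}?{query}&X-Amz-Signature={signature}"


if __name__ == "__main__":
    url = presign_get("minio.example.com:9000", "photos", "2021/cat.jpg",
                      "EXAMPLEACCESSKEY", "EXAMPLESECRETKEY", expires=3600,
                      now=datetime.now(timezone.utc))
    print(url)
```

Anyone who holds the URL can download the object until it expires, and altering the expiry or the path invalidates the signature.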

Simple Management

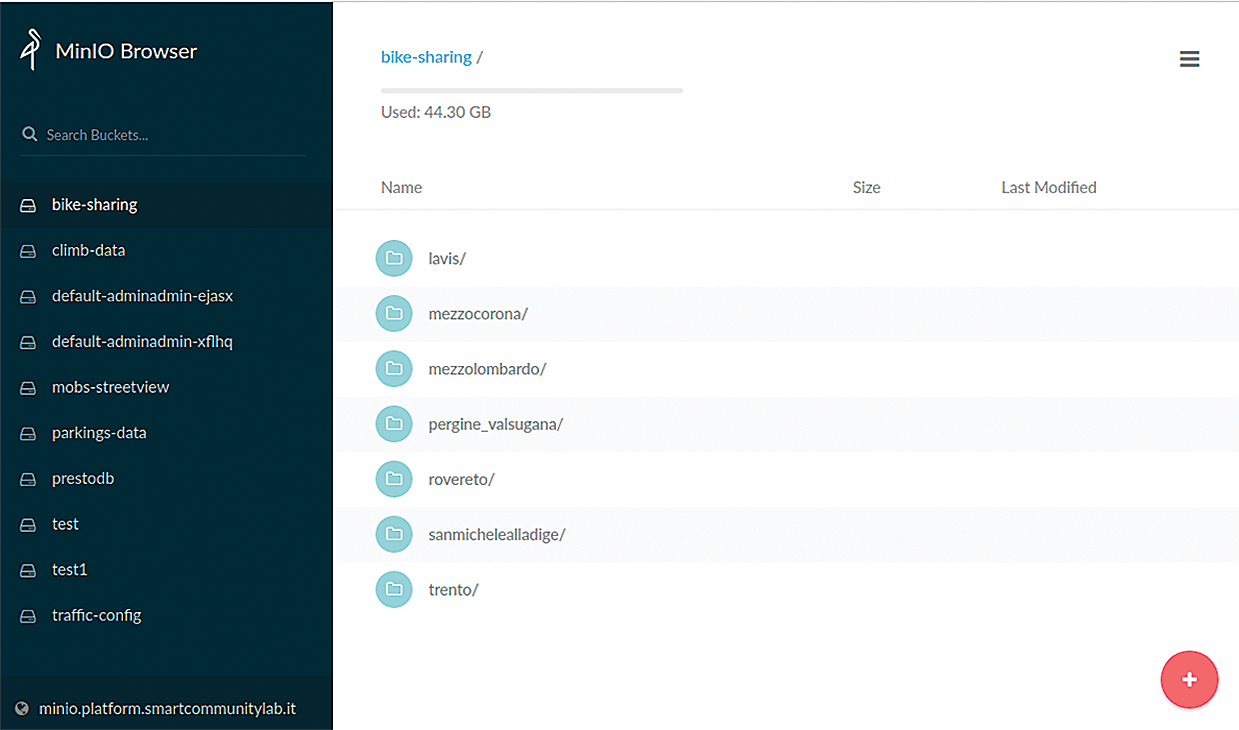

Another detail distinguishes MinIO from other solutions like the Ceph Object Gateway: The tool has a graphical user interface (GUI) for accessing the stored files. When called, the program, MinIO Browser (Figure 2), prompts for the usual S3 user credentials and then shows the user's buckets in an overview. Various operations can be initiated in the GUI, but it is not as versatile as the command-line (CLI) client. Most admins will probably prefer the CLI version, especially because, unlike the GUI, it is suitable for scripting.

Interfaces to Other Solutions

One central concern of the MinIO developers is apparently to keep their solution compatible with third-party services. In practice, this is very helpful because you do not have to set up central services such as user management specifically for MinIO. Instead, you can combine a MinIO installation with an external identity provider such as WSO2 or Keycloak.

This principle runs throughout the software. MinIO comes with its own key management service (KMS), for instance, which manages cryptographic keys. The KMS is part of the encryption mechanism that secures stored data and implements true encryption at rest. However, if you already run HashiCorp Vault, you can link MinIO to it through the configuration, which avoids a proliferation of single-purpose key stores.

Monitoring is another example: MinIO offers a native interface for the Prometheus monitoring, alerting, and trending solution, and the developers also provide matching queries for Grafana. MinIO can also be combined with various orchestrators, load balancers (e.g., Nginx and HAProxy), and service meshes such as Istio.

MinIO makes sure these connections also work in the opposite direction. Typical big data applications (e.g., Kafka, Hadoop, TensorFlow) can read data directly from MinIO and store data there. Moreover, MinIO is a capable backend storage solution for most backup systems: Because their vendors have supported the S3 protocol for years, MinIO is sufficiently compatible. If you use MinIO, you can therefore look forward to a versatile S3 store that communicates well with other components.

Commercial License

The range of functions described here makes it clear that MinIO represents a great deal of development work. MinIO Inc. releases MinIO under the terms of the AGPLv3, but it also offers an alternative commercial license that differs from the AGPLv3 in some key aspects. However, the vendor remains vague about the exact benefits of the commercial license.

According to the vendor, some companies do not want to deal with the detailed obligations that the AGPLv3 imposes on licensees. On its compliance website, the vendor cites companies that want to distribute MinIO as part of their own products as an example. Providers of storage appliances who use MinIO in their firmware would be one such case. If a provider like this uses MinIO under the terms of the AGPLv3, it must pass on the source code of the MinIO version used, including any modifications, to its customers. It must also grant them the same rights that the AGPLv3 grants to the provider itself. If the provider instead enters into a commercial license agreement with MinIO, the obligation to hand over the source code is waived.

SUBNET Makes It Possible

The far more common use case for the commercial license is probably customers who need commercial support directly from the manufacturer. The subscription program, known as SUBNET, has two levels. With a Standard subscription, customers automatically receive long-term support for one MinIO version for a period of one year. A service-level agreement (SLA) guarantees priority assistance for problems within 24 hours. To this end, customers have round-the-clock access to the manufacturer's support.

MinIO Inc. also provides help and advice on updates. A panic button feature provides emergency assistance from the provider's consultants, but only once a year. The subscription also includes an annual review of the storage architecture and a performance review. This package costs $10 per month per terabyte. However, charges are only levied up to a total capacity of 10PB; any capacity beyond this limit is effectively free of charge.

If this license is not sufficient, you can use the Enterprise license, which includes all the features of the Standard license, but costs $20 per month per terabyte up to a limit of 5PB. In return, customers receive five years of long-term support, an SLA with a response time of less than one hour, unlimited panic button deployment, and additional security reviews. In this model, the manufacturer also offers to reimburse customers for some damage that could occur to the stored data.

Costs for both models are based on the storage actually in use: If you have a 200PB cluster in your data center but are only using 100TB, you only pay for 100TB. Capacity consumed by redundancy is not billed either. If you use erasure coding for implicit redundancy, you only pay for the 100TB of user data, even though the data in the cluster occupies around 140TB.
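The billing rules described above fit into a few lines of Python. The prices and caps are the ones quoted in this article and may well have changed since. Decimal units (1PB = 1,000TB) and the function name `monthly_fee` are assumptions. Because only net used capacity counts, the input is the amount of user data, not the raw space consumed including redundancy.

```python
def monthly_fee(used_tb: float, price_per_tb: float, cap_tb: float) -> float:
    """Monthly subscription fee: only net used capacity is billed
    (no redundancy overhead), and nothing beyond the cap."""
    return min(used_tb, cap_tb) * price_per_tb


# Prices and caps as quoted in this article (1PB = 1,000TB).
STANDARD = {"price_per_tb": 10.0, "cap_tb": 10_000.0}
ENTERPRISE = {"price_per_tb": 20.0, "cap_tb": 5_000.0}

if __name__ == "__main__":
    for used in (100, 500, 20_000):
        print(f"{used:>6} TB used: Standard ${monthly_fee(used, **STANDARD):,.0f}, "
              f"Enterprise ${monthly_fee(used, **ENTERPRISE):,.0f}")
```

Both tiers reach the same ceiling of $100,000 per month, because 10PB at $10/TB and 5PB at $20/TB work out to the same amount.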

This license is not exactly cheap. For 500TB of used capacity, the monthly bill would be $5,000 with the Standard subscription and $10,000 with the Enterprise subscription, which is not a small amount for software-defined storage.

The Elephant in the Room

MinIO impresses with its feature set and proved robust and reliable in tests. Because the program uses the Amazon S3 protocol, it works with many clients on the market, as well as with a variety of tools with S3 integration. Therefore, a brief but critical look at the S3 protocol itself makes sense.

All of the many S3 clones on the market, such as the previously mentioned Ceph Object Gateway with its S3 interface, have one central feature in common: They use a protocol that Amazon never intended others to implement. Amazon S3 is not an open protocol and is not available under an open source license. The components underlying Amazon's S3 service are not available in open source form either.

All S3 implementations on the market today are the result of reverse engineering. The starting point is usually Amazon's S3 SDK, which developers can use to draw conclusions about which functions a store must be able to handle when calling certain commands and what feedback it can provide. Even Oracle now operates an S3 clone in its own in-house cloud, the legal status of which is still unclear.

For the users of programs like MinIO and for their manufacturers, this uncertain status results in at least a theoretical risk. So far, Amazon has watched from the sidelines and has not taken action against S3 clones, and the company may well stick to this strategy. However, it cannot be completely ruled out that Amazon will tighten the S3 reins in the future. The group could justify such a step, for example, by arguing that inferior S3 implementations on the market damage its core brand. That in turn could have negative consequences for companies that run S3 clones locally.

Suppose Amazon obtained a court order prohibiting MinIO, for example, from selling the software and providing services for it. Users of the software would then be left out in the cold overnight. MinIO would continue to run, but operating it would hardly make sense, so it would pose an inherent operational risk.

Unclear Situation

How likely this scenario is cannot realistically be quantified at the moment. For admins, genuine assurance that a functioning protocol will remain available in the long term can only come from open source approaches.

A prime example would be OpenStack Swift, which is the name of both the component and the protocol itself. However, the number of solutions on the market that implement OpenStack Swift is tiny: Besides the original, only the Ceph Object Gateway with Swift support is available. Real choice looks different.

Conclusions

MinIO is a powerful solution for a local object store with an S3-compatible interface. In terms of features, it is cutting edge: Continuous consistency checks in the background, encryption of stored data at rest, and erasure coding for more efficient use of disk space are functions you would expect from a state-of-the-art storage solution. Because the product is available free of charge under an open source license, it can also be tested and used in proof-of-concept installations. If you want commercial support, you can get the appropriate license, but expect to pay quite a serious amount of money for it.