Secure containers with a hypervisor DMZ

Buffer Zone

Containers have become an almost omnipresent component in modern IT. The associated ecosystem keeps growing and makes both using and integrating containers ever easier. For some, containers are the next evolutionary step beyond virtual machines: They launch faster, are more flexible, and make better use of available resources.

One question remains unanswered: Are containers as secure as virtual machines? In this article, I first briefly describe the current status quo. Afterward, I look at different approaches to eliminating security concerns. The discussion is limited to Linux as the underlying operating system, but this is not a real restriction, because the container ecosystem on Linux is more diverse than on competing platforms.

How Secure?

Only the specifics of container security are investigated here; exploiting an SQL vulnerability in a database or a vulnerability in a web server is not considered. Classic virtual machines serve as the benchmark. The question is: Do containers offer the same kind of security? More precisely: Do containers isolate individual instances from each other as well as, or even better than, virtual machines? And how vulnerable is the host to attack by the services running on it?

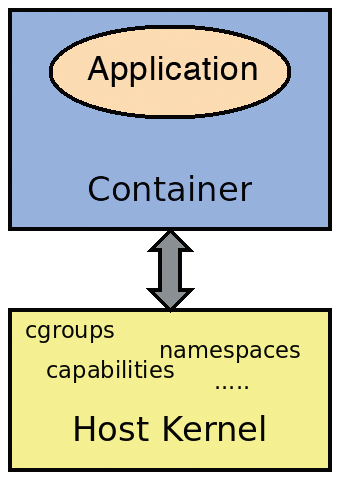

A short review of the basic functionality of containers is essential (Figure 1). Control groups and namespaces provided by the operating system kernel serve as fundamental components, along with some processes and access permissions assigned by the kernel. One major security challenge with containers immediately becomes apparent: A user who manages to break out of an instance goes directly to the operating system kernel – or at least dangerously close to it. The villain is thus in an environment that has comprehensive, far-reaching rights. Additionally, one kernel usually serves several container instances. In case of a successful attack, everyone is at risk.
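Namespaces and control groups are ordinary kernel features that any process can inspect. As a minimal sketch, assuming a Linux host with /proc mounted (the function names `show_namespaces` and `show_cgroups` are hypothetical), the following shell functions print the namespace identifiers and cgroup membership of a process. Processes in the same container report the same namespace IDs, but every container on the host, whatever its IDs, still talks to the same kernel.

```shell
#!/bin/sh
# Hypothetical helpers: inspect the kernel primitives a container is built from.
# Both default to the PID of the current shell.

# Print each namespace the process belongs to, e.g. "pid -> pid:[<inode>]".
show_namespaces() {
  pid=${1:-$$}
  for ns in /proc/"$pid"/ns/*; do
    printf '%s -> %s\n' "${ns##*/}" "$(readlink "$ns")"
  done
}

# Print the control groups the process is assigned to.
show_cgroups() {
  cat /proc/"${1:-$$}"/cgroup
}

show_namespaces
show_cgroups
```

Run once on the host and once inside a conventional container, the namespace IDs differ, while `uname -r` reports the same kernel release in both places: precisely the exposure described above.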

The obvious countermeasure is to prevent such a breakout, but that is easier said than done. The year 2019 alone saw two serious security vulnerabilities [1] [2]. Fortunately, 2020 brought no repeat. However, this tranquility might be deceptive: The operating system kernel is a complex construct with many system calls and internal communication channels. Experience shows that undesirable side effects or outright bugs turn up time and again, and some remain undiscovered for years. Is there no way to improve container security? Is trusting the hard work of the developer community the only option?

New Approach: Buffer Zone

The scenario just described rests on a particular assumption, or way of thinking: that breakouts from the container instance must be prevented, although this is only one possible aspect of container security. Preventing breakouts protects the underlying operating system kernel. Is there another way to achieve this? Might it be enough simply to limit the damage after such a breakout?

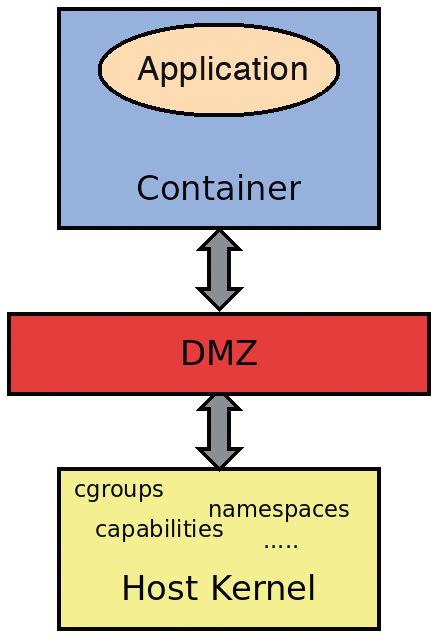

This approach is not at all new in IT. One well-known example comes from networking: When securing a website together with its application and database, a buffer zone known as the demilitarized zone (DMZ) [3] has long been standard practice. The same strategy can be applied to containers (Figure 2).

The three approaches that follow differ essentially in how "thick," or extensive, this buffer zone is.

Kata Containers

The first approach borrows heavily from classical virtualization with a hypervisor. One prominent representative is the Kata Containers [4] project, which has been active since 2017. Its sponsor is the Open Infrastructure Foundation, which many probably still know as the OpenStack Foundation [5].

However, the roots of Kata Containers go back further than 2017: The project merges two earlier efforts, Clear Containers [6] from Intel and runV [7] from the Hyper.sh secure container hosting service. Kata Containers use a type II hypervisor with a lean Linux as the buffer zone (Figure 3). The first versions used a somewhat leaner version of Qemu [8]; newer versions also support AWS's Firecracker [9] microhypervisor.

When this article was written, the Kata Containers project had just released a new version that reduced the number of components needed. In Figure 3, you can see a proxy process, which is completely missing from the current version. Moreover, only a single shim process is now running for all container instances.

The real highlight, however, is not the use of Qemu and the like, but the integration with container management tools. In other words, starting, interacting with, and stopping container instances should work just as it does with conventional containers. Kata uses two processes for this purpose.

The first, Kata Shim, extends the standardized shim of the container world and forms the part of the bridge that reaches into the virtual machine. There, the second process, the Kata agent, takes over; it runs as a normal process inside the virtual instance. In the standard installation, the guest operating system is a lean distribution by Intel named Clear Linux [10]. The kernel of the underlying operating system thus enjoys double protection: through the kernel of the guest operating system and through the virtualization layer.

Another benefit is high compatibility. In principle, any application that can be virtualized should work, and the same goes for existing container images. The laboratory tests produced no surprises. The use of well-known technologies also simplifies problem analysis and troubleshooting.

Hypervisor Buffer Disadvantages

Increased security combined with extensive compatibility comes at a price. On the one hand, it places heavier demands on hardware resources: A virtualization process has to run for each container instance. On the other hand, the virtualization software requires maintenance and provides an additional attack vector, which is why Kata Containers favors a slimmed-down version of Qemu. Speaking of maintenance, the guest operating system also has to be kept up to date; at the very least, critical bugs and vulnerabilities need to be addressed.

All in all, the gain in container security is bought with a larger attack surface on the host side and a significant increase in administrative overhead. Switching to microhypervisors could alleviate this problem, but again the question of compatibility arises, so the decision ultimately depends on how the particular microhypervisor is implemented. To gain practical experience, you can run Kata Containers with the Firecracker virtual machine manager (Listing 1) [11].

Listing 1: Kata and Firecracker

```
$ docker run --runtime=kata-fc -itd --name=kata-fc busybox sh
d78bde26f1d2c5dfc147cbb0489a54cf2e85094735f0f04cdf3ecba4826de8c9
$ pstree|grep -e container -e kata
|-containerd-+-containerd-shim-+-firecracker---2*[{firecracker}]
|            |                 |-kata-shim---7*[{kata-shim}]
|            |                 `-10*[{containerd-shim}]
|            `-14*[{containerd}]
$
$ docker exec -it kata-fc sh
/ #
/ # uname -r
5.4.32
```
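Listing 1 shows a dedicated firecracker process under containerd-shim. To check for yourself that every Kata instance brings its own virtualization process, you can count VMM processes before and after starting containers. The following is a minimal sketch, assuming a Linux host with /proc; `count_procs` is a hypothetical helper that scans /proc/<pid>/comm (which the kernel truncates to 15 characters) instead of relying on `pgrep` being installed.

```shell
#!/bin/sh
# Hypothetical helper: count running processes whose command name
# (from /proc/<pid>/comm, truncated by the kernel to 15 chars) equals $1.
# /proc/<pid> lists processes only, so threads are not counted separately.
count_procs() {
  name=$1
  n=0
  for comm in /proc/[0-9]*/comm; do
    # A process may exit between globbing and reading; ignore such errors.
    if [ "$(cat "$comm" 2>/dev/null)" = "$name" ]; then
      n=$((n + 1))
    fi
  done
  echo "$n"
}

# Per the description above, this should grow by one for each container
# started with --runtime=kata-fc.
echo "firecracker processes: $(count_procs firecracker)"
```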

Behind the Scenes

An installation of Kata Containers comprises a number of components that fall roughly into two classes. The first class consists of the processes that run on the host system, such as Kata Shim and the hypervisor; older versions added a proxy. The second comprises everything that runs inside the Kata container, from the operating system within the virtual machine to the Kata agent and the application. In extreme cases, you are thus confronted with more than a handful of components that need to be kept up to date and secure. However, the typical application does not involve more than three parts.

The processes and development cycles for the host operating system and the application are independent of Kata Containers. The remaining components can be managed as a single unit: Popular Linux distributions offer software repositories that support easy installation, updating, and removal of all of them, including the hypervisor, the guest kernel, the entire guest operating system, Kata Shim, and the Kata agent. Incidentally, Kata-style containers can easily be operated in parallel with conventional containers.

Double Kernel Without Virtualization

As already mentioned, the hypervisor approach just described has two isolation layers – the guest operating system or its kernel and the virtualization itself. The question arises as to whether this could be simplified. How about using only one additional kernel as a buffer zone? Good idea, but how do you start a Linux kernel on one that is already running?

If you've been around in the IT world for a few years, you're probably familiar with user-mode Linux (UML) [12]. The necessary adjustments have been part of the kernel for years, but initial research shows that this project is pretty much in a niche of its own – not a good starting position to enrich the container world.

A second consideration might lead you to the kexec() system call. Its typical use is loading a second kernel to collect data about what caused the primary Linux kernel to fail, which has very little to do with running containers: Those run as several instances at the same time and for far longer.

Whether UML or kexec(), neither can be used to increase container security without extensive additional work.

gVisor

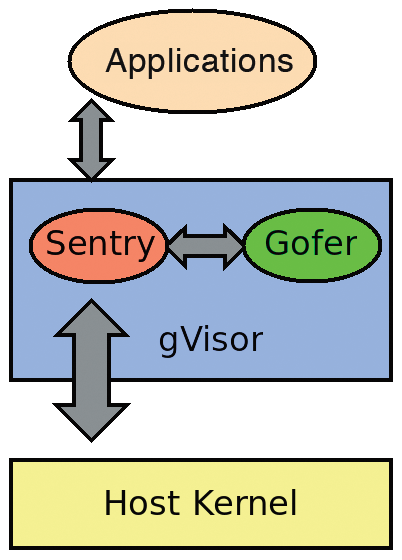

In its gVisor [13] project, Google has implemented a watered-down variant of starting a second kernel. Establishing an additional operating system kernel as a buffer zone poses two major challenges: The first is running one or even several additional kernels; the second is transparent integration into the existing world of container management tools and methods.

The gVisor project, written in the fairly modern Go programming language, takes a pretty pragmatic approach to both: The software only pretends to be a genuine Linux kernel. The result is a normal executable file that implements all the necessary functions of the operating system kernel, so the questions of how to load such a kernel and how to run several of them at once are off the table.

Imitating a Linux kernel is certainly difficult, but not impossible. To minimize the additional attack surface, the range of functions can be reduced accordingly, because "only" containers have to run. The gVisor kernel comprises two components (Figure 4): Sentry mimics the Linux kernel and intercepts the corresponding system calls, and Gofer controls access to the host system's volumes.

Attentive readers might wonder what could go wrong with this strategy, and indeed things got off to a bit of a bumpy start: Fans of the Postgres database had some worries because gVisor lacked support for the sync_file_range() system call [14]. By now, this is ancient history. Even inexperienced programmers can get an overview of the supported system calls from the gvisor/pkg/sentry/syscalls/linux/linux64.go file, but you do not have to go into that much detail: The developer website supplies a compatibility list [15].
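Before moving a workload to gVisor, it can help to compare the system calls it uses against the compatibility list. The following is a minimal sketch, assuming you have saved both as plain-text files with one syscall name per line (how you collect the application's list, for example by tracing it, is up to you); `unsupported_syscalls` is a hypothetical helper built on the standard `sort` and `comm` utilities.

```shell
#!/bin/sh
# Hypothetical helper: print system calls listed in $2 (used by the app)
# that do not appear in $1 (supported by the sandbox).
# Input format (assumed): one syscall name per line.
unsupported_syscalls() {
  supported=$1 used=$2
  tmp_s=$(mktemp) tmp_u=$(mktemp)
  # comm needs sorted input; -u also drops duplicate entries.
  sort -u "$supported" > "$tmp_s"
  sort -u "$used" > "$tmp_u"
  # -1 hides lines only in the first file, -3 hides lines in both,
  # leaving lines that occur only in the "used" list.
  comm -13 "$tmp_s" "$tmp_u"
  rm -f "$tmp_s" "$tmp_u"
}
```

In the Postgres case described above, a "used" list containing sync_file_range would have flagged the gap before deployment.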

Gofer acts as a kind of proxy and communicates with the other gVisor component, Sentry. If you want to know more about this exchange, take a look at a fairly ancient protocol, 9P [16], which originated in the late 1980s at the legendary Bell Labs.
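9P's wire format is simple enough to assemble by hand. Purely as an illustration (this is not gVisor's Gofer code), the sketch below encodes the protocol's opening Tversion message according to the 9P2000 layout: size[4] type[1] tag[2] msize[4] version[s], with integers in little-endian order, type 100 for Tversion, the special tag NOTAG (0xFFFF), and strings prefixed by a 2-byte length. The helper names `byte`, `le16`, `le32`, and `tversion` are hypothetical.

```shell
#!/bin/sh
# Emit one raw byte (value 0-255) via a printf octal escape.
byte() { printf "\\$(printf '%03o' "$1")"; }

# 9P encodes integers in little-endian byte order.
le16() { byte $(($1 & 255)); byte $(($1 >> 8 & 255)); }
le32() { le16 $(($1 & 65535)); le16 $(($1 >> 16 & 65535)); }

# Tversion: size[4] type[1]=100 tag[2]=NOTAG msize[4] version[s],
# where a string s is a 2-byte length followed by its bytes.
tversion() {
  msize=$1 version=${2:-9P2000}
  len=${#version}
  le32 $((4 + 1 + 2 + 4 + 2 + len))  # total size includes the size field
  byte 100                            # Tversion
  le16 65535                          # NOTAG
  le32 "$msize"                       # maximum message size proposed
  le16 "$len"
  printf '%s' "$version"
}
```

Piping `tversion 8192` into `od -An -tx1` shows the 19 bytes 13 00 00 00 64 ff ff 00 20 00 00 06 00 39 50 32 30 30 30: length, type, tag, msize, and the string "9P2000".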

Shoulder Surfing gVisor

Compared with Kata Containers, gVisor looks simpler: Instead of multiple software components, you only need to manage a single executable file, and the additional attack surface on the overall system is smaller. On the downside, compatibility takes extra effort: Unless you are willing to invest work of your own, you depend on the commitment of the gVisor developers.

Secure Containers with gVisor

The first steps with gVisor are easy: You only have to download and install a single binary file. One typical pitfall (besides unsupported system calls) is the kernel version gVisor reports, 4.4.0 by default. As is often the case in open source software, however, this version is little more than a string (Listing 2).

Listing 2: gVisor Kernel Buffer Zone

```
$ cat /etc/docker/daemon.json
{
    "runtimes": {
        "oci": {
            "path": "/usr/sbin/runc"
        },
        "runsc": {
            "path": "/usr/local/bin/runsc",
            "runtimeArgs": [
                "--platform=ptrace",
                "--strace"
            ]
        }
    }
}
$ uname -r
5.8.16-300.fc33.x86_64
$ docker run -ti --runtime runsc busybox sh
/ #
/ # uname -r
4.4.0
```

The version number is stored in the gvisor/pkg/sentry/syscalls/linux/linux64.go file. Creating a customized binary file is easy thanks to (traditional) container technology. Listing 2 also shows how gVisor can be installed and operated in parallel with other runtime environments. You only need to specify the path to the binary file and any necessary arguments.
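The reported kernel release can double as a quick sanity check that a runtime really places a separate kernel, real or emulated, between container and host. The following is a heuristic sketch, assuming Docker with runtimes registered under the names used in Listings 1 and 2 (kata-fc, runsc); `kernel_buffer_check` is a hypothetical name. A differing release string is a strong hint, while an identical one is not proof of a shared kernel, because a guest can run the same release as the host.

```shell
#!/bin/sh
# Hypothetical heuristic: compare the kernel release seen inside a
# container started with runtime $1 (image $2, default busybox)
# with the host's release.
# Exit status: 0 = differs, 1 = identical, 2 = container failed to run.
kernel_buffer_check() {
  runtime=$1 image=${2:-busybox}
  host=$(uname -r)
  guest=$(docker run --rm --runtime="$runtime" "$image" uname -r) || return 2
  if [ "$host" = "$guest" ]; then
    echo "$runtime: same release as host ($host), possibly a shared kernel"
    return 1
  fi
  echo "$runtime: guest reports $guest, host runs $host"
}
```

With the setup from Listing 2, `kernel_buffer_check runsc` should report the guest as 4.4.0 and the host as 5.8.16-300.fc33.x86_64.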

Minimalists

Finally, a third approach adopts the ideas of the first two and pushes minimalism to the extreme. Again, a kind of microhypervisor does the work, this time together with an ultra-minimalistic operating system kernel from an old acquaintance, the family of unikernels.

Unikernel implementations vary widely. OSv [17], for example, still maintains a degree of Linux compatibility, which shows parallels to the gVisor kernel presented here. At the other end of the reusability spectrum is MirageOS, the godfather of Nabla containers [18]: Here you build the application and kernel completely from scratch, and both are precisely matched to each other. As a result, you have only one executable file; in other words, the application is the kernel and vice versa. One layer below sits the microhypervisor, which specializes in executing unikernels and has little in common with its colleagues Firecracker, Nova [19], or Bareflank [20].

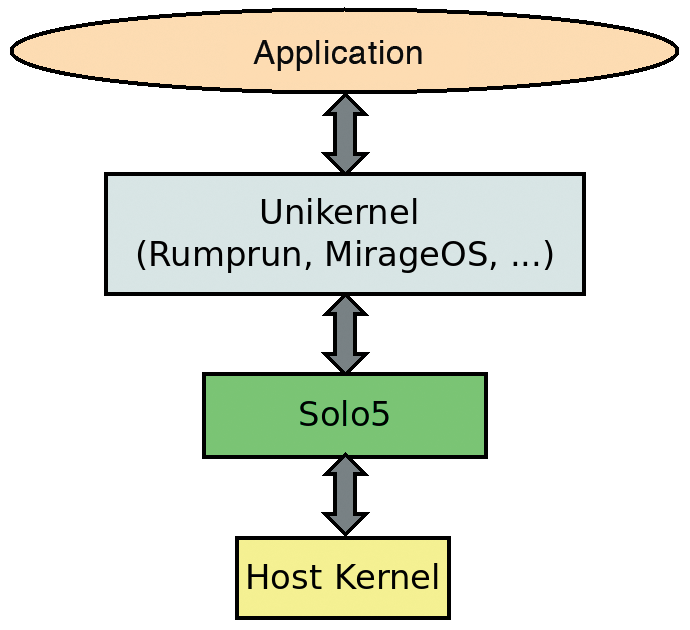

One implementation of this approach is Nabla containers, whose roots lie in IBM's research laboratories. Solo5 serves as the microhypervisor. Originally intended only as an extension of MirageOS for KVM [21], it is now a framework for executing various unikernel implementations. Despite this proximity to MirageOS, Nabla containers have developed a certain preference for rump kernels [22].

Figure 5 shows the schematic structure, from which the name "Nabla" derives. At the top sits the microhypervisor with the unikernel, which in turn rests on the application. The size of the components reflects their importance from a business perspective, and the order corresponds to the layers of the technology stack. The result is an upside-down triangle that closely resembles the nabla symbol (∇) from vector analysis.

A few advantages of Nabla containers are obvious: As with the Kata approach, the operating system kernel and the virtualization form two isolation layers, but both are greatly minimized and require significantly fewer resources than the combination of Clear Linux and Qemu Lite. Additionally, Nabla containers prohibit almost all system calls by means of the kernel's secure computing mode (seccomp) [23]. Only the following system calls remain available:

read(), write(), exit_group(), clock_gettime(), ppoll(), pwrite64(), pread64()
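The effect of such a tight policy can be modeled as a simple allowlist lookup. The sketch below only mimics the filtering decision, assuming the list above is complete; it installs no actual seccomp filter, which in practice is a BPF program loaded into the kernel via seccomp(2) or prctl(2). The function name `nabla_allows` is hypothetical.

```shell
#!/bin/sh
# Model of a Nabla-style seccomp allowlist: succeed (status 0) only for
# the seven system calls listed above; everything else would be blocked.
# Illustration only; this does not configure seccomp.
nabla_allows() {
  case $1 in
    read|write|exit_group|clock_gettime|ppoll|pwrite64|pread64) return 0 ;;
    *) return 1 ;;
  esac
}

for call in read openat pwrite64 execve; do
  if nabla_allows "$call"; then
    echo "$call: allowed"
  else
    echo "$call: blocked"
  fi
done
```

Even an everyday call such as openat() falls outside the list.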

On the downside, unlike Kata Containers or gVisor, existing container images cannot be used directly with the Nabla approach, revealing a clear lack of compatibility. Tests conducted by the editorial team showed that the migration overhead is huge, even for small applications.

Where to Next?

The container community takes the issue of security very seriously. In principle, there are two parallel streams: One deals with improving the container software, and the other, as discussed in this article, deals with methods for establishing additional outside lines of defense. The idea of using a DMZ from the network sector is experiencing a renaissance. An additional operating system kernel – and sometimes even a virtualization layer – acts as a buffer zone between the application and the host.

Basic compatibility with the familiar container management tools is a given; the secure runtimes can even be operated completely in parallel with conventional ones (see also Listing 2). However, the reusability of existing applications and container images differs widely: Kata Containers put the fewest obstacles in the user's way, and Nabla containers sit at the other end of the spectrum. Either way, the idea of the buffer zone is as simple as it is brilliant. Thanks to the different implementations, there should be something to suit everyone's taste.