Hardware suitable for cloud environments

Key to Success

Cloud environments are complex, costly installations with countless individual components. Because they should scale seamlessly across the board, their planning is already far more complex than that of conventional setups. Various basic design parameters are set by the platform's administrator before even a single machine is installed in the rack.

If you are planning a large, scalable platform, you need to do it in a way that leaves as many options open for later as possible. How hard that is varies from one level of the setup to the next. Compute nodes, for example, may well need to be more powerful in a few years' time than current-generation systems, but that is easy to handle: Existing systems are simply replaced by new ones as soon as the older ones are no longer maintainable.

Critical infrastructure is more difficult. If the network switches are on their last legs, all the power in the world from the compute nodes is not going to help. If the network has not been sensibly planned, its hardware cannot be replaced or scaled easily. Mistakes in the design of a platform are therefore a cardinal sin, because they can only be corrected later with considerable effort or, in the worst case, not at all.

In this article, I look at the aspects you need to consider when planning the physical side of a cloud environment at four levels: network, storage, controller, and compute.

Network: Open Protocols and Linux

The first aspect to consider is the network, because this is where all the threads literally come together. If you come from a conventional IT background and are planning a setup for the first time, you will probably be used to having an independent team take care of the network. Usually, this team will not grant other admins a say in the matter of the hardware to be procured. The vendors are then generally Cisco or Juniper, which ties you to a central network supplier. In large environments, however, this is a problem, not least because the typical silo formation of conventional setups no longer works in the cloud.

Centralized compute management, whether with OpenStack, CloudStack, OpenNebula, or Proxmox, comprises several layers with different protocols. At the latest when protocols such as Ethernet virtual private network (EVPN) come into play, debugging has to cover all of these layers.

The classic network admin who debugs the switches from the comfort of a quiet office does not exist in clouds like this because you always have to trace the path of packets across the boundaries of Linux servers. Therefore, good generalists should be preferred as administrators over network and storage specialists.

Future-Proofing

The question of future-proofing a certain solution arises when it comes to the topic of networks. Undoubtedly, several powerful software-defined network (SDN) approaches are on the market, and each major manufacturer has its own portfolio. These solutions regularly spread across multiple network layers, so they need to be integrated tightly with the virtualization solution.

However, you do not build a cloud for the next five years and then replace it with another system. If you are planning a setup with a lifetime on the order of decades, one of the central questions is whether the provider of the SDN solution will still support it in 10 years' time. Will Cisco still support OpenStack in 2030 in such a way that it remains integrated with Cisco's Application Centric Infrastructure (ACI) SDN solution? And what is your plan if support is discontinued? On a running platform, the SDN can hardly be swapped for another solution.

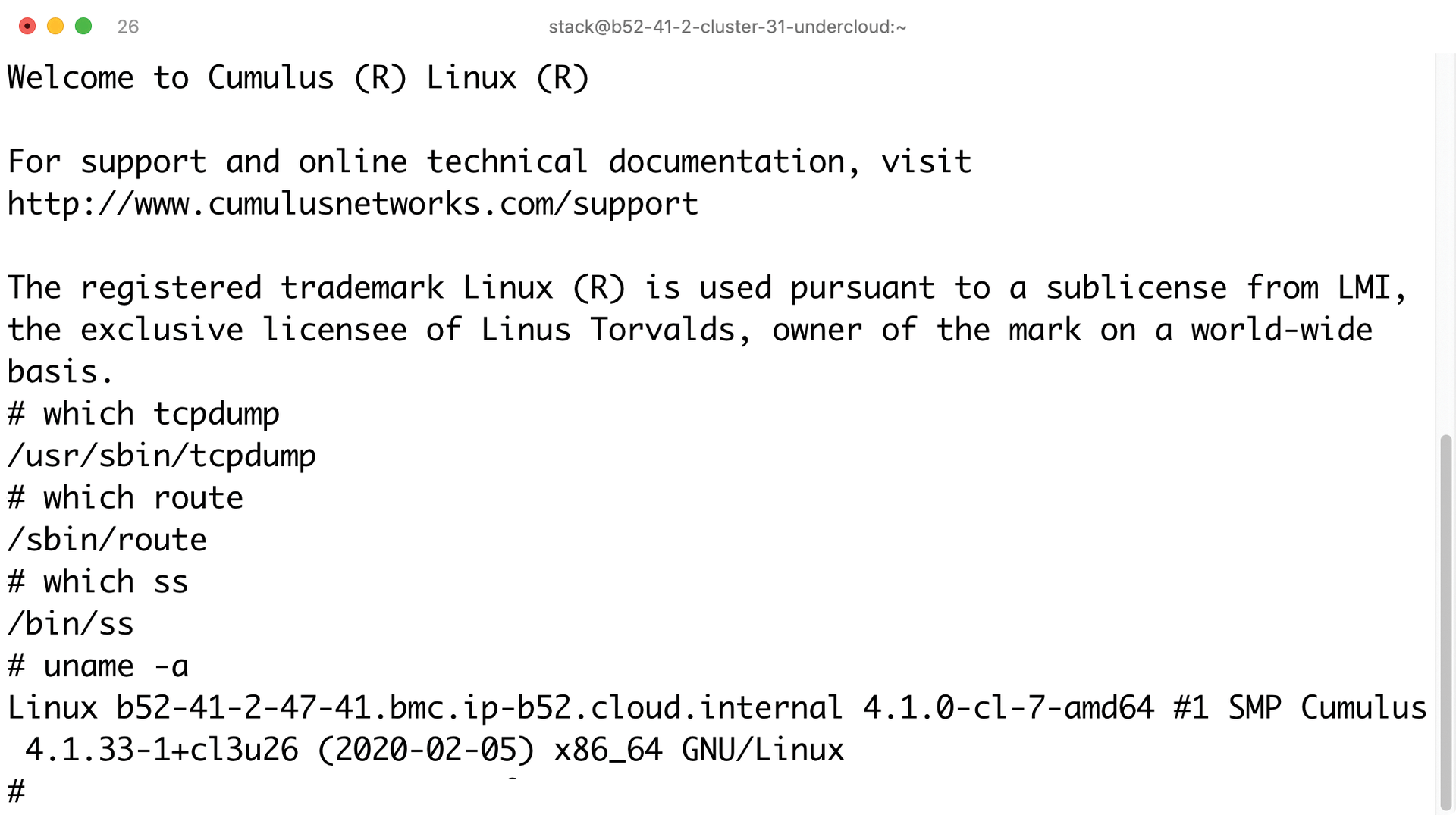

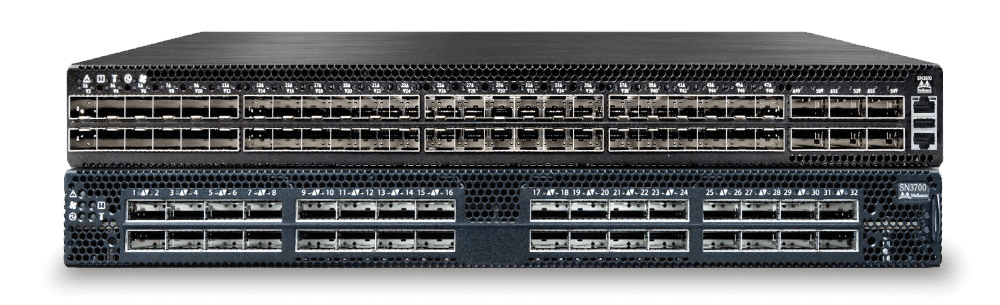

In my experience, it is sensible to rely on open standards such as the Border Gateway Protocol (BGP) and EVPN when it comes to networks. Switches with Linux offer admins a familiar environment (Figure 1) and are now available with throughput rates of up to 400Gbps per port (Figure 2). In this way, you can build a typical spine-leaf architecture. Each rack acts as a separate Layer 2 domain, and the switches use internal BGP (iBGP) to route the traffic between the racks in Layer 3 of the network.

Unlike in a classical star architecture, bandwidth does not shrink with every switch level further down in such an environment. If you later discover that the spine layer is no longer up to the demands of the environment, an additional layer can be added during ongoing operation, without any downtime.

However, you do not want to be stingy with bandwidth. Redundant 25Gbps links, bundled with the Link Aggregation Control Protocol (LACP), should be available for each server, but more is always better.
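To get a feel for how much uplink capacity a rack needs, a rough oversubscription estimate helps. The following Python sketch is only an illustration: The server count per rack, the number of uplinks, and the uplink speed are assumptions chosen for the example, not figures from a specific deployment.

```python
def oversubscription_ratio(servers, links_per_server, link_gbps,
                           uplinks, uplink_gbps):
    """Return the ratio of server-facing to spine-facing bandwidth for one rack."""
    downlink = servers * links_per_server * link_gbps
    uplink = uplinks * uplink_gbps
    return downlink / uplink


# Assumed example: 16 servers with 2 x 25Gbps each and
# 4 x 100Gbps uplinks from the rack's leaf switches to the spine layer.
ratio = oversubscription_ratio(servers=16, links_per_server=2, link_gbps=25,
                               uplinks=4, uplink_gbps=100)
print(f"Oversubscription: {ratio:.1f}:1")
```

A ratio close to 1:1 means the rack can push nearly all of its server bandwidth toward the spine layer at once. Higher ratios are cheaper but leave less headroom for storage replication traffic.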

Open Protocols in the Cloud

If you construct your network as described, you get a completely agnostic transport layer for the overlay traffic within the cloud. EVPN and iBGP are currently difficult to merge with the SDN solutions of common platforms. Ultimately, however, this step is no longer necessary because Open vSwitch, in particular, has seen massive change in recent years. In the form of Open Virtual Network (OVN), a central control mechanism is now available for Open vSwitch that works far better than Open vSwitch on its own. Support for OVN is already found in many cloud solutions, most notably OpenStack, in which it has become the standard, so worries about an end to OVN support are unfounded.

Storage Questions: NAS, SAN, SDS

Once you have planned the network and procured the appropriate hardware, you can move on to the next important topic: storage. Here too, it is often necessary to say goodbye to cherished conventions. The idea of buying a classic network-attached storage (NAS) or storage area network (SAN) appliance from a manufacturer and connecting it to the cloud over Ethernet is undoubtedly obvious. Today, such devices are also considerably cheaper than just a few years ago, because several manufacturers are trying to gain a foothold in this market by means of an aggressive pricing policy. Despite all the progress made, central network storage still has enormous design problems. However redundant such devices may be internally, they always form a single point of failure, which becomes a problem at the latest when the power in the data center is switched off because of a fire or similar disaster.

Even worse, these devices do not allow horizontal scaling in a meaningful way. When a platform scales across the board, its central infrastructure must grow with it. For OpenStack or other compute management software, NAS and SAN systems are therefore hardly a sensible option. Moreover, by buying such a device, you expose yourself to vendor lock-in. Storage is much like SDN in this respect: Once a platform is in production, a component such as the underlying storage can hardly be removed during operation and replaced by another product. Admittedly, most clouds let you operate several storage solutions in parallel. In your own interest, however, you will want to save yourself the effort of copying data from one storage device to another during operation.

Alternatives exist in the form of software-defined storage (SDS), including in the open source world. When it comes to object storage, Ceph is the industry leader by a country mile, although there are other approaches as well, such as DRBD by Linbit. Although DRBD began its career as a replication solution for two systems, it now scales across the board: It dynamically creates local volumes of the desired size on a network of servers with storage devices and then automatically configures replication between these resources.

Flash or Not Flash, That is the Question

No matter which kind of storage solution you decide on, one central question is almost always asked: Do you go for slow hard disks, fast SSDs, or a mixture of the two? This debate only makes sense in the first place because flash-based storage, essentially solid-state drives (SSDs) and NVMe devices, has become significantly cheaper in recent years and is now available in acceptable sizes.

A single 8TB SSD, for example, costs around $2,200 (EUR2,000) on the free market. The price can undoubtedly be reduced considerably if you buy 30 or 40 units from your trusted hardware dealer. In any case, it is worth calculating the price difference between disks and SSDs.

As an example, assume a Ceph setup that initially comprises six servers with a gross capacity of 450TB. The effectively usable storage capacity is thus 150TB. Each individual node must therefore contribute 75TB to the total storage capacity. A good Western Digital server hard drive costs about $260 (EUR250), and 10 of them are required for each server. If you add SSDs to the hard disks as fast cache for Ceph's write-ahead log (WAL) and metadata database, the storage devices cost around $3,800 (EUR3,500) per server. Additionally, you need a 2U server for about $6,500 (EUR6,000). Roughly calculated, a Ceph server like this would cost about $13K (EUR10K), with an entire cluster for around $100K (EUR80K).
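The capacity figures in this example can be reproduced with a few lines of Python. This is a minimal sketch under two assumptions that the text only implies: a replication factor of 3 (which turns 450TB gross into 150TB usable) and 8TB drives (which gives 10 drives per node). The function and parameter names are made up for the illustration.

```python
import math


def ceph_sizing(gross_tb, nodes, replicas, drive_tb):
    """Derive usable capacity, per-node share, and drives per node."""
    usable_tb = gross_tb / replicas      # every object is stored `replicas` times
    per_node_tb = gross_tb / nodes       # gross capacity each node contributes
    drives_per_node = math.ceil(per_node_tb / drive_tb)
    return usable_tb, per_node_tb, drives_per_node


# Assumed values: replication factor 3 and 8TB drives.
usable, per_node, drives = ceph_sizing(gross_tb=450, nodes=6,
                                       replicas=3, drive_tb=8)
print(f"usable: {usable:.0f}TB, per node: {per_node:.0f}TB, "
      f"drives per node: {drives}")
```

Changing the drive size or node count shows quickly how the number of devices per server shifts, which matters for the per-server cost of storage devices.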

The same cluster with SSDs costs significantly more on paper. Here, the storage devices cost about $20K (EUR18K) per system, so that, in addition to the server itself, it comes in around $32K (EUR24K) per machine and a total of $240K (EUR192K), bottom line. In return, however, the SSD-based Ceph cluster is seriously fast, gets by without caching hacks, and offers all the other advantages of SSDs (Figure 3). The additional expenditure of approximately $140K (EUR110K) quickly pays dividends, if you consider the total proceeds of a rack over the duration of its lifetime. With several million dollars of turnover, which a rack achieves given a good level of utilization, the extra $140K no longer looks like such a bad deal.

Storage Hardware Can Be Tricky

If you decide on a scalable DIY storage solution, you purchase the components to reflect your specific needs. The examples of Ceph and DRBD illustrate this well.

I already looked at what the hardware for a Ceph cluster can look like, in principle. The number of object storage daemons (OSDs) per host should not exceed 10; otherwise, if one Ceph node fails, significant resynchronization traffic would disrupt regular operations or drag on forever if you slow it down accordingly. The trick of giving the OSDs SSDs as fast cache is well known, and it still works.

Ceph and RAID controllers don't get on very well in a team. Therefore, if RAID controllers cannot be replaced by simple host bus adapters (HBAs), they should be operated in HBA mode. Battery-backed caches are more likely to prove a hindrance in the worst case scenario because they can cause unforeseen problems in Ceph and slow it down.

With regard to CPU and RAM, the rule of thumb is still valid today that Ceph requires one CPU core per OSD and 1GB of RAM per terabyte of storage offered. For a node with 10 OSDs, a medium-sized multicore CPU in combination with 128GB of RAM is sufficient. Today, these values are at the lower limits of the scale for most hardware manufacturers.
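As a quick sanity check, the rule of thumb can be written down as a tiny Python helper. The sketch assumes 8TB per OSD, a value chosen for illustration. It returns minimums only, without any allowance for the operating system or recovery peaks.

```python
def ceph_node_minimums(osds, tb_per_osd):
    """Rule of thumb: one CPU core per OSD, 1GB of RAM per TB of storage."""
    min_cores = osds
    min_ram_gb = osds * tb_per_osd
    return min_cores, min_ram_gb


# Assumed example: 10 OSDs of 8TB each.
cores, ram_gb = ceph_node_minimums(osds=10, tb_per_osd=8)
print(f"at least {cores} cores and {ram_gb}GB of RAM")
```

For this assumed node, the minimum works out to 10 cores and 80GB of RAM, so a 128GB machine leaves comfortable headroom.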

DRBD with Higher Performance Requirements

If you consider DRBD to be an alternative to Ceph, you need fundamentally different hardware. DRBD is far less complex at its core than Ceph; the focus is clearly on copying data back and forth between two block storage devices at the block level of the Linux kernel.

Both in terms of CPU and RAM, DRBD systems are therefore far more frugal than the corresponding servers for Ceph. In terms of storage performance, however, they have far higher requirements for the individual devices than Ceph, because DRBD, unlike Ceph, does not distribute its writes across many spindles throughout the system.

You can intervene to improve performance by putting the DRBD activity log on fast storage. The journal receives each write and then writes it out to the storage backend. If NVMe is used, it makes a noticeable difference. Even a battery-backed cache does not trip up DRBD.

Compute: The Art of Costing

Once you have made it this far, you are facing the last piece of the hardware puzzle: the hardware for the compute nodes. Compute is one of the two services that cloud environments typically provide, and need to provide; the other is storage. However, an Amazon Simple Storage Service (S3)-compatible interface is now almost always part of standard object stores, so Ceph, for example, simply handles the storage role itself. In any case, compute is the most frequently requested service.

The question as to which hardware is suitable for compute is not easily answered. Several factors play an important role. At least in the public cloud context, the motto is: Bigger is always better. Platforms of this kind can only be operated profitably if they grow quickly. However, profit does not lie in the combination of storage and compute, as many admins suspect.

In large Ceph clusters, a single gigabyte costs well under a cent to provide, and even with terabytes of data and a high margin, the customer pays very little for storage. The decisive factor for the admin is instead the selling price of a single virtual CPU (vCPU) less the production costs incurred for it. The company uses this factor to scale its turnover with the platform, and thus ultimately its own profit.

So the question as to which hardware is suitable for compute is directly linked to the price of a single vCPU, which in turn is determined by the number of available CPUs per comparison unit. Although this sounds complicated in theory, it is actually quite simple to calculate. To work things out, you need to fire up your favorite spreadsheet.

Assume a complete rack with 42 height units, of which at least two units are reserved for the necessary network hardware. Most data centers also cap the power available per rack, so in practice 16 to 18 servers of two height units each can usually be accommodated in such a rack.
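The number of servers per rack is simply the smaller of two limits, space and power. The Python sketch below illustrates this. The rack power budget and the per-server power draw are hypothetical values, so plug in the figures from your own data center contract and hardware data sheets.

```python
def servers_per_rack(rack_units, network_units, server_units,
                     rack_power_w, server_power_w):
    """Return how many servers fit, limited by space or by power."""
    by_space = (rack_units - network_units) // server_units
    by_power = rack_power_w // server_power_w
    return min(by_space, by_power)


# Assumed example: 11kW per rack and 650W per 2U server.
count = servers_per_rack(rack_units=42, network_units=2, server_units=2,
                         rack_power_w=11_000, server_power_w=650)
print(f"{count} servers per rack")
```

With these assumptions, space would allow 20 servers, but the power budget limits the rack to 16.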

First, you have the task of recording all the costs per month, ideally for a period of five years. These costs include power, air conditioning, and normal maintenance, as well as the cost of rack installation, estimated hardware repair costs, and any support costs. In the end you have a list of all costs, broken down by month. From this, in turn, the total monthly costs for each two-height-unit (2U) slot in the rack can be derived (Figure 4).

Overcommit is Standard

The next step is to find out how much cash a single vCPU has to generate to cover expenses and make a profit. To do this, simply multiply the actual number of cores available on the server by an overcommit factor (e.g., 4) to obtain the number of vCPUs that can be sold per two height units. In the final step, you need to determine an adequate margin per vCPU, which finally gives you the selling price.

Always plan a safety reserve of 20 percent per rack across the entire platform, which also covers typical waste. On top of that, a rack does not earn money from day 1, so the price per vCPU has to be calculated so that the rack has generated its planned profit by the end of its service life.
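If you prefer a script to a spreadsheet, the costing steps can be sketched in Python as follows. All input values are hypothetical (monthly cost per 2U, thread count, and margin), and the sketch deliberately ignores the ramp-up phase in which a new rack is not yet fully sold.

```python
def vcpu_price(monthly_cost_2u, hw_threads, overcommit,
               reserve_percent, margin_percent):
    """Return (sellable vCPUs, break-even price, selling price) per month."""
    vcpus = hw_threads * overcommit
    # Hold back the safety reserve; only the remainder is planned as sold.
    sellable = vcpus * (100 - reserve_percent) // 100
    break_even = monthly_cost_2u / sellable
    selling_price = break_even * (100 + margin_percent) / 100
    return sellable, break_even, selling_price


# Assumed example: 1,920 per month for one 2U server with 240 hardware
# threads, overcommit factor 4, 20 percent reserve, 40 percent margin.
sellable, break_even, price = vcpu_price(1920, 240, 4, 20, 40)
print(f"{sellable} vCPUs, break-even {break_even:.2f}, price {price:.2f}")
```

The currency is left out on purpose: The result is in whatever unit you use for the monthly cost.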

Speaking of overcommit: This basically standard approach in large virtualization environments gives many admins sleepless nights, but without good reason. It is virtually never the case that a workload actually uses 100 percent of its vCPUs on a permanent basis, and even if one does, not all virtual machines (VMs) on a physical server do so simultaneously. Without an overcommit factor, a large amount of hardware per rack would therefore be underutilized.

By the way, the costing described here makes one thing very clear: The more vCPUs available per two height units, the greater the profit you make with the rack over its service life. In other words, the more vCPUs that can be squeezed into the same space, the better it is from a commercial perspective.

AMD Instead of Intel

The concrete hardware recommendations for compute hardware are therefore clear: Current AMD Epyc CPUs not only deliver more cores for less cash, they perform better than their Intel counterparts by virtually all current benchmarks. 2U systems are the standard form factor, and the only storage in the compute nodes should be two small SSDs on which the operating system is installed.

Today, all common clouds implement storage for virtual machines as network-attached storage, so that further local storage is unnecessary, at least if you follow the recommendations in this article and do not use your SDS in a hyperconverged way.

Ratio of RAM to vCPUs

Many admins send off their servers into the fray with massive amounts of RAM and never even think about figures below 512GB per machine. Whether this is useful and necessary depends on the number of vCPUs per server and the assumed breakdown.

Assume that two CPUs with 64 physical cores each are available per two height units. If you subtract eight cores, 120 physical cores remain, which translates into 240 threads thanks to hyperthreading. With an overcommit factor of 4, a total of 960 vCPUs, or roughly 1,000, would be available in the two height units. With 512GB of RAM, the rough calculation gives you about 2GB of RAM per hardware thread but only about 0.5GB per vCPU sold, which is pretty much at the lower limit. One terabyte of RAM would therefore be the better option in such systems. A ratio of 1:4 is typical.
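The arithmetic is easy to replay in Python. The sketch below uses the figures from the example (two 64-core CPUs, eight cores subtracted, two threads per core, overcommit factor 4) and compares 512GB with 1TB of RAM. The function name is made up for the illustration. Note that dividing the RAM by the overcommitted vCPU count gives roughly 0.5GB per vCPU for 512GB; the figure of about 2GB applies per hardware thread, before overcommit.

```python
def vcpus_per_host(sockets, cores_per_socket, reserved_cores,
                   threads_per_core, overcommit):
    """Return the number of sellable vCPUs on one host."""
    usable_cores = sockets * cores_per_socket - reserved_cores
    return usable_cores * threads_per_core * overcommit


vcpus = vcpus_per_host(sockets=2, cores_per_socket=64, reserved_cores=8,
                       threads_per_core=2, overcommit=4)
threads = vcpus // 4  # hardware threads before overcommit

for ram_gb in (512, 1024):
    print(f"{ram_gb}GB: {ram_gb / vcpus:.2f}GB per vCPU, "
          f"{ram_gb / threads:.2f}GB per hardware thread")
```

The exact result is 960 vCPUs, which the text rounds to 1,000.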

Of course, customers do regularly ask for other breakdowns, but you need to pay attention: If a customer wants to use systems with a few vCPUs and a large amount of RAM for particularly memory-intensive tasks, the remaining vCPUs of the system on which these VMs run cannot be used, because they no longer have RAM available.

The problem, known as waste, can become a real threat to the entire business case. Companies regularly try to avoid such special requests through pricing (i.e., by marketing the RAM-heavy hardware profiles at a considerably higher price than those with the desired ratio of vCPUs and RAM).
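How quickly waste adds up can be shown with a short Python sketch. The host size (960 vCPUs, 1TB of RAM) and the memory-heavy VM profile (4 vCPUs, 64GB of RAM) are assumptions for illustration only. The sketch also assumes that RAM is not overcommitted.

```python
def stranded_vcpus(host_vcpus, host_ram_gb, vm_vcpus, vm_ram_gb):
    """Return (VMs that fit, vCPUs left unsellable) for one VM profile."""
    vms = min(host_vcpus // vm_vcpus, host_ram_gb // vm_ram_gb)
    return vms, host_vcpus - vms * vm_vcpus


# Assumed host: 960 vCPUs and 1,024GB of RAM.
# Assumed memory-heavy profile: 4 vCPUs and 64GB of RAM per VM.
vms, wasted = stranded_vcpus(host_vcpus=960, host_ram_gb=1024,
                             vm_vcpus=4, vm_ram_gb=64)
print(f"{vms} VMs fit, {wasted} vCPUs cannot be sold")
```

In this hypothetical case, 16 VMs exhaust the RAM while using only 64 vCPUs, which leaves 896 vCPUs stranded. A price premium for such profiles has to compensate for that loss.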

Sensible (Re-)Route: Cluster Workstations

Almost every virtualization solution requires a number of services to run smoothly. OpenStack is a good example of this, regardless of whether you buy a distribution from Canonical or Red Hat: Domain Name System (DNS), Network Time Protocol (NTP), and other services are mandatory.

In my experience, it has proven to be sensible to run these services on a separate high-availability (HA) cluster, which needs to be equipped with dual multicore CPUs and have at least 256GB of RAM and several terabytes of disk space to run multiple VMs for different services, if necessary.

At this point, compliance usually comes into play because different rules apply to external, as opposed to internal, connections. VMs must then be operated twice, depending on the target.

Special Hardware for Remote Access

Finally, I would like to point out a factor that many administrators lose sight of when working in cloud environments and also forget about when planning: remote access. Remote access is usually implemented through the out-of-band interfaces of the servers, which usually also provide a separate network interface card for this purpose. In concrete terms, however, this means you need to build a complete network for access to the management interfaces, including its own switches. Alternatively, the switches for the servers can be used, but these are usually equipped with small form factor pluggable (SFP) ports. Classical management interfaces, on the other hand, rely on RJ45, so appropriate transceivers are required (Figure 5).

Conclusions

At the end of the day, finding the right hardware combination for your scalable environment is not rocket science. However, it does require thorough planning in advance, and costing the final sales price is a fundamental key to success. If you want to make it big as a provider with a scalable environment, you should not shy away from the effort.