Kibana Meets Kubernetes

Second Sight

The other day I faced a scenario in which I wanted to send logs to the Elastic Stack (previously called the ELK Stack) for visualization through dashboards. Elastic Stack comprises three software components: Elasticsearch, Logstash, and Kibana. When combined, they offer advanced logging, alerting, and searching [1]. I needed the logging so I could dig deeper into what was happening with an application running on a Kubernetes cluster over a prolonged period of time.

I turned to Elastic Stack because it became uber-popular as ELK Stack for good reason. It's widely adopted, helps speed up the analysis of the massive amounts of data churned out by machines, and is sometimes used in enterprises as an alternative to one of the commercial market leaders, Splunk [2].

To make sure I could use my solution as hoped, I decided to create a lab before trying it out elsewhere in a more critical environment. In this article, I show the steps I took and the resulting proof-of-concept functionality.

Three Is the Magic Number

I'm going to use the excellent K3s to build my Kubernetes cluster. For instructions on how to install K3s, check out the "Teeny, Tiny" section in my article on StatusBay in this issue. I would also definitely recommend getting some background information by visiting the K3s site [3] or reading another of my articles [4], in which I use K3s for applications in the Internet of Things (IoT) space.

If, when you run the command

$ kubectl get pods --all-namespaces

you see CrashLoopBackOff errors, it's likely to do with netfilter's iptables tying itself in knots.
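On a busy cluster, spotting the unhappy pods by eye gets tedious. As a minimal sketch (the function name unhealthy_pods is my own invention, and it assumes the usual six-column layout of the command above, with STATUS in the fourth column), a small awk filter can pick them out:

```shell
# Print "namespace/pod: status" for every pod that is neither Running nor Completed.
# Expects the output of `kubectl get pods --all-namespaces` on stdin.
# Assumption: STATUS is the fourth whitespace-separated column.
unhealthy_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2 ": " $4 }'
}
```

Pipe the kubectl output into it (kubectl get pods --all-namespaces | unhealthy_pods); no output means nothing is crash looping.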

Kubernetes is well known for causing consternation with other software that uses iptables. I talk more about this in the IoT article, but you can check which chains are loaded in iptables with:

$ iptables -nvL
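The full iptables listing can run to many screens. The following sketch, which assumes that the chains created on behalf of Kubernetes carry the usual KUBE- prefix (the function name kube_chains is hypothetical), reduces the output to just those chain names:

```shell
# Print the names of iptables chains that start with "KUBE-".
# Expects the output of `iptables -nvL` on stdin, where each chain
# begins with a header line such as "Chain KUBE-FORWARD (1 references)".
kube_chains() {
  awk '$1 == "Chain" && $2 ~ /^KUBE-/ { print $2 }'
}
```

Run it as iptables -nvL | kube_chains; comparing the result before and after a K3s installation gives a rough idea of what has been added.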

Consider stopping firewalld if it's running; try the command

$ systemctl status firewalld

and replace status with stop to halt the service, if required.

On my Linux Mint 19 (Tara) machine, which sits atop Ubuntu 18.04 (Bionic), I had to uninstall K3s (use the /usr/local/bin/k3s-uninstall.sh script), switch off Uncomplicated Firewall (UFW), and use the desktop GUI to clear my iptables clashes before running the installation command again. It took no time at all, but obviously it's a far from ideal situation.

In my lab environment, however, I didn't mind. Clearly you should ensure this part is working more gracefully and as expected before deploying it in production. Incidentally, once the core Kubernetes pods were all up and running, I could switch UFW back on with impunity.

A happy K3s installation, with all the core K3s pods running without error, looks like Listing 1. From the second command in the listing, you can see that the cluster has a single node: the K3s server.

Listing 1: A Happy K3s

$ kubectl get pods --all-namespaces
NAMESPACE     NAME                                      READY   STATUS      RESTARTS   AGE
kube-system   metrics-server-6d684c7b5-ssck9            1/1     Running     0          12m
kube-system   local-path-provisioner-58fb86bdfd-hgdc4   1/1     Running     0          12m
kube-system   helm-install-traefik-2b2f2                0/1     Completed   0          12m
kube-system   svclb-traefik-p46m5                       2/2     Running     0          11m
kube-system   coredns-d798c9dd-kjhjv                    1/1     Running     0          12m
kube-system   traefik-6787cddb4b-594kr                  1/1     Running     0          11m

$ kubectl get nodes
NAME   STATUS   ROLES    AGE   VERSION
echo   Ready    master   20m   v1.17.3+k3s1

The machine's hostname for the K3s server is echo, which you would need to know to add worker nodes (agents, in K3s parlance), for which the ROLES column would state <none>.
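Once agents have joined, a quick way to list them is to filter on that ROLES column. This is only a sketch: the function name agent_nodes is made up, and it assumes the five-column layout shown in Listing 1, with ROLES in the third column.

```shell
# Print the names of nodes whose ROLES column reads "<none>" (K3s agents).
# Expects the output of `kubectl get nodes` on stdin.
# Assumption: ROLES is the third whitespace-separated column.
agent_nodes() {
  awk 'NR > 1 && $3 == "<none>" { print $1 }'
}
```

With only the server running, kubectl get nodes | agent_nodes prints nothing, which matches the single-node lab described here.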

A Moose Loose

Thanks to K3s, you now have a highly scalable Kubernetes build available. You would need to plug in agent nodes for load balancing and failover to create a proper cluster, as I discussed in the "Kubernetes k3s lightweight distro" IoT article mentioned earlier.

That's not a problem in this example, however. To install the Elastic Stack, I'll use the excellent Kubernetes package manager Helm. I give complete instructions in the StatusBay article "Dig Deep into Kubernetes" in this issue (just ignore the first paragraph on installing StatusBay and the second listing in that article). You'll also find some nice, clear docs on the Helm installation page [5].

From version 3.0 onward, Helm had some relatively significant changes with the removal of Tiller because of security concerns [6], so be sure to choose version 3.0+.

Hoots Mon

The Helm Project itself provides the code to install Elasticsearch, Logstash, and Kibana on its GitHub page [7]. To begin, add the repository to Helm so it knows where to look for the charts (or packages) you want. Then, before installing any charts, update the local repository index so you get the latest versions, and run a search to discover the massive list of available Helm charts:

$ helm repo add stable https://kubernetes-charts.storage.googleapis.com/

$ helm repo update

$ helm search repo stable

If you're new to Helm, have a look at the values.yaml file, which lets you customize a Helm chart so that it installs with the options you need while still keeping revision control simple across upgrades and downgrades. You can check out an example values file with adjustable options in a browser [8].

To see the dependency information on the elastic-stack Helm chart, enter:

$ helm show chart stable/elastic-stack

Listing 2 shows the output and helpfully lists all the packages referenced in the elastic-stack chart. As you can see, Helm takes care of a lot of behind-the-scenes configuration for you automatically, which is why Helm is so powerful. You can change a few variables to suit your needs and, with revision control and other features, keep a close track on how your Kubernetes packages are installed.

Listing 2: Elastic Stack in Helm Chart

$ helm show chart stable/elastic-stack
apiVersion: v1
appVersion: "6"
dependencies:
- condition: elasticsearch.enabled
  name: elasticsearch
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^1.32.0
- condition: kibana.enabled
  name: kibana
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^3.2.0
- condition: filebeat.enabled
  name: filebeat
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^1.7.0
- condition: logstash.enabled
  name: logstash
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^2.3.0
- condition: fluentd.enabled
  name: fluentd
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^2.2.0
- condition: fluent-bit.enabled
  name: fluent-bit
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^2.8.0
- condition: fluentd-elasticsearch.enabled
  name: fluentd-elasticsearch
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^2.0.0
- condition: nginx-ldapauth-proxy.enabled
  name: nginx-ldapauth-proxy
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^0.1.0
- condition: elasticsearch-curator.enabled
  name: elasticsearch-curator
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^2.1.0
- condition: elasticsearch-exporter.enabled
  name: elasticsearch-exporter
  repository: https://kubernetes-charts.storage.googleapis.com/
  version: ^2.1.0
description: A Helm chart for ELK
home: https://www.elastic.co/products
icon: https://www.elastic.co/assets/bltb35193323e8f1770/logo-elastic-stack-lt.svg
maintainers:
- email: pete.brown@powerhrg.com
  name: rendhalver
- email: jrodgers@powerhrg.com
  name: jar361
- email: christian.roggia@gmail.com
  name: christian-roggia
name: elastic-stack
version: 1.9.0
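Each condition entry in Listing 2 corresponds to a key you can toggle in a values file. The sketch below writes a small override file that switches individual components on or off; the function name write_values, the file name my-values.yaml, and the particular true/false choices are illustrative assumptions on my part, not the chart's documented defaults.

```shell
# Write an illustrative values override file to the path given as $1.
# The keys mirror the "condition" entries shown by `helm show chart`;
# the on/off choices here are examples only.
write_values() {
  cat > "$1" <<'EOF'
# Components to deploy with the elastic-stack chart (example choices)
elasticsearch:
  enabled: true
kibana:
  enabled: true
logstash:
  enabled: true
filebeat:
  enabled: false
fluentd:
  enabled: false
EOF
}
```

After calling write_values my-values.yaml, the file can be handed to Helm at installation time with the -f option, for example helm install stable/elastic-stack --generate-name -f my-values.yaml.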

Check It Out

If you tried to jump ahead and run a Helm command, you might have been disappointed. First, you need to make sure that Helm and K3s are playing together nicely by telling Helm where to access the Kubernetes cluster:

$ export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

$ kubectl get pods --all-namespaces

$ helm ls --all-namespaces

If you didn't get any errors, then all is well and you can continue; otherwise, search online for Helm errors relating to issues such as cluster unreachable and the like.
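One cause worth ruling out early is a kubeconfig file that your user cannot read; on many installations, K3s writes it so that only root has access. The following sketch (the function name kubeconfig_readable is hypothetical) checks the path in KUBECONFIG, falling back to the K3s location used above:

```shell
# Check that a kubeconfig file exists and is readable by the current user.
# Uses $1 if given, else $KUBECONFIG, else the K3s path from the text above.
kubeconfig_readable() {
  cfg="${1:-${KUBECONFIG:-/etc/rancher/k3s/k3s.yaml}}"
  if [ -r "$cfg" ]; then
    echo "ok: $cfg is readable"
  else
    echo "cannot read $cfg (missing file or insufficient permissions)" >&2
    return 1
  fi
}
```

If the check fails, running the commands as root or copying the file to a location your user owns are two possible ways forward in a lab; which is appropriate depends on your environment.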

Next, having tweaked the values file to your requirements, run the chart installation command:

$ helm install stable/elastic-stack --generate-name

Listing 3 reports some welcome news – an installed set of Helm charts that report a DNS name to access. Now you can access Elastic Stack from inside or outside the cluster.

Listing 3: Kibana Access Info

NAME: elastic-stack-1583501473

LAST DEPLOYED: Fri Mar 6 13:31:15 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

The elasticsearch cluster and associated extras have been installed.

Kibana can be accessed:

* Within your cluster, at the following DNS name at port 9200:

elastic-stack-1583501473.default.svc.cluster.local

* From outside the cluster, run these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace default -l "app=elastic-stack,release=elastic-stack-1583501473" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:5601 to use Kibana"

kubectl port-forward --namespace default $POD_NAME 5601:5601

Instead of following the details in the listing, for now, I'll adapt them and access Kibana (in the default Kubernetes namespace) with the commands:

$ export POD_NAME=$(kubectl get pods -n default | grep kibana | awk '{print $1}')

$ echo "Visit http://127.0.0.1:5601 to use Kibana"

$ kubectl port-forward --namespace default $POD_NAME 5601:5601
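The grep in the first command returns every pod with kibana in its name, whatever its state. If more than one matches, a slightly stricter filter might help. This sketch (the function name first_running_pod is my own) assumes the five-column layout of kubectl get pods within a single namespace, with STATUS in the third column, and prints only the first matching pod that is Running:

```shell
# Print the name of the first Running pod whose name matches the pattern in $1.
# Expects the output of `kubectl get pods -n <namespace>` on stdin.
# Assumption: STATUS is the third whitespace-separated column.
first_running_pod() {
  awk -v pat="$1" 'NR > 1 && $1 ~ pat && $3 == "Running" { print $1; exit }'
}
```

It slots into the same place as the grep and awk pair: export POD_NAME=$(kubectl get pods -n default | first_running_pod kibana).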

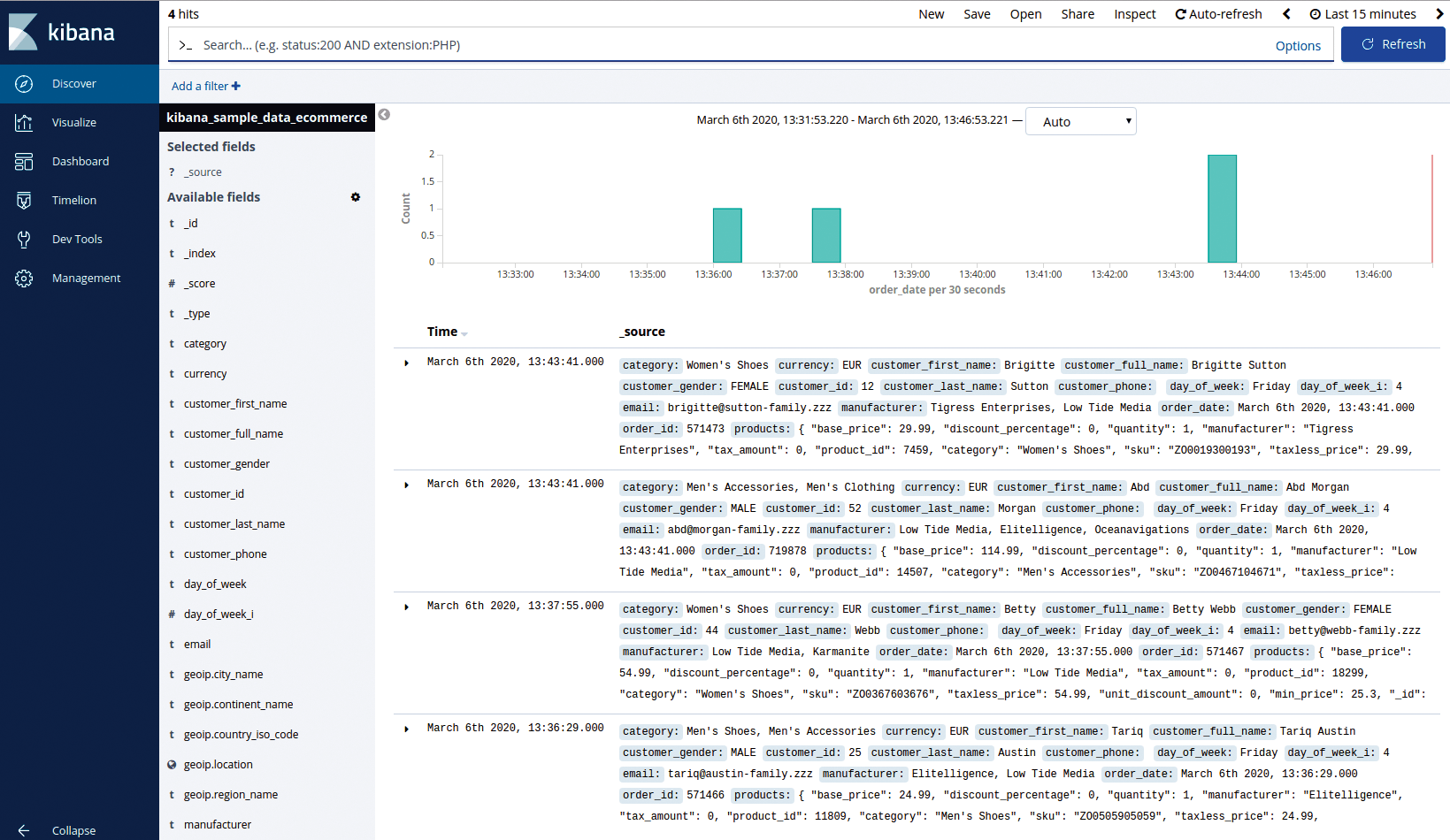

Click the URL displayed by the echo statement and, et voilà, a Kibana installation awaits your interaction. To test that things look sane, click through the sample data links in the Discover section (Figure 1).

In normal circumstances, you need to ingest data into your Elastic Stack. Detailed information on exactly how to do that, dependent on your needs, is on the Elastic site [9]. This comprehensive document is easy to follow and well worth a look. Another resource [10] describes a slightly different approach to the one I've taken, with information on how to get Kubernetes to push logs into Elasticsearch to monitor Kubernetes activities with Fluentd [11]. Note the warning about leaving Kibana opened up to public access and the security pain that may cause you. If you're interested in monitoring Kubernetes, you can find information on that page to get you started.

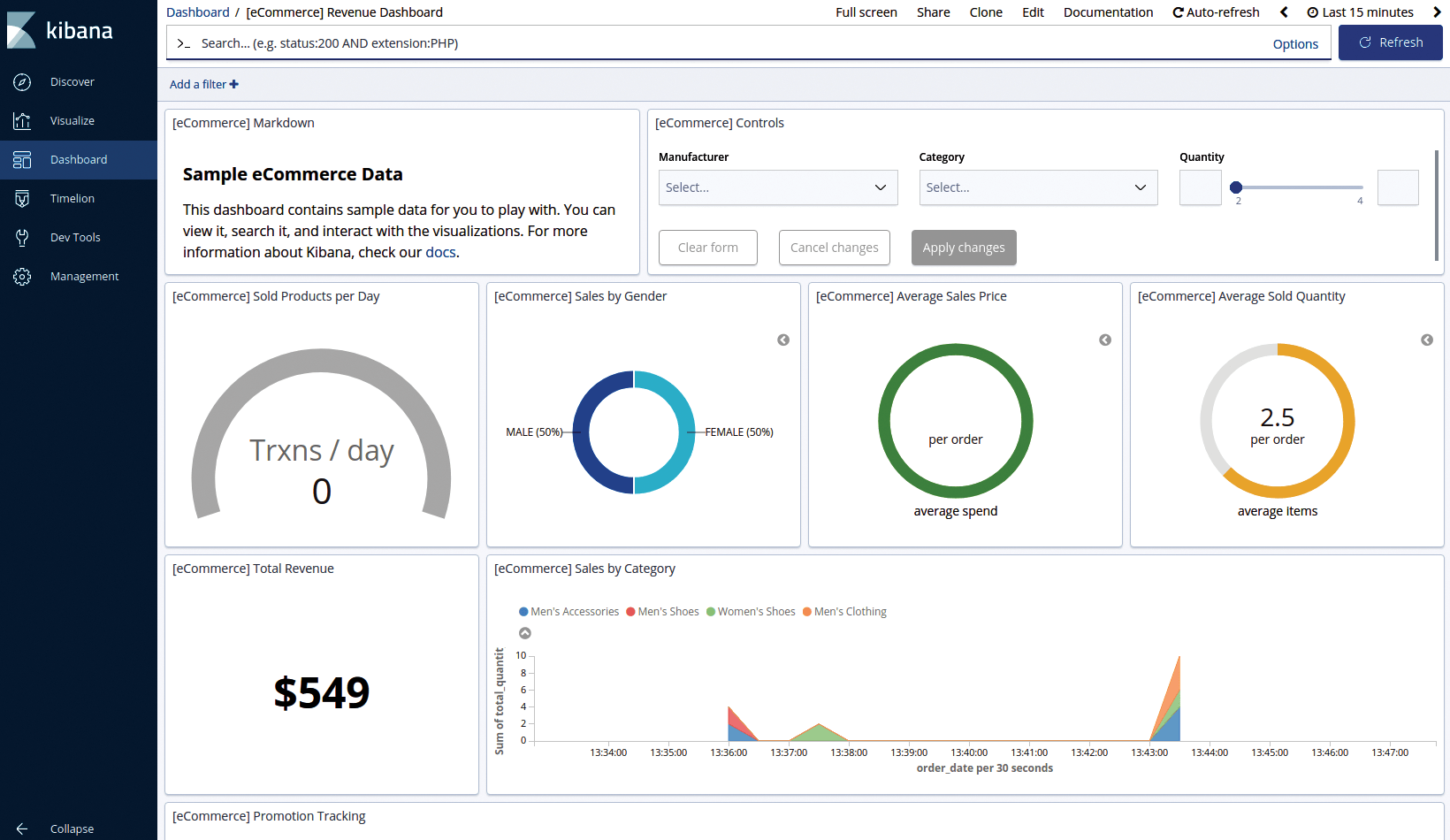

As promised at the beginning of this article, the aim of my lab installation was to create some dashboards, as shown in a more visual representation of the sample data in Figure 2.

The End Is Nigh

There's something very satisfying about being able to set up a lab quickly by getting a piece of tech working before rolling it out into some form of consumer-facing service. As you can see, the full stack, including K3s, is slick and fast to set up. The solution is pretty much perfect for experimentation. The installation is so quick, you are able to tear it down and rebuild it (or, e.g., write an Ansible playbook to create it) without the interminable wait.

I will leave you to ingest some useful telemetry into your shiny new Elastic Stack.