A versatile proxy for microservice architectures

Traffic Control

The microservices architecture that has become so popular lately offers a number of benefits, including agile development. If the individual parts of an application no longer have to be squeezed into the release cycle of a large monolithic product, development becomes far more dynamic.

However, if you suddenly have to deal with many small components (at least from the developers' point of view), you have to define interfaces through which these components talk to each other. All of a sudden, topics such as load balancing, fault tolerance, and security play a significant role. Service mesh solutions such as Istio, which I reviewed in an earlier article [1], are currently enjoying great popularity because they promise admins an automated approach to networking.

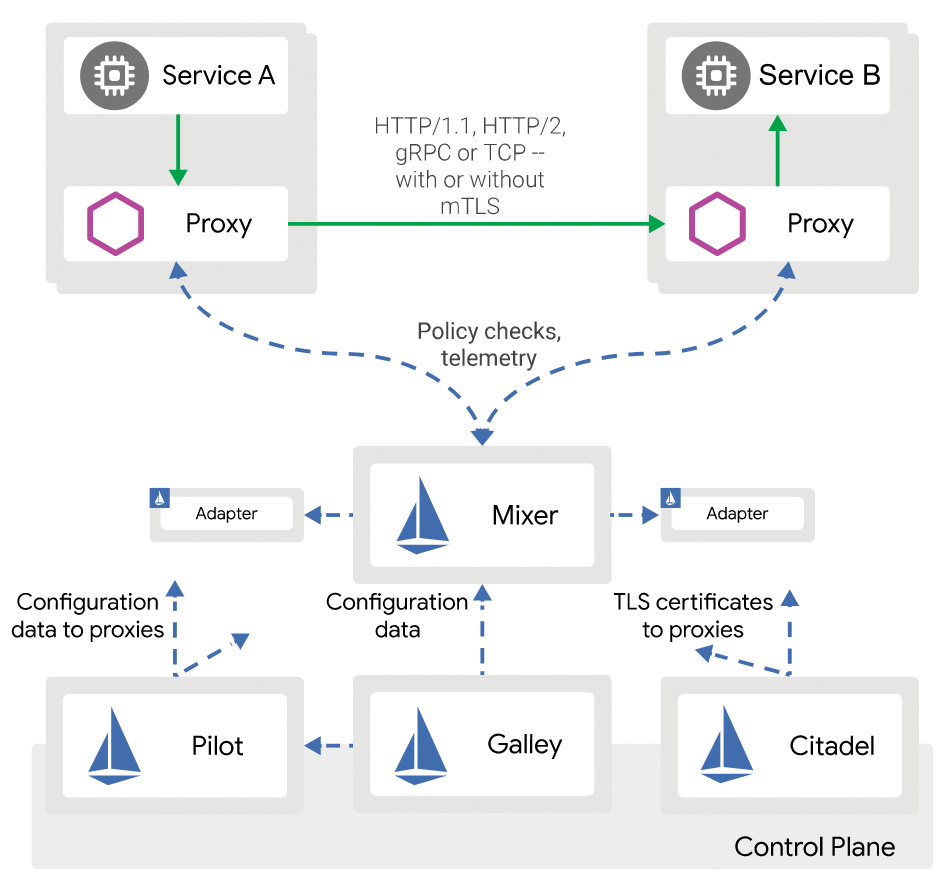

As a closer look at the Istio architecture (Figure 1) reveals, the open source Envoy component is the core of the solution and provides most of its functionality. Fundamentally, Istio is a dynamic configuration framework that feeds Envoy the settings for a specific environment.

Because Istio deploys Envoy alongside each service container, the Istio developers refer to it as a sidecar; however, if you see Envoy only as a component of Istio, you are doing the tool an injustice. In this article, I look at Envoy itself, its features, and how it can be rolled out and operated in a meaningful way, even without Istio.

Universal Proxy

The very first sentence on the Envoy website [2] reveals that the developers are aiming for a comprehensive solution: They describe the tool as an edge and service proxy, suitable both for communication between the services of an application and for handling user-facing traffic at the edge of a setup.

In the cloud, developers and admins have to deal with several types of connections. On the one hand, in a microservices architecture, customers connect to individual application services from the outside. On the other hand, the individual components talk to each other. Envoy claims to serve both use cases adequately as a proxy server: It seeks to be the switching point in both directions.

Such communication challenges between services are not new. Classical setups usually combine several services, so interprocess communication is an issue there, too. A short excursion into the desktop world, where various services written by different developers have to talk to each other, makes this clear.

In the past, the problem was often solved with libraries that offered standardized functions and thus made it easier to establish connections. However, this concept is relatively rigid, and if you look at the desktop, you will quickly discover that communication buses, to which all the tools involved connect, have been the dominant approach for years. Envoy ultimately sees itself in exactly this tradition: It seeks to act as a bus within a setup. To make this work, Envoy comes with a number of practical features.

C++ Performance

If your focus is on contemporary programs and solutions, you will tend to think of Go or Rust when it comes to new applications. Although Envoy is still comparatively new, it was not written in either of these modern languages. Instead, the developers deliberately chose C++11, which they believe has far lower performance overhead than its modern counterparts.

Their reasoning is that many current scripting and programming languages already introduce latencies that are significantly higher than is desirable for modern high-performance setups, and a proxy should not add to the problem. C++11, on the other hand, offers both developer productivity and high performance. Their argument against C was that, although fast, it does not provide the tools developers want for building a proxy.

Layers

Sooner or later, every computer scientist is confronted with the layers of the OSI model. The team describes Envoy as primarily a proxy for OSI Layers 3 and 4, which means Envoy can be configured to work purely on the basis of IP addresses and ports or, if the application requires it, as an instance that understands a specific protocol. At this level, a simple HTTP load balancer can be implemented by forwarding port 80 to the back ends without looking at the traffic.

In practice, however, this approach falls short because features such as session persistence are now commonplace in the load balancer business. Specifically, today's setups often require a client to reach the same back end reliably for several successive requests. The session handling integrated into many web applications is an example of a function that requires this persistence.

If Envoy worked solely at Layers 3 and 4, it could offer this function only crudely, without understanding HTTP (e.g., by always sending the same client IP address to the same back end). It would not be able to analyze the traffic flowing through it (e.g., to identify the session ID the application uses on the web server side and route by it). That is only possible if Envoy actually understands the traffic, which is exactly why Envoy can also process data at Layer 7 in addition to Layers 3 and 4.
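
To make the difference concrete, here is a minimal Python sketch of hash-based session affinity. Envoy itself offers ring hash and Maglev load balancers that can hash on a header, a cookie, or the source IP address, but the code below is an independent, simplified model rather than Envoy's implementation; the back-end names and the SESSIONID cookie are hypothetical. A Layer 4 proxy can only hash the client address, so all users behind one NAT gateway land on the same back end, whereas a Layer 7 proxy can hash the application's own session ID.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Stable 64-bit hash from SHA-256 (Python's built-in hash() is salted per process).
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Minimal consistent-hash ring: each back end owns several virtual points."""

    def __init__(self, backends, replicas=100):
        self._ring = sorted(
            (_hash(f"{b}#{i}"), b) for b in backends for i in range(replicas)
        )
        self._keys = [h for h, _ in self._ring]

    def pick(self, key: str) -> str:
        # Walk "clockwise" to the first virtual point at or after the key's hash.
        idx = bisect.bisect_left(self._keys, _hash(key)) % len(self._keys)
        return self._ring[idx][1]


def layer4_key(client_ip: str) -> str:
    # Without parsing HTTP, the only stable attribute is the client's address.
    return client_ip


def layer7_key(headers: dict) -> str:
    # With HTTP parsing, the application's own session cookie (hypothetical name) is usable.
    for part in headers.get("cookie", "").split(";"):
        name, _, value = part.strip().partition("=")
        if name == "SESSIONID":
            return value
    return ""


ring = HashRing(["backend-a", "backend-b", "backend-c"])
print(ring.pick(layer4_key("203.0.113.7")))
print(ring.pick(layer7_key({"cookie": "theme=dark; SESSIONID=abc123"})))
```

A consistent hash ring has a useful side effect: If a back end disappears, only the sessions it owned move elsewhere, and all other clients keep their back end.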

Plugins for Variety

The Envoy developers clearly cannot build support into their product for every web application on the planet, especially when the specifications for many apps are not even open to scrutiny. To offer users as wide a feature set as possible, the developers decided to implement a plugin interface.

Because Envoy is based on an open architecture and free standards, every application developer can write an Envoy plugin (in Envoy terminology, a filter) for their specific application and deliver it with their pod. Once such an extension is loaded, Envoy automatically routes traffic through it, and several filters can be connected in series to form a filter chain.

Envoy also comes with a few basic filters out of the box: One handles HTTP proxy functionality, another acts as an endpoint for TLS connections, and a simple TCP proxy is included, as well.
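
The following Python sketch models the idea of a filter chain. Envoy's real filters are native C++ code written against Envoy's own filter API (Lua and WebAssembly extensions also exist), so the types and functions here are hypothetical stand-ins: Each filter can inspect and modify a request or answer it directly, which stops the chain, and a final stage forwards whatever gets through to the back end. In Envoy's HTTP filter chain, the router filter plays this last role.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str = ""


# A filter returns None to continue with the next filter, or a Response to stop the chain.
Filter = Callable[[Request], Optional[Response]]


def add_request_id(req: Request) -> Optional[Response]:
    # Hypothetical filter: tag the request (placeholder value instead of a real UUID).
    req.headers.setdefault("x-request-id", "generated-id")
    return None


def deny_admin_paths(req: Request) -> Optional[Response]:
    # Hypothetical filter: answer directly without contacting any back end.
    if req.path.startswith("/admin"):
        return Response(403, "forbidden")
    return None


def run_chain(filters: List[Filter], req: Request,
              upstream: Callable[[Request], Response]) -> Response:
    for f in filters:
        result = f(req)
        if result is not None:
            return result  # short-circuit: later filters and the back end are skipped
    return upstream(req)  # final stage: forward to the back end (the router's job)


def echo_upstream(req: Request) -> Response:
    return Response(200, f"{req.path} id={req.headers['x-request-id']}")


chain = [add_request_id, deny_admin_paths]
print(run_chain(chain, Request("/api/items"), echo_upstream).status)
print(run_chain(chain, Request("/admin/users"), echo_upstream).status)
```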

Special Case: HTTP

In the context of load balancing, HTTP has special significance. The HTTP use case existed long before microservices architectures: Web server setups have relied on the simple principle of load balancing for decades, and even in times of REST APIs and modern web architectures, HTTP still accounts for an enormous portion of all traffic. It is hardly surprising that the Envoy developers have paid special attention to HTTP, particularly in two places.

Envoy's HTTP filter (the HTTP connection manager) provides a separate interface through which additional Layer 7 filters can be inserted. This interface is also freely accessible and documented, so developers can write their own filters. These filters have access to the entire HTTP stream and can monitor or modify it with the tools the HTTP filter provides.

As examples, the developers cite HTTP request routing, rate limiting of incoming HTTP connections by various parameters (number of incoming connections, volume of data transferred, etc.), and sniffing of the transferred content. For instance, while analyzing incoming HTTP requests, Envoy could detect that a defined limit for an individual back end has been exceeded and dynamically route further requests elsewhere. As an example of sniffing, the Envoy developers cite Amazon's DynamoDB, whose traffic Envoy can monitor.
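
The rerouting scenario can be sketched with a token bucket per back end, the same basic algorithm Envoy's local rate limit filter uses. The code is a hypothetical plain-Python model, not Envoy's API: Each back end gets a bucket that refills at a fixed rate, and a request goes to the first preferred back end that still has a token. The back-end names and limits are illustrative assumptions, and a fake clock makes the demo deterministic.

```python
import time
from typing import Callable, Dict, List


class TokenBucket:
    """Allows `rate` requests per second with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def try_take(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def choose_backend(preferred: List[str], buckets: Dict[str, TokenBucket]) -> str:
    # Try back ends in order of preference; skip any whose limit is exhausted.
    for name in preferred:
        if buckets[name].try_take():
            return name
    return ""  # every back end is over its limit: the proxy would reject (e.g., HTTP 429)


now = [0.0]  # fake clock for a deterministic demo
buckets = {
    "primary": TokenBucket(rate=1.0, capacity=2, clock=lambda: now[0]),
    "fallback": TokenBucket(rate=1.0, capacity=1, clock=lambda: now[0]),
}
picks = [choose_backend(["primary", "fallback"], buckets) for _ in range(4)]
print(picks)  # ['primary', 'primary', 'fallback', '']
now[0] = 1.0  # one second later, the primary has refilled one token
after_refill = choose_backend(["primary", "fallback"], buckets)
```

Injecting the clock as a parameter keeps the example deterministic; the default, time.monotonic, avoids surprises from wall-clock adjustments.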

Between Worlds

Envoy supports HTTP/2, which was standardized in 2015, although its predecessor HTTP/1.1 is still common in the wild. Anyone building a new application today will tend to choose HTTP/2 over HTTP/1.1. However, Envoy can also act as a mediator between the two worlds because it handles both HTTP/1.1 and HTTP/2. When Envoy translates between the two protocol versions, it does so completely transparently to the client, so older clients that do not yet support HTTP/2 can talk to HTTP/2 services through Envoy. For communication between several Envoy instances in a setup, however, the developers recommend using HTTP/2 exclusively.
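
Part of what such a translation involves can be illustrated with request headers. The Python sketch below, a simplified illustration and not Envoy's codec, maps an HTTP/1.1 request head onto an HTTP/2 header list along the lines of RFC 7540: The request line and Host header become the :method, :scheme, :authority, and :path pseudo-headers, field names are lowercased, and connection-specific headers, which HTTP/2 forbids, are dropped. Request bodies, trailers, and absolute-form request targets are deliberately ignored.

```python
from typing import List, Tuple

# Connection-specific headers that RFC 7540 (section 8.1.2.2) forbids in HTTP/2.
HOP_BY_HOP = {"connection", "keep-alive", "proxy-connection", "transfer-encoding", "upgrade"}


def h1_to_h2_headers(request_head: str, scheme: str = "http") -> List[Tuple[str, str]]:
    request_line, *header_lines = request_head.split("\r\n")
    method, target, _version = request_line.split(" ", 2)
    parsed = []
    for line in header_lines:
        if not line:
            break  # an empty line ends the HTTP/1.1 header section
        name, _, value = line.partition(":")
        parsed.append((name.strip().lower(), value.strip()))  # HTTP/2 names are lowercase

    # Headers listed in the Connection header are hop-by-hop as well.
    drop = set(HOP_BY_HOP)
    for name, value in parsed:
        if name == "connection":
            drop.update(t.strip().lower() for t in value.split(",") if t.strip())

    authority = ""
    fields = []
    for name, value in parsed:
        if name == "host":
            authority = value  # Host becomes the :authority pseudo-header
        elif name == "te" and value.lower() != "trailers":
            continue  # in HTTP/2, TE may only carry "trailers"
        elif name not in drop:
            fields.append((name, value))

    # Pseudo-headers must come before all regular header fields.
    pseudo = [(":method", method), (":scheme", scheme),
              (":authority", authority), (":path", target)]
    return pseudo + fields


head = ("GET /index.html HTTP/1.1\r\nHost: example.com\r\n"
        "Connection: keep-alive, X-Trace\r\nX-Trace: 1\r\nAccept: text/html\r\n\r\n")
print(h1_to_h2_headers(head))
```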

Remote procedure calls (RPCs) usually refer to frameworks for executing commands on remote systems in a controlled manner. The remote processes generate results and send them back to the requesting party. Traditionally, RPC has been used mostly in classic client-server systems.

In the context of microservice architectures and containers, RPC is experiencing a renaissance thanks to Google's gRPC framework. Google developed gRPC with the explicit aim of standardizing and automating the way the parts of an application call each other. If developers want to define an interface between two applications, they do not have to reinvent the wheel; they can use the modular gRPC instead.

It is little wonder, then, that Envoy supports gRPC and can analyze gRPC traffic. In environments that use gRPC, Envoy becomes a dynamic gRPC transport layer: It intercepts and interprets the messages and routes the traffic so that it reaches the desired destination efficiently and the desired RPC action is triggered.
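
Envoy can route gRPC with ordinary HTTP/2 machinery because gRPC encodes the service and method in the :path header (/package.Service/Method) and frames each message with a 1-byte compression flag and a 4-byte big-endian length. The Python sketch below shows both steps; the route table and cluster names are hypothetical, and a real proxy would of course operate on HTTP/2 streams rather than on plain strings and byte buffers.

```python
import struct
from typing import Dict, Tuple


def parse_grpc_path(path: str) -> Tuple[str, str]:
    # gRPC over HTTP/2 uses :path = "/<package>.<Service>/<Method>".
    _, service, method = path.split("/", 2)
    return service, method


def decode_grpc_frame(data: bytes) -> Tuple[bool, bytes]:
    # Length-prefixed message: 1-byte compressed flag, 4-byte big-endian length, payload.
    compressed, length = struct.unpack(">BI", data[:5])
    payload = data[5:5 + length]
    if len(payload) != length:
        raise ValueError("incomplete gRPC message")
    return bool(compressed), payload


def route(path: str, routes: Dict[str, str]) -> str:
    # Pick a cluster by fully qualified service name (hypothetical route table).
    service, _method = parse_grpc_path(path)
    return routes.get(service, "default-cluster")


routes = {"helloworld.Greeter": "greeter-cluster"}
frame = struct.pack(">BI", 0, 5) + b"hello"
print(route("/helloworld.Greeter/SayHello", routes))
print(decode_grpc_frame(frame))
```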

gRPC and HTTP thus complement each other in Envoy, which is useful from a developer's point of view: gRPC eliminates the need to design one's own API protocol, and Envoy automatically manages the connection to the API service in the best possible way.

Configuring Envoy

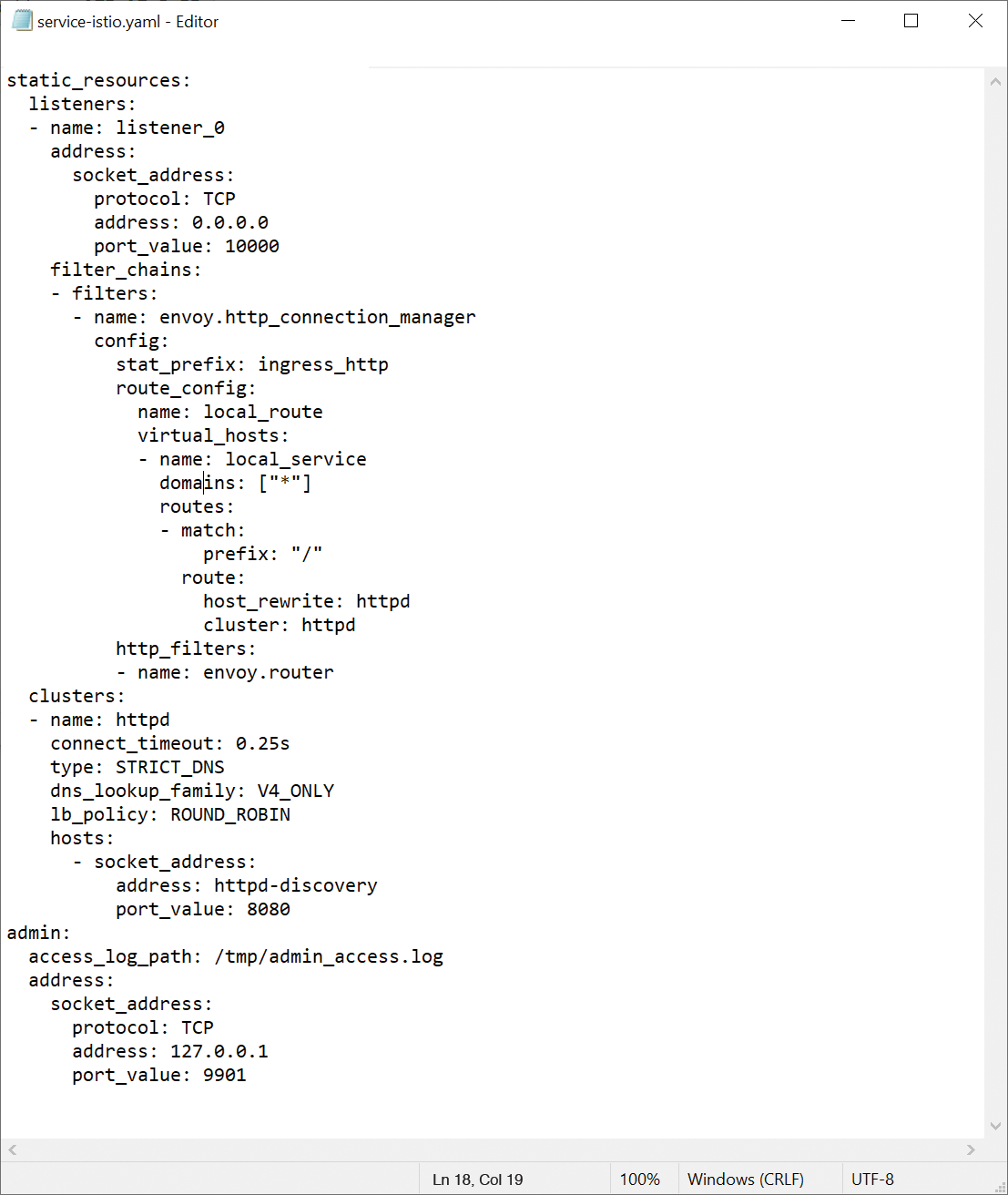

Rolling out Envoy as the sidecar for a container in Kubernetes is not difficult; in fact, it is quite easy (Figure 2). The more difficult question is where Envoy gets its configuration, and without one, Envoy does not even know which back ends are available for an application component. Because microapps by definition work much more dynamically than conventional apps, the classic approach of a static configuration often falls short.

Envoy takes this aspect of microapps into account and offers several options, almost all of which are based on the principle of obtaining the configuration as autonomously as possible. Nevertheless, Envoy also allows a static configuration, and the developers explicitly point out that they do not want to sideline this feature.

In a static Envoy configuration, host discovery is only possible over DNS; otherwise, practically all features are available. According to the developers, even large setups can be built this way, and the hot restart feature certainly has a part to play. When admins change the configuration, hot restart takes the role that SIGHUP plays for many other daemons: Instead of Envoy rereading its configuration in place, a new Envoy process starts with the new configuration and takes over from the old one without dropping connections.
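
For illustration, the following Python sketch generates a minimal static bootstrap configuration in Envoy's v3 format: one HTTP listener and one cluster whose hosts are found by resolving a DNS name (STRICT_DNS), which matches the DNS-only discovery described above. The host name, ports, and resource names are hypothetical, and field names should be checked against the documentation for the Envoy version in use. Envoy reads JSON as well as YAML, so the output can be saved to a file and passed with -c.

```python
import json


def static_bootstrap(backend_host: str, backend_port: int, listen_port: int = 10000) -> dict:
    """Minimal static Envoy v3 bootstrap: one HTTP listener, one DNS-resolved cluster."""
    http_connection_manager = {
        "name": "envoy.filters.network.http_connection_manager",
        "typed_config": {
            "@type": "type.googleapis.com/envoy.extensions.filters.network."
                     "http_connection_manager.v3.HttpConnectionManager",
            "stat_prefix": "ingress_http",
            "route_config": {
                "name": "local_route",
                "virtual_hosts": [{
                    "name": "backend",
                    "domains": ["*"],
                    # Send every path to the single static cluster below.
                    "routes": [{"match": {"prefix": "/"}, "route": {"cluster": "service_backend"}}],
                }],
            },
            # The router filter must be the last HTTP filter; it forwards requests upstream.
            "http_filters": [{
                "name": "envoy.filters.http.router",
                "typed_config": {
                    "@type": "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"
                },
            }],
        },
    }
    cluster = {
        "name": "service_backend",
        "connect_timeout": "0.25s",
        # STRICT_DNS: hosts are discovered by resolving a DNS name, the only
        # discovery mechanism available in a fully static configuration.
        "type": "STRICT_DNS",
        "lb_policy": "ROUND_ROBIN",
        "load_assignment": {
            "cluster_name": "service_backend",
            "endpoints": [{"lb_endpoints": [{"endpoint": {"address": {
                "socket_address": {"address": backend_host, "port_value": backend_port}
            }}}]}],
        },
    }
    listener = {
        "name": "listener_0",
        "address": {"socket_address": {"address": "0.0.0.0", "port_value": listen_port}},
        "filter_chains": [{"filters": [http_connection_manager]}],
    }
    return {"static_resources": {"listeners": [listener], "clusters": [cluster]}}


if __name__ == "__main__":
    print(json.dumps(static_bootstrap("backend.example.internal", 8080), indent=2))
```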

For admins who like things a tad more dynamic, Envoy provides various discovery service APIs that can be used to modify its configuration during operation. Endpoints (EDS) can be configured dynamically through a dedicated API, just as clusters (CDS) can. Further APIs are available for routes (RDS), listeners (LDS), and secrets (SDS), typically TLS certificates and keys. On the Envoy website, the developers explain the various discovery APIs in detail [3]. In practice, these APIs form the basis for solutions such as Istio or Contour, which use them to push new configuration directives to Envoy during operation.
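
The interplay between a control plane and Envoy can be sketched as a versioned snapshot exchange. In the real xDS protocol, Envoy receives DiscoveryResponse messages (with version_info, nonce, type_url, and resources fields) over a gRPC stream or by REST polling, and it acknowledges an update by echoing the version and nonce in its next request. The Python classes below are a hypothetical toy model of that idea for endpoint updates; the resource layout is heavily simplified compared with a real ClusterLoadAssignment.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional

EDS_TYPE = "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"


@dataclass
class DiscoveryResponse:
    version_info: str
    nonce: str
    type_url: str
    resources: List[dict]


class ToyControlPlane:
    """Hypothetical in-memory control plane serving state-of-the-world snapshots."""

    def __init__(self):
        self._version = 0
        self._endpoints: Dict[str, List[str]] = {}
        self._nonces = itertools.count(1)

    def set_endpoints(self, cluster: str, addresses: List[str]) -> None:
        # E.g., called when Kubernetes scales a deployment up or down.
        self._endpoints[cluster] = list(addresses)
        self._version += 1

    def respond(self, acked_version: str) -> Optional[DiscoveryResponse]:
        # Only send a snapshot if the proxy has not yet acknowledged the current version.
        if acked_version == str(self._version):
            return None
        resources = [{"cluster_name": c, "endpoints": a}
                     for c, a in sorted(self._endpoints.items())]
        return DiscoveryResponse(str(self._version), str(next(self._nonces)),
                                 EDS_TYPE, resources)


class ToyProxy:
    """Stand-in for Envoy: applies each snapshot and remembers the acknowledged version."""

    def __init__(self):
        self.acked_version = ""
        self.endpoints: Dict[str, List[str]] = {}

    def poll(self, cp: ToyControlPlane) -> bool:
        resp = cp.respond(self.acked_version)
        if resp is None:
            return False  # nothing new
        self.endpoints = {r["cluster_name"]: r["endpoints"] for r in resp.resources}
        self.acked_version = resp.version_info  # real xDS also echoes the nonce
        return True


cp, proxy = ToyControlPlane(), ToyProxy()
cp.set_endpoints("orders", ["10.0.0.5:8080"])
proxy.poll(cp)
cp.set_endpoints("orders", ["10.0.0.5:8080", "10.0.0.6:8080"])  # scale-out
proxy.poll(cp)
print(proxy.endpoints)
```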

In the Know

Although advocates of modern microservice applications often spread the myth that such applications no longer need monitoring, admins and developers usually know better, especially in setups in which service instances constantly appear and disappear as a result of dynamic scaling. Envoy comes with several built-in functions that enable efficient and comprehensive monitoring.

The program can keep extensive records of its activities. For example, whenever it forwards an incoming request to a back end or establishes a connection between two services in an application, it records the action and notes how much traffic is sent over the communication path. Additionally, the filters supplied with Envoy record many metrics by default, and filters you write yourself can record their own metrics, as well.

However, recording metrics is only one side of the coin. The other side is getting the data out of Envoy, storing it sensibly, and visualizing it. Envoy offers several interfaces for passing the data on to other services.

The historical standard is exporting to StatsD, from which the metric data can be processed further. If you want to avoid this detour, Prometheus can also scrape the data directly from Envoy's admin interface, which provides it in Prometheus format at /stats/prometheus (Figure 3). Grafana now has a number of prebuilt dashboards that visualize Envoy metrics stored in Prometheus and provide a useful overview.
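
To show what the StatsD route looks like on the wire, here is a small Python sketch of the plain-text StatsD line format (name:value|type, with c for counters, g for gauges, and ms for timers). Envoy has a built-in StatsD sink that emits such lines itself; the metric names below follow Envoy's cluster.<name>.* naming scheme, but the cluster name service_backend is hypothetical, and Envoy's sink may add a prefix of its own.

```python
import socket
from typing import Iterable, Union

# StatsD metric types used here: counter, gauge, timer.
VALID_TYPES = {"c", "g", "ms"}


def statsd_line(name: str, value: Union[int, float], metric_type: str) -> str:
    # Plain-text StatsD format: <metric.name>:<value>|<type>
    if metric_type not in VALID_TYPES:
        raise ValueError(f"unsupported StatsD type: {metric_type}")
    return f"{name}:{value}|{metric_type}"


def send_statsd(lines: Iterable[str], host: str = "127.0.0.1", port: int = 8125) -> None:
    # StatsD conventionally listens on UDP port 8125; several metrics can share
    # one datagram when separated by newlines.
    payload = "\n".join(lines).encode()
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


lines = [
    statsd_line("cluster.service_backend.upstream_rq_total", 1, "c"),
    statsd_line("cluster.service_backend.upstream_cx_active", 3, "g"),
    statsd_line("cluster.service_backend.upstream_rq_time", 12, "ms"),
]
print("\n".join(lines))
```

Calling send_statsd(lines) would ship all three metrics in a single UDP datagram to a StatsD daemon on the local machine; because UDP is fire-and-forget, the sender usually gets no error even if nothing is listening.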

Meaningful Envoy

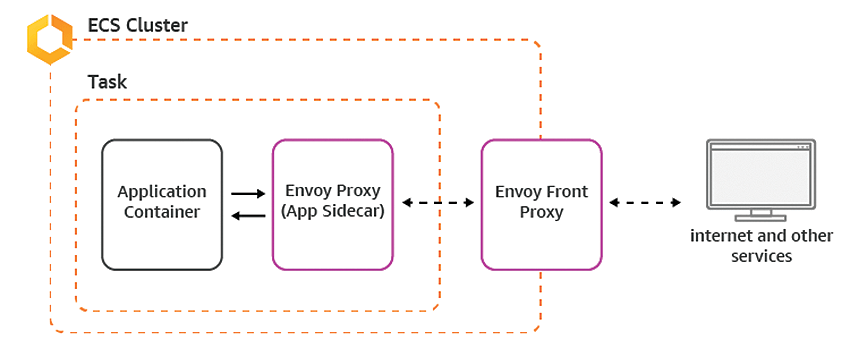

Once you have discovered Envoy's various advantages, you might ask yourself how you have lived your life up to now without it. Envoy offers huge advantages: There is hardly an easier way to span a mesh network between the services of a microservices architecture. This inevitably raises the question of how Envoy can be rolled out and provided with a suitable configuration. Amazon, for example, offers this directly as a resource in its cloud (Figure 4).

The most common approach is Istio, which promises no less than an all-round, no-worries package that integrates Envoy as a central component both for incoming traffic from clients ("ingress") and for communication between the components of an application ("east-west traffic"). Istio supplements Envoy with various automations and, with a few rules, tries to relieve the developer of most of the work.

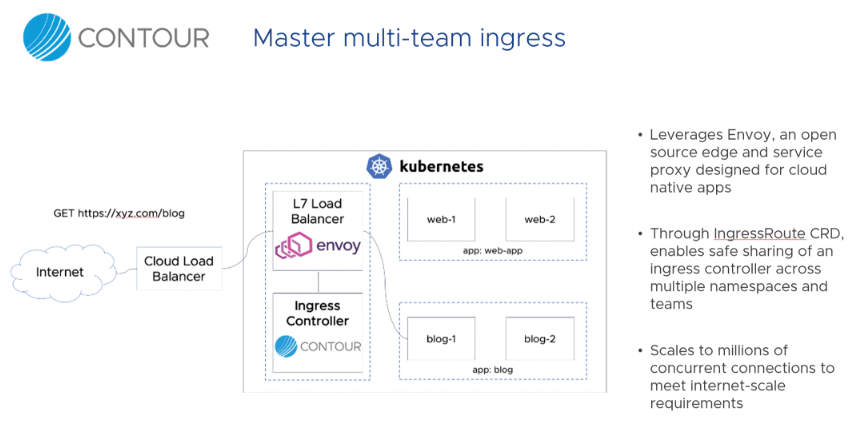

However, Istio is by no means the only solution of its kind. VMware's Contour, for example, is an ingress controller for Kubernetes that uses Envoy specifically for incoming traffic. East-west traffic between the parts of an application is irrelevant to Contour, but the VMware developers set great store by the features for incoming client traffic.

Envoy Control Plane

From an architectural point of view, Contour is not so different from Istio. Contour also acts as a control plane for Envoy and is controlled in Kubernetes by means of custom resource definitions (CRDs). Once users have set up their pods accordingly, Envoy runs as a proxy instance and is dynamically controlled by Contour.

Contour has a comprehensive feature set. For example, if a component of an application is automatically scaled horizontally because of high load, Contour adapts the Envoy configuration autonomously. If so desired, Contour can also configure Envoy's TLS capabilities automatically once the appropriate certificates are specified in the resource definitions. However, from Envoy's point of view, all these functions are just the daily grind.

Envoy also masters the more advanced tasks: As described, it can report on traffic directly through a dedicated monitoring port, various upstream servers can be configured dynamically in Contour, and admins can define error conditions to suit the parameters of a setup.

A single Envoy instance can also serve several namespaces in Kubernetes. In this way, traffic for a shared URL can be distributed to different pods, which are then operated by different teams.
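
How a shared URL can be split among teams can be sketched as an ordered prefix table. Envoy evaluates the routes of a virtual host in order and uses the first match; Contour assembles such routes from resources in different namespaces. The namespaces, services, and paths in this Python sketch are hypothetical.

```python
from typing import List, Optional, Tuple

# Ordered route table: (path prefix, "namespace/service"), evaluated top to bottom.
Route = Tuple[str, str]


def match_route(path: str, routes: List[Route]) -> Optional[str]:
    # As in an Envoy virtual host, the first matching entry wins, so more
    # specific prefixes must be listed before more general ones.
    for prefix, target in routes:
        if path.startswith(prefix):
            return target
    return None  # no route: a proxy would typically answer 404


routes = [
    ("/shop/cart", "team-cart/cart-svc"),
    ("/shop", "team-shop/shop-frontend"),
    ("/blog", "team-content/blog"),
]
print(match_route("/shop/cart/42", routes))
print(match_route("/shop/items", routes))
```

Because the first match wins, /shop/cart must precede /shop. Note that a plain string prefix such as /blog also matches /blogroll.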

Also Ran: Consul Connect

Another way to roll out Envoy as a sidecar is offered by Consul, HashiCorp's service discovery and configuration tool. With the feature dubbed Connect, Envoy becomes part of the Consul service mesh for Kubernetes. Envoy essentially takes care of connecting clients outside the setup to services within it. Moreover, Envoy handles SSL termination if the admin wants to use this feature.

Almost in passing, Connect also configures Envoy as a basic security barrier: Two services that are not authorized to communicate with each other according to Connect's rules (called intentions) cannot do so through the proxy server either, because the proxy rejects such connection attempts in collaboration with Connect.
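
The decision such a barrier makes can be sketched in a few lines. The Python code below is a simplified, hypothetical model loosely following Consul's documented intention precedence, in which a rule with an exact destination outranks one with a wildcard destination, and exact sources outrank wildcard sources; it is not Consul's implementation, and the service names are made up. Whether unmatched pairs are allowed depends on the configuration, so the sketch makes the default explicit.

```python
from typing import List, Tuple

# (source, destination, action) with "*" as a wildcard; hypothetical rules.
Intention = Tuple[str, str, str]


def _specificity(rule: Intention) -> int:
    src, dst, _ = rule
    # Exact names outrank wildcards; the destination weighs more than the source.
    return (2 if dst != "*" else 0) + (1 if src != "*" else 0)


def is_allowed(source: str, destination: str, intentions: List[Intention],
               default_allow: bool = False) -> bool:
    matching = [r for r in intentions
                if r[0] in (source, "*") and r[1] in (destination, "*")]
    if not matching:
        return default_allow  # with default deny, unknown pairs are rejected
    best = max(matching, key=_specificity)
    return best[2] == "allow"


rules = [
    ("*", "*", "deny"),
    ("web", "api", "allow"),
    ("*", "db", "deny"),
    ("api", "db", "allow"),
]
print(is_allowed("web", "api", rules), is_allowed("web", "db", rules))
```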

Without a running Consul instance, the use of Connect makes only limited sense. If you use Consul anyway, you can set up Connect as a service and use it without too much additional overhead. If you do not use Consul, you are probably better off with Istio or Contour, depending on your area of responsibility (Figure 5).

Conclusions

Modern app architectures present admins and developers with new challenges regarding the management of many parallel connections. Envoy proves to be a fantastic tool that mediates between clients and applications, as well as between the parts of an application. Its performance is impressive, and beyond simple proxy connections, complex setups are no problem; SSL termination is one of Envoy's easier tasks. At the moment, the program faces serious competition from only one direction: Linkerd. Anyone developing apps for Kubernetes will want to take a good look at Envoy and carefully compare it with Linkerd.