Fixing Ceph performance problems

First Aid Kit

Ceph has become the de facto standard for software-defined storage (SDS). Companies building large, scalable environments today are increasingly unlikely to go with classic network-attached storage (NAS) or storage area network (SAN) appliances; rather, they prefer the distributed object store, which is now part of Red Hat.

Unlike classic storage solutions, Ceph is designed for scalability and longevity. Because Ceph is easy to use with off-the-shelf hardware, enterprises do not have to worry about only being able to source spare parts directly from the manufacturer. When a hardware warranty is coming to an end, for example, you don't have to replace a Ceph store completely with a new solution. Instead, you remove the affected servers from the system and add new ones without disrupting ongoing operations.

The other side of the coin is that the central role Ceph plays makes performance problems particularly critical. Ceph is extremely complex: If the object store runs slowly, you need to consider many components. In the best case, only one component is responsible for bad performance. If you are less lucky, performance problems arise from the interaction of several components in the cluster, making it correspondingly difficult to debug.

After a short refresher on Ceph basics, I offer useful tips for everyday monitoring of Ceph in the data center, especially in terms of performance. In addition to preventive topics, I also deal with the question of how admins can handle persistent Ceph performance problems with on-board resources.

The Setup

Over weeks and months, a new Ceph cluster is designed and implemented in line with all of the current best practices with a 25Gbps fast network over redundant Link Aggregation Control Protocol (LACP) links. A dedicated network with its own Ethernet hardware for traffic between drives in Ceph ensures that the client data traffic and the traffic for Ceph's internal replication do not slow each other down.

Although slow hard drives are installed into the Ceph cluster, the recommendations of the developers have been followed meticulously, and these slow drives have been provided with a kind of SSD cache. As soon as the data is written to these caches, a write is considered complete for the client, making it look client-side as if users are writing to an SSD-only cluster.

Initially, the cluster delivers the desired performance, but suddenly packets are just crawling across the wire, and users' patience is running out. The time Ceph takes to get things done seems endless, and nobody really knows where the problem might lie. Two questions arise: How do you discover the root cause of the slowdown? How do you continuously monitor your cluster so that you can identify potential problems before cluster users even notice them? The answers to both questions require a basic understanding of how Ceph works.

RADOS and Backends

Red Hat markets a collection of tools under the Ceph product name that creates a complex storage solution when used together. The core of Ceph is the distributed object store RADOS, which is known as an object store because it handles every incoming snippet of information as a binary file.

Ceph achieves its core feature of distributed storage by splitting up these files and putting them back together again later. When the user uploads a file to RADOS, the client breaks it down into several 4MB objects before uploading, which RADOS then distributes across all its hard drives.
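The splitting step can be pictured with a few lines of Python. This is only a minimal sketch of the idea, not Ceph client code: the function name `split_into_objects` is hypothetical, and the 4MB figure is simply the default object size mentioned above.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # 4MB, the default object size named in the text


def split_into_objects(data: bytes, object_size: int = OBJECT_SIZE) -> list[bytes]:
    """Cut a byte string into consecutive pieces of at most object_size bytes.

    Hypothetical helper for illustration; real Ceph clients do this internally.
    """
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    return [data[i:i + object_size] for i in range(0, len(data), object_size)]


if __name__ == "__main__":
    payload = bytes(10 * 1024 * 1024)  # a 10MB "file" consisting of zero bytes
    for index, obj in enumerate(split_into_objects(payload)):
        print(index, len(obj))
```

A 10MB payload ends up as two full 4MB objects and one 2MB remainder, each of which can then be placed on a different drive.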

Ceph is logically divided into RADOS on the one hand and its frontends on the other (Figure 1). Clients have a cornucopia of options to shovel data into a Ceph cluster, but they all work only if the RADOS object store is working, which depends on several factors.

![Clients access Ceph's internal object store, RADOS, either as a block device, as an object, or as a filesystem [1]. CC BY-SA 4.0](images/F01-b02_ceph-performance_2.png)

Of OSDs and MONs

Two components are necessary for the absolutely basic functionality of RADOS. The first component is the object storage daemon (OSD), which acts as a data silo in a Ceph installation. Each OSD instance links itself to a device, making it available within the cluster over the RADOS protocol. In principle, hard drives or SSDs can be used. However, OSDs keep a journal similar to filesystems – the cache mentioned earlier – and Ceph offers the option of outsourcing this cache to fast SSDs without having to equip the entire system with SSDs.

The OSDs are accompanied by the second component, monitoring servers (MONs), which monitor basic object storage functions. In Ceph, like any distributed storage solution, someone has to make sure that only those parts of the cluster that have a quorum (i.e., the majority of the MONs) are used. If a cluster breaks up because of network problems, diverging writes could otherwise take place in parallel on the now multiple parts of the cluster, and a split-brain would result. Avoiding this is one of the most important tasks of any storage solution.
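The majority rule behind the quorum is easy to express in code. The following sketch assumes nothing about Ceph's internals; `has_quorum` is a hypothetical helper that only shows why, after a network split, at most one part of the cluster can keep working.

```python
def has_quorum(reachable_mons: int, total_mons: int) -> bool:
    """Return True if the reachable MONs form a strict majority of all MONs.

    Hypothetical helper: it illustrates the majority rule, not Ceph's election code.
    """
    if total_mons <= 0 or not 0 <= reachable_mons <= total_mons:
        raise ValueError("invalid MON counts")
    return 2 * reachable_mons > total_mons


if __name__ == "__main__":
    # Five MONs split into a group of three and a group of two.
    print(has_quorum(3, 5), has_quorum(2, 5))
    # Four MONs split evenly: neither half may continue.
    print(has_quorum(2, 4))
```

Because two disjoint groups can never both hold more than half of the MONs, diverging writes on both sides of a split are ruled out. The even split also shows why an odd number of MONs is the more useful choice.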

Additionally, the MONs record which MONs and which OSDs exist (MONmap and OSDmap). The client needs both pieces of information to store binary objects in RADOS.

How Writes Work

To keep the entire write load from having to be handled by the clients, the Ceph developers decided on a decentralized approach. Clients implement the CRUSH algorithm [2], which delivers pseudo-random results that are nevertheless always identical for the same layout of the Ceph cluster. This is possible because the client knows the list of all OSDs in the form of the OSDmap that the MONs maintain for them.
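How a choice can look random and still be reproducible is easiest to see in a toy example. The sketch below is explicitly not CRUSH: it uses rendezvous hashing as a much simpler stand-in, ignores weights and failure domains, and the name `place_object` is made up for this illustration.

```python
import hashlib


def place_object(object_name: str, osd_ids: list[int], replicas: int = 3) -> list[int]:
    """Rank OSDs by a hash of (object, OSD) and return the top `replicas`.

    NOT the CRUSH algorithm: this is rendezvous hashing, used here only to
    show that a pseudo-random choice can be fully reproducible.
    """
    def score(osd_id: int) -> int:
        digest = hashlib.sha256(f"{object_name}:{osd_id}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # The highest score wins; the first entry plays the role of the primary OSD.
    return sorted(osd_ids, key=score, reverse=True)[:replicas]


if __name__ == "__main__":
    osds = list(range(12))  # stand-in for the OSD list a client gets from the MONs
    print(place_object("object-0001", osds))
    print(place_object("object-0001", osds))  # same inputs, same answer
```

Every party that knows the object name and the same list of OSDs arrives at the same answer without asking a central instance, which is the property the text describes.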

If a client wants to store something, it first divides the information into binary objects. In the standard configuration, these are 4MB maximum in size. The client then uses CRUSH to compute the appropriate primary object storage daemon for each of these objects and uploads them.

As soon as they receive binary objects, the OSDs join the fray: They use CRUSH to calculate the secondary OSDs for the objects and then copy the objects to them. Only when the object has arrived in the journals of as many OSDs as the size value specifies (3 out of the box) is the acknowledgement for the write sent to the client, and only then is the write considered complete.
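One consequence of this acknowledgement rule matters for performance analysis: The client always waits for the slowest copy. The toy model below makes that explicit. It is a deliberate simplification with a hypothetical function name, and it assumes that the copies to the secondary OSDs run in parallel.

```python
def write_ack_latency_ms(primary_journal_ms: float, secondary_paths_ms: list[float]) -> float:
    """Time until the client sees the acknowledgement in this toy model.

    Each entry in secondary_paths_ms is the full time for one secondary copy:
    the transfer from the primary plus the journal write on that OSD.
    The acknowledgement is only sent once the slowest copy has landed.
    """
    return max([primary_journal_ms, *secondary_paths_ms])


if __name__ == "__main__":
    print(write_ack_latency_ms(2.0, [3.0, 4.0]))       # all copies are fast
    print(write_ack_latency_ms(2.0, [3.0, 90_000.0]))  # one copy is stuck for 90 seconds
```

In the second call, two of the three copies finish within milliseconds, yet the client still waits a minute and a half. A single slow drive or a single disturbed connection between two OSDs is therefore enough to make a write look slow from the outside.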

Layer Cake

Although Ceph is not overly versatile in direct comparison with other cloud solutions, such as software-defined networking, it is still a very good solution. Nevertheless, the write or read performance from a Ceph cluster in this fictional case is still poor. A number of components can be considered possible culprits, and their complexity is reflected in the complexity of monitoring the different levels for speed.

The Basics

Sensible performance monitoring in Ceph ideally starts with the basics of monitoring. Even with Ceph nodes, admins want to keep track of the state of individual hard drives. Although Ceph detects failed hard drives automatically, it is not uncommon for hard disks or SSDs to develop minor defects. Outwardly, they still claim to be working, but write operations take ages, despite not aborting with an error message.

Ceph itself detects these slow writes and displays them (more about this later). It does no harm, however, to discover these tell-tale DriveReady-SeekComplete errors in the central logging system. Today, Ceph regularly uses modern monitoring software such as Prometheus, but it pays to keep up with monitoring of other classic vital signs. Local hardware problems, such as RAM failure or simple overheating, tend to result in a slower response from Ceph.

Network Problems

One huge error domain in Ceph is, of course, the network, both between the client and RADOS and between the drives in RADOS. Anyone who has dealt with the subject of networks in more detail knows that modern switches and network cards are highly complex and have a large number of tweaks and features that influence the achievable performance. At the same time, Ceph itself has almost no influence on this, because there's not much that can be tuned on its network. It uses the TCP/IP stack available in the Linux kernel and relies on the existing network infrastructure to function correctly.

For the admin, this means that you have to monitor your network hardware meticulously, especially with regard to typical performance parameters. This is easier said than done. The standard interface for accessing the relevant information on almost all modern devices is SNMP, but practically every manufacturer does its own thing when it comes to collecting the relevant data.

Mellanox, for example, incorporates in its application-specific integrated circuits (ASICs) a function known as What Just Happened, which supports the admin in finding dropped frames or other problems. Other manufacturers, such as Cisco and Juniper, also have monitoring tools for their own hardware on board. No all-round package exists, though, and depending on the local setup, you ultimately end up with the complete solution offered by the respective brand. If you are careful to use switches with Linux firmware, however, you are not totally dependent on the specific solutions invented by the vendors.

If you experience performance problems in Ceph, the first step is to find out where they are happening. If they occur between the clients and the cluster, different network hardware might be responsible than if they occur in communication between the OSDs. In case of doubt, it is helpful to use tools such as iPerf to push traffic across the cluster's links and measure the results manually.
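If you run such measurements repeatedly, it helps to evaluate the results with a script. The sketch below assumes iperf3 with its JSON output option and assumes that the receiver's throughput of a TCP test appears under `end.sum_received.bits_per_second`; check this against the output of your version. The sample report is invented, the 90 percent tolerance is an arbitrary choice, and both function names are hypothetical.

```python
import json


def received_gbps(iperf3_json: str) -> float:
    """Extract the receiver-side throughput in Gbps from an iperf3 JSON report.

    Assumes a TCP test and the field layout end -> sum_received -> bits_per_second.
    """
    report = json.loads(iperf3_json)
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def link_is_healthy(iperf3_json: str, expected_gbps: float, tolerance: float = 0.9) -> bool:
    """Return True if the measured rate reaches tolerance * expected_gbps (arbitrary threshold)."""
    return received_gbps(iperf3_json) >= expected_gbps * tolerance


# Invented sample data, trimmed to the one field this sketch reads.
SAMPLE_REPORT = '{"end": {"sum_received": {"bits_per_second": 23600000000.0}}}'

if __name__ == "__main__":
    print(round(received_gbps(SAMPLE_REPORT), 1), "Gbps")
    print("healthy:", link_is_healthy(SAMPLE_REPORT, expected_gbps=25.0))
```

Running the same check for every pair of hosts, once over the client-facing network and once over the replication network, shows quickly whether a slow path is confined to one of the two.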

Problems with Ceph

Assuming the surrounding infrastructure is working, a performance problem in Ceph very likely has its roots in one of the software components, which could affect RADOS itself; however, the kernel in the systems running Ceph might also be the culprit.

Fortunately, you have many monitoring options, especially at the Ceph level. The Ceph developers know about the complexity of performance monitoring in their solution. In case of an incident, you first have to identify the primary and secondary OSDs for each object and then search for the problem.

If you have thousands of OSDs in your system, you are facing a powerful enemy, which is why the Ceph OSDs meticulously record metrics for their own performance. Therefore, if an OSD notices that writing a binary object is taking too long, it sends a corresponding slow-write message to all OSDs in the cluster, thus decentrally storing the information at many locations.

Additionally, Ceph offers several interfaces to help you access this information. The simplest method is to run the ceph -w command on a node with an active MON. The output provides an overview of all problems currently noted in the system, including slow writes. However, this method cannot be automated sensibly.

Some time ago, the Ceph developers therefore added another service to their software – mgr (for manager) – that collects the metric data recorded by the OSDs, making it possible to use the data in monitoring tools such as Prometheus.

Dashboard or Grafana

A quick overview option is provided by the Ceph developers themselves: The mgr component made its way into the object store along with the Ceph Dashboard [3], which harks back to the openAttic project and visualizes the state of the Ceph cluster in an appealing way. The Dashboard also highlights problems that Ceph itself has detected.

However, this solution is not ideal for cases in which a functioning monitoring system (e.g., Prometheus) is already running, because the alert system usually has a direct connection to it. The Ceph Dashboard usually cannot be integrated directly, but the mgr component provides a native interface to Prometheus, to which metric data from Ceph can be forwarded. Because Telegraf also supports the Prometheus API, the data can find its way into InfluxDB, if desired.

Grafana is also on board. The Grafana store offers several dashboards for the labels Prometheus uses to read metric data from Ceph; these dashboards visualize the most important metrics for a quick overview.

Look Closely

Whether the metric data is displayed by the Ceph Dashboard or by Prometheus and Grafana, the central question remains as to which data you need to track. Four values play a central role in performance:

- ceph.commit_latency_ms specifies the time Ceph needs on average for all operations. Operations include writing to a drive or initiating a write operation to a secondary OSD.
- ceph.apply_latency_ms indicates the average time it takes for data to reach the OSDs.
- ceph.read_bytes_sec and ceph.write_bytes_sec provide an initial overview of what is going on in the cluster.

The same values can also be read out for individual pools. Pools are logical areas within RADOS. However, don't expect too much from these values if the internal Ceph cluster meets the usual standards and all pools point to the same drives in the background. If you have different pools for different storage drives in the cluster (e.g., HDDs and SSDs), the dataset per pool is probably more useful.
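Once latency values are available per OSD, a simple comparison against the cluster's typical value already points to suspicious candidates. The following sketch assumes you have exported one latency value per OSD into a dictionary by whatever means your monitoring system offers. The function name, the factor of 3, and the 10ms floor are arbitrary assumptions for illustration, not recommended thresholds.

```python
from statistics import median


def slow_osds(latency_ms: dict[str, float], factor: float = 3.0, floor_ms: float = 10.0) -> list[str]:
    """Return the OSDs whose latency exceeds both floor_ms and factor times the median.

    Hypothetical helper; factor and floor_ms are illustrative defaults only.
    """
    if not latency_ms:
        return []
    typical = median(latency_ms.values())
    return sorted(
        name for name, value in latency_ms.items()
        if value > floor_ms and value > factor * typical
    )


if __name__ == "__main__":
    # Invented sample values in milliseconds.
    sample = {"osd.0": 4.0, "osd.1": 5.0, "osd.2": 6.0, "osd.3": 250.0}
    print(slow_osds(sample))
```

Comparing against the median rather than a fixed limit keeps the check usable for both HDD-backed and SSD-backed pools, whose normal latencies differ considerably.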

In Practice

With the described approaches, problems in Ceph can be detected reliably, but this is far from the end of the story. Many parameters that the Ceph manager delivers to the outside world are generated from the metric values of the individual OSDs in the system. However, they can also be read out directly from the outside with the admin socket of each individual OSD. If you want, you can configure Prometheus or Grafana to pass every operation on every OSD in the installation to the monitoring system. The sheer volume, however, can have devastating effects – a jumble of thousands of values quickly becomes confusing.

It can also be difficult to identify the causes of certain metric values in Ceph, but that's the other half of the Ceph story: Knowing that the cluster is experiencing slow writes is all well and good, but what you really need is information about the cause of the problem. The following example from my personal experience can give you an impression of how monitoring details can be used to detect a performance problem in Ceph. However, this process cannot be meaningfully automated.

The Problem

The initial setup is a Ceph cluster with about 2.5PB gross capacity that is mainly used for OpenStack. When you start a virtual machine that uses an RBD image stored in Ceph as a hard drive for the root filesystem, an annoying effect regularly occurs: The virtual machines (VMs) experience an I/O stall for several minutes and are unusable during this period. Afterward, the situation normalizes for some time, but the problem returns regularly. During the periods when the problem does not exist, writes to the volumes of the VMs deliver a passable 1.5GBps.

The monitoring systems I used did indeed display the slow writes described above, but I could not identify a pattern. Instead, the writes were distributed across all OSDs in the system. It quickly became clear that the problem was not specific to OpenStack, because a local RBD image without access through the cloud solution led to the same issues.

Is It the Network?

The network soon became the main suspect because it was apparently the only infrastructure shared by all the components involved. However, extensive tests with iPerf and similar tools refuted this hypothesis. Between the clients of the Ceph cluster, the dual 25Gbps LACP link was up and running – reliably – at 25Gbps and above in the iPerf tests. The lack of clues was aggravated by the error counters of all the network interface controllers (NICs) involved, as well as those on the network switches, stubbornly remaining at 0.

From there on it became a tedious search. In situations like this, you can only do one thing: trace the individual writes. As soon as a slow write process appeared in the monitoring system, I took a closer look. Ceph always shows the primary OSD of a slow write. On the host on which the OSD runs, the admin socket is used in the next step – and this proved to be very helpful.

In fact, each OSD keeps an internal record of the many operations it performs. The log of a primary OSD for an object also contains individual entries, including the start and end of copy operations for this object to the secondary OSDs. The command

ceph daemon osd.<number> dump_ops_in_flight

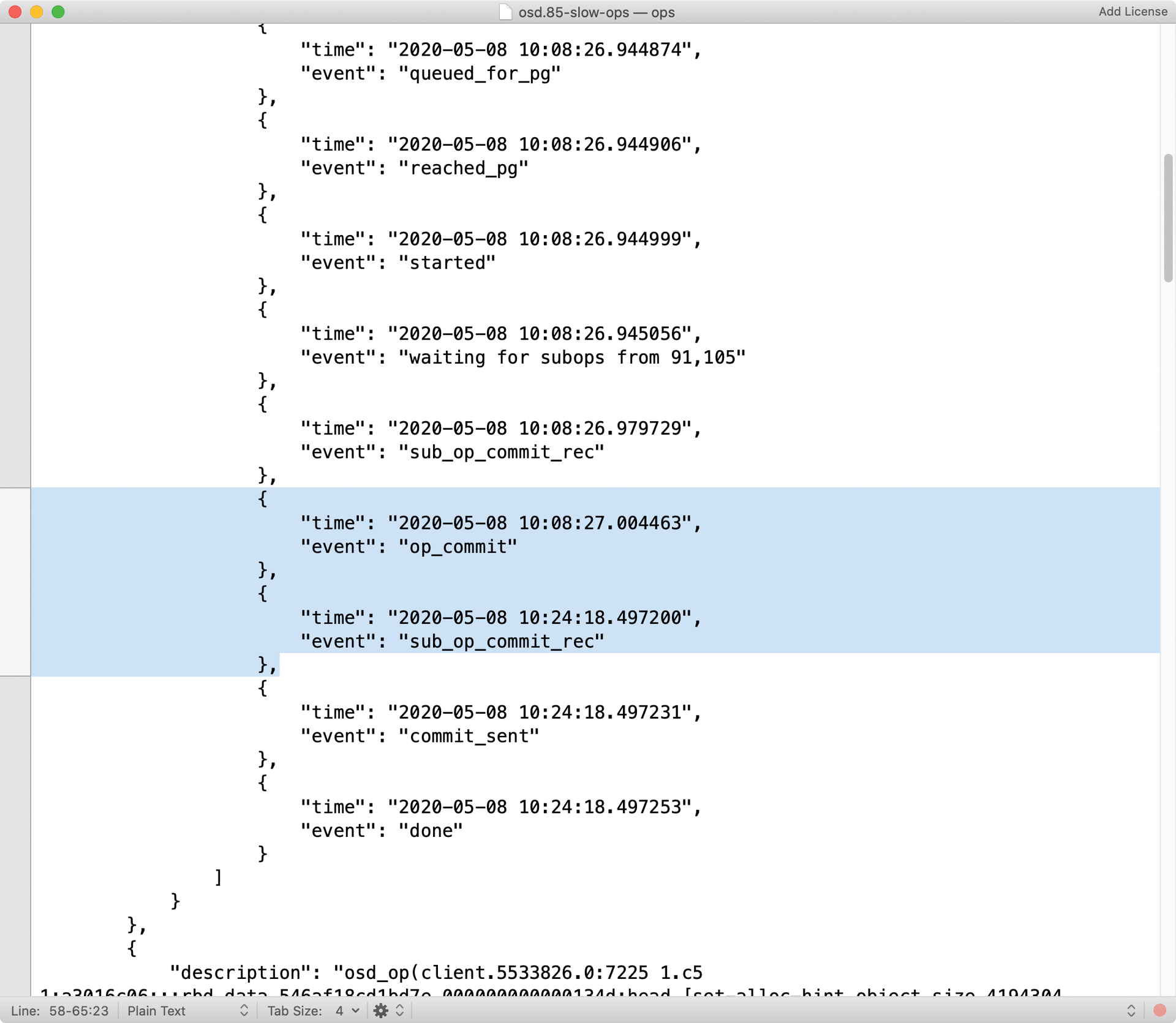

displays all the operations that an OSD in Ceph is currently performing. Past slow ops can be retrieved with the dump_historic_slow_ops parameter (Figure 2), whereas dump_historic_ops lets you display log messages about all past operations, but only over a certain period of time.

Equipped with these tools, further monitoring became possible: For individual slow writes, the primary OSD could now be identified; there again, the OSD in question provided details of the secondary OSDs it had computed. I thought their log messages for the same write operation would prove useful for gaining information about defective storage drives.

However, it quickly became clear that for the majority of the time the primary OSD spent waiting for responses from the secondary OSDs, the latter were not aware of the task at hand. As soon as the write requests arrived at the secondary OSDs, they were completed within a few milliseconds. However, it often took several minutes for the requests to reach the secondary OSDs.
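Reading these operation logs by eye is tedious, so a small script can point to the step where an operation spent most of its time. The sketch below assumes the JSON has already been loaded into a dictionary and that each operation carries a list of events with `time` and `event` fields under `type_data`. The exact layout and the timestamp format differ between Ceph releases, so treat both as assumptions. The sample entry is invented, and `longest_wait` is a hypothetical helper.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%d %H:%M:%S.%f"  # assumed timestamp layout; varies by Ceph release


def longest_wait(op: dict) -> tuple[float, str, str]:
    """Return (seconds, event_before, event_after) for the largest gap between events."""
    events = op["type_data"]["events"]
    if len(events) < 2:
        raise ValueError("need at least two events to compute a gap")
    stamps = [datetime.strptime(e["time"], TIME_FORMAT) for e in events]
    gaps = [
        ((later - earlier).total_seconds(), events[i]["event"], events[i + 1]["event"])
        for i, (earlier, later) in enumerate(zip(stamps, stamps[1:]))
    ]
    return max(gaps, key=lambda gap: gap[0])


# Invented sample in the assumed shape of one entry from the operation history.
SAMPLE_OP = {
    "description": "example write operation (invented)",
    "type_data": {"events": [
        {"time": "2020-03-01 10:00:00.000100", "event": "initiated"},
        {"time": "2020-03-01 10:00:00.002300", "event": "waiting for subops from 12,37"},
        {"time": "2020-03-01 10:02:14.502300", "event": "sub_op_commit_rec from 37"},
        {"time": "2020-03-01 10:02:14.503000", "event": "done"},
    ]},
}

if __name__ == "__main__":
    seconds, before, after = longest_wait(SAMPLE_OP)
    print(f"{seconds:.1f}s between '{before}' and '{after}'")
```

In the invented sample, nearly all of the time passes between the primary OSD starting to wait for its secondaries and the confirmation coming back, which mirrors the pattern described above: The storage drives are fast, but the requests take minutes to arrive.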

The Network Revisited

Because extensive testing had already excluded the network hardware as a potential source of error, it appeared to be a Ceph problem. After much trial and error, the spotlight finally fell on the packet filters of the systems involved. In the end, the iptables successor nftables, which is used by default in CentOS 8, turned out to be the cause of the problem. It was not a misconfiguration. Instead, a bug in the Linux kernel caused the packet filter to suppress communication according to an unclear pattern, which in turn explained why the problem in Ceph was very erratic. An update to a newer kernel finally remedied the situation.

As the example clearly shows, automated performance monitoring from within Ceph is one thing, but if you are dogged by persistent performance problems, you can usually look forward to an extended debugging session. At this point, it certainly does no harm to have the manufacturer of the distribution you are using on board as your support partner.

Conclusions

Performance monitoring in Ceph can be implemented easily with Ceph's on-board tools for recording metric data. At the end of the day, it is fairly unimportant whether you view the results in the Ceph Dashboard or Prometheus. However, this does not mean you should stop monitoring the system's classic performance parameters.

To a certain extent, it is unsatisfactory that detecting a problem in a cluster does not allow any direct conclusions to be drawn about its solution. In concrete terms, this means that once you know that a problem exists, the real work has just begun, and this work can rarely be automated.