Bufferbloat causes and management

All Puffed Up

Data sent across the Internet often takes different amounts of time to travel the same distance. The delay a packet experiences on the network comprises:

- transmission delay, the time required to send the packet over the communication links;

- processing delay, the time each network element spends processing the packet; and

- queue delay, the time spent waiting for processing or transmission.
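The following minimal Python sketch shows how the three components add up hop by hop. The `Hop` class, its field names, and the example figures are hypothetical. Transmission delay is modeled as packet size divided by link rate, and signal propagation time is ignored for simplicity.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    """One hop on the path (hypothetical model, all times in seconds)."""
    rate_bps: float      # link data rate in bits per second
    processing_s: float  # time the network element spends processing the packet
    queue_s: float       # time the packet waits for processing or transmission


def one_way_delay(hops: list[Hop], packet_bytes: int) -> float:
    """Sum transmission, processing, and queue delay over every hop."""
    total = 0.0
    for hop in hops:
        transmission_s = packet_bytes * 8 / hop.rate_bps  # time to put the bits on the link
        total += transmission_s + hop.processing_s + hop.queue_s
    return total


# A 1,500-byte packet crossing a 1Gbps LAN link and a 10Mbps access link,
# with a hypothetical 20 ms of queueing in front of the slow link.
path = [Hop(1e9, 0.00005, 0.0), Hop(10e6, 0.00005, 0.020)]
print(f"{one_way_delay(path, 1500) * 1000:.3f} ms")
```

Even in this toy path, the queue delay dominates the total, which is exactly the component that bufferbloat inflates.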

The data paths between communicating endpoints typically consist of many hops with links of different speeds. The link with the lowest bandwidth along the path is the bottleneck, because packets cannot reach their destination faster than the bottleneck link can transmit them.

In practice, the delay time along the path – the time from the beginning of the transmission of a packet by the sender to the reception of the packet at the destination by the receiver – can be far longer than the time needed to transmit the packet at the bottleneck data rate. To ensure a constant packet flow at maximum speed, you need a sufficient number of packets in transmission to fill the path between the sender and the destination.
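The number of packets needed to fill the path is commonly called the bandwidth-delay product: the bottleneck rate multiplied by the round-trip time. The following sketch computes it for assumed example values (a 1Gbps LAN, a 10Mbps access link, a 100Mbps backbone, and a 40ms round trip). The function names are illustrative only.

```python
import math


def bottleneck_bps(link_rates_bps: list[float]) -> float:
    """The slowest link on the path limits the rate at which packets can arrive."""
    return min(link_rates_bps)


def packets_to_fill_path(link_rates_bps: list[float], rtt_s: float, packet_bytes: int = 1500) -> int:
    """Bandwidth-delay product (bottleneck rate x round-trip time), rounded up to whole packets."""
    bdp_bytes = bottleneck_bps(link_rates_bps) * rtt_s / 8
    return math.ceil(bdp_bytes / packet_bytes)


# Hypothetical path: 1Gbps LAN, 10Mbps access link, 100Mbps backbone, 40 ms round trip.
links = [1e9, 10e6, 100e6]
print(bottleneck_bps(links), packets_to_fill_path(links, 0.040))
```

With these numbers, the 10Mbps link is the bottleneck, and about 34 full-size packets must be in flight to keep it busy. Any packet beyond that can only wait in a buffer.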

Buffers temporarily store packets while the outgoing communication link is busy, which requires a corresponding amount of memory in the forwarding device. However, the Internet suffers from a design flaw known as bufferbloat, which is caused by the incorrect use of data buffers.

TCP/IP Data Throughput

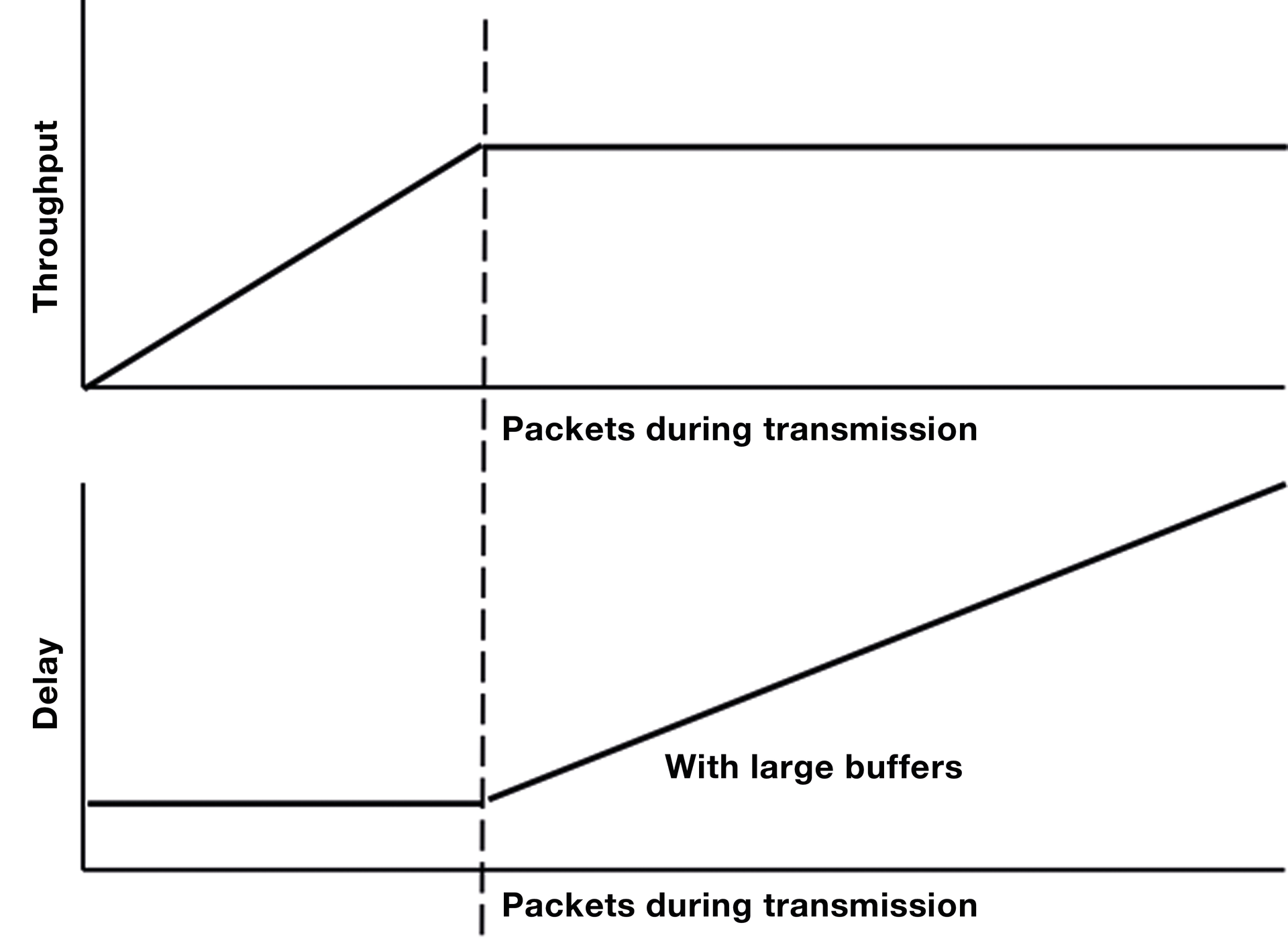

System throughput is the data rate at which packets leave the network for the destination. In a stable state, this rate equals the rate at which packets are sent into the network. As the number of packets in transmission increases, throughput rises until packets are sent and received at the bottleneck data rate. Sending still more packets does not increase the receive rate. If the network has large buffers along the path, they fill up with the additional packets, and the delay increases.
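The behavior described above can be captured in an idealized fluid model of a single flow through a single bottleneck. This Python sketch rests on simplifying assumptions (a fixed base round-trip time, one buffer, no TCP dynamics) and is not a measurement. It shows only that throughput stops growing at the bottleneck rate while delay keeps growing with every queued packet.

```python
def steady_state(inflight_pkts: float, bottleneck_pps: float, base_rtt_s: float, buffer_pkts: float):
    """Idealized model of one flow through one bottleneck buffer.

    Returns (throughput in packets/s, round-trip time in s, packets that do not fit and are dropped).
    """
    pipe_pkts = bottleneck_pps * base_rtt_s   # packets "on the wire" when the path is full
    if inflight_pkts <= pipe_pkts:
        # Below capacity: throughput grows with the number of packets in flight, no queue forms.
        return inflight_pkts / base_rtt_s, base_rtt_s, 0.0
    excess = inflight_pkts - pipe_pkts        # packets the path itself cannot hold
    queued = min(excess, buffer_pkts)         # the bottleneck buffer absorbs what it can
    dropped = excess - queued                 # anything beyond the buffer is lost
    # Throughput is capped at the bottleneck rate; each queued packet adds delay.
    return bottleneck_pps, base_rtt_s + queued / bottleneck_pps, dropped


# Hypothetical bottleneck: 1,000 packets/s, 50 ms base round trip, 1,000-packet buffer.
for inflight in (25, 50, 100, 150):
    print(inflight, steady_state(inflight, 1000, 0.05, 1000))
```

For example, with 150 packets in flight the throughput is still 1,000 packets/s, but the round trip triples to 150ms because the buffer holds the 100 excess packets.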

A network without buffers has no space to hold packets waiting to be transmitted, so any additional packets are dropped, and the loss rate rises as the transmission rate increases. To work without intermediate buffers, a network needs predictable packet arrivals, with synchronized timing ensuring that no packets are lost. Such networks are complex, expensive, and inflexible. A well-known example of a bufferless network is the analog phone network. The addition of buffers to networks and the packaging of data into packets of variable size led to the development of the Internet.

Data transport on the Internet is based on the TCP/IP protocols. TCP is built on the idea of line capacity and on the assumption that no excessive buffering along the data path prevents it from sending a certain volume of data at a time. The early Internet suffered from insufficient buffering. Even under moderate load, a data burst could create a bottleneck (for one or more connections), and data could be lost because of insufficient bandwidth. The losses relieved congestion on the network but also caused throughput to drop. To avoid these problems, sufficiently large buffers were installed in the interconnecting network devices, thus avoiding poor network utilization.

As part of the solution, slow-start and congestion-avoidance algorithms were integrated into the TCP protocol. These additional features created the conditions for the rapid growth of the Internet in the 1990s, as the algorithms maximized throughput, minimized delays, and ensured low losses (Figure 1). The sending and receiving TCP instances attempt to determine the line capacity between the two communication partners and then adjust the number of packets in transmission to match it. Because network connections are used by many applications simultaneously and conditions on the transmission paths change dynamically, the algorithms continuously probe the network and adjust the number of packets in transmission.

Discovery of Bufferbloat

A few years ago, a Google programmer working at home uploaded a large file to his work server. His children complained to him that his work was negatively affecting their Internet traffic. Of course, the expert wondered how his uploading activities could affect his children's downloads. With no clear answer, he set out to investigate this question.

By experimenting with pings and different load levels on his Internet connection, he discovered that the latency times were often four to 10 times greater than expected. He named this phenomenon "bufferbloat." His conclusion was that some data packets are trapped in excessively large buffers for short periods of time.

The bufferbloat problem was not recognized for a long time for three reasons:

- Bufferbloat is closely related to the functionality of the TCP protocol and the management of dynamic network buffers. Even in the 21st century, many programmers do not fully understand the management of dynamic buffers across network connections and the components interacting on the transmission path.

- A widespread misconception is that dropping packets on the Internet is always a problem. In fact, packet loss is the signal TCP relies on to detect congestion, so occasional drops are part of how the protocol functions correctly.

- Many think that the best way to eliminate poor performance is to increase bandwidth.

Understanding what bufferbloat is and how TCP works is important when it comes to fixing it.

Effects of Bufferbloat

Imagine vehicles driving along a road. The cars are trying to get from one end to the other as fast as possible, driving almost bumper to bumper at the highest safe speed. The vehicles are, of course, the IP packets, and the road is a network connection. The bandwidth of the connection corresponds to the speed at which the cars, including their loads, can travel from one end of the road to the other, and latency is the time it takes each car to get from one end of the road to the other.

One of the problems facing road networks is congestion. If too many cars try to use the road at once, unpredictable things happen (e.g., cars run off the road or simply break down). On the Internet, the equivalent is packet loss, which should be kept to a minimum but cannot be eliminated completely.

One way to tackle congestion is a metering device that regulates access to the road, such as the ramp meters that control traffic merging onto freeways. When drivers approach the freeway, a traffic light at the entrance tells them when they can enter the traffic flow. When traffic is light and the signals are switched off, drivers can simply merge. The meter controls the timing and rate at which vehicles leave the on-ramp, so the number of vehicles on the freeway stays at a reasonable level. The meter is continually informed of traffic conditions and tries to ensure that the new traffic does not cause adverse repercussions.

In this case, only the traffic light manages the merging traffic. Its timing has to ensure that the maximum capacity of the freeway is not exceeded, and the arriving cars rely on there always being enough room on the ramp for them to wait their turn. On the Internet, the freeway ramp is a packet buffer. Network hardware and software developers hate unpredictable packet behavior, just as highway builders hate car accidents. For this reason, networkers have installed many huge buffers all over the network.

On a network, buffers help make full use of the available bandwidth. In other words, they maximize the amount of data that can be transmitted over the network in a given time. However, these buffers also affect latency. To see how, return to the cars waiting to merge onto the freeway. It is rush hour, and vehicles of all kinds are arriving faster than they can move on. Emergency vehicles, normal cars, delivery vans, and trucks accumulate on the ramp in the order of their arrival, and the traffic meter only deals with the vehicle directly in front of it (i.e., a first in, first out (FIFO) queuing mechanism). The ramp buffer is very large, and the traffic meter does not know which vehicles are important and which are not. As a result, traffic continues to back up, and the ramp becomes overloaded.

When this happens on the Internet, the ramp (buffer) adds latency to the connection in question. Packets reach their destinations after long delays, and previously smooth network traffic begins to stutter. The cars try to find alternative routes and, in most cases, fail. In practice, a continuous stream of incoming vehicles tends to bunch together, and the size of the bunch depends on the width of the exit. This bunching inevitably leads to additional accidents. Throughput drops, and it looks as if the buffer were not even there. Practice has shown that the larger (the more bloated) the buffer, the worse the problems become. Meanwhile, some extremely large buffers have been discovered on the Internet. If they were freeway on-ramps, they would be the size of Germany.
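The trade-off between buffer size and delay can be reproduced with a toy tail-drop FIFO queue. This Python sketch is an illustration with made-up units (packets and abstract time ticks). Packets arrive at twice the link rate for 100 ticks, and the link sends one packet per tick.

```python
from collections import deque


def simulate_fifo(buffer_pkts: int, arrivals_per_tick: list[int], service_per_tick: int = 1):
    """Toy tail-drop FIFO queue; returns (max queueing delay in ticks, packets dropped)."""
    queue = deque()  # holds the arrival tick of each waiting packet
    dropped = 0
    max_delay = 0
    tick = 0
    # Keep running after the last arrival until the queue has drained.
    while tick < len(arrivals_per_tick) or queue:
        arrivals = arrivals_per_tick[tick] if tick < len(arrivals_per_tick) else 0
        for _ in range(arrivals):
            if len(queue) < buffer_pkts:
                queue.append(tick)
            else:
                dropped += 1  # tail drop: the newest packet is discarded
        for _ in range(service_per_tick):
            if queue:
                max_delay = max(max_delay, tick - queue.popleft())
        tick += 1
    return max_delay, dropped


# A burst arriving at twice the link rate for 100 ticks.
burst = [2] * 100
for size in (10, 200):
    print(size, simulate_fifo(size, burst))
```

In this run, the 10-packet buffer drops 91 packets but no packet waits more than 9 ticks. The 200-packet buffer drops nothing, but the last packets wait 100 ticks. Without drops, a TCP sender would get no signal to slow down.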

Now imagine a huge network of roads and freeways with traffic circles at the intersections that act as buffers. The cars on the route are trying to get through as quickly as possible, but they experience several cascades of delays, and traffic that initially runs smoothly becomes increasingly bunched and chaotic: The congested traffic from upstream buffers clogs the downstream buffers, even though the same volume of traffic would be handled without any problems if it flowed smoothly. Such behavior leads to severe and sometimes irretrievable packet losses. As network traffic increases, it is increasingly transmitted in bursts, and these patterns become more and more chaotic. Individual connections quickly swing back and forth between idle periods and cascading overloads. As a result, delays and packet transit times change dramatically and do not follow a predictable pattern.

Packet loss, which the buffers are supposed to prevent, increases dramatically once all buffers are full, because thousands of packets arriving at random times force Internet routers to slow down data transmission. One of the most obvious consequences is latency spikes, which slow down the most frequently used services (e.g., DNS lookups). Voice over IP services (VoIP, e.g., Skype) and video streaming work only sporadically and become almost unusable.

The way these latency-sensitive services deteriorate illustrates the bufferbloat problem: The perceived speed of the Internet depends more on latency (time to response) than on bandwidth, so bufferbloat degrades precisely the property that matters most to users. As the buffers on the network grow larger and more numerous, the effect of bufferbloat becomes greater. Increasing bandwidth does not eliminate the bufferbloat cascades, and higher bandwidths often make the problem even worse.

Influence of Bufferbloat on TCP Operation

The vast majority of network traffic relies on TCP as its transport protocol, so to understand bufferbloat fully, I'll look at the details of TCP. A TCP connection is established with a three-way handshake. During the handshake, the TCP entities involved in the connection (sender and receiver) negotiate the individual transmission parameters, such as the initial sequence numbers. If an FTP server needs to transfer a large file, TCP usually begins the transfer by transmitting four TCP segments. The sender then waits for the receiver to acknowledge these segments. Once the four segments have been acknowledged, the sender increases the send rate by transmitting eight segments and waiting for them to be confirmed. If this is successful, the send window is set to 16 segments, and the window continues to grow following the same principle.

This first connection phase is known as TCP slow start. Packets are deliberately sent more slowly at the beginning of a session to avoid an overload situation. At the start of the connection, the receiver also advertises a window size based on its buffer size, which prevents a buffer overflow on the receiving side. Nevertheless, overload inside the network can occur at any time. For this reason, the sender starts with a small window (in the classic algorithm, 1 maximum segment size (MSS)). Each time the receiver acknowledges a full window, the sender doubles the window size. This exponential growth continues until the receiver's maximum window size is reached or a timeout occurs (i.e., the receiver fails to acknowledge receipt). After a timeout, the window drops back to 1 MSS, and the doubling starts all over again.

Congestion avoidance uses another value, the slow-start threshold (ssthresh). Once the window reaches this threshold, it grows by only 1 MSS per round trip, so growth becomes linear instead of exponential. Here, too, the window grows only until the receiver's maximum window size is reached or a timeout occurs. If the sender detects packet loss on the route, it sets ssthresh to half the current window and initiates a new slow start. This process dynamically adapts the TCP send rate to the capacity of the connection.
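The window growth described above can be traced one round trip at a time. This Python sketch is a simplified, Tahoe-style model with the following assumptions:

- The window is counted in MSS.
- Slow start doubles the window once per round trip but does not overshoot ssthresh.
- Congestion avoidance adds 1 MSS per round trip.
- A loss sets ssthresh to half the current window and restarts slow start at 1 MSS.

Real TCP stacks (e.g., Reno or CUBIC) differ in the details.

```python
def cwnd_trace(rounds: int, loss_rounds: set[int], ssthresh: int = 16, rwnd: int = 64) -> list[int]:
    """Congestion window (in MSS) at the start of each round trip (simplified Tahoe-style model)."""
    cwnd = 1
    trace = []
    for rnd in range(rounds):
        trace.append(cwnd)
        if rnd in loss_rounds:
            ssthresh = max(cwnd // 2, 2)     # remember half the window that caused the loss
            cwnd = 1                         # and restart slow start
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)   # slow start: exponential growth
        else:
            cwnd += 1                        # congestion avoidance: linear growth
        cwnd = min(cwnd, rwnd)               # never exceed the receiver's advertised window
    return trace


print(cwnd_trace(15, loss_rounds={8}))
```

With a loss in round 8, the trace is [1, 2, 4, 8, 16, 17, 18, 19, 20, 1, 2, 4, 8, 10, 11]. The loss is the only event that makes the window shrink, so a buffer large enough to absorb every packet keeps the window growing.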

How Bufferbloat Disrupts Traffic

To illustrate the bufferbloat mechanism, I will use a high-speed connection and a connection with a slower link. Suppose I have a 1Gbps local network connected to the Internet over a CATV or DSL link that provides 10Mbps in the download direction and 2Mbps in the upload direction. A classic FTP server fills the buffer over the fast download connection faster than the slower upload direction can carry the acknowledgements for the received packets. Ultimately, the acknowledgements (ACKs) from the slower direction determine the total throughput of the connection. However, if the buffers are too large, several things can happen:

- When the buffer fills up, the last incoming packet is discarded. This is known as a "tail drop." The receiver can only signal the loss to the sender (through duplicate acknowledgements) once the next packet after the dropped one arrives. With large buffers, that packet first has to wait behind everything already queued, which can take a considerable amount of time. Experiments showed that nearly 200 segments were received before the sending station retransmitted the lost segment.

- If several traffic flows share one connection, a kind of standing queue develops, so a fixed number of packets is always in the queue. As long as there are not enough packets to overflow the buffer, no packets are dropped and TCP congestion control is never triggered. However, the delay increases for all users of the buffer.
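The delay that a full buffer adds is simple arithmetic: buffer size divided by the rate at which the link drains it. The sketch below assumes a hypothetical 256-packet buffer of 1,500-byte packets in front of the 2Mbps upload and 10Mbps download links from the example. The buffer size is an assumption, not a value measured on a real device.

```python
def full_buffer_delay_s(buffer_pkts: int, link_bps: float, packet_bytes: int = 1500) -> float:
    """Time a newly arriving packet waits behind a completely full FIFO buffer draining at link_bps."""
    return buffer_pkts * packet_bytes * 8 / link_bps


# Hypothetical 256-packet buffer in front of the 2Mbps upload and the 10Mbps download link.
for name, rate in (("upload", 2e6), ("download", 10e6)):
    print(f"{name}: {full_buffer_delay_s(256, rate) * 1000:.0f} ms")
```

A full buffer of that size delays every packet, including ACKs and DNS queries, by about 1.5 seconds in the upload direction and about 0.3 seconds in the download direction.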

Buffer Management

To prioritize specific traffic, the differentiated services (DiffServ) bits in the IP header can be used to give certain traffic types (e.g., network control or VoIP) preferential transmission. DiffServ thus classifies the traffic. However, it does not eliminate the bufferbloat problem, because the queues that carry non-prioritized traffic can still be too large and therefore hold many large TCP segments. Consequently, the effect on the TCP congestion mechanism persists.
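As an illustration of DiffServ marking, the following Python sketch shows how an application might set the DSCP on its outgoing IPv4 packets. It assumes a Linux or macOS host where `socket.IP_TOS` is available. The mark has an effect only on routers configured to honor it, and networks may rewrite it. Expedited Forwarding (DSCP 46) is the code point commonly used for VoIP.

```python
import socket

DSCP_EF = 46  # Expedited Forwarding


def dscp_to_tos(dscp: int) -> int:
    """The 6-bit DSCP occupies the upper bits of the former IPv4 TOS byte; the low 2 bits are ECN."""
    if not 0 <= dscp < 64:
        raise ValueError("DSCP is a 6-bit value")
    return dscp << 2


def open_marked_udp_socket(dscp: int = DSCP_EF) -> socket.socket:
    """Create a UDP socket whose outgoing IPv4 packets carry the given DSCP (Linux/macOS)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock
```

Marking only decides which queue a packet joins. If the queue for unmarked traffic is bloated, bulk transfers in that queue still suffer the delays described above.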

Earlier active queue management efforts used random early discard (RED, also known as random early detection or random early drop) and weighted RED (WRED). These methods discard packets at random once the average queue length reaches a critical level, before the buffer is completely full. In practice, however, these techniques had shortcomings, and RED in particular was difficult to configure. As a result, RED and WRED fell out of use, and automatic configuration methods were sought.
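The RED decision logic can be sketched in a few lines of Python. This is the classic linear drop curve applied to an exponentially weighted average of the queue length. It omits the refinement that scales the probability by the number of packets since the last drop, and the parameter values in the example are arbitrary. The four knobs (`weight`, `min_th`, `max_th`, `max_p`) illustrate why RED was hard to tune.

```python
def red_average(avg: float, qlen: int, weight: float = 0.002) -> float:
    """Exponentially weighted moving average of the instantaneous queue length."""
    return (1 - weight) * avg + weight * qlen


def red_drop_probability(avg: float, min_th: float, max_th: float, max_p: float) -> float:
    """Classic RED curve: no drops below min_th, a linear ramp up to max_p, then drop everything."""
    if avg < min_th:
        return 0.0
    if avg >= max_th:
        return 1.0
    return max_p * (avg - min_th) / (max_th - min_th)


# Arbitrary example: the instantaneous queue jumps to 40 packets and stays there.
avg = 0.0
for step in range(1, 1001):
    avg = red_average(avg, 40)
    if step % 250 == 0:
        print(step, round(avg, 1), round(red_drop_probability(avg, 20, 60, 0.1), 4))
```

Because the average reacts slowly, RED tolerates short bursts but also takes a long time to respond to a queue that has become persistent.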

The controlled delay (CoDel) method controls the time a packet spends in the queue (its sojourn time) and uses two parameters for this: a target delay and an interval. If the sojourn time stays above the target for at least one interval, CoDel starts dropping packets at the head of the queue at increasingly short intervals until the delay falls below the target again. This technique does not depend on the size of the queue, nor does it use the tail drop mechanism. Tests have shown better delay behavior and far better throughput results than the RED method, especially for wireless connections. Furthermore, CoDel can be easily implemented in hardware.
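The following simplified Python sketch of CoDel's dequeue logic loosely follows the pseudocode in RFC 8289 and shows how the target and the interval interact. It assumes the RFC's default values (5ms target, 100ms interval). It also omits two parts of the full algorithm: the check that suppresses drops when less than one MTU is queued, and the count hysteresis. CoDel's drops are scheduled by a control law rather than chosen at random: the next drop comes after the interval divided by the square root of the drop count.

```python
import math
from collections import deque

TARGET = 0.005    # seconds; default target sojourn time in RFC 8289
INTERVAL = 0.100  # seconds; default interval in RFC 8289


class CoDelQueue:
    """Simplified CoDel (sketch after RFC 8289, without the MTU check and count hysteresis)."""

    def __init__(self):
        self.queue = deque()          # (enqueue time, packet)
        self.first_above_time = 0.0   # when the sojourn time will have exceeded TARGET for INTERVAL
        self.dropping = False
        self.drop_next = 0.0
        self.count = 0
        self.dropped = []

    def enqueue(self, now, packet):
        self.queue.append((now, packet))

    def _pop(self, now):
        """Take the head packet and decide whether CoDel may drop it."""
        if not self.queue:
            self.first_above_time = 0.0
            return None, False
        enqueued_at, packet = self.queue.popleft()
        if now - enqueued_at < TARGET:
            self.first_above_time = 0.0               # delay is acceptable again
            return packet, False
        if self.first_above_time == 0.0:
            self.first_above_time = now + INTERVAL    # start the grace period
            return packet, False
        return packet, now >= self.first_above_time

    def _control_law(self, t):
        # Drops move closer together as count grows: INTERVAL / sqrt(count).
        return t + INTERVAL / math.sqrt(self.count)

    def dequeue(self, now):
        packet, ok_to_drop = self._pop(now)
        if packet is None:
            self.dropping = False
            return None
        if self.dropping:
            if not ok_to_drop:
                self.dropping = False                 # sojourn time fell below TARGET
            while self.dropping and now >= self.drop_next:
                self.dropped.append(packet)           # head drop, not tail drop
                self.count += 1
                packet, ok_to_drop = self._pop(now)
                if packet is None or not ok_to_drop:
                    self.dropping = False
                else:
                    self.drop_next = self._control_law(self.drop_next)
        elif ok_to_drop:
            self.dropped.append(packet)               # first drop: enter the dropping state
            packet, _ = self._pop(now)
            self.dropping = True
            self.count = 1
            self.drop_next = self._control_law(now)
        return packet


def run(arrive_every_ms, serve_every_ms, duration_ms=1000):
    """Feed the queue at one rate and drain it at another (times in whole milliseconds)."""
    codel = CoDelQueue()
    for ms in range(duration_ms):
        now = ms / 1000
        if ms % arrive_every_ms == 0:
            codel.enqueue(now, ms)
        if ms % serve_every_ms == 0:
            codel.dequeue(now)
    return codel


print(len(run(3, 2).dropped), len(run(1, 2).dropped))
```

The call `run(3, 2)` sends one packet every 3ms into a link that sends one every 2ms, and it never triggers a drop. The call `run(1, 2)` delivers arrivals at twice the link rate and builds a standing queue. CoDel starts dropping from the head of that queue once the sojourn time has stayed above the target for a full interval.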

The flow (or fair) queuing controlled delay (FQ-CoDel) method (RFC 8290 [1]) divides the queue into 1,024 sub-queues by default. Each data flow is assigned to one of them by hashing its addresses, ports, and protocol together with a random seed, so different flows usually end up in separate queues. Within each sub-queue, CoDel keeps the delay low when TCP overloads the link.

The sub-queues are served by a deficit round robin (DRR) mechanism. First, this procedure ensures that TCP congestion control works properly. Moreover, because packets from the different queues are interleaved, small packets (e.g., DNS responses and TCP ACKs) are no longer trapped behind large queues, so large and small packets are handled more fairly.
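The two mechanisms can be sketched in Python: a seeded hash that maps a flow's 5-tuple to one of 1,024 queues, and plain deficit round robin that gives each non-empty queue a fixed byte quantum per round. This is a simplification that rests on several assumptions. The hash function and seed are arbitrary choices, and the 1,514-byte quantum is an assumed value. The sketch also does not model FQ-CoDel's actual scheduler (a DRR variant with separate lists for new and old flows) or its per-queue CoDel.

```python
import hashlib
from collections import deque

NUM_QUEUES = 1024  # FQ-CoDel's default number of flow queues (RFC 8290)
QUANTUM = 1514     # bytes of credit per round; assumption: one full Ethernet frame


def flow_queue_index(five_tuple: tuple, seed: bytes = b"per-boot-seed") -> int:
    """Map a flow's 5-tuple to one of NUM_QUEUES queues with a seeded hash (illustrative)."""
    digest = hashlib.sha256(seed + repr(five_tuple).encode()).digest()
    return int.from_bytes(digest[:4], "big") % NUM_QUEUES


def drr(queues: dict[int, deque], rounds: int) -> list[tuple[int, int]]:
    """Plain deficit round robin over queues of packet sizes; returns (queue, size) in send order."""
    deficit = {idx: 0 for idx in queues}
    sent = []
    for _ in range(rounds):
        for idx, q in queues.items():
            if not q:
                continue
            deficit[idx] += QUANTUM
            # Send head packets as long as the accumulated credit covers them.
            while q and q[0] <= deficit[idx]:
                size = q.popleft()
                deficit[idx] -= size
                sent.append((idx, size))
            if not q:
                deficit[idx] = 0  # an emptied queue does not keep unused credit
    return sent


bulk = ("192.0.2.10", 40000, "198.51.100.1", 443, "tcp")  # hypothetical bulk-transfer flow
print("bulk flow hashes to queue", flow_queue_index(bulk))
# Queue indices 0 and 1 are assigned by hand here to keep the example deterministic.
queues = {0: deque([1500] * 50), 1: deque([100] * 200)}  # bulk packets vs. small packets (e.g., ACKs)
sent = drr(queues, rounds=10)
for idx in queues:
    print(idx, sum(size for q, size in sent if q == idx), "bytes sent")
```

In ten rounds, the bulk flow's 1,500-byte packets and the small-packet flow each get about 15KB through (15,000 versus 15,100 bytes). The small packets therefore never wait behind a long run of large ones.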

Testing Bufferbloat

Some speed measurement websites only determine the delay when the connection is idle and no data transfer is active. To detect bufferbloat accurately, first run a ping (e.g., to google.com) while the connection is idle. You will receive a series of ping responses that typically have times in the range of 20-100ms (Listing 1).

Listing 1: Google.com Ping

    ping google.com
    PING google.com (172.217.16.206): 56 data bytes
    64 bytes from 172.217.16.206: icmp_seq=0 ttl=54 time=26.587 ms
    64 bytes from 172.217.16.206: icmp_seq=1 ttl=54 time=24.823 ms
    64 bytes from 172.217.16.206: icmp_seq=2 ttl=54 time=25.474 ms
    64 bytes from 172.217.16.206: icmp_seq=3 ttl=54 time=24.450 ms
    64 bytes from 172.217.16.206: icmp_seq=4 ttl=54 time=23.802 ms
    64 bytes from 172.217.16.206: icmp_seq=5 ttl=54 time=29.555 ms
    64 bytes from 172.217.16.206: icmp_seq=6 ttl=54 time=34.759 ms

Next, start a speed test [2]-[5] for the Internet connection and monitor the ping times while it is running. If the ping times increase dramatically during the upload or download phase, your router is probably suffering from bufferbloat.
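To make the comparison less subjective, you can summarize the ping output collected at idle and during the speed test with a short Python sketch. It assumes the Linux/macOS output format shown in Listing 1 (`time=26.587 ms`) and compares median round-trip times. The function names are illustrative, and any threshold for "dramatic" is up to you.

```python
import re
import statistics

PING_TIME = re.compile(r"time=([\d.]+) ms")


def ping_times_ms(output: str) -> list[float]:
    """Extract round-trip times from ping output lines such as '... time=26.587 ms'."""
    return [float(value) for value in PING_TIME.findall(output)]


def latency_increase_ms(idle_output: str, loaded_output: str) -> float:
    """Median RTT under load minus median RTT at idle, i.e., the delay added by queuing."""
    idle = statistics.median(ping_times_ms(idle_output))
    loaded = statistics.median(ping_times_ms(loaded_output))
    return loaded - idle
```

For example, save the output of `ping -c 30 google.com` once while the line is idle and once while the speed test runs, then pass both texts to `latency_increase_ms`. The median of Listing 1 alone is 25.474ms.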

Conclusions

Because bufferbloat misleads the TCP congestion avoidance algorithm about the effective capacity of the path, modern networks are prone to congestion collapse caused by saturated buffers, which leads to unexpectedly high packet delays. If congestion collapse occurs on a large network, only a complete shutdown and careful restart of the entire network will restore network stability. If such a breakdown occurs, contact the manufacturers of the network devices as soon as possible. Sometimes the suppliers provide appropriate patches.

Moving around the Internet is like being in a high-speed plane while constantly changing the wings, the engines, and the fuselage. Most of the cockpit instruments have also been removed and replaced by a few new instruments. The Internet has crashed several times over the past 30 years and will crash again. For this reason, you should always install the latest firmware versions and patches on your network components to steer clear of avoidable problems.