Run TensorFlow models on edge devices

On the Edge

Machine learning has changed the computing paradigm. Products today are built with artificial intelligence (AI) as a central attribute, and consumers are beginning to expect automation and human-like interactions with the devices they use. However, much of the deep learning revolution has been limited to the cloud. Recently, thanks to the growing performance of microcontrollers and embedded devices, several machine learning libraries for mobile, embedded, and Internet of Things (IoT) devices have been announced that aim to offload computation to the edge. One such toolset is TensorFlow Lite, which I use in a real-world vertical farming operation.

Although building and training deep neural networks requires powerful servers equipped with graphics processing units (GPUs) or tensor processing units (TPUs), machine learning inference requires fewer resources and can be executed on the edge. Developers can add intelligence to IoT devices that perform tasks such as anomaly detection, speech recognition, or regression (i.e., making a prediction), without depending on the cloud and, therefore, on wireless connectivity. Edge computing also scales better, because the computation cost is spread over the end devices instead of being centralized in the cloud. For some use cases, like speech recognition, AI on the edge also offers better privacy, because personal data stays on the device in your home or office.

In this article, I focus on the use of TensorFlow Lite [1] on IoT devices and, more precisely, in the application domain of vertical farming. Machine learning and artificial intelligence will help optimize vegetable yield or predict the harvesting date of plants.

The first section briefly introduces the concept of vertical farming, then introduces TensorFlow Lite and looks at how it differs from TensorFlow. Next, I present the connected vertical farm and explain why and how it uses TensorFlow Lite. The main body is a tutorial on designing and training a salad greens growth model with TensorFlow, then optimizing it with TensorFlow Lite and using it on the edge (i.e., on the device) to predict the harvesting date of the salad greens. Finally, I sum up my thoughts on TensorFlow Lite and share the insights gained from my experience.

Vertical Farming

Vertical farming is a new method of growing crops in vertically stacked layers in a fully controlled environment. It aims to minimize water use and maximize productivity by growing the stacked crops without soil, in small amounts of nutrient-rich water, inside a climate-controlled cabinet.

Thus, vertical farming could help meet growing global food demands in a sustainable way by reducing distribution chains, producing fresh greens and vegetables with lower emissions close to populations, and providing higher nutrient produce with a better taste and look.

During the last five years, many companies have begun developing vertical farming systems housed in structures such as shipping containers, buildings, tunnels, or cabinets; the last of these is the kind of system I present in this article (Figure 1).

Two categories of soilless farming techniques are used in vertical farming:

In systems that use the hydroponic nutrient film technique (NFT), the roots of the greens are submerged in a very shallow stream of water solution containing macronutrients (nitrogen, phosphorus, potassium, etc.). Alternatively, in a hydroponic ebb and flow system, an inert medium (e.g., gravel or sand) is used as a soil substitute to support the roots.

Unlike hydroponics, aeroponics does not require a liquid or solid medium in which to grow plants; instead, a liquid solution with nutrients is applied with a high-pressure jet or with misted fog, hereafter referred to as high-pressure aeroponics (HPA) and nebulization, respectively. Nebulization aeroponics is the most sustainable soilless growing technique, because it uses up to 90 percent less water than the most efficient conventional hydroponic systems and requires no replacement of growth medium.

Beyond technical and ecological considerations, the irrigation system strongly affects the growth of vegetables, which is why it is a key input to the AI model.

TensorFlow and TensorFlow Lite

TensorFlow is an end-to-end machine learning platform initially developed by the Google Brain team for internal Google use. The free and open source software library, released under the Apache License 2.0 in 2015, supports a wide range of machine learning tasks, such as the design and training of neural networks, on many platforms, including CPUs, GPUs, and TPUs. TensorFlow 2.0, released in September 2019, was used for the project described in this article.

TensorFlow provides stable Python and C APIs. Other programming languages are also supported, but without guaranteed API backward compatibility: C++, Go, Java, JavaScript, and Swift (early release). In this article, I use the Python API with Python 3.7.

The TensorFlow platform is quite complex and comes with many components, like TensorBoard to debug and study the training steps of a model, TensorFlow.js to execute a model and run inference in a web browser, and TensorFlow Lite for devices with low memory and low computation capabilities.

TensorFlow Lite provides all the building blocks to convert and optimize a TensorFlow model for mobile, embedded, and IoT devices and to execute the model on these constrained edge devices. However, TensorFlow Lite, unlike TensorFlow, cannot be used to develop and train a machine learning model.

In short, TensorFlow Lite comes with two components: the TensorFlow Lite Converter and the TensorFlow Lite Runtime. The TensorFlow Lite Converter offers the necessary API and optimizers to reduce the memory footprint of a TensorFlow model, increase execution speed, and lower computation costs.

Usually, Python data scientists perform data engineering and preprocessing tasks on lab computers with the pandas [2] or NumPy [3] libraries, before using TensorFlow to design and train a deep learning model. Note that the training phase can be offloaded to a GPU in the cloud. Then, on the lab computer, the researcher uses the TensorFlow Lite Converter, which comes with the TensorFlow distribution, to optimize and convert the trained model into a .tflite model optimized for edge devices. This .tflite model is then deployed to the edge device as a software update.

Finally, at run time, the edge device uses the TensorFlow Lite Runtime to execute the model and make predictions with live data from the vertical farm. A key point is that the TensorFlow distribution is not installed on the device, only the standalone TensorFlow Lite Runtime. Depending on the edge device platform, the TensorFlow Lite Runtime is either provided in an AAR package from JCenter for Android, a Python wheel package, or a C shared library for embedded devices.

Vertical Farming System

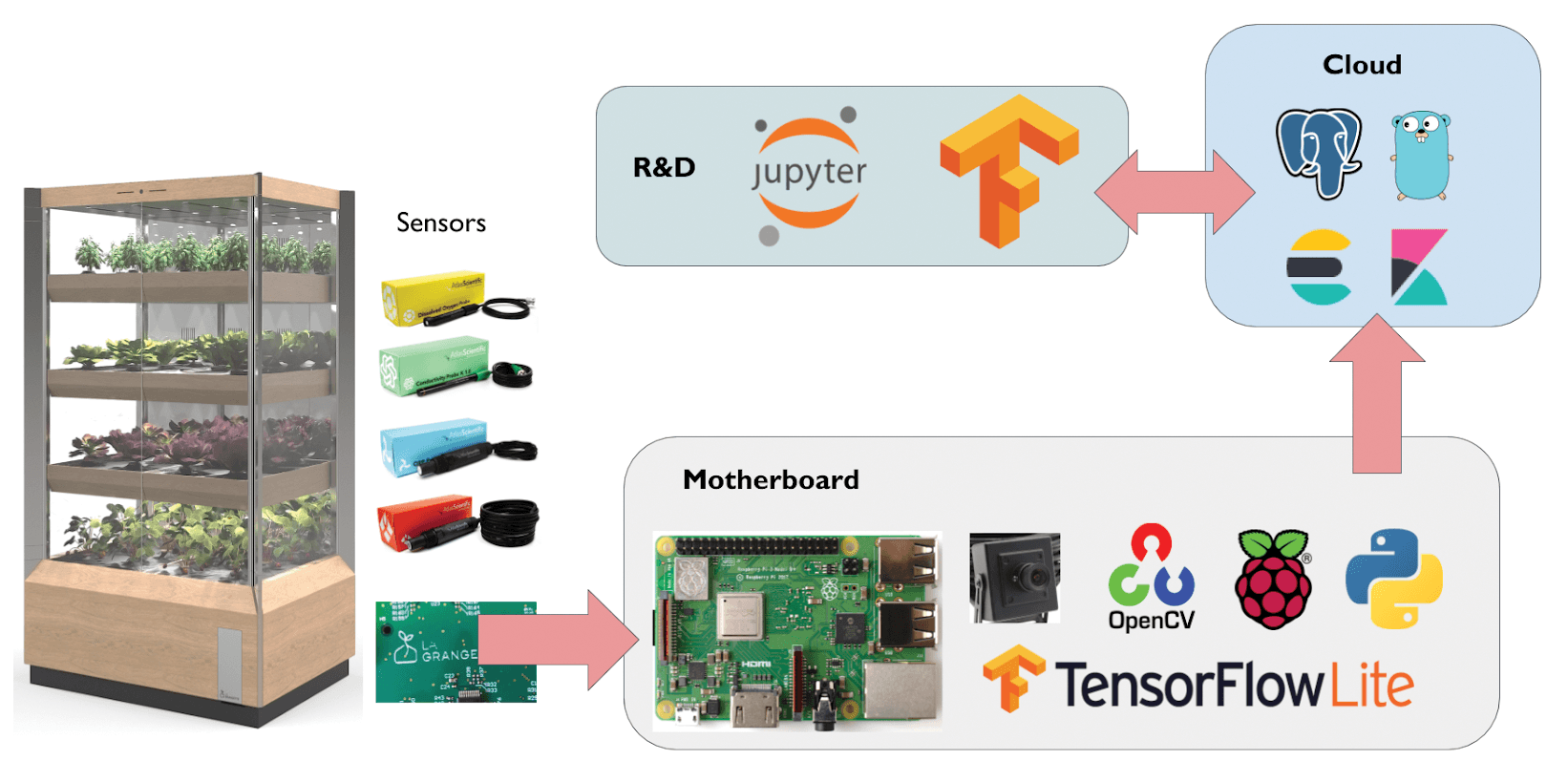

The cabinet for indoor vertical farming shown in Figure 1 has four shelves able to support 12 plants spread on a grid. The full vertical farming system is divided into four components as summarized in Figure 2:

- The cabinet with the sensors, actuators, and irrigation systems

- The motherboard, which for this project is a Raspberry Pi 3B+

- The cloud server

- The research and development (R&D) computer

The cabinet houses the following eight sensors and two cameras per layer: (1) carbon dioxide (CO2, ppm), (2) dissolved oxygen (ppm), (3) electrical conductivity (µS/cm), (4) oxidation-reduction (redox) potential (mV), (5) photosynthetic photon flux density (PPFD, µmol/sq m/sec), (6) water pH, (7) humidity (%), and (8) temperature (°C). The prototype implements the four different irrigation systems introduced earlier (one on each layer) to study their effect on plant growth and quality. Actuators help control the temperature, level of CO2, and lighting.

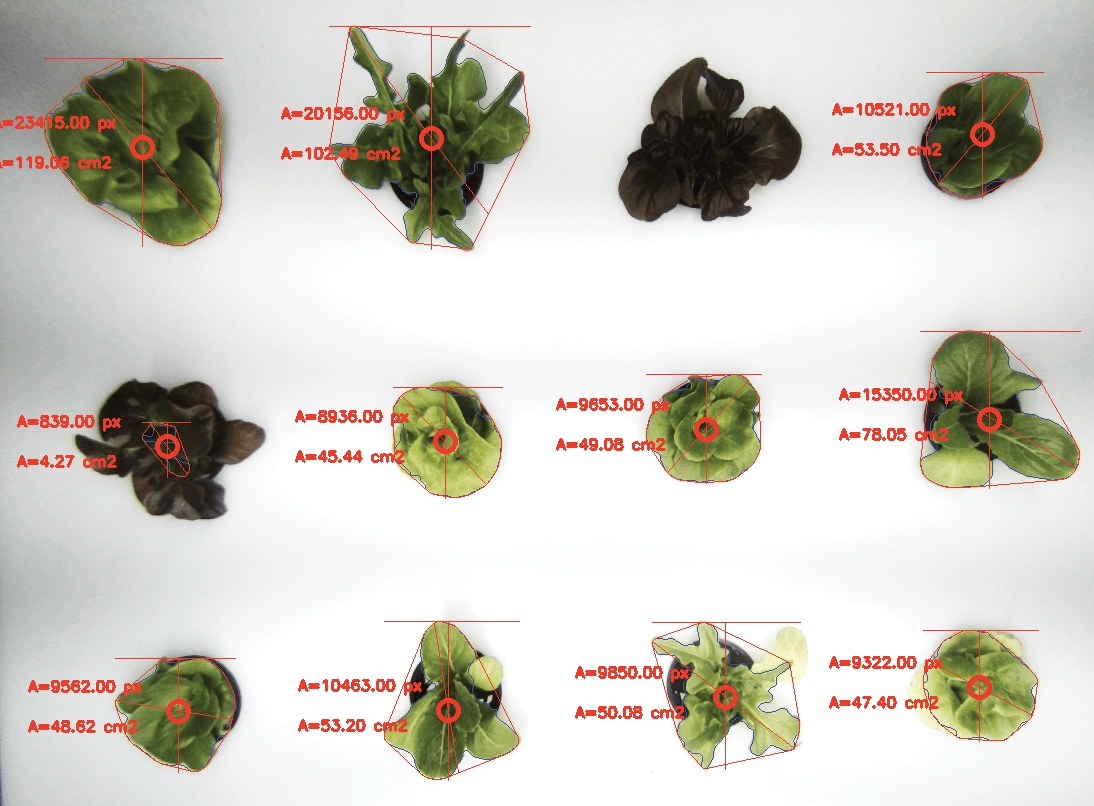

The Raspberry Pi is the brains of the system. It aggregates data from the sensors, controls the irrigation systems and actuators, and computes the plant leaf area with the help of cameras and several image processing algorithms developed with OpenCV [4] (Figure 3). At the same time, it runs the TensorFlow Lite Runtime to execute the plant growth prediction deep learning algorithm in real time. All the data is "cached" locally on the Raspberry Pi before being sent to the cloud API whenever an Internet connection is available. The Raspberry Pi software is written in Python.
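The local caching described above is essentially a store-and-forward queue. The following is a minimal sketch, assuming an SQLite file as the local buffer and a hypothetical `send` callable standing in for the upload to the cloud API; the article does not describe the real implementation, and the names `open_cache`, `cache_reading`, `flush`, and the `pending` table are illustrative. A reading is deleted locally only after the upload succeeds, so nothing is lost while the farm is offline.

```python
import json
import sqlite3
from typing import Callable


def open_cache(path: str = "readings.db") -> sqlite3.Connection:
    """Open (or create) the local buffer of sensor readings."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pending ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)"
    )
    return conn


def cache_reading(conn: sqlite3.Connection, reading: dict) -> None:
    """Store one reading locally, whether or not the Internet is reachable."""
    with conn:  # commits the transaction on success
        conn.execute("INSERT INTO pending (payload) VALUES (?)", (json.dumps(reading),))


def flush(conn: sqlite3.Connection, send: Callable[[dict], bool]) -> int:
    """Upload cached readings in insertion order; stop at the first failure.

    `send` is a hypothetical uploader that returns True once the cloud API
    has accepted the reading. Returns the number of readings uploaded.
    """
    sent = 0
    rows = conn.execute("SELECT id, payload FROM pending ORDER BY id").fetchall()
    for row_id, payload in rows:
        if not send(json.loads(payload)):
            break  # still offline: keep this and later rows for the next attempt
        with conn:
            conn.execute("DELETE FROM pending WHERE id = ?", (row_id,))
        sent += 1
    return sent
```

Calling `flush` periodically (for example, after each sensor polling cycle) drains the backlog as soon as connectivity returns, while `cache_reading` never blocks on the network.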

The cloud has three main features: an API developed in Go to collect the data from the motherboard (i.e., the Raspberry Pi); a Postgres database to store the data transmitted by the cabinet motherboard, as well as information detailing the experiments and plant features (variety, date of planting, etc.), mainly for agronomic research purposes; and finally a web interface for data visualization developed with the Elasticsearch-Logstash-Kibana (ELK) stack [5].

Lettuce Weight Regression Model

A regression problem aims to model the output of a continuous value, such as temperature or weight. In contrast, a classification problem aims to select a class from a list of classes.

To build the model, I used the tf.keras API, which TensorFlow 2.0 made its central high-level API. The goal is to predict the fresh weight of a lettuce at a given number of days after planting. To set up the R&D environment on the computer, I used Python 3.7, created a virtual environment with virtualenv [6], and installed the TensorFlow distribution with pip (Listing 1).

Listing 1: TensorFlow Installation

python3 --version
pip3 --version
virtualenv --version
virtualenv --system-site-packages -p python3 ./venv
## Activate the environment
source ./venv/bin/activate
## Install the TensorFlow distribution (pathlib ships with Python 3, so it is not installed from PyPI)
pip install --upgrade pip
pip install --upgrade tensorflow==2.0 pandas numpy
## Check the setup
python -c "import tensorflow as tf; print(tf.reduce_sum(tf.random.normal([1000, 1000])))"

Neural Network

For simplicity and easy comprehension, consider the 10 features shown in Table 1 with sample data values. Note that I am working on real-world data from sensors that could fail and need to be calibrated. To avoid aberrant results, it is therefore crucial to implement strong preprocessing techniques like sampling, filtering, and normalization before feeding the data into the neural network.
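As an illustration of the filtering step, here is a minimal sketch, assuming a series of raw readings from one sensor: values outside a plausible physical range (hypothetical bounds chosen per sensor) are discarded as probable faults, and a sliding median removes isolated spikes. The article does not specify which filters the author uses; this is one common choice, and the function names are illustrative.

```python
from statistics import median
from typing import List, Sequence


def drop_out_of_range(values: Sequence[float], low: float, high: float) -> List[float]:
    """Discard readings outside the plausible range (most likely sensor faults)."""
    return [v for v in values if low <= v <= high]


def median_filter(values: Sequence[float], window: int = 3) -> List[float]:
    """Replace each reading by the median of its neighborhood (odd window size)."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    filtered = []
    for i in range(len(values)):
        # The neighborhood is truncated at both ends of the series
        neighborhood = values[max(0, i - half): i + half + 1]
        filtered.append(median(neighborhood))
    return filtered


# Hypothetical water pH series with one implausible value and one spike
raw_ph = [6.1, 6.2, 14.9, 6.2, 9.8, 6.3, 6.2]
clean_ph = median_filter(drop_out_of_range(raw_ph, low=0.0, high=14.0))
```

A median is preferred over a moving average here because a single faulty spike does not drag the neighboring values with it.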

Table 1: Sample Dataset

| Feature | Description | Sample Value |
|---|---|---|
| Weight | Weight of lettuce head | 135.0 g |
| DaP | Days after planting | 10 days |
| CumCO2 | Cumulative sum of CO2 | 4 ppm |
| CumLight | Cumulative sum of light | 1420.0 µmol/sq m/sec |
| CumTemp | Cumulative sum of temperature | 8623.0 °C |
| LeafArea | Leaf area | 156 sq cm |
| Irrigation system (one-hot encoding) | | |
| NFT | Hydroponic nutrient film | 0.0 |
| HPA | High-pressure aeroponics | 1.0 |
| Ebb&Flood | Hydroponic ebb and flood | 0.0 |
| Nebu | Nebulized aeroponics | 0.0 |
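The cumulative and one-hot features in Table 1 can be derived from the raw logs. Below is a minimal sketch, assuming one averaged reading per day (a hypothetical `DailyReading` record), days after planting counted from 1, and the column names of Table 1; how the author actually aggregates the sensor data (sampling rate, units of the sums) is not described in the article.

```python
from dataclasses import dataclass
from itertools import accumulate
from typing import Dict, List

IRRIGATION_SYSTEMS = ["NFT", "HPA", "Ebb&Flood", "Nebu"]


@dataclass
class DailyReading:  # hypothetical record: one averaged sample per day
    co2: float          # ppm
    light: float        # µmol/sq m/sec
    temperature: float  # °C
    leaf_area: float    # sq cm, from the camera pipeline


def one_hot(system: str) -> List[float]:
    """Encode the irrigation system as four mutually exclusive 0/1 columns."""
    if system not in IRRIGATION_SYSTEMS:
        raise ValueError(f"unknown irrigation system: {system}")
    return [1.0 if s == system else 0.0 for s in IRRIGATION_SYSTEMS]


def build_features(days: List[DailyReading], system: str) -> List[Dict[str, float]]:
    """Return one row of nine input features per day after planting."""
    cum_co2 = list(accumulate(d.co2 for d in days))
    cum_light = list(accumulate(d.light for d in days))
    cum_temp = list(accumulate(d.temperature for d in days))
    encoded = dict(zip(IRRIGATION_SYSTEMS, one_hot(system)))
    rows = []
    for i, day in enumerate(days):
        row = {
            "DaP": float(i + 1),
            "CumCO2": cum_co2[i],
            "CumLight": cum_light[i],
            "CumTemp": cum_temp[i],
            "LeafArea": day.leaf_area,
        }
        row.update(encoded)  # the same irrigation system for the whole layer
        rows.append(row)
    return rows
```

One-hot encoding keeps the four irrigation systems on an equal footing: encoding them as 0, 1, 2, 3 would imply an ordering that does not exist.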

The dataset is split into two subsets, one for training and one for testing. Because the value to be predicted is the weight, the remaining nine features are separated out as the inputs of the neural network, and the weight is its output.
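A minimal sketch of this split, assuming the rows are dictionaries keyed by the Table 1 feature names; the 80/20 ratio, the fixed seed, and the helper names are assumptions, not details from the article. The normalization statistics are computed on the training set only and reused for the test set (and later on the device), so that no test information leaks into training.

```python
import random
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Row = Dict[str, float]


def split_rows(rows: List[Row], train_fraction: float = 0.8, seed: int = 0) -> Tuple[List[Row], List[Row]]:
    """Shuffle a copy of the rows and cut it into training and test sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def separate_target(rows: List[Row], target: str = "Weight") -> Tuple[List[Row], List[float]]:
    """Split each row into its input features and the value to predict."""
    features = [{k: v for k, v in r.items() if k != target} for r in rows]
    labels = [r[target] for r in rows]
    return features, labels


def fit_scaler(train_features: List[Row]) -> Dict[str, Tuple[float, float]]:
    """Per-feature mean and standard deviation, from the training set only."""
    stats = {}
    for key in train_features[0]:
        column = [r[key] for r in train_features]
        std = pstdev(column)
        stats[key] = (mean(column), std if std > 0 else 1.0)  # guard constant columns
    return stats


def standardize(rows: List[Row], stats: Dict[str, Tuple[float, float]]) -> List[Row]:
    """Apply z-score normalization: (x - mean) / std."""
    return [{k: (r[k] - m) / s for k, (m, s) in stats.items()} for r in rows]
```

The same `stats` dictionary would have to be shipped to the Raspberry Pi with the model, because live sensor data must be scaled exactly like the training data.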

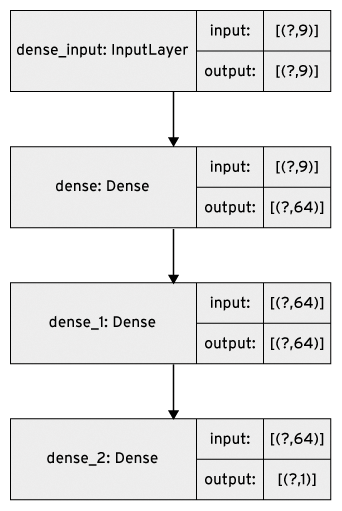

To build the model, I use a simple Sequential model (Listing 2) with two densely connected hidden layers and an output layer that returns a single continuous value: the lettuce fresh weight. I chose only two hidden layers because there is not much training data and there are only nine features; in such cases, a small network with few hidden layers helps avoid overfitting. I empirically chose the widely used rectified linear unit (ReLU) activation function and the mean squared error (MSE) loss function, a standard choice for regression problems (classification problems use different loss functions).

Listing 2: Sequential Model

import tensorflow as tf
[...]

model = tf.keras.Sequential([
    tf.keras.layers.Dense(64, activation=tf.nn.relu, input_shape=[len(train_dataset.keys())]),
    tf.keras.layers.Dense(64, activation=tf.nn.relu),
    tf.keras.layers.Dense(1)
])

optimizer = tf.keras.optimizers.RMSprop(0.001)
model.compile(loss='mean_squared_error',
              optimizer=optimizer,
              metrics=['mean_absolute_error', 'mean_squared_error'])

The model is shown in Figure 4. Note that the neural network has nine inputs, one per input feature. Now, the model can be trained on the training dataset:

model.fit(train_features, train_weights, epochs=100, validation_split=0.2, verbose=0)
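The size figures that follow can be sanity-checked by counting the trainable parameters of Listing 2: each Dense layer holds (inputs × units) weights plus one bias per unit. The helper functions below are illustrative and do not call Keras; for the real model, `model.summary()` reports the per-layer counts.

```python
from typing import List


def dense_params(inputs: int, units: int) -> int:
    """Weights (inputs x units) plus one bias per unit."""
    return inputs * units + units


def count_params(n_features: int, layer_units: List[int]) -> int:
    """Total trainable parameters of a stack of Dense layers."""
    total, width = 0, n_features
    for units in layer_units:
        total += dense_params(width, units)
        width = units  # the next layer sees this layer's outputs
    return total


n_params = count_params(9, [64, 64, 1])  # 640 + 4160 + 65 = 4865
float32_bytes = n_params * 4             # 19460 bytes of raw float32 weights
```

At 4 bytes per float32 value, the weights alone take 19460 bytes, which suggests that the float TensorFlow Lite model reported below (20912 bytes) consists mostly of the weights themselves.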

After training, when I save and export the trained model (Listing 3), I see that it is 86077 bytes.

Listing 3: Exporting the Model

import pathlib
import tensorflow as tf

model_export_dir = "./models/lg_weight/"
tf.saved_model.save(model, model_export_dir)
root_directory = pathlib.Path(model_export_dir)
# Sum the sizes of the serialized graph files (*.pb) in the SavedModel directory
tf_model_size = sum(f.stat().st_size for f in root_directory.glob('**/*.pb') if f.is_file())
print("TF model is {} bytes".format(tf_model_size))

Convert and Optimize

Now comes the important part of this tutorial: converting the trained model to the TensorFlow Lite format with the converter's default settings (no quantization yet) and saving it:

converter = tf.lite.TFLiteConverter.from_keras_model(model)
tflite_model = converter.convert()
# convert() returns the model as a serialized FlatBuffer (bytes)
with open('./models/model.tflite', 'wb') as f:
    f.write(tflite_model)

Checking the model size, I see that it is now 20912 bytes, which is four times smaller than the original TensorFlow model:

import os

model_size = os.path.getsize('./models/model.tflite')
print("TFLite model is {} bytes".format(model_size))

I can further reduce the model size with the optimizers offered by TensorFlow Lite Converter. The simplest form of post-training quantization quantizes only the weights from floating-point to 8 bits of precision, which is also called "hybrid" quantization:

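# Post-training "hybrid" quantization: weights are stored with 8 bits of precision
# (in later TensorFlow releases, OPTIMIZE_FOR_SIZE is deprecated in favor of tf.lite.Optimize.DEFAULT)
converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]
tflite_quant_model = converter.convert()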

The model is now only 8672 bytes, which is 10 times smaller than the conventional TensorFlow model.
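To make the idea behind weight quantization concrete, here is a minimal sketch of symmetric per-tensor 8-bit quantization in plain Python: each float weight is mapped to an integer in [-127, 127] using one float scale per tensor, and mapped back to an approximate float when needed. This illustrates the principle only; it is not the exact scheme the TensorFlow Lite Converter implements, and the function names are illustrative.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] with one scale per tensor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights for floating-point computation."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each value now needs 1 byte instead of 4; the rounding error is at most scale / 2
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Storing one byte instead of four per weight is the source of the size reduction; the scale factors, the graph description, and tensors that stay in floating point (such as biases) account for the rest of the file.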

On the Edge

The previous section introduced the basics of solving a regression problem applied to lettuce weight prediction and showed how to convert a trained model to the TensorFlow Lite format and optimize it. Now I am ready to deploy the trained, optimized model (model.tflite) to the brain (i.e., the Raspberry Pi) of the vertical farm.

Remember that on IoT devices, only the standalone and lightweight TensorFlow Lite Runtime needs to be installed. As on the R&D computer, I used Python 3.7 and pip to install the TensorFlow Lite Runtime wheel package. In this case, the Raspberry Pi is running Raspbian Buster, so I install the Python wheel as follows:

pip3 install https://dl.google.com/coral/python/tflite_runtime-2.1.0-cp37-cp37m-linux_armv7l.whl

Then, executing the model and making a prediction with the Python API is quite easy. Because I am working with real-world data from sensors, it is crucial to implement strong preprocessing techniques to sample, filter, and normalize the data fed into the neural network.

The first time through, I need to allocate memory for the tensors:

import tflite_runtime.interpreter as tflite

# tflite_model_file: path to the deployed model.tflite
interpreter = tflite.Interpreter(model_path=tflite_model_file)
interpreter.allocate_tensors()

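Next, I feed the input tensor with the input features, invoke the interpreter, and read the prediction. In Listing 4, input_tensor contains the preprocessed input features (i.e., CumLight, CumTemp, etc.) as a float32 array of shape (1, 9). Note that tensor_index is not the number of features but the index of a tensor in the interpreter's tensor list, so the input and output indices are best looked up with get_input_details() and get_output_details() rather than hard-coded.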

Listing 4: Weight Prediction

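# Look up where the model expects its input and writes its output
input_index = interpreter.get_input_details()[0]['index']
output_index = interpreter.get_output_details()[0]['index']
# input_tensor: float32 array of shape (1, 9) with the preprocessed features
interpreter.set_tensor(input_index, input_tensor)
# Run inference
interpreter.invoke()
# The output contains a single value: the inferred lettuce weight
weight_inferred = interpreter.get_tensor(output_index)[0][0]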
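Putting the pieces of Listing 4 together, the following sketch wraps normalization and inference in one function. It assumes that the per-feature means and standard deviations from training were exported alongside the model (the `mean` and `std` arrays are hypothetical; the article does not say how they are stored) and that the model has one float32 input of shape (1, 9) and one output of shape (1, 1).

```python
import numpy as np


def predict_weight(interpreter, features, mean, std):
    """Normalize one feature vector and return the inferred weight in grams.

    `interpreter` is a tflite_runtime Interpreter on which allocate_tensors()
    has already been called; `features` holds the nine inputs in training order.
    """
    # Scale exactly as during training, then shape as a batch of one sample
    x = (np.asarray(features, dtype=np.float32) - mean) / std
    x = x.reshape(1, -1).astype(np.float32)
    input_details = interpreter.get_input_details()[0]
    output_details = interpreter.get_output_details()[0]
    interpreter.set_tensor(input_details["index"], x)
    interpreter.invoke()
    return float(interpreter.get_tensor(output_details["index"])[0][0])
```

Because the interpreter is passed in rather than created inside the function, the tensors are allocated only once at startup, and the function can be exercised on the R&D computer with a stand-in object.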

Results

The time has come to evaluate the accuracy of the model and see how well it generalizes with the test set, which I did not use when training the model. The results will tell me how good I can expect the model prediction to be when I use it in the real world.

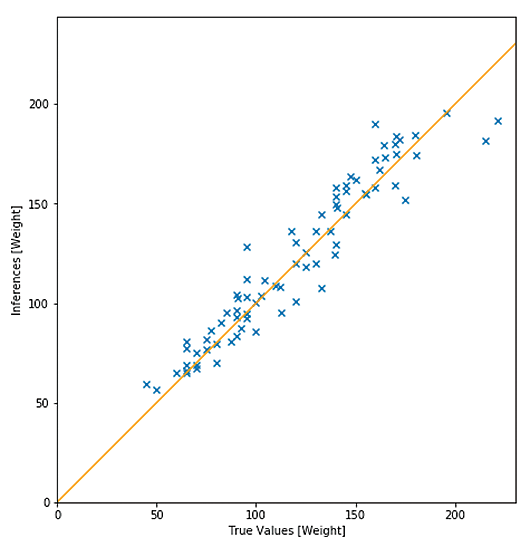

First, I can evaluate the accuracy at a glance with a graph (Figure 5). The blue crosses show the inferred weight values as a function of the true values for the test dataset. The error can be seen as the distance between the blue crosses and the orange line.

A metric often used to evaluate regression models is the mean absolute percentage error (MAPE), which averages the absolute differences between predicted and observed values, each expressed as a percentage of the observed value. For the test dataset, MAPE = 9.44%, which is definitely precise enough for a vertical farmer.
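For reference, MAPE = (100 / n) × Σ |y_i - ŷ_i| / |y_i|, where y_i are the n observed weights and ŷ_i the inferred ones. A minimal sketch in plain Python (the function name is illustrative):

```python
from typing import Sequence


def mape(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent.

    Undefined when an observed value is zero, so such input is rejected.
    """
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    if any(y == 0 for y in observed):
        raise ValueError("MAPE is undefined for zero observed values")
    errors = [abs(y - y_hat) / abs(y) for y, y_hat in zip(observed, predicted)]
    return 100.0 * sum(errors) / len(errors)
```

Because each error is relative to its own observation, a 10 g miss on a small seedling weighs more than the same miss on a fully grown head, which suits a farmer who cares about proportional accuracy.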

Execution Time

For evaluation purposes, I installed the full TensorFlow distribution on a test Raspberry Pi and compared the inference execution time between the original TensorFlow model and the optimized .tflite model with the TensorFlow Lite Runtime. The results showed that inference with TensorFlow Lite ran in the range of a few milliseconds and was three to four times faster than TensorFlow.
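A minimal sketch of how such a comparison can be measured, assuming each model is wrapped in a zero-argument callable (for example, `interpreter.invoke` for the TensorFlow Lite model, or a lambda calling `model.predict` on a fixed, hypothetical `batch` for the full TensorFlow model). Warm-up runs are discarded so that one-time costs such as memory allocation do not skew the result, and the median is reported because it is robust to scheduling hiccups on a busy Raspberry Pi. The article does not describe its exact benchmarking procedure.

```python
import time
from statistics import median
from typing import Callable


def median_latency_ms(run_inference: Callable[[], object], warmup: int = 10, repeats: int = 100) -> float:
    """Median wall-clock time of one call, in milliseconds."""
    for _ in range(warmup):
        run_inference()  # discarded: first calls include one-time setup costs
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_inference()
        timings.append((time.perf_counter() - start) * 1000.0)
    return median(timings)
```

Usage would look like `speedup = median_latency_ms(lambda: model.predict(batch)) / median_latency_ms(interpreter.invoke)`, run on the same Raspberry Pi so that both measurements share the same hardware and load.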

Conclusion

TensorFlow Lite allows the accurate prediction of the harvesting date of lettuce growing in a vertical farm, without the need for a powerful server and with only an intermittent connection to the cloud. By reducing the computation cost and the deep neural network model size, the inference can be run on the edge by a Raspberry Pi with the TensorFlow Lite Runtime. This task has a small footprint and lets the Raspberry Pi manage all the other critical tasks of a working vertical farm. Furthermore, TensorFlow Lite can be used on IoT devices as small as ARM Cortex-M microcontrollers.

TensorFlow Lite has several limitations, though. Only a subset of TensorFlow operators is supported, which constrains the design of the model. For instance, recurrent neural networks are not fully supported. However, a Google team seems to be working on closing this gap for all models that TensorFlow currently offers. Another weakness is the absence of support for reinforcement learning, which requires more computation capabilities because the incremental training tasks are performed on the edge. This challenge can nonetheless be overcome with an Edge TPU device, such as the Google Coral [7] Dev Board or the Coral USB Accelerator, which can be plugged into a Raspberry Pi.