The TensorFlow AI framework

Machine Schooling

Even people who work in IT or around AI often know little more about TensorFlow than that it has something to do with artificial intelligence (AI). In fact, TensorFlow is one of the most powerful AI frameworks available, and unlike some abstract projects from university research labs, it is ready for practical use today. In this article, I introduce the topic, look at the features TensorFlow offers, and discuss the contexts in which it can sensibly be used.

Basics

Wikipedia describes TensorFlow as "a free and open-source software library for dataflow and differentiable programming across a range of tasks" [1]. If you are not a math genius or a computer scientist, this description probably won't tell you much about what TensorFlow actually does.

It makes more sense to begin by looking at TensorFlow from a different angle: What does it do for developers, and which problems can it help solve? Answering these questions requires a few more technical terms: What does machine learning mean in a technical sense? What is deep learning, and how does a neural network work? These concepts come up constantly in the TensorFlow context, and TensorFlow is hard to understand without them. If you need an overview before proceeding, please see the "Neural Networks" box.

Essential Preparation

Building an environment that can construct neural networks and perform the calculations they require efficiently and reliably is not a trivial task. Moreover, it would make little sense for every research institution to build its own AI implementation from scratch, because many AI approaches face similar problems and use comparable methods to solve them.

In IT, the same problems crop up again and again, even in very different projects. Solutions to them are often provided by software libraries that implement the required functions so that they can be integrated into other environments. People who use such libraries in their own programs save themselves a huge amount of overhead and achieve a higher degree of standardization, which in turn reduces the cost of developing and maintaining software.

Against this background, the idea behind TensorFlow is easy to grasp: TensorFlow sees itself as an AI library that researchers and developers can feed with data to develop AI applications and neural networks. This saves them the effort of building a custom AI environment for each individual use case.

Data Streams as the Basis

In practical terms, TensorFlow uses a graph model to express mathematical calculations: Developers build graphs through which streams of data flow. Each node in a TensorFlow graph is a mathematical operation that transforms the incoming data in a specific way before passing it on through the network. The edges between the nodes carry multidimensional arrays, which are referred to as "tensors."
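To make the graph idea concrete, here is a minimal sketch in plain Python rather than TensorFlow itself, so that it runs without any installation. The `Node` class and the `constant`, `matmul`, and `add` helpers are hypothetical stand-ins for the real operations TensorFlow provides (such as `tf.matmul`); the sketch only shows that a graph is described first and evaluated afterward, with tensors (here, nested lists) flowing along the edges.

```python
# Minimal sketch in plain Python (not TensorFlow's API): a dataflow graph in
# which every node is an operation and the values flowing along the edges are
# tensors, represented here as nested lists (2-D matrices).


class Node:
    """One operation in the graph; `inputs` are the upstream nodes."""

    def __init__(self, op, *inputs):
        self.op = op
        self.inputs = inputs

    def evaluate(self):
        # Evaluate all upstream nodes first, then apply this node's operation.
        values = [node.evaluate() for node in self.inputs]
        return self.op(*values)


def constant(value):
    """Source node without inputs that always emits the same tensor."""
    return Node(lambda: value)


def matmul(a, b):
    """Matrix product of two 2-D tensors."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def add(a, b):
    """Element-wise sum of two 2-D tensors of the same shape."""
    return [[x + y for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


# Step 1: describe the graph for y = x * W + b (nothing is computed yet).
x = constant([[1.0, 2.0]])                 # 1x2 input tensor
W = constant([[0.5, -1.0], [0.25, 1.0]])   # 2x2 weight tensor
b = constant([[0.1, 0.1]])                 # 1x2 bias tensor
y = Node(add, Node(matmul, x, W), b)

# Step 2: run the graph; the tensors flow from the constants to y.
print(y.evaluate())
```

Separating the description of the graph from its execution is what allows an engine to optimize and schedule the work before any numbers are computed.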

The real power of a neural network lies in training: It automatically adjusts its internal parameters, the weights (which are themselves tensors), so that the data flowing through the graph produces a result ever closer to a specific, predetermined goal. Tensors flowing through a graph: That is exactly how TensorFlow got its name.
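What "adjusting" means can be sketched in a few lines of plain Python, assuming the simplest possible model: a single weight `w` that should learn the relationship y = 3x from three example pairs. Each step computes the gradient of the mean squared error and moves `w` a little against it. TensorFlow derives such gradients automatically (for example, with `tf.GradientTape`); here the derivative is written out by hand, and all names and values are illustrative assumptions.

```python
# Minimal sketch in plain Python (not TensorFlow): "training" nudges a weight
# step by step so that the model's output approaches a predetermined goal.
# Assumed toy task: learn y = 3x from three (input, target) example pairs.

samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]


def train(samples, learning_rate=0.05, steps=200):
    """Fit the one-weight model prediction = w * x by gradient descent."""
    w = 0.0  # start with an uninformed weight
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w:
        # d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x)
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        w -= learning_rate * grad  # move w a small step against the gradient
    return w


w = train(samples)
print(round(w, 4))  # prints 3.0
```

A real network repeats exactly this kind of update for millions of weights at once, which is why a framework that computes the gradients for you saves so much work.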

Ultimately, TensorFlow's great strength is that it hides some of the complexity of AI from the developer. If you have a concrete problem to solve, you do not have to develop a machine learning algorithm yourself and deal with all of its intricacies. Instead, you can use TensorFlow's ready-made functions and combine them with your own input data, which TensorFlow then processes. TensorFlow is versatile enough that, in many cases, you only have to describe the task at a fairly high level.

Second Attempt

TensorFlow comes from Google and is therefore backed by one of the world's most powerful corporations. However, it is not Google's first foray into the world of artificial intelligence: DistBelief, which Google Brain (Google's deep learning research team) created in 2011, was a purely proprietary platform and the company's first attempt at a deep learning framework. Although DistBelief was used widely within Google, it was never intended for external use, let alone public release. Google then hired a number of scientists and programmers who worked with AI and neural networks on a daily basis, and they extensively revamped the old DistBelief code.

Google published the result of those efforts as open source under the name TensorFlow in November 2015, and version 1.0 followed in February 2017. TensorFlow enjoyed great popularity from the start, and many of today's AI applications rely on it. Although Google can be accused of disregarding its "don't be evil" slogan in some areas, the company has undoubtedly done the world a service by publishing TensorFlow.

Written in Python

Developers can write their task descriptions in Python, which has helped TensorFlow spread widely. Because Python is only a moderately complex scripting language, describing a neural network in it is comparatively straightforward: The user essentially writes down the tasks, and under the hood, a C++-based engine processes them. The tensors and nodes are nevertheless Python objects that can be used as in any other Python application.

Python is just one way into TensorFlow; if you prefer another language, you have a wide choice. Because TensorFlow is ultimately a framework with a defined API, different front ends can be written for it with relative ease. Developers can choose between Python, C, C++, Go, Java, JavaScript, and Swift, and third parties provide further bindings (e.g., for C# and R). The results TensorFlow produces do not depend on the front-end language used.

Wide Hardware Base

As you know, not every type of processor is suitable for every type of workload. This became obvious recently when powerful graphics cards with Nvidia chips were unavailable for months or available only at absurd prices, because cryptocurrency miners were literally buying up these boards by the truckload. The relatively banal reason is that a GPU performs the hash calculations involved in mining far faster than a regular CPU, because GPUs are designed for massively parallel workloads of exactly this kind. For other workloads, though, a regular x86_64 CPU clearly comes out ahead.

TensorFlow again scores points through abstraction, this time in the way it handles different processor types: With the appropriate instructions, you can run a given workload on a specific target processor, such as a CPU or a GPU. TensorFlow can also make use of environments with multiple processors, so you do not have to deal with the peculiarities of individual chips; you simply write instructions, and TensorFlow takes care of executing them.

Support for Mobile Devices

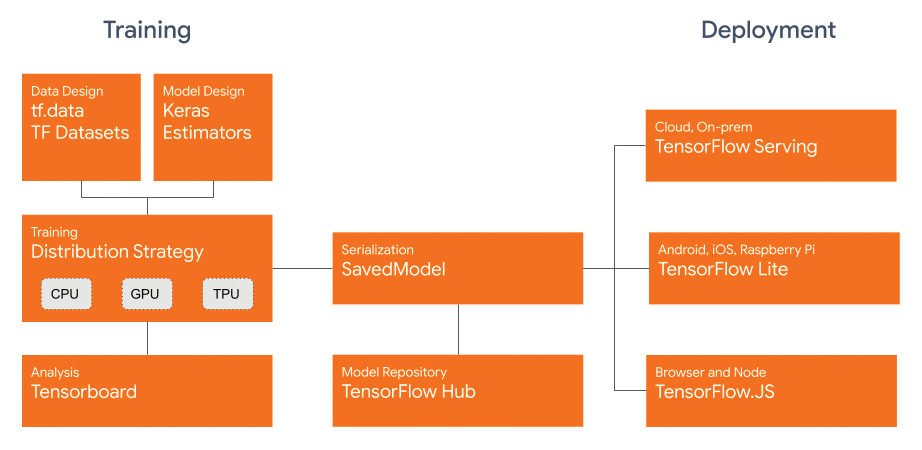

TensorFlow's developers have deliberately not restricted the framework to large computing environments. Mobile and embedded systems are explicitly among its intended targets, and thanks to its JavaScript bindings, TensorFlow can even run in web browsers.

Especially for mobile devices, the developers have come up with TensorFlow Lite (see the associated article in this issue). This lean version can execute trained models, but it does not train or extend the neural network itself. This design reflects the fact that training and inference are logically distinct steps: Once a model has been trained, it can process new input as an independent unit within the AI framework. The learning part is a separate step that might not even be necessary on the device. On mobile hardware, TensorFlow Lite simply does not have the resources needed for training, which is why it is limited to executing models.
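The split between training and inference can be illustrated with a deliberately tiny, hypothetical example in plain Python. A least-squares fit of a straight line stands in for training a neural network, and a JSON string stands in for the exported model file (TensorFlow Lite actually uses its own `.tflite` format). The `predict` function needs nothing but the stored parameters, which is the role a lean runtime plays on a phone; all function names here are illustrative.

```python
import json


def train_linear_model(samples):
    """'Training' step (hypothetical stand-in): least-squares fit of w * x + b."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    var = sum((x - mean_x) ** 2 for x, _ in samples)
    w = cov / var
    return {"w": w, "b": mean_y - w * mean_x}


def export_model(params):
    # Serialize only the learned parameters; no training code is shipped.
    return json.dumps(params)


def predict(exported_model, x):
    # Inference only: load the frozen parameters and apply them to new input.
    params = json.loads(exported_model)
    return params["w"] * x + params["b"]


# Training runs once on a well-equipped machine ...
model_file = export_model(train_linear_model([(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]))
# ... while the device only ever calls predict().
print(predict(model_file, 4.0))  # prints 9.0
```

Because the device side never touches `train_linear_model`, it needs neither the training data nor the compute that training requires.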

Extensions with TensorFlow Hub

Google is known for its commitment to standardization, even if it would prefer to make its own standards the worldwide norm. In this spirit, the TensorFlow developers created TensorFlow Hub for developers of machine learning applications: an online directory of reusable machine learning modules to which any developer can contribute by uploading their own work.

Google anticipates several advantages: First, individual developers can get by with smaller datasets if they find optimized, pre-trained modules and matching data on TensorFlow Hub. Second, a common directory for such modules helps establish standards: If TensorFlow Hub offers a module that performs a certain task well and reliably, developers can fall back on it instead of reinventing the wheel, which in turn reduces the risk of errors. Finally, newcomers to machine learning will find it easier to get started with code from the Hub, which speeds up learning.

Keras for Even Faster Entry

Keras, another machine learning library, is closely associated with TensorFlow. It, too, was written by a Google employee, François Chollet, who published the first version in 2015.

Originally, Keras and TensorFlow had nothing to do with each other. Although Keras is also a library for deep learning, it differs from TensorFlow in one striking respect: Keras does not implement an engine of its own; instead, it sees itself primarily as a front end for machine learning engines such as TensorFlow. Since version 1.4, TensorFlow has supported the Keras API, which continues to be developed independently of TensorFlow (Figure 1). A merger was explicitly ruled out, not least because Keras would have had to sacrifice some of its functionality.

Combined with TensorFlow, Keras offers very useful additional functions, and the library is uncompromisingly designed for simplicity and usability. A look at TensorFlow Hub shows that the idea has caught on: A fair share of the samples and templates available there require Keras as well as TensorFlow. Even users with little or no experience in AI and deep learning quickly achieve initial success with Keras, which makes the library ideally suited for education.
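The division of labor between a front end like Keras and an engine like TensorFlow can be sketched as follows. The `Sequential` and `Dense` classes here are hypothetical, simplified stand-ins that only borrow their names from Keras (the real `tf.keras.Sequential` and `tf.keras.layers.Dense` work differently and learn their weights rather than receiving them); `engine_run` plays the part of the engine that does the actual arithmetic. The weights are made up for illustration.

```python
# Minimal sketch in plain Python (hypothetical classes, not the Keras API):
# the front end only *describes* a stack of layers, while a separate engine
# function performs the calculations.


class Dense:
    """Description of a fully connected layer with fixed example weights."""

    def __init__(self, weights, bias, activation=None):
        self.weights = weights        # one row of weights per output unit
        self.bias = bias              # one bias value per output unit
        self.activation = activation  # optional function applied per unit


class Sequential:
    """Front end: an ordered list of layer descriptions, nothing more."""

    def __init__(self, layers):
        self.layers = layers


def relu(v):
    """Rectified linear unit: negative values become zero."""
    return max(0.0, v)


def engine_run(model, inputs):
    """Engine: evaluate each layer in turn on a list of input values."""
    values = inputs
    for layer in model.layers:
        outputs = []
        for row, b in zip(layer.weights, layer.bias):
            z = sum(w * v for w, v in zip(row, values)) + b
            outputs.append(layer.activation(z) if layer.activation else z)
        values = outputs
    return values


model = Sequential([
    Dense(weights=[[1.0, -1.0], [0.5, 0.5]], bias=[0.0, 0.0], activation=relu),
    Dense(weights=[[2.0, 1.0]], bias=[-1.0]),
])
print(engine_run(model, [3.0, 1.0]))  # prints [5.0]
```

Because the model is only a description, the same `Sequential` object could in principle be handed to a different engine, which is the idea behind Keras acting as a front end.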

Working with Data Sources

Whatever your dealings with neural networks, deep learning, and artificial intelligence may be, you should always remember one thing: Any machine learning engine is only as good as the data on which it trains.

Remember, for example, Microsoft's chatbot Tay, which the company launched on Twitter in 2016. The noble idea was that Tay would learn to communicate like a human from the training material it gathered in conversations with users. The result was devastating, probably because trolls deliberately targeted the service: In just under 16 hours, Tay mutated into a bully account that openly spread racist theses and neo-Nazi views.

If you are just getting started with TensorFlow, there are several ways to sidestep such problems for the time being. On TensorFlow Hub, you can find various pre-trained networks that result from earlier TensorFlow runs on large, typically generic datasets; they form a good basis for further training.

Additionally, several projects offer prepared datasets for TensorFlow for a variety of potential objectives. For example, if you want to try out automatic image recognition in the context of neural networks, the dataset by the Aerial Cactus Identification project is a good example. This dataset is exactly what the name suggests: a suitable set of data for training a neural network to recognize cacti automatically in photos (Figure 2).

![Cactus or not a cactus? From images like this, deep learning networks learn how to identify cacti in images [2]. © Kaggle](images/b02_tensorflow_2.png)

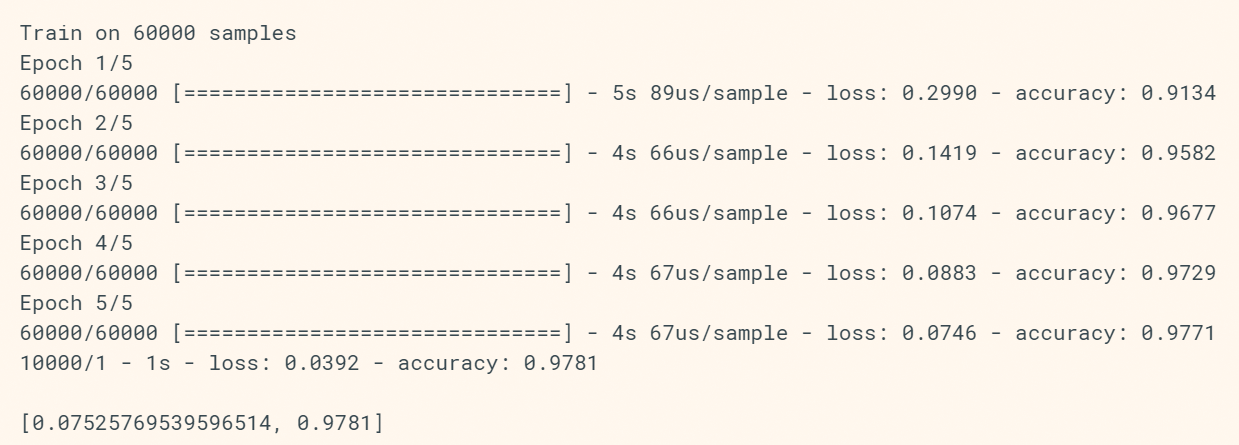

Another impressive example of a dataset is the MNIST database (Figure 3), which contains 70,000 handwritten digits (60,000 for training and 10,000 for testing), making it a good basis for neural networks that need to learn to read handwriting. Once again, the potential of neural networks is evident: Anyone who has ever transcribed old documents written in German or English blackletter by hand knows how laborious this task can be. A neural network trained on specific scripts could potentially do this better and, above all, much faster (Figure 4).
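The principle behind training on handwriting samples can be shown on a much smaller scale. The sketch below, in plain Python, trains a single-layer perceptron (a much simpler technique than the deep networks usually trained on MNIST) on invented 3x3 bitmaps of a "1" and a "0"; real MNIST images are 28x28-pixel grayscale digits. All glyphs, names, and parameters are hypothetical.

```python
# Minimal sketch in plain Python with made-up data: a single-layer perceptron
# learns to tell two tiny 3x3 "handwritten" glyphs apart (1 = "one", 0 = "zero").

ONE = [0, 1, 0,
       0, 1, 0,
       0, 1, 0]
SLANTED_ONE = [0, 1, 0,
               0, 1, 0,
               1, 1, 0]  # a sloppier "1"
ZERO = [1, 1, 1,
        1, 0, 1,
        1, 1, 1]

training_data = [(ONE, 1), (SLANTED_ONE, 1), (ZERO, 0)]


def predict(weights, bias, pixels):
    """Weighted sum of the pixels plus bias; positive means 'one'."""
    activation = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(data, epochs=20, learning_rate=0.1):
    weights = [0.0] * 9
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in data:
            error = label - predict(weights, bias, pixels)
            # Perceptron rule: shift the weights toward misclassified examples.
            weights = [w + learning_rate * error * p
                       for w, p in zip(weights, pixels)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train_perceptron(training_data)
print([predict(weights, bias, pixels) for pixels, _ in training_data])  # prints [1, 1, 0]
```

After training, the perceptron also labels an unseen "1" that leans the other way, `[0, 1, 0, 0, 1, 0, 0, 1, 1]`, as a one, which hints at why many varied samples let real networks cope with different handwriting styles.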

![The MNIST set [3] is example input data on which an AI environment can learn automatically how to interpret handwriting. © MNIST](images/b03_tensorflow_3.png)

The same applies to other tasks. For example, if you want to recognize certain shapes in a series of photos, you can either examine the pictures yourself or let an AI setup do it. Against this background, the often somewhat strange-looking example data circulating in the TensorFlow environment makes sense (e.g., a database with photos of toys in front of an arbitrary backdrop; Figure 5). A quick web search brings to light other datasets on which TensorFlow neural networks can be trained.

![Toys against an arbitrary background might look confusing, but such images provide a way to teach neural networks to recognize toys [4]. © NORB](images/b05_tensorflow_4.png)

Next Steps

An overview article like this can explain what TensorFlow does and what it is used for in practice, but a hands-on introduction would require another article. If you want to learn more about TensorFlow, check out the TensorFlow Lite article in this issue. You can also find many excellent guides online, some of which the TensorFlow project itself lists [5].

With Keras, you can build a neural network that analyzes images in a very short time. Almost all beginner tutorials rely on Keras because it is easier to use than TensorFlow's lower-level interfaces. The tutorials generally include the datasets needed for their respective tasks. Once you have worked through the examples in Keras notation, you'll quickly move on to more advanced models, which are more complicated but also let you get to the heart of the matter in a very short time.

If you want to dive into artificial intelligence, deep learning, and neural networks, TensorFlow is an ideal tool. It also looks like a safe long-term bet: Google continues to play a very active role in its development, and new features are added regularly. The project seems unlikely to disappear, because Google uses TensorFlow in its own products, for example, to process Street View photos.