Artificial intelligence improves monitoring

Voyage of Discovery

Artificial intelligence (AI) and machine learning are on the rise in IT, especially in the field of system monitoring. AI, machine learning, and deep learning are often wrongly used as synonyms. AI is the ability of a machine to make decisions similar to those a human would make. For example, software could decide to trigger an alarm that a human being would also have triggered. AI is the simulation of intelligent behavior by more or less complex algorithms.

Machine learning, on the other hand, refers to the classification methods, procedures, and algorithms that help the machine make decisions. Machine learning is the math that lets AI learn from experience. From this perspective, machine learning merely provides the basis for decision making.

An example can illustrate this point. Suppose the learning result is a percentage stating that 90 percent of the current data belong to a certain type. Whether the machine then uses this value, together with others, as the trigger for an alarm has, mathematically speaking, nothing to do with the part of the algorithm that calculates the value.

Machine learning generally works more reliably the more data you have available. Machine learning can also be understood as a filter. Today, most companies have such an abundance of data that manual evaluation is inconceivable. As a remedy, machine learning algorithms and other methods can be deployed to filter the available data and reduce it to a level that allows interpretation. After the data set has been prepared appropriately, rules for intelligent software behavior can be defined.

In traditional machine learning, the user decides as early as the implementation stage which algorithm to use and which set of information to filter, and how. In deep learning, on the other hand, a neural network determines which information it passes on and how this information is weighted. Deep learning methods require a great deal of computing power. Although the underlying math has existed for a long time, only the increase in computing power over the last decade has brought deep learning into widespread use.

Various Methods and Results

Regardless of whether a purely statistical method of analysis – especially exploratory data analysis (EDA) – or a machine learning algorithm is used, a distinction can always be made between univariate and multivariate methods. Univariate methods work faster because they usually require less computing power. Multivariate methods, on the other hand, can reveal correlations that would otherwise have remained undiscovered. However, the application of complex methods does not always lead to better results.

In general, you can think of a machine learning method as a two-part process (Figure 1). The first part, training the algorithm, comprises a detailed analysis of the available data. The purpose is to discover patterns and find a mathematical rule or function that explains them. Suitable methods include linear, nonlinear, and logistic regression, among others. The goal is to explain the analyzed data with a mathematical function that leaves the smallest possible error. In the second part, the function derived in the first step is used to predict further data.
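To make the two parts concrete, here is a minimal Python sketch that uses ordinary least-squares linear regression, which is only one of the methods mentioned. The function names (`fit_linear`, `sum_squared_error`, `predict`) and the load values are hypothetical illustrations, not part of any monitoring product.

```python
# Minimal sketch (assumption: ordinary least squares as the "training" step).
# Part 1: fit y = intercept + slope * x so that the squared error is minimal.
# Part 2: use the fitted function to predict further data points.


def fit_linear(xs, ys):
    """Return (intercept, slope) minimizing the sum of squared errors."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_squared_error(xs, ys, intercept, slope):
    """Error component that the fitted function leaves unexplained."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


def predict(x, intercept, slope):
    """Apply the learned function to a new input."""
    return intercept + slope * x


if __name__ == "__main__":
    # Hypothetical training data: processor load (%) at six hourly samples.
    hours = [0, 1, 2, 3, 4, 5]
    load = [20.0, 22.0, 24.5, 25.5, 28.0, 30.0]
    a, b = fit_linear(hours, load)
    print(f"load ~ {a:.2f} + {b:.2f} * hour")
    print("squared error:", round(sum_squared_error(hours, load, a, b), 3))
    print("forecast for hour 8:", round(predict(8, a, b), 2))
```

The sketch needs at least two distinct x values; a nonlinear or logistic model would replace `fit_linear` but keep the same train-then-predict structure.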

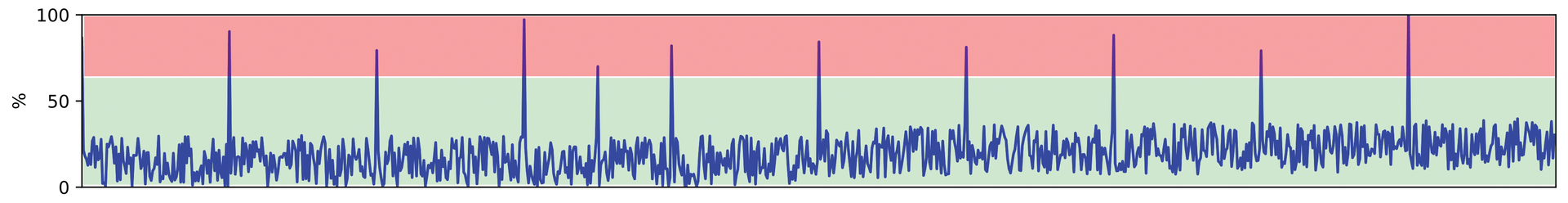

The following sections illustrate the different forms of analysis by assessing the load curve of a server processor (Figure 2). The load remains below 50 percent over the entire period, except for a few isolated load peaks.

Univariate Analysis

Univariate analysis examines only one metric at a time. Applied to the processor curve, this means that traditional resource- or limit-based monitoring focuses in particular on the isolated peaks and classifies them as potential risks, depending on how the thresholds are configured and how often they are exceeded.
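A minimal Python sketch of such limit-based univariate monitoring might look like the following. The threshold of 80 percent, the rule that three violations raise an alert, and the function names are assumptions chosen for illustration.

```python
# Minimal sketch of limit-based univariate monitoring (assumed parameters:
# a fixed threshold and a minimum number of violations before alerting).


def find_violations(samples, threshold):
    """Return the indices of all samples above the threshold."""
    return [i for i, value in enumerate(samples) if value > threshold]


def classify_risk(samples, threshold=80.0, min_violations=3):
    """Rate the risk by how often a single metric exceeds its threshold."""
    violations = find_violations(samples, threshold)
    if len(violations) >= min_violations:
        return "alert", violations
    if violations:
        return "warning", violations
    return "ok", violations


if __name__ == "__main__":
    # Hypothetical processor load (%) with a few isolated peaks.
    cpu = [30, 35, 85, 40, 90, 38, 92, 41]
    print(classify_risk(cpu))                # three peaks above 80
    print(classify_risk(cpu, threshold=88))  # stricter limit, fewer hits
```

Changing only `threshold` or `min_violations` changes the verdict, which is exactly the dependence on configuration described above.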

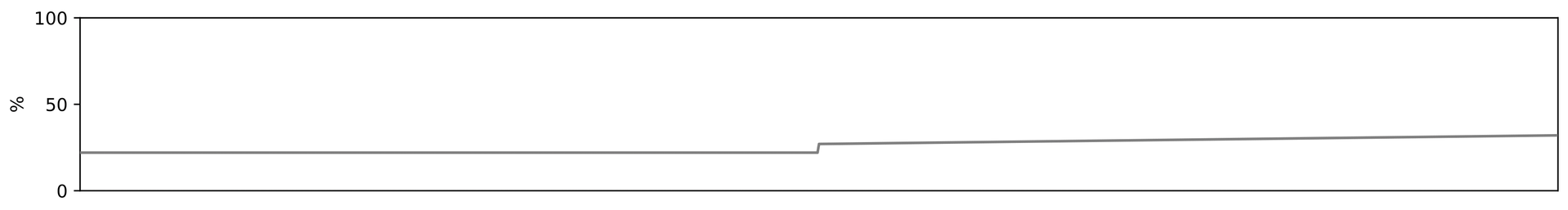

Furthermore, univariate analysis could also reveal that limit violations almost always occur at regular intervals, so the underlying event appears to be cyclical. Most of the limit violations in the example can be assigned to this cyclical type, but others cannot; the analysis therefore no longer deals with individual events, but with two groups of events. Additionally, the mean utilization can be seen to remain constant in the first half of the data but to increase linearly in the second half (Figure 3).
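Separating the cyclical violations from the rest can be sketched by taking the most frequent gap between violations as the period and checking which violations fall on that grid. This is a simplified, hypothetical approach in Python; it assumes integer minute timestamps in ascending order, at least two violations, and a regular cycle without much jitter.

```python
# Minimal sketch: separate cyclical limit violations from the rest.
# Assumptions: timestamps are integer minutes in ascending order, there are
# at least two of them, and the cycle has no jitter beyond `tolerance`.
from collections import Counter


def dominant_period(times):
    """Most frequent gap between consecutive violations."""
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return Counter(gaps).most_common(1)[0][0]


def split_cyclic(times, tolerance=0):
    """Return (period, cyclic violations, other violations)."""
    period = dominant_period(times)
    # Phase of the cycle: the most common position within one period.
    phase = Counter(t % period for t in times).most_common(1)[0][0]
    cyclic, other = [], []
    for t in times:
        offset = (t - phase) % period
        # Distance to the nearest point on the grid phase + k * period.
        if min(offset, period - offset) <= tolerance:
            cyclic.append(t)
        else:
            other.append(t)
    return period, cyclic, other


if __name__ == "__main__":
    # Hypothetical violations: an hourly job plus one unexplained spike.
    violations = [60, 120, 180, 205, 240, 300]
    print(split_cyclic(violations))
```

The result is the two groups of events: the cyclical group and the remaining candidates that need a different explanation.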

Although this effect is much less obvious, it should not be neglected: If the trend continues, the server load will keep rising in the future. In this case, you could use one part of the data to predict another part. The better this prediction works, the more confidently you can conclude that the processes generating the data have not changed.
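One way to use part of the data to predict another part is a simple hold-out check: fit a linear trend to the earlier samples, forecast the later ones, and measure the forecast error. A small error suggests an unchanged process (even if the load is rising), a large one suggests a change. The Python sketch below is a hypothetical illustration; the split point and series are assumptions, and `split` must be at least 2 and smaller than the series length.

```python
# Minimal hold-out sketch: train on series[:split], test on series[split:].


def fit_linear(xs, ys):
    """Ordinary least squares: return (intercept, slope)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def holdout_error(series, split):
    """Mean absolute error of a trend fitted on the first `split` samples."""
    intercept, slope = fit_linear(list(range(split)), series[:split])
    errors = [
        abs(value - (intercept + slope * t))
        for t, value in enumerate(series[split:], start=split)
    ]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    steady_trend = [20, 22, 24, 26, 28, 30, 32, 34]  # trend continues
    changed = [20, 20, 20, 20, 30, 40, 50, 60]       # behavior changes
    print(holdout_error(steady_trend, 4))  # small: same process
    print(holdout_error(changed, 4))       # large: process has changed
```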

Several statements can be made about a single curve, but it is difficult to base decisions on them. Without additional data (e.g., from other measurable variables or other comparable servers), the administrator can only interpret the situation based on their experience and respond accordingly.

Bivariate Analysis

In bivariate analysis, two curves are always examined together. This makes it possible to explain effects in one curve with the help of another. For example, the orange curve in Figure 4 could explain the cyclical load peaks (e.g., batch tasks that run at constant time intervals and raise the processor load). Combined with the corresponding logs, the event can be classified as a planned rather than a dangerous activity. Only one of the remaining limit violations in the example defies this kind of explanation and needs to be examined more closely.
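The following Python sketch illustrates the idea with two hypothetical series: processor load and a 0/1 flag indicating whether a scheduled batch job was running. It computes the Pearson correlation between the two and marks each limit violation as explained or unexplained. The flag series, threshold, and function names are assumptions; in practice the second curve could come from the logs mentioned above.

```python
# Minimal bivariate sketch (assumed inputs): processor load in percent and a
# 0/1 flag per time step saying whether a scheduled batch job was running.
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sy = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sx * sy)


def explain_violations(cpu, batch_running, threshold=80.0):
    """Split violations into those coinciding with a batch job and the rest."""
    explained, unexplained = [], []
    for i, (load, batch) in enumerate(zip(cpu, batch_running)):
        if load > threshold:
            (explained if batch else unexplained).append(i)
    return explained, unexplained


if __name__ == "__main__":
    cpu = [30, 85, 32, 88, 31, 95]
    batch = [0, 1, 0, 1, 0, 0]
    print("correlation:", round(pearson(cpu, batch), 2))
    print(explain_violations(cpu, batch))  # only index 5 stays unexplained
```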

Multivariate Analysis

Multivariate analysis extends the principle of bivariate analysis to the simultaneous interpretation of any number of curves. For example, if the processor load starts to grow not just on one server but on several at the same time, the effect becomes even more relevant. At a time when resources are misused for illegitimate purposes such as cryptojacking, attackers try to avoid being noticed at all. If only a small percentage of computing power is misused, the effect is not noticeable on any individual machine. Only an analysis that links conspicuous changes on several servers, or that studies several metrics together, brings such attacks to light and helps stop them promptly.
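A strongly simplified Python sketch of this cross-server view compares each server's current mean load with its own baseline and then asks whether a small rise occurs on a large part of the fleet at once. The thresholds (`per_server_limit`, `min_drift`, `min_fraction`) and server data are hypothetical; a real multivariate method would also weigh correlations between metrics.

```python
# Minimal multivariate sketch (assumed thresholds): compare each server's
# current mean load with its own baseline, then look at the whole fleet.


def mean(values):
    return sum(values) / len(values)


def fleet_alert(servers, per_server_limit=10.0, min_drift=1.0, min_fraction=0.5):
    """servers maps a name to (baseline samples, current samples).

    Returns the servers that would alert on their own, whether a small but
    simultaneous rise affects at least `min_fraction` of the fleet, and the
    per-server drift values.
    """
    drifts = {name: mean(current) - mean(baseline)
              for name, (baseline, current) in servers.items()}
    individually = [name for name, d in drifts.items() if d >= per_server_limit]
    rising = [name for name, d in drifts.items() if d >= min_drift]
    fleet_wide = len(rising) / len(drifts) >= min_fraction
    return individually, fleet_wide, drifts


if __name__ == "__main__":
    # Hypothetical fleet: each server uses only a few percent more CPU.
    fleet = {
        "web1": ([20, 20], [23, 23]),
        "web2": ([30, 30], [33, 33]),
        "db1": ([25, 25], [27, 27]),
        "db2": ([40, 40], [40, 40]),
    }
    print(fleet_alert(fleet))
```

No single server crosses its own limit, yet three of four show the same small increase, which is the pattern the joint analysis is meant to surface.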

Machine learning algorithms can also be distinguished by the number of labels they require. This is of particular interest because labels cannot always be generated automatically and are therefore a potentially time-consuming part of the analysis. In supervised learning, labels are essential: The algorithm relies on them in the training phase to learn to distinguish between different groups. In semi-supervised learning, at least part of the data is labeled, whereas unsupervised learning needs no labels at all.

Anomaly detection in particular increasingly relies on unsupervised methods, above all to detect critical situations that have never occurred before as early as possible. The potential methods range from traditional algorithms such as DBSCAN, a density-based data clustering algorithm, to state-of-the-art methods such as Isolation Forest, an anomaly detection algorithm that isolates outliers with an ensemble of random trees. One point of criticism of unsupervised methods is that, without ground truth, anomalies can still be detected, but it is impossible to estimate what proportion of the actual anomalies has been found.
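DBSCAN is simple enough to sketch in a few lines. The following Python version is a minimal, unoptimized illustration (quadratic neighbor search) that treats points labeled as noise as anomaly candidates; in practice a library implementation would be used. The sample points (processor and memory load pairs), `eps`, and `min_pts` are assumptions.

```python
# Minimal, unoptimized DBSCAN sketch: points that belong to no dense region
# are labeled NOISE and can be treated as anomaly candidates.
import math

NOISE = -1


def region_query(points, idx, eps):
    """Indices of all points within distance eps of points[idx] (inclusive)."""
    return [j for j, q in enumerate(points) if math.dist(points[idx], q) <= eps]


def dbscan(points, eps, min_pts):
    labels = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        labels[i] = cluster
        seeds = list(neighbors)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins, but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:  # core point: keep expanding
                seeds.extend(j_neighbors)
        cluster += 1
    return labels


if __name__ == "__main__":
    # Hypothetical (cpu %, memory %) pairs; the last one is unusual.
    samples = [(20, 30), (21, 31), (22, 30), (20, 32), (21, 29), (90, 95)]
    labels = dbscan(samples, eps=3, min_pts=3)
    print([p for p, label in zip(samples, labels) if label == NOISE])
```

No labels are needed, which illustrates both the appeal and the criticism above: the noise points are flagged, but nothing tells you which anomalies were missed.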

Practical Use

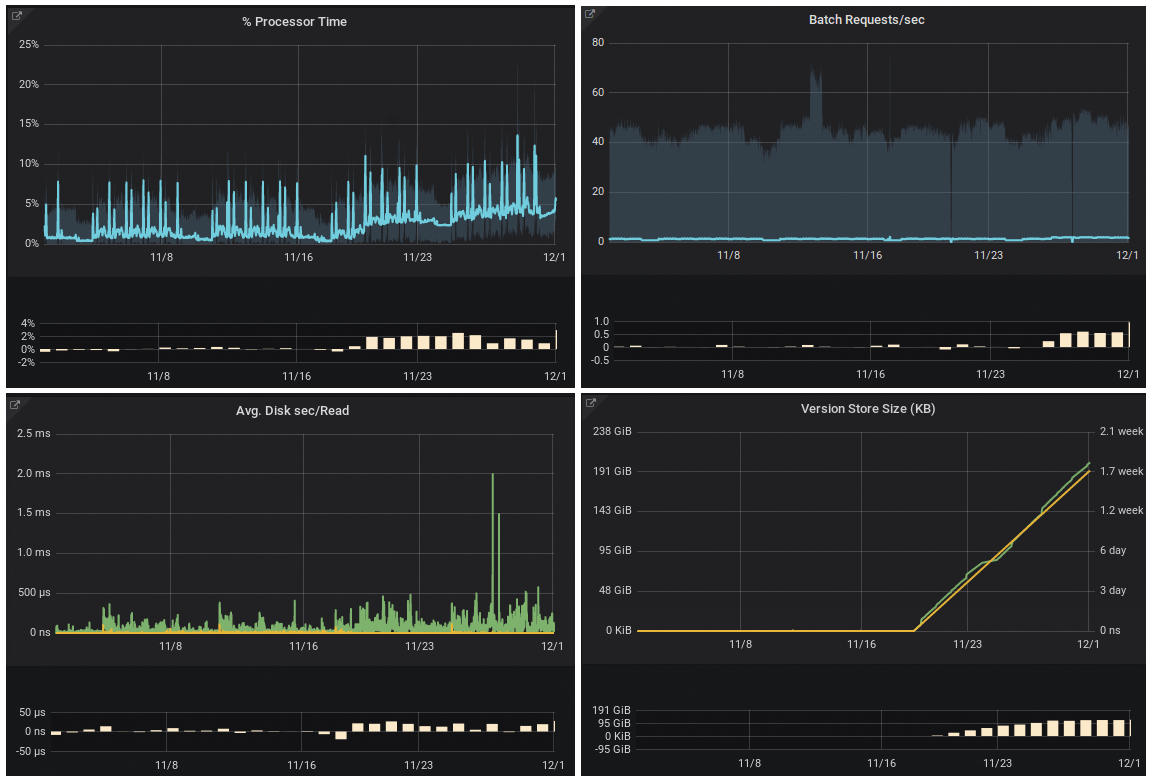

Figure 5 shows a use case similar to the example introduced earlier. The processor load (top left) increases linearly. The difference plot, displayed as a bar chart below each of the four curves, illustrates and quantifies the effect by comparing the current situation with that of the previous week. The increase cannot be explained by batch requests (top right). Only joint analysis with hard disk latencies, the version store size (bottom right), and the longest-running transaction provides a more accurate picture and explains the processor load.
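The week-over-week comparison behind such difference plots can be sketched as follows. This is a hypothetical Python illustration; the metric names and values are invented, and both weeks are assumed to be sampled at the same points in time.

```python
# Minimal sketch of a week-over-week difference per metric (assumption: both
# weeks contain the same number of aligned samples).


def week_over_week(current, previous):
    """Per-slot difference between this week's and last week's samples."""
    if len(current) != len(previous):
        raise ValueError("both weeks need the same number of samples")
    return [c - p for c, p in zip(current, previous)]


def mean_differences(metrics):
    """metrics maps a name to (current week, previous week)."""
    return {
        name: sum(week_over_week(cur, prev)) / len(cur)
        for name, (cur, prev) in metrics.items()
    }


if __name__ == "__main__":
    metrics = {
        "cpu": ([40, 50, 60], [30, 30, 30]),
        "batch_requests": ([100, 100, 100], [100, 100, 100]),
    }
    # CPU rose compared with last week; batch requests did not change.
    print(mean_differences(metrics))
```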

AI can be used to detect such constellations without anyone having to search for them manually in dashboards. An experienced admin, who would otherwise have to spend a lot of time analyzing the causes, can therefore take action immediately. Critical settings or conditions can be eliminated before serious problems with far-reaching consequences develop. Additionally, AI can examine the expert's countermeasures, detect patterns in them, and suggest appropriate countermeasures for comparable situations in the future or, in some cases, even initiate the response automatically.

In contrast to traditional reactive monitoring, AI-based methods can look a little further into the future and notify the administrator as soon as the probability increases that the current situation will become critical.

Besides the traditional question – Is the current situation critical (compared with historical data)? – mathematical answers to the following two questions can also be given: When will a critical situation occur if everything continues as before? What needs to be done so that the situation does not become critical? Where years of experience used to be the main advantage, AI can now calculate which metric is contributing to the respective situation and with what probability.
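The question "When will a critical situation occur if everything continues as before?" can, in the simplest case, be answered by extrapolating a linear trend to a threshold. The Python sketch below assumes a linear trend, a fixed threshold, and hypothetical function names; real systems would typically use more robust forecasting models and report an uncertainty.

```python
# Minimal sketch: extrapolate a least-squares linear trend to a threshold.


def fit_linear(xs, ys):
    """Ordinary least squares: return (intercept, slope)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def time_to_threshold(times, values, threshold):
    """Time at which the fitted trend reaches `threshold`.

    Returns None if the trend is flat or falling. A result at or before
    times[-1] means the trend line has already crossed the threshold.
    """
    intercept, slope = fit_linear(times, values)
    if slope <= 0:
        return None
    return (threshold - intercept) / slope


if __name__ == "__main__":
    # Hypothetical daily mean processor load (%) rising by ~2 points per day.
    days = [0, 1, 2, 3]
    load = [50, 52, 54, 56]
    print("critical (90 %) on day:", time_to_threshold(days, load, 90))
```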

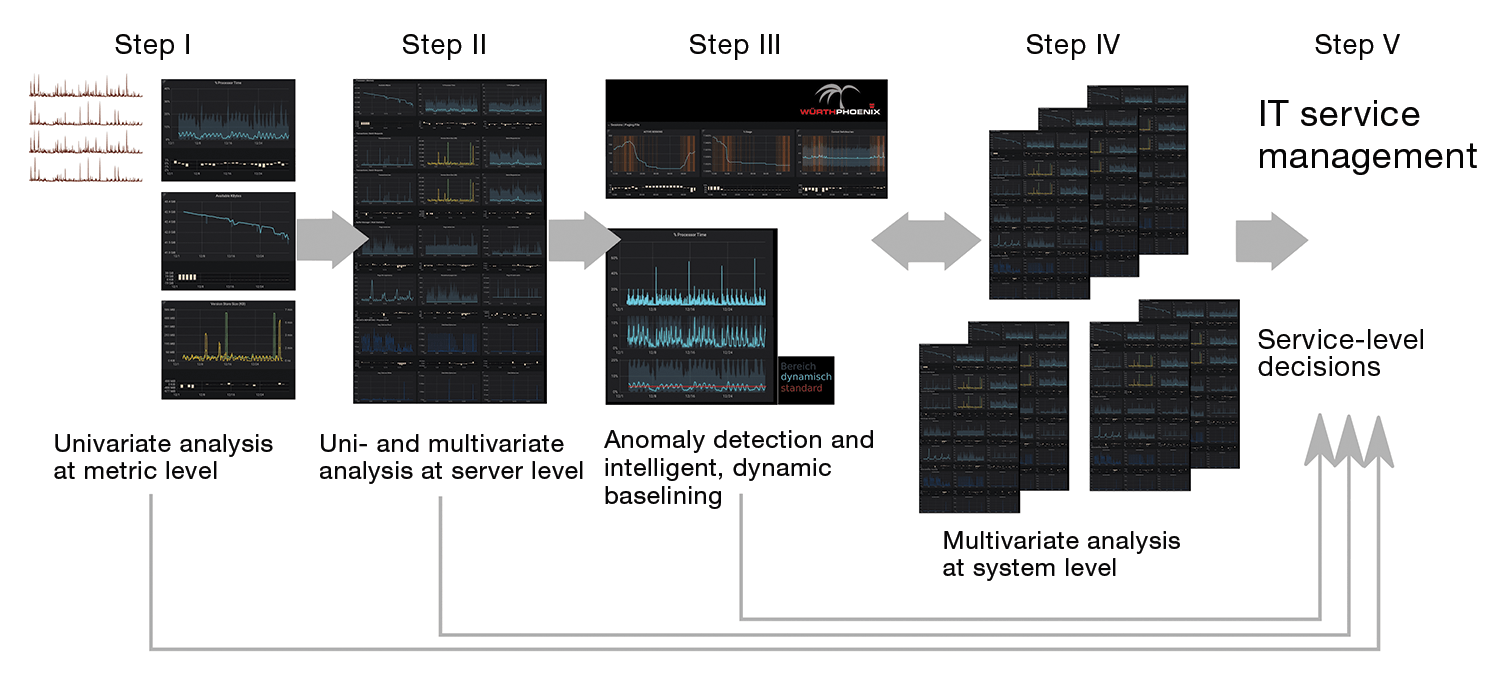

These theoretical considerations can be transferred to performance monitoring in practice, for example, with the pipeline shown in Figure 6. A time series database (such as InfluxDB) stores data from heterogeneous sources. At step I, each incoming metric is first checked by univariate analysis and summarized with statistical methods to make the data easier to navigate. This step mainly serves to optimize alerting.
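A statistical summary at step I could, for example, reduce each raw metric to minimum, mean, and maximum per time window. The Python sketch below assumes the samples have already been read from the time series database into a list; it does not use any InfluxDB client API, and the function name and window size are hypothetical.

```python
# Minimal sketch of step I: reduce a raw metric to per-window statistics.
# Assumption: the samples were already fetched from the time series database.


def summarize_windows(samples, window):
    """Return min/mean/max for consecutive, non-overlapping windows."""
    if window <= 0:
        raise ValueError("window must be positive")
    summaries = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        summaries.append({
            "start": start,
            "min": min(chunk),
            "mean": sum(chunk) / len(chunk),
            "max": max(chunk),
        })
    return summaries


if __name__ == "__main__":
    # Hypothetical per-minute CPU samples, summarized in 5-minute windows.
    cpu = [31, 33, 30, 35, 32, 34, 90, 33, 31, 30]
    for row in summarize_windows(cpu, 5):
        print(row)
```

An alert rule could then evaluate, for instance, the window maximum instead of every raw sample; this is one possible reading of optimized alerting, not necessarily the one used in Figure 6.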

Findings gained at step I are passed on to step II, where both univariate and multivariate analyses take place at the server level. Step III detects and evaluates anomalies at the server level, helping to create intelligent, dynamic baselines that can be used to prevent faults as effectively as possible.
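A dynamic baseline differs from a fixed threshold in that the normal range depends on context, such as the hour of the day. The Python sketch below assumes a baseline of mean plus or minus `k` population standard deviations per hour; the choice of `k = 3`, the grouping by hour, and the function names are assumptions for illustration.

```python
# Minimal sketch of a dynamic baseline: one normal range per hour of day,
# computed as mean +/- k population standard deviations (assumed rule).
import statistics
from collections import defaultdict


def build_baseline(history, k=3.0):
    """history: list of (hour_of_day, value). Returns hour -> (low, high)."""
    by_hour = defaultdict(list)
    for hour, value in history:
        by_hour[hour].append(value)
    baseline = {}
    for hour, values in by_hour.items():
        mu = statistics.mean(values)
        sigma = statistics.pstdev(values)
        baseline[hour] = (mu - k * sigma, mu + k * sigma)
    return baseline


def is_anomalous(baseline, hour, value):
    """True if the value lies outside the normal range for that hour."""
    low, high = baseline[hour]
    return not (low <= value <= high)


if __name__ == "__main__":
    # Hypothetical history: quiet nights, busy afternoons.
    history = [(2, 10), (2, 10), (2, 10), (2, 10), (14, 60), (14, 70)]
    baseline = build_baseline(history)
    print(is_anomalous(baseline, 14, 60))  # normal in the afternoon
    print(is_anomalous(baseline, 2, 60))   # unusual at night
```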

Step IV is influenced directly or indirectly by all previous levels. Multivariate analysis at the system level and the targeted use of machine learning algorithms for analysis and predictions enable efficient root cause analysis. Downtime can be minimized. Finally, step V brings together all previously gained knowledge to improve service management in general. Thanks to the processed and statistically summarized data, as well as the results already achieved, informed decisions can be made and their effects promptly quantified after implementation.

If a company wants to expand its system monitoring capabilities (e.g., to check whether a particular application is running smoothly on its own systems or at the customer's site), it can use standard solutions such as existing pre-implemented algorithms (e.g., random forests, artificial neural networks, or support vector machines). Provided the in-house team has sufficient experience, results can be achieved that would be inconceivable without AI.

In many cases, promising but more complex approaches that are still in active research cannot be sufficiently generalized to be implemented in standard solutions. Identifying the additional analysis potential they offer for the respective application is a task for experts. All the routines required for data preparation (e.g., normalization, pruning, splitting) are usually available, however.

For this step, it is only important that the person who creates the preprocessing pipeline is sufficiently familiar with the machine learning algorithm to be used later, so that they carry out the necessary preprocessing steps in the correct order. Automated methods can also achieve results, but they risk processing the data in a time-consuming manner without the results making sense mathematically or adding genuine value in the real world.
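Normalization and splitting illustrate why the order of preprocessing steps matters. If the normalization parameters were computed on the full series before splitting, information from the test period would leak into training. The Python sketch below assumes min-max normalization and a chronological split; the function names are hypothetical.

```python
# Minimal sketch of preprocessing order for time series data (assumption:
# min-max normalization and a chronological train/test split).


def train_test_split(series, train_fraction=0.8):
    """Chronological split: earlier samples train, later samples test."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


def fit_min_max(values):
    """Learn the normalization parameters."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot normalize a constant series")
    return lo, hi


def apply_min_max(values, lo, hi):
    """Scale values with previously learned parameters."""
    return [(v - lo) / (hi - lo) for v in values]


def preprocess(series, train_fraction=0.8):
    # 1. Split first, so the test part stays unseen.
    train, test = train_test_split(series, train_fraction)
    # 2. Fit the normalization on the training part only.
    lo, hi = fit_min_max(train)
    # 3. Apply the same parameters to both parts.
    return apply_min_max(train, lo, hi), apply_min_max(test, lo, hi)


if __name__ == "__main__":
    train_scaled, test_scaled = preprocess([0, 10, 20, 30, 40, 50, 60, 70, 80, 100])
    print(train_scaled)
    print(test_scaled)  # values above 1.0 reveal data outside the training range
```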

Conclusions

AI and machine learning are changing almost all industries, not least IT. Approaches and methods for recording, analyzing, and processing the data generated by IT operations are grouped under the generic term "IT operations analytics" (ITOA). Modern monitoring systems such as NetEye by Würth Phoenix already make some tasks easier for administrators, and semi-autonomous solutions should not be long in coming.

However, ITOA clearly cannot replace traditional application performance management (APM). Instead, it is a supplement that empowers administrators to react to problems more quickly and in a more targeted manner. Experienced IT staff remains indispensable.