Mesh Service for OSI Layers 2 and 3

Network Lego

Kubernetes is not necessarily the brightest star for people who operate networks, because it does not cover more complex network scenarios, such as those found in ISPs, telecommunications companies, and advanced enterprise networks. The Container Network Interface (CNI) only creates the network interfaces that containers need when pods and nodes are created, and removes them again when those resources are deleted [1].

This comes as no surprise, because Kubernetes' flexibility mainly relates to applications. Thanks to application service meshes like Istio, Open Systems Interconnection (OSI) Layers 4 (the level of TCP streams and UDP datagrams) and 7 (HTTP/application protocols) can be configured comprehensively, whereas the underlying Layer 2 and Layer 3 network (frames and IP packet layers) cannot.

Who Needs It?

The developers [2] of Network Service Mesh (NSM) [3] are committed to making their Kubernetes-oriented approach attractive for companies that want to use cloud-native models for Network Function Virtualization (NFV) [4], 5G networks, edge computing, or the Internet of Things (IoT). The network functions offered by telcos currently run virtualized on hardware (virtualized network functions, VNFs). In the future, they will become cloud-native network functions (CNFs) and reside in containers. The Network Service Mesh project is still at a very early stage: Version 0.1.0 (code-named Andromeda) was released in August 2019. Even so, some key points of the project's roadmap have already been defined.

Theoretically, it would also be possible to reproduce subnets, interfaces, switches, and so on in virtual form as containers or pods in Kubernetes. However, the project managers take a rather skeptical view of this, referring to it as Cloud 1.0, because many benefits of cloud-native applications would be compromised. For example, if a developer in such a scenario wanted to provide a pod with a virtual private network (VPN) interface to connect to their enterprise VPN, they would have to take care of the details of the IP addresses, subnets, routes, and so on.

Enter Service Mesh

The Network Service Mesh project aims to reduce this complexity by adapting the existing service mesh model in Kubernetes to allow connections on network Layers 2 and 3. For this purpose, it introduces three concepts: network services, network service endpoints, and connection interfaces.

The project also provides an illustrative example [5]. The task is to give a pod, the network service client (which in the example belongs to a user named Sarah), access to a corporate VPN (Figure 1). To do so, the pod requests a network service.

In the example, this network service is named secure-intranet-connectivity. It encapsulates one or more pods on the network, which in turn are referred to as network service endpoints. A network service like this can be defined in YAML format (Listing 1), much like services in Kubernetes.

Listing 1: Simple Network Service (Part 1)

```yaml
kind: NetworkService
apiVersion: V1
metadata:
  name: secure-intranet-connectivity
spec:
  payload: IP
  [...]
```

In the example, the network service initially consists of a single pod that acts as a VPN gateway and serves as the network service endpoint. The client pod connects to this gateway and reaches the company VPN through it.

Search and Find

To ensure that the client pod finds the network service with the VPN gateway, the VPN pod identifies itself on the network with what is known as a destination label: app=vpn-gateway. The client pod, in turn, includes the matching destination label (app:vpn-gateway) in its requests, which lets it find the gateway.
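The lookup described above amounts to label-selector matching. The following Python sketch assumes Kubernetes-style equality semantics: a selector matches if every key/value pair it names is present in an endpoint's labels. The endpoint names and the dictionary standing in for the mesh's view of its endpoints are hypothetical.

```python
from typing import Dict, List

# Hypothetical endpoint inventory: endpoint name -> labels.
ENDPOINTS: Dict[str, Dict[str, str]] = {
    "vpn-gateway-1": {"app": "vpn-gateway"},
    "firewall-1": {"app": "firewall"},
}


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True if every key/value pair of the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def find_endpoints(selector: Dict[str, str],
                   endpoints: Dict[str, Dict[str, str]]) -> List[str]:
    """Names of all endpoints whose labels satisfy the selector."""
    return [name for name, labels in endpoints.items()
            if selector_matches(selector, labels)]


# The client's request carries the destination label app: vpn-gateway.
print(find_endpoints({"app": "vpn-gateway"}, ENDPOINTS))  # ['vpn-gateway-1']
```

An empty selector matches every endpoint. Real selectors would usually be more specific than a single app label.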

Good Connection

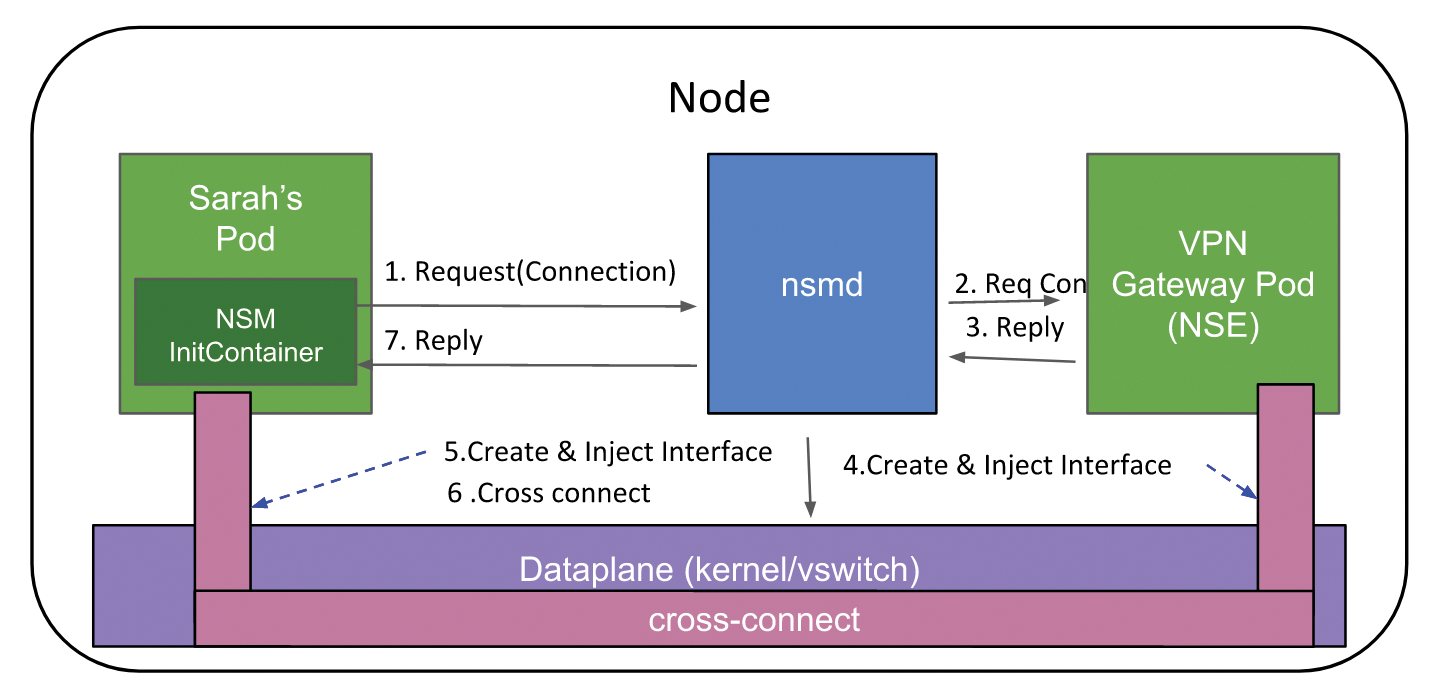

The question now is how the connection between the client pod and the VPN gateway (the network service endpoint) is established. Who distributes the IP addresses, creates the correct subnets, configures the interfaces, and defines the routes? The answer is the Network Service Manager daemon (nsmd). It runs as a DaemonSet [6] on each node and, in the example, links the client pod with the VPN gateway. For this to work, an NSM init container must also run in the requesting client pod.
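The article does not say how nsmd chooses addresses. The following sketch shows the kind of bookkeeping the mesh takes off the developer's hands. It assumes a hypothetical address pool (172.16.1.0/24) from which each point-to-point link receives its own /30 subnet. The function name p2p_links and the pool are illustrative only; this is not NSM's actual IPAM.

```python
import ipaddress
from typing import Iterator, Tuple


def p2p_links(pool: str) -> Iterator[Tuple[str, str]]:
    """Yield (client_ip, endpoint_ip) pairs, one /30 subnet per link.

    A /30 holds four addresses: network, two usable hosts, broadcast.
    """
    for subnet in ipaddress.ip_network(pool).subnets(new_prefix=30):
        client_ip, endpoint_ip = subnet.hosts()  # exactly two usable hosts
        yield str(client_ip), str(endpoint_ip)


links = p2p_links("172.16.1.0/24")  # hypothetical address pool
print(next(links))  # ('172.16.1.1', '172.16.1.2')
print(next(links))  # ('172.16.1.5', '172.16.1.6')
```

A /24 pool yields 64 such links. The /30 size is a conservative choice; /31 point-to-point subnets would halve the address consumption.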

The Network Service Manager daemon receives the client pod's request for a connection to the VPN gateway over gRPC, the remote procedure call framework originally developed by Google [7]. The manager forwards the request to the VPN gateway pod. If this pod responds, nsmd has the forwarder (formerly called the data plane) create the appropriate interfaces.

The forwarder is a privileged component with kernel access. It first creates a suitable interface for the gateway and connects it to the gateway pod. It then creates an interface for the client pod, injects it into the pod, and finally sends an acknowledgement to the client pod (Figure 2). The client and the VPN gateway are now linked by a point-to-point connection. All connections in the Network Service Mesh are based on point-to-point connections of this kind.
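The order of events in the last two paragraphs can be traced with a toy model. In this Python sketch, plain method calls replace the gRPC messages, and a boolean flag stands in for the endpoint's reply. The class names and the interface name nsm0 are hypothetical and are not NSM's API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pod:
    name: str
    interfaces: List[str] = field(default_factory=list)


@dataclass
class Forwarder:
    """Stand-in for the privileged forwarder; records what it would do."""
    log: List[str] = field(default_factory=list)

    def inject(self, pod: Pod, ifname: str) -> None:
        pod.interfaces.append(ifname)
        self.log.append(f"inject {ifname} into {pod.name}")


@dataclass
class Manager:
    """Stand-in for nsmd; plain method calls replace the gRPC messages."""
    forwarder: Forwarder

    def request(self, client: Pod, endpoint: Pod,
                endpoint_accepts: bool = True) -> bool:
        # 1. Pass the client's request on to the endpoint; the flag
        #    simulates the endpoint's answer.
        if not endpoint_accepts:
            return False
        # 2. Endpoint-side interface first, then the client-side one.
        self.forwarder.inject(endpoint, "nsm0")
        self.forwarder.inject(client, "nsm0")
        # 3. Acknowledge to the client: the point-to-point link is up.
        return True


fwd = Forwarder()
nsmd = Manager(fwd)
client, gateway = Pod("sarah-client"), Pod("vpn-gateway")
print(nsmd.request(client, gateway))  # True
print(fwd.log)
# ['inject nsm0 into vpn-gateway', 'inject nsm0 into sarah-client']
```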

The whole thing also works across nodes. In this case, the Kubernetes API server informs the manager of node 1 (nsmd1) about the network services and network service endpoints that exist on node 2. Nsmd1 then contacts the manager of node 2 (nsmd2), which in turn contacts the VPN gateway pod. Using the forwarders on the respective nodes (in the future, there will be several forwarders), nsmd1 and nsmd2 then establish a tunnel between the client pod and the VPN gateway pod.
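The difference between the single-node and cross-node cases is easiest to see as two paths. The control path is the managers that handle the request; the data path is the hops the packets take. This Python sketch assumes one forwarder per node and a hypothetical endpoint-to-node map that stands in for what nsmd learns from the Kubernetes API server. The node and endpoint names are illustrative.

```python
from typing import Dict, List, Tuple

# Hypothetical view built from the Kubernetes API server: endpoint -> node.
ENDPOINT_NODES: Dict[str, str] = {"vpn-gateway-1": "node2"}


def plan(client_node: str, endpoint: str,
         endpoint_nodes: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (control_path, data_path) for a connection request.

    control_path: the managers that handle the request, in order.
    data_path: the hops the packets traverse once the link is up.
    """
    target = endpoint_nodes[endpoint]
    if target == client_node:
        control = [f"nsmd@{client_node}", endpoint]
        data = ["client", f"forwarder@{client_node}", endpoint]
    else:
        control = [f"nsmd@{client_node}", f"nsmd@{target}", endpoint]
        data = ["client", f"forwarder@{client_node}", "tunnel",
                f"forwarder@{target}", endpoint]
    return control, data


control, data = plan("node1", "vpn-gateway-1", ENDPOINT_NODES)
print(" -> ".join(control))  # nsmd@node1 -> nsmd@node2 -> vpn-gateway-1
print(" -> ".join(data))
# client -> forwarder@node1 -> tunnel -> forwarder@node2 -> vpn-gateway-1
```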

The NSM developers intend these connections to work across cluster boundaries, too. The external Network Service Mesh (eNSM) will be able to contact virtual infrastructure managers (VIMs), which is especially interesting in environments with telecommunications hardware. VIMs control and manage a Network Function Virtualization Infrastructure (NFVI), that is, the processor, memory, and network resources on which the VNFs run. The Network Service Mesh thus builds a bridge to other networks containing, for example, OpenStack virtual machines. It should also be possible to integrate external network devices into an NSM in this way.

If a developer or administrator wants to add new network service endpoints or generally get an overview of all network services, endpoints, and managers available in a cluster, the network service registry (NSR) jumps into the breach. It knows all the service mesh components in a specific cluster or on a physical network. The sum of all components entered in the NSR then forms a network service registry domain (NSRD).
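A registry of this kind is essentially a catalog keyed by network service. The minimal Python sketch below assumes that the registry tracks managers and maps each network service to its endpoints. The class and method names are hypothetical and are not NSM's registry API; everything entered into one instance plays the role of a single registry domain.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class NetworkServiceRegistry:
    """Toy registry; everything entered here forms one registry domain."""
    domain: str
    managers: Set[str] = field(default_factory=set)
    services: Dict[str, List[str]] = field(default_factory=dict)

    def register_manager(self, nsmd: str) -> None:
        self.managers.add(nsmd)

    def register_endpoint(self, service: str, endpoint: str) -> None:
        endpoints = self.services.setdefault(service, [])
        if endpoint not in endpoints:  # registering twice is harmless
            endpoints.append(endpoint)

    def overview(self) -> dict:
        return {
            "domain": self.domain,
            "managers": sorted(self.managers),
            "services": {name: list(eps) for name, eps in self.services.items()},
        }


nsr = NetworkServiceRegistry("cluster-a")
nsr.register_manager("nsmd@node1")
nsr.register_manager("nsmd@node2")
nsr.register_endpoint("secure-intranet-connectivity", "vpn-gateway-1")
nsr.register_endpoint("secure-intranet-connectivity", "firewall-1")
print(nsr.overview()["services"])
# {'secure-intranet-connectivity': ['vpn-gateway-1', 'firewall-1']}
```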

Adding a Firewall

Returning to the initial example, if the company wants to add a firewall pod between the client pod and the VPN gateway, this is not a major obstacle, thanks to the labels. The firewall pod automatically forwards all incoming requests to the VPN gateway pod.

Its destination label is, intuitively enough, app=firewall. In the YAML file, the first match (Listing 2, lines 3-9) applies to requests whose source carries the label app:firewall and routes them to the endpoint selected by the destinationSelector (the destination label) app:vpn-gateway. The second match (line 10) has no source label, so it catches every other request, including those from the client pod, and routes them to the firewall (lines 12-14).

Listing 2: Simple Network Service (Part 2)

```yaml
01 [...]
02 matches:
03   - match:
04     sourceSelector:
05       app: firewall
06     route:
07       - destination:
08         destinationSelector:
09           app: vpn-gateway
10   - match:
11     route:
12       - destination:
13         destinationSelector:
14           app: firewall
```
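Listing 2 reads as an ordered list of rules. The following Python sketch captures that reading under some assumptions. The first match whose sourceSelector fits wins. A match without a sourceSelector fits any source. Only the first route entry is considered. The rules are transcribed by hand into Python data rather than parsed from YAML, and the client label app: sarah-client is hypothetical.

```python
from typing import Dict, List, Optional

# Listing 2's matches, transcribed by hand (not parsed from YAML).
MATCHES: List[dict] = [
    {"sourceSelector": {"app": "firewall"},
     "route": [{"destinationSelector": {"app": "vpn-gateway"}}]},
    {"route": [{"destinationSelector": {"app": "firewall"}}]},  # no source
]


def select_destination(source_labels: Dict[str, str],
                       matches: List[dict]) -> Optional[Dict[str, str]]:
    """Destination selector of the first match that fits the source labels.

    A match without a sourceSelector fits every source (catch-all).
    """
    for match in matches:
        selector = match.get("sourceSelector", {})
        if all(source_labels.get(k) == v for k, v in selector.items()):
            return match["route"][0]["destinationSelector"]
    return None


print(select_destination({"app": "sarah-client"}, MATCHES))  # {'app': 'firewall'}
print(select_destination({"app": "firewall"}, MATCHES))      # {'app': 'vpn-gateway'}
```

Under this reading, the order of the matches matters. If the catch-all came first, the firewall's own traffic would be routed back to the firewall.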

The Network Service Mesh developers insist that the adjustments to Kubernetes needed to implement NSM are not massive. In return, NSM users would gain several advantages. For example, tunnels and network contexts would become "first-class citizens." NSM allows heterogeneous network configurations and can cope with exotic protocols. Supported connection mechanisms include Linux interfaces, the shared memory packet interface (memif), and vhost-user. Payloads include Ethernet frames, IP, Multiprotocol Label Switching (MPLS), and the Layer 2 Tunneling Protocol (L2TP).
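Supporting several connection mechanisms means that the two sides of a connection must agree on one. The article does not describe how NSM does this. The following Python sketch assumes a simple scheme: the client sends an ordered preference list, and the first entry the endpoint also supports is chosen. The mechanism strings are labels chosen for the sketch, not NSM identifiers.

```python
from typing import List, Optional


def negotiate(client_prefs: List[str],
              endpoint_supported: List[str]) -> Optional[str]:
    """Return the client's most preferred mechanism the endpoint supports.

    Returns None if the two sides have no mechanism in common.
    """
    supported = set(endpoint_supported)
    for mechanism in client_prefs:  # client_prefs is ordered, best first
        if mechanism in supported:
            return mechanism
    return None


# A packet-processing client would prefer shared memory but accepts a
# kernel interface; this endpoint offers only kernel and vhost-user.
print(negotiate(["memif", "kernel"], ["kernel", "vhost-user"]))  # kernel
print(negotiate(["memif"], ["kernel"]))  # None
```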

NSM organizes connections dynamically and on demand; new connections negotiate their properties independently. Service function chaining (SFC), familiar from software-defined networking, can also be implemented with NSM: As the firewall and VPN gateway example shows, the administrator can chain several network functions.
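The chain in the firewall example follows from applying the routing rules repeatedly, one hop at a time. The following Python sketch reduces Listing 2 to (source, destination) pairs and follows them from the client until it reaches the VPN gateway. It assumes first-match semantics, treats the gateway as the end of the chain, and stops with an error on a loop. The labels sarah-client, firewall, and vpn-gateway are illustrative.

```python
from typing import List, Optional, Tuple

# Listing 2 reduced to (source app or None, destination app) pairs;
# None stands for a match without a sourceSelector.
RULES: List[Tuple[Optional[str], str]] = [
    ("firewall", "vpn-gateway"),
    (None, "firewall"),
]


def next_hop(source_app: str, rules: List[Tuple[Optional[str], str]]) -> str:
    for src, dst in rules:  # first matching rule wins
        if src is None or src == source_app:
            return dst
    raise LookupError(f"no route for {source_app}")


def service_chain(client_app: str, rules: List[Tuple[Optional[str], str]],
                  terminal: str) -> List[str]:
    """Follow the routes hop by hop until the terminal function is reached."""
    chain = [client_app]
    while chain[-1] != terminal:
        hop = next_hop(chain[-1], rules)
        if hop in chain:
            raise ValueError("routing loop: " + " -> ".join(chain + [hop]))
        chain.append(hop)
    return chain


print(" -> ".join(service_chain("sarah-client", RULES, "vpn-gateway")))
# sarah-client -> firewall -> vpn-gateway
```

Adding another function, such as a hypothetical intrusion detection pod, would mean inserting one more rule. The client's request stays unchanged.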

Conclusions

The Network Service Mesh project is still at an early stage, so interested parties will have to wait and see how quickly it can turn its plans into software. Developers of cloud-native applications, in particular, will probably like NSM because it simplifies what is often a complicated setup procedure for more specialized network functions. They already know YAML files and the structure of a service mesh from Kubernetes, so NSM fits nicely into the Kubernetes context.

At the same time, the external Network Service Mesh should also make NSM usable with OpenStack networks built on virtual machines, a setup that is common in telecommunications. As became clear at the Open Networking Summit, the desire for container-based network functions is particularly strong there: Replacing VNFs with CNFs is high on the roadmap. NSM should therefore appeal to telcos.