Secure access to Kubernetes

Avoiding Pitfalls

The first time you roll out a Kubernetes test cluster with kubeadm, superuser certificate-based access is copied to ~/.kube/config on the host, with all options open. Unfortunately, it is not uncommon for clusters used in production to continue working this way. Security officers in more regulated industries would probably shut down such an installation immediately. In this article, I seek to shed some light on the issue and show alternatives.

Three Steps

Kubernetes has a highly granular authorization system that influences the rights of administrators and users, as well as the access options to the running services and components within the cluster. The cluster is usually controlled directly by means of an API and the kubectl command-line front end. Access to the kube-apiserver control component is kept as simple as possible by the Kubernetes makers. Developers who want to build more complex services in Kubernetes can take advantage of this system.

The official Kubernetes documentation is a useful starting point [1] if you want to understand the access control strategies that Kubernetes brings to the table. Figure 1 shows the different components and their interaction within a cluster. All requests run through the API server and pass through three stations: authentication, authorization, and admission control (referred to as access control in this article).

![Kubernetes makes virtually no distinction between human users and service accounts in terms of access controls. © Kubernetes [2]](images/b01_kubstruct.png)

Authentication checks whether Kubernetes can verify the identity of the requester with one of the configured authentication methods, such as a password file, a token, or a client certificate. Kubernetes does not manage ordinary users itself; they are maintained outside the cluster. The platform allows multiple authentication modules and tries them in turn until one of them succeeds or all of them fail.

If the first step was successful, authorization follows, during which a decision is made as to whether the authenticated user is granted the right to execute operations on an object according to the configuration. The process clarifies, for example, whether the admin user is allowed to create a new pod in the development namespace, or whether they have write permission for pods in the management namespace.

In the last step, the admission control modules look into the content of the request. For example, although user Bob can have the right to create pods in the development namespace occupying a maximum of 1GB of RAM, a request for a container of 2GB would pass the first two checks but fail this third test.

Namespaces

The basic Kubernetes concept of namespaces groups all other resources and implements multitenancy. Without special permissions, which you would have to define in the authorization configuration, a component only ever sees other components within its own namespace.

For example, if you want to use kubectl to list the running pods in the cluster, entering

kubectl get pods

usually returns no pods. The command queries the default namespace, which is unlikely to be the one in which any of the pods that make up the cluster are running.

Only after adding the -n kube-system option do you see output (Listing 1); this option selects the kube-system namespace, in which the Kubernetes components typically run. To see the pods in all namespaces, pass the -A option instead.

Listing 1: kube-system Namespace Pods

$ kubectl get pods -n kube-system
NAME                                    READY   STATUS    RESTARTS   AGE
coredns-5644d7b6d9-7n5qq                1/1     Running   1          41d
coredns-5644d7b6d9-mxt8k                1/1     Running   1          41d
etcd-kube-node-105                      1/1     Running   1          41d
kube-apiserver-kube-node-105            1/1     Running   1          41d
kube-controller-manager-kube-node-105   1/1     Running   3          41d
kube-flannel-ds-arm-47r2m               1/1     Running   1          41d
kube-flannel-ds-arm-nkdrf               1/1     Running   4          40d
kube-flannel-ds-arm-vdprb               1/1     Running   3          26d
kube-flannel-ds-arm-xnxqp               1/1     Running   0          26d
kube-flannel-ds-arm-zsnpp               1/1     Running   4          34d
kube-proxy-lknwh                        1/1     Running   1          41d
kube-proxy-nbdkq                        1/1     Running   0          34d
kube-proxy-p2j4x                        1/1     Running   4          40d
kube-proxy-sxxkh                        1/1     Running   0          26d
kube-proxy-w2nf6                        1/1     Running   0          26d
kube-scheduler-kube-node-105            1/1     Running   4          41d

Of Humans and Services

As shown in Figure 1, Kubernetes treats both human users and service accounts in the same way after authentication, in that, during authorization and access control, it makes no difference whether jack@test.com or databaseservice wants something from the cluster. Kubernetes checks requests according to role affiliations before letting them pass – or not.

The components in the Kubernetes cluster usually communicate with each other by service accounts (e.g., a pod talks to other pods or services). In theory, a human user could also use service accounts. Whether this is sensible and desired is another matter.

Admins create service accounts, which act as internal users of the cluster, with kubectl or directly through the Kubernetes API. Kubernetes automatically provides a secret, which authenticates the request.

Certificate or Token

Users can log on with an X.509 certificate signed by the Certificate Authority (CA) of the Kubernetes cluster. Kubernetes takes the username from the CommonName (CN) of the certificate and the group memberships from its organization (O) fields; the subject

/CN=hvapour/O=group1/O=group2

logs on a user named hvapour with the group memberships group1 and group2. In principle, this method is also handled within Kubernetes, because you have to generate the certificate requests accordingly and sign them with the Kubernetes CA.

Moreover, it is possible to use a token file in the format:

<Token>,<Username>,<UserID>,"Group1,Group2"

To do this, you need to reconfigure the Kubernetes API server by adding the --token-auth-file=<Path_to_file> option to the call parameters of kube-apiserver. Because the API server itself runs in a container as part of Kubernetes, adding the option is not trivial.

The call parameters of the Kubernetes components are in the /etc/kubernetes/manifests/ directory. The kube-apiserver.yaml file (Figure 2) configures the corresponding container and contains all the call parameters as a YAML list. Enter the following line:

--token-auth-file=<path to tokenfile>

and drop the token file into a folder that is accessible from inside the container.

Figure 2: kube-apiserver in /etc/kubernetes/manifests/.
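As a minimal sketch of the token file described above, the following shell commands generate a random token and write one line in the documented format. The username jack, the user ID 1001, the group names, and the file name tokens.csv are placeholders chosen for illustration, not values from a real cluster.

```shell
# Generate a random 32-character hex token (16 random bytes)
TOKEN=$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')

# Make sure the file is created readable only by its owner
umask 077

# One line per user: <Token>,<Username>,<UserID>,"Group1,Group2"
# (jack, 1001, and the groups are hypothetical example values)
printf '%s,%s,%s,"%s"\n' "$TOKEN" "jack" "1001" "dev1,dev2" > tokens.csv

cat tokens.csv
```

On a kubeadm cluster, the file then has to live in a host directory that the kube-apiserver static pod can see; if no suitable directory is mounted yet, the manifest additionally needs a hostPath volume and a matching volumeMount. A client can later present the token as a bearer token, for example with the --token option of kubectl.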

Instead of token files, you can use a password file in the same way. Its syntax is similar, except that the first field contains the password. Instead of --token-auth-file=<Path_to_file>, you add the --basic-auth-file=<Path_to_file> option. The API server then uses the HTTP basic auth method, and clients have to use it to send their requests. Note that support for static password files was removed in Kubernetes 1.19, so this variant only works on older clusters.

Both variants come at a price: Only the cluster admin can change the passwords and tokens in the file, and every change requires a restart of the kube-apiserver container. You can trigger the restart either through the kubelet on the master node or by restarting the pod with kubectl.

OpenID Preferred

OpenID Connect is an identity layer on top of OAuth 2.0 [3] that Microsoft and Google, among others, offer in their public clouds. The service issues tokens with a limited lifetime, which Kubernetes accepts, assuming you have done the prep work. The cloud provider then handles the authentication.

Another external method is webhooks. Kubernetes sends a JSON request with a token to an external service and waits for a response, which must contain the username, group membership(s), and extra fields, if any, in the JSON block.
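For orientation, a positive answer from such a webhook service could look like the following TokenReview object, written here to a local file with a heredoc. The structure follows the authentication.k8s.io/v1 TokenReview API; the username, UID, group, and extra field are invented example values, and the file name is arbitrary.

```shell
# Example response body of a webhook token authenticator.
# All user details below are hypothetical illustration values.
cat > tokenreview-response.json <<'EOF'
{
  "apiVersion": "authentication.k8s.io/v1",
  "kind": "TokenReview",
  "status": {
    "authenticated": true,
    "user": {
      "username": "jack@test.com",
      "uid": "42",
      "groups": ["dev1"],
      "extra": {
        "department": ["engineering"]
      }
    }
  }
}
EOF

cat tokenreview-response.json
```

A service that does not recognize the token would instead answer with "authenticated": false and omit the user block.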

Finally, the path between the user and Kubernetes can contain an authentication proxy that extends the web requests to include X-headers, for example:

X-Remote-User: jack
X-Remote-Group: Dev1

Once the function is activated, Kubernetes accepts the data in this way. To prevent an attacker from spoofing this process, the proxy uses a client certificate to identify itself to Kubernetes.

Service Accounts

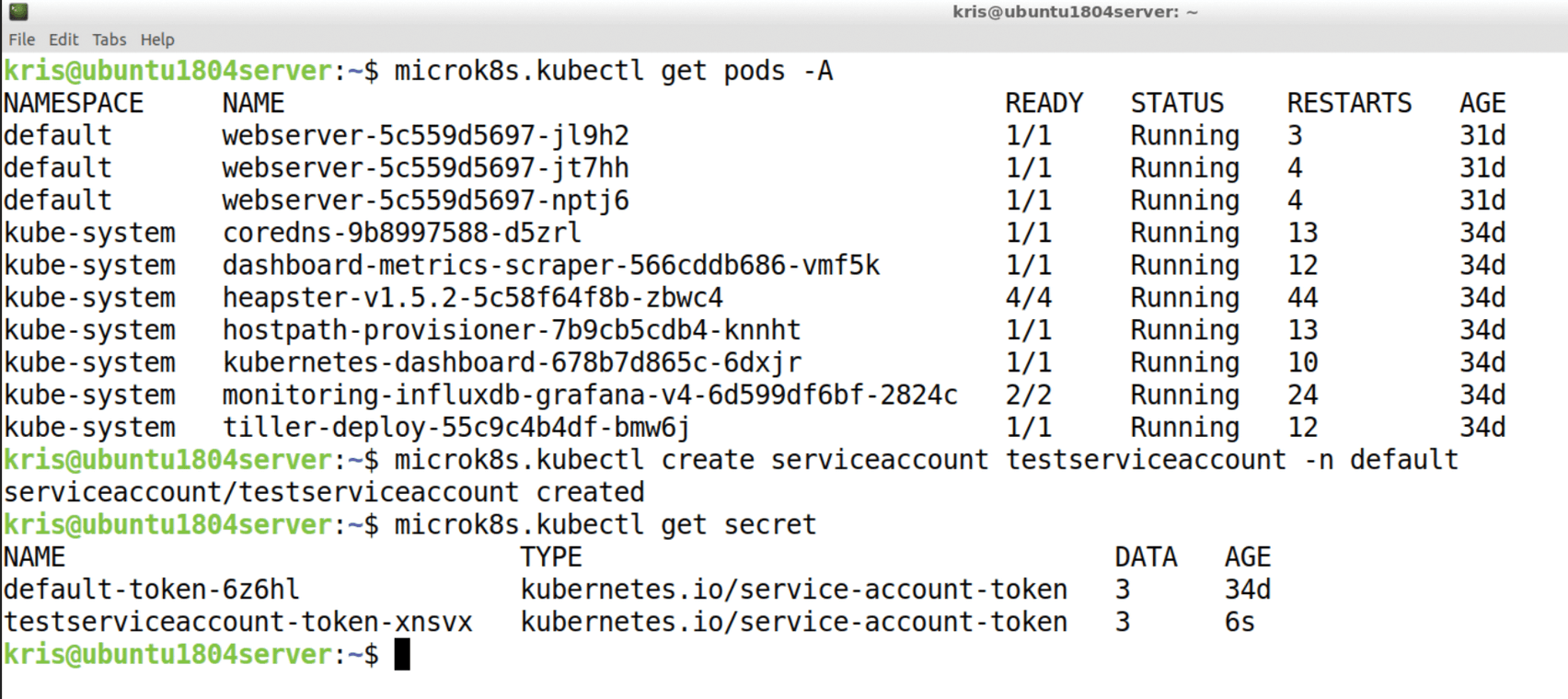

The simplest variant of an authentication method is to create a service account. For example, the first command in Listing 2 creates a new service account named testserviceaccount in the default namespace.

Listing 2: Setting Up a Service Account

$ kubectl create serviceaccount testserviceaccount -n default
$ kubectl describe secret testserviceaccount-token-xnsvx
Name:         testserviceaccount-token-xnsvx
Namespace:    default
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: testserviceaccount
              kubernetes.io/service-account.uid: b45a08c2-d385-412b-9505-6bdf87b64a7a
Type:         kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  7 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InNCRjdsTmJHeGVoUTdGR0ZTemsyalpCaGhqclhoQjVBLXdpZnVxdVNDbXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InRlc3RzZXJ2aWNlYWNjb3VudC10b2tlbi1ic214YiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJ0ZXN0c2VydmljZWFjY291bnQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJiNDVhMDhjMi1kMzg1LTQxMmItOTUwNS02YmRmODdiNjRhN2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDp0ZXN0c2VydmljZWFjY291bnQifQ.SO9XwM3zgiW6sOfEaJx1P6m1lFdsDj9IgBCogkKgg-b8pYm4wErq9a6ZSyR6Z5E3sdG4Djkc4Gnfs4oxBncl3IpDQSNVjL6ahtPMtlrKq3ssbzvlbDSsoyJDp568O2RzuaqSP_Zy8Kmm9ddaBKUQ46DUfvLw-7MVgUf-_IY8vuaAXCCBvgeJCIpJf0_IWuBQ2uWh-0JtEZwu7OCrVO2B51bvTuXbB7lbYZvnpIJT8umBSjvoA0G78OnawY0tHtycAXedCx8UuY7DET3UQio-31vNOQQ0wKBVUlCw-9ASKX112ZKvbkzbp1ZP8MG-BgMoWJoqFdrErv2Nvo1pN85vTA

Although this account has no permissions yet, the command creates a secret in addition to the account. The output of kubectl get secret now contains an entry for the newly created testserviceaccount (Figure 3). Note that this automatic secret only exists up to Kubernetes 1.23; as of version 1.24, you request a token explicitly, for example with kubectl create token testserviceaccount.

Figure 3: With kubectl (the Microk8s variant is shown here), you can create a service account and query the secret.

Now take a closer look at the output of the second command in Listing 2. The entry below token: is used for authentication. If the Kubernetes dashboard is in use, you can copy this jumble of characters and use it as a token to log in. However, the user will still not see much in the dashboard because all of the permissions are missing. You will want to add the lines

[...]
users:
- name: testserviceaccount
  user:
    token: eyJh[...]
[...]

to ~/.kube/config in the section below the users: label – with the complete token, of course.

Accounts for Humans

Authenticating a human user with a certificate works in a similar way. First, generate a certificate signing request (CSR). It is important to store the username as a CommonName (CN) and map all the desired group memberships via organizations. With OpenSSL, this would be:

$ openssl req -new -keyout testuser.key -out testuser.csr -nodes -subj "/CN=testuser/O=app1"

Now sign the request with the CA certificate and the key of the Kubernetes cluster. If you use the ca command in OpenSSL, you will typically have to modify openssl.cnf to remove mandatory fields like country.

The user then packs the certificate, including the key, into their ~/.kube/config file. Instead of the token: element used for the service account, the user entry contains the client-certificate-data and client-key-data fields. They hold the Base64-encoded version of the certificate and private key files, which the YAML configuration file expects on a single line. If OpenSSL writes additional text output along with the certificate, only the PEM block from BEGIN CERTIFICATE to END CERTIFICATE, including these two marker lines, is relevant and needs to be packaged.
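The signing and packaging steps can be sketched as follows. This is an illustration under assumptions, not the only way to do it: It uses openssl x509 -req instead of the ca command, which avoids editing openssl.cnf, and it creates a throwaway CA (demo-ca.crt and demo-ca.key, both hypothetical names) so that the commands run anywhere. On a kubeadm cluster, you would sign with the cluster CA instead, which kubeadm stores by default in /etc/kubernetes/pki/ca.crt and /etc/kubernetes/pki/ca.key.

```shell
# Stand-in CA so the sketch runs without a cluster. On a kubeadm cluster,
# use /etc/kubernetes/pki/ca.crt and /etc/kubernetes/pki/ca.key instead.
openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
  -keyout demo-ca.key -out demo-ca.crt -subj "/CN=demo-ca" 2>/dev/null

# Key and signing request: CN is the username, O a group membership
openssl req -new -newkey rsa:2048 -nodes \
  -keyout testuser.key -out testuser.csr -subj "/CN=testuser/O=app1" 2>/dev/null

# Sign the request; x509 -req takes the subject over from the CSR
openssl x509 -req -in testuser.csr -CA demo-ca.crt -CAkey demo-ca.key \
  -CAcreateserial -days 365 -out testuser.crt 2>/dev/null

# Show the subject that Kubernetes will evaluate
openssl x509 -in testuser.crt -noout -subject

# Base64-encode the complete PEM files on a single line for the
# client-certificate-data and client-key-data fields
CERT_DATA=$(base64 < testuser.crt | tr -d '\n')
KEY_DATA=$(base64 < testuser.key | tr -d '\n')
printf 'client-certificate-data: %s\n' "$CERT_DATA"
printf 'client-key-data: <%d characters, keep secret>\n' "${#KEY_DATA}"
```

The two encoded strings go into the user entry of ~/.kube/config in place of the token: element shown for the service account.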

Roles and Authorization

Now two entities are accessing the cluster that have passed the first step of authentication mentioned at the beginning, but they do not yet have any rights in the cluster. To change this, you need to shift the configuration focus to the authorization section.

The first concept you need to look into here is the role, which describes the goal of an action. The attributes of a role include:

- the namespace in which the role acts,

- the function it performs (read, write, create something, and so on),

- the type of objects it accesses (pods, services), and

- the API groups to which it belongs and that extend the Kubernetes API.

Besides the normal role that applies within a namespace, the ClusterRole applies to the entire Kubernetes cluster. Kubernetes manages roles through the API (i.e., you can work at the command line with kubectl).

YAML files describe the roles. Listing 3 shows a role file that allows an entity to read active pods. If you want to create a cluster role, you instead need to enter ClusterRole in the kind field and delete the namespace entry from metadata.

Listing 3: Role as a YAML File

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""] # "" stands for the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]

Now, save the definition as pod-reader.yml and create the role by typing:

kubectl apply -f pod-reader.yml

Again, be sure to pay attention to the namespace in which you do this.
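The ClusterRole variant mentioned above differs from Listing 3 only in the kind field and the missing namespace entry. The following sketch writes such a definition to a file; the file name pod-reader-cluster.yml is an arbitrary choice for this example.

```shell
# Write a cluster-wide counterpart of the pod-reader role to a file
# (the file name is a hypothetical choice for this example)
cat > pod-reader-cluster.yml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  # no namespace entry here, because a ClusterRole is not namespaced
  name: pod-reader
rules:
- apiGroups: [""]        # "" stands for the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
EOF

cat pod-reader-cluster.yml
```

As with the namespaced role, kubectl apply -f pod-reader-cluster.yml would then create the object.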

The question still arises as to who is allowed to work with the rights of this role. Enter RoleBinding or ClusterRoleBinding, with which you can assign users and service accounts to existing roles.

In concrete terms, Kubernetes determines in the first step of an incoming API request whether or not the user is allowed to authenticate at all. If this is the case, it tries to assign a role to the user with RoleBinding. If this also works, Kubernetes finds out which rights the user has on the basis of the role found and then checks whether the request is within the permitted scope.

To link the previously created testserviceaccount and testuser with the pod-reader role, you create the RoleBinding shown in Listing 4. After creating the binding, testuser can finally read the pods in the default namespace with kubectl get pods.

Listing 4: RoleBinding Example

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: testuser
  apiGroup: rbac.authorization.k8s.io
- kind: ServiceAccount
  name: testserviceaccount
  namespace: default # required for ServiceAccount subjects
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

Role Behavior

If you want to manage what a user can access, you need to restrict the role. For example, some resources are arranged hierarchically. If testuser should only be able to access the log subresource of the pods resource, the entry under resources in the role definition in Listing 3 is "pods/log".

Once you have created your Kubernetes cluster with kubeadm, you will see a ClusterRole named cluster-admin that provides full access. If you assign this role to a user, the user has unrestricted access privileges.

To avoid a proliferation of individual roles, at least on the level of the entire cluster, you can use aggregated roles among ClusterRoles. In this case, the individual roles are assigned a label with a specific value. The aggregated role then gathers all roles that have a label with this value and thus forms a union of their rules. A ClusterRole created in this way can again be used in a ClusterRoleBinding, and a user assigned to the role then enjoys all the rights assigned to the role.
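A minimal sketch of such an aggregation, written to a file for later use with kubectl apply, could look like this. The role names monitoring and monitoring-pods, the label key rbac.example.com/aggregate-to-monitoring, and the file name are assumptions made for this example; only the aggregationRule mechanism itself comes from the RBAC API.

```shell
cat > aggregated-roles.yml <<'EOF'
# Aggregated role: collects the rules of every ClusterRole that carries
# the label below (label key and role names are hypothetical examples)
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: monitoring
aggregationRule:
  clusterRoleSelectors:
  - matchLabels:
      rbac.example.com/aggregate-to-monitoring: "true"
rules: []   # filled in automatically by the control plane
---
# Individual role that opts in to the aggregation through its label
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: monitoring-pods
  labels:
    rbac.example.com/aggregate-to-monitoring: "true"
rules:
- apiGroups: [""]
  resources: ["pods", "services"]
  verbs: ["get", "list", "watch"]
EOF

cat aggregated-roles.yml
```

Any ClusterRole that is later created with the same label is added to the union without touching the aggregated role or its bindings.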

In addition to the role-based authorization described above, Kubernetes supports further authorization modes. Attribute-based access control (ABAC) applies a more complicated set of rules that is defined in a policy file. The Node authorizer is a special-purpose mode for API access by kubelets and evaluates the requests by reference to the sender. In webhook mode, Kubernetes sends the attributes of each API request in JSON format to an external REST service, which then replies whether the request is allowed or denied.

Access Control

The last aspect is to control the content of a query. Kubernetes provides a long list of admission controllers that monitor and control very different things. Without changing the configuration, the controllers listed in the "Admission Controller" box are enabled.

The Kubernetes documentation contains a description stating which controller implements which logic and what the configuration looks like [4]. Some of the plugins require their own configuration files or a YAML block in the cluster configuration.

One interesting example is LimitRanger, which limits resources (e.g., CPU, memory usage) within a namespace. This plugin also modifies incoming requests by adding default values. This happens, for example, if a pod definition does not specify how much CPU or memory its containers request. In this way, the cluster admin can manage the extent to which resources are used by a customer or a namespace.
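LimitRanger takes its settings from LimitRange objects in the respective namespace. The following sketch writes one such object to a file; it mirrors the earlier example of a 1GB ceiling in the development namespace, whereas the object name, the default values, and the file name are assumptions for illustration.

```shell
cat > limitrange.yml <<'EOF'
apiVersion: v1
kind: LimitRange
metadata:
  name: mem-limits          # hypothetical name
  namespace: development
spec:
  limits:
  - type: Container
    defaultRequest:
      memory: 256Mi         # added if a container states no request
    default:
      memory: 512Mi         # added if a container states no limit
    max:
      memory: 1Gi           # containers asking for more are rejected
EOF

cat limitrange.yml
```

After kubectl apply -f limitrange.yml, a container in this namespace that asks for a 2Gi memory limit would be turned down at the admission stage, although authentication and authorization succeeded.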

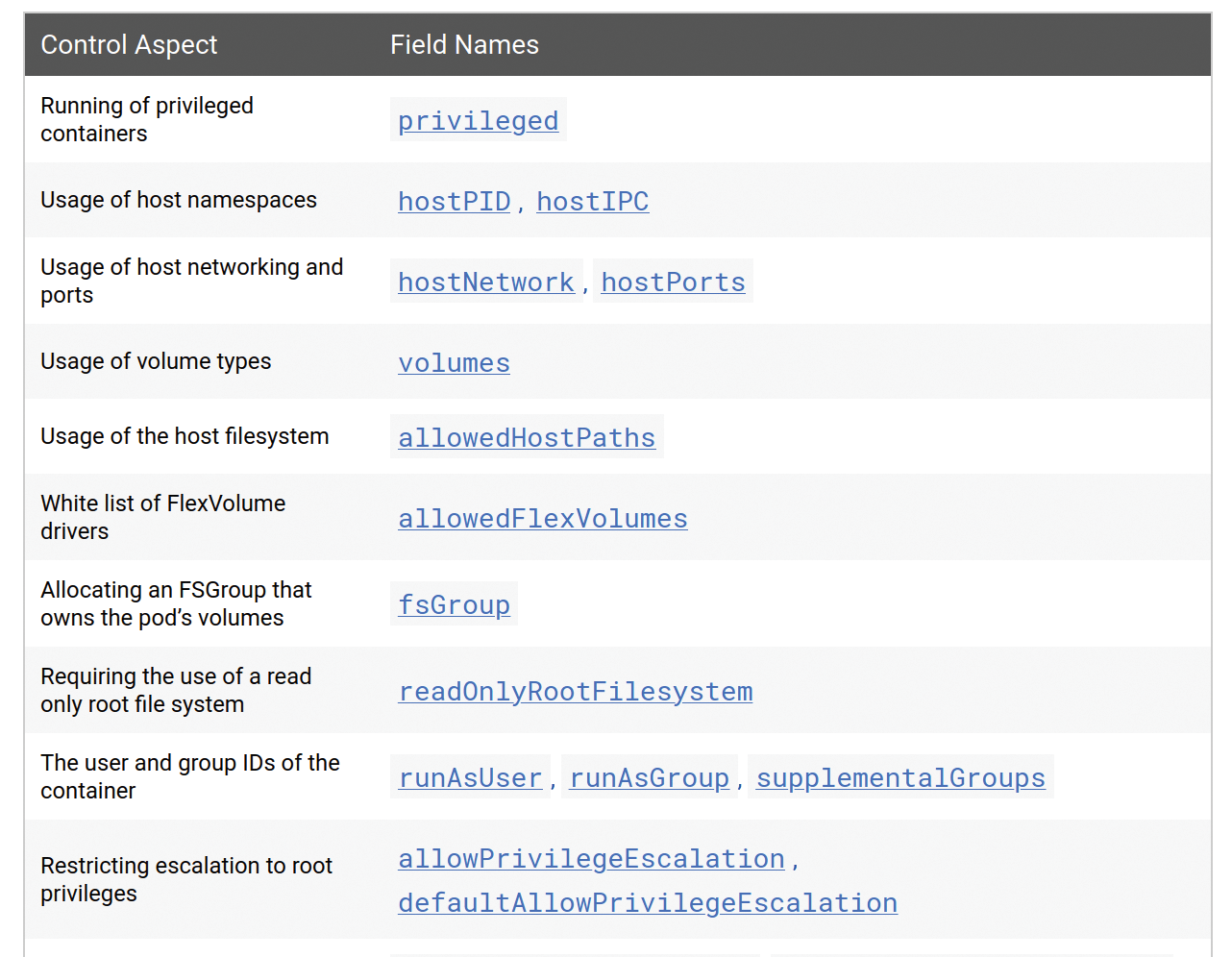

Optionally, an admission controller named PodSecurityPolicy can be added [5] that increases security by insisting that pods generated by replica sets and deployments comply with certain security policies. Among other things, it can restrict Linux capabilities, define SELinux contexts and AppArmor profiles for containers, or regulate the handling of privileges (Figure 4). Note that PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in version 1.25; newer clusters use the built-in Pod Security Admission controller instead.

Figure 4: The documentation for PodSecurityPolicy comprehensively explains the attributes linked to it.

Conclusions

Because Kubernetes makes all operations available through an API, it offers admins maximum flexibility. This pattern also continues in the security infrastructure. Rolling out even more complex environments with dedicated security configurations is a simple and unambiguous process controlled by YAML files, which, however, does not release the developer from having to think about how to use these possibilities sensibly. On the upside, it opens up an opportunity to set effective standards in a centralized location.

The access controls shown in the article only illustrate the first step toward establishing security. Cluster admins also need to pay attention to the integrity and security of the images used. Images can contain security holes and are a potential gateway for rootkits. Also, the possibilities for container users to escalate their privileges are a permanent security issue.

What happens in practice if the deployment of a rolled out project fails because of security settings? This situation is not much different from what has happened with other IT security components (e.g., firewalls) in the last 20 years. IT security managers must therefore familiarize themselves with what can be the complex security contexts of Kubernetes systems. The design allows for secure operations but does not relieve admins from the burden of thinking.