Kubernetes k3s lightweight distro

Smooth Operator

If commentators are to be believed, tech communities have warmly embraced another predictable and definable phase in the Internet's evolution, following the steady creep of Internet of Things (IoT) technologies. Apparently, the human race suddenly deems it necessary to connect anything that boasts a thermistor to the Internet 24 hours a day. Reportedly, somewhere in the region of a staggering 50 to 70 billion IoT devices will be in action by the end of 2020.

For example, according to one report [1], a water project in China includes a whopping 100,000 IoT sensors to monitor three separate 1,000km-long canals that will ultimately "divert 44.8 billion cubic metres of water annually from rivers in southern China and supply it to the arid north." The sensors were apparently installed to monitor for structural weaknesses (in a region with a history of earthquakes), scan water quality, and check water flow rates.

As you can imagine, the constant data streams fed 24/7 from that number of sensors need a seriously robust infrastructure management solution. Kubernetes (often abbreviated k8s by the cool kids) has historically faced the non-trivial challenge of delivering what might be considered production-grade clusters without beefy processing power and sizeable amounts of memory. With its automated failover, scalability, load balancing services to meet global traffic demands, and extensible framework suitable for distributed systems in cloud and data centers alike, Kubernetes has gained unparalleled popularity for managing containers. Built on 15 years of experience herding cat-like containers in busy production clusters at Google, it also has an unimpeachable pedigree.

In this article, I look at a Kubernetes implementation that is equally suitable for the network edge, for shoehorning into continuous integration and continuous deployment (CI/CD) pipeline tests, and for IoT appliances. The super-portable k3s [2] Kubernetes distribution is a lightweight answer to the capacity challenges that a fully blown installation usually brings and should keep on trucking all year round with little intervention.

We Won It Six Times

The Kubernetes-compliant k3s boasts a simple installation process that, according to the README file, requires "half the memory, all in a binary less than 40MB" to run. It was written, with a healthy degree of foresight, by the people at Rancher [3]. The GitHub page [4] notes that the components removed from a fully blown Kubernetes installation shouldn't cause any headaches. Much of what has been removed, they say, is older legacy code, alpha and experimental features, or non-default features.

As well as pruning many of the add-ons from a standard build (which can apparently be replaced with out-of-tree add-ons), the light k3s dutifully replaces the highly performant key-value store etcd [5] with a teeny, tiny SQLite3 database module that acts as the storage component. For context, the not-so-new Android smartphone on which I am writing this article uses an SQLite database. Chuck in a refined mechanism to handle the encrypted TLS communications required to run a Kubernetes cluster securely and a slim list of operating system dependencies, and k3s is built to be easy to deploy almost anywhere.

The k3s documentation [6] describes the project like this: "Simple but powerful 'batteries-included' features have been added, such as: a local storage provider, a service load balancer, a helm controller, and the Traefik ingress controller."

The marvelous k3s should apparently run on most Linux flavors but has been tested on Ubuntu 16.04 and 18.04 on AMD64 and Raspbian Buster on armhf. For reference, I'm using Linux Mint 19 based on Ubuntu 18.04 for my k3s cluster.

All the Things

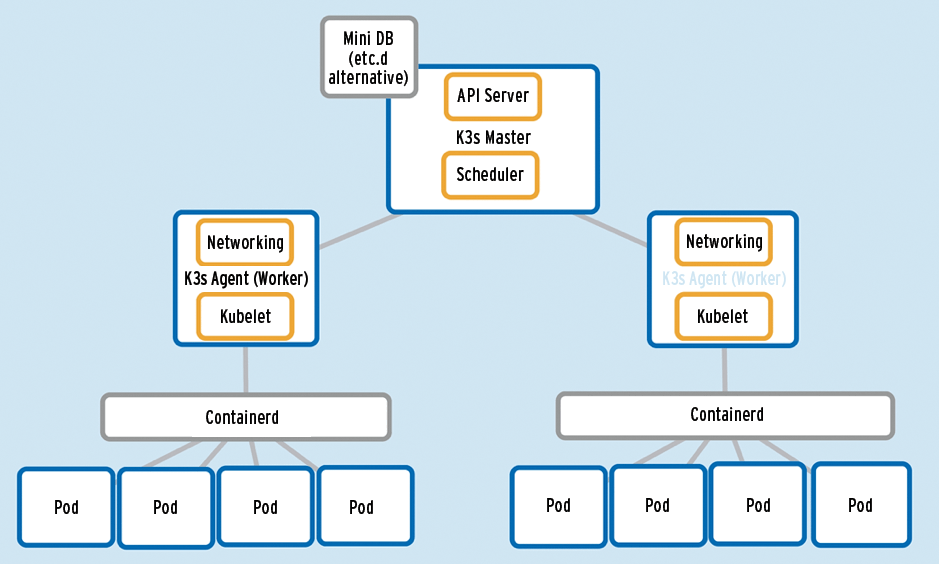

Figure 1 shows, from an architectural perspective, how a k3s cluster might fit together, along with the slight changes in naming conventions you'll notice if you are familiar with Kubernetes. For example, the nodes now commonly called Worker nodes are called Agents, and the Master node is referred to as a k3s Server. Not much else is different. As you'd expect, most of the usual elements are present: the controller, the scheduler, and the API server.

All Good Things

The docs give you a tantalizing, single command line to download, install, and run k3s:

$ curl -sfL https://get.k3s.io | sh -

With that command, the downloaded script is piped straight into an sh shell and run. From my DevOps security perspective, I'm not a fan of being quite so cavalier and trusting software I'm not familiar with. Therefore, I would remove the | sh - pipe and see what's being downloaded first. The -s switch makes curl silent, -f makes it fail quietly on HTTP errors (rather than printing the server's error page), and -L makes it follow redirects.

If you run the command without the shell pipe as suggested and instead redirect the curl output to a file,

$ curl -sfL https://get.k3s.io > install-script.sh

you get a good idea of what k3s is trying to do. In broad strokes, after skimming through the helpful comments at the top of the file, you will see a number of configurable install options, such as stopping k3s from automatically starting up as a service, altering which GitHub repository to check for releases, and not downloading the k3s binary again if it's already available. At more than 700 lines, the script is well worth a quick once-over to get a better idea of what's going on behind the scenes.
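If you'd like a quick inventory of those switches before reading the whole script, a small helper can pull them out. This is a minimal sketch, assuming the options follow the INSTALL_K3S_ naming prefix used in the get.k3s.io script at the time of writing (check your own copy); the function name list_install_options is hypothetical.

```bash
# List the unique INSTALL_K3S_* option names mentioned in a downloaded
# install script, so you can review them before running anything.
# Assumption: options share the INSTALL_K3S_ prefix; verify in your copy.
list_install_options() {
    local script="${1:-install-script.sh}"
    # -o prints only the matched text; LC_ALL=C keeps [A-Z] a plain ASCII range
    LC_ALL=C grep -o 'INSTALL_K3S_[A-Z_]*' "$script" | LC_ALL=C sort -u
}

# Usage: list_install_options install-script.sh
```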

William Tell or Agent Cooper to Boot

It's time to get your hands much dirtier now and delve into the innards of k3s. To begin, fire up your k3s cluster by running the script above. Either put the appended pipe and sh section back at the end of the command or type:

$ chmod +x install-script.sh
$ sudo ./install-script.sh

You will need to be root (or use sudo), because the script writes to /usr/local/bin and /etc/systemd/system, as Listing 1 shows.

The trailing dash after sh in the original command marks the end of the shell's own options, so the shell simply reads the script from standard input and nothing that follows is mistaken for a shell option. Listing 1 shows what happens after running the install script. The output is nice, clean, and easy to follow.

Listing 1: Installing k3s

[INFO] Finding latest release
[INFO] Using v1.0.0 as release
[INFO] Downloading hash https://github.com/rancher/k3s/releases/download/v1.0.0/sha256sum-amd64.txt
[INFO] Downloading binary https://github.com/rancher/k3s/releases/download/v1.0.0/k3s
[INFO] Verifying binary download
[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Skipping /usr/local/bin/ctr symlink to k3s, command exists in PATH at /usr/bin/ctr
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s.service
[INFO] systemd: Enabling k3s unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.
[INFO] systemd: Starting k3s

The documentation notes that as part of the installation process, the /etc/rancher/k3s/k3s.yaml config file is created. In that file, you can see a certificate authority (CA) entry to keep internal communications honest and trustworthy across the cluster, along with a set of admin credentials.
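If you want to point other tools (or a kubectl on another machine) at the cluster, the usual route is the KUBECONFIG environment variable; the k3s docs suggest copying k3s.yaml elsewhere and replacing its 127.0.0.1 server address with the Server's own address. The helper below, kubeconfig_server (a hypothetical name), is a rough sketch that prints the API server URL recorded in such a file. It uses plain text matching rather than a real YAML parser, so treat it as a quick check only.

```bash
# Print the API server URL(s) recorded in a kubeconfig file.
# Plain text matching on "server:" lines, not a full YAML parser.
kubeconfig_server() {
    local cfg="${1:-/etc/rancher/k3s/k3s.yaml}"
    awk '$1 == "server:" { print $2 }' "$cfg"
}

# Usage (reading the default file may require root):
#   kubeconfig_server /etc/rancher/k3s/k3s.yaml
```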

So that you don't have to install client-side binaries, k3s also provides kubectl locally (Listing 1 shows it is simply a symlink to the k3s binary), which is a nice time-saving touch. At this point, you're suitably encouraged to see whether the magical one-liner really did create a working Kubernetes build by running the command:

$ kubectl get pods --all-namespaces

Although you have connected to the API server, as you can see in Listing 2, some of the pods are failing to run correctly with CrashLoopBackOff errors. Troubleshooting these errors will give you more insight into what might go wrong with Kubernetes and k3s.

Listing 2: Failing Pods (Abridged)

NAMESPACE     NAME                                READY   STATUS             RESTARTS   AGE
kube-system   coredns-d798c9dd-h25s2              0/1     Running            0          10m
kube-system   metrics-server-6d684c7b5-b2r8w      0/1     CrashLoopBackOff   6          10m
kube-system   local-path-provisioner-58fb86bdfd   0/1     CrashLoopBackOff   6          10m
kube-system   helm-install-traefik-rjcwp          0/1     CrashLoopBackOff   6          10m
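On a busier cluster, picking the unhappy pods out of that table by eye gets tedious. The following sketch (the function name unhealthy_pods is hypothetical) reads the output of kubectl get pods --all-namespaces on standard input and prints every pod that is neither Completed nor fully ready, assuming the default column order shown in Listing 2.

```bash
# Read `kubectl get pods --all-namespaces` output on stdin and print
# NAMESPACE/NAME for each pod that is not Completed and is either not
# Running or has fewer ready containers than expected.
unhealthy_pods() {
    awk 'NR > 1 {
        split($3, r, "/")                  # READY column, e.g. 0/1
        if ($4 != "Completed" && ($4 != "Running" || r[1] != r[2]))
            print $1 "/" $2
    }'
}

# Usage: kubectl get pods --all-namespaces | unhealthy_pods
# Then dig deeper with kubectl describe pod -n <namespace> <name>
# and kubectl logs -n <namespace> <name>.
```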

A cluster by definition is more than one node, and so far you've only created one node. To check how many are available, enter

$ kubectl get nodes
NAME   STATUS   ROLES    AGE   VERSION
kilo   Ready    master   15m   v1.16.3-k3s.2

You can also check the cluster's events. As Listing 3 shows, not much is going on in the default namespace. However, by checking all Kubernetes namespaces for events with the command

$ kubectl get events --all-namespaces

you can see lots of useful information.

Listing 3: Cluster Events

$ kubectl get events
LAST SEEN   TYPE      REASON                    OBJECT      MESSAGE
16m         Normal    Starting                  node/kilo   Starting kubelet.
16m         Warning   InvalidDiskCapacity       node/kilo   invalid capacity 0 on image filesystem
16m         Normal    NodeHasSufficientMemory   node/kilo   Node kilo status is now: NodeHasSufficientMemory
16m         Normal    NodeHasNoDiskPressure     node/kilo   Node kilo status is now: NodeHasNoDiskPressure
16m         Normal    NodeHasSufficientPID      node/kilo   Node kilo status is now: NodeHasSufficientPID
16m         Normal    NodeAllocatableEnforced   node/kilo   Updated Node Allocatable limit across pods
16m         Normal    Starting                  node/kilo   Starting kube-proxy.
16m         Normal    NodeReady                 node/kilo   Node kilo status is now: NodeReady
16m         Normal    RegisteredNode            node/kilo   Node kilo event: Registered Node kilo in Controller
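Most events are Normal, so the interesting ones are the Warning rows. kubectl can filter those server-side with --field-selector type=Warning; if you already have the table saved or piped, the sketch below (warning_events is a hypothetical name) prints the REASON and OBJECT of each Warning row. It assumes the default column layout, with or without the extra NAMESPACE column that --all-namespaces adds.

```bash
# Read `kubectl get events` output on stdin and print "REASON OBJECT"
# for every Warning event. Handles both the plain layout and the layout
# with a leading NAMESPACE column.
warning_events() {
    awk 'NR > 1 {
        if ($2 == "Warning")      print $3, $4   # kubectl get events
        else if ($3 == "Warning") print $4, $5   # with --all-namespaces
    }'
}

# Usage: kubectl get events --all-namespaces | warning_events
```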

To debug any containerd [7] run-time issues, look at the logfile in Listing 4, which shows some heavily abbreviated sample entries with some of the errors. You can also check the verbose logging in the Syslog file, /var/log/syslog, or on Red Hat Enterprise Linux derivatives, /var/log/messages. To check your systemd service status, enter:

$ systemctl status k3s

Listing 4: Kubernetes Namespace Errors

$ less /var/lib/rancher/k3s/agent/containerd/containerd.log
BackOff pod/helm-install-traefik-rjcwp Back-off restarting failed container
pod/coredns-d798c9dd-h25s2 Readiness probe failed: HTTP probe failed with statuscode: 503
BackOff pod/local-path-provisioner-58fb86bdfd-2zqn2 Back-off restarting failed container

After looking at the output from the command

$ iptables -nvL

you might discover (hand in hand with reading about a cluster networking problem on GitHub [8]) that firewalld is probably responsible for breaking internal networking. A simple fix is first to check whether firewalld is running:

$ systemctl status firewalld

To stop it running, you use:

$ systemctl stop firewalld
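Stopping the service only lasts until the next reboot; systemctl disable keeps it from starting at boot as well. The sketch below wraps both steps behind a systemctl is-active check. The function name and the SYSTEMCTL override (which lets you substitute echo for a dry run) are my own illustrative additions.

```bash
# Stop firewalld now and keep it from starting at boot, but only if it
# is currently active. SYSTEMCTL is an illustrative override: set it to
# "echo" to print the commands instead of running them.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

disable_firewalld() {
    if $SYSTEMCTL is-active --quiet firewalld; then
        $SYSTEMCTL stop firewalld
        $SYSTEMCTL disable firewalld
    else
        echo "firewalld is not active; nothing to do"
    fi
}

# Usage (as root): disable_firewalld
```

On anything longer-lived than a test box, you may prefer to open the required ports in firewalld rather than switching it off.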

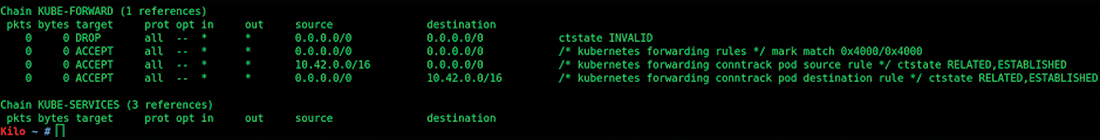

That firewalld and the iptables rules Kubernetes relies on don't play nicely together is relatively well known (in this case, the ACCEPT entries in Figure 2 would show as REJECT before stopping firewalld). While firewalld is running, traffic won't be forwarded correctly by the KUBE-FORWARD chain that Kubernetes adds to iptables. Once firewalld is out of the way, the pods that should be running come up, as Listing 5 shows.

Listing 5: All Pods Running

NAMESPACE     NAME                                      READY   STATUS      RESTARTS   AGE
kube-system   local-path-provisioner-58fb86bdfd-bvphm   1/1     Running     0          65s
kube-system   metrics-server-6d684c7b5-rrwpl            1/1     Running     0          65s
kube-system   coredns-d798c9dd-ffzxf                    1/1     Running     0          65s
kube-system   helm-install-traefik-qfwfj                0/1     Completed   0          65s
kube-system   svclb-traefik-sj589                       3/3     Running     0          31s
kube-system   traefik-65bccdc4bd-dctwx                  1/1     Running     0          31s

Ain't No Thing

Now that you have a happy cluster, install a pod to make sure Kubernetes is running as expected. The command to install a BusyBox pod is:

$ kubectl apply -f https://k8s.io/examples/admin/dns/busybox.yaml
pod/busybox created

As you can see from the output, k3s accepts standard Kubernetes YAML manifests (Listing 6 shows the file's contents). If you check the default namespace, you can see the pod is running (Listing 7).

Listing 6: BusyBox YAML

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always

Listing 7: BusyBox Running

$ kubectl get pods -n default
NAME      READY   STATUS    RESTARTS   AGE
busybox   1/1     Running   0          2m14s
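The busybox.yaml manifest comes from the Kubernetes DNS-debugging guide, where the pod is used to run nslookup against the cluster's DNS. A quick sketch of that check follows: wait for the pod to become Ready, then resolve the built-in kubernetes.default service name through CoreDNS. The function name and the KUBECTL override (set it to echo for a dry run) are illustrative assumptions.

```bash
# Wait for the BusyBox pod to be Ready, then resolve the cluster's API
# service name from inside it. KUBECTL is an illustrative override.
KUBECTL="${KUBECTL:-kubectl}"

smoke_test_busybox() {
    local ns="${1:-default}"
    $KUBECTL wait --for=condition=Ready pod/busybox -n "$ns" --timeout=60s &&
        $KUBECTL exec -n "$ns" busybox -- nslookup kubernetes.default
}

# Usage: smoke_test_busybox default
```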

Excellent! It looks like the cluster is able to handle workloads, as hoped. Of course, the next step is to add some Agent (Worker) nodes to the k3s Server (Master) to run more than just a single-node cluster.

Two's Company

Look back at Figure 1 for an example of a standard configuration with two Agent nodes and a main Server node, and consider how software as slick as k3s might make life easier when it comes to adding Agent nodes to your cluster. I'll illustrate this surprisingly simple process with a single Master node and a single Agent in Amazon Web Services (AWS) [9].

The process so far has set up a Master node, which helps create the intricate security settings and certificates for a cluster. Suitably armed, you can now look inside the file /var/lib/rancher/k3s/server/node-token to glean the API token and insert it into the command that will be run on the Agent node. Essentially, the command simply tells k3s to enter Agent mode and not become a Master node:

$ k3s agent --server https://${MASTER}:6443 --token ${TOKEN}

On a freshly built node (which I'll return to later), it's even quicker:

$ curl -sfL https://get.k3s.io | K3S_URL=https://${MASTER}:6443 K3S_TOKEN=${TOKEN} sh -
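To save copying the token by hand, you could generate the two variable assignments on the Server. This is a minimal sketch: the function name agent_join_env is hypothetical, it assumes the Server listens on the default port 6443, and reading the node-token file normally requires root.

```bash
# Print the K3S_URL and K3S_TOKEN assignments an Agent needs, reading the
# join token from the Server's node-token file. Returns non-zero if the
# file cannot be read.
agent_join_env() {
    local master="$1"
    local token_file="${2:-/var/lib/rancher/k3s/server/node-token}"
    local token
    token="$(cat "$token_file")" || return 1
    printf 'K3S_URL=https://%s:6443 K3S_TOKEN=%s\n' "$master" "$token"
}

# Usage (on the Server, as root): agent_join_env 172.30.2.181
# Paste the printed assignments in front of "sh -" in the Agent one-liner.
```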

I have some Terraform code [10] that will set up two instances, creating them in the same virtual private cloud (VPC). To keep things simple, don't worry about how the instances are created; instead, focus on the k3s aspects. For testing purposes, you could hand-crank the instances, too.

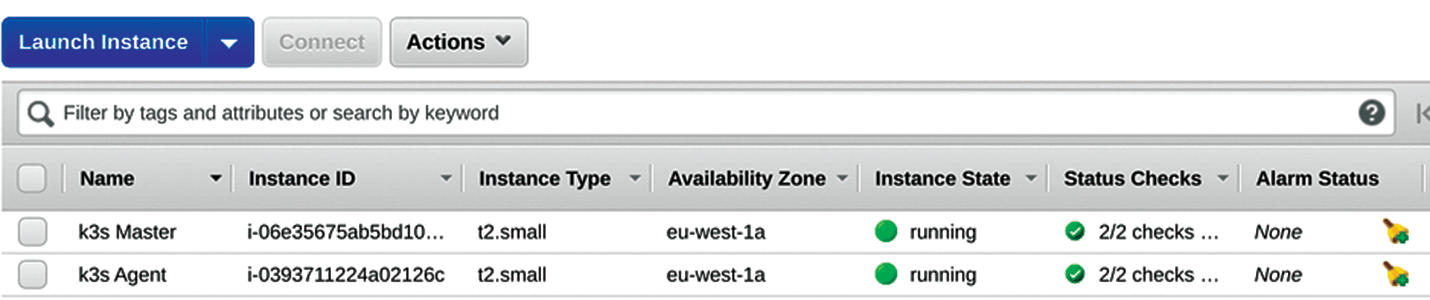

As mentioned earlier, I've gone for two Ubuntu 18.04 (Bionic) instances. Additionally, I'll use the same key pair for both, put the instances in a VPC of their own, and configure a public IP address for each (so I can quickly access them with SSH). After a quick check of which packages need updating and an apt upgrade -y command on each instance, followed by a reboot, I'm confident that each instance has a sane environment on which k3s can run (Figure 3).

For the Master node, you again turn to the faithful installation one-liner:

$ curl -sfL https://get.k3s.io | sh -

After 30 seconds or so, you have the same output as seen in Listing 1.

Now, check how many nodes are present in the, ahem, lonely one-machine cluster (Listing 8). As expected, the role is displayed as master. Next, check the API token needed for the Agent node's configuration in /var/lib/rancher/k3s/server/node-token (see also the "API Token" box). I can display the entire unredacted contents of that file with impunity, because the test lab was torn down a few minutes later:

$ cat /var/lib/rancher/k3s/server/node-token
K10fc63f5c9923fc0b5b377cac1432ca2a4daa0b8ebb2ed1df6c2b63df13b092002::server:bf7e806276f76d4bc00fdbf1b27ab921

Listing 8: A One-Machine Cluster

$ kubectl get nodes
NAME              STATUS   ROLES    AGE   VERSION
ip-172-30-2-181   Ready    master   27s   v1.16.3-k3s.2
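The token isn't just an opaque string. It appears to follow the layout K10<CA hash>::<user>:<password>, which later k3s documentation describes as the "secure" token format, where the CA hash lets a joining node check the cluster's certificate authority. Treat that layout as an assumption for the version used here; the sketch below (parse_node_token is a hypothetical name) simply splits a token into those three parts with shell parameter expansion.

```bash
# Split a k3s node token of the form K10<ca-hash>::<user>:<password>.
# The layout is an assumption based on the k3s "secure token" format.
parse_node_token() {
    local token="$1"
    local ca_hash="${token%%::*}"   # everything before the first "::"
    local creds="${token#*::}"      # everything after the first "::"
    ca_hash="${ca_hash#K10}"        # drop the K10 prefix
    printf 'ca_hash=%s\nuser=%s\npassword=%s\n' \
        "$ca_hash" "${creds%%:*}" "${creds#*:}"
}

# Usage: parse_node_token "$(cat /var/lib/rancher/k3s/server/node-token)"
```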

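Before leaving the Master node, look up its private IP address:

$ ip a

The address you want is on the instance's primary network interface, not on the cni0 bridge that k3s creates for pod traffic (by default, pod addresses come from the 10.42.0.0/16 range). Carefully take a note of that IP address. In my case, it was 172.30.2.181/24; only the 172.30.2.181 part is needed later.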
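To avoid picking the wrong line out of ip a, you can filter the one-line IPv4 listing. This sketch (filter_host_ipv4 is a hypothetical name) assumes the iproute2 ip -4 -o addr show format and that k3s's own interfaces carry their default names with the bundled Flannel networking, cni0 and flannel.1; it drops those and the loopback interface.

```bash
# Read `ip -4 -o addr show` output on stdin and print "interface address"
# for every IPv4 address except loopback and the k3s pod-network
# interfaces (cni0 and flannel.*).
filter_host_ipv4() {
    awk '$2 != "lo" && $2 != "cni0" && $2 !~ /^flannel/ { print $2, $4 }'
}

# Usage: ip -4 -o addr show | filter_host_ipv4
```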

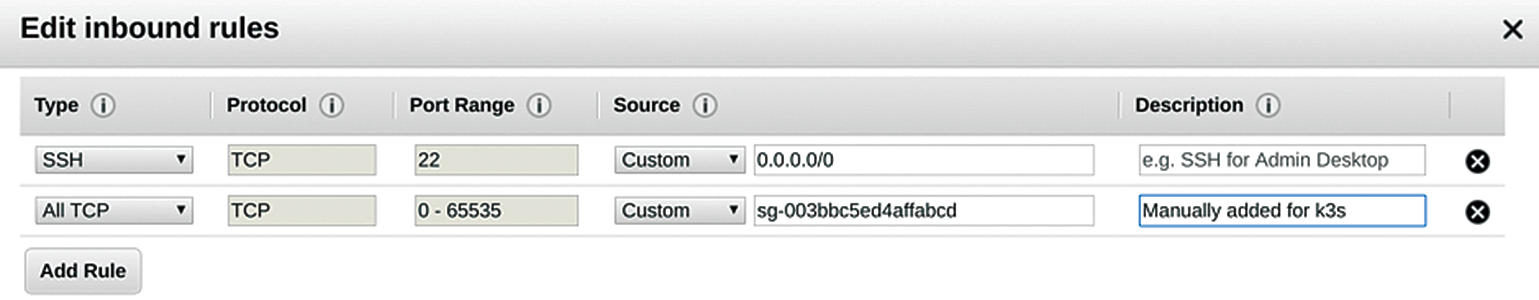

The standard security groups (SGs) in AWS only open TCP port 22, so I've made sure that any instances in that newly baked VPC can talk to each other. As you will see in a later command, the Agent registers with the Server over TCP port 6443; the k3s docs also list UDP port 8472 for Flannel's VXLAN traffic between nodes and TCP port 10250 for kubelet metrics. Figure 4 shows what I've added to the SG (the SG self-references here, so anything in the VPC can talk to everything else over the prescribed ports).
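Before installing the Agent, it's worth confirming that the Server's API port is reachable through the SG. A minimal sketch using bash's /dev/tcp pseudo-device and the coreutils timeout command follows (port_open is a hypothetical name). It only covers TCP; UDP is connectionless, so this trick can't confirm that port 8472 is open.

```bash
# Succeed if a TCP connection to HOST:PORT opens within three seconds.
# Relies on bash's /dev/tcp redirection and coreutils `timeout`.
port_open() {
    local host="$1" port="$2"
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Usage (on the Agent): port_open "$MASTER" 6443 && echo "API port reachable"
```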

Now, jump over to the Agent node:

$ ssh -i k3s-key.pem ubuntu@34.XXX.XXX.XXX # Agent node over public IP address

I'll reuse the command I looked at a little earlier. This time, however, I will populate the slightly longer command used for Agents with two environment variables. Adjust these to suit your needs and then run the commands:

$ export MASTER="172.30.2.181" # Private IP Address
$ export TOKEN="K10fc63f5c9923fc0b5b377cac1432ca2a4daa0b8ebb2ed1df6c2b63df13b092002::server:bf7e806276f76d4bc00fdbf1b27ab921"

Finally, you're ready to install your agent with a command that pulls in your environment variables:

$ curl -sfL https://get.k3s.io | K3S_URL=https://${MASTER}:6443 K3S_TOKEN=${TOKEN} sh -

In Listing 9, you can see in the tail end of the installation output where k3s acknowledges Agent mode. Because the K3S_URL variable has been passed to it, the Agent mode has been enabled. However, to test that the Agent node's k3s service is running as expected, it's wise to refer to systemd again:

$ systemctl status k3s-agent

Listing 9: Agent Mode Enabled

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service
[INFO] systemd: Enabling k3s-agent unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.
[INFO] systemd: Starting k3s-agent

The output you're hoping for, right at the end of the log, is kube.go:124] Node controller sync successful.
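If you'd rather not scan the status output by eye, you can search the unit's journal instead. A minimal sketch, assuming the Agent runs under the k3s-agent systemd unit created in Listing 9 and logs the message quoted above; agent_synced is a hypothetical name.

```bash
# Succeed if the log text on stdin contains the node controller sync
# message that signals a healthy Agent.
agent_synced() {
    grep -q 'Node controller sync successful'
}

# Usage: journalctl -u k3s-agent --no-pager | agent_synced && echo "Agent synced"
```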

Now for the proof of the pudding: Log back in to your Master node and see if the cluster has more than one member (Listing 10). As you can see, all is well. You can add workloads to the Agent node and, if you want, also chuck another couple of Agent nodes into the pool to help load balance applications and add some resilience.

Listing 10: State of the Cluster

$ kubectl get nodes
NAME              STATUS   ROLES    AGE   VERSION
ip-172-30-2-181   Ready    master   45m   v1.16.3-k3s.2
ip-172-30-2-174   Ready    <none>   11m   v1.16.3-k3s.2

Here, <none> in the ROLES column (rather than worker or agent) is the default for Agent nodes [11].
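kubectl derives the ROLES column from node labels with the node-role.kubernetes.io/ prefix, so you can give Agents a friendlier role by labeling them. The sketch below (nodes_without_role is a hypothetical name) lists the nodes still showing <none>, assuming the default kubectl get nodes column order; the label value worker in the usage line is simply a common convention.

```bash
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose ROLES column is <none>.
nodes_without_role() {
    awk 'NR > 1 && $3 == "<none>" { print $1 }'
}

# Usage:
#   kubectl get nodes | nodes_without_role | while read -r node; do
#       kubectl label node "$node" node-role.kubernetes.io/worker=worker
#   done
```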

The End Is Nigh

I hope your appetite has been suitably whetted. I can't help but think k3s is going to change the way I test Kubernetes.

Being a Linux fan, I often find myself telling non-technical people that most of their televisions, broadband routers, and smartphones use Linux (never mind spacecraft and space stations), and I wonder how long it will be before I'm saying that about embedded versions of Kubernetes.

If you run into problems with k3s, you'll find detailed, well-presented docs at the main website [6]. After testing, you can uninstall k3s with the /usr/local/bin/k3s-uninstall.sh script (on Agent nodes, the installer creates /usr/local/bin/k3s-agent-uninstall.sh instead). The output from the script is nice and clear.
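Because Servers and Agents get differently named uninstall scripts, a tiny helper can find whichever one is present on a machine. This is a sketch; the function name k3s_uninstall_script is hypothetical, and the optional directory argument exists only so the default /usr/local/bin location (as in Listing 1) can be overridden.

```bash
# Print the path of the k3s uninstall script present on this machine:
# k3s-uninstall.sh on a Server, k3s-agent-uninstall.sh on an Agent.
# Returns non-zero if neither executable script is found.
k3s_uninstall_script() {
    local bin_dir="${1:-/usr/local/bin}"
    local s
    for s in k3s-uninstall.sh k3s-agent-uninstall.sh; do
        if [ -x "$bin_dir/$s" ]; then
            echo "$bin_dir/$s"
            return 0
        fi
    done
    return 1
}

# Usage: script="$(k3s_uninstall_script)" && sudo "$script"
```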

Lest you forget the venerable Docker, the docs state: "K3s includes and defaults to containerd …. If you want to use Docker instead of containerd then you simply need to run the agent with the --docker flag" [12].

You can see the Docker run time in action (assuming Docker is already installed on the node) with:

$ k3s agent --server https://${MASTER}:6443 --token ${TOKEN} --docker &

The next time you have your dog chipped or your washing machine does a software update to change the tune it plays after finishing a load of washing, consider where the IoT evolution is taking us and exactly what part you and the technology you use might play in it.