Setting up DevOps Orchestration Platform

Framed

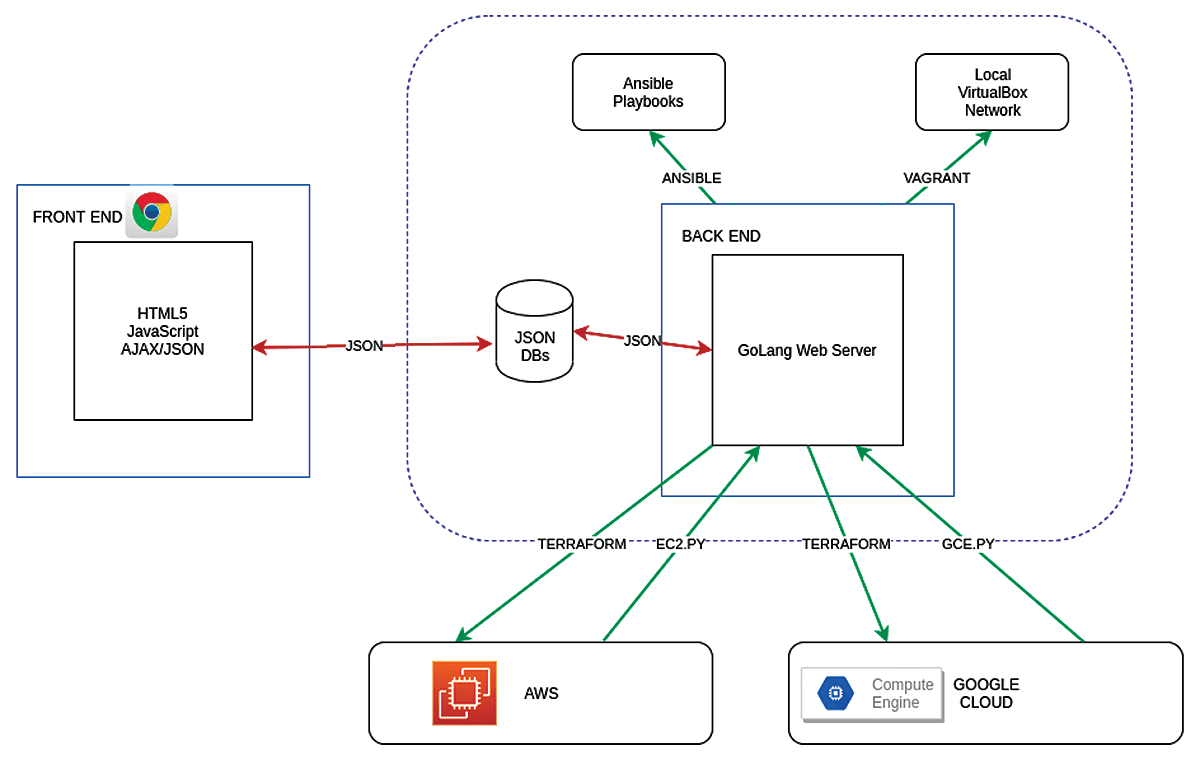

DevOps Orchestration Platform [1] is a web-server-based software platform and framework that implements an abstraction layer above Terraform [2] and Ansible [3], including the utilization of Ansible EC2.py and GCE.py scripts (Figure 1). It employs other open source technologies such as GoTTY and various JavaScript libraries and allows you to control security at two levels from the GUI: cloud hypervisor level (AWS Security Groups and Google Cloud Platform Firewall) and operating system platform level (iptables).

The platform currently supports a local VirtualBox network, Amazon Web Services (AWS), and Google Cloud Platform (GCP). The tool can run Ansible playbooks of various applications for installation on the deployed or imported infrastructure. Many sample Ansible playbooks have already been provided, forming a basis on which to extend the framework.

A Docker module allows you to install the Docker daemon and client on arbitrary virtual machine (VM) instances and use the same Ansible playbooks to deploy software into Docker containers before committing the changes into a Docker image (baking) that can be pushed to a Sonatype Nexus 3 Docker registry. Nexus 3 itself can be installed on an instance from the DevOps Orchestration Platform GUI without running a single command or editing a single configuration file.

For this article, I assume you have already installed the components and versions shown in the "Technologies" box, with the necessary paths and Linux environment variables configured. I also assume you have an account with the cloud provider you want to use (AWS or GCP), with API keys already generated so that you can fully deploy instances and configure security groups and subnets.

For a list of current restrictions and limitations, see the "Limitations" box. In a later section, I describe how the framework can be extended to overcome some or all of these limitations. Note that the current limitations would not prevent you from going into the Google Cloud or AWS console and making changes or additions, manually or by API scripts, to components such as virtual private clouds (VPCs), routing tables, subnets, and network access control lists (NACLs).

User Guide

After ensuring that the indicated versions of Golang and the other software mentioned are installed and accessible through your path environment variables, change to the webserver directory under the top-level directory and build the web server:

cd webserver
./build_and_run.sh

Now, point your web browser (note that only Firefox and Chrome are supported) to http://localhost:6543.

Deploying the Infrastructure

The first task is to decide whether to deploy on a local VirtualBox network, in AWS, or in Google Cloud. If you use AWS or Google Cloud, the next step is to configure your keys. Select AWS or Google Cloud in the left navigation bar and store the keys, which you will have obtained from the AWS or Google Cloud console. Alternatively, you can import AWS keys from environment variables by pressing the corresponding button.

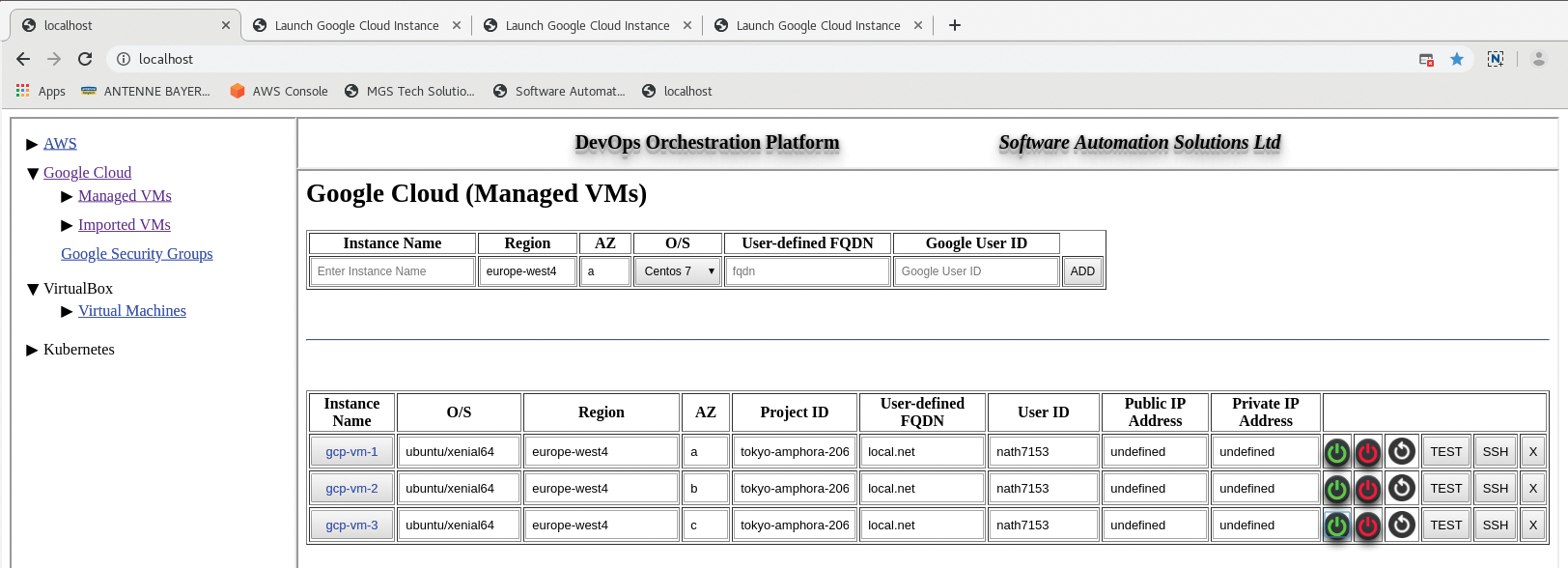

Instances on AWS or Google Cloud can be launched, or you can import details of existing instances. Importing existing instances uses the API keys to execute Ansible EC2.py and GCE.py scripts, which are dynamically populated as templates and then run, allowing the interrogation of details about existing cloud resources. Only basic details are imported (e.g., public and private IP addresses and subnets), because only the default VPCs are currently supported (Figure 2).

New instances are launched by dynamically populating Terraform templates. The templates and details are abstracted from the user, and the functionality runs "behind the scenes"; you only have to use the GUI. Details of new instances and subnets are added from the top table, where you choose the instance name, region, availability zone, and Ubuntu or CentOS operating system. The new instance then appears in the lower table.
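The idea of populating a Terraform template from GUI input can be pictured with a short sketch. It is illustrative Python, not the platform's Golang code: the template text, the `render_instance` helper, and the sample values are hypothetical, and only the `aws_instance` arguments (`ami`, `instance_type`, `availability_zone`) are standard Terraform.

```python
from string import Template

# Hypothetical template text; the platform's real Terraform templates
# are not reproduced in this article.
INSTANCE_TEMPLATE = Template(
    'resource "aws_instance" "$name" {\n'
    '  ami               = "$ami"\n'
    '  instance_type     = "$instance_type"\n'
    '  availability_zone = "$availability_zone"\n'
    '}\n'
)


def render_instance(fields):
    """Fill the template from a dict of values entered in the GUI.

    substitute() raises KeyError when a placeholder has no value, so an
    incomplete form never yields a half-populated Terraform file.
    """
    return INSTANCE_TEMPLATE.substitute(fields)


if __name__ == "__main__":
    # The AMI ID is a placeholder, not a real image.
    print(render_instance({
        "name": "aws_vm_1",
        "ami": "ami-00000000",
        "instance_type": "t2.micro",
        "availability_zone": "eu-west-1a",
    }))
```

Failing loudly on a missing field is a choice made for this sketch; the platform may validate input differently.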

In the case of Google Cloud, you must choose a user-defined fully qualified domain name (FQDN) and provide the user ID for the Google Cloud user account. (The FQDN is arbitrary but can be used in the case of a private BIND9 server deployment.) The buttons in each added instance row are self-explanatory.

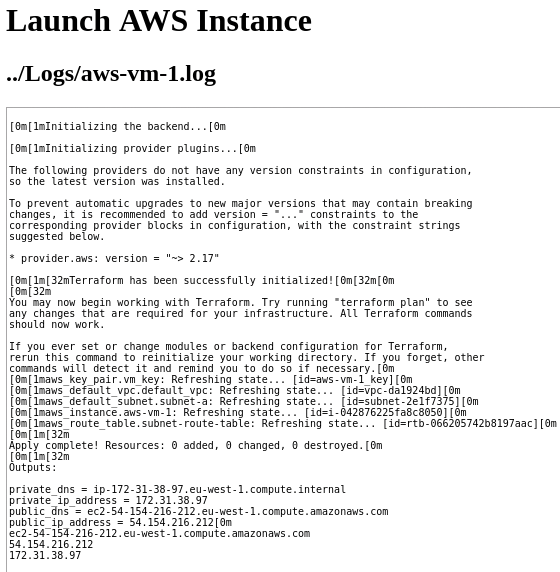

After the Terraform log run has completed, clicking TEST (Figure 3) makes the platform interrogate the Terraform output (Figure 4) and pull the variables into the system. The results (public and private IP addresses) appear on each row.
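As a rough illustration of what pulling variables out of the Terraform output involves, the sketch below parses text in the shape produced by `terraform output -json`, where each output is wrapped in an object with a `value` key. Whether the platform uses the JSON form is an assumption, and the output names `public_ip` and `private_ip` are hypothetical.

```python
import json


def extract_outputs(terraform_output_json):
    """Map each Terraform output name to its plain value.

    `terraform output -json` wraps every output in an object holding
    "sensitive", "type", and "value"; only the value is kept here.
    """
    raw = json.loads(terraform_output_json)
    return {name: entry["value"] for name, entry in raw.items()}


# Sample text in the shape of `terraform output -json`.
# The output names and addresses are made up for illustration.
SAMPLE = """
{
  "public_ip":  {"sensitive": false, "type": "string", "value": "203.0.113.10"},
  "private_ip": {"sensitive": false, "type": "string", "value": "172.31.5.20"}
}
"""

if __name__ == "__main__":
    for name, value in extract_outputs(SAMPLE).items():
        print(name, value)
```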

VirtualBox instances can be deployed into a local VirtualBox network by a mechanism hidden from the user: dynamic population of Vagrant files. Again, you need not edit the Vagrant files. Select VirtualBox in the left navigation column to use this option.

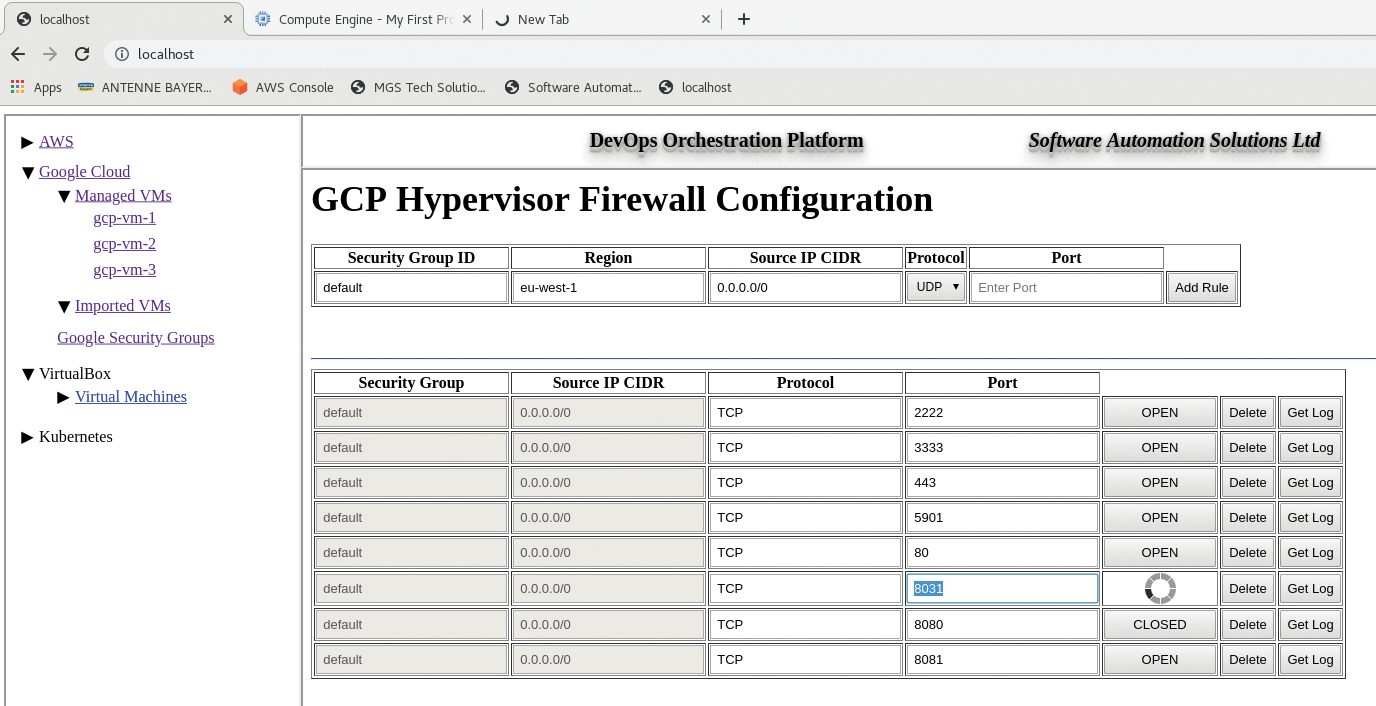

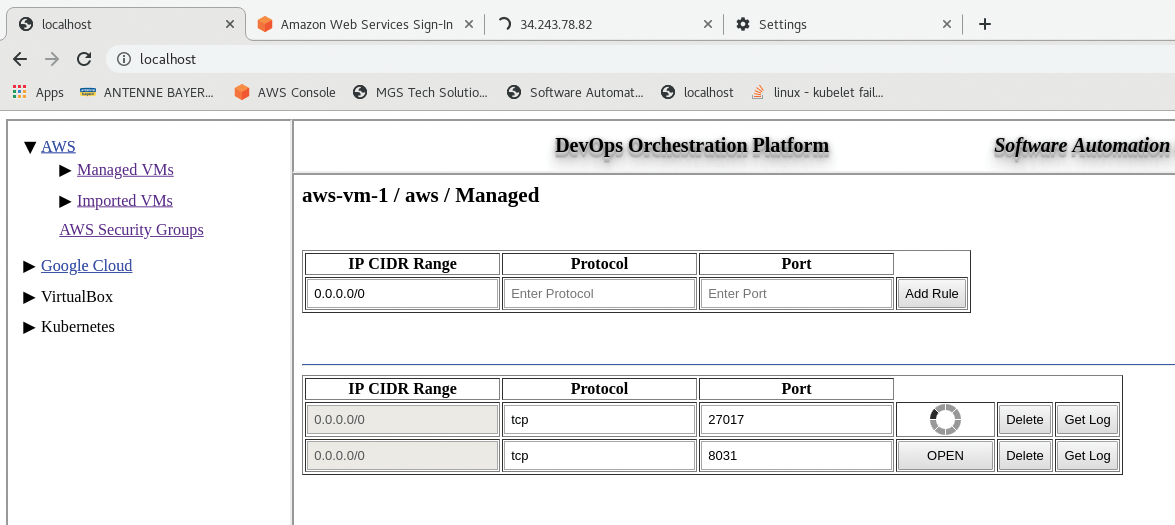

Configuring Hypervisor Security

Use the left navigation bar to open the cloud hypervisor firewall configuration window (known as Security Group in the case of AWS and Firewall in Google Cloud). New rows of Protocol-Port combinations are added in the uppermost table and then appear in the lower table, where the corresponding ports can be opened or closed by clicking the buttons (Figures 5 and 6). Note that in each case, only the default firewall security group for the (default) VPC is currently supported.

Configuring iptables

DevOps Orchestration Platform provides additional security options at the operating system platform level in the form of iptables rules. To configure them, click a VM instance button in the VirtualBox, AWS, or Google Cloud list (Figure 7), or select a specific VM instance from the expandable navigation column on the left side. Then select O/S Platform Firewall in the VM instance detail window.

The Protocol-Port combinations are added as in the security group rules described earlier. As before, a button for a specific port on a specific row can be clicked to open or close a port.
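To make the open/close action concrete, here is a minimal sketch of how a Protocol-Port row could be turned into an iptables command line. The article does not show the rules the platform actually writes, so the `iptables_command` helper and the choice of the INPUT chain with an ACCEPT target are assumptions. Deleting the ACCEPT rule only closes the port if the chain otherwise drops traffic.

```python
def iptables_command(protocol, port, open_port=True):
    """Return the argument list that adds or deletes an ACCEPT rule."""
    if protocol not in ("tcp", "udp"):
        raise ValueError(f"unsupported protocol: {protocol}")
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    # -A appends the rule to the INPUT chain; -D deletes the matching rule.
    action = "-A" if open_port else "-D"
    return ["iptables", action, "INPUT", "-p", protocol,
            "--dport", str(port), "-j", "ACCEPT"]


if __name__ == "__main__":
    # Print the commands rather than running them; applying them needs root.
    print(" ".join(iptables_command("tcp", 9090, open_port=True)))
    print(" ".join(iptables_command("tcp", 9090, open_port=False)))
```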

SSH Connection to Instances

To SSH to a specific VM instance, use either the SSH button in a row of the Managed VMs list or the SSH button in the VM detail window. Either action launches an SSH window into the corresponding VM instance (Figure 8). As described earlier, in the case of AWS or GCP, ensure you have clicked the TEST button for each row of a newly deployed instance so the required data is pulled from the Terraform output and the SSH keys are configured. For convenience, the SSH window can also be detached into a separate window by clicking the Detach button.

Deploying Sample Applications

Select a VM by clicking a VM instance button under VirtualBox, AWS (Managed VMs), or Google Cloud (Managed VMs) in the main window; alternatively, you can select a VM from the expandable navigation column on the left side.

General software applications and Linux utilities can be deployed by selecting from the drop-down list under the Generic Software Deployment section. Clicking Generate Inventory generates an Ansible inventory that is then stored in the corresponding deployment path on the back-end server filesystem as follows:

devops-orchestration-platform/devops_repo/ansible/<name_of_app>/inventory_<name_of_cloud_type>-<name_of_instance>

Selecting Deploy ensures an inventory is generated and then Ansible is run to deploy the application software onto the instance. Server application software can be deployed onto an instance under the Server Deployment section.
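The inventory naming scheme can be sketched as follows. The path layout comes from the pattern given above; the `inventory_text` helper, the `target` group name, and the sample application name are hypothetical, and `ansible_host` and `ansible_user` are standard Ansible inventory variables. The platform generates its inventories in Golang, so this Python is only an illustration.

```python
from pathlib import PurePosixPath

# Root taken from the path pattern quoted in the article.
ANSIBLE_ROOT = PurePosixPath("devops-orchestration-platform/devops_repo/ansible")


def inventory_path(app, cloud_type, instance):
    """Build <root>/<app>/inventory_<cloud_type>-<instance>."""
    return ANSIBLE_ROOT / app / f"inventory_{cloud_type}-{instance}"


def inventory_text(instance, ip_address, ssh_user):
    """Return a one-host INI inventory; the group name is an assumption."""
    return (
        "[target]\n"
        f"{instance} ansible_host={ip_address} ansible_user={ssh_user}\n"
    )


if __name__ == "__main__":
    print(inventory_path("prometheus", "aws", "aws-vm-1"))
    print(inventory_text("aws-vm-1", "203.0.113.10", "ubuntu"))
```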

For some servers, such as DNS BIND9, the server needs to know what clients will access it. As shown in the Server Deployment section in Figure 9, a list of client VM instances can be selected for a particular server deployment. In the specific example shown, permission is given to three client nodes to access the DNS server.

The purpose of the Client Deployment section is to deploy client software intended to access a service. For a specific client technology, it is critically important to select the server instance to which the client will send its requests. For example, if a DNS client is being deployed onto aws-vm-2, it will send its requests to the DNS server running on aws-vm-1, where aws-vm-1 is selected in the Select Server for Client drop-down.

DevOps Orchestration Platform includes a number of sample applications (see the "Sample Applications" box), along with their Ansible templates and playbooks. The Ansible inventories for these applications are dynamically generated at the infrastructure bootstrap stages described earlier (i.e., the framework facilitates a connection between Terraform and Ansible that is entirely invisible to the end user, who need only use the web application GUI).

The ELK sample playbook can deploy Elastic Stack (Filebeat, Logstash, Elasticsearch, and Kibana) onto arbitrary instances and configure the components to communicate with one another. After that, you can point your browser at Kibana to launch the dashboard (after opening the required ports in the Security Group configuration window for the corresponding default VPC). See the port window pop-up described later for assistance with this.

The Prometheus and Grafana sample playbook similarly installs Prometheus, Grafana, and the Node Exporter onto instances determined by the user from the GUI. If you then point a browser to the Grafana port on the Grafana VM instance, you will see the Grafana dashboards that were automatically installed and configured by the platform.

Ensuring the correct ports are opened on the default VPC's instances from the Security Groups or Firewall configuration feature – and likewise for iptables rules if iptables was deployed – is critically important.

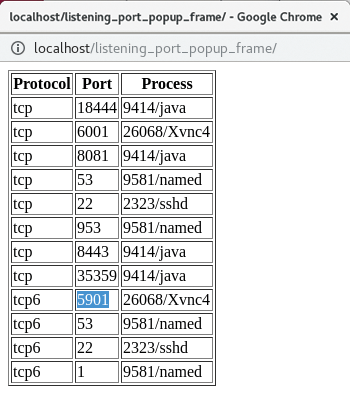

Local Listening Ports

The listening port pop-up window (Figure 10) is useful not only for analyzing the potential attack surface of each instance from a security perspective, but also for finding out which port to point your browser to after deploying applications such as Prometheus, Grafana, Kibana, VNC, and so on. The port window can be displayed by selecting Get Listening Ports from the VM instance detail window (Figure 9).
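The article does not say how the platform gathers this list. As one possible approach, the sketch below extracts port numbers from text in the format printed by `ss -tln` on a Linux instance; the `listening_ports` helper and the sample output are illustrative assumptions.

```python
def listening_ports(ss_output):
    """Return the sorted, de-duplicated TCP ports found in `ss -tln` output."""
    ports = set()
    for line in ss_output.splitlines():
        fields = line.split()
        # Data rows begin with the socket state; the header row does not.
        if len(fields) < 4 or fields[0] != "LISTEN":
            continue
        # The local address is "address:port"; rsplit copes with IPv6
        # forms such as [::]:22.
        port = fields[3].rsplit(":", 1)[-1]
        if port.isdigit():
            ports.add(int(port))
    return sorted(ports)


# Made-up sample in the column layout of `ss -tln`.
SAMPLE = """\
State   Recv-Q  Send-Q  Local Address:Port  Peer Address:Port
LISTEN  0       128     0.0.0.0:22          0.0.0.0:*
LISTEN  0       4096    127.0.0.1:9090      0.0.0.0:*
LISTEN  0       128     [::]:22             [::]:*
"""

if __name__ == "__main__":
    print(listening_ports(SAMPLE))
```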

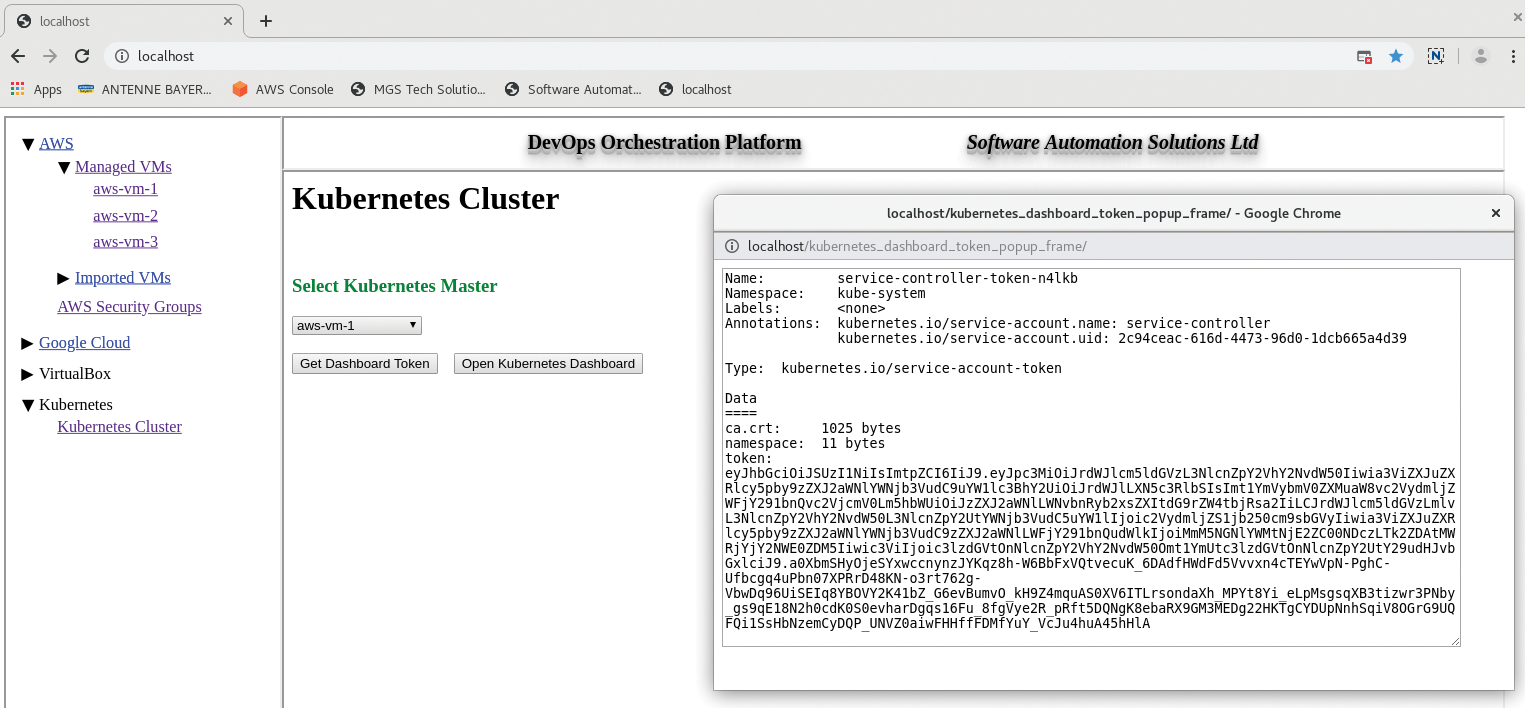

Deploying Kubernetes on Bare Metal VMs

A powerful feature of DevOps Orchestration Platform is that it is able to deploy Kubernetes on arbitrary bare metal VM clusters. You do not need to make a single change to a single configuration file or even run a single Linux command. Everything from the Kubernetes Weave Net network overlay to the master and agent installations on instances is dealt with by automated Terraform templating and dynamic Ansible inventory configuration.

However, Kubernetes components must be installed in the correct order, keeping in mind that, in the Kubernetes case, "server" refers to "master," and "client" refers to "agent" or "node." In the terminology of DevOps Orchestration Platform, then, joining a Kubernetes node to a Kubernetes master involves pointing a Kubernetes client to the Kubernetes server at the agent deployment stage.

For Kubernetes, the correct installation order is:

- Install Docker

- Prepare Kubernetes

- Install Kubernetes master

- Install Kubernetes agent
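The ordering constraint in the list above can be expressed as a small check. This sketch is not part of the platform and simplifies matters by treating the four steps as one sequence, whereas in practice the master and agent steps run on different instances.

```python
# Step names copied from the installation order listed above.
INSTALL_ORDER = [
    "Install Docker",
    "Prepare Kubernetes",
    "Install Kubernetes master",
    "Install Kubernetes agent",
]


def can_run(step, completed):
    """A step may run only after every earlier step has completed."""
    index = INSTALL_ORDER.index(step)  # raises ValueError for unknown steps
    return all(earlier in completed for earlier in INSTALL_ORDER[:index])


def next_step(completed):
    """Return the first step not yet completed, or None when all are done."""
    for step in INSTALL_ORDER:
        if step not in completed:
            return step
    return None


if __name__ == "__main__":
    done = {"Install Docker"}
    print(next_step(done))
    print(can_run("Install Kubernetes agent", done))
```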

To use the private Docker registry, Nexus 3 must already have been deployed by the tool, with the Configure Docker Client playbook additionally run on every node that will have a Docker daemon.

After Kubernetes is installed (check the Ansible log to ensure the run completed without errors), navigate to Kubernetes on the left navigation bar, select the instance hosting your Kubernetes master node, and click Get Dashboard Token before opening the Kubernetes Dashboard (Figure 11).

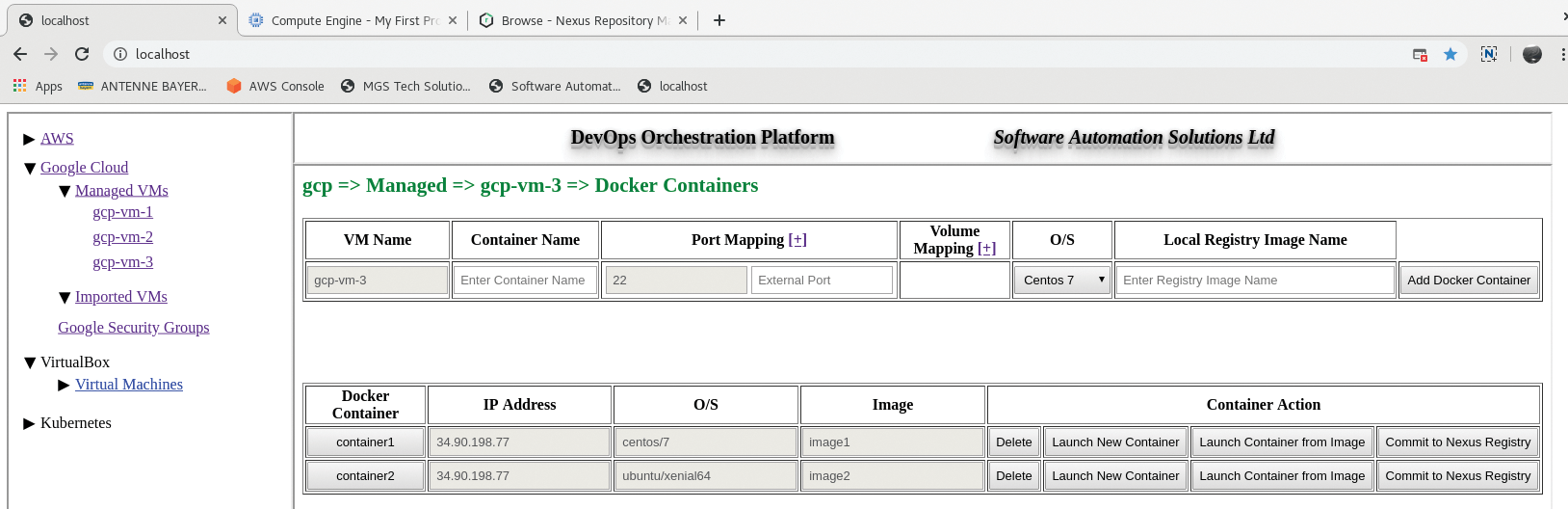

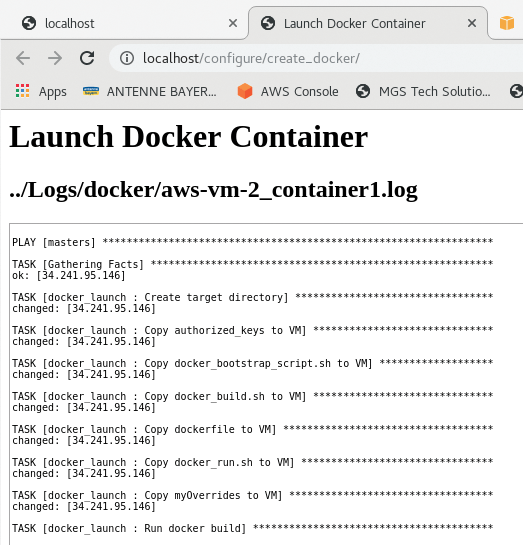

Bootstrapping Docker Containers and Images

The platform can be used to bootstrap and deploy Docker containers, although testing of this feature was limited by time constraints. To use this feature, a Nexus 3 server must previously have been deployed into the corresponding subnet by the tool. Nexus 3 contains its own private Docker registry, which is also configured automatically by DevOps Orchestration Platform, and TLS certificates are generated automatically. After deploying Nexus 3, the Configure Docker Client playbook must be run for each node running the Docker client daemon. Selecting Docker Containers in the VM instance detail window brings up the Docker configuration window shown in Figure 12.

Similar to the mechanism used for VMs, Docker containers are added from the top window. Port and volume mappings to the host can be added here – as many as desired. Remember that "host" in this case refers to the deployed cloud instance or VirtualBox VM, not the host running DevOps Orchestration Platform. After adding the Docker container details, various options are available when launching the container.

To commit the Docker container to a Docker registry (which invokes docker commit behind the scenes), Nexus 3 must previously have been deployed into the same subnet as the instance on which the Docker containers are being launched (Figure 13). The instances comprising the Docker client and Docker registry must be resolvable within the subnet, by either the sample DNS BIND9 playbooks included with the tool or an equivalent DNS service such as Route 53.

After a successful commit, you will be able to see the Docker image stored in the Nexus 3 registry. These images will have names matching the ones you entered in the Local Registry Image Name box in Figure 12.

The SSH username and password used inside the Docker containers currently must be the same as the SSH credentials for the VM instance on which that Docker image was originally bootstrapped. Therefore, if the tool is used to pull the Docker image back out of the registry to run it again, it must be pulled onto the same original VM instance.

Code Structure Walk-Through

DevOps Orchestration Platform has been designed with extensibility, scalability, and flexibility in mind. With basic knowledge of JavaScript, Golang, HTML5, Ansible, Terraform, and Bash, you can extend the framework to virtually any infrastructure-as-code or Ansible use case. This section is a short walk-through of the code structure, broken down directory by directory, so you can understand how the project is modularized in the context of the architecture diagram given earlier.

High-Level Project Structure

The DevOps Orchestration Platform structure is shown in Listing 1.

- ansible contains everything related to Ansible playbooks and is used for temporary storage of the VM instance SSH keys.

- bootstrap in the name means the directory is dynamically generated by the tool and used for temporary storage of keys and other VM instance details.

- firewall in the name means the directory is dynamically generated by the tool and used for controlling the security groups.

- docker is a temporary storage area for scripts and commands related to bootstrapping Docker containers. This subdirectory additionally contains Docker logs whose names have the name of each Docker container appended.

- Logs contains the generated VM and Docker logs with the naming convention <VM instance Name>-<O/S Name>. The Logs subdirectory also contains Docker logs whose names have the name of each Docker container appended.

- software contains GoTTY, an open source utility used by DevOps Orchestration Platform for browser-based SSH access.

- webserver contains all the web server Golang code, JavaScript, HTML, and templates and is described in the next section.

Listing 1: Directory Structure

    +-- DevOps-Orchestration-Platform
        +-- ansible
        +-- aws_bootstrap
        +-- aws_firewall
        +-- docker
        +-- gcp_bootstrap
        +-- gcp_firewall
        +-- Logs
        +-- OTHER_LICENSES
        +-- software
        +-- vm_bootstrap
        +-- webserver
            +-- ansible_handler
            +-- aws_handler
            +-- build_and_run.sh
            +-- connectivity_check
            +-- css
            +-- databases
            +-- gcp_handler
            +-- general_utility_web_handler
            +-- index.html
            +-- js
            +-- kubernetes_handler
            +-- kv_store
            +-- linux_command_line
            +-- logging
            +-- nav_frame.html
            +-- operations_frame.html
            +-- security
            +-- template_populator
            +-- templates
                +-- ansible
                +-- cloud_vm_access_and_import
                +-- docker
                +-- html
                +-- security
                +-- terraform
                +-- vagrant
            +-- title_bar.html
            +-- top_level
            +-- top_level.go
            +-- virtualbox_handler
            +-- vm_launcher

Web Server Components

Much of the webserver directory tree is self-explanatory, so only the more complex components are described.

- build_and_run.sh is a helper script that builds the Golang code and launches the Golang web server on port 6543.

- js stores most of the JavaScript. DevOps Orchestration Platform uses HTML5 iFrames, along with a lot of raw JavaScript to process frame elements. The platform makes heavy use of jQuery Ajax calls containing large JSON segments for back-end to front-end synchronization. It also uses the native Golang web server templating system for a significant portion of the back-end to front-end synchronization.

- databases contains a snapshot of the entire state of every component of the system at any one time. The database system currently employed is a simplified NoSQL scheme containing JSON strings stored on the back-end filesystem. At run time, the JSON strings are imported by the Golang code and converted into hash maps (equivalent to dict constructors in Python). Front-end actions triggered by the user result in modified hash maps being re-written to the filesystem as new JSON strings. This scheme could in future be migrated to an alternative database, such as Apache Cassandra, MongoDB, or another technology (see the later section "Extending the Framework").

- handler in the name means the directory handles web server routes for user actions intended to trigger deployment of Ansible playbooks, Terraform-based infrastructure, Vagrant, and other events triggered from the front-end web application.

- kv_store contains Golang utility functions facilitating an abstraction of the JSON-based databases and their import and export.

- connectivity_check is used for SSH connectivity utilities.

- templates, not to be confused with Ansible templates, contains not only HTML templates, but files with the .j3 extension. These are abstract template files used as templates for Terraform infrastructure bootstrap scripts, among other tasks. The .j3 files are processed within Golang template modules (e.g., to populate Terraform templates, generic Bash scripts, and temporary scripts that open and close ports on the various firewall platforms).

- templates/cloud_vm_access_and_import has the templates used to populate Ansible EC2.py and GCE.py scripts for importing existing cloud resources. The Ansible inventories are generated dynamically from within Golang code and are not themselves templated. The Ansible inventory generator code is stored at the path webserver/ansible_handler/template_generator.go. The Ansible .j2 templates themselves, stored in their respective playbook directory tree paths as usual, use variables inside the dynamically generated Ansible inventories.
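The JSON-on-filesystem scheme described for the databases directory amounts to a load, modify, and rewrite cycle. The sketch below shows that cycle in Python for brevity; the platform does this in Golang, and the file name, record layout, and the write-then-rename step are assumptions of this example rather than details from the project.

```python
import json
import os
import tempfile


def load_state(path):
    """Read a JSON file into a dict (the 'hash map' of the description)."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def save_state(path, state):
    """Rewrite the JSON file from the modified dict.

    Writing to a temporary file and renaming it over the original is a
    choice of this sketch, so a crash cannot leave a half-written file.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        json.dump(state, handle, indent=2, sort_keys=True)
    os.replace(tmp_path, path)


if __name__ == "__main__":
    # Hypothetical file name and record layout.
    path = os.path.join(tempfile.mkdtemp(), "aws_vms.json")
    save_state(path, {"aws-vm-1": {"public_ip": "203.0.113.10"}})
    state = load_state(path)
    state["aws-vm-1"]["open_ports"] = [22]
    save_state(path, state)
    print(load_state(path))
```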

Extending the Framework

Extending the framework to custom VPCs, security groups, NACLs, and routing tables is straightforward, because the central mechanism for populating Terraform templates is already in place. New JSON databases could also be added at the back end to support extensions to the framework.

Currently, DevOps Orchestration Platform is a console application that runs a web server on an individual user's Debian/Ubuntu/Mint desktop PC, partly for security and partly because the product requires the backing of a bigger open source community with the resources necessary to turn it into a fully networked multiuser or software-as-a-service (SaaS) platform. In any event, the current console-based web server application provides a flexible proof of concept and a foundation on which to extend the framework.

Some ideas on how to evolve the framework into a full SaaS platform with role-based access control (RBAC) to a central web server include:

- Add login session and session cookie capability; Golang open source libraries already exist that can facilitate this function.

- Extend the back-end filesystem to include user-ID-specific paths and configuration files.

- Make the Terraform state files user specific, with each user-specific Terraform script and Terraform state file stored at a user-specific filesystem path.

- Use a central database (e.g., Apache Cassandra or Postgres), HashiCorp Vault [7], or a combination of data storage to contain user-specific passwords and keys.

- Control AWS and Google Cloud access permissions by providing users with permission-specific AWS/GCP API keys.

- Store TLS certificates centrally with HashiCorp Vault within a deployed subnet instead of with the existing scheme, which generates private TLS certificates (e.g., for Docker, Nexus 3).
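As one way to picture the user-specific paths suggested in the list above, the sketch below derives a per-user location for a Terraform script and state file. The directory layout and the `user_terraform_paths` helper are hypothetical; `terraform.tfstate` is Terraform's default local state file name, and `main.tf` is only a common convention. User IDs are restricted to a safe character set so that an ID cannot escape the base directory.

```python
import re
from pathlib import PurePosixPath

# Letters, digits, underscore, and hyphen only; rejects "../" and similar.
_SAFE_USER_ID = re.compile(r"[A-Za-z0-9_-]+")


def user_terraform_paths(base_dir, user_id):
    """Return per-user script and state paths under a hypothetical layout."""
    if not _SAFE_USER_ID.fullmatch(user_id):
        raise ValueError(f"invalid user ID: {user_id!r}")
    user_dir = PurePosixPath(base_dir) / "users" / user_id / "terraform"
    return {
        "script": user_dir / "main.tf",
        "state": user_dir / "terraform.tfstate",
    }


if __name__ == "__main__":
    for kind, path in user_terraform_paths("/srv/dop", "alice").items():
        print(kind, path)
```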