Monitor and optimize Fibre Channel SAN performance

Tune Up

In the past, spinning hard disks were often the bottleneck for fast data processing, but in the age of hybrid and all-flash storage systems, bottlenecks are shifting to other locations in the storage area network (SAN). I look at where it makes sense to influence the data stream and how potential bottlenecks can be detected at an early stage. To this end, I identify the critical performance parameters within a Fibre Channel SAN and show approaches to optimization.

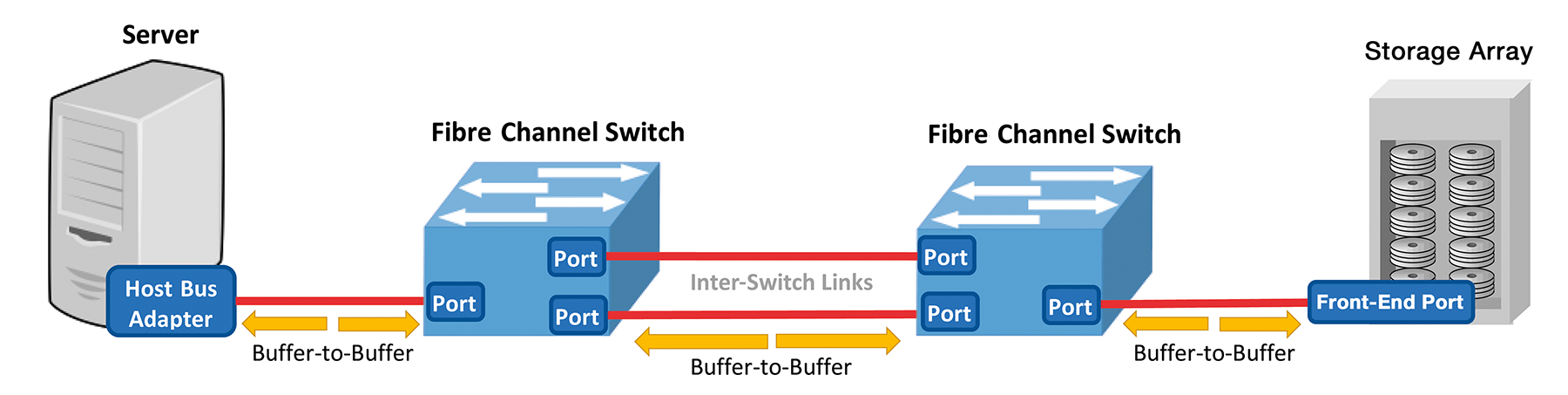

The Fibre Channel (FC) protocol is connectionless and transports data packets in buffer-to-buffer (B2B) mode. Two endpoints, such as a host bus adapter (HBA) and a switch port, negotiate how many FC frames the receiving end can hold in its input buffer. The sender is granted this number as buffer credits, which allow it to transmit that many frames to the receiver without having to wait for each individual frame to be acknowledged (Figure 1).

For each frame sent, the sender reduces its buffer credit count by one, and for each receiver ready (R_RDY) message it gets back from the other end, it increases the count by one. The receiving station sends an R_RDY message to the sender as soon as it has processed a frame and freed the buffer, so that new data can be sent. If all buffer credits are used up and no R_RDY message arrives, the sender transmits no further frames until it receives one. End-to-end flow control and error recovery are left to the higher level SCSI protocol.
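To make the credit mechanism concrete, here is a minimal Python sketch built on simplifying assumptions of my own: one tick equals the time to send one frame, and the receiver returns each R_RDY a fixed number of ticks after the frame arrives. The function name and the tick model are illustrative and not taken from any FC implementation.

```python
from collections import deque


def simulate_b2b(credits: int, frames: int, rrdy_delay: int) -> tuple[int, int]:
    """Simplified model of buffer-to-buffer credit flow on a single FC link.

    One tick = time to send one frame. The receiver returns an R_RDY
    `rrdy_delay` ticks after each frame. Returns (total_ticks,
    zero_credit_ticks): how long the transfer took and in how many ticks
    the sender had frames waiting but no credit left.
    """
    available = credits          # credits granted by the receiver at login
    rrdy_arrivals = deque()      # ticks at which outstanding R_RDYs arrive
    sent = tick = zero_credit_ticks = 0
    while sent < frames:
        # Each R_RDY that has arrived by now returns one credit.
        while rrdy_arrivals and rrdy_arrivals[0] <= tick:
            rrdy_arrivals.popleft()
            available += 1
        if available > 0:
            available -= 1       # sending a frame consumes one credit
            sent += 1
            rrdy_arrivals.append(tick + rrdy_delay)
        else:
            zero_credit_ticks += 1  # sender must wait for an R_RDY
        tick += 1
    return tick, zero_credit_ticks


# A healthy receiver versus a slow-drain receiver with the same 8 credits.
print(simulate_b2b(credits=8, frames=100, rrdy_delay=1))   # (100, 0)
print(simulate_b2b(credits=8, frames=100, rrdy_delay=20))
```

With the same eight credits, the slow receiver forces the sender into zero-credit waits and stretches the transfer; this is the situation that zero buffer credit counters on switch ports record.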

Suppose a server writes data over a Fibre Channel SAN to a remote storage system. Along the way, the FC frames are passed on in B2B mode at several points: wherever an HBA or a storage port communicates with a switch port, and wherever two switches exchange data over one or more Inter-Switch Links (ISLs) connected in parallel. This FC transport method, service class 3 (connectionless, without acknowledgment), is optimized for mass storage data and lets many FC devices communicate in parallel with high bandwidth. However, this type of communication also has weaknesses that quickly become apparent in certain configurations.

Backlog from Delayed R_RDY Messages

One example of this type of backlog is an HBA or storage port that, because of a technical defect or driver problem, does not return R_RDY messages to the sender at all or returns them only after a delay. As a result, the transmission of new frames is delayed. Incoming frames pile up in the buffers and consume the available buffer credits. The backlog then spreads farther back along the route and gradually uses up the buffer credits of the other FC ports.

Especially with shared connections, all SAN devices that communicate over the same ISL are affected, because no buffer credits are available for them during this period. A single slow-drain device can therefore cause a massive drop in the performance of many SAN devices (fabric congestion). Although most FC switch manufacturers have now developed countermeasures against fabric congestion, these only take effect once the problem has already occurred and are only available for the newer generations of SAN components.

To detect fabric congestion at an early stage, you need, at a minimum, to monitor the ISL ports in the SAN for such situations. One indicator of this kind of bottleneck is a rise in the zero buffer credit counters at the ISL ports. These counters indicate how often a port had to wait 2.5µs for an R_RDY message to arrive before it could send further frames. If a counter grows by millions within a few minutes, caution is advised. In such critical cases, the "link reset" and "C3 timeout" counters at the affected ISL ports usually grow as well.
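A monitoring script could evaluate these counters roughly as in the following sketch. It assumes you already collect two readings of the cumulative counters per ISL port; the field names are hypothetical, and the limit of 1,000,000 per 5 minutes comes from Table 1. Escalating to "critical" when link resets or C3 timeouts grow at the same time follows the rule of thumb above.

```python
from dataclasses import dataclass


@dataclass
class IslSample:
    """Growth of cumulative port counters between two readings.

    Field names are hypothetical; map them to what your switch exposes.
    """
    port: str
    interval_s: float        # seconds between the two readings
    zero_credit_delta: int   # growth of the zero buffer credit counter
    link_reset_delta: int    # growth of the link reset counter
    c3_timeout_delta: int    # growth of the class 3 timeout counter


# Limit from Table 1: fewer than 1,000,000 increments within 5 minutes.
ZERO_CREDIT_LIMIT_PER_5MIN = 1_000_000


def assess_isl(sample: IslSample) -> str:
    """Classify an ISL port as 'ok', 'warning', or 'critical'."""
    # Normalize the counter growth to a 5-minute window.
    per_5min = sample.zero_credit_delta * 300 / sample.interval_s
    if per_5min < ZERO_CREDIT_LIMIT_PER_5MIN:
        return "ok"
    # Congestion plus growing link resets or C3 timeouts: critical case.
    if sample.link_reset_delta > 0 or sample.c3_timeout_delta > 0:
        return "critical"
    return "warning"


print(assess_isl(IslSample("isl-1", interval_s=60, zero_credit_delta=400_000,
                           link_reset_delta=0, c3_timeout_delta=0)))  # warning
```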

Data Rate Mismatches

A similar effect can occur when large volumes of data are transferred between endpoints in the SAN that run at different speeds. For example, if the HBA operates at 8Gbps while the front-end port on the storage system operates at 16Gbps, the storage port can move data about twice as fast as the HBA. Conversely, when reading at full transfer rate, the storage system can send the HBA twice as much data as the HBA can take in during the same time.

Frames that cannot be delivered fast enough have to be buffered along the way, which eats away at the buffer credits there and, with a continuously high data transfer volume, can cause a backlog and fabric congestion. The situation becomes even more drastic with high data volumes between 4Gbps and 32Gbps ports. Such effects typically appear at high data rates on the ports of the nodes with the lowest bandwidth in the data stream.
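A rough back-of-the-envelope estimate shows how quickly a speed mismatch eats up credits. The sketch assumes approximate nominal data rates per FC generation, full-size frames of 2,148 bytes, and a hypothetical 40 buffer credits. It treats the surplus as a steady stream and ignores switch-internal buffering, so read the results as orders of magnitude only.

```python
# Approximate nominal per-direction data rates of FC generations in MBps.
NOMINAL_MBPS = {4: 400, 8: 800, 16: 1600, 32: 3200}
FRAME_BYTES = 2148  # maximum FC frame size; assumes full-size frames


def time_to_exhaust_credits(sender_gbps: int, receiver_gbps: int, credits: int) -> float:
    """Seconds until `credits` full frames' worth of buffer space is filled
    when a faster port streams to a slower one at full rate."""
    surplus_bytes_per_s = (NOMINAL_MBPS[sender_gbps] - NOMINAL_MBPS[receiver_gbps]) * 1_000_000
    if surplus_bytes_per_s <= 0:
        return float("inf")  # the receiver keeps up; no backlog builds
    return credits * FRAME_BYTES / surplus_bytes_per_s


for sender, receiver in [(16, 8), (32, 4)]:
    ms = time_to_exhaust_credits(sender, receiver, credits=40) * 1000
    print(f"{sender}G -> {receiver}G: 40 credits gone after {ms:.3f} ms")
```

Even under these generous assumptions, the buffers fill within a fraction of a millisecond, which is why sustained high data rates on the slowest port in the path are the critical case.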

Additionally, the fan-in ratio of servers to a storage port can be too high (i.e., more data arrives from the servers than the storage port can process). My recommendation is therefore to run the HBA and storage port at a uniform speed and, depending on the data transfer rates, to keep the fan-in ratio between servers and storage port moderate wherever possible.
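The fan-in ratio itself is simple arithmetic: the summed HBA bandwidth of all servers zoned to a storage port divided by that port's bandwidth. In the sketch below, the port names, the zoning, and the `max_ratio` of 4.0 are hypothetical; the text deliberately says "moderate" rather than giving a number, so choose a limit based on your actual data rates.

```python
def fan_in_ratio(hba_speeds_gbps: list[int], storage_port_gbps: int) -> float:
    """Aggregate HBA bandwidth of all servers zoned to a storage port,
    divided by the bandwidth of that storage port."""
    return sum(hba_speeds_gbps) / storage_port_gbps


def ports_above_ratio(ports: dict[str, tuple[list[int], int]], max_ratio: float) -> list[str]:
    """Names of storage ports whose fan-in ratio exceeds `max_ratio`."""
    return sorted(name for name, (hbas, port_speed) in ports.items()
                  if fan_in_ratio(hbas, port_speed) > max_ratio)


# Hypothetical zoning: 12 servers with 8Gbps HBAs on one 16Gbps port (6:1),
# four 16Gbps HBAs on a 32Gbps port (2:1).
ports = {"port-A": ([8] * 12, 16), "port-B": ([16] * 4, 32)}
print(ports_above_ratio(ports, max_ratio=4.0))  # ['port-A']
```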

To reduce the data traffic over the ISLs fundamentally, configure servers that use cross-location mirroring (e.g., with the Logical Volume Manager) so that the hosts read only from the local storage system and access both storage systems only when writing. With a high read rate, this approach drastically reduces ISL data traffic and thus the risk of bottlenecks.
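How much the read-local setting saves can be estimated with a simple model. The assumptions are mine: every write crosses the ISL once (to the remote mirror leg), and without a read preference the volume manager spreads reads evenly across both mirror legs, so half of them cross the ISL.

```python
def isl_traffic_mbps(host_mbps: float, read_fraction: float, read_local: bool) -> float:
    """ISL load caused by one host with host-based mirroring across two sites.

    Assumptions: each write is sent to both storage systems, so one copy
    crosses the ISL; without a read preference, reads are split evenly
    between the two mirror legs, so half of them cross the ISL.
    """
    writes = host_mbps * (1 - read_fraction)
    reads = host_mbps * read_fraction
    remote_reads = 0.0 if read_local else reads / 2
    return writes + remote_reads


print(isl_traffic_mbps(1000, 0.75, read_local=False))  # 625.0
print(isl_traffic_mbps(1000, 0.75, read_local=True))   # 250.0
```

For a host moving 1,000MBps with 75 percent reads, the model predicts that ISL traffic drops from 625 to 250MBps once reads stay local.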

Overcrowded Queue Slows SAN

The SCSI protocol also has ways to accelerate data flow. The command queuing and I/O queuing methods supported by SCSI-3 can increase performance significantly. For example, a server connected to the SAN can send several SCSI commands in parallel to a logical unit number (LUN) on a storage system. When the commands arrive, they are placed in a queue until it is their turn to be processed. This arrangement offers a significant performance gain, especially for random I/O operations.

The number of I/O operations that can be held in this queue is known as the queue depth. Important values include the maximum queue depth per LUN and per front-end port of a storage array. These values are usually fixed in the storage system and cannot be changed. On the server side, on the other hand, you can specify the maximum queue depth in the HBA or its driver. Make sure that the sum of the queue depths of all LUNs on a front-end port does not exceed the port's maximum permitted queue depth. If, for example, 100 LUNs are mapped to an array port and their servers address them with a queue depth of 16, the maximum queue depth at the array port must be at least 1,600. If, on the other hand, the port's maximum is only 1,024, the connected servers can only work with a queue depth of 10 for these LUNs. It makes sense to ask the vendor about the limits and optimum settings for the queue depth.
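The queue depth arithmetic from the example fits into two small helper functions. This sketch assumes each LUN is addressed over the port by a single initiator with one queue; if cluster nodes or several paths of the same server share the port, each initiator's queue depth would have to be counted separately. The function names are illustrative.

```python
def max_uniform_queue_depth(port_max_qd: int, luns_on_port: int) -> int:
    """Largest per-LUN queue depth that, applied to every LUN on the port,
    keeps the sum within the port's maximum queue depth."""
    return port_max_qd // luns_on_port


def port_oversubscribed(port_max_qd: int, lun_queue_depths: list[int]) -> bool:
    """True if the summed LUN queue depths exceed what the port can queue."""
    return sum(lun_queue_depths) > port_max_qd


print(port_oversubscribed(1024, [16] * 100))   # True: 1,600 > 1,024
print(max_uniform_queue_depth(1024, 100))      # 10
```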

If a front-end port is overloaded because of incorrect settings and too many parallel I/O operations, and all queues are used up, the storage array sends a Queue_Full or Device_Busy message back to the connected servers, which triggers a complex recovery mechanism that usually affects all servers connected to this front-end port. On the other hand, a balanced queue depth configuration can often tweak that extra share of server and storage performance out of the systems. If the mapped servers or the number of visible LUNs change significantly, you need to update the calculations to prevent gradual overloading.

Watch Out for Multipathing

Pay attention to how your servers use Fibre Channel multipathing: standard operating system settings often lead to an imbalance in data traffic, with only one of several connections actively used. This imbalance then extends into the SAN and ultimately to the storage array, and potential performance bottlenecks occur far more frequently in such configurations. Modern storage systems use active-active mode across all available controllers and ports, and you will want to leverage these capabilities for the benefit of your environment.
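The difference between an active-passive and an active-active path policy shows up clearly when you count I/Os per path. The following sketch is a simplified model: the policy names and path labels are illustrative and do not correspond to the configuration keywords of any particular multipathing driver.

```python
from collections import Counter
from itertools import cycle


def distribute_ios(paths: list[str], n_ios: int, policy: str) -> Counter:
    """Count the I/Os each path carries under a simplified path policy."""
    if policy == "failover":
        # Active-passive: all I/O uses the first path until it fails.
        return Counter({paths[0]: n_ios})
    if policy == "round_robin":
        # Active-active: rotate through all available paths.
        next_path = cycle(paths)
        return Counter(next(next_path) for _ in range(n_ios))
    raise ValueError(f"unknown policy: {policy}")


paths = ["hba0-ctlA", "hba0-ctlB", "hba1-ctlA", "hba1-ctlB"]
print(distribute_ios(paths, 1000, "failover"))
print(distribute_ios(paths, 1000, "round_robin"))
```

Under the failover policy, one path carries all 1,000 I/Os; under round robin, each of the four paths carries 250.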

Sometimes vendor-specific multipathing drivers are the better choice. These drivers are typically better tailored to the capabilities of the storage array, offer more specific options, and are often better suited for monitoring than standard operating system drivers. On the other hand, if you keep your servers regularly patched, such third-party software adds overhead for version maintenance and compatibility checks.

Optimizing Data Streams with QoS

Service providers who simultaneously support many different customers with many performance-hungry applications in their storage environments need to ensure that mission-critical applications are assigned the required storage performance in a stable manner at all times. An advantage for one application can be a disadvantage for another. A consistent quality of service (QoS) strategy allows for better planning of data streams and means that critical servers and applications can be prioritized from a performance perspective.

Vendors of storage systems, SAN components, and HBAs take different technical approaches to this problem, but these approaches are not coordinated with each other. No single point allows the data flow to be controlled and regulated centrally across all components. Moreover, most solutions do not make a clear distinction between normal operation and failure mode. For example, if performance problems occur within the SAN, the storage system stoically retains its prioritized settings because it knows nothing about the problem.

Although there are initial approaches in which HBAs and SAN components communicate with each other to act across component boundaries, they only work with newer models and only cover a few performance metrics. Special HBAs and their drivers support prioritization at the LUN level on the server. The drawback is that you have to set up each server individually, which can be a mammoth task with hundreds of physical servers, not to mention the effort involved in large-scale server virtualization.

Various options also exist for prioritizing I/Os in SAN components. Basically, the data stream can be directed through the SAN with virtual fabrics or virtual SANs (e.g., to separate test and production systems or individual customers logically from each other). However, this method is not well suited to a more granular distribution of important applications, because the administrative overhead and technical limitations speak against it. For that purpose, you can route server traffic through specially prioritized zones in the SAN. The frames of high-priority zones then have the right of way and are preferred in the event of a bottleneck.
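Conceptually, the right of way for high-priority zones resembles a priority queue at the congested egress port. The sketch below models that with strict priority and hypothetical priority labels. Actual switch implementations are vendor-specific and may reserve bandwidth shares for lower priorities instead of always serving the highest priority first.

```python
import heapq
from itertools import count

# Hypothetical priority labels; a lower number is served first.
PRIORITY = {"high": 0, "medium": 1, "low": 2}


class PriorityEgress:
    """Strict-priority model of a congested egress port."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._seq = count()  # tie-breaker: FIFO order within one priority

    def enqueue(self, zone_priority: str, frame: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[zone_priority], next(self._seq), frame))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]


port = PriorityEgress()
for prio, frame in [("low", "test-1"), ("high", "prod-1"), ("low", "test-2"), ("high", "prod-2")]:
    port.enqueue(prio, frame)
print([port.dequeue() for _ in range(4)])  # ['prod-1', 'prod-2', 'test-1', 'test-2']
```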

On the storage systems themselves, QoS functions have been established for some time and are therefore the most mature. Depending on the manufacturer or model, data throughput can be limited in megabytes or I/O operations per second for individual LUNs, pools, or servers, or conversely, prioritized at the same levels. Such functions require continuous performance monitoring, which is usually available under a free license on modern storage systems. Depending on the setting options, lower priority data is then either throttled permanently or only sent to the back of the queue when a bottleneck is looming.
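A common way to enforce an IOPS cap is a token bucket. I use it here only to illustrate the principle; storage vendors do not necessarily implement their QoS limits this way, and the class name, limit, and burst size are hypothetical.

```python
class IopsLimiter:
    """Token bucket capping one LUN at `iops_limit` I/Os per second."""

    def __init__(self, iops_limit: float, burst: int) -> None:
        self.rate = iops_limit       # tokens added per second
        self.capacity = burst        # maximum tokens (allowed burst)
        self.tokens = float(burst)
        self.last = 0.0              # time of the previous decision, in seconds

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the last decision, up to the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # throttled: the I/O must wait or goes to the back of the queue


limiter = IopsLimiter(iops_limit=500, burst=50)
print(sum(limiter.allow(now=0.0) for _ in range(200)))  # 50: burst admitted, rest throttled
```

Over a longer period, the bucket admits no more than the configured rate plus the burst, which corresponds to the "permanently throttled" variant described above.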

However, be aware that applications in a dynamic IT landscape lose priority during their life cycle and that you will have to adjust the settings associated with them time and time again. Whether you're prioritizing storage, SAN, or servers, you should always choose only one of these three levels at which you control the data stream; otherwise, you could easily lose track in the event of a performance problem.

Determining Critical Performance KPIs

The basis for the efficient provision of SAN capacity is good, continuous monitoring of all important SAN performance indicators. You should know your key performance indicators (KPIs) and record their values over a long period of time. Whether you work with vendor performance tools or with higher level central monitoring software that queries the available interfaces (e.g., SNMP, SMI-S, or REST API), defining KPIs for servers, SAN, and storage is decisive. On the server side, the response times or I/O wait times of the LUNs or disks are certainly important, but the data throughput (MBps) of the connected HBAs can also be helpful.

Within the SAN, pay special attention to all ISL connections, because that is where throughput bottlenecks often occur or, as described, buffer credits run out. Alerts for all SAN ports at 80 or 90 percent of the maximum data throughput are also conceivable, which lets you monitor all HBAs and storage ports for this metric. However, you should be a little more conservative with the monitoring parameters and feel your way forward slowly. Experience has shown that approaching bottlenecks are often overlooked if too many alerts arrive regularly and have to be checked manually.
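One way to keep alert volume manageable is to fire only when a port stays above the threshold for several consecutive samples, and only once per episode. The sketch assumes utilization samples in percent at a fixed interval; `min_consecutive` is a hypothetical tuning knob you would adjust as you feel your way forward.

```python
def sustained_breaches(samples_pct: list[float], threshold_pct: float,
                       min_consecutive: int) -> list[int]:
    """Indexes at which utilization has been above the threshold for
    `min_consecutive` samples in a row; each episode alerts only once."""
    alerts = []
    run = 0
    for i, value in enumerate(samples_pct):
        run = run + 1 if value > threshold_pct else 0
        if run == min_consecutive:
            alerts.append(i)
    return alerts


port_utilization = [50, 85, 60, 82, 88, 91, 95, 70, 81]
print(sustained_breaches(port_utilization, 80, 1))  # [1, 3, 8]: every spike alerts
print(sustained_breaches(port_utilization, 80, 3))  # [5]: only the sustained episode
```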

Optimizing Array Performance

For a storage array, the load on the front-end processors, the cache write pending rate, and the response times of all LUNs presented to the servers are important values to monitor. For LUN response times, however, you need to differentiate between random and sequential access, because the block sizes of the two access types differ considerably. For example, a sequential I/O within a storage array often takes far longer than a random I/O because of its larger block size, and this difference is reflected in the response time.
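If your tools deliver per-I/O traces for a LUN, you can separate the two access types before averaging. The following sketch classifies an I/O as sequential when it starts exactly where the previous one ended. This single-stream heuristic is my assumption and will misclassify interleaved streams, and the `Io` record layout is hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Io:
    lba: int           # starting logical block address
    blocks: int        # transfer length in blocks
    latency_ms: float  # measured response time


def latency_by_pattern(ios: list[Io]) -> dict[str, float]:
    """Average latency of sequential and random I/Os on one LUN."""
    groups: dict[str, list[float]] = {"sequential": [], "random": []}
    next_lba = None  # where a sequential follow-up I/O would start
    for io in ios:
        kind = "sequential" if io.lba == next_lba else "random"
        groups[kind].append(io.latency_ms)
        next_lba = io.lba + io.blocks
    return {kind: mean(values) for kind, values in groups.items() if values}


trace = [Io(0, 256, 2.0), Io(256, 256, 6.0), Io(512, 256, 8.0),
         Io(90_000, 8, 1.0), Io(5_000, 8, 3.0)]
print(latency_by_pattern(trace))  # {'sequential': 7.0, 'random': 2.0}
```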

Many of the values in the storage array differ depending on the system architecture and cannot be set across the board; you will need to contact the vendor to find out at which utilization level a component's performance is likely to be impaired and inquire about further critical measuring points, as well. Various vendor tools offer preset limits based on best practices, which can also be adapted to your own requirements.

Additionally, when planning the growth of your environment, make sure that if a central component (e.g., an HBA on the server, a SAN switch, or a cache or processor board) fails, the storage array can continue to work without problems, so that the failure neither massively impairs operations nor causes outages of individual applications.
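A quick way to check this is to compute the utilization the surviving components would have after a failure. The sketch assumes N equal components whose load is redistributed evenly over the remaining N-1. Under that assumption, two controllers at 45 percent each would end up at 90 percent, well above the 70 to 80 percent processor limit in Table 1.

```python
def load_after_failure(loads_pct: list[float]) -> float:
    """Utilization of each survivor if one of N equal components fails and
    its load is spread evenly across the remaining N-1 components."""
    if len(loads_pct) < 2:
        raise ValueError("need at least two redundant components")
    return sum(loads_pct) / (len(loads_pct) - 1)


print(load_after_failure([45, 45]))          # 90.0: two controllers
print(load_after_failure([60, 60, 60, 60]))  # 80.0: four front-end ports
```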

Equipped for Emergencies

Even if you are familiar with the SAN infrastructure and have set up appropriate monitoring at key points (Table 1), performance bottlenecks cannot be completely ruled out. A component failure, a driver problem, or a faulty Fibre Channel cable can cause sudden problems. If such an incident occurs and important applications are affected, it is important to gain a quick overview of the essential performance parameters of the infrastructure. Therefore, it is very helpful if you have the relevant values from unrestricted normal operation as a baseline to compare with the current values of the problem situation.

Table 1: Key Fibre Channel SAN Performance Parameters

| Parameter | Measuring Point | Recommended Value |
|---|---|---|
| SAN ISL port buffer-to-buffer zero credit counter | ISL ports on SAN switch or director | <1,000,000 within 5 minutes |
| Server LUN queue depth | HBA driver | 1-32, depending on the number of LUNs at the front-end port |
| SAN ISL data throughput | ISL ports on SAN switch or director | <80% of maximum data throughput |
| Server I/O response time (average) | Server operating system, volume manager | <10ms |
| Storage system processor utilization | Storage system | <70%-80% |
| Storage system front-end port data throughput | Storage system | <80%-90% |
| Storage system cache write pending rate | Storage system | <30% |
| Storage system LUN I/O service time (average) | Storage system | <10ms |
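The recommended values in Table 1 translate directly into a threshold check. In this sketch, the KPI key names are hypothetical, the queue depth row is left out because it is a setting rather than a limit, and where the table gives a range, the lower bound is used.

```python
# Upper limits from Table 1 (recommended: stay below the limit).
# Where the table gives a range, the lower, more conservative bound is used.
TABLE_1_LIMITS = {
    "isl_zero_credit_per_5min": 1_000_000,
    "isl_throughput_pct": 80,
    "server_io_response_ms": 10,
    "storage_processor_pct": 70,
    "frontend_port_throughput_pct": 80,
    "cache_write_pending_pct": 30,
    "lun_service_time_ms": 10,
}


def kpis_over_limit(current: dict[str, float]) -> dict[str, float]:
    """Return the KPIs whose current value reaches or exceeds its Table 1 limit."""
    return {name: value for name, value in current.items()
            if name in TABLE_1_LIMITS and value >= TABLE_1_LIMITS[name]}


snapshot = {"isl_throughput_pct": 65, "cache_write_pending_pct": 42,
            "lun_service_time_ms": 12.5, "server_io_response_ms": 4}
print(kpis_over_limit(snapshot))  # {'cache_write_pending_pct': 42, 'lun_service_time_ms': 12.5}
```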

Comparing the current values with this baseline would reveal, for example, whether performance-hungry servers or applications are suddenly generating 30 percent more I/O operations after software updates and affecting other servers in the same environment as noisy neighbors, or whether individual connections can no longer process I/O operations because of defective components or cables. However, you need experience in handling and interpreting the performance indicators from these tools to be sufficiently prepared for genuine problems. Storage is often mistakenly suspected of being the source of performance problems.
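Comparing current values with a baseline from normal operation can be scripted in the same spirit. The sketch reports every KPI that deviates from its baseline by more than a tolerance; the KPI names are hypothetical, and the 30 percent default is only borrowed from the example above, not a recommendation.

```python
def deviations_from_baseline(baseline: dict[str, float], current: dict[str, float],
                             tolerance: float = 0.30) -> dict[str, float]:
    """Relative change of every KPI that moved more than `tolerance`
    (0.30 = 30 percent) away from its normal-operation baseline."""
    changed = {}
    for name, base in baseline.items():
        if name not in current or base == 0:
            continue  # no current value, or no meaningful relative change
        change = (current[name] - base) / base
        if abs(change) > tolerance:
            changed[name] = round(change, 3)
    return changed


baseline = {"srv42_iops": 10_000, "port3_mbps": 600, "isl1_zero_credit_5min": 2_000}
current = {"srv42_iops": 13_500, "port3_mbps": 610, "isl1_zero_credit_5min": 450_000}
print(deviations_from_baseline(baseline, current))
# {'srv42_iops': 0.35, 'isl1_zero_credit_5min': 224.0}
```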

If you can make a well-founded and verifiable statement about the load situation of your SAN environment within a few minutes and precisely put your finger on the overload situation and its causes – or provide contrary evidence, backed up with well-founded figures that help to discover where the problem is arising – you will leave observers with a positive impression.

Conclusions

Given compliance with a few important rules and monitoring in the right places, even large Fibre Channel storage networks can be operated with great performance and stability. If you give priority to the most important applications at a suitable point, you can keep them available even in the event of a problem. If you are also trained in the use of performance tools and have the values from normal operation as a reference, the causes of performance problems can often be identified very quickly.