Central logging for Kubernetes users

Shape Shifter

In conventional setups of the past, admins had to troubleshoot fewer nodes per setup, and fewer technologies and protocols, than they do today in the cloud, with its hundreds or even thousands of technologies and protocols for software-defined networking, software-defined storage, and platforms such as OpenStack. In the worst case, network nodes also need to be checked separately. If you are searching for errors in this kind of environment, you cannot possibly gather the required logfiles manually.

The trio of Elasticsearch, Logstash, and Kibana (ELK) has demonstrated its ability to collect logs continuously from affected systems, store them centrally, index the results, and thus make them searchable. However, ELK and its variations prove to be complex beasts. Getting ELK up and running is no mean achievement, and once it is finally running, operations and maintenance remain complex. A full-grown ELK cluster can consume massive resources, as well.

Unfortunately, you don't have a lot of alternatives. In the case of the popular competitor Splunk, a mere glance at the price list is bad for your blood pressure. However, the Grafana developers are sending Loki [1] into battle as a lean solution for central logging, aimed primarily at Kubernetes users who are already using Prometheus [2].

Loki claims to avoid much of the overhead that is a fixed part of ELK. In terms of functionality, it can't keep up with ELK, but most admins don't need many of the features that bloat ELK in the first place. Unfortunately, ELK does not let you sacrifice part of the feature set in return for reduced complexity; Loki from Grafana opens up exactly this door. In this article, I go into detail about Loki and describe which functions are available and which are missing.

The Roots of Loki: Prometheus and Cortex

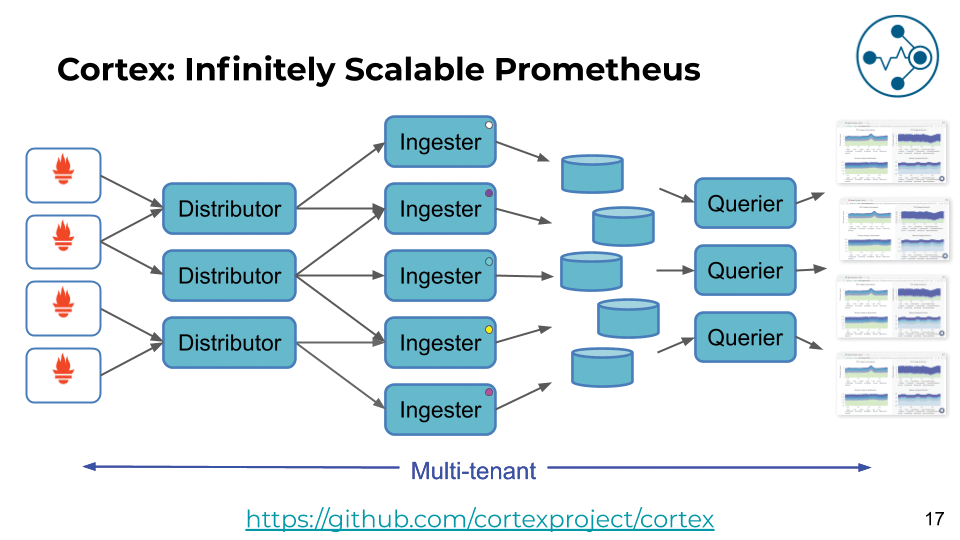

If you trace Loki back to its roots, you will come across some interesting details: Loki is not a completely new development. The Grafana developers based their work on Prometheus, but not directly. Loki was inspired by a Prometheus fork named Cortex [3], which extends the original Prometheus with the horizontal scalability admins had often missed.

Prometheus itself has no scale-out story. Instead, the developers recommend running many instances in parallel and sharding the systems to be monitored across them. Sending the incoming metric data to several Prometheus instances is intended to provide redundancy in such a setup, but this construct forces you to connect different Prometheus instances to a single instance of the visualization tool Grafana, often with unsatisfactory results.

Cortex removes this Prometheus design limitation but has not yet achieved the wide adoption and popularity of its ancestor. It was clearly well known to the Grafana developers, though, because they used Cortex as the starting point in their search for a suitable foundation for their project. This also explains the slogan the Loki developers use to advertise their product: Loki is "like Prometheus, but for logs."

Log Entries as Metric Data

Both Prometheus and its derivative Cortex are tools for monitoring, alerting, and trending (MAT). However, they cannot be compared with well-known monitoring tools such as Icinga 2 or Nagios, which primarily focus on event-based monitoring. MAT systems, by contrast, are designed to collect as many performance metrics as possible from the monitored computers.

From this data, you can read off the current load and estimate future load; monitoring is more or less a by-product. If you know how many instances of the httpd process are running on a system, for example, a suitable component can raise an alert as soon as that number drops below a certain threshold. Loki's radical approach is to treat the log data of the target systems exactly as if it were regular metric data.
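
To illustrate how alerting falls out of collected trend data, here is a minimal Python sketch. It assumes the httpd process counts have already been collected as (timestamp, value) samples; the function name, sample values, and threshold are hypothetical and not part of Prometheus, whose alerting rules are written in PromQL instead.

```python
from typing import List, Optional, Tuple

Sample = Tuple[int, float]  # (Unix timestamp, metric value)


def first_breach(samples: List[Sample], threshold: float) -> Optional[int]:
    """Return the timestamp of the first sample below the threshold, or None.

    The samples are ordinary trend data; the alert is just a rule
    evaluated on top of them (hypothetical helper, not a Prometheus API).
    """
    for timestamp, value in samples:
        if value < threshold:
            return timestamp
    return None


# Hypothetical httpd process counts, one sample every 15 seconds.
httpd_processes = [(1000, 8), (1015, 8), (1030, 5), (1045, 2)]
print(first_breach(httpd_processes, threshold=4))  # 1045
```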

If you have already set up a complete Prometheus installation for an environment, you will have dealt with labels, which Prometheus uses to distinguish between variants of a metric. Admins typically use labels for specific values: An http_return_codes metric could have a value label, which in turn takes values such as 200, 403, 404, and so on. Ultimately, labels help admins keep the total number of metrics reasonably manageable, limiting the overhead needed for storage and processing.
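
The following Python sketch shows why labels keep the metric count manageable: one metric name, http_return_codes, carries a value label, and each distinct label set becomes its own series. The sample return codes are invented, and the rendering only imitates the Prometheus text exposition style (no escaping of special characters).

```python
from typing import Dict, Tuple

# A series is identified by its metric name plus its complete label set.
SeriesKey = Tuple[str, Tuple[Tuple[str, str], ...]]


def series_key(name: str, labels: Dict[str, str]) -> SeriesKey:
    # Sorting makes the key independent of the order labels were given in.
    return name, tuple(sorted(labels.items()))


def exposition_line(key: SeriesKey, value: float) -> str:
    """Render one series similar to the Prometheus text format (simplified)."""
    name, labels = key
    rendered = ",".join(f'{k}="{v}"' for k, v in labels)
    return f"{name}{{{rendered}}} {value:g}"


# Count invented responses: one metric name, several label values.
counters: Dict[SeriesKey, float] = {}
for code in ["200", "200", "404", "403", "200"]:
    key = series_key("http_return_codes", {"value": code})
    counters[key] = counters.get(key, 0) + 1

for key in sorted(counters):
    print(exposition_line(key, counters[key]))
# http_return_codes{value="200"} 3
# http_return_codes{value="403"} 1
# http_return_codes{value="404"} 1
```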

Different from ELK

Loki attaches itself to these labels and uses them to index the incoming log messages, which marks the biggest architectural difference from ELK. For this very reason, Loki is far more efficient and lightweight: It does not meticulously evaluate incoming log messages and store them on the basis of defined rules and keywords; rather, it works on the basis of the labels attached to them.
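
A toy Python model of label-based indexing makes the contrast with ELK concrete: each unique label set is one append-only stream, and the log text is stored verbatim without being tokenized or keyword-indexed. The class and its methods are hypothetical and far simpler than Loki's real storage; the log lines are invented.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, FrozenSet, List, Tuple

LabelSet = FrozenSet[Tuple[str, str]]


class LabelIndexedStore:
    """Toy log store that indexes only labels, never the log text."""

    def __init__(self) -> None:
        # One append-only stream per unique label set.
        self.streams: DefaultDict[LabelSet, List[Tuple[int, str]]] = defaultdict(list)

    def push(self, labels: Dict[str, str], timestamp: int, line: str) -> None:
        # The line is stored as-is; no parsing, no keyword index.
        self.streams[frozenset(labels.items())].append((timestamp, line))

    def select(self, **wanted: str) -> List[Tuple[int, str]]:
        """Return entries from every stream whose labels include `wanted`."""
        result: List[Tuple[int, str]] = []
        for labels, entries in self.streams.items():
            if set(wanted.items()) <= labels:
                result.extend(entries)
        return sorted(result)


store = LabelIndexedStore()
store.push({"app": "consul", "env": "prod"}, 1, "agent: Synced service")
store.push({"app": "consul", "env": "dev"}, 2, "agent: Check is now critical")
store.push({"app": "consul", "env": "prod"}, 3, "raft: heartbeat timeout")
print(store.select(env="prod"))
# [(1, 'agent: Synced service'), (3, 'raft: heartbeat timeout')]
```

Finding a keyword inside the text would mean scanning the selected streams, which is the price paid for the much smaller index.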

What sounds complicated in theory is simple and comprehensible in practice. Suppose, for example, an instance of the cluster consensus manager Consul is running in a Kubernetes environment and producing log messages. If you rely on Prometheus for monitoring, you will use it to monitor Consul on the hosts.

One metric that Prometheus uses for Consul is consul_service_health_status. If you are running both a development instance and a production instance of the environment, you could define an env label that takes the value dev or prod. With Grafana linked to Prometheus, separate graphs could then be drawn for each label value. Loki does something very similar, classifying the stored log entries by label so that you can display the log entries for prod and dev separately.
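
Selecting log entries by label uses the same curly-brace syntax Prometheus uses for series, for example {app="consul", env="prod"}. The sketch below parses such a selector and matches it against a stream's labels; it is an assumption-laden simplification that supports only the = operator (real selectors also know !=, =~, and !~) and does not handle commas inside values.

```python
import re
from typing import Dict

# One equality matcher such as env="prod" inside the braces.
_MATCHER = re.compile(r'\s*([A-Za-z_][A-Za-z0-9_]*)\s*=\s*"([^"]*)"\s*')


def parse_selector(selector: str) -> Dict[str, str]:
    """Parse a selector like {app="consul", env="prod"} (equality only)."""
    body = selector.strip()
    if not (body.startswith("{") and body.endswith("}")):
        raise ValueError(f"not a stream selector: {selector!r}")
    matchers: Dict[str, str] = {}
    for part in body[1:-1].split(","):
        if not part.strip():
            continue
        m = _MATCHER.fullmatch(part)
        if m is None:
            raise ValueError(f"unsupported matcher: {part!r}")
        matchers[m.group(1)] = m.group(2)
    return matchers


def matches(selector: str, stream_labels: Dict[str, str]) -> bool:
    # Every matcher must hold; extra labels on the stream are ignored.
    wanted = parse_selector(selector)
    return all(stream_labels.get(k) == v for k, v in wanted.items())


prod = {"app": "consul", "env": "prod", "instance": "node1"}
print(matches('{app="consul", env="prod"}', prod))  # True
print(matches('{env="dev"}', prod))  # False
```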

Although not as convenient as the full-text search feature to which ELK users are accustomed, the Loki solution is far more frugal in terms of resources. Because Prometheus and its Cortex fork are easy to configure dynamically, Loki is far better suited for operation in containers, as well.

Loki in Practice

Loki is easy to virtualize; in fact, that was one of the developers' core requirements. Because Loki is far less resource-hungry than ELK, it does not need massive hardware. Like Prometheus, Loki is a Go application, which you can get from GitHub [1]. However, you don't have to build and launch Loki as a Go binary yourself: In the best cloud style, the Loki developers offer Docker images on Docker Hub, so you can deploy Loki locally straightaway. The only remaining task is to pass a configuration file to the container.
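
Passing the configuration file to the container typically means mounting it and pointing Loki at it. The Python sketch below only assembles the docker run command line; the grafana/loki image, the default HTTP port 3100, and the -config.file flag follow the Loki documentation as I understand it, whereas the host directory, file name, container name, and image tag are assumptions you should adapt.

```python
import shlex
from typing import List


def loki_docker_command(config_dir: str, image_tag: str = "latest") -> List[str]:
    """Build a `docker run` argv that hands a config file to the Loki image.

    Assumptions: the config lives in `config_dir/loki-config.yaml`, and
    Loki listens on its default HTTP port 3100.
    """
    return [
        "docker", "run", "-d", "--name", "loki",
        # Mount the host directory holding the configuration file.
        "-v", f"{config_dir}:/mnt/config",
        # Publish Loki's HTTP port for Promtail and Grafana.
        "-p", "3100:3100",
        f"grafana/loki:{image_tag}",
        # Arguments after the image name go to the Loki binary itself.
        "-config.file=/mnt/config/loki-config.yaml",
    ]


print(shlex.join(loki_docker_command("/srv/loki")))
```

To actually start the container, pass the list to subprocess.run(cmd, check=True) on a host with Docker installed.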

Under the Hood

What looks so easy at first glance requires several components on the inside that must interact, in the style of a cloud-native application. However, this architecture is not really specific to Loki: It largely recycles development work already done for Cortex (Figure 1). Because Cortex works well, there's no reason why Loki shouldn't.

Log data that reaches Loki is received by the Distributor component, of which several instances usually run in parallel. In large-scale systems, the number of incoming log messages can quickly reach many millions, depending on the type of services running in the cloud, so a single Distributor instance would hardly suffice. However, it would also be problematic to drop these incoming log messages into a database without filtering and processing: Even if the database survived the onslaught, it would inevitably become a bottleneck in the logging setup.

The active Distributor instances therefore sort the incoming data into streams on the basis of their labels and forward them to Ingesters, which are responsible for processing the data. In concrete terms, processing means building log packages (chunks) from the incoming log messages, which can be compressed with Gzip. Like the Distributors, several Ingester instances run at the same time. They form a ring, over which a Distributor applies a consistent hash algorithm to determine which Ingester instance is currently responsible for a particular stream, that is, a particular set of labels.
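
Consistent hashing over a ring can be sketched in a few lines of Python. This is not Loki's or Cortex's ring implementation: the choice of SHA-256, 64 tokens per Ingester, the Ingester names, and the absence of replication are all assumptions. It does show the key property: every entry of a stream lands on the same Ingester, and adding an Ingester only moves the streams it takes over.

```python
import bisect
import hashlib
from typing import Dict, List, Tuple


def _hash(key: str) -> int:
    # Stable 32-bit hash; Python's built-in hash() is salted per process.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:4], "big")


class HashRing:
    """Minimal consistent hash ring mapping streams to Ingesters."""

    def __init__(self, ingesters: List[str], tokens_per_ingester: int = 64) -> None:
        # Each Ingester owns several points ("tokens") on the ring.
        self._ring: List[Tuple[int, str]] = sorted(
            (_hash(f"{name}-{i}"), name)
            for name in ingesters
            for i in range(tokens_per_ingester)
        )
        self._tokens = [token for token, _ in self._ring]

    def owner(self, labels: Dict[str, str]) -> str:
        # Hash the whole label set, so one stream always maps to one Ingester.
        key = ",".join(f"{k}={v}" for k, v in sorted(labels.items()))
        # First token at or after the stream's hash, wrapping around the ring.
        idx = bisect.bisect_left(self._tokens, _hash(key)) % len(self._tokens)
        return self._ring[idx][1]


ring = HashRing(["ingester-a", "ingester-b", "ingester-c"])
stream = {"app": "consul", "env": "prod"}
print(ring.owner(stream))
```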

Once an Ingester has completed a chunk, the final step en route to central logging follows: storing the information in the storage system to which Loki is connected. At this point, Loki differs considerably from its predecessor Prometheus, which is built around a time series database.

Loki, on the other hand, does not handle metrics but text, so it stores the chunks and the information about where they reside separately. The index lists all known chunks of log data, whereas the chunks themselves reside in the chunk storage configured for Loki.
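
The split between a small index and separately stored, compressed chunks can be modeled as follows. In this Python sketch, two in-memory dictionaries stand in for the index back end (e.g., Cassandra) and the object store (e.g., Amazon S3); the object key layout and the tab-separated chunk encoding are hypothetical and unrelated to Loki's real chunk format.

```python
import gzip
from typing import Dict, List, Tuple


class ChunkStore:
    """Sketch of Loki-style storage: small index, separate chunk objects."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}  # stands in for an object store
        # Index: stream -> list of (first timestamp, last timestamp, object key).
        self.index: Dict[str, List[Tuple[int, int, str]]] = {}

    def flush(self, stream: str, entries: List[Tuple[int, str]]) -> str:
        """Gzip one completed, non-empty chunk and record where it lives.

        Assumes log lines contain no newline characters.
        """
        payload = "\n".join(f"{ts}\t{line}" for ts, line in entries).encode()
        first, last = entries[0][0], entries[-1][0]
        key = f"chunks/{stream}/{first}-{last}"  # hypothetical key layout
        self.objects[key] = gzip.compress(payload)
        self.index.setdefault(stream, []).append((first, last, key))
        return key

    def read(self, stream: str, start: int, end: int) -> List[Tuple[int, str]]:
        """Use the index to fetch only chunks overlapping [start, end]."""
        result: List[Tuple[int, str]] = []
        for first, last, key in self.index.get(stream, []):
            if last < start or first > end:
                continue  # chunk lies entirely outside the time range
            for row in gzip.decompress(self.objects[key]).decode().split("\n"):
                ts, line = row.split("\t", 1)
                if start <= int(ts) <= end:
                    result.append((int(ts), line))
        return result


store = ChunkStore()
store.flush("consul-prod", [(1, "a"), (2, "b")])
store.flush("consul-prod", [(10, "c"), (11, "d")])
print(store.read("consul-prod", 2, 10))  # [(2, 'b'), (10, 'c')]
```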

An interesting aspect of the Loki architecture is that it almost completely separates the read and write paths. If you read logs from Loki through Grafana, a third Loki service, the Querier, handles the request by accessing the index and the stored chunks in the background. It also briefly contacts the Ingesters to find log entries that have not yet been moved to storage. Otherwise, read and write operations are completely independent.
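
The Querier's job of combining two sources can be sketched as a merge of sorted streams. The Python function below is hypothetical, not Loki's Querier code; treating identical (timestamp, line) pairs as duplicates is an assumption that covers entries that already reached storage but are still held by an Ingester.

```python
import heapq
from typing import Iterable, List, Tuple

Entry = Tuple[int, str]  # (timestamp, log line)


def query(stored: Iterable[Entry], in_memory: Iterable[Entry],
          start: int, end: int) -> List[Entry]:
    """Merge entries from stored chunks with not-yet-flushed Ingester data.

    Identical entries from both sources are returned only once.
    """
    merged = heapq.merge(sorted(stored), sorted(in_memory))
    result: List[Entry] = []
    for entry in merged:
        if not (start <= entry[0] <= end):
            continue  # outside the requested time range
        if result and result[-1] == entry:
            continue  # same entry seen in both sources
        result.append(entry)
    return result


from_store = [(1, "boot"), (5, "sync")]
from_ingesters = [(5, "sync"), (7, "timeout")]
print(query(from_store, from_ingesters, start=2, end=10))
# [(5, 'sync'), (7, 'timeout')]
```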

Scaling Works

Looking at the overall Loki construct, it becomes clear that the design of the solution fits perfectly with the requirements faced by the developers: scalable, cost-effective with regard to the required hardware, and as flexible as possible.

The index ends up in Cassandra, Bigtable, or DynamoDB, all of which are known to scale horizontally almost without restriction. The chunks are stored in an object store such as Amazon S3, which also scales well. Loki's own components, such as the Distributors and Queriers, are stateless and can therefore be scaled out to match requirements.

Only the Ingester is a bit tricky: Unlike the other components, it is a stateful application that simply must not fail. However, the ring mechanism provides the features required for sharding, so you can deploy as many Ingesters as you need. Loki thus scales horizontally practically without limits. Because it does not index the contents of the incoming log data, it also has a noticeably smaller hardware footprint than a comparable ELK stack.

The Loki documentation contains detailed tips on scalability. In brief, to scale horizontally, Loki needs the Consul cluster consensus mechanism to coordinate work across node boundaries. If you want to use Loki in this way, it is a very good idea to read and understand the corresponding documentation, because a scaled-out Loki setup is far more complex than a single instance.

Loki is noticeably easier to run in a highly available configuration than Prometheus, because Loki ultimately does not store the payload (i.e., the log data) itself. This task is handled by external storage, which provides the high availability on which Loki relies.

Where Do the Logs Originate?

So far, I have described how Loki works internally and how it stores and manages data. However, I have not yet explained how log messages make their way to Loki. One thing is clear: Prometheus exporters such as the Node Exporter are not suitable here, because they are tied to numeric metric data. Prometheus itself cannot process metric data other than numbers, which is why the existing Prometheus exporters cannot handle log messages.

In the setup described here, the Loki tool Promtail attaches itself to existing logging sources, picks up the entries there, and sends them to predefined instances of the Loki server. The "tail" in the name is no coincidence: Much like the Linux tail command, Promtail follows the ends of logfiles and forwards new lines, tagged with Prometheus-style labels.
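
The tail-like behavior can be sketched as a reader that remembers how far it has shipped a file and returns only complete new lines, each tagged with labels. This Python class is a hypothetical simplification, not Promtail's code: it keeps its position only in memory and treats a shrinking file as truncation, whereas a production shipper also persists positions and handles rotation carefully. The job label is an example value.

```python
import os
import tempfile
from typing import Dict, List, Tuple


class FileTailer:
    """Follow a logfile like `tail -f`, remembering the read position."""

    def __init__(self, path: str, labels: Dict[str, str]) -> None:
        self.path = path
        self.labels = labels  # attached to every shipped line
        self.offset = 0       # byte position up to which lines were shipped

    def poll(self) -> List[Tuple[Dict[str, str], str]]:
        """Return complete lines appended since the last poll, with labels."""
        if os.path.getsize(self.path) < self.offset:
            self.offset = 0  # file shrank (truncated); start over
        with open(self.path, "rb") as f:
            f.seek(self.offset)
            data = f.read()
        end = data.rfind(b"\n")
        if end == -1:
            return []  # no complete line yet; keep waiting
        self.offset += end + 1  # a trailing partial line waits for next time
        text = data[:end].decode("utf-8", errors="replace")
        return [(dict(self.labels), line) for line in text.split("\n")]


with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "app.log")
    with open(log_path, "wb") as f:
        f.write(b"one\ntwo\npart")
    tailer = FileTailer(log_path, {"job": "varlogs"})
    first = [line for _, line in tailer.poll()]
    with open(log_path, "ab") as f:
        f.write(b"ial\nthree\n")
    second = [line for _, line in tailer.poll()]
print(first, second)  # ['one', 'two'] ['partial', 'three']
```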

During operation, you can also let Promtail process and manipulate (rewrite) log entries. Experienced Prometheus jockeys will quickly notice that Loki differs fundamentally from Prometheus in one design aspect: Whereas Prometheus fetches its metric data from the monitoring targets itself, Loki follows the push principle: The Promtail instances send their data to Loki.
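
Because Loki is push-based, any client that can send HTTP requests can deliver log entries. The Python sketch below builds, but does not send, a request for the /loki/api/v1/push endpoint with the JSON body shape (streams carrying a label set plus nanosecond-string timestamps and lines) described in the current Loki HTTP API documentation as I understand it; older releases used a legacy path, and the host, labels, and line here are invented.

```python
import json
import urllib.request
from typing import Dict, List, Tuple


def build_push_request(base_url: str, labels: Dict[str, str],
                       entries: List[Tuple[int, str]]) -> urllib.request.Request:
    """Build (but do not send) a POST to Loki's /loki/api/v1/push endpoint.

    `entries` holds (Unix epoch in nanoseconds, log line) pairs.
    """
    body = {
        "streams": [{
            "stream": labels,  # the label set identifying this stream
            # Timestamps are sent as strings of nanoseconds.
            "values": [[str(ts_ns), line] for ts_ns, line in entries],
        }]
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/loki/api/v1/push",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_push_request(
    "http://localhost:3100",
    {"app": "consul", "env": "prod"},
    [(1700000000000000000, "agent: Synced service")],
)
print(req.full_url, req.get_method())
# urllib.request.urlopen(req) would actually deliver the entries.
```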

Graphics with Grafana

Because Loki comes from the Grafana developers, the natural tool for displaying the aggregated log data is Grafana; version 6.0 or newer offers the necessary functions. The rest is simple: Set up Loki as a data source as you would for Prometheus, and Grafana then displays the corresponding entries.
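
Besides clicking through the Grafana UI, you can register the data source through Grafana's HTTP API (POST /api/datasources). The following Python sketch only builds that request; the Grafana and Loki URLs and the API token are hypothetical placeholders, and access set to proxy means the Grafana server forwards queries to Loki.

```python
import json
import urllib.request


def loki_datasource_request(grafana_url: str, loki_url: str,
                            api_token: str) -> urllib.request.Request:
    """Build a Grafana HTTP API call that registers Loki as a data source."""
    body = {
        "name": "Loki",
        "type": "loki",     # Grafana's built-in Loki data source type
        "url": loki_url,    # where the Grafana server reaches Loki
        "access": "proxy",  # Grafana's back end proxies the queries
    }
    return urllib.request.Request(
        f"{grafana_url.rstrip('/')}/api/datasources",
        data=json.dumps(body).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
        method="POST",
    )


# Hypothetical hosts and token.
req = loki_datasource_request("http://grafana:3000", "http://loki:3100", "hypothetical-token")
print(req.full_url)  # http://grafana:3000/api/datasources
```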

Naturally, Loki's query language has certain similarities to that of Cortex and therefore Prometheus, and even complex queries are possible. At the end of the day, Grafana turns out to be a useful front end for displaying logs from the Loki back end. If you prefer a less graphical approach, the logcli command-line tool is an option, too; as expected, however, it is not particularly convenient.
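
Grafana and logcli ultimately send queries to Loki's HTTP API, which you can also call directly. This Python sketch builds a URL for the /loki/api/v1/query_range endpoint; the query uses a Prometheus-style stream selector followed by a |= line filter, which is LogQL syntax as currently documented. The host, labels, filter string, and time window are assumptions.

```python
import time
from urllib.parse import urlencode


def query_range_url(base_url: str, logql: str, start_ns: int, end_ns: int,
                    limit: int = 100) -> str:
    """Build a GET URL for Loki's /loki/api/v1/query_range endpoint."""
    params = {
        "query": logql,     # stream selector plus optional line filters
        "start": start_ns,  # Unix epoch in nanoseconds
        "end": end_ns,
        "limit": limit,     # maximum number of log lines returned
    }
    return f"{base_url.rstrip('/')}/loki/api/v1/query_range?{urlencode(params)}"


now = time.time_ns()
# Lines from the prod Consul stream containing "error" in the last hour.
print(query_range_url("http://localhost:3100",
                      '{app="consul", env="prod"} |= "error"',
                      now - 3600 * 10**9, now))
```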

Strong Duet

In principle, the team of Loki and Promtail can be used completely independently of a container solution, just like Prometheus. However, the developers doubtlessly prefer to see them used in combination with Kubernetes, and indeed, the solution is particularly well suited to Kubernetes.

Prometheus and Cortex have been closely tied to Kubernetes from the very beginning, so much so that Prometheus can attach itself directly to the Kubernetes master servers to retrieve the list of systems to monitor with fully automated node discovery. Additionally, Prometheus is perfectly capable of collecting, interpreting, and storing the metric data output by Kubernetes, automatically processing all labels that belong to the metric data.

Loki ultimately inherits all these advantages: If Loki is attached to an existing Kubernetes Prometheus setup, the same label rules can be recycled, making the setup easy to use.
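
Recycling label rules usually means applying the same relabeling that turns Kubernetes discovery metadata into labels. The Python sketch below imitates a simplified replace action: source label values are joined with ";", matched against an anchored regex, and written to a target label, after which internal __-prefixed labels are dropped. The __meta_kubernetes_* names are real Prometheus service discovery labels, but mapping the namespace to env is a hypothetical convention, and Python's \1 replaces Prometheus's $1 syntax.

```python
import re
from typing import Dict, List, TypedDict


class RelabelRule(TypedDict):
    source_labels: List[str]
    target_label: str
    regex: str
    replacement: str  # Python syntax (\1), not Prometheus syntax ($1)


def relabel(meta: Dict[str, str], rules: List[RelabelRule]) -> Dict[str, str]:
    """Apply simplified 'replace' relabeling, then drop __-prefixed labels."""
    labels = dict(meta)
    for rule in rules:
        value = ";".join(labels.get(name, "") for name in rule["source_labels"])
        match = re.fullmatch(rule["regex"], value)  # regex is anchored
        if match:
            labels[rule["target_label"]] = match.expand(rule["replacement"])
    # Internal meta labels are not attached to the stored stream.
    return {k: v for k, v in labels.items() if not k.startswith("__")}


meta = {
    "__meta_kubernetes_namespace": "prod",
    "__meta_kubernetes_pod_name": "consul-0",
    "__meta_kubernetes_pod_label_app": "consul",
}
rules: List[RelabelRule] = [
    {"source_labels": ["__meta_kubernetes_namespace"], "target_label": "env",
     "regex": "(.*)", "replacement": r"\1"},
    {"source_labels": ["__meta_kubernetes_pod_label_app"], "target_label": "app",
     "regex": "(.*)", "replacement": r"\1"},
    {"source_labels": ["__meta_kubernetes_pod_name"], "target_label": "pod",
     "regex": "(.*)", "replacement": r"\1"},
]
print(relabel(meta, rules))  # {'env': 'prod', 'app': 'consul', 'pod': 'consul-0'}
```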

Monitoring Loki

The best solution for centralized logging is useless if it is not available in a crisis. The same applies if important log entries never make it into Loki. However, the Loki developers offer support for both scenarios. A small service named Loki Canary writes log entries of its own and checks whether Loki actually receives them, so that gaps in log collection become visible.
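
Loki Canary works by writing its own log entries and checking that Loki returns them. The bookkeeping behind such an audit can be sketched in Python as follows; the audit function and the sample run are hypothetical and only show how numbered canary entries reveal lost and late arrivals, not how the real tool is implemented.

```python
from typing import Iterable, List, Tuple


def audit(sent: int, received: Iterable[int]) -> Tuple[List[int], List[int]]:
    """Compare canary sequence numbers 0..sent-1 with what came back.

    Returns (missing, out_of_order): entries that never arrived, and
    entries that arrived after a higher sequence number had been seen.
    """
    seen = set()
    out_of_order: List[int] = []
    highest = -1
    for seq in received:
        if seq < highest:
            out_of_order.append(seq)
        highest = max(highest, seq)
        seen.add(seq)
    missing = [seq for seq in range(sent) if seq not in seen]
    return missing, out_of_order


# Hypothetical run: six entries written, entry 3 lost, entry 1 late.
print(audit(6, [0, 2, 1, 4, 5]))  # ([3], [1])
```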

Both Loki and Promtail can also expose metric data about themselves through their Prometheus interfaces, if required, which you can then integrate into Prometheus accordingly. The Loki Operations Manual lists the relevant metrics.

No Multitenancy

Finally, Loki has unfortunately also inherited a "feature" from Prometheus: It does not support user administration and therefore treats all users that access it equally, which makes it unsuitable for multitenancy. Instead, you need to run one Loki instance per tenant and secure each one so that external intruders cannot access it.