Prowling AWS

Snooping Around

Hearing that an external, independent organization has been commissioned to spend time actively attacking the cloud estate you have been tasked with helping to secure can be a little daunting, unless, of course, you are involved with a project at the seminal greenfield stage, and you have yet to learn what goes where and how it all fits together. To add to the complexity, if you are using Amazon Web Services (AWS), AWS Organizations can segregate departmental duties and, therefore, security controls between multiple accounts; commonly this might mean the use of 20 or more accounts. On top of these concerns, if you blink a little too slowly, it's quite possible that you will miss a new AWS feature or service that needs to be understood and, once deployed, secured.

Fret not, however, because a few open source tools can help mitigate the pain before an external auditor or penetration tester receives permission to attack your precious cloud infrastructure. In this article, I show you how to install and run the highly sophisticated tool Prowler [1]. With the use of just a handful of its many features, you can test against the industry-consensus benchmarks from the Center for Internet Security (CIS) [2].

What Are You Lookin' At?

When you run Prowler against the overwhelmingly dominant cloud provider AWS, you get the chance to apply an impressive 49 test criteria of the CIS AWS Foundations Benchmark. For some additional context, sections on the AWS Security Blog [3] are worth digging into further.

To bring more to the party, the sophisticated Prowler also stealthily prowls for issues in compliance with the General Data Protection Regulation (GDPR) of the European Union and the Health Insurance Portability and Accountability Act (HIPAA) of the United States. Beyond the CIS benchmark, Prowler offers roughly 40 additional checks, which it refers to as "extras". Table 1 shows the type and number of checks that Prowler can run, and the right-hand column offers the group name you should use to get Prowler to test against specific sets of checks.

Table 1: Checks and Group Names

| Description | No./Type of Checks | Group Name |
|---|---|---|
| Identity and access management | 22 checks | group1 |
| Logging | 9 checks | group2 |
| Monitoring | 14 checks | group3 |
| Networking | 4 checks | group4 |
| Critical priority CIS | CIS Level 1 | cislevel1 |
| Critical and high-priority CIS | CIS Level 2 | cislevel2 |
| Extras | 39 checks | extras |
| Forensics | See README file [4] | forensics-ready |
| GDPR | See website [5] | gdpr |
| HIPAA | See website [6] | hipaa |

Porch Climbing

To start getting your hands dirty, install Prowler and see what it can do to help improve the visibility of your security issues. To begin, go to the GitHub page [1] held under author Toni de la Fuente's account; he also has a blogging site [7] that offers a number of useful insights into the vast landscape of security tools available to users these days and where to find them. I recommend a visit, whatever your level of experience.

The next step is cloning the repository with the git command [8] (Listing 1). As you can see at the beginning of the command's output, the prowler/ directory will hold the code.

Listing 1: Installing Prowler

$ git clone https://github.com/toniblyx/prowler.git
Cloning into 'prowler'...
remote: Enumerating objects: 50, done.
remote: Counting objects: 100% (50/50), done.
remote: Compressing objects: 100% (41/41), done.
remote: Total 2955 (delta 6), reused 43 (delta 5), pack-reused 2905
Receiving objects: 100% (2955/2955), 971.57 KiB | 915.00 KiB/s, done.
Resolving deltas: 100% (1934/1934), done.

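The README file recommends installing the ansi2html and detect-secrets packages with the pip Python package installer. The following command also installs awscli, the AWS command-line client that you will need shortly: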

$ pip install awscli ansi2html detect-secrets

If you don't have pip installed, fret not: Use your package manager. For example, on Debian derivatives, use the apt command:

$ apt install python-pip

On Red Hat Enterprise Linux derivatives and other distributions such as openSUSE or Arch Linux, you can find instructions online [9] if you're not sure of the package names.

Now you're just about set to run Prowler from a local machine perspective. Before continuing, however, the other part of the process is configuring the correct AWS Identity and Access Management (IAM) permissions.

An Access Key and a Secret Key attached to a user are needed from AWS, with the correct permissions made available to the user through a role. Don't worry, though: The permissions don't give away the crown jewels. They do, however, reveal any potential holes in your security posture, so the results need to be stored with care, as do all access credentials to AWS.

You might call the List/Read/Describe actions "read-only" if you wanted to summarize succinctly the level of access required by Prowler. You can either use the SecurityAudit policy, which is provided by AWS directly, or the custom set of permissions in Listing 2 for the role to be attached to the user in IAM. According to the GitHub README file, the custom set opens up DescribeTrustedAdvisorChecks in addition to the actions offered by the SecurityAudit policy.

Listing 2: Permissions for IAM Role

{
  "Version": "2012-10-17",
  "Statement": [{
    "Action": [
      "acm:describecertificate",
      "acm:listcertificates",
      "apigateway:get",
      "autoscaling:describe*",
      "cloudformation:describestack*",
      "cloudformation:getstackpolicy",
      "cloudformation:gettemplate",
      "cloudformation:liststack*",
      "cloudfront:get*",
      "cloudfront:list*",
      "cloudtrail:describetrails",
      "cloudtrail:geteventselectors",
      "cloudtrail:gettrailstatus",
      "cloudtrail:listtags",
      "cloudwatch:describe*",
      "codecommit:batchgetrepositories",
      "codecommit:getbranch",
      "codecommit:getobjectidentifier",
      "codecommit:getrepository",
      "codecommit:list*",
      "codedeploy:batch*",
      "codedeploy:get*",
      "codedeploy:list*",
      "config:deliver*",
      "config:describe*",
      "config:get*",
      "datapipeline:describeobjects",
      "datapipeline:describepipelines",
      "datapipeline:evaluateexpression",
      "datapipeline:getpipelinedefinition",
      "datapipeline:listpipelines",
      "datapipeline:queryobjects",
      "datapipeline:validatepipelinedefinition",
      "directconnect:describe*",
      "dynamodb:listtables",
      "ec2:describe*",
      "ecr:describe*",
      "ecs:describe*",
      "ecs:list*",
      "elasticache:describe*",
      "elasticbeanstalk:describe*",
      "elasticloadbalancing:describe*",
      "elasticmapreduce:describejobflows",
      "elasticmapreduce:listclusters",
      "es:describeelasticsearchdomainconfig",
      "es:listdomainnames",
      "firehose:describe*",
      "firehose:list*",
      "glacier:listvaults",
      "guardduty:listdetectors",
      "iam:generatecredentialreport",
      "iam:get*",
      "iam:list*",
      "kms:describe*",
      "kms:get*",
      "kms:list*",
      "lambda:getpolicy",
      "lambda:listfunctions",
      "logs:DescribeLogGroups",
      "logs:DescribeMetricFilters",
      "rds:describe*",
      "rds:downloaddblogfileportion",
      "rds:listtagsforresource",
      "redshift:describe*",
      "route53:getchange",
      "route53:getcheckeripranges",
      "route53:getgeolocation",
      "route53:gethealthcheck",
      "route53:gethealthcheckcount",
      "route53:gethealthchecklastfailurereason",
      "route53:gethostedzone",
      "route53:gethostedzonecount",
      "route53:getreusabledelegationset",
      "route53:listgeolocations",
      "route53:listhealthchecks",
      "route53:listhostedzones",
      "route53:listhostedzonesbyname",
      "route53:listqueryloggingconfigs",
      "route53:listresourcerecordsets",
      "route53:listreusabledelegationsets",
      "route53:listtagsforresource",
      "route53:listtagsforresources",
      "route53domains:getdomaindetail",
      "route53domains:getoperationdetail",
      "route53domains:listdomains",
      "route53domains:listoperations",
      "route53domains:listtagsfordomain",
      "s3:getbucket*",
      "s3:getlifecycleconfiguration",
      "s3:getobjectacl",
      "s3:getobjectversionacl",
      "s3:listallmybuckets",
      "sdb:domainmetadata",
      "sdb:listdomains",
      "ses:getidentitydkimattributes",
      "ses:getidentityverificationattributes",
      "ses:listidentities",
      "ses:listverifiedemailaddresses",
      "ses:sendemail",
      "sns:gettopicattributes",
      "sns:listsubscriptionsbytopic",
      "sns:listtopics",
      "sqs:getqueueattributes",
      "sqs:listqueues",
      "support:describetrustedadvisorchecks",
      "tag:getresources",
      "tag:gettagkeys"
    ],
    "Effect": "Allow",
    "Resource": "*"
  }]
}

Have a close look at the permissions to make sure you're happy with them. As you can see, a lot of list and get actions cover a massive amount of AWS's ever-growing number of services. In a moment, I'll return to this policy after setting up the AWS configuration.
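To make that close look a little easier, a short script can flag any action whose verb does not look like a read operation. The following is a minimal sketch in Python, not part of Prowler: The helper name find_write_like_actions and the list of "read-like" prefixes are my own assumptions (a rough heuristic, not an official AWS classification), and the sample policy is a small excerpt of Listing 2.

```python
import json

# Assumption: verbs starting with these prefixes are treated as read-only.
# This is a heuristic for illustration, not an official AWS classification.
READ_PREFIXES = ("describe", "get", "list", "batchget", "lookup")


def find_write_like_actions(policy_text):
    """Return actions from Allow statements whose verb lacks a read-like prefix."""
    policy = json.loads(policy_text)
    statements = policy["Statement"]
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    flagged = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):  # a single action may be a bare string
            actions = [actions]
        for action in actions:
            verb = action.partition(":")[2]  # text after "service:"
            if not verb.lower().startswith(READ_PREFIXES):
                flagged.append(action)
    return flagged


# Excerpt of Listing 2, shortened for the example.
SAMPLE_POLICY = """
{
  "Version": "2012-10-17",
  "Statement": [{
    "Action": [
      "acm:listcertificates",
      "ec2:describe*",
      "iam:generatecredentialreport",
      "ses:listidentities",
      "ses:sendemail",
      "support:describetrustedadvisorchecks"
    ],
    "Effect": "Allow",
    "Resource": "*"
  }]
}
"""

if __name__ == "__main__":
    for action in find_write_like_actions(SAMPLE_POLICY):
        print("review:", action)
```

Run against this excerpt, the sketch flags iam:generatecredentialreport and ses:sendemail. The latter is worth a second look, because sending email is not a read-only action. A flagged entry is only a prompt to review, not proof of a problem.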

Gate Jumping

For those who aren't familiar with the process of setting up credentials for AWS, I'll zoom through it briefly. The main focus will be on Prowler in action.

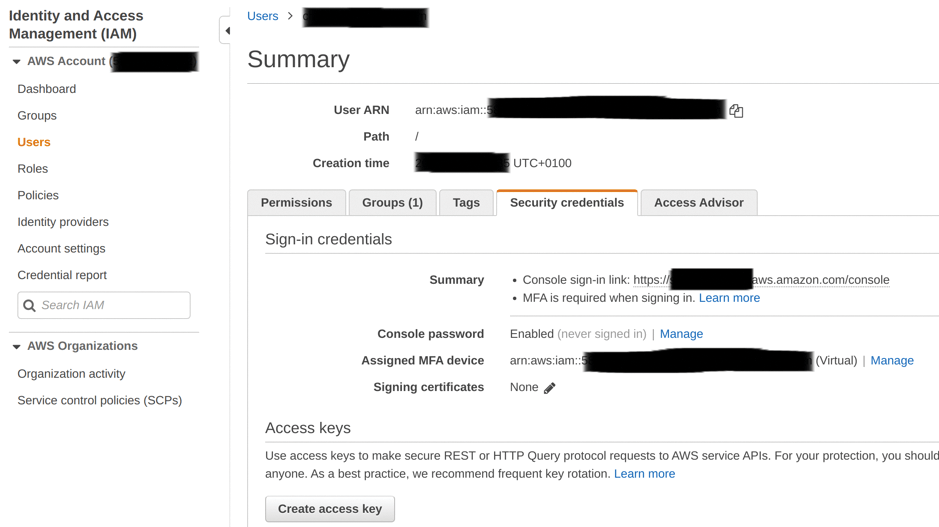

The redacted Figure 1 shows the screen found in the IAM service under the Users | Security credentials tab. Click the Create access key button at the bottom and then store the details safely, because you're only shown the secret key once.

To make use of the Access Key and Secret Key you've just generated, return to the terminal and enter:

$ aws configure
AWS Access Key ID []:

The aws command became available when you installed the AWS command-line tool with the pip package manager.

As you will see from the questions asked by that command, you need to supply a few defaults, such as the preferred AWS region, the output format, and, most importantly, your Access Key and Secret Key, which you can enter with cut and paste. Once you've filled in those details, two plain text files are created and stored in the ~/.aws directory: config and credentials. Because these files are plain text, many developers instead use environment variables to populate their terminal with these details so they're ephemeral and not saved in a visible format. Wherever you keep them, you should encrypt them when stored – known as "data at rest" in security terms.
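Because the credentials file is plain text, it is worth confirming that at least its file permissions are tight. The following Python sketch lists the profile names in the file, without printing any keys, and reports whether group or other users have any access to it. The helper name summarize_credentials_file is hypothetical, and the permission check assumes a Unix-like system.

```python
import configparser
import os
import stat


def summarize_credentials_file(path):
    """Return profile names and whether group/other users can access the file."""
    # interpolation=None so that "%" characters in values are never interpreted.
    parser = configparser.ConfigParser(interpolation=None)
    with open(path, encoding="utf-8") as handle:
        parser.read_file(handle)
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return {
        "profiles": parser.sections(),  # section names only, never the keys
        "too_open": bool(mode & (stat.S_IRWXG | stat.S_IRWXO)),
    }


if __name__ == "__main__":
    path = os.path.expanduser("~/.aws/credentials")
    if os.path.exists(path):
        print(summarize_credentials_file(path))
    else:
        print("no credentials file at", path)
```

If too_open comes back as True, tightening the file to owner-only access (e.g., chmod 600) is a sensible first step; it is not a substitute for encrypting the data at rest.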

Lurking

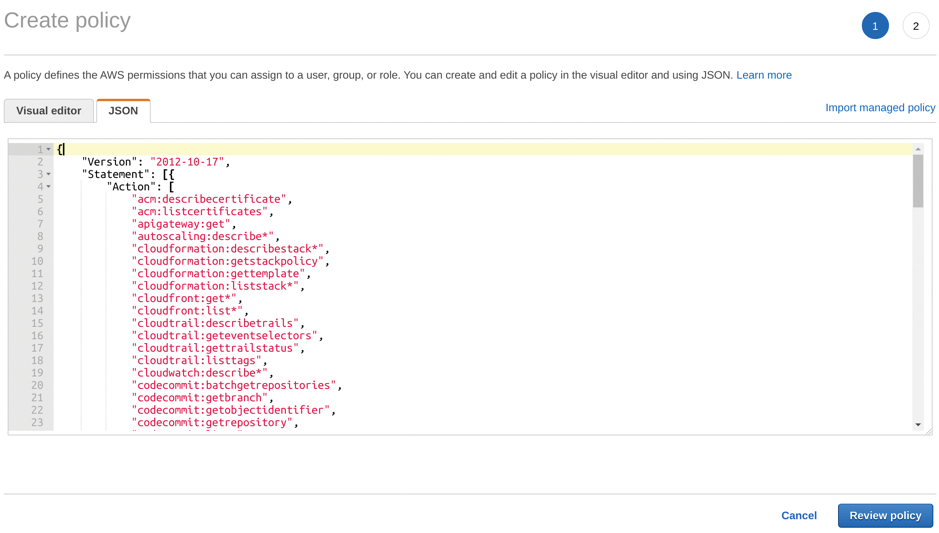

Back in your browser and the AWS IAM service, you can see in Figure 2 where to paste the policy content shown in Listing 2 (i.e., the Policies | Create policy page). After carefully pasting all of Listing 2 into the JSON tab, click the blue Review policy button at the bottom of the screen. Just make sure you paste over the existing empty JSON policy to remove it before proceeding, and you'll be fine.

On the following screen, you're required to provide a sensible name for the policy (e.g., prowler-audit-policy), check the policy rules displayed, and click the blue button at the bottom of the page to proceed.

Figure 3 shows success, and you can now attach your shiny new policy to your user (or role, if you prefer, having attached the role to your user).

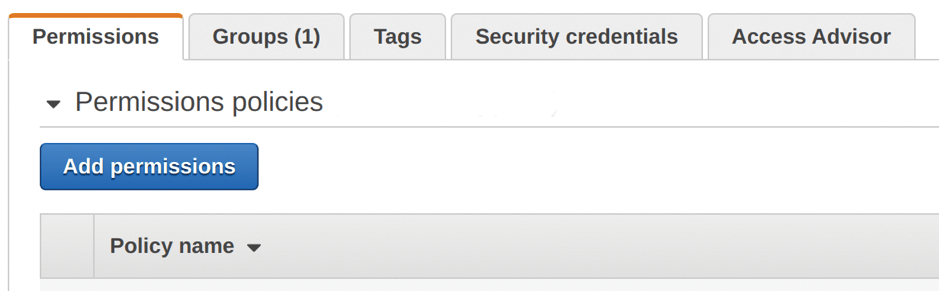

The final AWS step is attaching your policy to your user, as seen in Figure 4. In the IAM service, click Users, choose your user, then click Add permissions and select a policy. Next, click Attach existing policies directly, tick the box beside prowler-audit-policy to select it, and click the blue Next: Review button.

On the next screen, click Add permissions; lo and behold, you'll see your new policy under Attached directly.

If you failed to get that far, just retrace your steps. It's not tricky once you are familiar with the process.

Prowling

To recap, you have created an AWS user and attached your newly created policy to that user. Good practice would usually be to create an IAM role, too, and then attach the policy to the new role if multiple users need to access the policy. The aws configure command has stored your credentials where the AWS command-line client can find them.

You can now cd to your prowler directory to run the script, also named prowler, that fires up Prowler. You probably remember that the directory was created during the GitHub repository cloning process in the early stages.

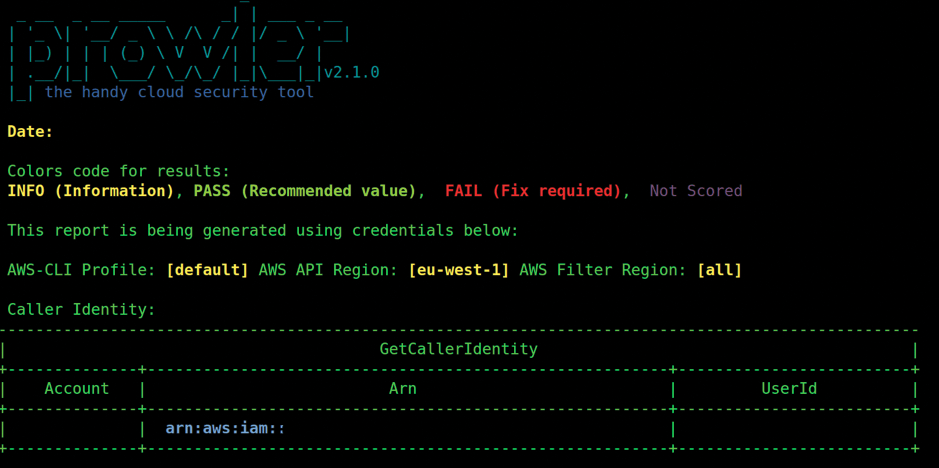

Now you can run your tests. A healthy smattering of patience is required for your first run: Given the Herculean task Prowler is attempting, it takes a good few minutes to complete. The redacted Figure 5 shows the beginning of an in-depth audit.

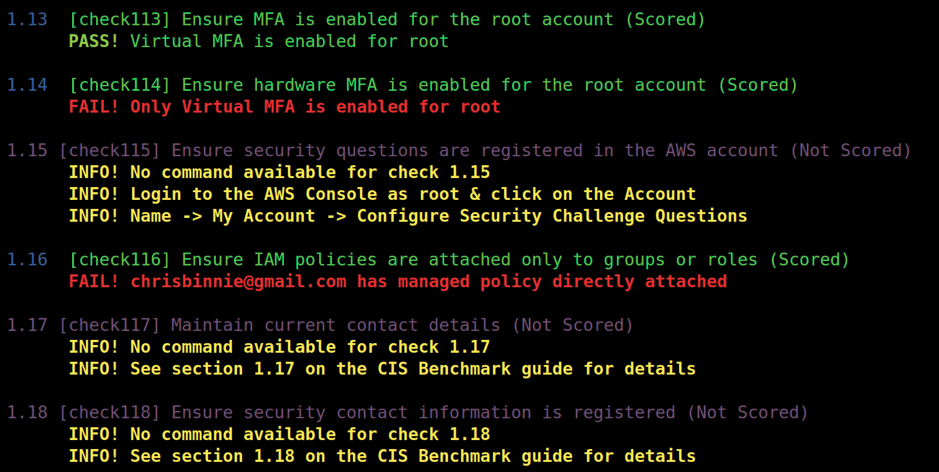

As the AWS audit continues, you can see the impressive test coverage being performed against the AWS account (Figure 6). Provided the permissions in your IAM policy are safe, the only real cost is using up some of your concurrent API request limits, so it's a good idea to run this type of audit frequently to help spot issues or misconfigurations that you would otherwise have missed.

Grand Theft AWS

Once the stealthy Prowler has finished its business, you have a number of ways to tune it for your needs that you might want to explore. For example, if you have multiple AWS accounts over which you want to run Prowler, you can pass the name of an account profile defined in your ~/.aws/credentials file with the -p switch and a region with -r:

$ ./prowler -p custom-profile -r eu-west-1

Although the command only points at one region, Prowler will traverse the other regions where needed to complete its auditing.
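If you have several profiles, the same invocation can be repeated for each one. The following Python sketch only builds and prints the commands (a dry run); the profile names and the helper build_prowler_command are hypothetical, and only the -p and -r switches from the command above are used.

```python
import shlex


def build_prowler_command(profile, region):
    """Build the argument list for one Prowler run against a named profile."""
    return ["./prowler", "-p", profile, "-r", region]


# Hypothetical profile names; use the section names from ~/.aws/credentials.
PROFILES = ["dev-account", "staging-account", "prod-account"]

if __name__ == "__main__":
    for profile in PROFILES:
        command = build_prowler_command(profile, "eu-west-1")
        # Dry run: print the command instead of executing it.
        print(shlex.join(command))
```

Once the printed commands look right, you could hand each argument list to subprocess.run() with cwd set to the prowler directory. The runs would then happen one after another, which is slower than running in parallel but gentler on your API request limits.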

Breaking and Entering

The README file offers some other useful options in the examples I shamelessly repeat and show in this section.

If you ever want to check one of the tests individually, use:

$ ./prowler -c check32

Once a first Prowler run has confirmed that everything works correctly, a handy tip is to spend some time looking through the benchmarks listed earlier to figure out what you need to audit against, instead of running through all of the many checks.

It's also a good idea to note the check numbers in the Prowler output and focus on specific areas to speed up your report generation time. Just delimit your list of checks with commas after the -c switch.

Additionally, use the -E command switch

$ ./prowler -E check17,check24

to run Prowler against lots of checks while excluding only a few.
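When the list of checks lives in a script rather than on the command line, the comma-delimited argument can be assembled programmatically. A minimal Python sketch follows; the helper name check_arguments is hypothetical, and the check names are simply the ones used in the examples above. The sketch passes through whatever it is given and does not decide whether combining both switches in one run makes sense.

```python
def check_arguments(include=None, exclude=None):
    """Return Prowler arguments for -c (run only these) or -E (skip these)."""
    arguments = []
    if include:
        # -c takes a comma-delimited list of checks to run.
        arguments += ["-c", ",".join(include)]
    if exclude:
        # -E takes a comma-delimited list of checks to leave out.
        arguments += ["-E", ",".join(exclude)]
    return arguments


if __name__ == "__main__":
    print(["./prowler"] + check_arguments(include=["check32", "check17"]))
    print(["./prowler"] + check_arguments(exclude=["check17", "check24"]))
```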

Lookin' Oh So Pretty

As you'd expect, Prowler produces a nicely formatted text file for your auditing report, but harking back to the pip command earlier, you might remember that you also installed the ansi2html package, which allows the mighty Prowler to produce HTML by piping the output of your results:

$ ./prowler | ansi2html -la > prowler-audit.html

Similarly, you can output to JSON or CSV with the -M switch:

$ ./prowler -M json > prowler-audit.json

Just change json to csv (in the file name, too) if you prefer a CSV file.
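A saved JSON report lends itself to quick summaries. The sketch below counts results by status in Python. It assumes that the report holds one JSON object per line and that each object carries a "Status" field; both are assumptions about the output format that you should check against your own prowler-audit.json, and the sample records (including the "Control" field) are invented for illustration.

```python
import json
from collections import Counter


def count_by_status(lines, field="Status"):
    """Count report records by the value of one field, skipping blank lines."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        # Records without the field are grouped under "unknown".
        counts[record.get(field, "unknown")] += 1
    return counts


# Invented sample records; real reports will contain more fields.
SAMPLE_REPORT = [
    '{"Control": "[check11] example", "Status": "Pass"}',
    '{"Control": "[check12] example", "Status": "FAIL"}',
    '{"Control": "[check13] example", "Status": "FAIL"}',
]

if __name__ == "__main__":
    for status, total in count_by_status(SAMPLE_REPORT).items():
        print(status, total)
```

To summarize a real report, pass an open file object instead of the sample list, because iterating over a text file yields one line at a time.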

The well-written Prowler docs also offer a nice example of saving a report to an S3 bucket:

$ ./prowler -M json | aws s3 cp - s3://your-bucket/prowler-audit.json

Finally, if you've worked with security audits before, you'll know that reaching an agreed level of compliance is the norm; therefore if, for example, you only needed to meet the requirements of CIS Benchmark Level 1, you could ask Prowler to focus on those checks only:

$ ./prowler -g cislevel1

If you want to check against multiple AWS accounts at once, then refer to the README file for a clever one-line command that runs Prowler across your accounts in parallel. A useful bootstrap script is offered, as well, to help you set up your AWS credentials via the AWS client and run Prowler, so it's definitely worth a read.

Additionally, a nice troubleshooting section looks at common errors and the use of multifactor authentication (MFA). Suffice it to say that the README file is comprehensive, easy to follow, and puts some other documentation to shame.

The End Is Nigh

Prowler boasts a number of checks that other tools miss, has thorough and considered documentation, and is a lightweight and reliable piece of software. I prefer the HTML reports, but running the JSON through the jq program is also useful for easy-to-read output.

Having scratched the surface of this clever open source tool, I trust you'll be tempted to do the same and to keep an eye on your security issues in an automated fashion.