New storage classes for Amazon S3

Class Society

AWS introduced several new storage services and databases at re:Invent 2018, including new storage classes for Amazon Simple Storage Service (S3). In the meantime, the newcomers S3 Intelligent-Tiering and S3 Glacier Deep Archive have become available. Together with S3 One Zone-IA, they double the number of storage classes in the oldest and most popular of all AWS services from three to six. In this article, I present the newcomers and their characteristics.

Amazon's Internet storage has always supported storage classes. Users can choose a storage class when uploading an object and can later have objects moved to another class automatically with lifecycle policies. The individual storage classes have different pricing models and availability levels, each of which optimally addresses a different usage profile. So, if you know the most common access patterns to your data stored in S3, you can optimize costs by choosing the right storage class.

High-Availability SLAs

The individual storage classes differ in terms of availability and durability. Amazon S3 is basically a simple, key-based object store, and because AWS generally replicates data across at least three availability zones within a region (with the exception of the S3 One Zone-IA class), it achieves very high values for both. For example, the Standard storage class is designed for 99.99 percent availability and 99.999999999 percent durability, which means that if you store 10,000 objects, you can expect to lose a single object once every 10 million years, on average. AWS backs availability with commitments in its Amazon S3 Service Level Agreement [1]. Incidentally, not every AWS service comes with such an SLA.
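The loss estimate follows from simple arithmetic. The following Python sketch assumes a simplified model (not AWS's published one) in which each object is lost independently with an annual probability of 1 minus the durability; the expected number of losses per year is then the number of objects times that probability, and its inverse is the average time between losses.

```python
from fractions import Fraction


def expected_years_between_losses(num_objects: int, durability_percent: str) -> Fraction:
    """Average number of years until one object is lost.

    Simplified model (assumption): every object is lost independently with an
    annual probability of 1 - durability.
    """
    durability = Fraction(durability_percent) / 100  # exact arithmetic, no float rounding
    annual_loss_probability = 1 - durability
    expected_losses_per_year = num_objects * annual_loss_probability
    return 1 / expected_losses_per_year


# Eleven nines of durability, 10,000 stored objects
print(expected_years_between_losses(10_000, "99.999999999"))
```

Using `Fraction` keeps the eleven nines exact; with floats, `1 - 0.99999999999` would not come out as exactly 10⁻¹¹.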

The new S3 Intelligent-Tiering storage class also offers a durability of 99.999999999 percent with an availability of 99.9 percent, just like the S3 Standard-IA class. The S3 One Zone-IA storage class, however, stores data in a single availability zone only, which reduces its availability to 99.5 percent. AWS does not automatically replicate data beyond a region to further improve availability, because this would contradict its corporate philosophy with regard to data protection. However, users can configure automatic replication to another region in S3 if they wish.
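Replication to another region is configured per bucket. The following minimal sketch assumes the Boto3 SDK (used later in this article) and its `put_bucket_replication` call; the bucket names and the IAM role ARN are hypothetical placeholders, and both buckets must have versioning enabled. Boto3 is imported inside the function that talks to AWS, so the configuration builder can be tried without it.

```python
def build_replication_config(role_arn: str, destination_bucket: str,
                             storage_class: str = "STANDARD_IA") -> dict:
    """Replicate all new objects of the source bucket to a bucket in another region."""
    return {
        "Role": role_arn,  # IAM role that S3 assumes to copy the objects
        "Rules": [
            {
                "ID": "replicate-all",
                "Priority": 1,
                "Filter": {"Prefix": ""},  # empty prefix: the whole bucket
                "Status": "Enabled",
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {
                    "Bucket": f"arn:aws:s3:::{destination_bucket}",
                    # The replicas may use a different (cheaper) storage class
                    "StorageClass": storage_class,
                },
            }
        ],
    }


def enable_replication(source_bucket: str, config: dict) -> None:
    import boto3  # lazy import: building the configuration needs no SDK

    s3 = boto3.client("s3")
    # Fails unless versioning is enabled on both source and destination bucket
    s3.put_bucket_replication(Bucket=source_bucket, ReplicationConfiguration=config)


config = build_replication_config(
    "arn:aws:iam::123456789012:role/hypothetical-replication-role", "mybucket-eu"
)
```

Replication applies to objects written after the rule is enabled; objects that already exist are not copied by this rule.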

Comparison of Storage Classes

Although S3 made do with three storage classes (Standard, Standard-IA, and Glacier) for many years, three additional storage classes are now available: Intelligent-Tiering, One Zone-IA, and Glacier Deep Archive, all with a durability of 99.999999999 percent. The documentation also still lists the Reduced Redundancy Storage (RRS) class with a durability of 99.99 percent. AWS no longer recommends RRS, which was originally intended for non-critical, reproducible data such as thumbnails, because the Standard storage class is now cheaper anyway. Counting RRS, Table 1 lists seven storage classes.

Table 1: Current S3 Storage Classes

| Storage Class | Suitable for | Durability (%) | Availability (%) | Availability Zones |
|---|---|---|---|---|
| Standard | Data with frequent access | 99.999999999 | 99.99 | ≥3 |
| Standard-IA | Long-term data with irregular access | 99.999999999 | 99.9 | ≥3 |
| Intelligent-Tiering | Long-term data with changing or unknown access patterns | 99.999999999 | 99.9 | ≥3 |
| One Zone-IA | Long-term, non-critical data with fairly infrequent access | 99.999999999 | 99.5 | 1 |
| Glacier | Long-term archiving with recovery times between minutes and hours | 99.999999999 | 99.99 (after restore) | ≥3 |
| Glacier Deep Archive | Archiving of rarely used data with a recovery time of up to 12 hours | 99.999999999 | 99.99 (after restore) | ≥3 |
| RRS (no longer recommended) | Frequently retrieved, but non-critical data | 99.99 | 99.99 | ≥3 |
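Table 1 can double as a small decision aid. The sketch below encodes the rows (without the deprecated RRS class) as Python data and filters them by required availability, number of availability zones, and whether objects must be readable without a prior restore. The `candidates` helper and its parameters are hypothetical and only mirror the table; they are not an AWS API. The class names are the values accepted by `--storage-class` and the SDKs.

```python
from decimal import Decimal
from typing import NamedTuple


class StorageClassInfo(NamedTuple):
    api_name: str           # value for --storage-class / StorageClass
    durability: str         # percent, from Table 1
    availability: str       # percent, from Table 1 (Glacier classes: after restore)
    min_zones: int          # availability zones holding the data
    immediate_access: bool  # False: objects must be restored before reading


TABLE_1 = [
    StorageClassInfo("STANDARD", "99.999999999", "99.99", 3, True),
    StorageClassInfo("STANDARD_IA", "99.999999999", "99.9", 3, True),
    StorageClassInfo("INTELLIGENT_TIERING", "99.999999999", "99.9", 3, True),
    StorageClassInfo("ONEZONE_IA", "99.999999999", "99.5", 1, True),
    StorageClassInfo("GLACIER", "99.999999999", "99.99", 3, False),
    StorageClassInfo("DEEP_ARCHIVE", "99.999999999", "99.99", 3, False),
]


def candidates(min_availability: str, min_zones: int = 1,
               need_immediate: bool = True) -> list[str]:
    """API names of the storage classes that satisfy all requirements."""
    return [
        c.api_name
        for c in TABLE_1
        if Decimal(c.availability) >= Decimal(min_availability)
        and c.min_zones >= min_zones
        and (c.immediate_access or not need_immediate)
    ]


print(candidates("99.9"))
```

Decimal comparison avoids float surprises with values such as 99.99 versus 99.9.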

Amazon S3 Costs

Apart from the fact that prices for all AWS services generally vary between regions, S3 has four cost drivers: storage, requests and data retrieval, storage management, and data transfer, whereby transferring data into the cloud costs nothing. In the US East regions, for example, pricing for the S3 Standard storage class (in early 2020) looks like this:

- Storage price is $0.023/GB for the first 50TB.

- Request prices are $0.005 per 1,000 PUT, COPY, POST, or LIST requests and $0.0004 per 1,000 GET, SELECT, and all other requests. With S3 Select, data returned is charged at $0.0007/GB and data scanned at $0.002/GB. Lifecycle transition and retrieval requests are free, as are DELETE and CANCEL requests.

- The price of S3 storage management depends on the functions included. For example, S3 object tagging costs $0.01/10,000 tags per month.

- For data transferred out of S3 to the Internet, AWS allows up to 1GB/month free of charge. The next 9.999TB/month is charged at $0.09/GB, the next 40TB/month at $0.085/GB, the next 100TB/month at $0.07/GB, and the next 150TB/month at $0.05/GB.
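The price components above add up per month, and the outbound transfer tiers are charged slice by slice. The following sketch turns the early-2020 US East figures from the list into a rough estimator. Assumptions: 1TB = 1,024GB, only the first-50TB storage price is modeled, and management and S3 Select charges are left out. The function names are hypothetical, and current prices should be checked on the S3 product page.

```python
from decimal import Decimal

# S3 Standard, US East, early 2020 (figures from the list above)
STORAGE_PER_GB = Decimal("0.023")   # per GB-month, first 50 TB
PUT_PER_1000 = Decimal("0.005")     # PUT, COPY, POST, LIST
GET_PER_1000 = Decimal("0.0004")    # GET, SELECT, and all other requests
# Outbound transfer tiers: (tier size in GB, price per GB); 1 TB = 1,024 GB assumed
EGRESS_TIERS = [
    (Decimal(1), Decimal("0")),            # first 1 GB free
    (Decimal(10 * 1024 - 1), Decimal("0.09")),
    (Decimal(40 * 1024), Decimal("0.085")),
    (Decimal(100 * 1024), Decimal("0.07")),
    (Decimal(150 * 1024), Decimal("0.05")),
]


def egress_cost(gb_out: Decimal) -> Decimal:
    """Charge each slice of the outbound volume at the price of its tier."""
    remaining, cost = gb_out, Decimal(0)
    for tier_size, price in EGRESS_TIERS:
        used = min(remaining, tier_size)
        cost += used * price
        remaining -= used
        if remaining <= 0:
            return cost
    raise ValueError("volume exceeds the tiers listed in the article")


def monthly_cost(stored_gb, puts, gets, gb_out) -> Decimal:
    """Pass ints or numeric strings (not floats) to keep the Decimal math exact."""
    if Decimal(stored_gb) > 50 * 1024:
        raise ValueError("only the first-50-TB storage price is modeled")
    storage = Decimal(stored_gb) * STORAGE_PER_GB
    requests = (Decimal(puts) / 1000 * PUT_PER_1000
                + Decimal(gets) / 1000 * GET_PER_1000)
    return storage + requests + egress_cost(Decimal(gb_out))


# 1,000 GB stored, 100,000 uploads, 1 million downloads, 101 GB sent out
print(monthly_cost(1000, 100_000, 1_000_000, 101))
```

In this example, storage ($23) dominates, followed by outbound transfer ($9 for the 100 GB beyond the free gigabyte), while the requests cost less than a dollar.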

A complete price overview can be found on the S3 product page [2].

S3 with Intelligent Tiering

The new Intelligent-Tiering storage class is primarily designed to optimize costs. AWS continuously monitors the access patterns of each object and automatically moves it to the most cost-effective access tier. Intelligent-Tiering uses two access tiers, one for frequent and one for infrequent access, which are priced like the Standard and Standard-IA classes, respectively. As you may know, storage is cheaper in Standard-IA, but retrieval is more expensive. Although objects can be retrieved at any time with the same access time and latency, the pricing of Standard-IA assumes that objects are rarely read after they are first written.

For this automation, however, AWS charges an additional monthly monitoring and automation fee per object. Specifically, S3 Intelligent-Tiering monitors the access patterns of the objects and moves objects that have not been accessed for 30 consecutive days to the infrequent access tier. If an object in the infrequent access tier is accessed, AWS automatically moves it back to the frequent access tier. There are no retrieval fees with S3 Intelligent-Tiering, and no additional tiering fees are charged when objects move between access tiers. This makes the class particularly suitable for long-term data with initially unknown or unpredictable access patterns.
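The 30-day rule can be illustrated with a small simulation. This is a simplified model of the behavior described above, not AWS's implementation: the object starts in the frequent tier, the upload counts as its first access (an assumption), it drops to the infrequent tier after 30 days without access, and it returns to the frequent tier on the next access. The function name is hypothetical.

```python
INACTIVITY_DAYS = 30  # no access for 30 consecutive days -> infrequent tier


def simulate_tiering(access_days: list[int], horizon: int) -> list[str]:
    """Access tier of a single object for each day 0 .. horizon-1 (simplified model)."""
    accessed = set(access_days)
    last_access = 0  # assumption: the upload on day 0 counts as an access
    tiers = []
    for day in range(horizon):
        if day in accessed:
            last_access = day  # any access moves the object back to the frequent tier
        if day - last_access >= INACTIVITY_DAYS:
            tiers.append("INFREQUENT")
        else:
            tiers.append("FREQUENT")
    return tiers


# Object read on days 10 and 50, observed for 60 days
tiers = simulate_tiering([0, 10, 50], 60)
print(tiers[39], tiers[40], tiers[50])
```

Between day 40 and day 49, the object is billed at the cheaper infrequent-access rate; the read on day 50 brings it back without a retrieval fee.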

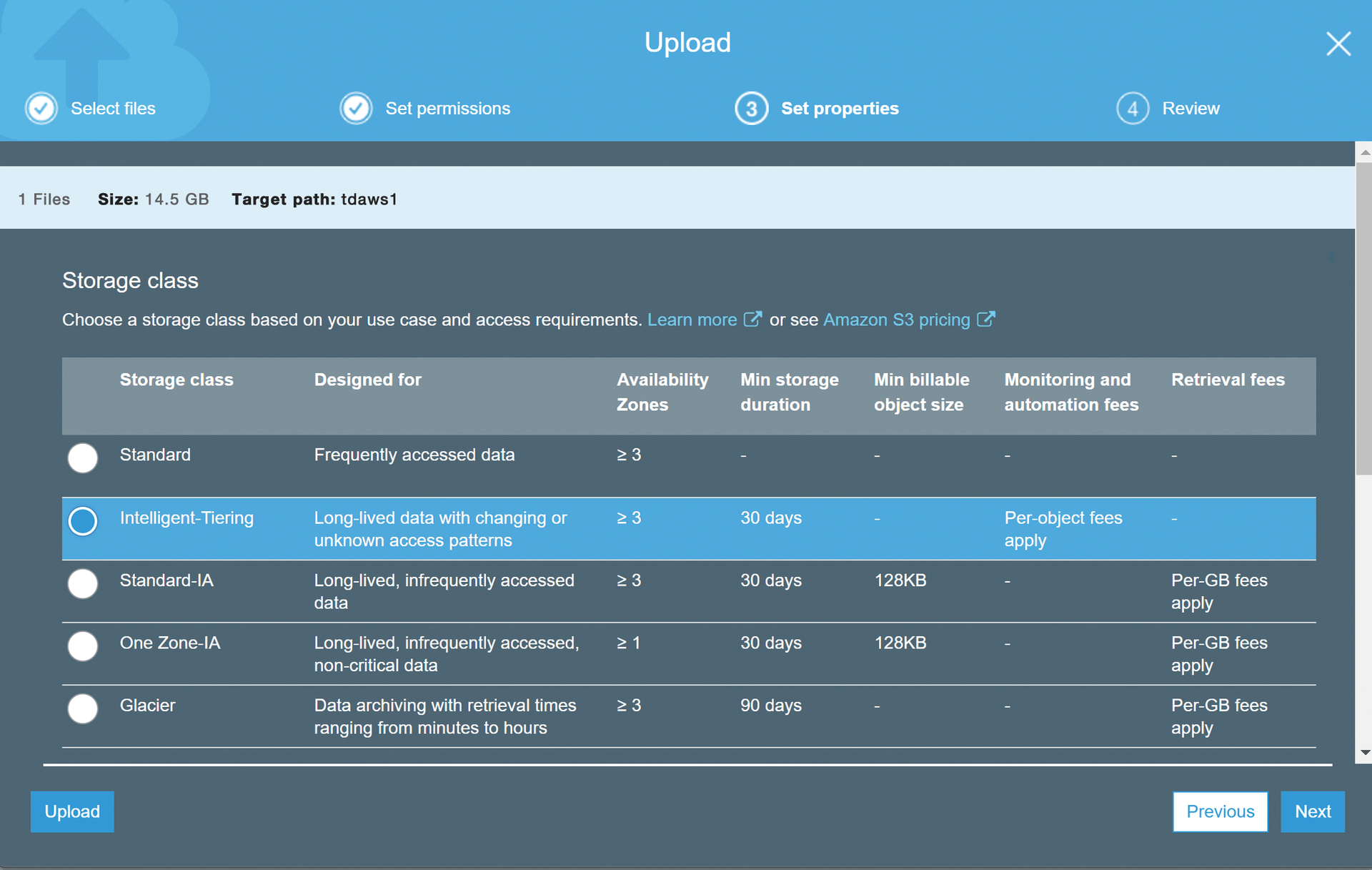

Assigning Storage Classes

S3 storage classes are generally configured and applied at the object level, so the same bucket can contain objects stored in S3 Standard, S3 Standard-IA, S3 Intelligent-Tiering, or S3 One Zone-IA. Glacier Deep Archive, on the other hand, builds on a service in its own right. Users can upload objects to the storage class of their choice at any time or use S3 lifecycle policies to move objects from S3 Standard and S3 Standard-IA to S3 Intelligent-Tiering. For example, when uploading a new object to a bucket in the S3 console, users simply select the desired storage class with the mouse (Figure 1).
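A lifecycle policy that moves objects to Intelligent-Tiering can also be set programmatically. The following minimal sketch assumes Boto3's `put_bucket_lifecycle_configuration` call; the prefix and the 30-day delay are illustrative choices, and the function names are hypothetical. Note that this call replaces any lifecycle configuration already attached to the bucket.

```python
def intelligent_tiering_rule(prefix: str = "", days: int = 30) -> dict:
    """Lifecycle configuration that moves objects to S3 Intelligent-Tiering."""
    return {
        "Rules": [
            {
                "ID": "to-intelligent-tiering",
                "Filter": {"Prefix": prefix},  # "" applies the rule to the whole bucket
                "Status": "Enabled",
                # Objects switch class `days` days after they were created
                "Transitions": [{"Days": days, "StorageClass": "INTELLIGENT_TIERING"}],
            }
        ]
    }


def apply_lifecycle(bucket: str, config: dict) -> None:
    import boto3  # lazy import: building the configuration needs no SDK

    # Overwrites the bucket's existing lifecycle configuration
    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


config = intelligent_tiering_rule("logs/", 30)
```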

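When uploading or copying objects with the CLI, the storage class is specified with the --storage-class parameter. Permitted values are STANDARD, REDUCED_REDUNDANCY, STANDARD_IA, ONEZONE_IA, INTELLIGENT_TIERING, GLACIER, and DEEP_ARCHIVE. For example, the following command copies an object to another bucket and stores the copy in Standard-IA: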

```bash
aws s3 cp s3://mybucket/Test.txt s3://mybucket2/ --storage-class STANDARD_IA
```

The same principle applies to the REST API; after all, Amazon S3 is a REST service. Users can send requests to Amazon S3 either directly through the REST API or, to simplify programming, through the AWS SDKs, which wrap the underlying Amazon S3 REST API.

Whether a request comes from an SDK or is sent directly, Amazon S3 stores newly created objects in the default storage class, S3 Standard, unless a storage class is specified explicitly (in the REST API with the x-amz-storage-class request header, in the CLI with --storage-class).

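Listing 1 shows how to change the storage class of an existing object in Java: the object is copied, and the copy is written with the new storage class.

Listing 1: Updating the Storage Class

```java
import com.amazonaws.auth.profile.ProfileCredentialsProvider;
import com.amazonaws.services.s3.AmazonS3;
import com.amazonaws.services.s3.AmazonS3ClientBuilder;
import com.amazonaws.services.s3.model.CopyObjectRequest;
import com.amazonaws.services.s3.model.StorageClass;

AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withRegion(clientRegion)
        .withCredentials(new ProfileCredentialsProvider())
        .build();
// Same bucket and key for source and destination changes the class in place
CopyObjectRequest copyRequest = new CopyObjectRequest(sourceBucketName, sourceKey,
        destinationBucketName, destinationKey)
        .withStorageClass(StorageClass.StandardInfrequentAccess);
s3Client.copyObject(copyRequest);
```

Uploading an object in Python 3 (with the Boto3 SDK) to the Standard-IA storage class looks like this:

```python
import boto3

s3 = boto3.resource('s3')

# Upload a local file directly into the Standard-IA storage class
with open('/tmp/hello.txt', 'rb') as body:
    s3.Object('mybucket', 'hello.txt').put(Body=body, StorageClass='STANDARD_IA')
```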
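The copy approach of Listing 1 also works in Python. The following sketch assumes Boto3's `copy_object` call and copies an object onto itself with a new storage class while keeping its metadata; the helper names are hypothetical, and RRS is deliberately left out of the allowed values because AWS no longer recommends it. A single `copy_object` request handles objects up to 5 GB; larger objects need a multipart copy.

```python
ALLOWED_CLASSES = {"STANDARD", "STANDARD_IA", "ONEZONE_IA",
                   "INTELLIGENT_TIERING", "GLACIER", "DEEP_ARCHIVE"}


def change_storage_class_request(bucket: str, key: str, storage_class: str) -> dict:
    """Keyword arguments for an in-place copy that only changes the storage class."""
    if storage_class not in ALLOWED_CLASSES:
        raise ValueError(f"unsupported storage class: {storage_class}")
    return {
        "Bucket": bucket,
        "Key": key,  # same bucket and key: the copy replaces the original
        "CopySource": {"Bucket": bucket, "Key": key},
        "StorageClass": storage_class,
        "MetadataDirective": "COPY",  # keep the existing metadata
    }


def change_storage_class(bucket: str, key: str, storage_class: str) -> None:
    import boto3  # lazy import: building the request needs no SDK

    boto3.client("s3").copy_object(
        **change_storage_class_request(bucket, key, storage_class)
    )


request = change_storage_class_request("mybucket", "hello.txt", "ONEZONE_IA")
```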

Cheap Storage with Glacier Deep Archive

Of the six main storage classes mentioned, only four allow objects to be read directly at any time, because the Glacier and Glacier Deep Archive classes do not build on the S3 service with its concept of buckets and objects, but on Amazon's Glacier archive service, which offers the same durability as S3 for archive storage. However, retrieval times are configurable and range from a few minutes to several hours; immediate retrieval is not possible. Instead, users need to submit a retrieval job through the API, which eventually returns an archive from a vault. Like S3, the Glacier API is natively supported by numerous third-party applications.
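With the native Glacier API, a retrieval is a two-step job. The following sketch assumes Boto3's Glacier client: `initiate_job` starts an archive retrieval with the chosen tier (Expedited, Standard, or Bulk), and the data can be downloaded with `get_job_output` once the job has completed. The vault name and archive ID are hypothetical placeholders, as are the helper names.

```python
VALID_TIERS = ("Expedited", "Standard", "Bulk")


def retrieval_job_parameters(archive_id: str, tier: str = "Standard") -> dict:
    """jobParameters for an archive retrieval from a Glacier vault."""
    if tier not in VALID_TIERS:
        raise ValueError(f"tier must be one of {VALID_TIERS}")
    return {"Type": "archive-retrieval", "ArchiveId": archive_id, "Tier": tier}


def start_retrieval(vault: str, archive_id: str, tier: str = "Standard") -> str:
    import boto3  # lazy import: building the parameters needs no SDK

    glacier = boto3.client("glacier")
    response = glacier.initiate_job(
        accountId="-",  # "-" means the account that owns the credentials
        vaultName=vault,
        jobParameters=retrieval_job_parameters(archive_id, tier),
    )
    # Later: poll describe_job (or subscribe to an SNS topic) until the job
    # has completed, then fetch the data with get_job_output(vaultName, jobId)
    return response["jobId"]


params = retrieval_job_parameters("hypothetical-archive-id", "Bulk")
```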

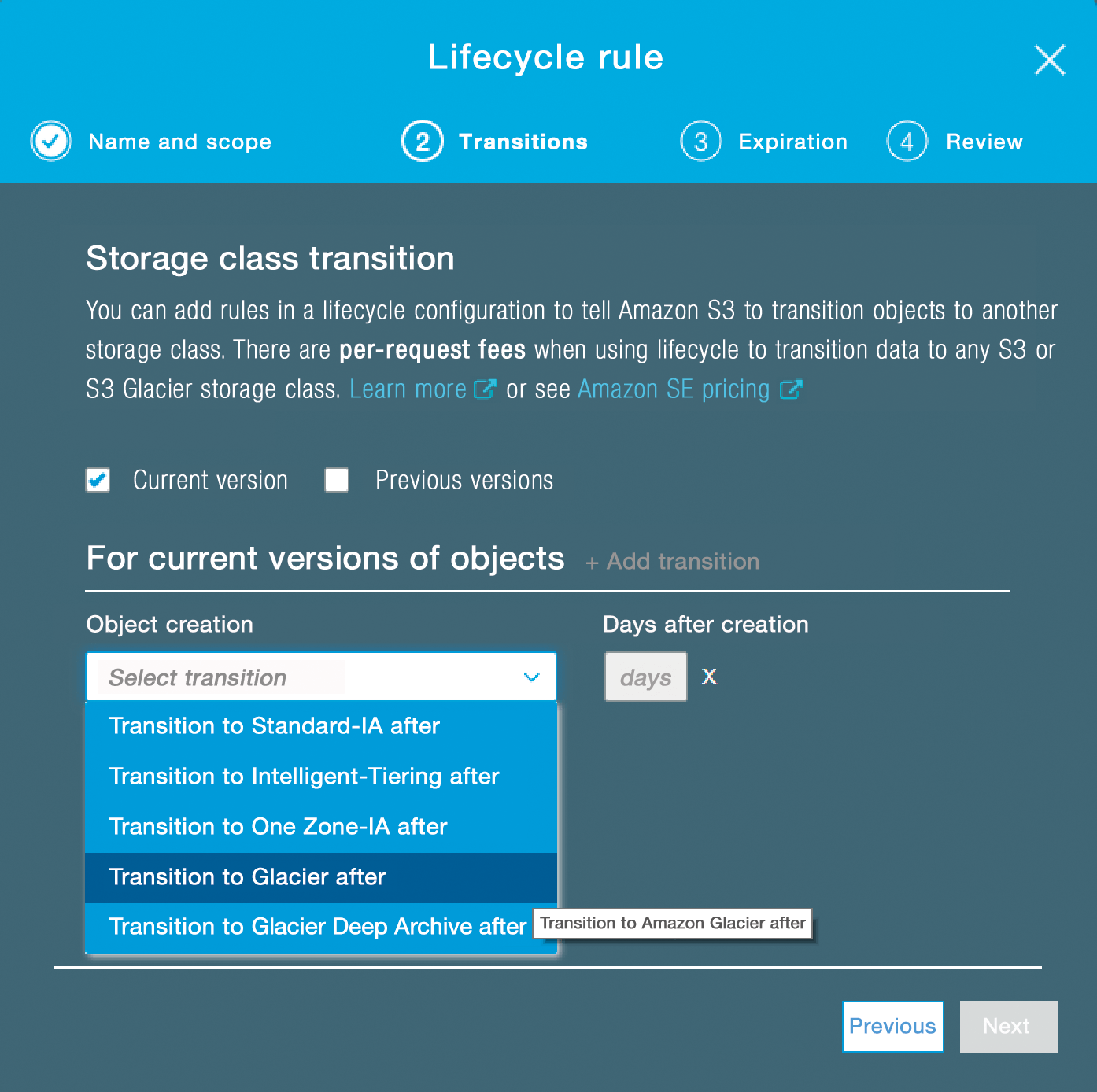

However, one special feature of S3 and Glacier is that the archive service can also be controlled with a lifecycle policy from the S3 API (Figure 2). Glacier can therefore be controlled either with the Glacier API or the S3 API. With S3 lifecycle policies, for example, it has long been possible to move documents that are no longer read after a certain period of time, but must be retained because of corporate compliance rules, to Glacier once they have spent the desired period in S3 Standard-IA.
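The compliance scenario just described maps onto a single lifecycle rule with two transitions. The following sketch builds such a rule as a plain Python dictionary in the format Boto3's `put_bucket_lifecycle_configuration` expects for its `LifecycleConfiguration` argument. The prefix and day counts are hypothetical, and the optional expiration is only an example of deleting objects once a retention period has ended. The 30-day check reflects S3's requirement that objects be at least 30 days old before moving to Standard-IA.

```python
from typing import Optional


def archive_rule(prefix: str, ia_after_days: int, glacier_after_days: int,
                 expire_after_days: Optional[int] = None) -> dict:
    """Lifecycle configuration: Standard -> Standard-IA -> Glacier (-> delete)."""
    if ia_after_days < 30:
        # S3 rejects transitions to STANDARD_IA for objects younger than 30 days
        raise ValueError("transition to STANDARD_IA needs at least 30 days")
    if glacier_after_days <= ia_after_days:
        raise ValueError("the Glacier transition must follow the Standard-IA one")
    rule = {
        "ID": "compliance-archive",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_after_days, "StorageClass": "GLACIER"},
        ],
    }
    if expire_after_days is not None:
        if expire_after_days <= glacier_after_days:
            raise ValueError("expiration must come after the last transition")
        # Delete objects once the retention period has ended
        rule["Expiration"] = {"Days": expire_after_days}
    return {"Rules": [rule]}


config = archive_rule("invoices/", 30, 365, 3650)
```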

Glacier Deep Archive has only been available as a storage class for S3 since early 2019. Since then, users have been able to archive objects from S3 Standard, S3 Standard-IA, or S3 Intelligent-Tiering not only in S3 Glacier, but also in Glacier Deep Archive. Although storage in Glacier, at less than half a cent per gigabyte, is already more than five times cheaper than in S3 Standard, the price in Glacier Deep Archive drops even further, to $0.00099/GB per month.
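A quick calculation shows the scale of the savings. The prices for S3 Standard ($0.023/GB) and Glacier Deep Archive ($0.00099/GB) come from this article; the Glacier price of $0.004/GB is an assumption consistent with "less than half a cent per gigabyte". The sketch covers the monthly storage charge only and ignores retrieval, request, and minimum storage duration charges.

```python
from decimal import Decimal

# Storage price per GB-month in US East. GLACIER ($0.004) is an assumption.
PRICE_PER_GB_MONTH = {
    "STANDARD": Decimal("0.023"),
    "GLACIER": Decimal("0.004"),
    "DEEP_ARCHIVE": Decimal("0.00099"),
}


def monthly_storage_cost(gb: int, storage_class: str) -> Decimal:
    """Storage charge only; retrieval and request fees are not included."""
    return gb * PRICE_PER_GB_MONTH[storage_class]


# 10 TB (10,240 GB) kept for one month in each class
for name in PRICE_PER_GB_MONTH:
    print(f"{name:<13} ${monthly_storage_cost(10_240, name)}")
```

For 10 TB, that comes to roughly $235 per month in S3 Standard compared with about $10 in Glacier Deep Archive.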

This pricing should make Glacier Deep Archive the preferred storage class for all companies that need to keep persistent archive copies of data that virtually never needs to be accessed. It could also make local tape libraries superfluous for many users. The low price comes at the cost of longer access times: Retrieving data stored in S3 Glacier Deep Archive takes up to 12 hours, compared with a few minutes (Expedited) or three to five hours (Standard) in Glacier.
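Through the S3 API, archived objects are made readable again with a restore request that creates a temporary copy. The following sketch assumes Boto3's `restore_object` call. The table of retrieval tiers per class is an assumption based on AWS documentation at the time of writing: Glacier Deep Archive offers no Expedited retrievals. Bucket, key, and helper names are hypothetical.

```python
# Retrieval tiers per archive class (assumption): Deep Archive has no Expedited tier
RESTORE_TIERS = {
    "GLACIER": {"Expedited", "Standard", "Bulk"},
    "DEEP_ARCHIVE": {"Standard", "Bulk"},
}


def restore_request(storage_class: str, days: int, tier: str = "Standard") -> dict:
    """RestoreRequest argument for S3's RestoreObject operation."""
    if tier not in RESTORE_TIERS[storage_class]:
        raise ValueError(f"{tier} retrieval is not available for {storage_class}")
    return {
        "Days": days,  # how long the restored temporary copy stays readable
        "GlacierJobParameters": {"Tier": tier},
    }


def restore(bucket: str, key: str, storage_class: str,
            days: int = 7, tier: str = "Standard") -> None:
    import boto3  # lazy import: building the request needs no SDK

    s3 = boto3.client("s3")
    s3.restore_object(Bucket=bucket, Key=key,
                      RestoreRequest=restore_request(storage_class, days, tier))
    # head_object(Bucket=bucket, Key=key)["Restore"] reports
    # ongoing-request="true" until the temporary copy is ready


request = restore_request("DEEP_ARCHIVE", 7, "Bulk")
```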

Batch Operations for S3 Objects

The new Amazon S3 Batch Operations feature was also presented at re:Invent 2018, initially as a preview. It lets admins manage billions of objects stored in S3 with a single API call or a few mouse clicks in the S3 console and allows object properties or metadata to be changed for any number of S3 objects. This also applies to copying objects between buckets, replacing tag sets, changing access controls, and retrieving or restoring objects from S3 Glacier, in minutes rather than months.

Until now, companies have often had to spend months of development time writing optimized application software that could apply the required API actions to S3 objects on a massive scale. Batch Operations can also invoke custom Lambda functions on billions or trillions of S3 objects, enabling highly complex tasks such as image or video transcoding. The feature takes care of retries, tracks progress, sends notifications, generates final reports, and delivers events for all changes made and tasks performed to CloudTrail.
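A Lambda function invoked by Batch Operations receives a list of tasks and must report a result for each one. The following minimal handler is a sketch assuming invocation schema version 1.0 (fields such as `tasks`, `taskId`, `s3Key`, `s3BucketArn`, and result codes `Succeeded`, `TemporaryFailure`, `PermanentFailure`); it also assumes that object keys arrive URL-encoded. The actual processing step is a placeholder, so check the field names against the current S3 documentation before use.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Minimal S3 Batch Operations handler (schema version 1.0 assumed)."""
    results = []
    for task in event["tasks"]:
        bucket = task["s3BucketArn"].split(":::")[-1]  # arn:aws:s3:::bucket -> bucket
        key = unquote_plus(task["s3Key"])  # assumption: keys are URL-encoded
        # Placeholder for real work (transcoding, tagging, ...); here we only report
        results.append({
            "taskId": task["taskId"],
            "resultCode": "Succeeded",  # or "TemporaryFailure" / "PermanentFailure"
            "resultString": f"processed s3://{bucket}/{key}",
        })
    return {
        "invocationSchemaVersion": event["invocationSchemaVersion"],
        "treatMissingKeysAs": "PermanentFailure",
        "invocationId": event["invocationId"],
        "results": results,
    }
```

Returning `TemporaryFailure` for a task lets Batch Operations retry it, which is part of the retry handling mentioned above.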

To get started, users can specify the target objects with an S3 inventory report that lists all objects of an S3 bucket or prefix, or they can supply their own list of target objects. They then select the desired API action from a prefilled menu of options in the S3 Management Console. S3 Batch Operations [3] is now available in all AWS regions. It is charged at $0.25 per job plus $1.00 per million object operations performed, on top of the charges for any operations S3 Batch Operations performs for you (e.g., data transfer, requests, and other charges).
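Both the fee and a custom target list are easy to produce in code. The sketch below computes the Batch Operations fee from the prices above (request and transfer charges come on top) and writes a CSV target list with one bucket,key row per object. The assumption here is that keys in the CSV must be URL-encoded, done with `urllib.parse.quote`; the helper names are hypothetical.

```python
import csv
import io
from decimal import Decimal
from urllib.parse import quote

JOB_FEE = Decimal("0.25")                 # per job
PER_MILLION_OPERATIONS = Decimal("1.00")  # per million object operations


def batch_operations_fee(jobs: int, objects: int) -> Decimal:
    """Batch Operations fee only; request and transfer charges come on top."""
    return jobs * JOB_FEE + Decimal(objects) / 1_000_000 * PER_MILLION_OPERATIONS


def csv_manifest(bucket: str, keys: list[str]) -> str:
    """Custom target list: one 'bucket,key' row per object."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for key in keys:
        writer.writerow([bucket, quote(key)])  # assumption: keys URL-encoded
    return out.getvalue()


print(batch_operations_fee(1, 10_000_000))
print(csv_manifest("mybucket", ["a.txt", "photos/cat 1.jpg"]), end="")
```

A single job touching 10 million objects thus costs $10.25 in Batch Operations fees, plus the charges for the underlying requests.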

Conclusions

Amazon S3 is far more than a file storage facility on the Internet, and even experienced users often don't know all of its capabilities, especially because AWS is constantly adding new features. Those who know the access patterns of their data can save a lot of money. Additionally, with the new S3 Intelligent-Tiering storage class, AWS now provides a degree of automation (at an extra charge).