Optimally combine Kubernetes and Ceph with Rook

Castling

Hardly a year goes by that does not see some kind of disruptive technology unfold and existing traditions swept away. That's what the two technologies discussed in this article have in common. Ceph captured the storage solutions market in a flash. Meanwhile, Kubernetes shook up the market for virtualization solutions, not only grabbing market share from KVM and others, but also from industry giants such as VMware.

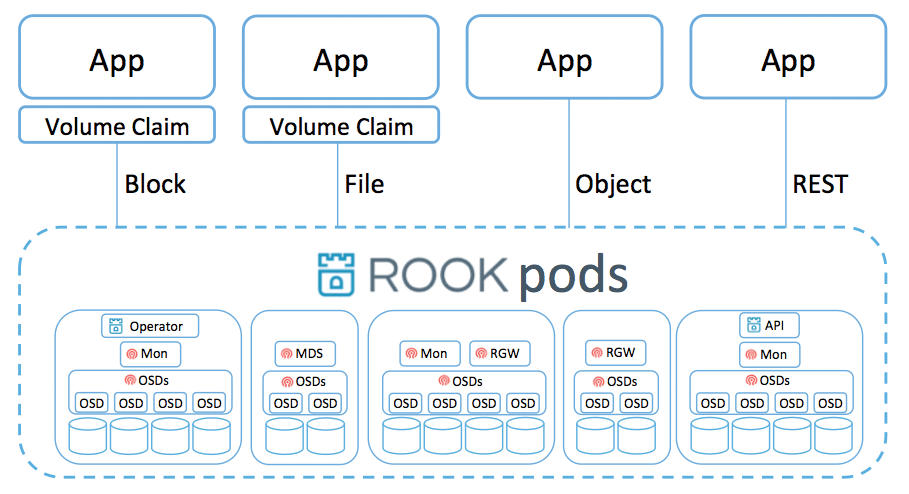

When two disruptive technologies such as containers and Ceph are mixing up the same market, collisions can hardly be avoided. The tool that brings Ceph and Kubernetes together is Rook, which lets you roll out a Ceph installation to a cluster with Kubernetes and offers advantages over a setup where Ceph sits "under" Kubernetes.

The most important advantage is undoubtedly that Ceph, when integrated into the Kubernetes workflow with the help of Rook [1], can be controlled and monitored just like any other Kubernetes resource. Kubernetes is aware of Ceph and its topology and can adapt it if necessary. By contrast, a setup in which Ceph sits under Kubernetes and only passes persistent volumes through to it, with Kubernetes knowing nothing about Ceph, is not integrated and lacks homogeneity.

Getting Started with Rook

ADMIN introduced Rook in detail some time ago [2], looking into its advantages and disadvantages. Since then, much has happened. For example, if you work with OpenStack, Rook will be available automatically in almost every scenario. Many OpenStack vendors are migrating their distributions to Kubernetes, and because OpenStack almost always comes with Ceph in tow, Kubernetes will also include Ceph. However, I'll show you how to get started if you don't have a ready-made OpenStack distribution – and don't want one – with a manual integration.

The Beginnings: Kubernetes

Any admin wanting to work with Rook in a practical way first needs a working Kubernetes, of which Rook makes only a few basic demands.

In this article, I do not assume that you already have a running Kubernetes available, which gives those who have had little or no practical experience with Kubernetes the chance to get acquainted with the subject. Setting up Kubernetes is not complicated. Several tools promise to handle this task quickly and well.

Rolling Out Kubernetes

Kubernetes, which is not nearly as complex as other cloud approaches like OpenStack, can be deployed in an all-in-one environment by the kubeadm tool. The following example is based on five servers that form a Kubernetes instance.

If you don't have physical hardware at hand, you can alternatively work through this example with virtual machines (VMs); note, however, that Ceph will take possession of the hard drives or SSDs used later. Five VMs on the same SSD, which then form a Kubernetes cluster with Rook, are probably not optimal, especially if you expect a longer service life from the SSD. In any case, Ceph needs at least one completely empty hard disk in every system, which can later be used as a data silo.
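Before handing disks to Ceph, it can help to confirm which ones really are empty. The following is a minimal sketch and not part of Rook: the helper name empty_disks is hypothetical, and it assumes an lsblk from util-linux that supports the -P (key="value" pairs) output and the NAME, TYPE, FSTYPE, and PKNAME columns. It treats a disk as empty when it carries no filesystem signature and has no partitions or other child devices.

```shell
# Hypothetical helper: reads `lsblk -P -o NAME,TYPE,FSTYPE,PKNAME` output on stdin
# and prints the disks that have neither a filesystem signature nor child devices.
empty_disks() {
    awk '
    {
        name = ""; type = ""; fstype = ""; parent = ""
        # Each field looks like KEY="value"; values are assumed to contain no spaces.
        for (i = 1; i <= NF; i++) {
            eq = index($i, "=")
            key = substr($i, 1, eq - 1)
            val = substr($i, eq + 1)
            gsub(/"/, "", val)
            if (key == "NAME")   name = val
            if (key == "TYPE")   type = val
            if (key == "FSTYPE") fstype = val
            if (key == "PKNAME") parent = val
        }
        if (type == "disk") {
            disks[name] = 1
            if (fstype != "") used[name] = 1   # signature directly on the disk
        } else if (parent != "") {
            used[parent] = 1                   # a partition or other child exists
        }
    }
    END {
        for (d in disks) if (!(d in used)) print d
    }' | sort
}

# Usage on a node:
#   lsblk -P -o NAME,TYPE,FSTYPE,PKNAME | empty_disks
```

Any disk that does not appear in the output should be checked by hand before you let Ceph take possession of it.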

Control Plane Node

Of five servers with Ubuntu 18.04 LTS, pick one to serve as the Kubernetes master. For the example here, the setup does without any form of classic high availability. In a production setup, any admin would handle this differently so as not to lose the Kubernetes controller in case of a problem.

On the Kubernetes master, which is referred to as the Control Plane Node in Kubernetes-speak, ports 6443, 2379-2380, 10250, 10251, and 10252 must be accessible from the outside. Also, disable the swap space on all the systems; otherwise, the Kubelet service will not work reliably on the target systems (Kubelet is responsible for communication between the Control Plane and the target system).
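Disabling swap has two parts: turning it off immediately (swapoff -a, run as root) and keeping it off after a reboot. The following minimal sketch covers the second part under the assumption that swap is configured through an fstab-style file; the function name comment_out_swap is hypothetical.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style
# file so that swap stays disabled after a reboot. A backup copy with the
# suffix .bak is kept next to the file.
# $1: path to the fstab file (defaults to /etc/fstab)
comment_out_swap() {
    fstab="${1:-/etc/fstab}"
    # Match lines that are not comments and whose third field (the filesystem
    # type) is "swap", then prefix them with "# ".
    sed -i.bak -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/# \1/' "$fstab"
}

# Usage as root:
#   swapoff -a
#   comment_out_swap /etc/fstab
```

Systems that activate swap by other means, such as systemd swap units, need a different approach.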

Install CRI-O

To run Kubernetes, the cluster systems need a container run time. Until recently, this was almost automatically Docker, but not all of the Linux community is Docker friendly. An alternative to Docker is CRI-O [3], which now officially supports Kubernetes. To use it on Ubuntu 18.04, you need to run the commands in Listing 1. As a first step, you set several sysctl variables; the actual installation of the CRI-O packages then follows.

Listing 1: Installing CRI-O

    # modprobe overlay
    # modprobe br_netfilter
    [ ... required Sysctl parameters ... ]
    # cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    EOF
    # sysctl --system
    [ ... Preconditions ... ]
    # apt-get update
    # apt-get install software-properties-common
    # add-apt-repository ppa:projectatomic/ppa
    # apt-get update
    [ ... Install CRI-O ... ]
    # apt-get install cri-o-1.13

The systemctl start crio command starts the run time, which is now available for use by Kubernetes. Working as an admin user, you need to perform these steps on all the servers, not just on the Control Plane or the future Kubelet servers.
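Modules loaded with modprobe do not survive a reboot. On a systemd-based system such as Ubuntu 18.04, one way to make them persistent is a file in /etc/modules-load.d/. The following is a minimal sketch; the function name and the file name crio.conf are assumptions chosen for this example.

```shell
# Hypothetical helper: write the kernel modules that Listing 1 loads with
# modprobe into a modules-load.d file so that they are loaded again at boot.
# $1: target file (assumed default: /etc/modules-load.d/crio.conf)
persist_crio_modules() {
    target="${1:-/etc/modules-load.d/crio.conf}"
    printf '%s\n' overlay br_netfilter > "$target"
}

# Usage as root:
#   persist_crio_modules
#   systemctl enable crio   # start the run time automatically at boot
```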

Next Steps

Next, complete the steps in Listing 2 to add the Kubernetes tools kubelet, kubeadm, and kubectl to your systems.

Listing 2: Installing Kubernetes

    # apt-get update && apt-get install -y apt-transport-https curl
    # curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
    # cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
    deb https://apt.kubernetes.io/ kubernetes-xenial main
    EOF
    # apt-get update
    # apt-get install -y kubelet kubeadm kubectl
    # apt-mark hold kubelet kubeadm kubectl

Thus far, you have only retrieved the components needed for Kubernetes and still do not have a running Kubernetes cluster. Kubeadm will install this shortly. On the node that you have selected as the Control Plane, the following command sets up the required components:

# kubeadm init --pod-network-cidr=10.244.0.0/16

The --pod-network-cidr parameter is not strictly necessary for kubeadm itself, but a Kubernetes cluster rolled out with kubeadm needs a network plugin compatible with the Container Network Interface standard (more on this later), and Flannel, the plugin used here, expects the pod network 10.244.0.0/16 by default. First, you should note that the output from kubeadm init contains several commands that will make your life easier.

In particular, you should make a note of the kubeadm join command at the end of the output, because you will use it later to add more nodes to the cluster. Equally important are the steps used to create a folder named .kube/ in your personal folder, in which you store the admin.conf file. Doing so then lets you use Kubernetes tools without being root. Ideally, you would want to carry out this step immediately.
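The steps that kubeadm init prints for this purpose boil down to creating the folder, copying /etc/kubernetes/admin.conf to .kube/config, and handing ownership to your user. A minimal sketch of those steps wrapped in a function follows; the function name and the SUDO variable are assumptions made for this example, not part of kubeadm.

```shell
# Hypothetical helper: copy the admin kubeconfig into a user's home directory
# so that kubectl works without root.
# $1: source file (kubeadm writes /etc/kubernetes/admin.conf)
# $2: home directory of the target user (defaults to $HOME)
# Set SUDO="" if the source file is readable without root privileges.
install_kubeconfig() {
    src="${1:-/etc/kubernetes/admin.conf}"
    home_dir="${2:-$HOME}"
    sudo_cmd="${SUDO-sudo}"
    mkdir -p "$home_dir/.kube" &&
    $sudo_cmd cp "$src" "$home_dir/.kube/config" &&
    $sudo_cmd chown "$(id -u):$(id -g)" "$home_dir/.kube/config"
}

# Usage as a regular user on the Control Plane:
#   install_kubeconfig
#   kubectl get nodes
```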

As a Kubernetes admin, you cannot avoid the network. Although not nearly as complicated as with OpenStack, for which an entire software-defined networking suite has to be tamed, you still have to load a network plugin: After all, containers without a network don't make much sense.

The easiest way to proceed is to use Flannel [4], which requires some additional steps. On all your systems, run the following command to route IPv4 traffic from bridges through iptables:

# sysctl net.bridge.bridge-nf-call-iptables=1

Additionally, all your servers need to be able to communicate via UDP ports 8285 and 8472. Flannel can then be integrated on the Control Plane:

# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

This command loads the Flannel definitions directly into the running Kubernetes Control Plane, making them available for use.

Rolling Out Rook

As a final step, run the kubeadm join command (generated previously by kubeadm init) on all nodes of the setup except the Control Plane. Kubernetes is now ready to run Rook.
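Before moving on, it is worth confirming that every node has actually joined and reports Ready. The following minimal sketch filters the output of kubectl get nodes; the function name not_ready_nodes is hypothetical.

```shell
# Hypothetical helper: reads `kubectl get nodes --no-headers` output on stdin
# and prints the names of all nodes whose STATUS column is anything other than
# exactly "Ready" (this includes combined states such as
# "Ready,SchedulingDisabled").
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Usage on the Control Plane:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means that all nodes are ready. Nodes typically stay in NotReady until the network plugin is running.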

Thanks to various preparations by Rook developers, Rook is just as easy to get up and running as Kubernetes. Before doing so, however, it makes sense to review the basic architecture of a Ceph cluster. Rolling out the necessary containers with Rook is not rocket science, but knowing what is actually happening is beneficial.

To review the basics of Ceph and how it relates to Rook, see the box "How Ceph Works."

To roll out Rook (Figure 2) in Kubernetes, you need OSDs and MONs. Rook makes it easy, because the required resource definitions can be taken from the Rook source code in a standard configuration. Custom Resource Definitions (CRDs) are used in Kubernetes to convert the local hard drives of a system into OSDs without further action by the administrator.

In other words, by applying the ready-made Rook definitions from the Rook Git repository to your Kubernetes instance, you automatically create a Rook cluster with a working Ceph that utilizes the unused disks on the target systems.

Experienced admins might now be thinking of using the Kubernetes Helm package manager for a fast rollout of the containers and solutions. However, it would fail because Rook only packages the operator for Helm, but not the actual cluster.

Therefore, your best approach is to clone Rook's Git repository locally (Listing 3). In the ceph/ subfolder of the checkout are two files worthy of note: operator.yaml and cluster.yaml. (See also "The Container Storage Interface" box.) With kubectl, first install the operator, which enables the operation of the Ceph cluster in Rook. The kubectl get pods command lets you check the rollout to make sure it worked: The pods should be set to Running. Finally, the actual Rook cluster is rolled out with the cluster.yaml file.

Listing 3: Applying Rook Definitions

    # git clone https://github.com/rook/rook.git
    # cd rook/cluster/examples/kubernetes/ceph
    # kubectl create -f operator.yaml
    # kubectl get pods -n rook-ceph-system
    # kubectl create -f cluster.yaml

A second look should now show that all Kubelet instances are running rook-ceph-osd pods for their local hard drives and that rook-ceph-mon pods are running, but not on every Kubelet instance. Out of the box, Rook limits the number of MON pods to three because that is considered sufficient.
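One reason three MONs are a sensible number is that Ceph monitors need a strict majority to form a quorum. The arithmetic can be sketched as follows; the function name mon_quorum is hypothetical and only illustrates the calculation.

```shell
# Hypothetical helper: print the majority needed for a quorum of N monitors
# and how many monitor failures can be tolerated while keeping that majority.
# $1: number of monitors
mon_quorum() {
    n="$1"
    majority=$(( n / 2 + 1 ))
    echo "monitors=$n majority=$majority tolerated_failures=$(( n - majority ))"
}

# Usage:
#   mon_quorum 3
#   mon_quorum 4
```

Three monitors tolerate the loss of one; a fourth monitor raises the required majority to three without tolerating any additional failure, which is why odd numbers are the usual choice.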

Integrating Rook and Kubernetes

Some observers claim that cloud computing is actually just a huge layer cake. Given that Rook introduces an additional layer between the containers and Ceph, maybe they are not that wrong: To be able to use the Rook and Ceph installation in Kubernetes, you have to integrate it into Kubernetes first, whatever storage type Ceph is to provide. Only the steps differ: If you want to use CephFS for storage, it requires different steps than if you are using the Ceph Object Gateway.

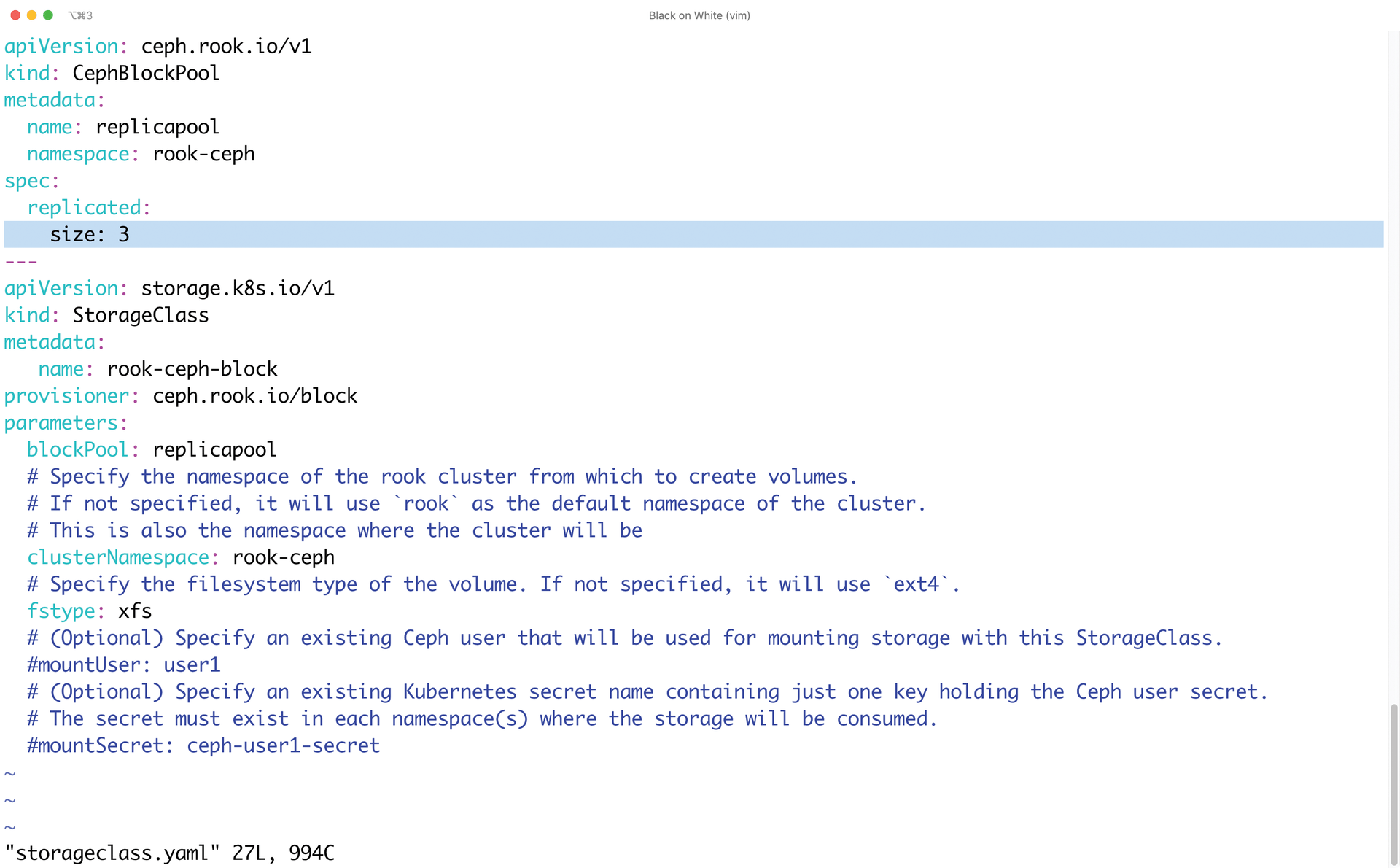

The classic way of using storage, however, has always been block devices, on which the example in the next step is based. In the current working directory, after performing the above steps, you will find a storageclass.yaml file. In this file, replace size: 1 with size: 3 (Figure 3).

In the next step, you use kubectl to create a pool in Ceph. In Ceph-speak, pools are something like name tags for binary objects used for the internal organization of the cluster. Basically, Ceph relies on binary objects, but these objects are assigned to placement groups. Binary objects belonging to the same placement group reside on the same OSDs.

Each placement group belongs to a pool, and at the pool level the size parameter determines how often each individual placement group should be replicated. In fact, you determine the replication level with the size entry (1 would not be enough here). The mystery remains as to why the Rook developers do not simply adopt 3 as the default.
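The price of replication is capacity: Every object is stored size times, so only roughly the raw capacity divided by size is usable. A back-of-the-envelope sketch follows; the function name usable_gib is hypothetical, and the calculation ignores Ceph's own overhead and safety margins.

```shell
# Hypothetical helper: rough usable capacity of a replicated pool in GiB.
# $1: number of OSD disks
# $2: capacity per disk in GiB
# $3: pool size (number of replicas)
usable_gib() {
    echo $(( $1 * $2 / $3 ))
}

# Usage for five nodes with one 100GiB disk each:
#   usable_gib 5 100 3
#   usable_gib 5 100 1
```

With size set to 1, all of the raw capacity is usable, but the data exists only once, so the loss of a single disk means the loss of the data stored on it.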

As soon as you have edited the file, issue the create command; then, display the new rook-block storage class:

    # kubectl create -f storageclass.yaml
    # kubectl get sc -a

From now on, you can request a Ceph block device from the running Ceph cluster by means of a persistent volume claim (PVC) (Listing 4).

Listing 4: PVC for Kubernetes

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: lm-example-volume-claim
    spec:
      storageClassName: rook-block
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi

In a pod definition, you then only reference the storage claim (lm-example-volume-claim) to make the volume available locally.
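A pod definition that references the claim might look like the following minimal sketch. The claim name comes from Listing 4; the pod name, container name, image, mount path, and file name are placeholders chosen for this example.

```shell
# Hypothetical helper: write a pod definition that mounts the claim from
# Listing 4. Everything except the claim name is a placeholder.
# $1: output file (assumed default: lm-example-pod.yaml)
write_example_pod() {
    cat > "${1:-lm-example-pod.yaml}" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: lm-example-pod
spec:
  containers:
  - name: web
    image: nginx
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: lm-example-volume-claim
EOF
}

# Usage:
#   write_example_pod lm-example-pod.yaml
#   kubectl create -f lm-example-pod.yaml
```

The volumes section ties the pod to the claim, and the volumeMounts entry determines where the Ceph block device appears inside the container.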

Using CephFS

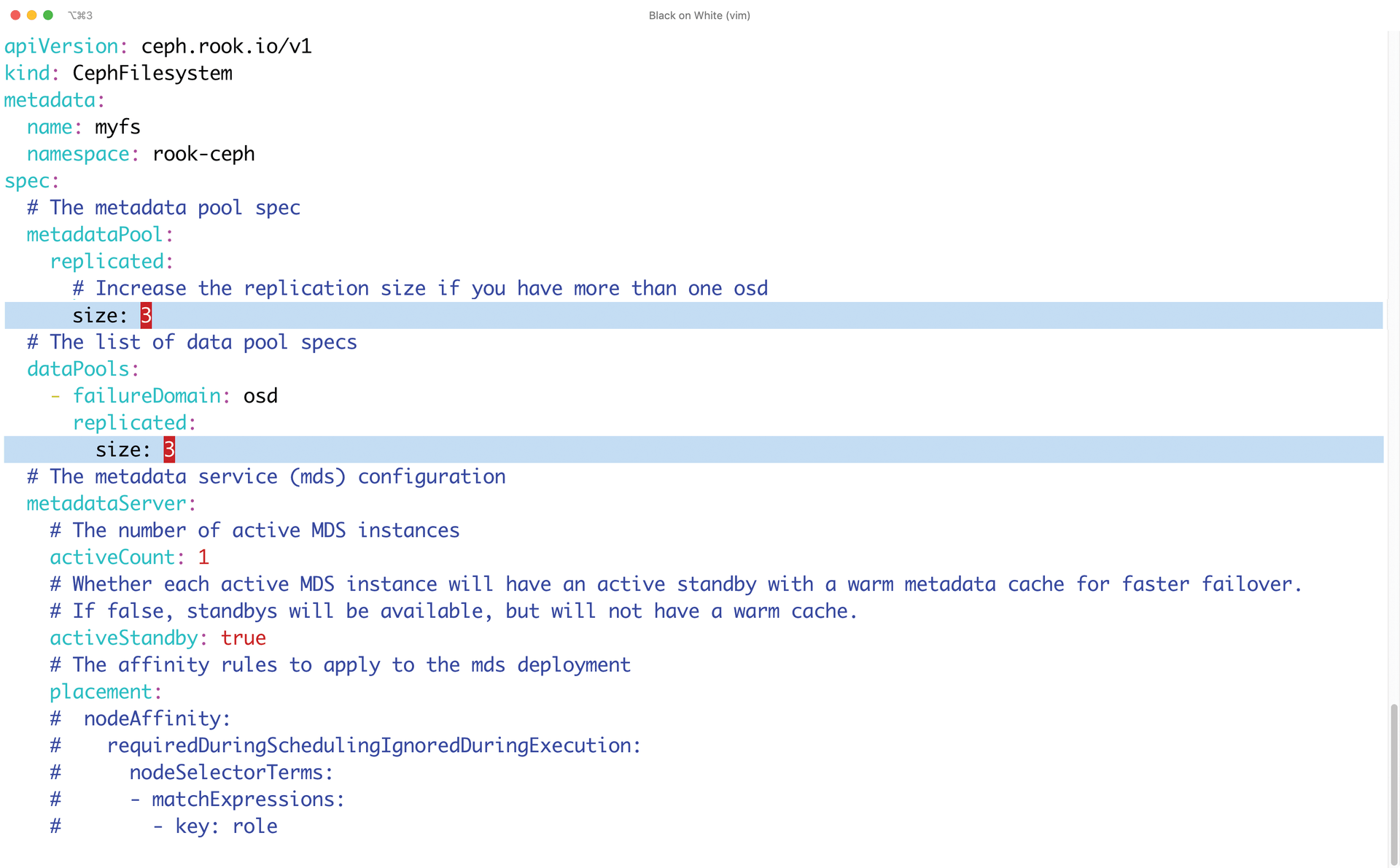

In the same directory is the filesystem.yaml file, which you will need if you want to enable CephFS in addition to the Ceph block device; the setup is pretty much the same for both. As the first step, you need to edit filesystem.yaml and correct the value for the size parameter again, which – as you know – should be set to 3 for both dataPools and metadataPool (Figure 4).

To create the custom resource for the CephFS service, type:

# kubectl create -f filesystem.yaml

To demonstrate that the pods are now running with the Ceph MDS component, look at the output from the command:

# kubectl -n rook-ceph get pod -l app=rook-ceph-mds

Like the block device, CephFS can be mapped to its own storage class, which then acts as a resource for Kubernetes instances in the usual way.

What's Going On?

If you are used to working with Ceph, the various tools that provide insight into a running Ceph cluster can be used with Rook, too. However, you do need to launch a Pod especially for these tools in the form of the Rook Toolbox.

A ready-made definition for this is in the Rook examples, which makes getting the Toolbox up and running very easy before connecting to Rook:

# kubectl create -f toolbox.yaml

# kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') bash

The usual Ceph commands are now available. With ceph status, you can check the status of the cluster; ceph osd status shows how the OSDs are currently getting on; and ceph df checks how much space you still have in the cluster. This part of the setup is therefore not specific to Rook.
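For automated checks, the first word of the ceph health output (HEALTH_OK, HEALTH_WARN, or HEALTH_ERR) is enough to derive an exit code. The following minimal sketch maps it to the exit codes common among monitoring plugins; the function name ceph_health_code and the mapping are assumptions made for this example.

```shell
# Hypothetical helper: reads the output of `ceph health` on stdin and returns
# an exit code: 0 = OK, 1 = warning, 2 = error, 3 = unknown.
ceph_health_code() {
    # Only the first word of the first line matters; the rest is detail text.
    read -r state _rest
    case "$state" in
        HEALTH_OK)   return 0 ;;
        HEALTH_WARN) return 1 ;;
        HEALTH_ERR)  return 2 ;;
        *)           return 3 ;;
    esac
}

# Usage inside the Toolbox pod:
#   ceph health | ceph_health_code; echo "exit code: $?"
```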

Conclusions

Rook in Kubernetes provides a quick and easy way to get Ceph up and running and use it for container workloads. Unlike OpenStack, Kubernetes is not multitenant-capable, so the "one big Ceph for all" approach is far more difficult to implement than with OpenStack. For this reason, admins tend to roll out many individual Kubernetes instances instead of one large one. Rook is ideal for exactly this scenario, because it relieves the admin of a large part of the work: maintaining the Ceph cluster.

Rook version 1.x [5] is now available and is considered mature for deployment in production environments. Moreover, Rook is now an official Cloud Native Computing Foundation (CNCF) project; thus, it is safe to assume that many more practical features will be added in the future.