Zuul 3, a modern solution for CI/CD

Continuous Progress

Zuul 3 brings a new approach to the DevOps challenge. (For more on these important DevOps concepts, see the "CI and CD" box.) Whereas other CI/CD tools like Jenkins, Bamboo, or TeamCity focus on constant building and testing, Zuul's main design goal is to be a gating mechanism. In other words, as soon as a proposed change is verified and approved by a human, it can undergo final tests and be deployed automatically to the production environment. Such a system requires a lot of computing power, but Zuul has been proven reliable and highly scalable by its designers: the OpenStack community.

The general concept behind the Zuul series (Zuul 3 at the time of writing) is to provide developers a system for automatically building, testing, and finally merging new changes to a project. Zuul is extensible and supports a number of development platforms, like GitHub and Gerrit Code Review [6].

Zuul originated as OpenStack CI testing. For years, OpenStack, the Infrastructure-as-a-Service (IaaS) cloud [7], got all the attention. Over time, people began to realize that as impressive as OpenStack was, the CI system behind it also deserved attention, because it enabled contributors and users across many different organizations to work together and quickly develop dozens of independent projects [8] [9]. Finally, it was decided to spin off Zuul 3 from OpenStack and run it as a standalone project under the OpenStack Foundation.

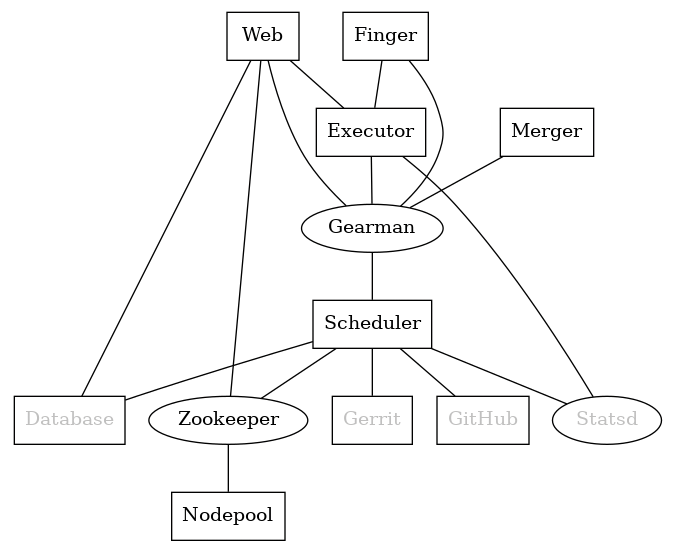

Components

Zuul 3 is organized in the form of services (Figure 2). For small projects, all of the components can be running on a single host. For larger projects, it is better to distribute the entire solution onto several hosts.

All Zuul processes read the /etc/zuul/zuul.conf file (an alternative location may be supplied on the command line), which uses an INI file syntax. Each component may have its own configuration file, although you might find it simpler to use the same file for all components.

Zuul Scheduler

The heart of the system is Zuul Scheduler, which is responsible for listening to and receiving events from a Git hosting service like Gerrit or GitHub. After an initial inspection of an event (e.g., to determine whether the event is associated with any of the projects to be observed), Scheduler forwards it through the Gearman server to be handled by one of the Executors. In the end, after the flow has been completed, Scheduler reports the results to Gerrit or GitHub.

Although Scheduler is one of the few components that cannot be replicated, it is designed to perform only lightweight tasks and should hardly ever be overwhelmed by load. The OpenStack Foundation states that a small host, even with 1GB of memory, should be enough for a standard composition. Nevertheless, according to available metrics, OpenStack's Scheduler at most requires 8GB. Regardless of the details, almost any machine today can provide enough resources for Zuul Scheduler to work without throttling.

Gearman

Gearman is a separate project, but Zuul has its own implementation inside Zuul Scheduler. Gearman is responsible for distributing tasks between services, which communicate through the Gearman protocol. Scheduler puts a task in Gearman's queue, and the next free Executor takes care of it. Similarly an Executor, after the change is processed, submits the result to the queue, so Scheduler can report the result to Gerrit. Although Gearman can be used as a separate service, the built-in service is sufficient for handling up to 10,000 tasks at once.

If the built-in server is used, other Zuul hosts will need to be able to connect to Scheduler on Gearman TCP port 4730. It is also strongly recommended to use SSL certificates with Gearman, because secrets are transferred from Scheduler to Executors over this link.

Zuul is stateless; hence, all ongoing tasks are kept in Gearman's memory and are lost on service restart. If you want to restore a queue after a maintenance reboot, for example, you first need to make a snapshot with the zuul-changes.py script shipped with Zuul and load it back after the restart.

Executors

Each Zuul configuration should have at least one Executor, the component responsible for preparing the execution of tasks. Because the definition of the verification process can be scattered over multiple YAML files, it has to be extracted and sent through Gearman to Zookeeper for execution. One Executor can handle a few dozen simultaneous tasks; however, you should first consider replicating it if you see any performance issues.

Zuul is designed to deliver only stable changes to production, so it sometimes has to merge independent changes that are proposed in parallel. These merges, like the many other Git operations Zuul performs in the course of its work, are handled by the Merger service, an instance of which is built into each Executor. If the number of merges to be performed grows, however, you can move Merger functionality onto separate hosts. Mergers, like Executors, can be deployed in any quantity, depending on a project's needs.

Web

The Web component is a simple dashboard that displays the current status of Zuul's tasks. Here, you find tenants, projects, available jobs, and pipelines, and you can track logs in real time. The dashboard also shows a list of all available nodes and providers defined in Nodepool, a system for managing test node resources. If additional reporting (e.g., to an SQL database) is set up in the pipeline declaration, you can also find a history of all pipeline executions here. Because Zuul Web is read-only, you can have as many instances of this component as you want; in practice, however, the service should not need replication, regardless of load.

Web servers need to be able to connect to the Gearman server (usually the Scheduler host). If SQL reports are generated, a web server needs to be able to connect to the database to which it reports. If a GitHub connection is configured, web servers need to be reachable by GitHub, so they can receive notifications.

Zookeeper

Zookeeper was originally developed at Yahoo to streamline processes running on big data clusters and to fix the bugs that occurred while deploying distributed big data applications. It stores status information in local logfiles on the Zookeeper servers, which communicate with the client machines to provide them that information. In a Zuul installation, Zookeeper is the communication service between Zuul and Nodepool and its nodes. Whenever a new node is needed, a request goes to one of the Zookeeper instances, and Zookeeper then obtains the host for execution from Nodepool.

Nodepool

The last of the crucial Zuul 3 components to be explained is Nodepool, a service that keeps the configuration of the hosts on which jobs are executed. Apart from static nodes, you can define providers that launch instances in a chosen cluster as needed. Drivers are available for OpenStack, Kubernetes, OpenShift, and Amazon Web Services (AWS), and in addition to the official drivers, a few unofficial ones exist (e.g., for oVirt or Proxmox).
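As a rough illustration of what such a configuration can look like, the following minimal sketch declares one static node for the compilation_hosts label used later in Listing 1. The hostnames, the provider name, and the username are hypothetical placeholders, and a real deployment will likely need further settings (e.g., SSH host keys) that are omitted here.

```yaml
# nodepool.yaml (sketch; all hostnames and names below are assumptions)
zookeeper-servers:
  # Nodepool and Zuul exchange node requests through Zookeeper
  - host: zk.example.org
    port: 2181

labels:
  # Labels are what a job's nodeset refers to
  - name: compilation_hosts

providers:
  - name: static-build-hosts    # hypothetical provider name
    driver: static              # pre-existing hosts, nothing is launched
    pools:
      - name: main
        nodes:
          - name: build01.example.org
            labels:
              - compilation_hosts
            username: zuul
```

A cloud provider would use a different driver value and additionally describe the images and flavors to launch.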

Configuration

The entire Zuul configuration is written as YAML files. A job (Listing 1) is a group of strictly related tasks to perform to decide whether a change is valid or not. The main job elements are:

- name: A unique identifier for the job.

- parent: A job's configuration can be inherited; therefore, all common elements should be kept in one job, and only the elements that differ should be overwritten. List elements are inherited additively (e.g., pre-run and post-run lists are added to those in the base job), whereas others are overridden (e.g., a timeout value).

- pre-run/post-run: These are lists of playbooks that are always executed. Pre-runs prepare the environment, and post-runs usually collect artifacts.

- run: This element comprises a list of playbooks that perform the verification and are executed until the first failure or until all in the list have been run.

- nodeset: The nodes on which the job should be run.

Listing 1: sample_job.yml

    - job:
        name: compilation
        parent: base
        pre-run: playbooks/base/pre.yaml
        run:
          - playbooks/compilation.yaml
        post-run:
          - playbooks/base/post-ssh.yaml
          - playbooks/base/post-logs.yaml
        timeout: 1800
        nodeset:
          nodes:
            - name: main
              label: compilation_hosts

Other elements that modify a job's behavior can also be defined, like a timeout in seconds or ignore_error, which returns success regardless of the job's real result.
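To make the inheritance rules more concrete, the following sketch defines a hypothetical child of the compilation job from Listing 1. The job name, the extra playbook path, and the build_type variable are invented for illustration only.

```yaml
- job:
    name: compilation-debug          # hypothetical child job
    parent: compilation              # inherits run, pre-run, nodeset, ...
    # Scalar values replace the parent's value (1800 in Listing 1)
    timeout: 3600
    # List values are added to the post-run playbooks of the parent
    post-run:
      - playbooks/debug/post-symbols.yaml
    # Variables are passed to the playbooks (assumed name)
    vars:
      build_type: debug
```

With this definition, the child would run its own post-run playbook in addition to the two inherited from compilation, whereas the timeout is simply overridden.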

Pipeline

The pipeline entity (Listing 2) describes conditions, jobs, and the results of a change. The main pipeline elements are:

- name: A name that is unique within the Zuul tenant.

- manager: The relation to other ongoing changes for a given project (e.g., independent means that the pipeline processes each change independently of any other change). This setting is useful for the initial verification of each patchset. Other values, such as dependent, tell Zuul to take the state of other changes into account.

- trigger: The events that start execution of the pipeline. In this example, the pipeline is triggered from Gerrit by a new patchset (or new review) or by a reviewer's comment containing the text RECHECK.

- success/failure: These settings determine what Zuul should do, depending on the result. In Listing 2, a Verified vote of +1 or -1 is reported to Gerrit, and the result is recorded in the MySQL database.

- require: The pipeline will not run if these additional conditions are not fulfilled (e.g., here, I do not want to check already closed reviews).

Listing 2: sample_pipeline.yml

    - pipeline:
        name: verification
        manager: independent
        description: |
          This pipeline is only to learn how Zuul works
        trigger:
          gerrit:
            - event: patchset-created
            - event: comment-added
              comment: (?i)^(Patch Set [0-9]+:)?( [\w\\+-]*)*(\n\n)?\s*RECHECK
        success:
          gerrit:
            # return to Gerrit Verified+1
            Verified: 1
          # return data to MySQL (empty key here 'mysql:')
          mysql:
        failure:
          gerrit:
            Verified: -1
          mysql:
        require:
          gerrit:
            # prevents waste runs on %submit usage
            open: true

Project

The project config element (Listing 3) spans pipelines and jobs and defines which jobs should be run for which pipeline. In this example, I expect to run, on verification, a set of three jobs. The first, clang, is a static C++ code analysis. The other two are compilation variants according to the processor architecture: one for i686 and the other for x86_64. In this case, the playbooks used in the job compilation are generic, so they expect variables to be passed. The second compilation call also requires the first clang to be completed successfully.

Listing 3: sample_project.yml

    - project:
        verification:
          jobs:
            - clang
            - compilation:
                vars:
                  hardware: i686
                  technology: legacy
            - compilation:
                vars:
                  hardware: x86_64
                  technology: legacy
                # start only after clang has completed successfully
                dependencies:
                  - clang

Ansible

To run the job (where the job hits the metal, as it were), the Zuul launcher dynamically constructs an Ansible playbook. This playbook is a concatenation of all playbooks the job needs to run. By using Ansible to run the job, all the flexibility of the orchestration tool is now available to the launcher. For example, a custom console streamer library allows you to live stream the console output for the job over a plain TCP connection on the web dashboard. In the future, Ansible will allow for better coordination when running multinode test jobs; after all, this is what orchestration tools such as Ansible are made for! Although the Ansible run can be fairly heavyweight, especially if you're talking about launching thousands of jobs an hour, the system scales horizontally, with more launchers able to consume more work easily.

At the start of each job, an Executor prepares an environment in which to run Ansible that contains all of the Git repositories specified by the job with all the dependent changes merged into their appropriate branches. The branch corresponding to the proposed change will be checked out in all projects (if it exists). Any roles specified by the job will be present, as well (with dependent changes merged, if appropriate), and added to the Ansible role path. The Executor also prepares an Ansible inventory file with all of the nodes requested by the job.
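A run playbook that relies on this prepared environment could look roughly like the sketch below. The contents are hypothetical: the make invocation is an invented build command, hardware is the variable passed in from the project definition in Listing 3, and the sketch assumes that a pre-run playbook of the base job has already copied the prepared repositories to the node.

```yaml
# playbooks/compilation.yaml (hypothetical contents)
- hosts: all          # every node in the inventory built by the Executor
  tasks:
    - name: Compile the change under test for the requested architecture
      # 'hardware' comes from the job's vars; the make target is assumed
      command: make ARCH={{ hardware }}
      args:
        # Checkout of the project that the change belongs to
        chdir: "{{ zuul.project.src_dir }}"
```

Because the playbook only refers to variables supplied by Zuul and by the job, the same file can serve both compilation variants.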

Trusted/Untrusted

The configuration described above is divided into two sections: trusted and untrusted configurations. The first, trusted, is defined in Zuul's central repository. This repository (or a few, if needed) has stricter access rules, so only a limited number of people can modify it. The trusted repository is also the location where you define the base job, which can later be inherited.

The untrusted repositories are usually those that are being verified by Zuul. The main difference lies in where Zuul reads its YAML: For trusted repositories, it uses only what is on the master branch, whereas for untrusted repositories, it also uses the review or pull request that is being verified. In other words, if a developer changes a job definition in their own repository, Zuul will include those changes in verification, but all "trusted" YAML has to be merged to master before it is considered in the flow.

Playbooks called from the untrusted repositories also are not permitted to load additional Ansible modules or access files outside of the restricted environment prepared for them by the Executor. Additionally, some standard Ansible modules are replaced with versions that prohibit some actions, including attempts to access files outside of the restricted execution context.

When Zuul starts, it examines all of the Git repositories specified by the system administrator in the tenant configuration and searches for files in the root of each repository. Zuul looks first for a file named zuul.yaml or a directory named zuul.d and, if not found, .zuul.yaml or .zuul.d (with a leading dot). In the case of an untrusted project, the configuration from every branch is included; however, in the case of a config project, only the master branch is examined.
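The tenant configuration mentioned here is itself a YAML file. The following minimal sketch shows how trusted (config) and untrusted projects might be declared; the tenant name and the repository names are placeholders, and the gerrit key is assumed to match a connection of the same name defined in zuul.conf.

```yaml
# Tenant configuration file (sketch; all names are assumptions)
- tenant:
    name: example-tenant
    source:
      gerrit:                      # connection name from zuul.conf
        # Trusted: only the master branch is read
        config-projects:
          - zuul-config
        # Untrusted: configuration is read from every branch
        untrusted-projects:
          - my-application
          - my-library
```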

Workflow

The workflow in Zuul is similar to that in standard CI/CD tools like GitLab CI/CD: The developer pushes the change for a review, which triggers a CI/CD tool. The tool then proceeds with steps configured as required for judging whether a change is valid or not. If the flow succeeds, you receive approval; otherwise, you get a denial. Now it is up to the developer (and probably other human reviewers) to decide when this change is merged.

In Zuul, however, this is only the first part of the process, called "verification." When a change is approved by verification and accepted by reviewers, you can proceed to the second part, known as "gating." The goal of gating is to perform an exhaustive set of tests that confirm the change is production ready before applying it. (See the "Gating" box.)
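For comparison with the verification pipeline in Listing 2, a gating pipeline might be declared along the following lines. This is only a sketch under assumptions: The pipeline name, the Workflow label, and the vote values depend on how the Gerrit instance is set up and are not taken from the configuration described in this article.

```yaml
- pipeline:
    name: gate                       # assumed name
    # Changes are tested together with the changes queued ahead of them
    manager: dependent
    trigger:
      gerrit:
        # Start when a reviewer approves the change (assumed label)
        - event: comment-added
          approval:
            - Workflow: 1
    require:
      gerrit:
        open: true
        current-patchset: true
    success:
      gerrit:
        Verified: 2
        # Let Zuul, not a human, merge the change
        submit: true
    failure:
      gerrit:
        Verified: -2
```

The important differences from Listing 2 are the dependent manager and the submit setting, which together let Zuul merge a change only after it has passed its tests on top of the changes ahead of it.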

Web UI

Previous Zuul iterations used Jenkins as the graphical user interface, but OpenStack parted with Jenkins, which made it necessary to implement a new service responsible for displaying the current tasks.

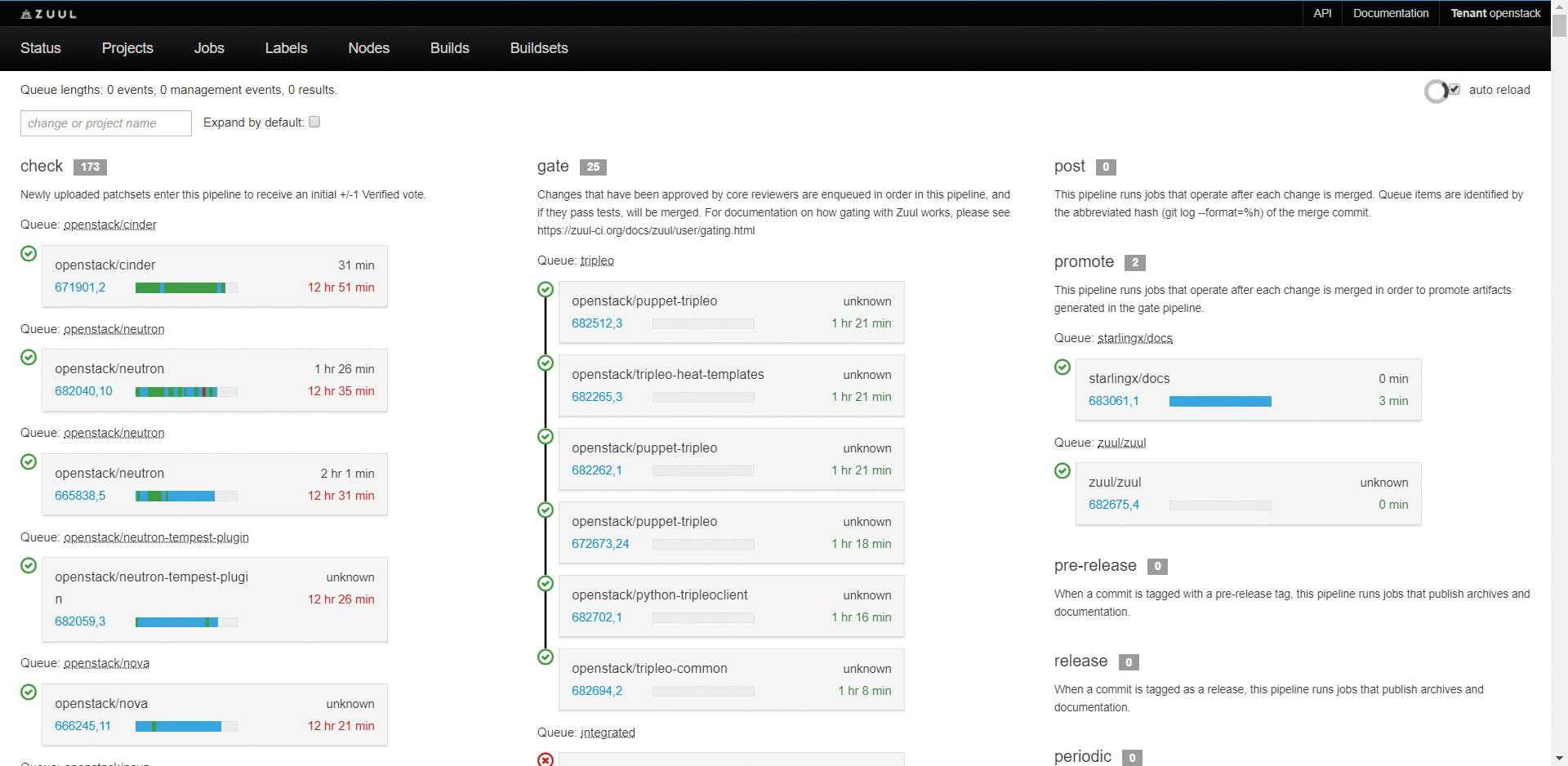

Figure 11 shows the main view. Running tasks appear in the columns (except the last one, in this case). Note that the checks in column 1 are independent, but the gate pipeline in column 2 combines its tasks.

The last column displays less commonly used pipelines that come into play when Zuul acts on different events, including merging changes to the master.

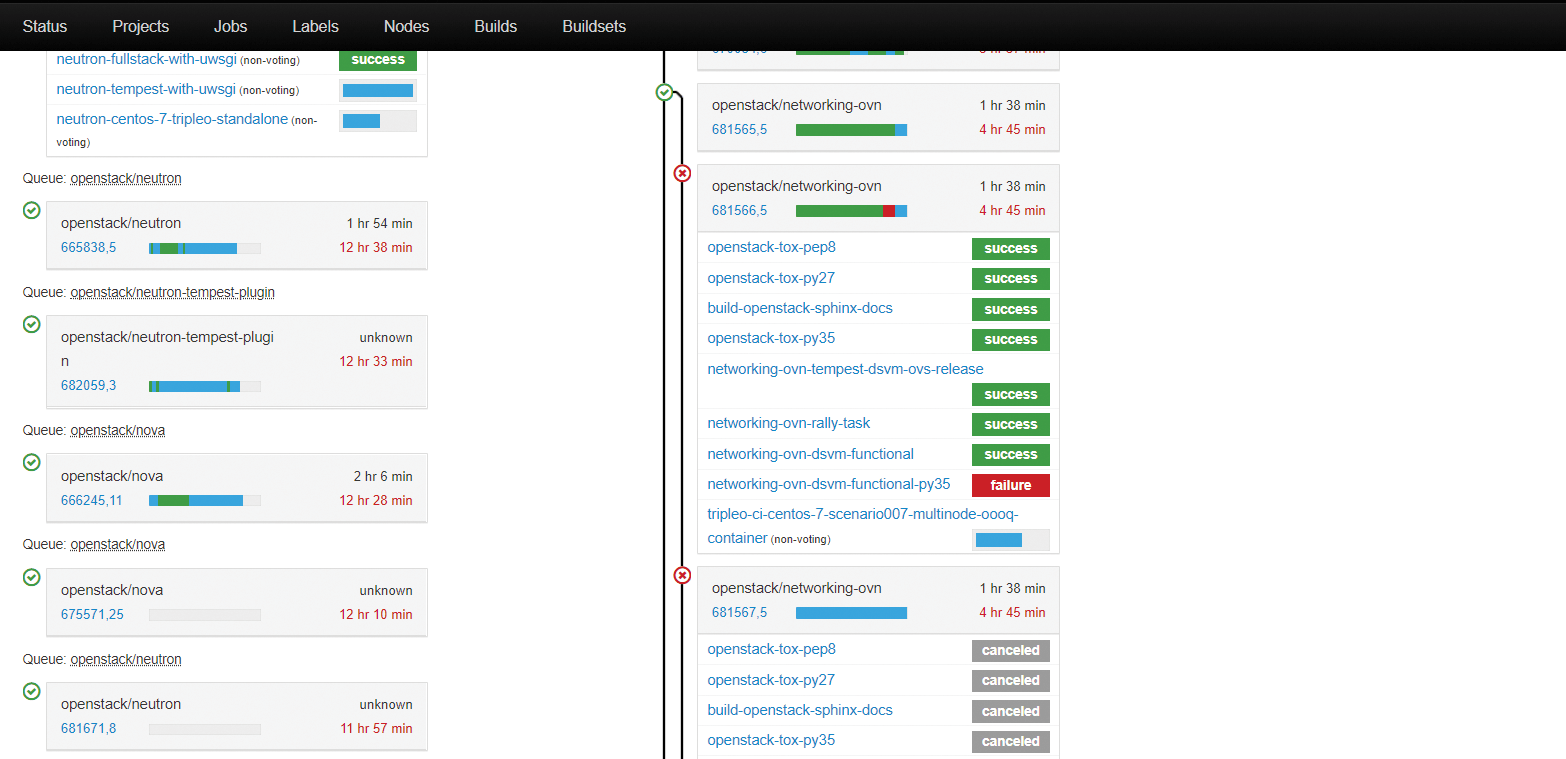

If you take a closer look at a chosen task by clicking on it, it expands to show the jobs and the status of each (Figure 12).

In the menubar at the top of the screen, the Projects menu takes you to a list of projects that Zuul monitors, displaying the hosting platform (GitHub or Gerrit) and how Zuul trusts its content. Usually there will be only one trusted repo.

The Jobs menu takes you to a list of all jobs known to Zuul, which might be helpful if you are adding a new test to your pipeline: Perhaps someone has written something similar that you could reuse.

The Labels and Nodes menu items are interconnected. The first lists all possible labels you can use to define where jobs ought to be executed, and Nodes lists all nodes known to Zuul, with their labels and other details.

Finally, the Builds item lists all recently completed jobs, and Buildsets shows all recently finished pipelines, along with their target (which commit), date, and result.

Summary

The Zuul system, originally developed to manage the massive multiple-contributor OpenStack software platform, has been updated to handle any complex collaborative software project. Zuul 3 is currently in use by multiple international companies (e.g., BMW, Software Factory, and, of course, OpenStack Foundation). In all cases, Zuul has proved to be a reliable and highly scalable solution [10].

With Zuul, "Humans don't merge code, machines merge code" [11]. It also works with complex code sets: Zuul can coordinate integration and testing across multiple repositories that at first glance appear independent. Moreover, Zuul has a growing community. Thanks to a concept similar to Ansible roles [12], community members can reuse existing Zuul roles (e.g., pushing to the Docker registry) or write and share their own playbooks.

To sum up, Zuul 3 [13] is a free-to-use, highly scalable, and flexible solution that fulfills all three modern software development paradigms: continuous integration, continuous delivery, and continuous deployment. Projects that use GitHub or Gerrit repository hosting services can reasonably consider introducing Zuul into the development process.