A service mesh for microarchitecture components

Exchange

One of the disruptive innovations of recent years, which shifted into focus only in the wake of cloud computing and containers, is undoubtedly the microarchitecture approach to computing (see the "Finally Agile" box). Developers of microarchitecture applications, however, face the task of operating and maintaining all the separate microservices and components that make up their application.

This is where the Istio developers enter the scene. They promise container application developers a central network layer through which the parts of an application talk to each other. Istio provides the required services (e.g., load balancing, filtering, and discovery of existing services). To use all these features, you only have to integrate Istio into your application's environment.

Communication Needed

For the individual parts of a microservice application to work together successfully, communication between components is essential. How can a developer make life easier if they are working on a store application, for example, where a customer navigates through different parts of the program during a purchase and has to be forwarded from one component to the next?

Connecting containers is not trivial in itself. Because a container usually runs in its own network namespace, it is not easily reachable from the main network. Kubernetes, for example, comes with an on-board proxy server (kube-proxy), without which connections from the outside world to applications would simply not be possible. Istio extends an existing pod-based Kubernetes setup by adding APIs and central controls.

What is a Service Mesh?

Istio [1] forms a "service mesh" between the individual components of a microservices application, removing the need for the admin to plan the network connections between those components. In the Istio context, service mesh means the totality of all components that are part of a microservice application.

In the web store example, this could mean all the components involved in the ordering process: the application that provides the website, the one that monitors the queue of incoming orders, the one that generates email for orders, and the one that dispatches that email. There are basically no limits to what you could imagine the mesh doing. What all these components have in common is that they communicate with each other on a network. In the worst case, organizing this communication by hand involves a huge amount of development overhead that isn't actually necessary.

Clearly, developers need to deal with the many aspects of connectivity, starting with the simple question of how the application that dispatches email knows how to reach the application that generates email. In a legacy environment, both functions would be part of the same program, or the dispatcher would simply be connected to the mail generator through a static IP address.

This arrangement is not possible in container applications based on a microservice architecture. After all, the individual parts of the application need to be able to scale seamlessly to reflect the load. When all hell breaks loose on the web store, more email senders and email generators need to be fired up, but they will all have dynamic IP addresses. So how do they discover each other?

Discovering Services

Other solutions to this problem, such as Consul or etcd, provide service directories in which services register, essentially shouting "Here I am!" to other services on the network. If you integrate Consul or etcd into your application, you have solved the autodiscovery problem, but that's not your only worry.
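The directory principle behind Consul and etcd can be illustrated with a few lines of Python. The following is a minimal in-memory sketch, not the API of either tool: the class name ServiceRegistry, the service name, and the addresses are hypothetical, and real directories add health checks, expiring registrations, and replication across nodes.

```python
from collections import defaultdict


class ServiceRegistry:
    """Toy service directory: instances announce "Here I am!" under a service name."""

    def __init__(self):
        self._instances = defaultdict(set)  # service name -> set of "ip:port" strings

    def register(self, service, address):
        self._instances[service].add(address)

    def deregister(self, service, address):
        self._instances[service].discard(address)

    def lookup(self, service):
        # Sorted list so callers see a stable order; unknown services yield []
        return sorted(self._instances.get(service, ()))


registry = ServiceRegistry()
# Two dynamically scheduled mail generators announce themselves
registry.register("mail-generator", "10.0.3.17:8080")
registry.register("mail-generator", "10.0.5.42:8080")
print(registry.lookup("mail-generator"))  # ['10.0.3.17:8080', '10.0.5.42:8080']
```

The dispatcher no longer needs a static IP address; it asks the directory by name each time it needs a mail generator.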

How is load balancing possible across all instances of a particular microservice? If routing is required, what implements the corresponding rules? Is there traffic that you want to stop? If so, how do you do that? All these questions have separate answers: Load balancing could be handled by HAProxy and filtering by nftables, and routing rules can be defined manually. However, Istio can provide all of these services more easily, in one place.
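To see what load balancing across all instances means at its simplest, here is a round-robin balancer, the same basic strategy HAProxy offers as roundrobin. It is a hypothetical Python sketch with invented addresses: it knows nothing about health or routing rules, and it has to be rebuilt whenever the instance list changes, which is exactly the kind of bookkeeping you would otherwise have to maintain yourself.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer that hands out a fixed list of instances in turn."""

    def __init__(self, instances):
        if not instances:
            raise ValueError("need at least one instance")
        # Copy the list: later changes to the caller's list are NOT picked up
        self._cycle = itertools.cycle(list(instances))

    def pick(self):
        return next(self._cycle)


balancer = RoundRobinBalancer(["10.0.3.17:8080", "10.0.5.42:8080", "10.0.7.9:8080"])
picks = [balancer.pick() for _ in range(4)]
print(picks)  # ['10.0.3.17:8080', '10.0.5.42:8080', '10.0.7.9:8080', '10.0.3.17:8080']
```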

Not Only Kubernetes

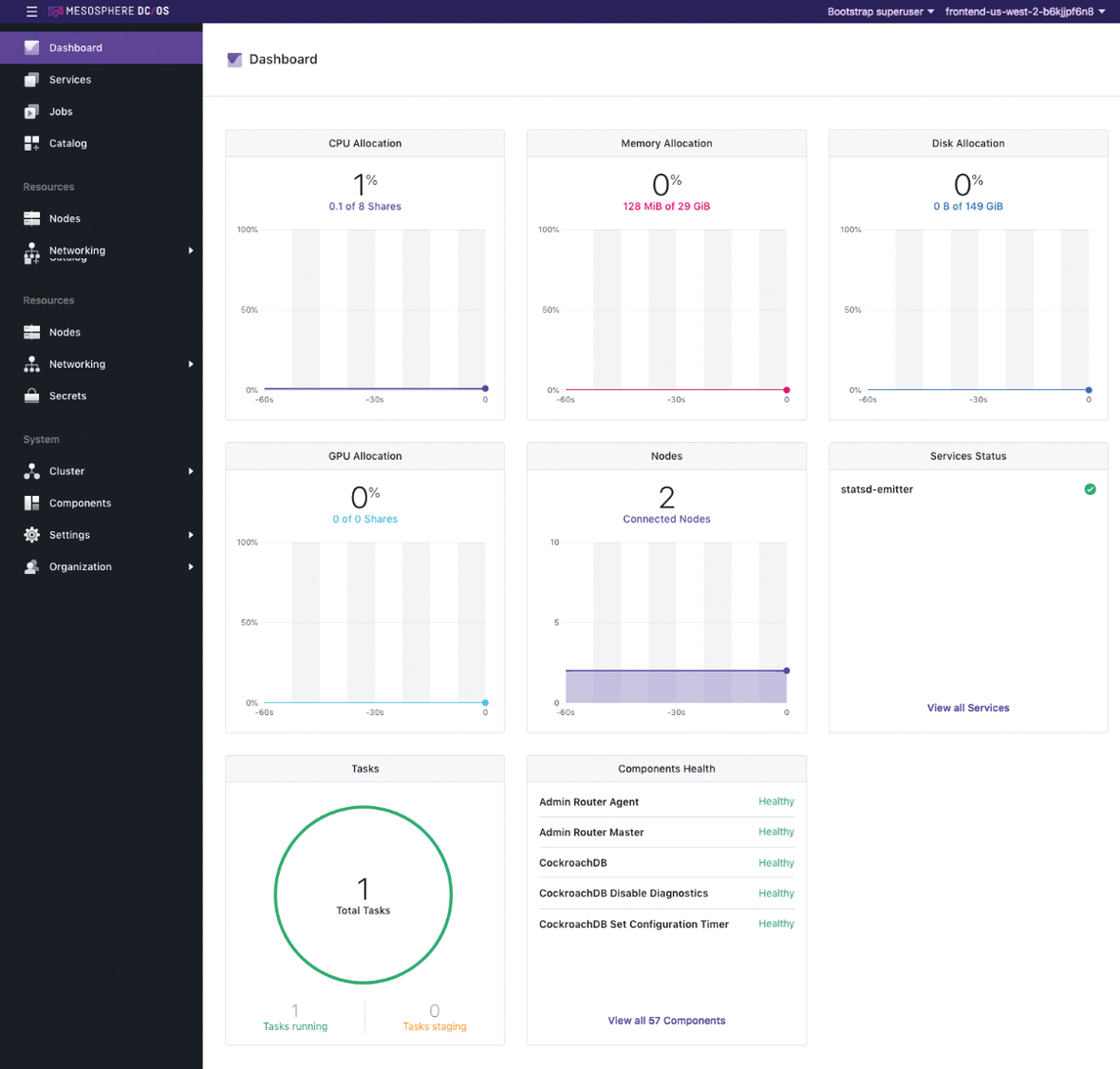

Integration is far less complex than it sounds at first. Out of the box, Istio currently supports Kubernetes, but it is not hard-wired to Kubernetes forever (Figure 1). Support for Mesosphere, for example, is in the planning stage; this is feasible because Istio doesn't use any features that exist exclusively in Kubernetes. Because practically nobody uses plain vanilla Kubernetes, relying instead on one of the existing Kubernetes distributions, it is important that Istio also adapt to them.

Currently, Istio can be rolled out with Minikube, as well as with Google Kubernetes Engine or OpenShift. Istio also natively supports Kubernetes clusters in Amazon Web Services (AWS); just load the Istio ruleset into an existing Kubernetes cluster and add the Istio pod definitions – all done. The Istio functionality can now be used in Kubernetes, but how exactly does this work in practice?

Control and Data Planes

If you look at a common Istio deployment, you will be reminded of classic network switches. Istio divides a mesh (i.e., the entire virtual network topology between the components of a microservice architecture app) into two planes: The control plane contains the entire logic for controlling all data streams, and the data plane implements those data streams and ensures that they follow the rules defined in the policy.
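The division of labor between the two planes can be sketched as a toy model in Python. The class names and the push-on-every-change behavior are illustrative assumptions; in a real installation, the Envoys receive their configuration from the control plane over Envoy's xDS discovery APIs rather than through method calls.

```python
class DataPlaneProxy:
    """Toy data-plane proxy: routes requests using only the rules it last received."""

    def __init__(self, name):
        self.name = name
        self.routes = {}  # destination service -> version chosen by the control plane

    def apply_config(self, routes):
        self.routes = dict(routes)  # keep a private copy of the pushed rules

    def route(self, service):
        version = self.routes.get(service, "default")
        return f"{service}-{version}"


class ControlPlane:
    """Toy control plane: owns the rules and pushes every change to all proxies."""

    def __init__(self):
        self.rules = {}
        self.proxies = []

    def register(self, proxy):
        self.proxies.append(proxy)
        proxy.apply_config(self.rules)  # a new proxy starts with the current rules

    def set_rule(self, service, version):
        self.rules[service] = version
        for proxy in self.proxies:
            proxy.apply_config(self.rules)


control = ControlPlane()
frontend_proxy = DataPlaneProxy("shop-frontend")
control.register(frontend_proxy)
control.set_rule("mail-generator", "v2")
print(frontend_proxy.route("mail-generator"))  # mail-generator-v2
```

The proxies hold no logic of their own about which version to use; changing a rule in one place changes the behavior of every data-plane instance.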

Unfortunately, the Istio developers could not resist the temptation to invent a multitude of Istio-specific terms. If you are not used to working with Istio, the names can be very confusing, even though most Istio components perform tasks for which IT already has well-established names.

One example of this is the Envoy proxy, a type of sidecar that Istio uses to support containers. A sidecar in Istio is simply an auxiliary component that is welded onto an existing container to provide it with a service; in Kubernetes, it runs as an additional container in the same pod.

An Envoy proxy is just one specific type of sidecar, one that routes all incoming and outgoing traffic according to the Istio rulebook. As instructed by Istio, Kubernetes always rolls out the proxies as part of a pod, along with the workload container, and configures the setup so that the proxy acts as a man-in-the-middle for its container.

Focus on Envoys

Envoy proxies (for simplicity's sake, mostly referred to as Envoys in Istio-speak) are multitalented. The Envoy proxy is the only component of the setup written in C++; compared with a solution in Go, the Istio developers expect performance advantages, although Go is more in keeping with the zeitgeist. To gain access to all the traffic of the container in whose pod they are rolled out, the Envoys rely on netfilter rules in the pod's network namespace that redirect this traffic through the proxy.

Initially, the Envoy proxies do not change this traffic at all; they just measure it. Acquiring telemetry data is absolutely necessary for Istio to function, because without a usable numerical basis, it would be impossible to balance load according to actual access patterns or to add instances to, or remove them from, existing load balancers as required.
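The idea of a man-in-the-middle that measures without modifying can be shown in miniature. This Python sketch wraps a hypothetical workload function (shop_frontend) in an equally hypothetical TelemetrySidecar that counts responses by status code and sums latency; a real Envoy intercepts traffic at the network level instead of wrapping function calls.

```python
import time
from collections import Counter


class TelemetrySidecar:
    """Toy man-in-the-middle: passes requests through unchanged and records metrics."""

    def __init__(self, handler):
        self._handler = handler
        self.responses = Counter()  # number of responses per HTTP status code
        self.total_latency = 0.0    # seconds spent inside the workload

    def __call__(self, request):
        start = time.perf_counter()
        status, body = self._handler(request)  # forward the request untouched
        self.total_latency += time.perf_counter() - start
        self.responses[status] += 1
        return status, body                    # return the response untouched


def shop_frontend(request):
    # Hypothetical workload: one broken path, everything else succeeds
    if request == "/broken":
        return 500, "error"
    return 200, f"page for {request}"


proxied = TelemetrySidecar(shop_frontend)
for path in ["/", "/cart", "/broken"]:
    proxied(path)
print(dict(proxied.responses))  # {200: 2, 500: 1}
```

Counts like these are the raw material from which load-aware decisions can later be derived.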

Envoys do not handle these tasks directly. Instead, this is where the control plane comes into play – more specifically, a service that is, in a way, the brain of an Istio installation: Mixer.

Mixer

A great deal of intelligence is built into Mixer. On the basis of the metric data arriving from the various Envoy instances in the cluster, Mixer calculates the current load on the system and the resulting rules for the entire service mesh. It then forwards this set of rules to all running Envoy instances, which in turn convert it into a concrete system configuration on the hosts.

Of course, this also means that Mixer is what enables Istio's platform independence: Thanks to this generic API, Istio can be connected to any conceivable fleet manager for containers. At the same time, Istio provides a multitude of functions for container applications that developers would otherwise have to integrate into their applications. For example, if you want to charge for the services provided by the current containers, you can connect Mixer to your own billing system through a service back end and achieve a stable, defined billing API in no time at all.

Pilot

Mixer is not the only service in Istio that provides Envoys with rules. Pilot, which also belongs to Istio's control plane, plays an equally important role. Pilot is a generic API whose first task is service discovery. Like Mixer, Pilot is an abstraction that connects to the APIs of various container orchestrators (e.g., Kubernetes, Mesos, or Cloud Foundry) and forwards the information obtained in this way to the individual Envoys.

Platform-specific adapters take care of this conversion. In this way, all Envoy instances can always keep track of all container instances and are thus able to distribute the load optimally within the mesh.
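The adapter idea can be sketched as a set of small conversion functions that map platform-specific records onto one neutral endpoint type. The record layouts are assumptions: the Kubernetes one uses the real pod fields metadata.labels and status.podIP in heavily simplified form, while the second platform's flat format is invented purely for illustration and does not correspond to Mesos or Cloud Foundry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """Platform-neutral view of one service instance, as the proxies would consume it."""
    service: str
    address: str
    version: str


def from_kubernetes(pod):
    # Simplified pod object; uses the app/version label convention common in Istio setups
    labels = pod["metadata"]["labels"]
    return Endpoint(labels["app"], pod["status"]["podIP"], labels.get("version", "unversioned"))


def from_other_platform(task):
    # Hypothetical flat record from some other orchestrator (format invented for illustration)
    return Endpoint(task["name"], f'{task["host"]}:{task["port"]}', task.get("tag", "unversioned"))


ADAPTERS = {"kubernetes": from_kubernetes, "other": from_other_platform}


def normalize(platform, records):
    """Convert platform-specific records into Endpoints via the matching adapter."""
    adapter = ADAPTERS[platform]
    return [adapter(record) for record in records]


k8s_pods = [{"metadata": {"labels": {"app": "mailer", "version": "v1"}},
             "status": {"podIP": "10.0.3.17"}}]
other_tasks = [{"name": "mailer", "host": "192.168.1.5", "port": 31000}]
endpoints = normalize("kubernetes", k8s_pods) + normalize("other", other_tasks)
for endpoint in endpoints:
    print(endpoint)
```

Everything downstream of normalize works only with Endpoint objects, so supporting another orchestrator means writing one more adapter function.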

The Pilot API performs another task: It is the interface Istio provides for defining explicit rules directly. If you read through the Istio documentation, you might easily conclude that Istio takes care of everything by itself and automatically turns a jungle of connections into a well-tuned orchestra. However, you still have to tell Istio how you want things to be, and that is what Istio's rule API is for.

Security

The control plane in Istio contains a third component: Istio Auth is a complete authentication solution for establishing granular access and security rules between the instances of a mesh and between the mesh and the outside world. Istio Auth ensures, out of the box, that the entire communication of the mesh, whether internal or external, is TLS-encrypted.

This function, too, is implemented by the Envoys, which receive the required details from Istio Auth and configure themselves accordingly. Istio Auth supports granular access models that leave nothing to be desired.
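Once every connection is authenticated via mutual TLS, each sidecar knows the verified identity of its peer, so a granular access rule essentially becomes a lookup. The following deny-by-default check is a hypothetical sketch; the service names and the ALLOWED_CALLS table are invented and do not reflect Istio Auth's actual configuration format.

```python
# Hypothetical allow list: (calling service, called service) pairs permitted inside the mesh
ALLOWED_CALLS = {
    ("shop-frontend", "order-queue"),
    ("order-queue", "mail-generator"),
    ("mail-generator", "mail-dispatcher"),
}


def is_allowed(source, destination, peer_verified):
    """Deny by default: the caller's identity must be verified and the pair explicitly allowed."""
    if not peer_verified:  # e.g., no valid client certificate in the TLS handshake
        return False
    return (source, destination) in ALLOWED_CALLS


print(is_allowed("shop-frontend", "order-queue", peer_verified=True))      # True
print(is_allowed("shop-frontend", "mail-dispatcher", peer_verified=True))  # False
```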

Is the Complexity Worth It?

If you look at Istio in detail, you will initially be confronted with considerable complexity. The question is whether Istio's feature set genuinely justifies it. Couldn't comparable results also be achieved with on-board resources? Which functions does Istio actually provide? A simple example illustrates why Istio is particularly valuable in the context of container fleets.

Imagine a simple web application for a business that is buzzing and generating revenue, but Firefox users are constantly complaining about problems in the presentation of the web store. In the meantime, the DevOps team has found the root of this evil but would like to test a fixed version of the application with Firefox users before rolling it out to everyone (i.e., a canary release).

How would this work in the example? Clearly, the application's load balancer has to distribute requests accordingly when the canary version of the application is rolled out to Firefox users. Without Istio, however, this would be very complex from the developer's point of view. First, it is not so easy to set up a load balancer in Kubernetes out of the box; in the worst case, it runs as part of the container fleet itself.

Additionally, the load balancer would have to be reconfigured to apply a set of rules to the HTTP User-Agent header, which is what ultimately identifies the browser; otherwise, it cannot distinguish between the various browser types. If you build all of this, you end up with a complex construct of rules and services that is specific to just this one software solution.

Traffic Management

If you look at the same example with Istio, the advantages are immediately evident. The central motivation for admins to use Istio is that it handles all the traffic within the service mesh of a microarchitecture application more or less autonomously, strictly decoupling the flow of traffic from the scaling of the virtual container environment.

In the load balancer example, this means that the admin does not define in detail which pods of an application receive which calls from which browsers, but only specifies the desired behavior of the load balancer as a whole (e.g., all requests whose user agent identifies Google Chrome end up on version 1.0 pods of application X, and all requests from Firefox land on version 1.1 pods of application X). That version 1.0 runs on pods 1 through 5 of the application and version 1.1 on pods 6 and 7 does not matter from Istio's point of view.

If you were to launch additional 1.0 or 1.1 pods, the Envoy proxies would automatically integrate them into the existing balancing rules. Istio simply relies on the version number you have specified in the corresponding label when starting the pod, resulting in no additional overhead on your end.
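The behavior described in the last two paragraphs, routing by User-Agent to a version label and picking up new pods automatically, can be modeled in a few lines. This Python sketch is a toy, not Istio's implementation: the rule table, the LabelRouter class, and the pod names are hypothetical, and the pod layout mirrors the example in the text (version 1.0 on pods 1 through 5, version 1.1 on pods 6 and 7).

```python
import re
from collections import defaultdict
from itertools import cycle

# Rules in order of precedence: (pattern searched in the User-Agent header, version label)
RULES = [
    (re.compile(r"Firefox/"), "1.1"),
    (re.compile(r"Chrome/"), "1.0"),
]
DEFAULT_VERSION = "1.0"


class LabelRouter:
    """Toy router: choose a version from the User-Agent, then rotate over pods with that label."""

    def __init__(self):
        self._pods = defaultdict(list)  # version label -> pod names
        self._rotation = {}             # version label -> round-robin iterator

    def add_pod(self, name, version):
        self._pods[version].append(name)
        # Rebuild the rotation so the new pod is included (restarts at the first pod)
        self._rotation[version] = cycle(list(self._pods[version]))

    def route(self, user_agent):
        version = next((v for rx, v in RULES if rx.search(user_agent)), DEFAULT_VERSION)
        if version not in self._rotation:
            raise LookupError(f"no pods labeled version={version}")
        return version, next(self._rotation[version])


router = LabelRouter()
for i in range(1, 6):
    router.add_pod(f"pod-{i}", "1.0")  # pods 1 through 5 run version 1.0
for i in (6, 7):
    router.add_pod(f"pod-{i}", "1.1")  # pods 6 and 7 run version 1.1

firefox = "Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0"
print([router.route(firefox)[1] for _ in range(3)])  # ['pod-6', 'pod-7', 'pod-6']

router.add_pod("pod-8", "1.1")  # a newly launched 1.1 pod joins the rotation automatically
print([router.route(firefox)[1] for _ in range(3)])  # ['pod-6', 'pod-7', 'pod-8']
```

The rules mention only version labels, never individual pods, which is why adding pod-8 required no rule change.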

Not much is needed to implement the example described above: A YAML file that contains the corresponding rules and is imported into Istio with the istioctl command (current releases use kubectl for this) is all it takes. Examples can be found in the Istio documentation.
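For orientation, the following Python snippet builds the two resources such a rule set consists of in current Istio releases, a DestinationRule that defines version subsets by pod label and a VirtualService that sends Firefox traffic to the 1.1 subset, and prints them as JSON. It assumes the networking.istio.io/v1alpha3 API (early Istio releases used an older RouteRule format) and a hypothetical service named webshop; check the field names against the documentation for your Istio version.

```python
import json

SERVICE = "webshop"  # hypothetical Kubernetes service name for application X

destination_rule = {
    "apiVersion": "networking.istio.io/v1alpha3",
    "kind": "DestinationRule",
    "metadata": {"name": SERVICE},
    "spec": {
        "host": SERVICE,
        # Subsets select pods purely by their version label
        "subsets": [
            {"name": "v1-0", "labels": {"version": "1.0"}},
            {"name": "v1-1", "labels": {"version": "1.1"}},
        ],
    },
}

virtual_service = {
    "apiVersion": "networking.istio.io/v1alpha3",
    "kind": "VirtualService",
    "metadata": {"name": SERVICE},
    "spec": {
        "hosts": [SERVICE],
        "http": [
            {   # Firefox users get the canary version 1.1
                "match": [{"headers": {"user-agent": {"regex": ".*Firefox.*"}}}],
                "route": [{"destination": {"host": SERVICE, "subset": "v1-1"}}],
            },
            {   # everyone else stays on version 1.0
                "route": [{"destination": {"host": SERVICE, "subset": "v1-0"}}],
            },
        ],
    },
}

# Wrap both resources in a List so they can be applied together
manifest = {"apiVersion": "v1", "kind": "List", "items": [destination_rule, virtual_service]}
print(json.dumps(manifest, indent=2))
```

The printed output can be saved to a file and applied with kubectl apply -f, which reads JSON as well as YAML.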

Even More Possibilities

In this article, I can only hope to provide a brief overview of the Istio feature set. Stability is clearly the guiding principle behind all of Istio's functions. Load balancing is only the most obvious example, but far from the only one.

For example, Istio has built-in health checks for all pods successfully identified by Pilot. Istio monitors these back ends regularly. If a back end fails, Envoy proxies usually react immediately and remove the defective back end from the distribution. If something unexpected goes wrong and a connection is lost, Istio detects this, too, and the responsible Envoy proxy instantly rewires the connection. Of course, this only applies if you have not set Retry as the default tactic in the Istio configuration, thus forcing a different behavior.
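Passive health checking of this kind can be sketched as a consecutive-failure counter. The OutlierDetector class below is a hypothetical illustration; Istio exposes a comparable mechanism through the outlierDetection settings of a DestinationRule, where ejected hosts return to the pool after a configurable time, whereas this sketch ejects them permanently.

```python
class OutlierDetector:
    """Toy passive health check: eject a back end after too many consecutive failures."""

    def __init__(self, backends, consecutive_errors=3):
        self.healthy = list(backends)
        self.consecutive_errors = consecutive_errors
        self._failures = {backend: 0 for backend in self.healthy}

    def report(self, backend, ok):
        """Record the outcome of one request to `backend`."""
        if ok:
            self._failures[backend] = 0  # any success resets the streak
            return
        self._failures[backend] += 1
        if self._failures[backend] >= self.consecutive_errors and backend in self.healthy:
            self.healthy.remove(backend)  # stop distributing traffic to it


detector = OutlierDetector(["pod-1", "pod-2", "pod-3"], consecutive_errors=2)
detector.report("pod-2", ok=False)
detector.report("pod-2", ok=False)  # second failure in a row: pod-2 is ejected
detector.report("pod-3", ok=False)
detector.report("pod-3", ok=True)   # the success resets pod-3's streak
print(detector.healthy)  # ['pod-1', 'pod-3']
```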

If you want to test whether your container application reacts to errors as desired, Istio offers fault injection. Istio then adopts the role of a Chaos Monkey and deliberately disrupts the traffic to adjacent pods, for example, by delaying requests or aborting them with HTTP error codes. This capability is enormously helpful, because an application's error handling can be tested in a staging environment without risking problems in real operation.
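Istio's fault injection lets a routing rule delay or abort a configurable percentage of requests. The following FaultInjector is a hypothetical Python stand-in for that behavior, not Istio's API; the seed parameter exists only to make test runs reproducible.

```python
import random
import time


class FaultInjector:
    """Toy fault injection: delay and/or abort a percentage of requests before the handler."""

    def __init__(self, handler, abort_percent=0.0, abort_status=503,
                 delay_percent=0.0, delay_seconds=0.0, seed=None):
        self.handler = handler
        self.abort_percent = abort_percent
        self.abort_status = abort_status
        self.delay_percent = delay_percent
        self.delay_seconds = delay_seconds
        self._rng = random.Random(seed)  # a fixed seed makes test runs reproducible

    def __call__(self, request):
        if self._rng.random() * 100 < self.delay_percent:
            time.sleep(self.delay_seconds)              # simulate a slow upstream
        if self._rng.random() * 100 < self.abort_percent:
            return self.abort_status, "fault injected"  # request never reaches the handler
        return self.handler(request)


def checkout(request):
    # Hypothetical healthy service under test
    return 200, "order accepted"


always_fails = FaultInjector(checkout, abort_percent=100)
print(always_fails("/checkout"))  # (503, 'fault injected')
```

Pointing a staging client at always_fails shows directly whether it retries, degrades gracefully, or crashes.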

If everything goes haywire, Istio also offers a circuit breaker function, which is a kind of throttle that takes effect when specific parameters (e.g., the number of incoming connections) exceed values you can set yourself.
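A connection-limiting circuit breaker essentially reduces to a counter of requests in flight. This Python sketch is illustrative only; in Istio, the equivalent limits are set in the connectionPool section of a DestinationRule (for example, tcp.maxConnections), and the class name and the 503 "overflow" response here are assumptions.

```python
import threading


class CircuitBreaker:
    """Toy connection limit: reject new requests while max_connections are already in flight."""

    def __init__(self, handler, max_connections):
        self.handler = handler
        self.max_connections = max_connections
        self._in_flight = 0
        self._lock = threading.Lock()

    def __call__(self, request):
        with self._lock:
            if self._in_flight >= self.max_connections:
                return 503, "overflow"  # fail fast instead of queuing more work
            self._in_flight += 1
        try:
            return self.handler(request)
        finally:
            with self._lock:
                self._in_flight -= 1


ok = CircuitBreaker(lambda request: (200, "queued"), max_connections=1)
print(ok("/order"))  # (200, 'queued')


def nested_call(request):
    # Hypothetical handler that calls the same service again while its own request is in flight
    return limited(request + "/inner")


limited = CircuitBreaker(nested_call, max_connections=1)
print(limited("/order"))  # (503, 'overflow'): the inner call exceeds the limit
```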

Because every pod takes part in the dynamic routing of traffic as part of the mesh network, the circuit breaker feature gives Istio-based meshes good protection against bad guys. Such a setup might not survive a massive traffic bomb without damage, but the script kiddies will definitely have more difficulty finding attack vectors.

Finally, don't forget Istio's policy-based options: A central API lets you filter out certain traffic or direct it in an orderly manner.

Conclusions

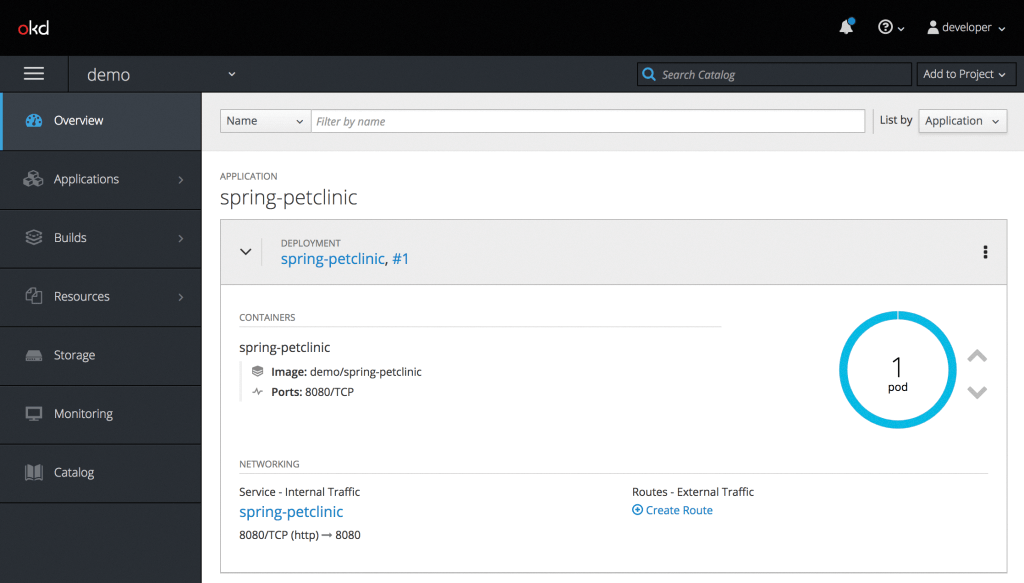

For container applications, automatic traffic rerouting offers massive advantages in many respects. First, Istio offers open and well-documented APIs that make Istio completely independent of a particular platform (Figures 2 and 3). By relying on Istio, you can use the same interface – as part of a development or DevOps team – whether the setup runs on Mesos, Kubernetes, AWS, or something else.

The same principle applies in the opposite direction. It does not matter where an Istio instance gets its information from: Within the environment, all data can always be accessed via the same APIs. If you connect an Istio cluster to Prometheus to improve the statistical basis for assessing network health, access to this data follows the same procedures as for any other data.
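As an example of such uniform access, Prometheus answers queries through its HTTP API at /api/v1/query. The sketch below only builds the request URL; the in-cluster address prometheus.istio-system:9090 is an assumption about a typical installation, and istio_requests_total is one of Istio's standard metrics.

```python
from urllib.parse import urlencode

# Assumed in-cluster address of the Prometheus add-on; adjust to your installation
PROMETHEUS = "http://prometheus.istio-system:9090"


def instant_query_url(promql):
    """Build a Prometheus HTTP API instant-query URL: GET /api/v1/query?query=<PromQL>."""
    return f"{PROMETHEUS}/api/v1/query?{urlencode({'query': promql})}"


# Per-service request rate over the last five minutes, based on Istio's standard metric
url = instant_query_url("sum(rate(istio_requests_total[5m])) by (destination_service)")
print(url)
```

Fetching the URL (e.g., with urllib.request.urlopen) from inside the cluster returns the result as a JSON document.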

At the same time, Istio offers functions for routing traffic within the mesh that would otherwise be difficult to implement, and then only as a solution specific to one setup rather than a generic one. Anyone planning and implementing an application based on the microarchitecture approach will therefore want to take a closer look at Istio and include it in their own planning. It is virtually impossible to achieve greater flexibility in network matters.

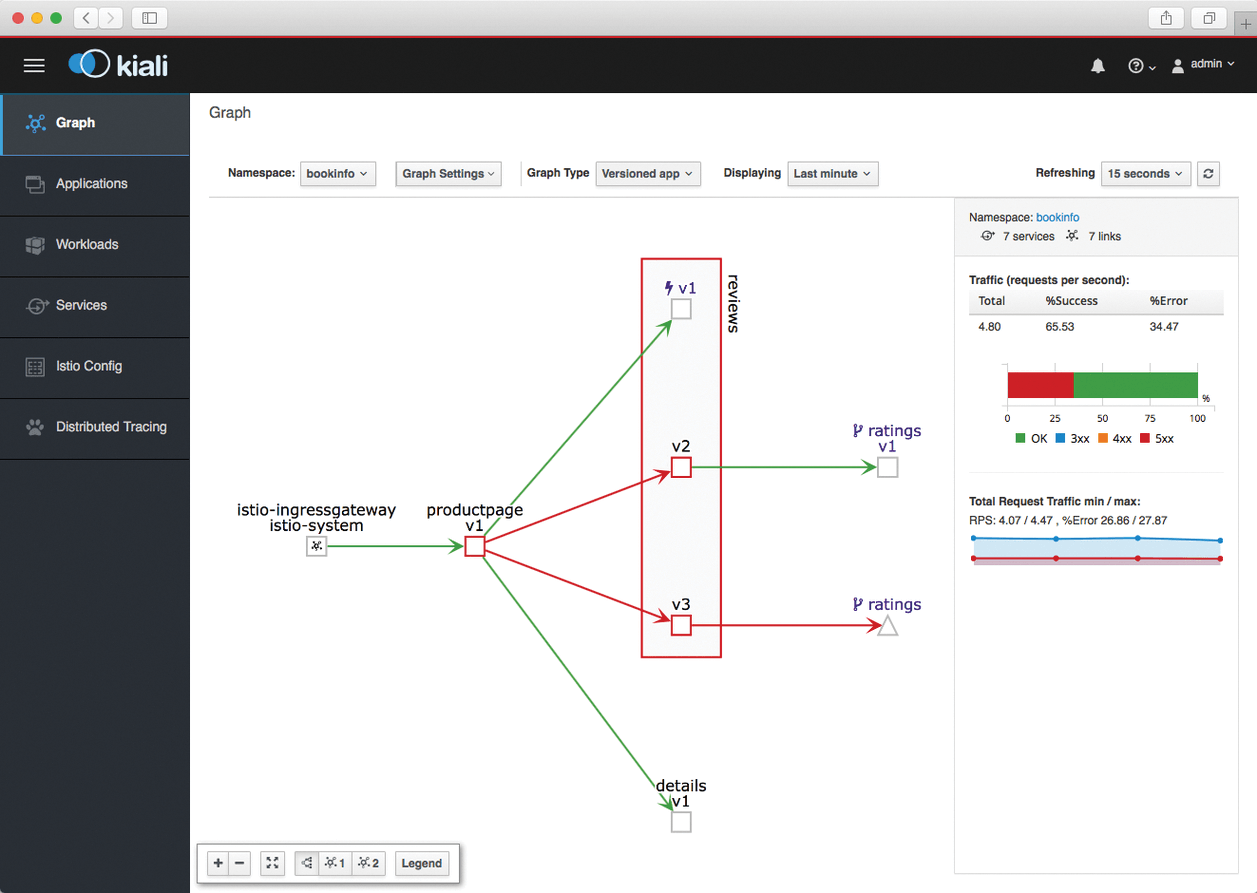

By the way, Red Hat, which has a keen sense of trends, has once again shown that Istio is not a flash in the pan: It is now offering Kiali for Istio [2]. Kiali visualizes Istio's mesh rules, providing a graphically appealing view of the mesh (Figure 4).