A buyer's guide to NVMe-based storage

Speedster

Nonvolatile Memory Express (NVMe)-based storage solutions are currently the fastest way to move data from storage to the processor, which lets you take full advantage of the latest generation of multicore CPUs and GPUs that depend on massively parallel processing. NVMe can accelerate databases and large-scale virtual machine (VM) farms, unlocking greater performance density along with the advantages of shared storage.

The result is a significant performance advantage for demanding applications that were slowed down by previous storage technologies. The added speed helps ensure that website content loads before the viewer leaves. In complex financial transactions, a difference of microseconds can determine whether large sums are gained or lost. NVMe is gaining importance in all business scenarios where the speed of data access directly affects revenue.

Fast Interface

NVMe is a next-generation interface protocol for accelerated communication between the processor and flash storage hardware. The interface, which provides faster access to solid-state storage (i.e., nonvolatile mass storage) over the PCI Express (PCIe) bus, was first standardized in 2011. NVMe was initially limited to two ends of the market: consumer devices, especially smartphones, and large enterprise storage solutions. In the meantime, however, NVMe has made it into the mainstream business-to-business market with storage solutions that are also cost effective for small and medium-sized businesses.

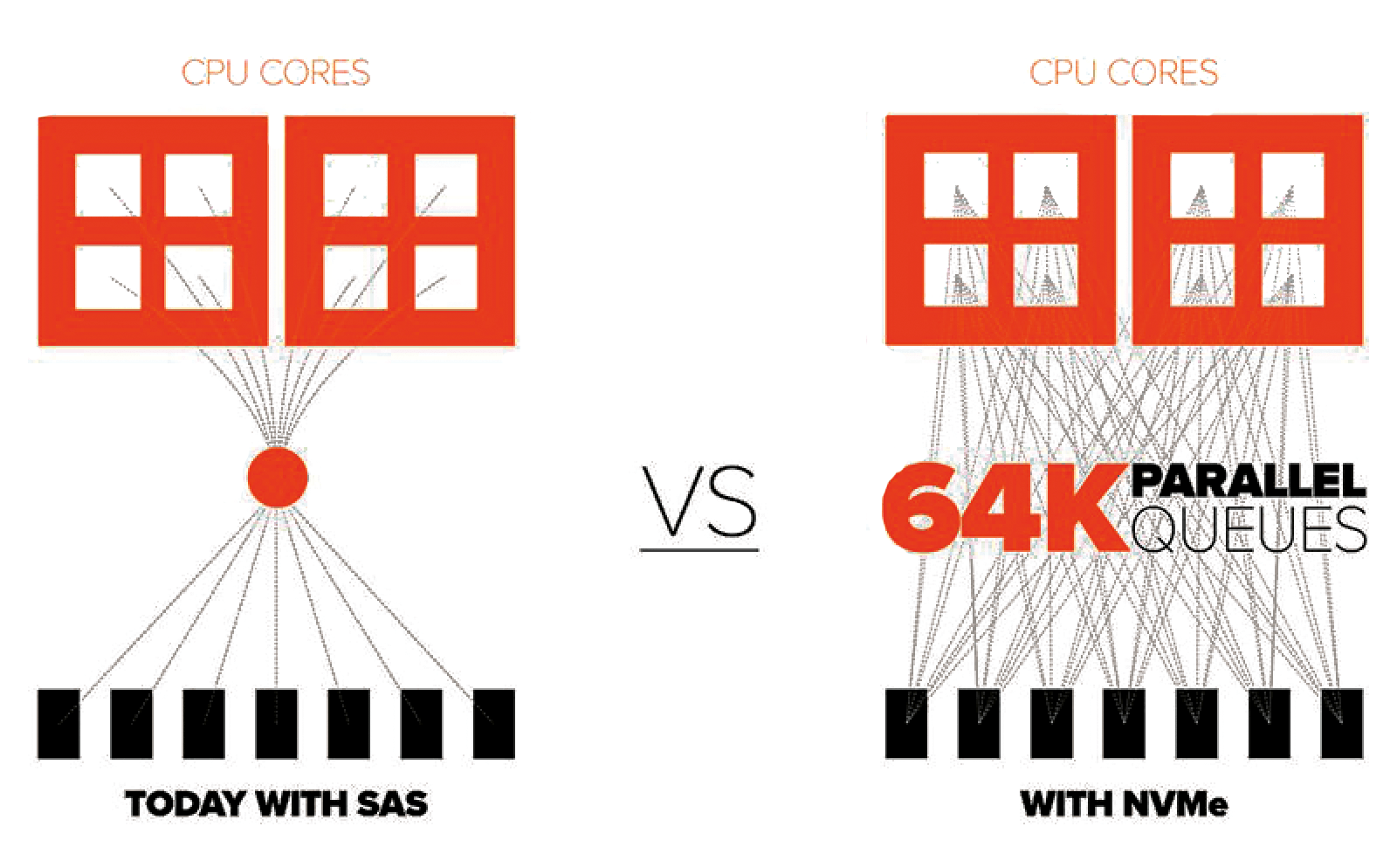

For enterprise flash arrays, NVMe delivers significantly higher performance than the previously predominant serial attached SCSI (SAS) and serial advanced technology attachment (SATA) hard disk interfaces (Figure 1). The reason for the performance leap is that NVMe supports far more parallelism than the traditional SAS storage protocol: up to 64,000 parallel queues with 64,000 commands per queue. This capability enables direct transmission paths to flash memory. The massively parallel processing gained in this way eliminates the bottlenecks previously caused by serial connections.
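To put the queue figures into perspective, the following minimal sketch multiplies queues by commands per queue for each interface. The NVMe values are the rounded figures quoted above; the SATA and SAS values are commonly cited limits and are assumptions that do not come from this article. The results are theoretical ceilings, not throughput you should expect from a real array.

```python
# Queue limits per interface. The NVMe figures are the rounded values quoted
# in the text; the SATA and SAS figures are commonly cited limits and are
# assumptions, not taken from this article.
INTERFACES = {
    "SATA (AHCI)": {"queues": 1, "commands_per_queue": 32},
    "SAS": {"queues": 1, "commands_per_queue": 254},
    "NVMe": {"queues": 64_000, "commands_per_queue": 64_000},
}


def max_outstanding(queues: int, commands_per_queue: int) -> int:
    """Upper bound on the number of commands that can be in flight at once."""
    return queues * commands_per_queue


def outstanding_by_interface() -> dict[str, int]:
    """Theoretical in-flight command ceiling for every interface in the table."""
    return {name: max_outstanding(**limits) for name, limits in INTERFACES.items()}


if __name__ == "__main__":
    for name, total in outstanding_by_interface().items():
        print(f"{name:12} {total:>16,} commands in flight (theoretical maximum)")
```

In practice, hosts typically create far fewer queues, often about one per CPU core, so the point is the headroom for parallelism rather than the absolute number.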

NVMe gives all-flash systems, which are already more efficient and faster in terms of data access compared with conventional hard drives, a further boost. Part of the storage industry is therefore pushing forward the transition to NVMe, with the goal of establishing NVMe as the definitive interface protocol for flash memory.

Ensuring Full NVMe Support

Not all NVMe support is designed in the same way. Some storage systems advertise NVMe support without that necessarily being the case, at least not completely. Most all-flash arrays from established vendors have cache or vault solid-state drives (SSDs) in their controllers, and deploying a single-port NVMe drive there is fairly easy and needs little development overhead. Mass storage media, however, require dual-port and hot-plug functions, which are far more complex and costly to implement. This is what separates the wheat from the chaff: Genuinely NVMe-based all-flash systems offer dual-port, hot-pluggable NVMe modules in the flash array.

Additionally, most of these all-flash arrays will need an expensive forklift upgrade, that is, a complete replacement of the hardware and software plus data migration, to support true NVMe in the future. When choosing a solution that supports NVMe from end to end, enterprises need to pay close attention to the finer technical details, not only within the array, but also regarding support for NVMe over Fabrics, which connects servers to the array with the NVMe protocol.

Software-Controlled NVMe Flash Management

Flash arrays for today's data centers need to leave hard drive era technologies behind, including the flash translation layers in each of the hundreds of SSDs in an all-flash array. To realize its full potential, NVMe must be paired with advanced storage software that manages flash natively and globally within the storage system to achieve lower and more uniform latency.

Complete global flash management is handled by operating software that covers flash allocation, I/O scheduling, garbage collection, and bad block management. Typical flash management functions, which previously ran separately on each SSD, are now combined at the system level for higher performance and better utilization. Thanks to global flash allocation, the raw flash capacity can be fully utilized, and reserving spare capacity separately in every SSD is avoided. Moreover, multiple workloads can be consolidated because common management functions run accurately and in a streamlined way when communication with the flash media is routed entirely via NVMe.

One pragmatic solution is a module with a software-defined architecture to connect a large flash pool directly to the flash array by massively parallel NVMe pipes. Software assumes the tasks of the SSD controller (e.g., flash management, garbage collection, and wear leveling) across the entire flash pool and offers enterprise functions such as deduplication, compression, snapshots, cloning, mirroring, and more. It should be possible to upgrade existing systems seamlessly from SAS-based modules to NVMe-based direct flash communication without changing the enclosures. Moreover, for maximum flexibility, it should be possible to use both types in the same enclosure.
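The idea of managing wear across the entire flash pool, rather than inside each SSD, can be illustrated with a toy allocator. This is a minimal sketch and not any vendor's implementation: The class name `GlobalFlashPool`, its methods, and the block layout are all hypothetical, and real flash management also has to deal with garbage collection, bad blocks, and I/O scheduling.

```python
import heapq


class GlobalFlashPool:
    """Toy allocator that hands out the least-worn free block in the whole pool.

    Hypothetical illustration only; names and structure are assumptions.
    """

    def __init__(self, modules: int, blocks_per_module: int) -> None:
        # Heap entries are (erase_count, module, block); every block starts unworn.
        self._free = [
            (0, module, block)
            for module in range(modules)
            for block in range(blocks_per_module)
        ]
        heapq.heapify(self._free)

    def allocate(self) -> tuple[int, int, int]:
        """Return (erase_count, module, block) for the least-worn free block."""
        if not self._free:
            raise RuntimeError("no free blocks; garbage collection would run here")
        return heapq.heappop(self._free)

    def release(self, erase_count: int, module: int, block: int) -> None:
        """Erase a block and return it to the pool with its wear counter incremented."""
        heapq.heappush(self._free, (erase_count + 1, module, block))


if __name__ == "__main__":
    pool = GlobalFlashPool(modules=2, blocks_per_module=2)
    first = pool.allocate()
    pool.release(*first)          # the block comes back with one more erase cycle
    print(pool.allocate())        # a still-unworn block is preferred over the released one
```

Because the heap spans all modules, a freshly erased block is reused only after every less-worn block in the pool has been handed out, which is the pool-wide behavior that per-SSD controllers cannot provide on their own.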

Enterprise applications benefit significantly from an NVMe-based, software-optimized storage environment. Compared with traditional SSDs, software-driven NVMe flash management enables up to 50 percent lower and more predictable latency, five times higher density, and up to 30 percent more usable capacity. With NVMe, business applications run more efficiently and predictably, which makes an NVMe-based, software-optimized storage environment the option of choice.
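What a latency reduction means for throughput can be estimated with Little's law: At a steady queue depth, sustained I/O operations per second equal the number of commands in flight divided by the time each command takes. The sketch below assumes a hypothetical baseline latency of 200 microseconds and a queue depth of 32; neither value comes from this article, and only the 50 percent reduction reflects the "up to" figure above.

```python
def sustained_iops(outstanding_ios: int, latency_us: float) -> float:
    """Little's law: throughput = commands in flight / time per command."""
    if latency_us <= 0:
        raise ValueError("latency must be positive")
    return outstanding_ios * 1_000_000 / latency_us


if __name__ == "__main__":
    queue_depth = 32                  # hypothetical workload, not a measured value
    baseline_latency_us = 200.0       # hypothetical baseline, not a measured value
    reduced_latency_us = baseline_latency_us * 0.5  # "up to 50 percent lower"
    for label, latency in (
        ("baseline", baseline_latency_us),
        ("reduced", reduced_latency_us),
    ):
        iops = sustained_iops(queue_depth, latency)
        print(f"{label:9} {latency:6.0f} us -> {iops:,.0f} IOPS")
```

Under these assumptions, halving latency doubles the achievable IOPS at the same queue depth. Real workloads rarely hold a constant queue depth, so treat the result as an upper estimate.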

Turbo II – NVMeOF

NVMe over Fabrics (NVMeOF) is another new protocol for speedier server-to-storage connections. NVMeOF extends the NVMe protocol to network fabrics such as Ethernet and Fibre Channel (FC), which enables faster and more efficient connectivity between storage and servers or applications while reducing CPU load on application host servers. NVMeOF drives further data center and network consolidation by enabling both storage area network (SAN) and direct attached storage (DAS) application infrastructure silos to leverage a single, efficient, shared storage infrastructure. This shared storage network reduces access latency and makes better use of the available bandwidth.

The availability of fast networks based on NVMeOF protocols has created a new class of flash storage. Gartner coined the term "shared accelerated storage" for this approach. NVMeOF enables external storage latency comparable to DAS, is significantly more efficient at storage I/O processing than iSCSI, and makes the entire architecture more parallel, eliminating bottlenecks. NVMe and NVMeOF appeal to a wide range of organizations that require data center storage that is scalable, efficient, robust, and easy to deploy and manage.

However, when deciding to implement an NVMeOF solution, bear in mind that NVMeOF is a new technology. Outside of hyperscale environments, it takes time for new technologies to establish themselves. One of the first barriers to adoption is the NVMeOF ecosystem. Currently, many Linux distributions support NVMeOF. Web-scale and cloud-native applications on Linux, such as MongoDB, Cassandra, MariaDB, and Hadoop, are great early adopters that take advantage of NVMeOF on Remote Direct Memory Access over Converged Ethernet (RoCE). VMware has introduced NVMeOF capabilities but has not yet publicly announced when support will be generally available. In addition to support in the operating system, support from the application manufacturer is often required, as well. Nevertheless, it is worth keeping an eye on this cutting-edge technology, because it could become mainstream in the coming years.
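On a Linux host, one quick way to see whether any NVMe controllers are attached over a fabric rather than over local PCIe is to read the `transport` attribute that the kernel's NVMe driver exposes under `/sys/class/nvme`. The following is a minimal sketch: The function names are hypothetical, and the sysfs layout is assumed to match a reasonably recent kernel with the NVMe driver loaded.

```python
from pathlib import Path


def list_nvme_controllers(sysfs_root: str = "/sys/class/nvme") -> dict[str, str]:
    """Map each NVMe controller name (e.g., nvme0) to its transport type."""
    root = Path(sysfs_root)
    controllers: dict[str, str] = {}
    if not root.is_dir():
        return controllers  # NVMe driver not loaded, or not a Linux system
    for ctrl in sorted(root.iterdir()):
        transport_file = ctrl / "transport"
        if transport_file.is_file():
            controllers[ctrl.name] = transport_file.read_text().strip()
    return controllers


def fabric_controllers(controllers: dict[str, str]) -> dict[str, str]:
    """Keep only controllers reached over a fabric rather than local PCIe."""
    return {name: kind for name, kind in controllers.items() if kind != "pcie"}


if __name__ == "__main__":
    found = list_nvme_controllers()
    for name, kind in found.items():
        print(f"{name}: {kind}")
    print(f"{len(fabric_controllers(found))} controller(s) attached over a fabric")
```

Locally attached drives report `pcie`; controllers connected over a fabric report the fabric transport in use, such as `rdma`, `fc`, or `tcp`.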

Future-Proof Storage Technology

Modern storage systems have a typical useful life of about six years, but up to a decade or more if the underlying technology in the system is seamlessly upgradable to newer technologies as they emerge. When implementing a storage system, future-proofing should be the most important criterion in terms of investment protection. Anyone already planning to switch from conventional hard drives to an all-flash storage solution today would thus do well to ensure holistic NVMe support.

NVMe offers tremendous potential benefits in array performance density, whereas NVMeOF delivers faster connectivity. Traditional all-flash arrays that continue to rely on disk-era SAS protocols will never fully realize the potential of flash, which requires new storage architectures developed from the ground up for massively parallel communication. Flash storage based on NVMe, NVMeOF, and the other critical features of shared accelerated storage can pave the way for enterprise transformation. This approach delivers unprecedented speed and flexible scalability for demanding applications and enables greatly simplified and consolidated IT operations with a view to boosting agility in the data center. Therefore, it is essential that the storage provider you choose offers a clear path for seamlessly leveraging NVMe and NVMeOF. Finally, NVMeOF-enabled storage platforms need to support multiple transport protocols, such as RoCEv2, FC, and, in the future, TCP.

Conclusions

Ultimately, the latest innovations in the all-flash storage segment are about lean, powerful, and future-proof data solutions designed to address today's business challenges, reduce data center complexity, and drive innovation across the enterprise. Reducing the number of software layers also contributes to reliability. In the long term, technical application scenarios for new, highly specialized business applications that require even faster communication speeds with memory are bound to arise.