Legally compliant blockchain archiving

All Together Now

A tax-compliant archive, a data warehouse, and an enterprise resource planning (ERP) system largely process the same data, but unfortunately each keeps its own persistent copy. Companies can no longer afford this kind of redundancy. Deepshore provides an alternative concept that uses blockchain as an important component of its implementation and thus adds value that the overall system often lacks.

Superfluity

As if the sheer volume of electronic information were not already challenging enough, virtually all large companies in this country allow themselves the luxury of processing data multiple times and then storing the data redundantly. Even today, the areas of archiving, data warehouses, and ERP are still organizationally and technically isolated. IT departments do not like to show their hand, and setting the isolation of applications in stone does not make it easier to design solutions across these system boundaries.

Approaches to merging all data on a single platform include the many data lake projects of recent years. Such undertakings, however, were rarely driven by the desire to store data centrally and make it usable across the board; rather, they were set up primarily to evaluate the content of the data (e.g., as Google did) and use the knowledge gained in a meaningful way to optimize business processes.

Typically, companies in the past stored quite large amounts of data in a Hadoop cluster and only then began to think about how to extract added value from the dormant treasure trove. In many cases, such projects have not been able to realize their promised benefits, because it is not trivial to master the diversity of platform components in their individual complexity. To avoid this complexity, it is still common today, as it was 15 years ago in the ERP environment, to answer every technical requirement with the same standard system. Instead, it is essential to consider which use case each system or specific component (technology) is actually suitable for.

Complex Data Structures as a Challenge

The partly complex and different data structures within a data lake prove to be a massive challenge, because usually the information, already indexed in the source system, is stored in its natural or raw format without any business context, which of course does not make its subsequent use any easier. Current systems likely are not able to extract the right information automatically from existing raw data and perform intelligent calculations. The attempt to generate significant added value in a classic data lake approach is considered a failure by experts. However, giving up digitization of data would certainly be the worst alternative.

The search for the largest common data denominator was at the forefront of considerations on how to build a meaningful and uniform data persistence in an enterprise that does not run into the typical problems of data lake projects. In the case of electronic information, the specific question was: What are the technical requirements for data persistence? ERP should, for example, execute and control processes and, if necessary, provide operational insights into current process steps. Aggregated reports, evaluations, and forecasts for tactical and strategic business management are to be delivered in a data warehouse (DWH), and the archive should satisfy the compliance requirements of the tax authority or other supervisory authorities. It is common knowledge that the same data is often processed redundantly in each of these systems. This considerable cost factor, which is reflected in the IT infrastructure, does not yet seem to have become a focus of optimization efforts because of technical and organizational separation. Indirectly, it is not only about persistence (i.e., storage), but also about the entire data logistics.

Highest Data Quality in the Archive

To come closer to the goal of a uniform data platform, the question now arises as to which business environment defines the highest data requirements in terms of quality, completeness, and integrity. For example, in the typical aggregations of a data warehouse scenario, completeness at the individual document level is not important. In the area of ERP systems, raw data is frequently converted and stored again when changes are made to the original data. In a correctly implemented archive environment, on the other hand, all relevant raw data is stored completely and unchangeably.

Strangely, an archive, the ugly duckling of the IT infrastructure, normally has the highest data quality and integrity. The requirement to capture, store, and manage a lot of data about a company's processes in a revision-proof manner in accordance with legal deletion deadlines is a matter close to the hearts of very few CEOs and CIOs. Instead, legislation, with the specifications of tax codes and directives for the proper keeping and storage of books, records, and documents in electronic form, as well as for data access, along with a multitude of industry-specific regulations are the causes of an unprecedented flood of data and the need for intelligent storage.

Compliance in the Cloud

Revision security (compliance) is a complicated matter, and a technology and provider segment has developed around certified IT infrastructures that can only be mastered with considerable investments in hardware, expertise, and operation. In this segment, conventional technologies dominate, and a few monopolists with highly certified solutions determine the conditions. Particularly painful: When scaling becomes necessary, the costs for systems, licenses, and project services rise steeply because of the conventional system architecture. However, in this age of permanently exploding data volume and throughput requirements, the need for scaling is not a special case, but the challenging normal state.

Under this pressure, a changing of the guard has long been underway in the enterprise content management (ECM) and enterprise information management (EIM) platforms: The classic ECM systems have already lost the race; innovative cloud and big data systems are beginning to assert themselves and are raising the organization and the possibilities of archive data to a new level, thanks to NoSQL architecture. Scaling in the system core is also much cheaper and more flexible. With the change on the platform side, it becomes even clearer what the real cost driver is in the case of scaling: the storage infrastructure. Switching to cloud storage systems would significantly reduce storage costs because of the great variety of suppliers in this segment. Costs are extremely low thanks to a genuine buyer's market. Scaling is unrestricted and costs grow linearly, with high availability and always-on infrastructure included.

Why do write once, read many (WORM) storage media nevertheless continue to assert themselves in the market? Because, on the one hand, the cloud had no viable compliance concepts up to now and, on the other hand, a storage provider in the cloud cannot simply be changed quickly. The dependence on a cloud provider is even more blatant with large amounts of data than with the use of older WORM media. Unloading data from the cloud once it has been stored and then entrusting it to another provider is simply not part of a cloud provider's business model, although this is certainly not true when data in the tax environment is mentioned. Technically, the customer is on their own and depends on the standard API of the provider. In this context, large-volume migrations can quickly become a nightmare in terms of run times and quality assurance measures.

Blockchain Archiving

Deepshore [1], a German company that develops solutions in the uncharted territory of compliance in distributed and virtual infrastructures, solves the challenges described with blockchain technology and has thus equipped cloud storage with full compliance. The way there is through the establishment of a "Private Permissioned Blockchain," in which hashing and timestamping functions take over the safeguarding of the data. Meanwhile, the data and documents are protected from deletion by storage on a distributed filesystem. Compliance functions and storage locations remain completely separate. No raw data is managed in the blockchain itself, only technical information about the respective document to prove its integrity.
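
To illustrate the separation between raw data and the technical information kept on the chain, the following minimal Python sketch builds the kind of integrity record that could be anchored in a blockchain. The field names and the helper functions `build_integrity_record` and `is_unchanged` are assumptions made for illustration and are not taken from the Deepshore implementation.

```python
import hashlib
from datetime import datetime, timezone


def build_integrity_record(document_id: str, raw_data: bytes) -> dict:
    """Return metadata about a document; the raw bytes are NOT included."""
    return {
        "document_id": document_id,  # hypothetical identifier
        "sha256": hashlib.sha256(raw_data).hexdigest(),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def is_unchanged(record: dict, raw_data: bytes) -> bool:
    """Check stored raw data against the digest in the integrity record."""
    return hashlib.sha256(raw_data).hexdigest() == record["sha256"]


# The document itself would live on the distributed filesystem;
# only this small record would be written to the blockchain.
record = build_integrity_record("invoice-0001", b"invoice content")
```

Because the record holds only a digest and a timestamp, the storage location can change without touching the proof of integrity.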

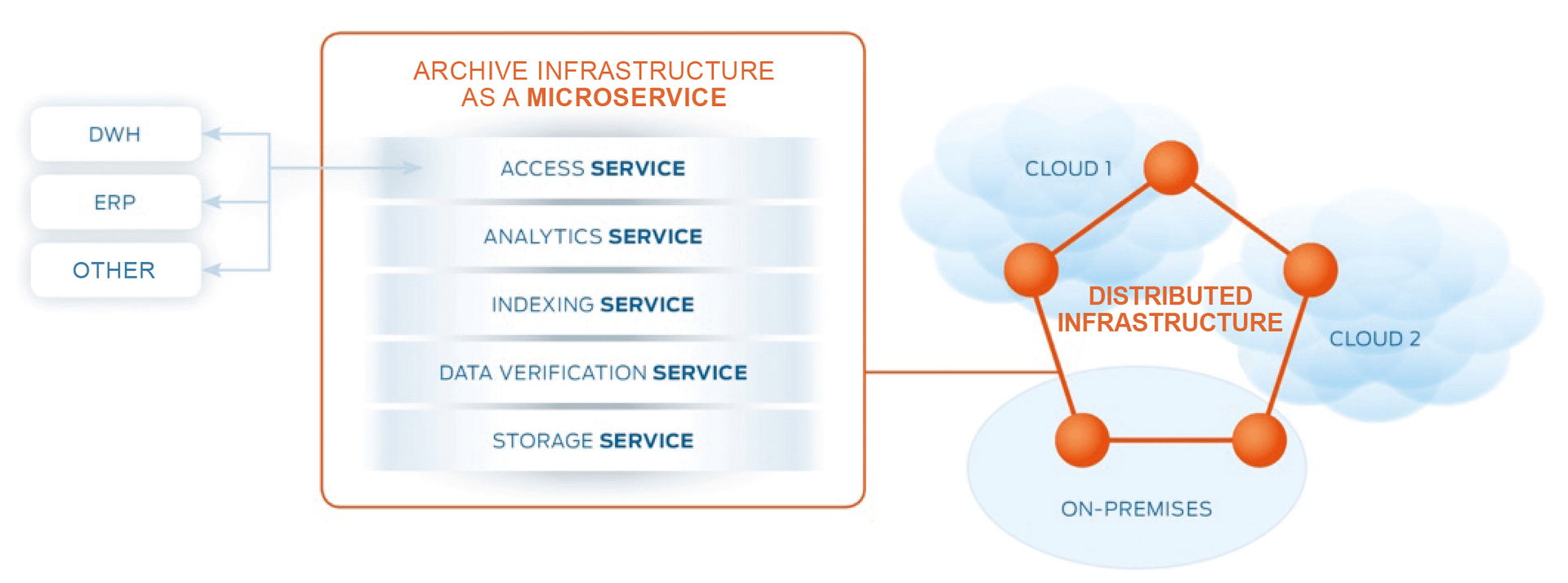

During development, care was taken to use only open source products and no components marketed under commercial licenses. The core of the architecture is the implementation of a microservice infrastructure that meets all the requirements outlined above without becoming too complex (Figure 1). Functions such as managing different storage classes and geolocations on the basis of the cloud infrastructures used are absolutely new. Accordingly, a policy-based implementation of individual sets of rules becomes possible (e.g., domestic storage, limitation on the number of copies in certain georegions, copies on different storage systems).
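
A policy of this kind could be modeled roughly as follows. This is a sketch under stated assumptions: the classes `Replica` and `StoragePolicy`, their fields, and the `violations` function are hypothetical names chosen to mirror the three example rules (domestic storage, a cap on copies per georegion, copies on different storage systems), not the product's actual rule format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    provider: str  # e.g., "azure", "aws", "on-prem" (illustrative values)
    region: str    # e.g., "de", "us" (illustrative values)


@dataclass(frozen=True)
class StoragePolicy:
    allowed_regions: frozenset
    max_copies_per_region: int
    min_distinct_providers: int


def violations(policy: StoragePolicy, replicas: list) -> list:
    """Return a human-readable list of rule violations (empty if compliant)."""
    problems = []
    per_region = {}
    for replica in replicas:
        if replica.region not in policy.allowed_regions:
            problems.append(f"region not allowed: {replica.region}")
        per_region[replica.region] = per_region.get(replica.region, 0) + 1
    for region, count in sorted(per_region.items()):
        if count > policy.max_copies_per_region:
            problems.append(f"too many copies in {region}: {count}")
    providers = {replica.provider for replica in replicas}
    if len(providers) < policy.min_distinct_providers:
        problems.append(f"only {len(providers)} distinct provider(s)")
    return problems


# Domestic storage only, at most two copies per region, two providers.
policy = StoragePolicy(frozenset({"de"}), 2, 2)
replicas = [Replica("azure", "de"), Replica("aws", "de"), Replica("aws", "us")]
print(violations(policy, replicas))
```

Keeping the rules as data rather than code means a set of rules can be swapped per customer or per document class without changing the storage logic.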

Thanks to complete abstraction of the physical storage layer, migrations in distributed cloud storage are easier than ever before; it is sufficient to add new nodes and let the system replicate the database automatically. By using the native distribution mechanisms, the system can replicate its data across multiple cloud providers without running into the legal proof problem of a classic archive migration, which is a novelty. The otherwise usual documentation procedure of a migration is omitted because, technically, the data does not leave the system at any time, even if the underlying infrastructure changes. This setup eliminates the dependence on individual cloud providers, which gives the system a previously nonexistent independence. The infrastructure and each of its components do not care whether they work under Microsoft Azure, Amazon Web Services (AWS), Google Cloud, or on-premises. This independence is achieved through the use of container technology and is a completely new dimension of technical flexibility.

Last but not least, data does not have to go through multiple complicated and complex enterprise application integration (EAI) processes, because applications and clients can speak directly to the new infrastructure. Of course, this applies equally to writing and reading processes and means, for example, that an existing DWH system can potentially make use of any required information from the database.

Technical Details

The system focuses on five main services: Access Service, Analytics Service, Indexing Service, Verification Service, and Storage Service.

Technically, a client never talks to the distributed components themselves, but always to the Access Service. Therefore, an external system or client does not have to worry about the asynchronous processing of the infrastructure behind the Access Service. This service is arbitrarily scalable and executable on different cloud systems, coordinating requests in the distributed system complex and merging the information from the various services. A major advantage of this approach is that applications can submit their data directly to the data service. The use of complex EAI/enterprise service bus (ESB) scenarios becomes superfluous. The Access Service works the same way in the other direction.

Enterprise applications of any kind, then, can use the data pool of the new service flexibly and at will. These potential infrastructure cost savings alone are likely to be significant in large enterprises and large-volume processing scenarios. This service was developed as a technical hybrid of REST and GraphQL in JavaScript. The content parsing of data can easily be realized as a service within the processing routine.

The Analytics Service is not an essential part of the system. Technically, it is Apache Spark, used for more complex computations on Indexing Service data. However, any other (already existing) analytical system that extracts information from the system by way of the Access Service could be used instead. In this respect, the Analytics Service is to be regarded as an optional component, but with the potential to take over tasks from the classic DWH, as well.

The Indexing Service is represented by a NoSQL-like database that uses CockroachDB, although MongoDB and Cassandra were also part of the evaluation. Each database has its own advantages and disadvantages. CockroachDB is a distributed SQL database built on a transactional key-value store. By using the Raft consensus algorithm [2], the system offers a high degree of consistency in the processing of its transactions. A special and newly developed procedure can also be used to ensure the status or consistency of the data within the Verification Service (more on this later).

The data Verification Service is technically a blockchain based on MultiChain. The tamper-proof Verification Service can document the integrity and the original condition of raw data. Interventions in the Storage Service are also stored as audit trails within the blockchain.
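
Why a chained record of interventions is tamper evident can be shown with a small, generic hash chain. The sketch below is plain Python and deliberately does not use the MultiChain API; the entry layout and the functions `append_entry` and `chain_is_intact` are assumptions for illustration only.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder predecessor hash for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(chain: list, event: dict) -> dict:
    """Append an audit event that is bound to the hash of its predecessor."""
    prev_hash = chain[-1]["entry_hash"] if chain else GENESIS
    entry = {
        "prev": prev_hash,
        "event": event,
        "entry_hash": _entry_hash(prev_hash, event),
    }
    chain.append(entry)
    return entry


def chain_is_intact(chain: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if _entry_hash(prev_hash, entry["event"]) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


trail = []
append_entry(trail, {"action": "store", "doc": "invoice-0001"})
append_entry(trail, {"action": "replicate", "doc": "invoice-0001"})
```

A real blockchain adds distribution and consensus on top of this linking, so that no single party can rewrite the chain and recompute the hashes unnoticed.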

Finally, the Storage Service allows raw data to be stored on any persistence layer. As part of cooperative research with the Zuse Institute Berlin, the distributed filesystem XtreemFS was further developed and equipped with different storage adapters and a blockchain link.

Weaknesses of Conventional Archiving

For legal reasons, a compliance archive system should have a complete and unchangeable inventory of all relevant raw data. As already mentioned, the archive marks the most secure and highest quality data storage within a company. However, because of technological limitations, this information has been of very limited use for evaluations or analyses. Classical archive systems are limited to simple search functions based on header data, because data analysis is not their technical focus, which explains the existence of analytics platforms that enable evaluations of subject-specific data.

However, the use of these systems is always caught between cost, speed, and data quality. As a rule, expensive in-memory approaches are only used for a small part of the total data and for a very limited time window. Historical data is often aggregated or outsourced for cost and performance reasons, which reduces the accuracy of analyses over time as information is lost through compression techniques. Common NoSQL stores, on the other hand, provide inexpensive models for high-volume processing but do not guarantee ACID (atomicity, consistency, isolation, and durability) compliance for processing individual records. For analytical use cases, data is usually transferred in a batch-oriented "best-effort" mode, which affects both the real-time processing capability and the quality of the data itself.

Data Processing in the Blockchain Archive

Because historical data is often aggregated or outsourced for cost and performance reasons, which reduces the accuracy of the information over time, one goal in the design of the Deepshore solution was to utilize the entire data depth of the previously stored information for analytical use cases. Cost-optimized NoSQL and MapReduce technologies are used, without sacrificing the depth of information of the original archive-quality documents.

In the course of this process, the outlined vulnerabilities of current approaches are addressed, in which large amounts of data are managed either relationally, in-memory, or in NoSQL stores (i.e., always in the area of conflict between quantity, quality, performance, and costs). The blockchain-based tool is able to resolve this dilemma by methodically combining various services and using the maximum data quality of an archive for analytical purposes, without having to rely on reduced and inaccurate databases or expensive infrastructures.

By extending upstream processing logic, the system can also parse the content of structured data when it is received and write the results to an Indexing Service database. This processing does not necessarily have anything to do with establishing the archive status of stored information, which means that the construction of an index layer can also take place at a later point in time, independent of the revision-proof storage of the raw data.

Most important is that the Indexing Service is built from the raw data in its archived state and not beforehand, because this is the only way to ensure that the extracted information is based on an unchangeable database. To achieve this state, the approach differs from traditional data lake or DWH applications in two major ways. These two paradigms are summarized in the common data environment (CDE) model and are explained below.

First, the Deepshore solution makes it obligatory to transfer data to the Indexing Service in a read-after-write procedure, which ensures that every record has been written correctly before the responsible process assumes that the write is complete. The procedure could therefore look as follows:

- Parse the data.

- Set up the indexing structure.

- Write the structure to the Indexing Service (database).

- Read the indexing structure after completion of the write process.

- Compare the result of the read operation with the input supplied.

- Finish the transaction successfully, if equal; repeat the transaction, if not equal.
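
The six steps above can be sketched in a few lines of Python. The `InMemoryIndex` class is a stand-in for the Indexing Service database, and the toy `parse` function and the `max_attempts` limit are assumptions added for illustration; a real implementation would write to the actual database and apply its own retry policy.

```python
import json


class InMemoryIndex:
    """Hypothetical stand-in for the Indexing Service database."""

    def __init__(self):
        self._rows = {}

    def write(self, key, structure):
        self._rows[key] = json.dumps(structure, sort_keys=True)

    def read(self, key):
        raw = self._rows.get(key)
        return None if raw is None else json.loads(raw)


def parse(raw: str) -> dict:
    """Toy parser for 'field=value;field=value' input (illustrative only)."""
    return dict(field.split("=", 1) for field in raw.split(";") if field)


def index_with_read_after_write(store, key, raw, max_attempts=3) -> dict:
    structure = parse(raw)                # parse and set up the structure
    for _ in range(max_attempts):
        store.write(key, structure)       # write to the Indexing Service
        if store.read(key) == structure:  # read back and compare with input
            return structure              # equal: transaction is finished
        # not equal: fall through and repeat the transaction
    raise RuntimeError(f"read-after-write failed for key {key!r}")


store = InMemoryIndex()
result = index_with_read_after_write(store, "doc-1", "invoice=4711;amount=19.99")
print(result)
```

Bounding the number of repetitions is a design choice of this sketch; it keeps a permanently failing write from blocking the process forever.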

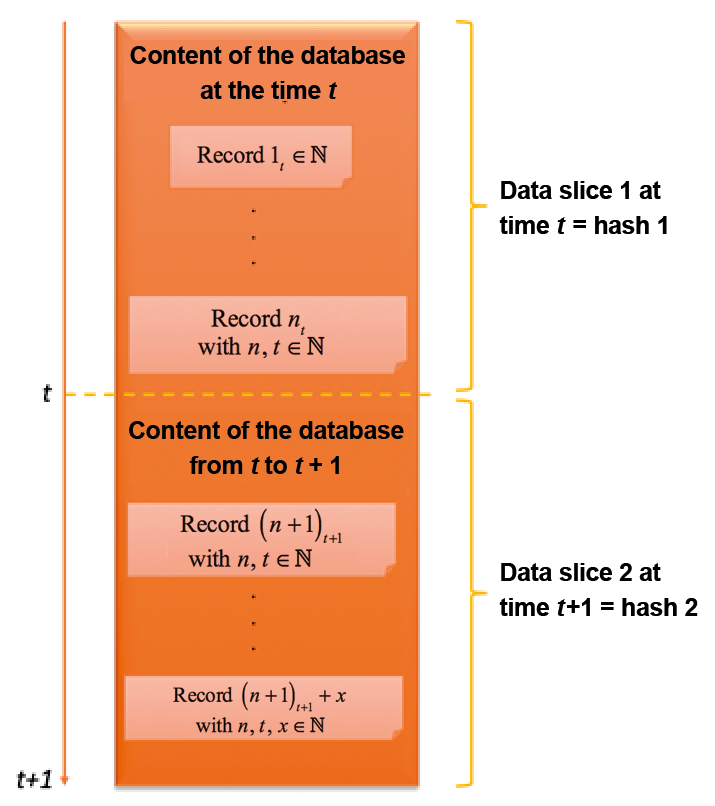

The read-after-write process also establishes a logical link between the raw data in the Storage Service/Verification Service and the Indexing Service. Each archive data record has a primary key that comprises a combination of a raw data hash (SHA256) and a UUID (per document) and is valid for all processes in the system (Figure 2). Thus, it is possible at any time to restore individual data records or even the entire database of the NoSQL store from the existing raw data in the archive store. Accompanying procedures, which cannot be outlined here, ensure that the database is always in a complete and unchangeable state.
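
A key of this kind might be constructed as shown in the following sketch. The article states only that the key combines a SHA256 hash of the raw data with a per-document UUID; the colon separator, the string layout, and the function names are assumptions.

```python
import hashlib
import uuid
from typing import Optional


def make_primary_key(raw_data: bytes, document_uuid: Optional[uuid.UUID] = None) -> str:
    """Combine the SHA-256 digest of the raw data with a per-document UUID."""
    if document_uuid is None:
        document_uuid = uuid.uuid4()  # random UUID for a new document
    digest = hashlib.sha256(raw_data).hexdigest()
    return f"{digest}:{document_uuid}"  # separator is an assumption


def matches_raw_data(primary_key: str, raw_data: bytes) -> bool:
    """Check that the hash part of a key belongs to the given raw data."""
    digest, _, _ = primary_key.partition(":")
    return digest == hashlib.sha256(raw_data).hexdigest()


key = make_primary_key(b"abc", uuid.UUID("12345678-1234-5678-1234-567812345678"))
print(key)
```

The hash part ties an index entry to the exact content of the raw data, whereas the UUID keeps two documents with identical content distinguishable.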

Manipulation Excluded

Once the desired index data has been stored completely and correctly in the Indexing Service, the only question that remains to be answered is how to prevent the database from being manipulated in a targeted or inadvertent manner. The second component of the CDE model serves this purpose. The database is considered at a time t when n records have already been written to the database. Now all n data records at time t are selected, and the result is converted into a hash value. The result is the hash value of all aggregated database entries in data slice 1 at time t. This hash value is stored in a block of the blockchain as a separate transaction and is therefore protected against change.

In the next step, the procedure is repeated, whereby the run-time environment uses a random generator from a defined interval to determine exactly when time t+1 occurs. Thus, t+1 is not predeterminable by a human being. In the next data slice 2 (e.g., from t to t+1), the first new transaction n+1 and x other transactions are written, which are not part of data slice 1 at time t. All new transaction data is then selected, and the result is converted to a new hash value 2, which is also stored in the blockchain (Verification Service).
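
Choosing the next slice boundary at random from a defined interval could look like the following sketch. Using Python's `secrets` module (a cryptographically strong random source) is an assumption, as are the function name and the interval bounds; the article does not say which random generator the run-time environment uses.

```python
import secrets


def next_slice_delay(min_seconds: int, max_seconds: int) -> int:
    """Pick the delay until t+1 uniformly from [min_seconds, max_seconds]."""
    if min_seconds < 0 or max_seconds < min_seconds:
        raise ValueError("expected 0 <= min_seconds <= max_seconds")
    return min_seconds + secrets.randbelow(max_seconds - min_seconds + 1)


# Example: the next data slice closes somewhere between 1 and 5 minutes from now.
delay = next_slice_delay(60, 300)
```

An unpredictable boundary makes it harder to time a manipulation so that it slips in just before a slice is hashed.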

The two hash values now reflect the exact status of the data at time t and of all data added after time t up to time t+1, respectively. The data in the database can now be compared at any time against the truth recorded in the blockchain. The Verification Service provides a secure view of the database. Such a check can also be carried out at random, one data slice at a time, in the background. If the comparison reveals a discrepancy, the database in the Indexing Service can be restored from the raw data of the Storage Service.
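
The slice hashing and the later comparison can be sketched as follows. The canonical JSON serialization, the `key` field used for ordering, and the dictionary that stands in for the hash values anchored in the blockchain are all assumptions made for illustration.

```python
import hashlib
import json


def slice_hash(records: list) -> str:
    """Hash all records of one data slice in a deterministic order."""
    ordered = sorted(records, key=lambda record: record["key"])
    canonical = json.dumps(ordered, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_corrupt_slices(database: dict, anchored: dict) -> list:
    """Return the IDs of slices whose current hash differs from the anchor."""
    return [
        slice_id
        for slice_id, expected in sorted(anchored.items())
        if slice_hash(database.get(slice_id, [])) != expected
    ]


# Slice 1 holds the records up to time t, slice 2 those from t to t+1.
database = {
    1: [{"key": "a", "amount": 10}, {"key": "b", "amount": 20}],
    2: [{"key": "c", "amount": 30}],
}
# Stand-in for the hash values stored in the Verification Service.
anchored = {slice_id: slice_hash(rows) for slice_id, rows in database.items()}
print(find_corrupt_slices(database, anchored))
```

Any slice reported by `find_corrupt_slices` would be a candidate for restoration from the raw data, and because each slice is checked independently, the comparison can run one slice at a time in the background.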

Bottom Line

In times of digitalization, companies can no longer afford to remain at the infrastructural and technical status quo of the 1990s. Silo systems like ERP, DWH, archive, or EAI/ESB not only produce unnecessary costs, they also hinder the efficient processing of company information. In this context, it is of great importance that IT investments do not flow into the conversion of individual systems without a plan, but rather into the strategic alignment of an entire IT infrastructure.

The solution outlined in this article forms the basis of a reorganized IT infrastructure that offers the ability to store distributed data, verifiable by blockchain mechanisms. Every corporate IT system can store information in this data pool and make use of it. The architecture avoids dependence on individual infrastructure providers and thus provides maximum flexibility in the back end.