GitLab for DevOps teams

Assembly Line

Change sometimes progresses rapidly: Just a year ago, Jenkins was the undisputed continuous integration and continuous deployment (CI/CD) king of the hill for the teams at DB Systel GmbH, Germany. Now, some groups are using GitLab CI with growing enthusiasm.

In this article, I highlight why this change is taking place. The best practices described here result from daily work as a network of DevOps experts who support the transformation of groups that are looking to work as DevOps teams in the future or are already doing so. I describe the processes we use that you can also apply to your setup.

CI/CD and SCM

With every new release, an impressive number of new features are introduced to GitLab [1]. The core team has obviously taken up the cause of mapping the entire software life cycle and, in the process, GitLab has become a versatile tool for DevOps teams. From planning to monitoring, the source code management (SCM) software offers a variety of components, the configuration of which varies depending on the edition.

In this article, I refer to the freely available GitLab Community Edition [2]. I also describe scenarios that are particularly relevant for the DB Systel DevOps teams and that have already been successfully implemented with the help of GitLab (i.e., for CI/CD, security, and compliance).

GitLab CI is a GitLab Community Edition component, and thus open source. In particular, GitLab integration boosts the user experience compared with the use of several standalone applications, thus reducing the amount of training required.

Thanks to GitLab, the DevOps team uses container images for each step in the pipeline, which accelerates delivery times. The use of clearly defined and versioned containers allows for pipelines with largely decoupled steps. Dedicated containers for building, testing, and delivering applications can be quickly integrated into other pipelines and used across team boundaries. Each container covers one step in the pipeline.

The basis of every CI/CD pipeline is a source code repository. En route toward GitOps [3] and the associated "everything as code" paradigm, no DevOps team can do without version management. GitLab fully covers the requirements with Git integration and associated features, such as code reviews, comment functions, and web GUIs that allow editing directly in the browser.

Agile Methods

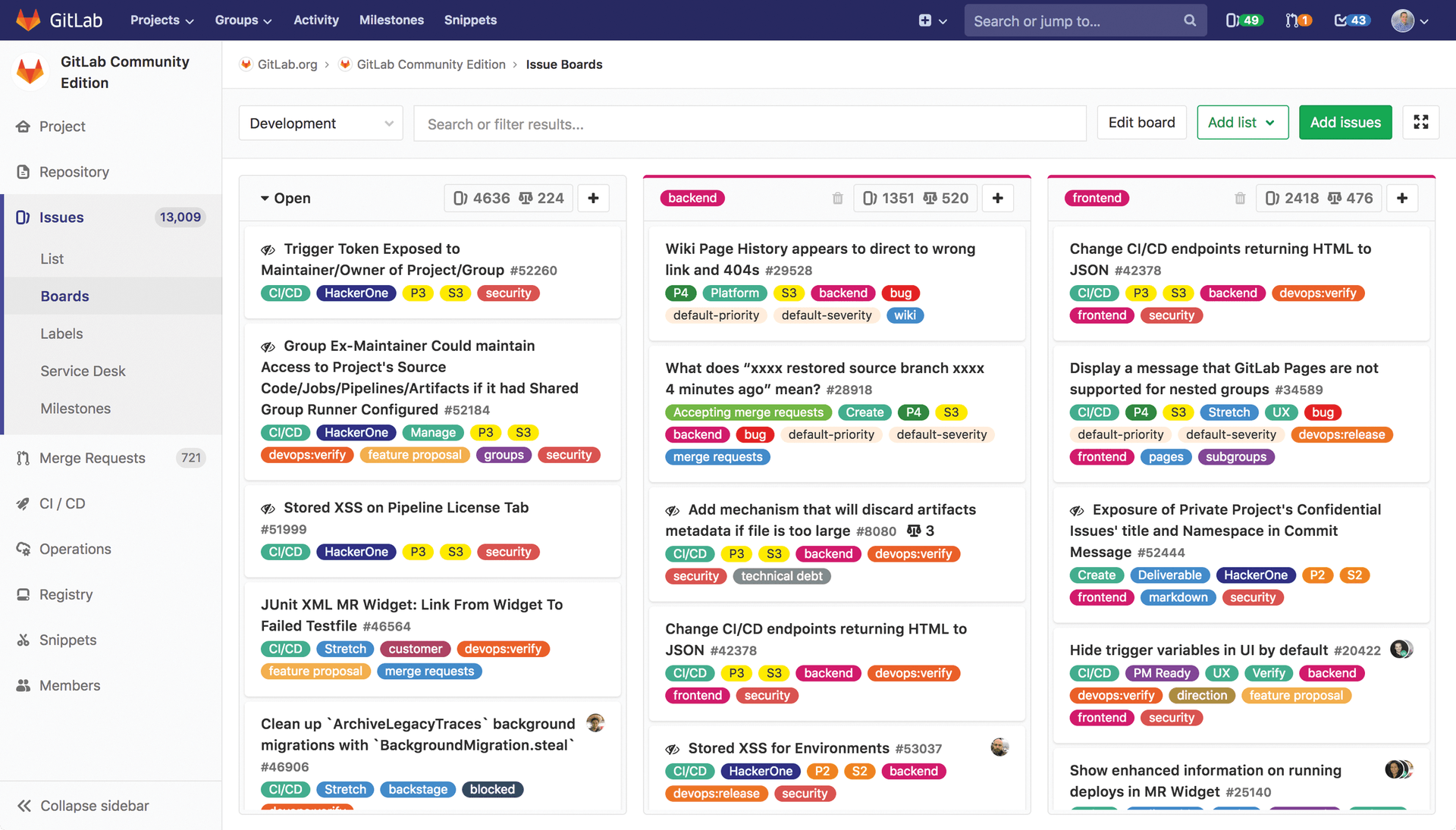

The modules in GitLab (e.g., the issue tracker and the issue boards; Figure 1) currently support all common methods of agile software development from Scrum, through Kanban, to SAFe. The GitLab blog contains a post on the subject of GitLab for agile software development [4] that provides a useful overview of how teams can map agile processes to GitLab features.

However, not all modules are in the Community Edition, so it is often worth taking a look at the existing interfaces to third-party software. Our teams use Jira integration, among others. If a developer adds the Jira issue ID to a merge request, it automatically ends up as a comment in Jira.

At the same time, GitLab inserts a link to the commit through the Jira interface, thus ensuring easy navigation between the two systems. If the developer enters Closes <Jira-Issue-ID> in the description field of the merge request, Jira will close the corresponding issue after the merge in GitLab – but not without leaving a reference to the corresponding commit.

Several of these commands can also be combined in one message. These smart commit commands [5] add a comment, change the issue status, and log time simultaneously.

GitLab Runner

One basic building block of the GitLab CI architecture is the GitLab Runner [6]. Following the pull principle, a Runner polls the GitLab server via an API, executes the declared jobs, and sends the results back to the GitLab server.

Runners run in different environments, but our teams prefer Docker and Kubernetes, because they fit seamlessly into our target vision of a "shared nothing architecture." By combining different Runners and environments, we map pipeline security and compliance requirements. On this basis, a dedicated team provides Continuous Delivery as a Service (CDaaS) for the integration of GitLab CI into Amazon Web Services and OpenShift. With the use of parameterized deployment templates, we configure the Runners and roll them out on the corresponding platforms.

By tagging Runners and declaring them in the pipeline job, we can assign them to different environments. The configuration itself is created in TOML format [7]. The Runner documentation [8] provides a comprehensive overview of the available parameters.
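As a minimal sketch of how a job is pinned to tagged Runners, the fragment below declares tags in a job. The job name, the tag names `aws` and `docker`, and the `./deploy.sh` script are hypothetical and only serve as illustration.

```yaml
# .gitlab-ci.yml (fragment)
deploy-aws:
  stage: deploy
  # Only Runners that carry ALL of the listed tags pick up this job.
  tags:
    - aws      # hypothetical tag for Runners in the AWS environment
    - docker   # hypothetical tag for Runners using the Docker executor
  script:
    - ./deploy.sh   # hypothetical deployment script in the repository
```

A job without a `tags` entry can only be picked up by Runners that are allowed to run untagged jobs.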

Runners can be registered in three variants: shared, specific, or group. In contrast to the specific variant, shared Runners are suitable for projects with identical requirements. Group Runners offer all the projects in a group access to the defined Runners. And all Runner types support the Protected option. If this is set, they only execute pipeline jobs declared in protected branches or tags.

Look Ma, No Scripting!

The CI/CD pipeline is configured declaratively in a YAML file, and we only define the "what"; GitLab CI takes care of the "how" [9].

Jobs form the top-level object of a pipeline definition and have to contain at least the script parameter. Caution is required at this point: The possibilities offered by a script statement quickly lead one to forget the declarative approach and implement pipeline logic. The "what" then becomes "how."
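A minimal sketch of a pipeline that stays declarative could look like the following. The job names and the `make` targets are assumptions; the point is that each script entry is a single call, with the actual logic living in the build tooling rather than in the pipeline file.

```yaml
# .gitlab-ci.yml (fragment)
stages:
  - build
  - test

build-app:
  stage: build
  script:
    - make build   # assumes a Makefile with a "build" target

test-app:
  stage: test
  script:
    - make test    # assumes a Makefile with a "test" target
```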

To counteract this, our parent company is currently establishing a centrally maintained container library for CI/CD as an inner source that is available to all DB Systel employees. This library serves as the basis for language-specific builder images, ideally based on lightweight base images such as Alpine Linux [10]. All required certificates, environment variables, binaries, and tools for building the application are contained in the images themselves. Helper images, which come with routines for computing a new software version, for example, are also part of the container library.

Docker's approach of running one process per container allows these images to be easily linked in the job definition. The GitLab Runner then automatically starts the respective container and removes it as soon as it has completed the pipeline step.
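The following fragment sketches how one versioned image per job might be declared. The registry host, image names, version tags, and the `next-version` helper command are all hypothetical; they stand in for whatever a container library of this kind provides.

```yaml
# .gitlab-ci.yml (fragment)
stages:
  - prepare
  - build

compute-version:
  stage: prepare
  # Hypothetical helper image that ships a version-computing routine.
  image: registry.example.com/ci-library/version-helper:2.1.0
  script:
    - next-version > VERSION   # hypothetical command provided by the image

build-app:
  stage: build
  # Hypothetical language-specific builder image with all required tools.
  image: registry.example.com/ci-library/maven-builder:1.4.0
  script:
    - mvn --batch-mode package
```

Pinning an explicit image version per job keeps the steps decoupled: A builder image can be updated in one pipeline without affecting the others.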

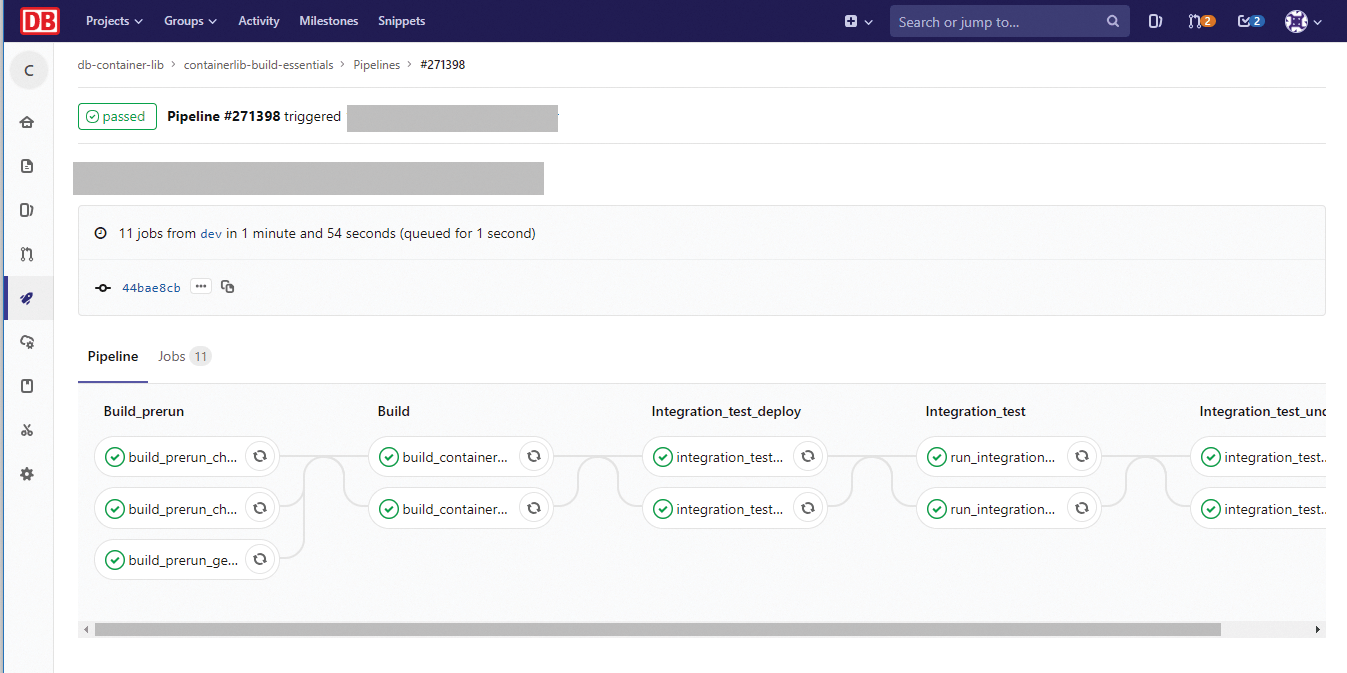

In our shop, we also integrate test automation into the CI/CD chain. New application versions reach the users without lengthy manual tests. Much like the images created during the build phase (Figure 2), these images also end up in a container registry. GitLab offers its own but also has interfaces to external registries like Artifactory [11].

A pipeline definition can quickly grow to several hundred lines, making it confusing and hard to maintain. Each GitLab installation includes a lint tool to check the YAML configuration.

Apart from this syntax check, it helps to cluster thematically identical blocks and store them in separate files. Using the include statement, which moved to the core in GitLab 11.4, developers can pull in external configurations. Technically, this is realized as a deep merge. In this way, standard configurations can easily be pulled in and then overridden with project-specific settings where necessary. An include statement can also reference remote files, which opens up further possibilities. On this basis, one team is currently evaluating the approach of curated pipelines as a service.
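A sketch of this pattern, assuming a hypothetical local file, a hypothetical remote URL, and an included job named `deploy`, might look like this:

```yaml
# .gitlab-ci.yml (fragment)
include:
  # Hypothetical file in the same repository.
  - local: /ci/build.gitlab-ci.yml
  # Hypothetical centrally curated configuration.
  - remote: https://ci.example.com/templates/deploy.gitlab-ci.yml

# Assumes the remote file defines a job named "deploy".
# Keys set here are merged over the included definition.
deploy:
  variables:
    TARGET_ENV: staging   # hypothetical project-specific override
```

Because the merge works on hashes, a list such as `script` that is redefined locally replaces the included list rather than extending it.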

Build artifacts generated during pipeline execution can be made permanently available by declaration and reused in pending jobs. Additionally, the web GUI offers the very convenient option of browsing through the job artifacts or downloading them.
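As an illustration, the fragment below declares a build directory as an artifact and consumes it in a later job. The job names, the `dist/` path, and the `make` targets are assumptions.

```yaml
# .gitlab-ci.yml (fragment)
stages:
  - build
  - test

build-app:
  stage: build
  script:
    - make build        # assumed to write its output to dist/
  artifacts:
    paths:
      - dist/
    expire_in: 1 week   # retention period; omit to use the instance default

test-app:
  stage: test
  # Download only the artifacts of build-app into this job's workspace.
  dependencies:
    - build-app
  script:
    - make test
```

How long artifacts are actually kept depends on `expire_in` and on the defaults configured for the GitLab instance.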

Pipeline jobs that have extremely long run times because of their complexity can be moved to separate pipelines triggered by the GitLab scheduler, a kind of Cron daemon. The jobs then run outside the development phases (e.g., as nightly builds).
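One way to sketch this is a job that is restricted to scheduled pipelines. The job name and the `make` target are hypothetical; the schedule itself, including its Cron expression, is defined in the project's pipeline schedule settings rather than in the YAML file.

```yaml
# .gitlab-ci.yml (fragment)
nightly-integration-tests:
  stage: test
  # Run only in pipelines started by a pipeline schedule.
  only:
    - schedules
  script:
    - make integration-test   # hypothetical long-running test target
```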

Auto DevOps

The basic idea behind GitLab Auto DevOps is to minimize the complexity associated with fully automated software delivery. For this purpose, GitLab Auto DevOps accumulates the necessary requirements and maps them via a pipeline.

Programming language recognition already works for a large number of common languages and is being continuously extended. Automatic software builds that are based on Docker or Heroku build packs have already been implemented. Pipeline jobs for application tests and quality assurance (QA) are available, as are routines for packaging and monitoring. Deployment mechanisms with Helm charts [12] complete the solution.

To familiarize yourself with the conventions and best practices of a GitLab CI pipeline, take a look at the Auto DevOps pipeline from the GitLab repository [13]. GitLab itself relies on native Kubernetes integration and Google's Kubernetes Engine (GKE) as its target platform. Because GitLab CI is cloud agnostic, both Auto DevOps and manually created pipelines can be used with any cloud platform and underlying orchestration tools, thus avoiding vendor lock-in.
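For teams that want to experiment, the Auto DevOps pipeline can also be pulled into a project's own configuration and adjusted from there. The following is only a sketch: It assumes a GitLab version that supports template includes, and the variables shown are examples of switches whose availability should be checked against the documentation of the installed version.

```yaml
# .gitlab-ci.yml (fragment)
include:
  - template: Auto-DevOps.gitlab-ci.yml

variables:
  TEST_DISABLED: "true"       # example: skip the Auto Test job
  POSTGRES_ENABLED: "false"   # example: do not provision a database
```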

Currently, none of the teams we serve uses GitLab Auto DevOps, because it cannot fully cover the DB Group's security and compliance requirements. The concept also does not support all application stacks that we require by default. That said, Auto DevOps is becoming a useful feature that generates added value. A structured blueprint at the fingertips of teams allows them to enter a DevOps-centric work mode quickly.

Security and Compliance

Other challenges our DevOps teams face are security and compliance requirements. On the way to a self-service infrastructure that provides standardized processes in a CI/CD environment, "compliant by default" is a cornerstone for a fully automated pipeline.

Recently, GitLab has strongly expanded its focus toward security. The GitLab core team has bundled many of the methods and functionalities required in this area in its roadmap. The vision for GitLab's product includes, among other things, a secure stage, which the company plans to expand to include a defense stage.

Static Application Security Testing (SAST) checks the source code for existing vulnerabilities, such as buffer overflows or insecure function calls. Dynamic Application Security Testing (DAST) tests against a running application or against the Review Apps collaboration tool [14]. These tests start with each merge request and thus allow early detection of security vulnerabilities at run time. Interactive Application Security Testing (IAST) aims to shed light on how the application deals with security scans that come directly out of the application. For this use case, GitLab delivers an agent in deployment that integrates into the application and implements the scans. Technically, this is done by GitLab integrating a corresponding open source scan tool. The developers are looking to increase the number of supported languages.

Further scan tools include Secret Detection, which scans commits for credentials and other secrets; the results are added to the existing reports. With Dependency Scanning, GitLab checks the dependencies pulled in through the project's package managers for known security vulnerabilities. This technology is also open source and based on Gemnasium [15].
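Where the installed GitLab version and edition ship the corresponding CI templates, scans of this kind are typically switched on by including them. The fragment below is a sketch under that assumption; the template paths have changed between releases and should be verified against the installed version.

```yaml
# .gitlab-ci.yml (fragment)
# Template paths are assumptions; check them for your GitLab release.
include:
  - template: Security/SAST.gitlab-ci.yml
  - template: Security/Secret-Detection.gitlab-ci.yml
  - template: Security/Dependency-Scanning.gitlab-ci.yml

stages:
  - build
  - test   # the included scan jobs run in the test stage by default
```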

Additional open source tools are used in container scanning. The CoreOS project Clair [16] collects information about app and Docker container vulnerabilities in its database. With the use of the Clair scanner [17], the pipeline is able to validate container images against the Clair server before it pushes the images into the registry.

Last but not least, license management is intended to help meet compliance requirements by checking the code for license violations in the pipeline.

These techniques can already be integrated into pipelines today, even if only in the Enterprise Edition. One hopes GitLab will integrate these components into the Community Edition in the future. Until then, Community Edition users still have to implement the functions manually, which is feasible because they are largely based on open source software.

Outlook

Although the focus of GitLab CI in recent years has been primarily on source code management and continuous integration, the company has set itself the goal in 2019 of driving forward project management, continuous deployment and release automation, application security testing, and value stream mapping. The roadmap for 2019 includes more than two dozen features (Figure 3).

![Figure 3: For 2019, the GitLab project has shifted its focus to continuous deployment and security testing. Source: GitLab; CC BY-SA 4.0 [18].](images/F03-features.png)

The most interesting novelties from a DevOps point of view include features like Incident Management and Serverless, but also the previously mentioned IAST, as well as Runtime Application Self-Protection (RASP). Some of these features are already available for evaluation in the alpha or beta version.

The GitLab team plans to position the software not only in the DevOps area, but to establish it as a single application for other roles, too, including security and QA specialists, product owners, testers, and user experience designers.

Both the Community and Enterprise editions have publicly available issue trackers [19] [20]. Their existence motivates users of GitLab to contribute feedback, feature requests, or code. This form of transparency not only forms the basis of a fast feedback loop, but increasingly makes GitLab a versatile tool for DevOps teams.