Google Cloud Storage for backups

King of the Hill Challenge

Amazon Simple Storage Service (Amazon S3) is considered the mother of all object stores and, thanks to its high durability, availability, and built-in scaling, has been virtually unrivalled thus far. However, other providers now also offer S3 alternatives with interesting features. Like Amazon S3, Google Cloud Storage (GCS) is an object storage service for storing and retrieving data from anywhere on the Internet, either through a REST-style API (Amazon S3 has historically also supported SOAP and BitTorrent) or with a program based on one of the supported SDKs. In fact, GCS buckets and objects are conceptually similar to those in Amazon Web Services (AWS). As with AWS, anything you want to store in GCS must be placed in buckets, which both organize data and control access.
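To make the REST access path concrete, the following minimal sketch lists a bucket's objects through the GCS JSON API with plain curl. It assumes that the Google Cloud SDK is installed and initialized as described in "Automating Backups" and that td-ita-backup (the bucket used later in this article) is readable by your account.

```shell
# Assumptions: Cloud SDK installed and initialized; td-ita-backup is readable.
BUCKET="td-ita-backup"
URL="https://storage.googleapis.com/storage/v1/b/${BUCKET}/o"

# Obtain an OAuth 2.0 access token for the currently signed-in account
TOKEN="$(gcloud auth print-access-token)"

# objects.list request: the response is a JSON document describing the objects
curl -s -H "Authorization: Bearer ${TOKEN}" "$URL"
```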

Deployment Scenarios

Google Cloud Storage supports a wide range of use cases. With GCS, users can store content such as text files, images, and videos and make it available over the Internet. GCS can also host static website content while offering all the benefits of a highly scalable architecture. For example, if web requests peak (e.g., because many users are trying to read a popular post or download a coupon code), GCS handles the increased load automatically, without manual intervention.
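As a rough sketch of static hosting with gsutil, the commands below upload a site, define the index and error pages, and make the objects publicly readable. The bucket name www.example.com and the local directory site are hypothetical; for a custom domain, the bucket name has to match the domain.

```shell
# Hypothetical bucket and local directory for a static website
SITE_BUCKET="gs://www.example.com"

# Upload the contents of ./site to the bucket root (-m: parallel transfers)
gsutil -m rsync -r site "$SITE_BUCKET"

# Serve index.html as the main page and 404.html for missing objects
gsutil web set -m index.html -e 404.html "$SITE_BUCKET"

# Grant public read access so browsers can fetch the objects
gsutil iam ch allUsers:objectViewer "$SITE_BUCKET"
```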

Google Cloud Storage also helps IT managers develop and operate serverless applications, for example, by executing tasks in response to events in GCS. Each time a file is uploaded, Google Cloud Functions could launch a format checker or conversion tool. Finally, GCS can be used for cloud-based backups and for archiving rarely accessed data. Unlike AWS, however, which needs minutes or, in the case of AWS Glacier, hours to restore archived data, GCS lets you recover archived data within milliseconds.
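The upload trigger described above could be wired up roughly as follows. This is only a sketch under several assumptions: the current directory contains the source of a hypothetical Python function with the entry point check_upload, and the runtime and region values are examples. The --trigger-bucket flag runs the function whenever an object is created or overwritten in the bucket.

```shell
# Assumptions: ./ holds the source of a hypothetical function check_upload;
# runtime and region are example values.
BUCKET="td-ita-backup"

# Deploy the function with a Cloud Storage trigger on the bucket
gcloud functions deploy check_upload \
  --runtime=python311 \
  --region=europe-west1 \
  --source=. \
  --entry-point=check_upload \
  --trigger-bucket="$BUCKET"
```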

Storage Classes and Access Times

As in AWS, buckets are containers that store objects. They cannot be nested and must have a name that is unique across the entire Cloud Storage namespace. GCS also supports object versioning. Each object consists of the object data (e.g., the file stored in GCS) and object metadata (a collection of key-value pairs that describe the object's attributes). Common metadata attributes include content type, content encoding, and cache control, but users can also store their own user-defined metadata.
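Versioning and metadata can both be managed with gsutil. The following sketch assumes the bucket td-ita-backup from later in this article and a previously uploaded, hypothetical object docs/report.pdf; the x-goog-meta-department key is an arbitrary example of user-defined metadata.

```shell
# Assumptions: bucket td-ita-backup exists; docs/report.pdf is a hypothetical object
BUCKET="gs://td-ita-backup"
OBJECT="$BUCKET/docs/report.pdf"

# Keep older generations when objects are overwritten or deleted
gsutil versioning set on "$BUCKET"
gsutil versioning get "$BUCKET"

# Set standard metadata plus a user-defined key-value pair (x-goog-meta-*)
gsutil setmeta \
  -h "Content-Type:application/pdf" \
  -h "Cache-Control:no-cache" \
  -h "x-goog-meta-department:finance" \
  "$OBJECT"

# Show the object's metadata, then list all versions (-a) under docs/
gsutil ls -L "$OBJECT"
gsutil ls -a "$BUCKET/docs/"
```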

Amazon S3 supports the Standard, Infrequent Access (S3 Standard-IA), and One Zone-Infrequent Access (S3 One Zone-IA) storage classes; S3 Reduced Redundancy, with a durability of only 99.99 percent, can also be regarded as a storage class. Amazon Glacier, although intended for archiving, counts as a storage class too, because it can be addressed directly through the Glacier API or the S3 API and is available as a "transition" target in S3 lifecycle management. Whereas S3 access times range from milliseconds to seconds, standard Glacier retrievals can take up to five hours.

With GCS, on the other hand, access times are consistently in the millisecond range across all storage classes:

- Data in Google Multi-Regional Storage is geo-redundant. The data is redundantly stored at two or more data centers at least 100 miles apart within the selected multi-regional location.

- Regional storage data, on the other hand, is stored in a bucket created in a regional location. This data does not leave the data centers in the specified region.

- Nearline Storage, Google's counterpart to S3 Standard-IA, is a low-cost, highly durable storage service for rarely accessed data. The data is stored redundantly within a single region. Nearline Storage is ideal for data that is read or modified on average once a month or less.

- Unlike other "cold" storage services such as AWS Glacier, Coldline Storage makes data available within milliseconds, not hours or days. Google users can also set up lifecycle management policies that automatically move objects from Multi-Regional or Regional storage to Nearline or Coldline.
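A lifecycle policy of the kind just mentioned is a JSON document that gsutil applies to a bucket. The sketch below moves objects to Nearline after 30 days and from Nearline to Coldline after 90 days (age counts from object creation). The bucket td-ita-data and both thresholds are hypothetical example values.

```shell
# Write the lifecycle rules; the quoted 'EOF' keeps the JSON literal
cat > lifecycle.json <<'EOF'
{
  "rule": [
    {
      "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
      "condition": {"age": 30, "matchesStorageClass": ["MULTI_REGIONAL", "REGIONAL", "STANDARD"]}
    },
    {
      "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
      "condition": {"age": 90, "matchesStorageClass": ["NEARLINE"]}
    }
  ]
}
EOF

# Apply the policy to a hypothetical bucket and read it back
gsutil lifecycle set lifecycle.json gs://td-ita-data
gsutil lifecycle get gs://td-ita-data
```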

Access Control

Access to data in GCS can be secured in three ways: with access control lists (ACLs), with Identity and Access Management (IAM) permissions and roles, and with signed URLs.

With Google Cloud IAM, administrators of the Google Cloud Platform (GCP) can control user and group access to buckets and objects at the project level. For example, a user can be allowed to perform all tasks on all objects in a project without being able to create, modify, or delete buckets in that same project. Projects, a GCP concept, add an additional organizational level on top of classic G Suite or Google Drive accounts.

Alternatively, or in addition, administrators can control access by applying an ACL to individual buckets or objects. Finally, signed URLs grant time-limited read or write access to anyone in possession of a URL generated beforehand.
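The three mechanisms map onto the commands below, shown as a sketch with hypothetical names: the project ID, the user jane@example.com, the object, and the service account key file are all assumptions. The role roles/storage.objectAdmin matches the example above (full control of objects, no bucket management); object ACLs only work on buckets that do not use uniform bucket-level access.

```shell
# Hypothetical project, user, object, and key file
PROJECT="td-ita-backup-project"
USER_EMAIL="jane@example.com"
OBJECT="gs://td-ita-backup/docs/report.pdf"

# IAM at project level: manage all objects, but not buckets
gcloud projects add-iam-policy-binding "$PROJECT" \
  --member="user:${USER_EMAIL}" --role="roles/storage.objectAdmin"

# ACL on a single object: grant the user READ access
gsutil acl ch -u "${USER_EMAIL}:R" "$OBJECT"

# Signed URL valid for 10 minutes, signed with a service account's JSON key
gsutil signurl -d 10m service-account-key.json "$OBJECT"
```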

Transferring Data to the Google Cloud

Although the following example treats GCS primarily as a backup and disaster recovery target, it makes sense to be familiar with the common tools and procedures for transferring data to Google's object storage. In general, Google offers two important tools for transferring data to the cloud. The Storage Transfer Service [1] moves data from Amazon S3 or another cloud storage system; you can control it from the GUI in the GCP console, through the Google API client library in a supported programming language, or directly with the Storage Transfer Service REST API.

Also, the gsutil command-line tool [2] can be used to transfer data from a local location to GCS. More specifically, with gsutil you can create and delete buckets; upload, download, or delete objects; list buckets and objects; move, copy, and rename objects; and edit object and bucket ACLs.
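The operations listed above correspond to gsutil subcommands modeled on their Unix namesakes. This quick tour is a sketch that assumes an initialized Cloud SDK; the bucket td-ita-scratch is hypothetical, and the test file is created locally first.

```shell
# Hypothetical scratch bucket; notes.txt is created here as test data
BUCKET="gs://td-ita-scratch"
echo "hello GCS" > notes.txt

gsutil mb "$BUCKET"                                      # create a bucket
gsutil cp notes.txt "$BUCKET/"                           # upload an object
gsutil ls "$BUCKET"                                      # list objects
gsutil mv "$BUCKET/notes.txt" "$BUCKET/old/notes.txt"    # move/rename
gsutil cp "$BUCKET/old/notes.txt" ./restored.txt         # download
gsutil rm "$BUCKET/old/notes.txt"                        # delete the object
gsutil rb "$BUCKET"                                      # delete the empty bucket
```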

Backing Up to Google Cloud

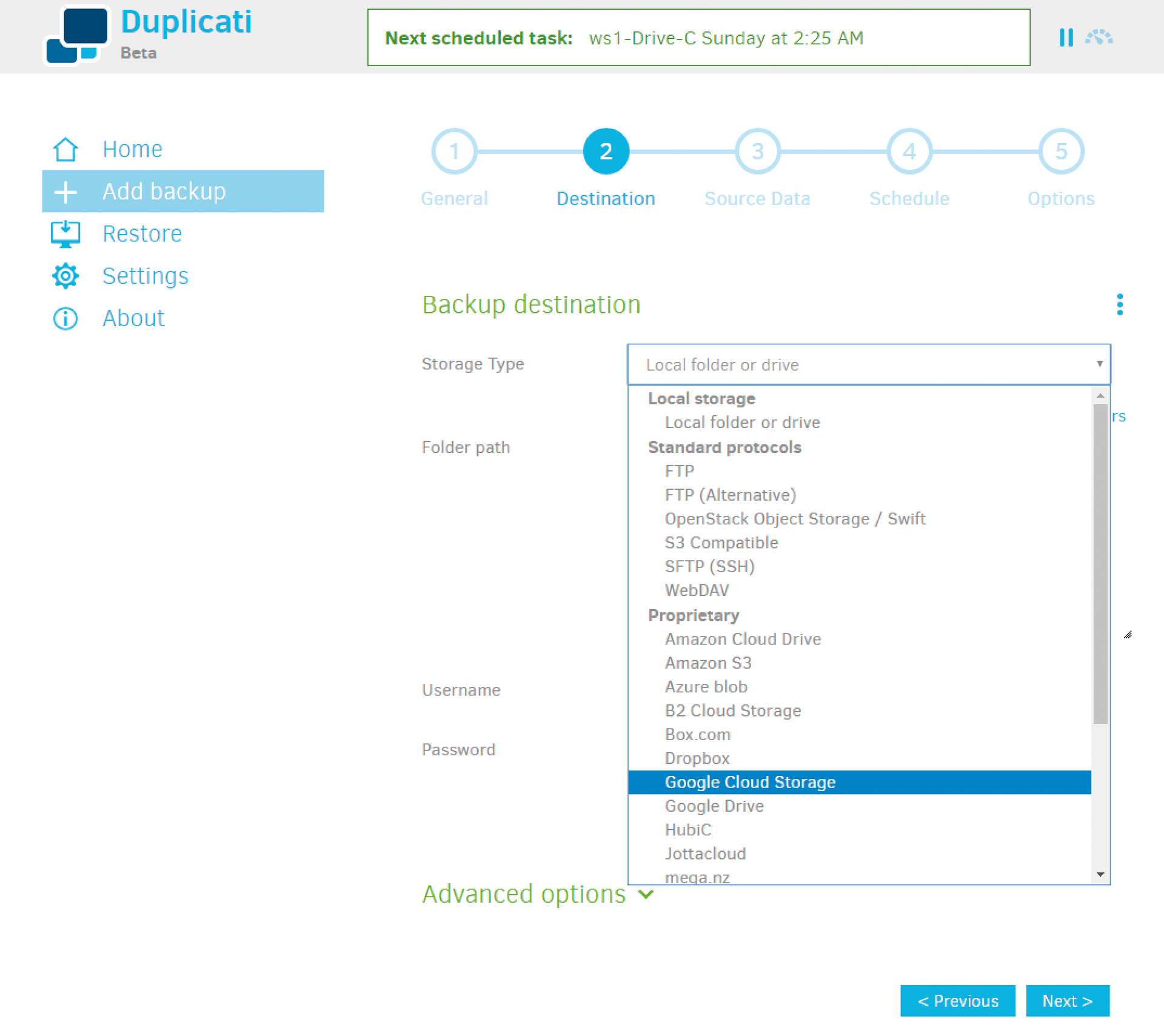

When it comes to backups, countless third-party providers have integrated Amazon S3 connectors into their products (e.g., S3 Browser, WordPress backup plugins, the open source enterprise backup program Duplicati, and the Clonezilla imaging tool). Duplicati, however, also comes with a GCS connector (Figure 1).

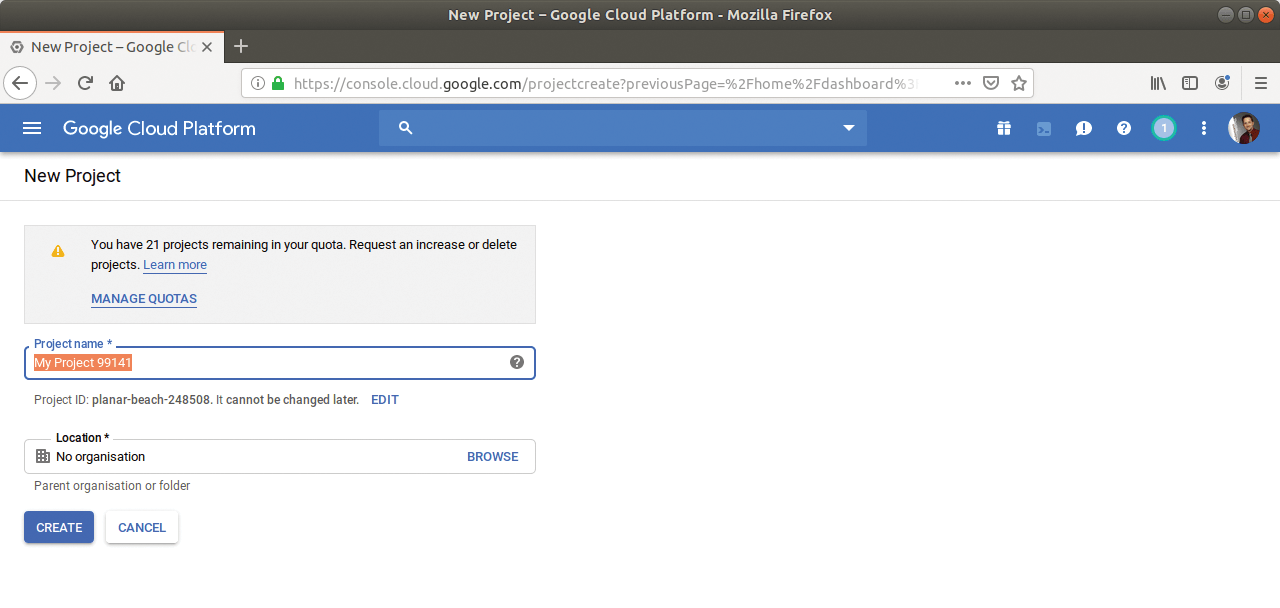

Even without third-party tools, though, the route to the first Google cloud backup is not difficult. To begin, you need a GCP account (e.g., one that comes with Google Drive or G Suite). Thus armed, you will be able to create a new project (Figure 2) in the GCP console [3] by clicking on Create in the Select a Project dialog.
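If you prefer the command line, the project can also be created with gcloud once the SDK is installed as described in "Automating Backups." The project ID below is a hypothetical example; IDs must be globally unique, 6 to 30 characters long, and consist of lowercase letters, digits, and hyphens, starting with a letter.

```shell
# Hypothetical, globally unique project ID
PROJECT_ID="td-ita-backup-project"

# Create the project and make it the default for later gcloud/gsutil calls
gcloud projects create "$PROJECT_ID" --name="Backup demo"
gcloud config set project "$PROJECT_ID"
```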

Once the project has been created, create the desired bucket in GCS by clicking Storage | Browser in the Storage section of the navigation menu and then Create Bucket. Because this example uses Coldline as the storage class for inexpensive archive storage and because I am located in Germany, I chose the nearby region europe-west1 (Belgium) as the target.
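The same bucket can be created with gsutil. This sketch assumes the hypothetical project ID td-ita-backup-project; -c sets the default storage class and -l the location.

```shell
# Hypothetical project ID; class and region as chosen in the console example
BUCKET="gs://td-ita-backup"
LOCATION="europe-west1"

gsutil mb -p td-ita-backup-project -c coldline -l "$LOCATION" "$BUCKET"

# Verify the bucket's storage class and location
gsutil ls -L -b "$BUCKET"
```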

Now you will be able to upload files of any type to the new bucket. Depending on how you want to structure the files, you can use a meaningful folder structure within the bucket. Note, however, that folders in an object store are only pseudo-folders, wherein any path separators like the slash (/) are part of the object name. It is also possible to zip files or backups before uploading to reduce storage costs and simplify uploading and downloading.
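Packing a directory before uploading and placing it under a pseudo-folder can be sketched with two small bash functions. The function names and the backups/YEAR/ prefix are hypothetical; the slashes simply become part of the object name.

```shell
# archive_dir DIR: writes <dirname>-YYYY-MM-DD.tar.gz into the current
# directory and prints the archive's file name
archive_dir() {
  local src="$1"
  local name
  name="$(basename "$src")-$(date +%Y-%m-%d).tar.gz"
  # -C switches to the parent directory so the archive holds relative paths
  tar -czf "$name" -C "$(dirname "$src")" "$(basename "$src")" && echo "$name"
}

# upload_archive FILE BUCKET: copies FILE to gs://BUCKET/backups/YEAR/FILE;
# "backups/YEAR/" is a pseudo-folder, i.e., just a prefix of the object name
upload_archive() {
  gsutil cp "$1" "gs://$2/backups/$(date +%Y)/$1"
}
```

Usage: `upload_archive "$(archive_dir /home/drilling/google-demo)" td-ita-backup`.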

Automating Backups

Ambitious users can also use the Google Cloud SDK to automate the backup. Here, I'll look at an Ubuntu-based approach, where I will first add package sources and then install the Google Cloud Public Key:

# export CLOUD_SDK_REPO="cloud-sdk-$(lsb_release -c -s)"
# echo "deb http://packages.cloud.google.com/apt $CLOUD_SDK_REPO main" | sudo tee /etc/apt/sources.list.d/google-cloud-sdk.list
# curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

The following command installs the SDK:

# sudo apt-get update && sudo apt-get install google-cloud-sdk

The next step is to run the gcloud init command and follow the instructions to sign in and authorize the SDK for your Google account. After doing so, you can use the gsutil tool, whose subcommands, such as rsync, cp, mv, and rm, are modeled on familiar Linux commands. The syntax for rsync is as expected:

# gsutil rsync -r <source> <destination>

In other words, you only need to specify the local directory to be copied and the target bucket in Google Cloud Storage, as in:

# gsutil rsync -r /home/drilling/google-demo gs://td-ita-backup

As you might expect, the -r flag makes the synchronization recurse into all subdirectories of the source. rsync also checks whether a file already exists at the destination and copies it only if it is missing or has changed. Objects in the bucket are not removed when you delete the corresponding files locally; to mirror such deletions as well, add the -d option.
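Because -d deletes objects in the bucket, a dry run first is a prudent habit. The sketch below reuses the paths from the example above; -n only reports what would be copied or deleted, and the top-level -m option runs transfers in parallel.

```shell
SRC="/home/drilling/google-demo"
DST="gs://td-ita-backup"

# Dry run: show what would be copied and which bucket objects would be deleted
gsutil -m rsync -n -d -r "$SRC" "$DST"

# Real run: objects that no longer exist locally are deleted in the bucket
gsutil -m rsync -d -r "$SRC" "$DST"
```

If object versioning is enabled on the bucket, objects removed this way remain available as noncurrent versions.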

The last step is to automate the backup process. The easiest approach is to set up a cron job by creating a script whose only line is the gsutil command and making it executable:

# nano gcpbackup.sh
gsutil rsync -r /home/drilling/google-demo gs://td-ita-backup
# chmod +x gcpbackup.sh
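A slightly more robust variant of the script could stop on errors and log each run. The sketch below writes it with a heredoc instead of an editor; the log file location is an assumption. Thanks to set -e, a failed gsutil call ends the script with a non-zero exit status, so the "done" line is only logged after a successful sync.

```shell
# Write gcpbackup.sh; the quoted 'EOF' keeps $variables unexpanded for now
cat > gcpbackup.sh <<'EOF'
#!/usr/bin/env bash
# Sync a local directory to a GCS bucket and log each run
set -euo pipefail

SRC="/home/drilling/google-demo"
DST="gs://td-ita-backup"
LOG="$HOME/gcpbackup.log"   # assumption: log file location

echo "$(date '+%F %T') sync $SRC -> $DST" >> "$LOG"
# -m: parallel transfers; -r: recurse into subdirectories
gsutil -m rsync -r "$SRC" "$DST" >> "$LOG" 2>&1
echo "$(date '+%F %T') done" >> "$LOG"
EOF

chmod +x gcpbackup.sh
```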

Now the script can be called with cron, as required.
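For example, a nightly run at 02:30 could be registered as follows. This sketch assumes the script lives in /home/drilling and is installed in the crontab of the same user who ran gcloud init, because the SDK's credentials are stored in that user's home directory. Running it twice adds a duplicate entry.

```shell
# Hypothetical schedule: every night at 02:30
ENTRY="30 2 * * * /home/drilling/gcpbackup.sh"

# Append the entry to the current user's crontab, keeping existing jobs
( crontab -l 2>/dev/null; echo "$ENTRY" ) | crontab -

# Confirm that the job is registered
crontab -l
```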

Conclusions

If you look only at how well Amazon S3 and AWS Glacier, with their excellent integration with other AWS services, serve as backup storage, the flagship Google Cloud Storage service has to admit defeat in terms of cost and access time. However, Google's storage service impresses with interesting access tools such as gsutil and the Storage Transfer Service. In any case, this S3 alternative is definitely worth a try, especially if you already use other Google Cloud Platform tools.