The new OpenShift version 4

Big Shift

The end of the road is nowhere in sight for Google's Kubernetes container orchestrator, as the sheer numbers show: KubeCon in May 2019 drew 7,700 participants. Clearly, no manufacturer can ignore Kubernetes without being considered out of the loop.

A veteran like Red Hat certainly can't afford that perception, which is why they launched OpenShift at an early stage. OpenShift is an open source container application platform that, according to the manufacturer, is particularly easy to roll out and operate. Recently, Red Hat launched OpenShift 4. Of course, this version is bubbling with new features and far better than all its predecessors together – if you believe Red Hat's PR department.

However, does the product deliver what the manufacturer promises? How does OpenShift fit into Red Hat's cloud strategy? I look at precisely these questions in this article.

The Idea

Red Hat describes OpenShift as a Platform as a Service (PaaS), which in itself points toward a development that has been going on for years: the fragmentation of the individual layers of the IT landscape. Whereas customers used to obtain their complete environment from a single source, today they usually have to deal with several providers. The challenge is to enable the various providers to work together across standardized interfaces.

What sounds complicated in theory is far easier in practice. At the bottom of the stack are the platform providers, because even in times of serverless computing, someone has to run the servers that allow customers to do without their servers. Providers usually offer some form of Infrastructure as a Service (IaaS), often combined with a few as-a-service offerings like Database as a Service because – and the providers are aware of this – if you only want to run a web application, you will not want to worry about running a database.

Classic IaaS is now mostly based on virtual machines (VMs), which come with plenty of overhead.

Docker for All

Docker identified the overhead weakness years ago. VMs were soon joined by containers, which are based on existing kernel functionality, have far less overhead, and allow programmers to write cloud-native applications – the opposite of the conventional consolidation approach. Applications are no longer big and monolithic; instead, they are divided into microservices distributed across many containers.

For this setup to work, however, some instance must manage those containers. Google's Kubernetes has developed into the apparent market leader for this task. Accordingly, Kubernetes is the layer that sits on top of classic IaaS offerings and enables the efficient operation of cloud-native apps (Figure 1).

![Figure 1: Kubernetes is part of the "agile revolution" and enables the operation of cloud-native applications (Kubernetes authors, CC BY 4.0 [1]).](images/F01-openshift-4_1.png)

Most developers of applications will not want to deal with Kubernetes and the operation of the platform itself. What they really want is a kind of Kubernetes as a Service, a product that rolls out Kubernetes on a predefined infrastructure at the push of a button and that makes running your own applications in this infrastructure as easy as possible.

Red Hat is seeking to exploit exactly this niche with OpenShift. Although OpenShift has existed as a PaaS platform for more than eight years, Red Hat did not make the radical shift to Kubernetes until it was mature and proven for production.

Red Hat products cover all the other parts of the modern data center, as well. If you run OpenStack, you can use the Red Hat OpenStack Platform. If you need CloudForms, you can get it as a boxed product. In all of these cases, Red Hat Enterprise Linux (RHEL) acts as the basis for all of the products.

Three Variants

Red Hat still offers three variants of OpenShift. The OpenShift Container Platform is designed to be rolled out in your own data center. If you want an OpenShift managed by Red Hat in Amazon Web Services (AWS) instead, you will opt for OpenShift Dedicated. OpenShift Online, which Red Hat also hosts, stands out from the crowd by giving users access to an OpenShift platform on demand, so that they pay only for the resources they actually need.

The advantages and disadvantages of the individual variants are discussed in detail later in this article. For the time being, however, the focus is on the on-premises variant – that is, OpenShift Container Platform.

Homogeneous Platform

Particular importance is attached to a homogeneous platform; Red Hat has a tool for each step, so you have no additional manual work beyond the initial installation. Red Hat distinguishes between installer-provisioned setups and user-provisioned setups.

The installer approach is far more automated and currently only covers one special case. If you want to run OpenShift in AWS, you can use the Red Hat OpenShift Cluster Manager [2] and roll out OpenShift directly to AWS. This option is convenient because the Cluster Manager only needs valid AWS credentials. The rest is fully automated – including high availability and other essential details.

Unfortunately, Red Hat does not yet offer similar convenience on other cloud platforms, such as Microsoft Azure and the Google Kubernetes Engine (GKE). Support for GKE is expected from OpenShift 4.2 and, in the case of OpenStack, from OpenShift 4.3.

The user-provisioned approach is less automatic. To begin, you download an installation program that currently supports macOS and Linux and create a bootstrap node, which in turn becomes the nucleus of the future OpenShift installation.

Whether it is bare metal, a VM in Azure, or a KVM VM on a private OpenStack installation is irrelevant from OpenShift's point of view; the installation routine works without problems on all these platforms.

A new OpenShift tool, Ignition, runs on the control plane servers of the OpenShift installation and allows the installation of new systems. Ignition is one of the tools that comes directly from CoreOS. I will look more closely at the components inherited from CoreOS later.
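
To give a feel for what Ignition consumes, the following Python sketch builds a minimal Ignition-style JSON config that writes a hostname file on first boot. It is an illustration only, not output of the OpenShift installer: the spec version 2.2.0, the hostname `worker-0`, and the helper name `make_ignition_config` are assumptions made for this example.

```python
import json
from urllib.parse import quote


def make_ignition_config(hostname: str) -> dict:
    """Build a minimal Ignition-style config (spec 2.x layout assumed)."""
    return {
        "ignition": {"version": "2.2.0"},  # assumed spec version
        "storage": {
            "files": [
                {
                    "filesystem": "root",
                    "path": "/etc/hostname",
                    "mode": 0o644,  # serialized as decimal 420 in JSON
                    # File content is passed inline as a data URL.
                    "contents": {"source": "data:," + quote(hostname)},
                }
            ]
        },
    }


config = make_ignition_config("worker-0")
print(json.dumps(config, indent=2))
```

In a real installation, the installer generates far larger configs of this kind for the bootstrap, control plane, and worker machines; the point here is only that a node's initial state is declared as data rather than scripted by hand.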

RHEL or CoreOS?

When rolling out a new OpenShift environment, you encounter one of the central changes in v4.1 for the first time. No, that's not a typo: OpenShift 4.0 never officially existed. What Red Hat currently markets as OpenShift 4 has been version number 4.1 from the outset. Whatever the numbering scheme, RHEL CoreOS first appeared on the scene in series 4. In fact, Red Hat bought CoreOS in early 2018. Now it becomes clear why: In OpenShift, CoreOS is now considered the first choice of operating system on the cloud node.

Red Hat explicitly points out that you can also use RHEL – as long as it is RHEL 7. OpenShift does not yet know how to handle RHEL 8. However, a setup with RHEL at its heart does not make much sense. The purpose of containers is to keep the main system as clean as possible. Clean in this context also means maintenance free, and a system that runs nothing but a kernel, a few basic libraries, and a container run time is far easier to maintain than a full-blown RHEL.

The CoreOS developers thus established their distribution as a lightweight container operating system without any bells and whistles. By first purchasing CoreOS and now establishing it as the preferred system for nodes in OpenShift, Red Hat is continuing along this path.

Another practical effect is that Kubelet, a part of CoreOS, is rolled out in the basic installation, and Kubelet is precisely the service that turns any Linux system into a Kubernetes node with the ability to start containers.

Even More CoreOS

Red Hat has adopted not only the CoreOS distribution, but the company itself. CoreOS also had some other components in the pipeline, which are now also consistently finding their way into OpenShift. Tectonic, for example, was the management layer developed by CoreOS for Kubernetes, which in OpenShift 4 replaces some of Red Hat's in-house offerings. The OpenShift Cluster Manager mentioned earlier is a Tectonic component.

Another example of a substitution is Quay, the container registry developed by CoreOS, which is now used in the new OpenShift version and replaces another Red Hat development (the old registry). The reason for the recent Red Hat shopping spree is now evident: The Kubernetes implementation of earlier OpenShift versions and the old registry had apparently been identified as weak points.

The CoreOS purchase added more powerful components that will successively replace the old products. Although it probably won't affect your work as an admin, it will lead to more stability and performance behind the scenes.

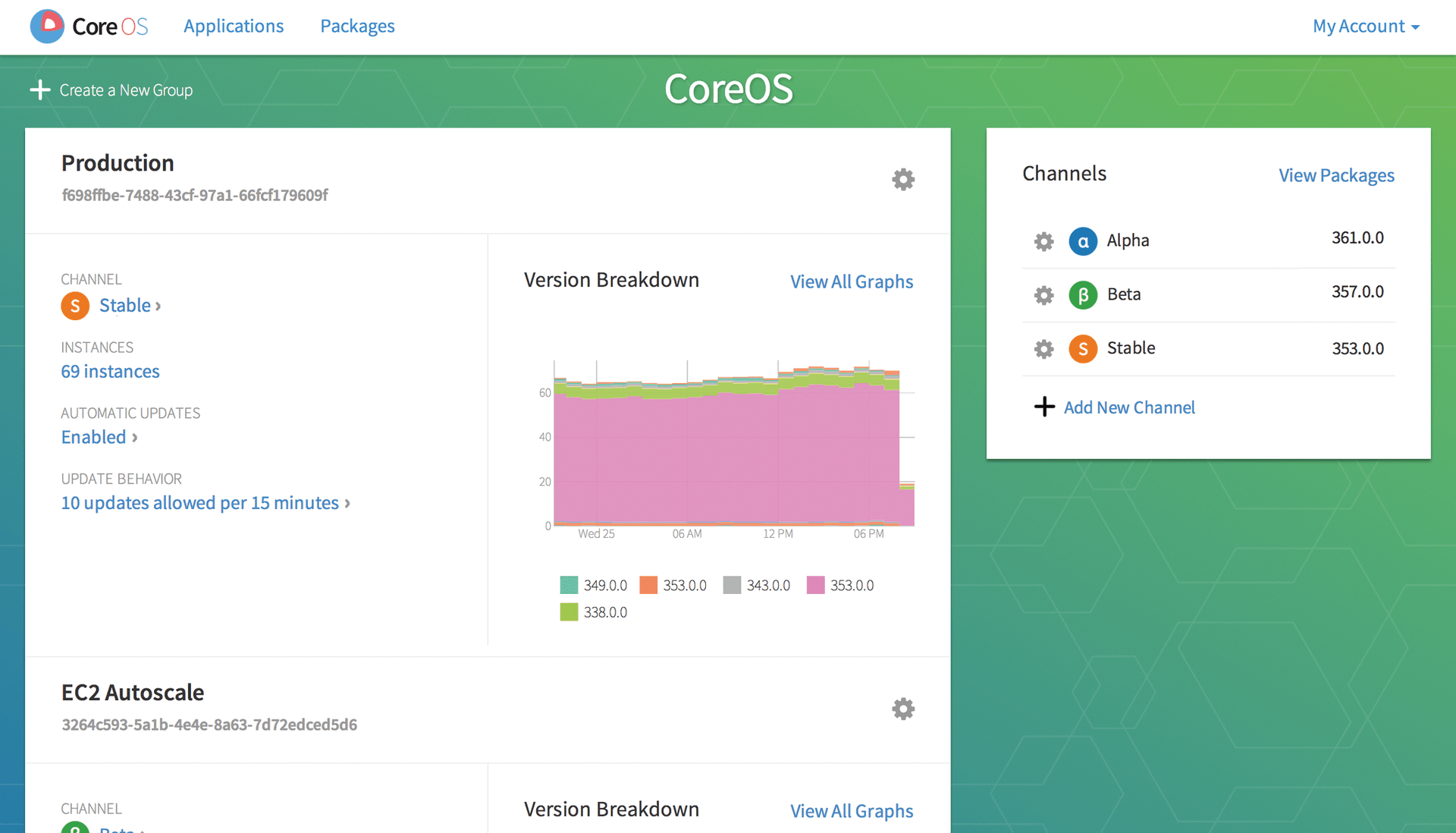

Together with CoreOS, OpenShift also gets a new rollout mechanism for updating the installation nodes. In future, you will be able to manage the nodes by using OpenShift just like the containers belonging to your own virtual environment, made possible by over-the-air updates. OpenShift uses rpm-ostree to apply changes to the systems atomically and automatically (Figure 2).
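
The atomic behavior can be pictured with a small Python model. This is a conceptual sketch only, not rpm-ostree code: the class `Node` and its methods are hypothetical. It assumes the commonly described model in which a new operating system image is staged next to the running one, takes effect only on reboot, and can be rolled back to the previous image.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Toy model of a host with image-based, atomic OS updates."""
    booted: str                      # image currently running
    staged: Optional[str] = None     # image that becomes active on next boot
    previous: Optional[str] = None   # image kept around for rollback

    def stage(self, image: str) -> None:
        # Staging never touches the running system.
        self.staged = image

    def reboot(self) -> None:
        # The switch happens in one step: either the staged image
        # is booted as a whole, or nothing changes.
        if self.staged is not None:
            self.previous, self.booted, self.staged = self.booted, self.staged, None

    def rollback(self) -> None:
        # Swap back to the image that ran before the update
        # (simplified: a real system would reboot into it).
        if self.previous is not None:
            self.booted, self.previous = self.previous, self.booted


node = Node(booted="os-image-a")
node.stage("os-image-b")
print(node.booted)  # os-image-a, the update is staged but not active
node.reboot()
print(node.booted)  # os-image-b
node.rollback()
print(node.booted)  # os-image-a
```

The design choice worth noting is that no intermediate state exists: a node runs either the old image or the new one, never a half-updated mix of packages.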

OpenShift: A Kubernetes Distribution

The OpenShift Container Platform is advertised as a PaaS platform based on Kubernetes. First and foremost, OpenShift is a Kubernetes distribution. It has many things in common with competing products such as SUSE's Container as a Service (CaaS). The primary goal of OpenShift is to give admins a Kubernetes installation as quickly as possible, on which to run workloads in containers.

As you may have guessed, Red Hat enriches the product with some functions beyond Kubernetes – a special sauce, so to speak, for winning over customers.

Hello CRI-O!

Witness the change from Docker to CRI-O, whose "primary goal is to replace the Docker service as the container engine for Kubernetes implementations, such as OpenShift Container Platform" [3]. Docker, remember, is a combination of a userspace daemon and a format for disk images. If you start a Docker container, the Docker daemon combines various kernel functions under the hood to ensure that the data and services in a container are separated from the rest of the system (e.g., with namespaces and cgroups). Other container approaches, such as LXC, are based on exactly the same functions.

Red Hat has no interest in Docker bringing the container market, and the distribution of basic Red Hat images, under its wing almost single-handedly, so it finally decided to launch a rival product: the CRI-O container engine. CRI-O implements the Kubernetes Container Runtime Interface (CRI) and is a platform for running Open Container Initiative (OCI)-compatible run times, including Docker.

In OpenShift 4, Red Hat is serious about the Docker replacement. Previously, CRI-O was only available as an alternative to Docker; now it is exactly the other way around. CRI-O is the default container engine in OpenShift 4, and Docker is only available as a fallback.

Operator Framework

OpenShift 3.11 introduced the Operator Framework, also a component from the CoreOS developers. It is specifically aimed at administrators, not users, of Kubernetes clusters. In short, the promise is that the administrator can use the Operator Framework to get all the services needed on a system for efficient Kubernetes operation.

This is how it works: An Operator is a native Kubernetes application, that is, an application that runs as a container under Kubernetes but is controlled through the Kubernetes API. For this purpose, Kubernetes has controllers, which act as extensions of the API itself.

An everyday example would be a Prometheus instance and its Alertmanager, which the admin controls through the Kubernetes API, but which also supply data from Prometheus by way of the API. The glue needed to ensure this control into and out of the container is the foundation of the Operator Framework that Red Hat has adopted from CoreOS.
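
The control pattern behind an Operator can be sketched in a few lines of Python. The example is a simplified simulation rather than code from the Operator Framework: `DesiredState`, `ObservedState`, and `reconcile` are hypothetical names, and the cluster is represented by plain Python objects instead of real Kubernetes API calls.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DesiredState:
    """What the admin declares through the API (hypothetical resource)."""
    replicas: int


@dataclass
class ObservedState:
    """What is actually running; stands in for the real cluster."""
    pods: List[str] = field(default_factory=list)


def reconcile(desired: DesiredState, observed: ObservedState) -> List[str]:
    """Drive the observed state toward the desired state; return actions taken."""
    actions = []
    # Too few pods: create the missing ones.
    while len(observed.pods) < desired.replicas:
        name = f"prometheus-{len(observed.pods)}"
        observed.pods.append(name)
        actions.append(f"create {name}")
    # Too many pods: remove the surplus, newest first.
    while len(observed.pods) > desired.replicas:
        name = observed.pods.pop()
        actions.append(f"delete {name}")
    return actions


cluster = ObservedState()
print(reconcile(DesiredState(replicas=2), cluster))  # two pods are created
print(reconcile(DesiredState(replicas=1), cluster))  # one pod is deleted
print(reconcile(DesiredState(replicas=1), cluster))  # nothing left to do
```

The third call returns an empty list: Reconciling is idempotent, so a controller can run the same loop over and over and only acts when the declared and the actual state diverge.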

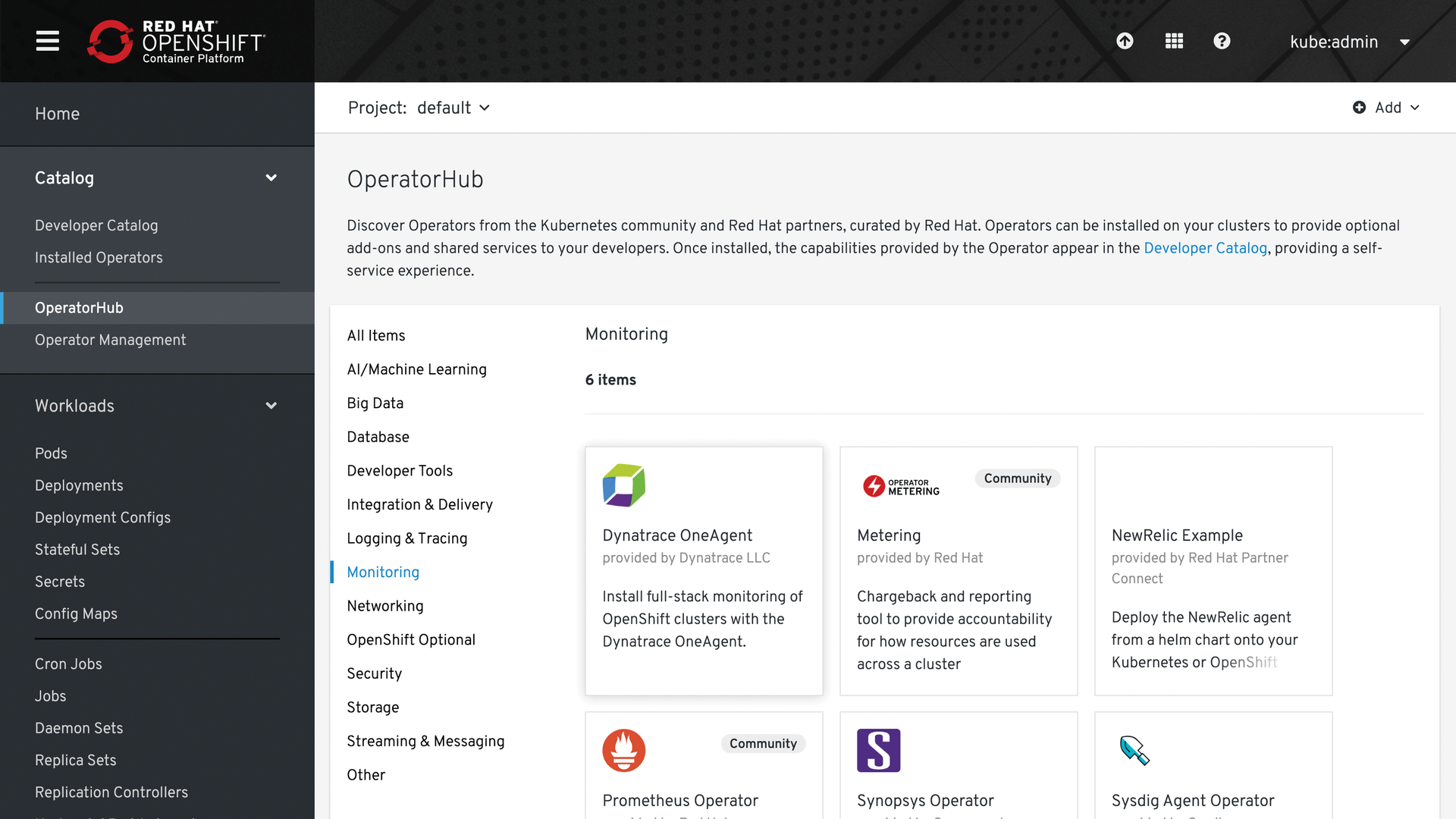

An Operator Lifecycle Manager (OLM), which aids in the administration of Operators, is then added. Letting every admin construct their own Operators doesn't make sense, so CoreOS and Red Hat, together with AWS, Google, and Microsoft, put OperatorHub [4] in place, acting much like Docker Hub, but for OpenShift Operators. Manufacturers offer ready-made Operators, and users can make their Operators available to others in a separate area.

Complete Lifecycle Management

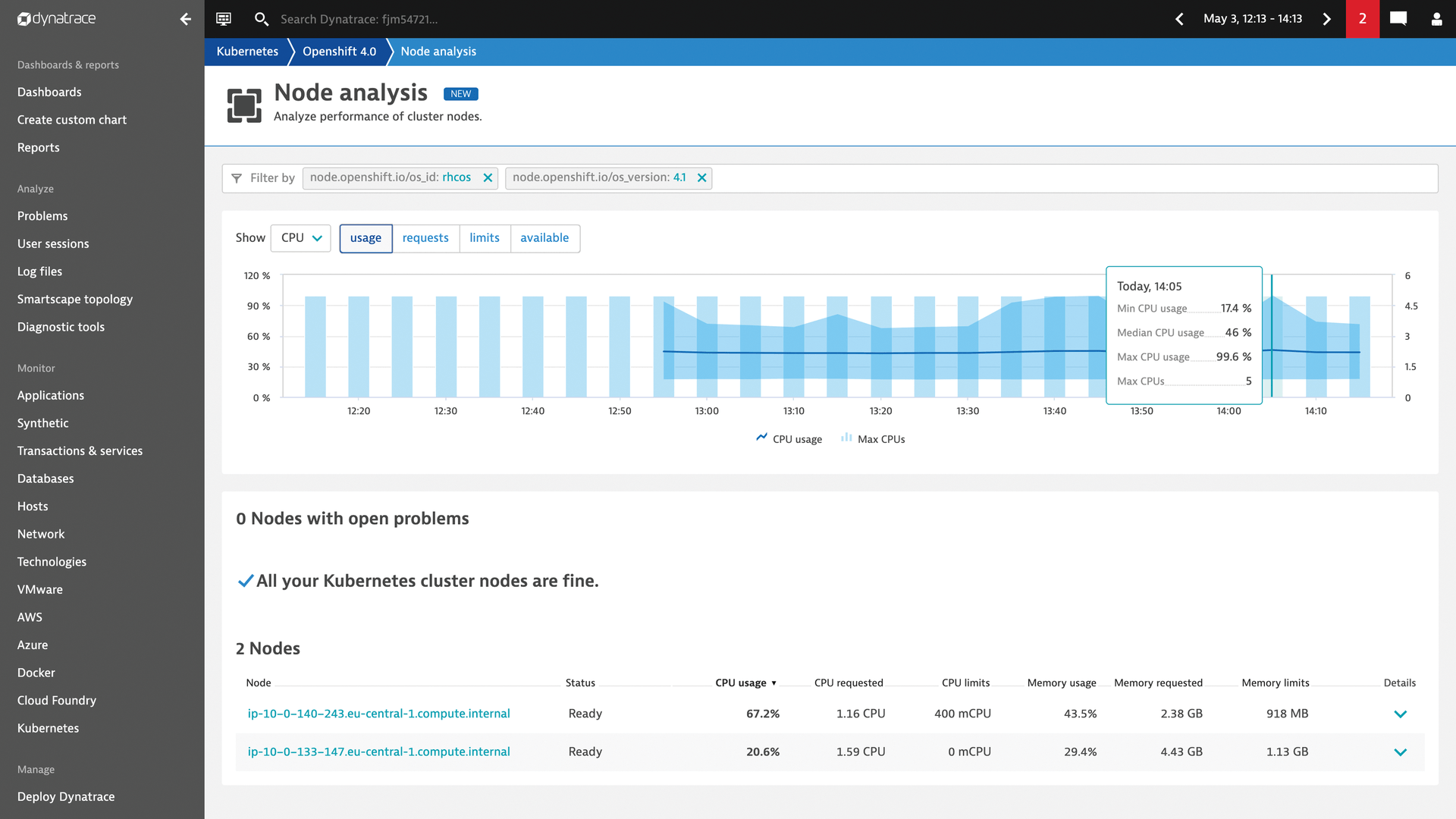

Two examples suffice to show the scope of what OpenShift 4.1 offers through Operators. First, registering new Kubernetes pods with a running Prometheus instance through services such as Consul is easy to implement. From the moment a pod starts running, the Prometheus Operator records data for it and makes that data available through the Kubernetes API.

Second, the automatic start of new pods is no problem, either. If the metrics collected by Prometheus indicate a state of emergency and a possible slowdown of the system in its current configuration, Operators can be used to start new worker containers. The applied load is then distributed among more instances of the application's services, and the danger of poor performance is averted.
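
The scaling decision described here comes down to simple arithmetic. The Python sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × metric / target), as a stand-in. Whether a given Operator uses this rule is an assumption, and the function name, the replica limits, and the sample numbers are hypothetical.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional scaling: grow or shrink replicas with the metric/target ratio."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    wanted = math.ceil(current * metric / target)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Three workers at 90 percent average CPU against a 60 percent target.
print(desired_replicas(current=3, metric=90.0, target=60.0))  # 5
```

Rounding up errs on the side of too many workers rather than too few, and the upper bound keeps a faulty metric from starting an unlimited number of containers.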

Operators let you control the entire lifecycle of pods and containers through the Kubernetes API. The result is a degree of orchestration and automation that is in no way inferior to that of large public clouds – just based on containers.

OpenShift 4.1 ships the examples mentioned in this article as Operators: Metering and monitoring with Prometheus, as well as Chargeback, are included in the scope of delivery (Figures 3 and 4).

Help from Istio

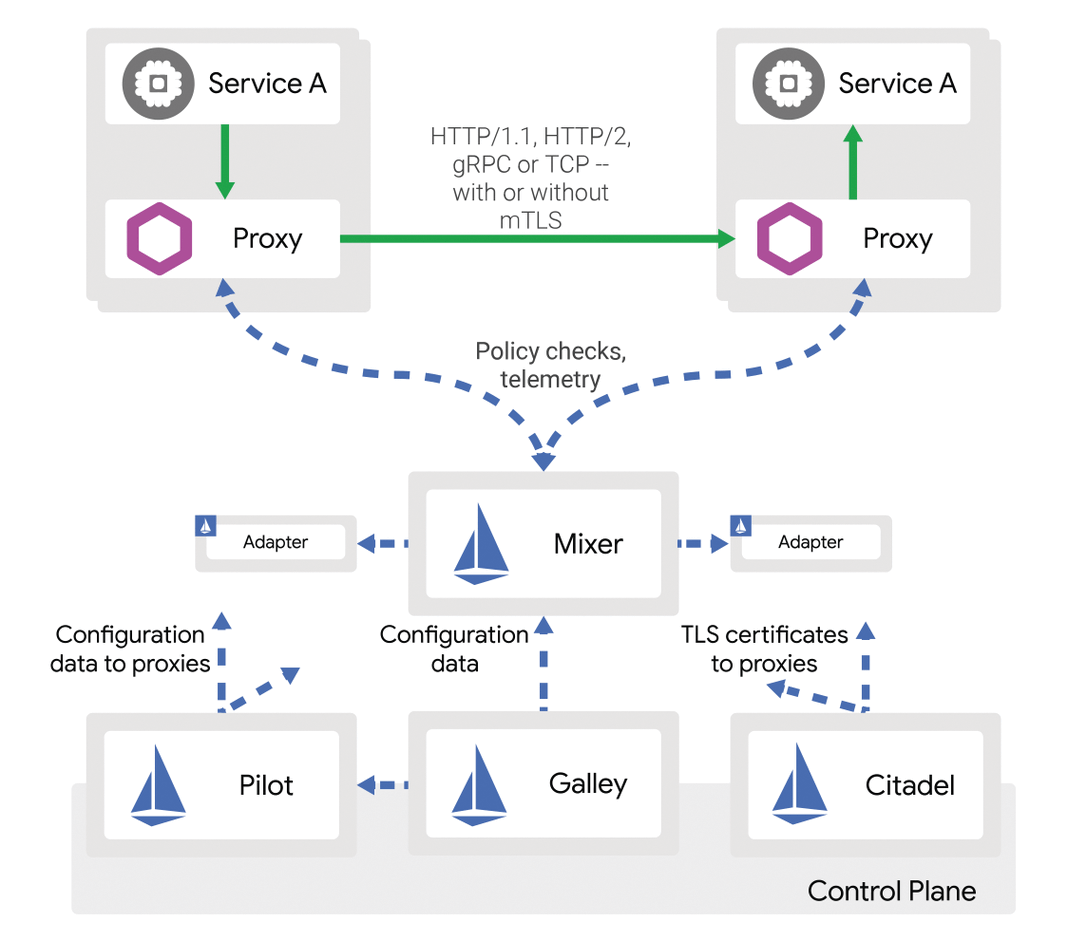

Although most of the changes in OpenShift 4 are undoubtedly aimed at admins, Red Hat is also taking steps to make life easier for developers. One example is Istio, a service mesh that makes it easy for developers to build a working network between many microservices of the same application.

For example, it automatically blocks unneeded network paths between services and installs proxy servers where they are needed (Figure 5). You can find more information about Istio online [5].

Costs

As usual, Red Hat remains rather vague about pricing: OpenShift Online, the product hosted by Red Hat, is available in the starter version without a price tag. With only one project to create and rather limited resources, however, the offer should be considered a test version.

The Pro version will cost at least $50 per month, but you can create up to 10 projects and get basic support from the manufacturer.

OpenShift Dedicated and the OpenShift Container Platform come without any indication of price at all, so if you are interested in those products, you are forced to contact Red Hat Sales directly.

Conclusion

Red Hat OpenShift is an up-to-date Kubernetes distribution. Kubernetes itself comes in version 1.13, which is not new anymore, but it's enough for everyday tasks. Much more important than Kubernetes are the lateral changes that make OpenShift 4.1 a good and functional product.

First, OpenShift 4 makes life much easier for admins, primarily because of the various components gleaned from CoreOS. Rolling out CoreOS on a Kubernetes node in the future will keep your maintenance effort as low as possible.

Kubernetes integration and the Quay container registry, also taken from CoreOS, contribute greatly to increased stability compared with the previous version. The Istio service mesh is so potent as a virtual interface manager that this article cannot do justice to its advantages.

Although already included in OpenShift 3.11, the Operator Framework, which introduces comprehensive lifecycle management for virtual applications in containers, is only now really useful and cannot be found in other current Kubernetes distributions.

Red Hat is also active in the OpenStack market, so it seems a bit strange that Red Hat OpenShift 4.1 does not roll out on OpenStack clouds out of the box. After all, you would also be interested in using OpenStack platforms as efficiently as possible. Red Hat should look to solve the problem in one of the upcoming OpenShift versions.

Full integration into continuous integration and continuous delivery environments like Jenkins is still in place and works well, so OpenShift is explicitly keeping an eye on the well-being of developers.

Clearly, OpenShift 4 is a cool upgrade and warmly recommended to all previous OpenShift 3 users. If you are generally interested in Kubernetes distributions at the moment, the quality of OpenShift 4 certainly justifies keeping it in mind.