CI/CD deliverables pipeline

Happy Coincidence

Software developers face an overcrowded toolbox of build and automation tools that promise to automate the steps from editing source code in Git to delivering the finished product. It is not easy to select the right tools and forge them into a complete, efficient chain that reflects your way of working.

If a ready-made service solution such as GitLab does not make you happy because its customization and extension options are limited, the following guide will take you to a rewarding goal: a continuous integration (CI) system with Jenkins [1] as its backbone.

This setup enables a team of software developers who manage their source code in Git to automate the recurring steps in the project (builds, tests, delivery) intelligently. The following example deliberately keeps the individual components simple and leaves plenty of room for improvement, depending on your application.

With version 2.x, Pipeline [2] officially became part of Jenkins. Since then, completely new possibilities have opened up for orchestrating a build pipeline. Docker [3] is another powerful tool: It lets you implement customizations that would otherwise require considerable maintenance effort in traditional installations, not least when setting up new systems.

Build Environment

The following demonstration is a build pipeline orchestrated by Jenkins that takes advantage of Docker's capabilities for the build process. It is not explicitly about creating Docker images.

Jenkins orchestrates the entire build. To be as flexible as possible when updating Jenkins itself, the setup presented here uses the official Jenkins Docker image [4]. The Jenkins Master therefore only requires a machine running the Docker daemon. The big advantage of the Docker image is that the Jenkins home directory can be moved to a Docker volume, so no settings are lost when you update Jenkins or the Jenkins Docker image.

For the slave machines that handle the Linux builds, it doesn't matter whether they are dedicated servers, virtual machines (VMs), or VMs hosted by a cloud provider. For Jenkins to use a machine as a slave, the machine only needs SSH access and a Java 8 Runtime. Because the builds themselves use Docker, Docker must also be installed on the slaves.

An Apple build slave has to be configured much like a normal Linux machine in terms of Jenkins integration; it also needs SSH access and an installed Java 8 Runtime. If you need Docker for the build, you have to install it there explicitly. Mac-specific builds, however, usually occur directly on the machine, so admins have to set up the required software on the device.

As always, Windows requires extra handling. In short, the implementation is as follows: The Windows slaves need to connect via the Java Network Launch Protocol (JNLP), and the required tool chain must be installed on the Windows machine. Details on connecting these slaves can be found online [5].

Builder Images

Intelligent use of Docker is the answer to building results for different Linux target systems. The idea is to take a Docker image of the respective target system and run the build in a container instance of that image. After the build, the system exports the results from the container in an appropriate way. The deliverable is not a Docker image, but a binary specific to the target system.

The example project built by this setup is a CMake-based Hello World application. Listing 1 shows the corresponding Dockerfile for a Debian builder. The resulting binary only serves as an example, of course: As a standalone binary, it is not tied to any specific Linux system, so the binary built for Fedora works just as well on Debian. In the field, this is admittedly rare.

Listing 1: Debian Builder Dockerfile

```dockerfile
FROM debian:stretch
MAINTAINER Yves Schumann <yves@eisfair.org>

ENV WORK_DIR=/data/work \
    DEBIAN_FRONTEND=noninteractive \
    LC_ALL=en_US.UTF-8

# Mount point for development workspace
RUN mkdir -p ${WORK_DIR}
VOLUME ${WORK_DIR}

COPY Debian/stretch-backports.list /etc/apt/sources.list.d/
COPY Debian/testing.list /etc/apt/sources.list.d/

RUN apt-get update -y \
 && apt-get upgrade -y

RUN apt-get install -y \
    autoconf \
    automake \
    build-essential \
    ca-certificates \
    cmake \
    curl \
    g++-7 \
    git \
    less \
    locales \
    make \
    openssh-client \
    pkg-config \
    wget

# Set locale to UTF8
RUN echo "${LC_ALL} UTF-8" > /etc/locale.gen \
 && locale-gen ${LC_ALL} \
 && dpkg-reconfigure locales \
 && /usr/sbin/update-locale LANG=${LC_ALL}
```
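As a minimal sketch of the build-in-a-container idea, assuming Docker is installed and the CMake project is checked out in a local workspace, the following Python function assembles a `docker run` call that bind-mounts the workspace onto the `WORK_DIR` volume declared in Listing 1 and builds there, so the results remain on the host after the throwaway container exits. The function name and the Fedora image name are hypothetical; the article itself builds the deliverables with a multistage Dockerfile (Listing 4) instead.

```python
import os
import shlex
from typing import List

# Directory declared as VOLUME in the builder Dockerfile (Listing 1).
WORK_DIR = "/data/work"


def builder_command(image: str, workspace: str) -> List[str]:
    """Return a `docker run` call that builds the CMake project in `workspace`
    inside a throwaway container of the given builder image."""
    build_script = "mkdir -p build && cd build && cmake .. && cmake --build ."
    return [
        "docker", "run", "--rm",                            # remove the container after the build
        "-v", f"{os.path.abspath(workspace)}:{WORK_DIR}",   # bind mounts need an absolute host path
        "-w", WORK_DIR,                                     # start in the mounted sources
        image,
        "sh", "-c", build_script,
    ]


if __name__ == "__main__":
    # Hypothetical: one builder image per Linux target system; only prints the commands.
    for image in ("starwarsfan/cmake-builder-debian:1.0", "example/cmake-builder-fedora:1.0"):
        print(shlex.join(builder_command(image, "./cmake-buildtest")))
```

Because the container runs as root by default, the files in `build/` end up owned by root on the host; passing `--user` with the UID of the Jenkins account is one way to avoid that.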

Uploader Image

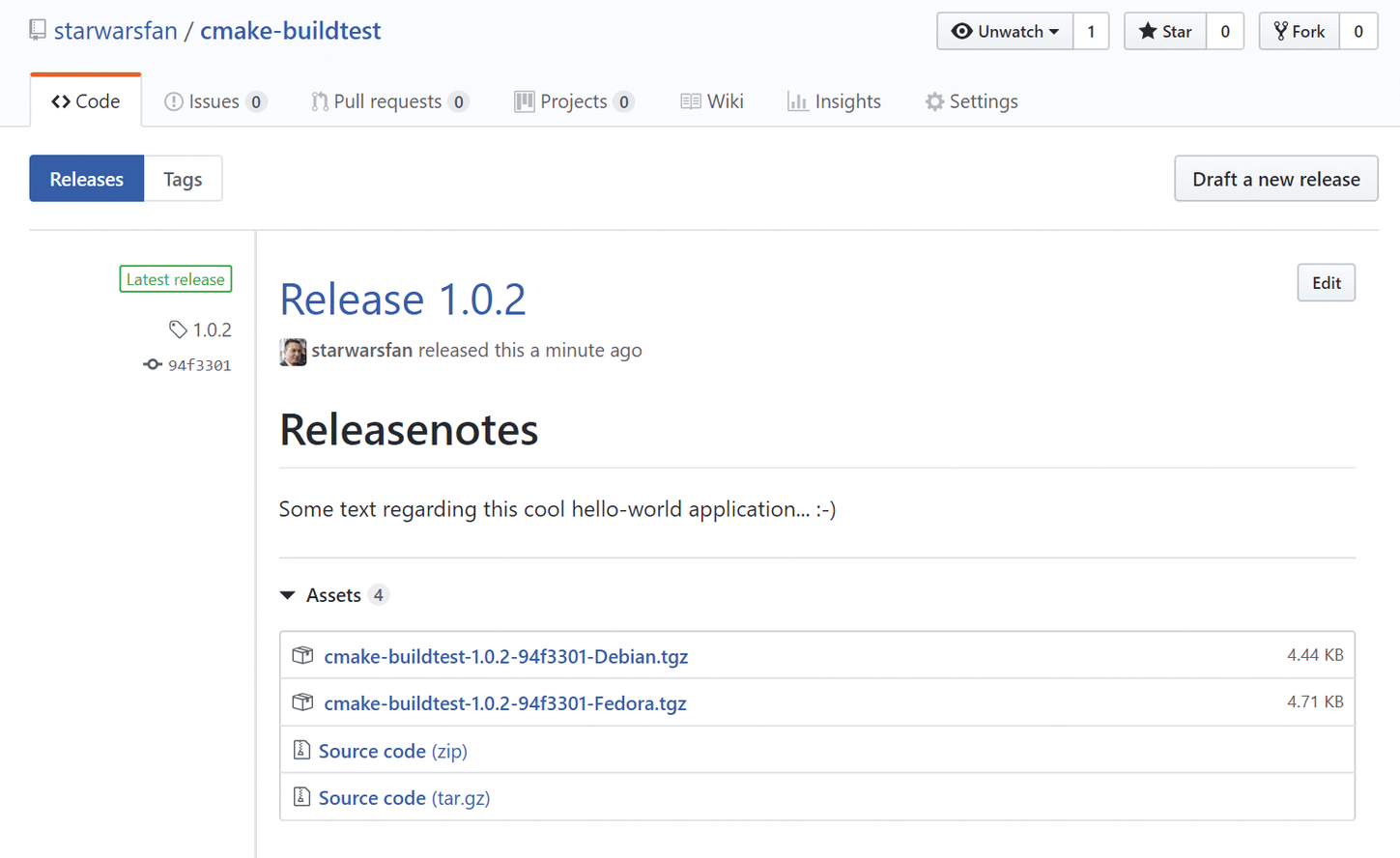

After the build is completed, the deliverable is still inside the running container. If you want to publish the results on GitHub, you need a suitable mechanism to automate this upload step. The aktau/github-release [6] project, a Go application that helps publish arbitrary artifacts on GitHub, is the perfect solution.

To integrate the tool into the build process, Docker and its broad base of ready-made images once again play the leading role. The uploader image is based on the Alpine Linux variant of the official golang image; line 7 of the Dockerfile in Listing 2 installs the github-release tool.

Listing 2: Dockerfile github-release Tool

```dockerfile
01 FROM golang:1.11.5-alpine3.8
02 MAINTAINER Yves Schumann <yves@eisfair.org>
03
04 # Install git, show Go version and install github-release
05 RUN apk add --no-cache git \
06  && go version \
07  && go get github.com/aktau/github-release
08
09 # Create mountpoint for file to upload
10 RUN mkdir /filesToUpload
11
12 # Just show the help to github-release if container is simply started
13 CMD github-release --help
```

Setting Up a Jenkins Master

The easiest approach is to set up the Jenkins Master based on the official Docker image. To do this, the admin on the host that will be hosting the Jenkins Master runs the command:

```shell
docker run --name jenkins -d -v jenkins_home:/var/jenkins_home -p 8080:8080 -p 50000:50000 jenkins/jenkins:latest
```

Immediately afterward, follow the container log with

```shell
docker logs -f jenkins
```

to retrieve the initial admin password (Listing 3).

Listing 3: Admin Password

```text
[...]
Jenkins initial setup is required. An admin user has been created and a password generated.
Please use the following password to proceed to installation:

12345678901234567890123456789012

This may also be found at: /var/jenkins_home/secrets/initialAdminPassword
[...]
```

As you can see, the password is also stored in the container under the specified path:

```shell
$ docker exec -it jenkins cat /var/jenkins_home/secrets/initialAdminPassword
12345678901234567890123456789012
```
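For scripted installations, a small Python helper can pull the password out of the log text instead of reading it by eye. This is a sketch under assumptions: it expects, as in Listing 3, that the password stands on a line of its own and consists of 32 lowercase hexadecimal characters, and the function name is hypothetical. Reading the file with `docker exec` is the more robust route, because the wording of the log output is not a stable interface.

```python
import re
from typing import Optional

# Assumption: the setup wizard prints the password alone on a line, 32 hex characters.
PASSWORD_LINE = re.compile(r"^[ \t]*([0-9a-f]{32})[ \t]*$", re.MULTILINE)


def initial_admin_password(log_text: str) -> Optional[str]:
    """Return the first line consisting only of a 32-character hex token, or None."""
    match = PASSWORD_LINE.search(log_text)
    return match.group(1) if match else None
```

To use it, pass in the combined standard output and standard error of `docker logs jenkins`, for example as captured with `subprocess.run(["docker", "logs", "jenkins"], capture_output=True, text=True)`.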

The initialAdminPassword file remains in place until the initial setup is complete, which the admin carries out by calling the Jenkins URL in a browser. Because this simplified setup includes neither HTTPS nor any port configuration, the easiest way is to enter http://<Host-IP>:8080/ and reach the Jenkins Master directly.

The following steps set up the default admin and install the recommended plugins; then, the standard Jenkins interface is accessible, and the basic installation of the Jenkins Master is complete.

In preparation, the admin stores the credentials that Jenkins needs to access GitHub and Docker Hub. These credentials are of the kind Username with password and grant access to the respective API. Later, GitHub will also be accessed via SSH, which is why you have to store the corresponding private key in a separate credential entry of the kind SSH Username with private key and install the SSH Agent plugin.

For a GitHub organization, it makes sense to set up a separate CI account and deposit the corresponding public key there. In the case of a personal account, the account owner has to add the public key to their profile.

Setting Up Build Slaves

To connect Linux or Mac builders, you need to configure a build node with the launch method Launch slave agents via SSH. This requires an account on the slave with SSH access and key-based login. The private key to be used is stored on the master in the credentials configuration.

The account on the slave normally does not need admin privileges, but it must be allowed to execute Docker commands. It is also important that the Linux slaves carry the correct label (docker, in this case) so that the pure Docker builds run only on the Linux slaves.

The easiest way for the admin to integrate a Windows slave is with Launch method Launch agent via Java Web Start, as described online [7].

Linking GitHub and Jenkins

An important point of build automation is connecting Jenkins to the repositories on GitHub. At this point, it is advisable to use an organization scanner (Figure 1). For an initial design, the default organization scanner settings will be fine. You only need to enter the access credentials plus the GitHub organization or your own GitHub username under Owner.

The big advantage of a scanner is that you only need to configure the GitHub project or the GitHub user in it. The organization scanner automatically finds the Git repositories for the specified organization or user and analyzes their branches for the presence of a Jenkinsfile. If a Jenkinsfile exists, the scanner automatically creates a suitable build job.

Therefore, admins no longer have to create build jobs manually on the CI system for additional Git repositories, because the jobs are created in a fully automated process. If a branch is deleted after a successful pull request, the corresponding build job also disappears.
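The bookkeeping the organization scanner performs can be modeled as a set comparison. A minimal Python sketch, assuming the scan result is available as a mapping from repository to branch to the file names in that branch's root directory (a hypothetical data structure; the real scanner queries GitHub itself):

```python
from typing import Dict, Set, Tuple

Job = Tuple[str, str]  # (repository, branch)
ScanResult = Dict[str, Dict[str, Set[str]]]  # repository -> branch -> root file names


def desired_jobs(repos: ScanResult) -> Set[Job]:
    """Return one job per branch whose root directory contains a Jenkinsfile."""
    return {
        (repo, branch)
        for repo, branches in repos.items()
        for branch, root_files in branches.items()
        if "Jenkinsfile" in root_files
    }


def reconcile(existing: Set[Job], repos: ScanResult) -> Tuple[Set[Job], Set[Job]]:
    """Compare the scan result with the existing jobs: (jobs to create, jobs to remove)."""
    wanted = desired_jobs(repos)
    return wanted - existing, existing - wanted
```

Deleting a branch after a merged pull request simply drops its pair from the scan result, so the corresponding job lands in the removal set on the next scan.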

Building the Build Images

In the first step, the admin builds the images required for building the deliverables. It makes sense to automate this with Jenkins, too. To do so, you version the Dockerfiles in their own Git repositories. Additionally, each repository has a Jenkinsfile in its root directory that defines how the image is built. The Git repositories for the uploader [8] and the builders [9], as well as the corresponding Docker repositories [10]-[12], can be found online.

Thanks to the organization scanner set up in the previous step, the system automatically finds these repositories and sets up the corresponding build jobs. The scanner also creates the webhooks on GitHub so that a push triggers the builds. This eliminates the need to poll the repositories periodically for changes. The account you use must, of course, have suitable repository permissions, and the Jenkins instance must be reachable from GitHub.

Once the jobs have run successfully, the images required for building the deliverables are available on Docker Hub.

Building the Project Deliverables

The setup has now progressed to the point where the CI system is ready for its actual task: building the project deliverables automatically and repeatedly, with all required details, and uploading them to GitHub. The main repository of the project has a Jenkinsfile in its root directory that defines the build. From Jenkins' point of view, the build process is therefore identical to the build of the auxiliary repositories for the Builder images and the GitHub Uploader described earlier. The Linux binaries are built in Docker instances based on the prepared Builder and Uploader images. Listing 4 shows the multistage build of the Debian variant.

Listing 4: Multistage Dockerfile of App Build

```dockerfile
### At first perform source build ###
FROM starwarsfan/cmake-builder-debian:1.0 as build
MAINTAINER Yves Schumann <yves@eisfair.org>

COPY . /demo-app

RUN mkdir /demo-app/build \
 && cd /demo-app/build \
 && cmake .. \
 && cmake --build .

RUN echo "Build is finished now, just execute result right here to show something on the log:" \
 && /demo-app/build/hello-world

### Now upload binaries to GitHub ###
FROM starwarsfan/github-release-uploader:1.0
MAINTAINER Yves Schumann <yves@eisfair.org>

ARG GITHUB_TOKEN=1234567
ARG RELEASE=latest
ARG REPOSITORY=cmake-buildtest
ARG GIT_COMMIT=unknown
ARG REPLACE_EXISTING_ARCHIVE=''
ENV ARCHIVE=cmake-buildtest-${RELEASE}-${GIT_COMMIT}-Debian.tgz

RUN mkdir -p /filesToUpload/usr/local/bin

# Maybe copy more from the builder...
COPY --from=build /demo-app/build/hello-world /filesToUpload/usr/local/bin/hello-world

RUN cd /filesToUpload \
 && tar czf ${ARCHIVE} . \
 && github-release upload \
    --user starwarsfan \
    --security-token "${GITHUB_TOKEN}" \
    --repo "${REPOSITORY}" \
    --tag "${RELEASE}" \
    --name "${ARCHIVE}" \
    --file "/filesToUpload/${ARCHIVE}" \
    ${REPLACE_EXISTING_ARCHIVE} \
 && export GITHUB_TOKEN=---
```
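The packaging part of the second stage in Listing 4 (creating `usr/local/bin` below a staging directory, copying the binary, and packing a `.tgz` named after release, commit, and distribution) can be reproduced in a few lines of Python, for example to check the archive layout locally before wiring up the upload. This is a sketch, not part of the author's setup, and the function name is hypothetical. It writes the archive outside the staging directory, so the archive never lies inside the tree it packs.

```python
import os
import shutil
import tarfile
import tempfile


def package_binary(binary: str, release: str, git_commit: str, distro: str, out_dir: str) -> str:
    """Stage `binary` under usr/local/bin and pack it as
    cmake-buildtest-<release>-<commit>-<distro>.tgz in out_dir (naming as in Listing 4)."""
    archive = os.path.join(out_dir, f"cmake-buildtest-{release}-{git_commit}-{distro}.tgz")
    with tempfile.TemporaryDirectory() as staging:
        bin_dir = os.path.join(staging, "usr", "local", "bin")
        os.makedirs(bin_dir)
        shutil.copy2(binary, bin_dir)  # copy2 keeps the file mode, including the executable bit
        with tarfile.open(archive, "w:gz") as tar:
            # Store paths relative to the staging root, e.g. usr/local/bin/hello-world
            tar.add(os.path.join(staging, "usr"), arcname="usr")
    return archive
```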

The exciting part is now to write the project's Jenkinsfile as efficiently as possible. In practice, you will notice functionality that you would otherwise have to redefine in almost every Jenkinsfile. It is much more efficient to move it into separate pipeline functions and make them available globally in Jenkins. That way, you maintain the code in one place, and all pipelines benefit.

Help Functions for Release Handling

The github-release tool in its Docker image provides valuable services for handling releases on GitHub and simplifies many tasks, but its functions can be encapsulated further. As an example, I'll look at the approach in the jenkins-pipeline-helper [13] repository, which wraps the github-release functions as pipeline functions that can be called directly by name within the pipeline. Listing 5 shows the Jenkinsfile snippet for creating a GitHub release. As a bonus, the release functions accept two different variants of the release description.

Listing 5: Jenkinsfile Snippet to Create a GitHub Release

```groovy
script {
    createRelease(
        user: "${GITHUB_ACCOUNT}",
        repository: "${GITHUB_REPOSITORY}",
        tag: "${GIT_TAG_TO_USE}",
        name: "${RELEASE_NAME}",
        description: "${RELEASE_DESCRIPTION}",
        preRelease: "${PRERELEASE}"
    )
}
```

If you pass some text in the description parameter, Jenkins uses this text as the release description. Develop builds, for example, only get the build number:

```groovy
RELEASE_DESCRIPTION = "Build ${BUILD_NUMBER}"
```

However, if you pass in a file name, the file's content is used instead. In the example, this is the case for builds from the Master branch:

```groovy
RELEASE_DESCRIPTION = "${WORKSPACE}/Releasenotes.md"
```

As a result, the tool chain automatically publishes the release notes on GitHub with every release build.
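How such a wrapper could turn the parameters of Listing 5 into a github-release call is sketched below in Python. This is a hypothetical model, not the Groovy code from jenkins-pipeline-helper: it assumes that a description naming an existing file is replaced by that file's content and that `preRelease` arrives as the string "true" or "false". The flags `--user`, `--repo`, `--tag`, `--name`, `--description`, and `--pre-release` are those of the github-release `release` command.

```python
import os
from typing import List


def resolve_description(description: str) -> str:
    """Use the content of `description` if it names an existing file, otherwise the text itself."""
    if os.path.isfile(description):
        with open(description, encoding="utf-8") as handle:
            return handle.read()
    return description


def release_args(user: str, repository: str, tag: str, name: str,
                 description: str, pre_release: str) -> List[str]:
    """Build a github-release call for the parameters used in Listing 5."""
    args = ["github-release", "release",
            "--user", user, "--repo", repository, "--tag", tag, "--name", name,
            "--description", resolve_description(description)]
    # Assumption: preRelease is passed as an interpolated string such as "true" or "false".
    if pre_release.strip().lower() == "true":
        args.append("--pre-release")
    return args
```

The security token is not part of the argument list; github-release can read it from the `GITHUB_TOKEN` environment variable.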

Structuring the Build Pipeline

The Jenkinsfile discussed below defines the entire build. The cmake-buildtest [14] repository contains the source code and the Jenkinsfile for a simple Hello World sample application.

The entire pipeline is first bound to an agent with the label docker. The idea behind this is that the branch of the pipeline with the longest runtime runs on this agent without needing any further agent labels. All steps that can run in parallel are then given their own agent labels, so that a new builder instance is started for each of them alongside the instance already running (see the box "Risk of Deadlock"), and the system executes the corresponding build steps there.
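Why the separate labels matter can be illustrated with a deliberately simplified capacity model: Every running pipeline holds one executor on its docker agent for its whole run, and each parallel branch needs a free executor carrying its own label. If no executor is left for a branch's label, the branch cannot start under this model, because the executors it waits for are held by pipelines that in turn wait for their branches. The Python sketch is hypothetical (one label per executor, label expressions ignored) and not Jenkins' actual scheduler; the label fedora-builder in the checks is invented.

```python
from collections import Counter
from typing import Dict, List, Set


def starved_labels(executors: Dict[str, int], outer_label: str,
                   running_pipelines: int, branch_labels: List[str]) -> Set[str]:
    """Return the labels whose parallel branches can never get an executor.

    executors: number of executors per label (simplification: one label per executor)
    outer_label: label the whole pipeline is bound to, e.g. "docker"
    running_pipelines: pipelines currently holding an executor with outer_label
    branch_labels: label requested by each parallel branch
    """
    demand = Counter(branch_labels)
    starved = set()
    for label in demand:
        free = executors.get(label, 0)
        if label == outer_label:
            free -= running_pipelines  # these stay busy until their pipelines finish
        if free <= 0:
            starved.add(label)
    return starved
```

If at least one executor remains free for a label, the branches merely queue and run one after another, which is slow but not stuck.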

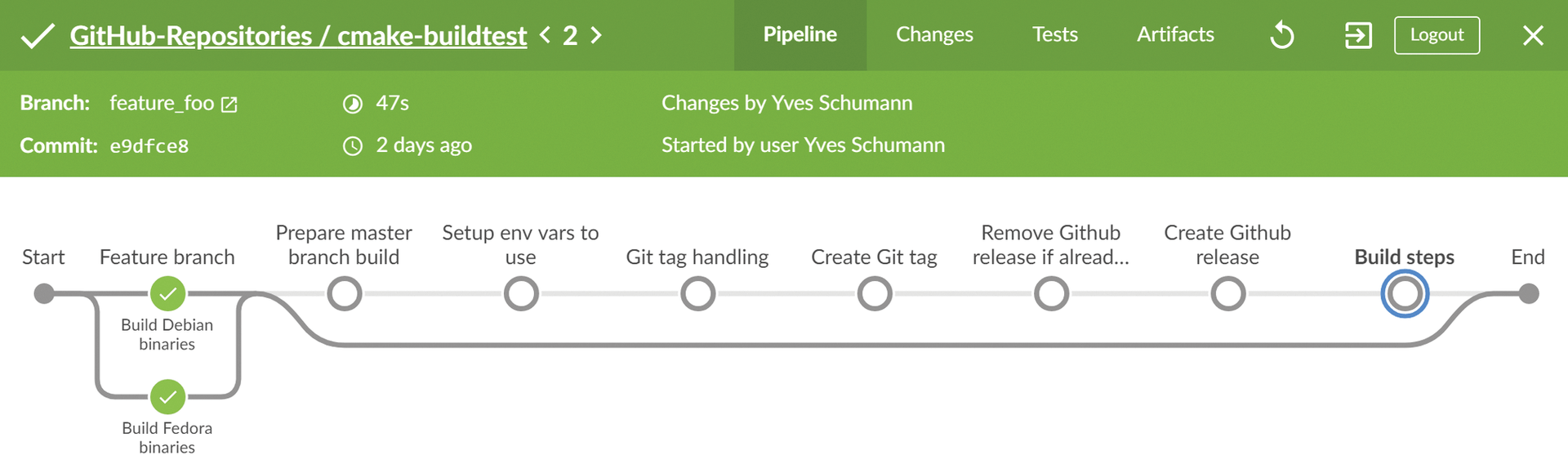

From the next stage on, the structure of the pipeline depends on the current branch. The first of these stages contains a when statement that executes only if the current branch is neither Develop nor Master. These feature branch builds (Figure 2) are usually massively simplified. The example here refers to a special Dockerfile that only builds the demo application and runs it for demonstration purposes. In most real-world use cases, this stage only runs the unit tests.

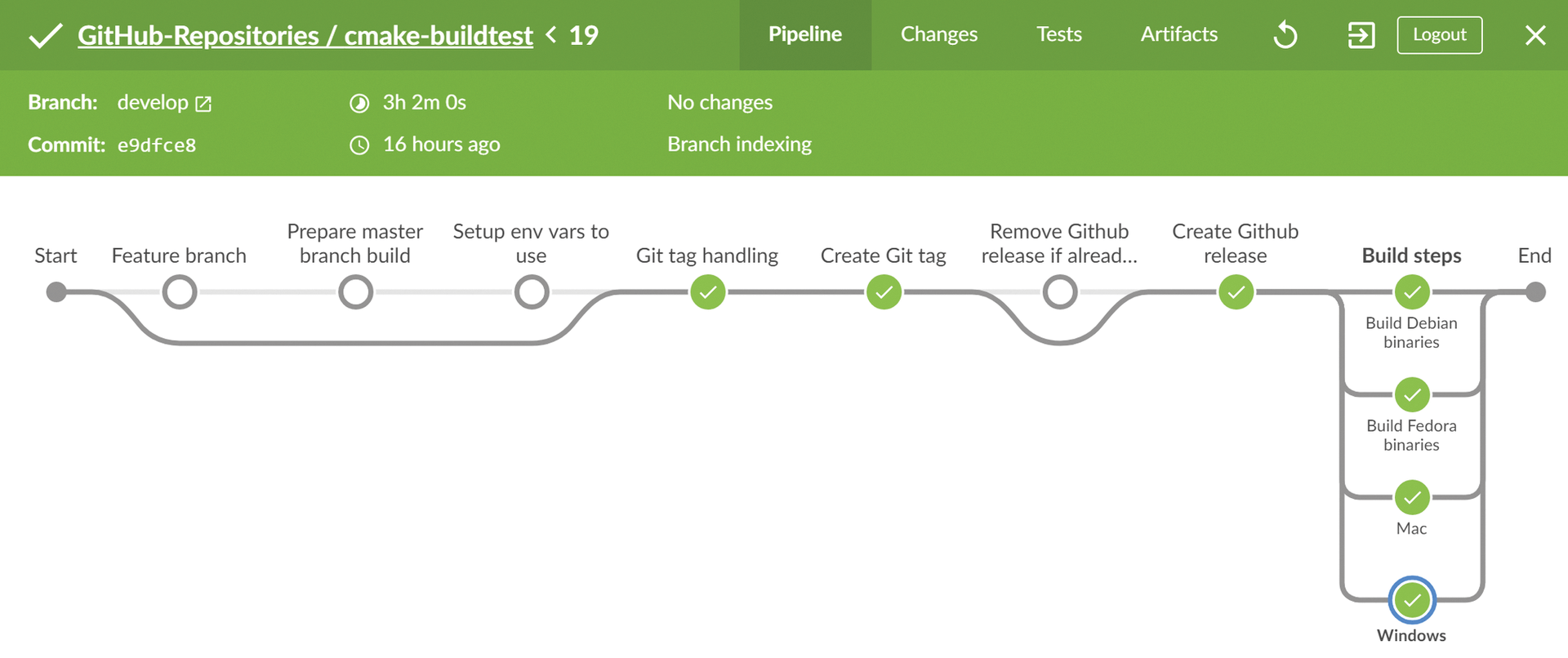

The other stages only apply to Master and Develop builds. To account for the differences between a Develop build and a Master branch build, the second stage, Prepare master branch build, contains only a few additional variable definitions. From here on, the pipeline also uses the helper functions described in the previous section, for example, in the Git tag handling stage to prepare the release for GitHub (Figure 3).

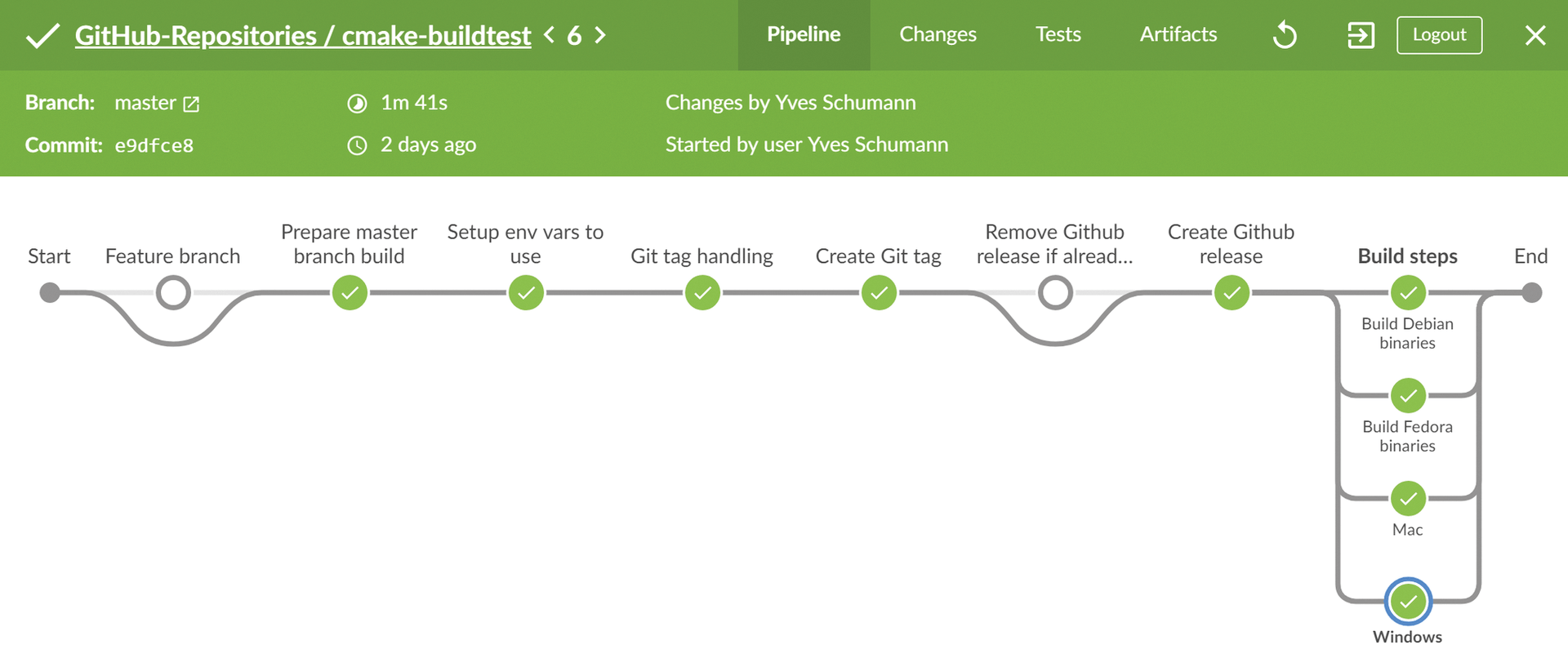

The last stage, Build steps, performs the final build of the application, including deployment to GitHub. Triggering the build is again trivial, because the actual build logic, GitHub upload included, resides in the corresponding Dockerfile (Figure 4).
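The branch-dependent control flow can be summarized as a small decision function. This Python sketch models the logic described above; it is not the Jenkinsfile itself. The stage name for feature branches is hypothetical, and the sketch assumes that Prepare master branch build runs only on Master, while the release descriptions follow the Develop/Master convention from the previous section.

```python
from typing import Dict, List, Optional


def plan_pipeline(branch: str, build_number: int, workspace: str) -> Dict[str, object]:
    """Return the stages to run and the release description for a branch."""
    if branch not in ("develop", "master"):
        # Feature branches: build and run the demo application only, no release.
        return {"stages": ["Feature branch build"], "release_description": None}
    stages: List[str] = []
    if branch == "master":
        stages.append("Prepare master branch build")
    stages += ["Git tag handling", "Build steps"]
    description: Optional[str] = (
        f"{workspace}/Releasenotes.md" if branch == "master" else f"Build {build_number}"
    )
    return {"stages": stages, "release_description": description}
```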

You Have Arrived

In this article, I showed a complete sample setup with deliberately simple individual components that any admin or developer can use to build a continuous integration pipeline with Jenkins (Figure 5).

The domain-specific language (DSL) of the Jenkins pipeline [2] proves to be a very powerful tool for covering the needs of modern Git- and container-based software development geared toward continuous integration, continuous delivery, and continuous deployment.