Five Kubernetes alternatives

Something Else

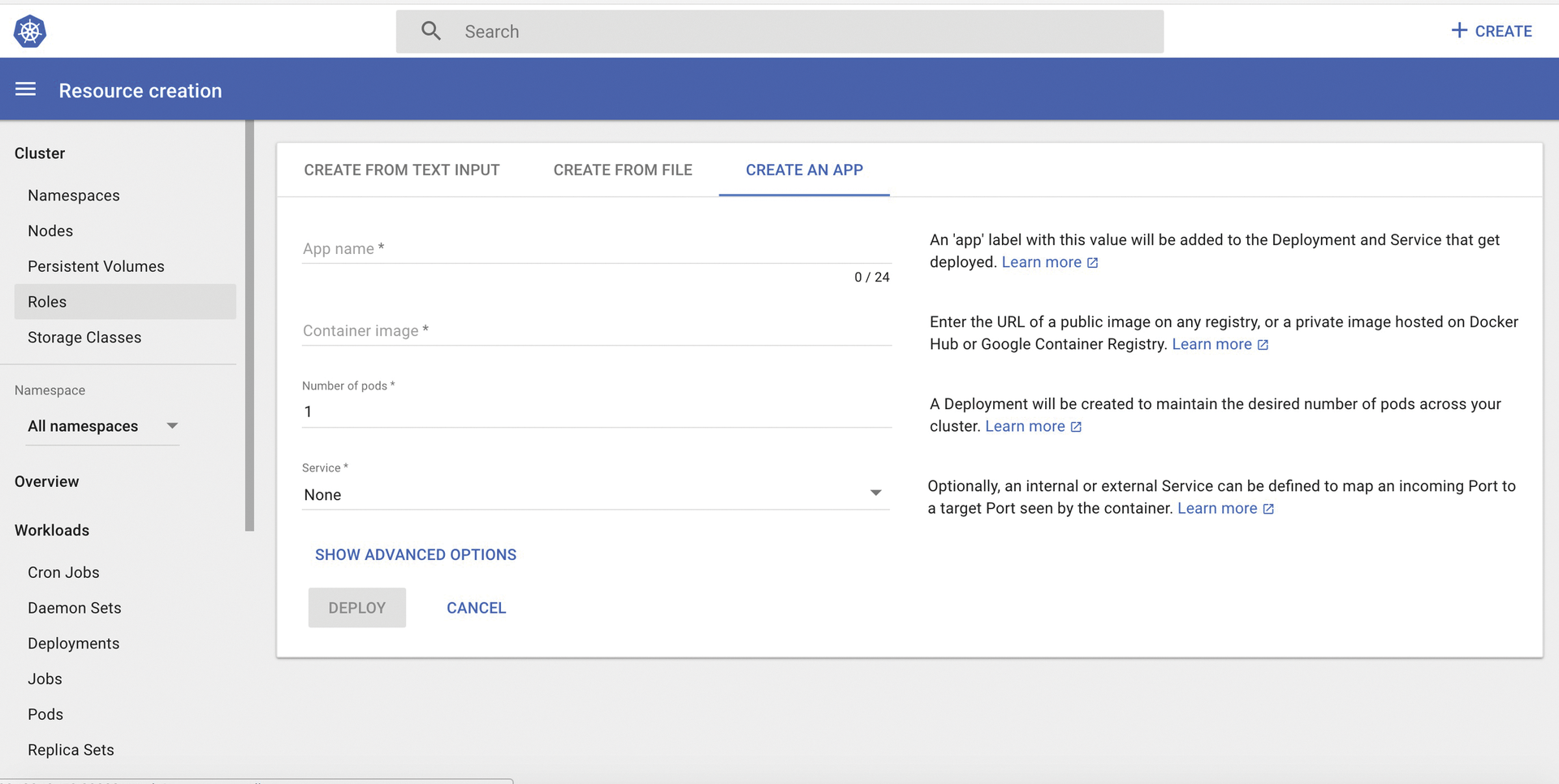

Kubernetes [1] is king of the hill in the orchestration universe. Invented by Google and now under the auspices of the Linux Foundation, Kubernetes has not only blossomed quickly but has led to a kind of monoculture not typically seen in the open source world. Reinforcing the impression is that many observers see Kubernetes (Figure 1) as a legitimate OpenStack successor, although the solutions have different target groups.

Against this background, it is not surprising that Kubernetes' presence sometimes obscures the view of other solutions that might be much better suited to your own use case. In this article, I look to put an end to this unfortunate situation by introducing Kubernetes alternatives Docker Swarm, Nomad, Kontena, Rancher, and Azk and discussing their most important characteristics.

Docker Swarm

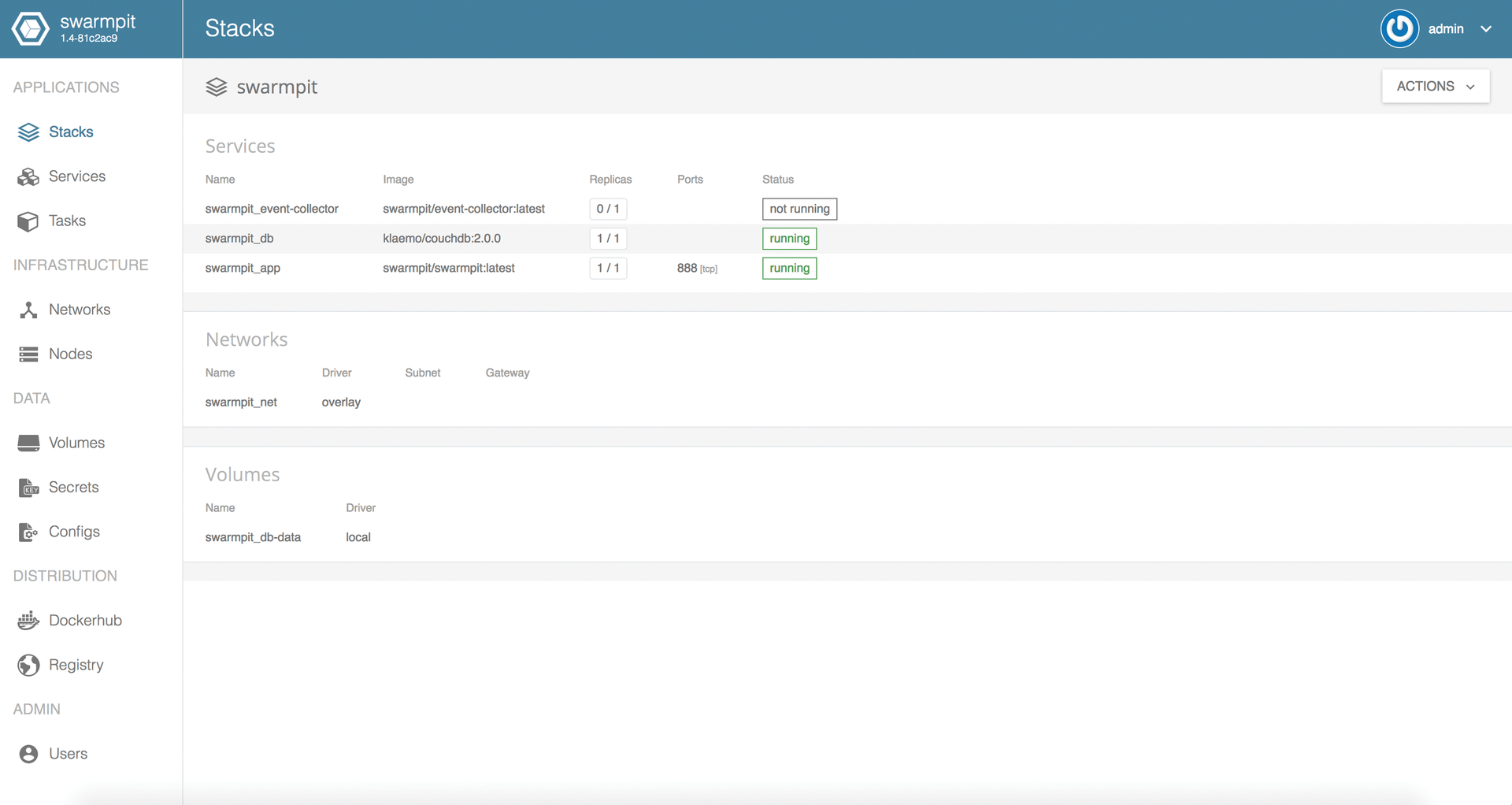

Docker Swarm [2] (Figure 2) must be top of the list of alternatives to Kubernetes. Swarm can be seen as a panic reaction from Docker when the container builders realized that more and more users were combining Docker with Kubernetes.

In such setups, Docker is demoted to a plain vanilla container format that Kubernetes can use. Moreover, not every user is satisfied with the Docker format, as demonstrated by CoreOS (now part of Red Hat), which worked for years on a Docker alternative named Rocket.

The people at Docker headquarters had to be on their guard: If more and more people were to roll out their containers with Kubernetes, it would be conceivable that a new and better container format would replace Docker, and the company would end up empty-handed. Docker Swarm can therefore also be seen as an attempt to tie in customers more tightly to Docker's own technology.

Little wonder, then, that Kubernetes and Docker Swarm are similar in many ways. Kubernetes relies on a pod concept to deploy apps, with a pod being a group of containers co-located on a host. Replicas distributed across the cluster can be created from deployments. Docker Swarm also offers various possibilities to roll out applications as a multicontainer masterpiece distributed across the entire cluster; Docker Compose is often the tool of choice.
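The parallel between the two deployment models can be sketched in a few lines of Python. This is only an illustration, not an excerpt from either project: the service name `web`, the image tag, and the helper function names are assumptions made up for this example, and the resulting dictionaries would still have to be serialized to YAML or JSON and handed to the respective tool.

```python
import json


def kubernetes_deployment(name, image, replicas):
    """Build an apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of pod replicas in the cluster
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def compose_service(name, image, replicas):
    """Build a Compose (version 3 style) definition with one replicated service."""
    return {
        "version": "3.8",
        "services": {name: {"image": image, "deploy": {"replicas": replicas}}},
    }


# Hypothetical example values: three replicas of an nginx web server.
deployment = kubernetes_deployment("web", "nginx:1.25", 3)
stack = compose_service("web", "nginx:1.25", 3)

print(json.dumps(deployment, indent=2))
print(json.dumps(stack, indent=2))
```

In both cases, the replica count is a single declarative value; the orchestrator decides on which hosts the copies end up.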

Being able to scale virtual container environments well is inherent to both Kubernetes and Swarm: Pods scale horizontally, just like services in Docker. Kubernetes, like Docker Swarm, offers a high availability mode out of the box to make both the solution infrastructure and the containers themselves highly available. Load balancers offer additional functionality in Kubernetes; Swarm uses a DNS-based mechanism instead. Despite all the technical differences, at the end of the day, both solutions achieve comparable results.
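To give an idea of what a DNS-based distribution mechanism does in principle, the following toy model hands out the addresses of a service's replicas in rotation. It is a deliberately simplified sketch and not Swarm's actual implementation; the class name, the service name `web`, and the IP addresses are assumptions chosen for the example.

```python
from itertools import cycle


class RoundRobinResolver:
    """Toy resolver that returns the replica addresses of a service in turn."""

    def __init__(self, records):
        # One endless iterator per service name.
        self._iterators = {name: cycle(addrs) for name, addrs in records.items()}

    def resolve(self, name):
        """Return the next address registered for the given service name."""
        return next(self._iterators[name])


# Hypothetical service with three replicas.
resolver = RoundRobinResolver({"web": ["10.0.0.2", "10.0.0.3", "10.0.0.4"]})
answers = [resolver.resolve("web") for _ in range(4)]
print(answers)
```

After the third lookup, the rotation starts over with the first replica, so requests are spread evenly without a dedicated load balancer in front of the service.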

Storage and Network Differences

Significant differences between Kubernetes and Swarm can be seen in storage and network operations. Kubernetes comes with two storage APIs: one to connect existing storage back ends (e.g., Ceph) and one to provide a kind of abstraction layer for storage requests that containers can use to query storage resources. Swarm keeps things a bit simpler and uses the volume API already available in Docker, which allows additional storage technologies to be integrated with plugins, for example.
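The two sides of the Kubernetes storage model can be sketched as a pair of manifests, built here as Python dictionaries. The sketch assumes that the two APIs meant above correspond to PersistentVolume (connecting a back end) and PersistentVolumeClaim (requesting storage). All names, addresses, and sizes are hypothetical, and the `rbd` volume source shown is the older in-tree Ceph plugin; newer clusters would more likely use a CSI driver.

```python
import json

# Back-end side: a PersistentVolume pointing at an existing Ceph RBD image.
# Monitor address, pool, image, and secret name are made-up example values.
persistent_volume = {
    "apiVersion": "v1",
    "kind": "PersistentVolume",
    "metadata": {"name": "ceph-pv"},
    "spec": {
        "capacity": {"storage": "10Gi"},
        "accessModes": ["ReadWriteOnce"],
        "rbd": {
            "monitors": ["10.0.0.1:6789"],
            "pool": "rbd",
            "image": "demo-image",
            "user": "admin",
            "secretRef": {"name": "ceph-secret"},
        },
    },
}

# Consumer side: a claim that only states how much storage is needed,
# without knowing which back end will satisfy the request.
persistent_volume_claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "web-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "5Gi"}},
    },
}

print(json.dumps(persistent_volume, indent=2))
print(json.dumps(persistent_volume_claim, indent=2))
```

The claim never mentions Ceph; that separation is the abstraction layer the containers rely on.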

When it comes to the network, however, it is exactly the other way around: Kubernetes assumes that the whole network is flat; that is, all pods reside on the same logical network segment and therefore can communicate easily.
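What a flat pod network means in terms of addressing can be checked with Python's `ipaddress` module. The sketch assumes a common layout in which each node receives its own /24 slice of one cluster-wide range; the range 10.244.0.0/16 and the number of nodes are example values, not something Kubernetes prescribes.

```python
import ipaddress
from itertools import islice

# Hypothetical cluster-wide pod address range.
cluster_cidr = ipaddress.ip_network("10.244.0.0/16")

# Assume each of three nodes is handed one /24 subnet out of that range.
node_subnets = list(islice(cluster_cidr.subnets(new_prefix=24), 3))

# Take the first usable address of every node subnet as a sample pod IP.
pod_ips = [next(iter(subnet.hosts())) for subnet in node_subnets]

# In a flat network, every pod address belongs to the same logical range,
# no matter which node the pod runs on.
all_in_cluster_range = all(ip in cluster_cidr for ip in pod_ips)

print([str(ip) for ip in pod_ips], all_in_cluster_range)
```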

Swarm, on the other hand, ensures that each node in a Swarm cluster becomes part of an overlay network, so that Docker traffic is routed separately from the rest. Unlike Kubernetes, Swarm also offers encryption for this network, and if you use the Swarm plugin interface, you can even connect complete container network solutions with external software-defined network plugins; an equivalent feature is missing in Kubernetes.

Performance and Stability

Both Kubernetes and Docker Swarm claim to scale far beyond the limits of what is usually required. In the case of Kubernetes 1.6, the solution supports up to 5,000 physical nodes. Swarm can control 1,000 nodes per Swarm manager and supports up to 30,000 containers per Swarm manager instance.

Such a setup should be sufficient most of the time, if only because many companies do not run their Kubernetes or Swarm clusters in multitenant mode but prefer to build a separate infrastructure for each customer.

Technically, the solutions differ very little, even if some functions are implemented or named differently on one side or the other. Whether you rely on Kubernetes or Swarm is a matter of taste.

Nomad

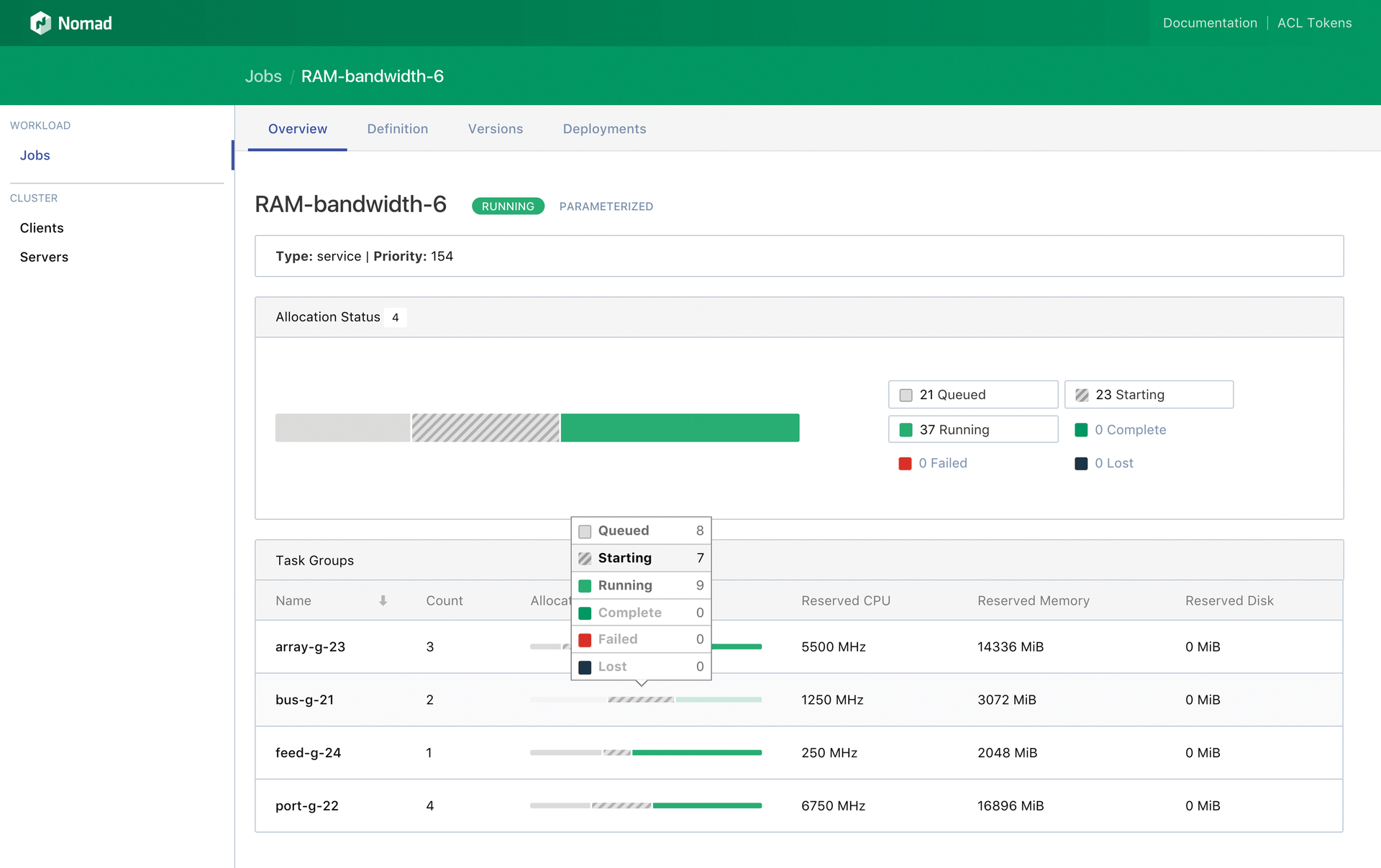

Nomad [3], developed by HashiCorp, takes an approach fundamentally different from Docker Swarm and Kubernetes, even though the product's objectives sound familiar. Right at the top of its website (Figure 3), Nomad states that it seeks to be a tool that effectively manages "clusters of physical machines and the applications running on them." As usual in the container context, it is useful to translate the term "application" to mean an application of any kind, comprising several microcomponents that have to be rolled out together in a predefined manner and in a specific configuration.

Whereas Kubernetes and Swarm employ the container format for this roll-out (i.e., Docker or Rocket), Nomad already starts to differentiate itself from its competitors: In addition to containers, it supports virtualized hardware (i.e., classic virtual machines (VMs)) and standalone applications (i.e., applications that can be used directly without additional components).

Amazingly, Nomad promises a feature scope that can keep pace with Kubernetes and Docker Swarm and comes as a single binary that can be rolled out on any number of hosts without noticeable problems. Nomad does not need any external tools, either. HashiCorp apparently takes advantage of the technology it has already built for clusters in Serf and Consul (e.g., the consensus algorithm used in Consul). The company's experience thus flows into Nomad.

In fact, a Nomad setup is very simple. Although Kubernetes and Swarm also have tools that roll out a complete cluster in a short time, the admin still has to deal with many components. Nomad, on the other hand, is a single binary that bundles all relevant functions.

Managing Microservices

According to HashiCorp, Nomad has one goal above all: Provide a tool to the admin to manage the workload of thousands of small applications well and efficiently across the node boundaries within a cluster.

Importantly, Nomad has no special requirements for the underlying infrastructure: Whether you run Nomad on bare metal in your own data center or on machines hosted elsewhere is irrelevant to the program. Hybrid setups are also no problem, as long as you ensure that the network connection between the Nomad nodes works.

To launch an application, you write a job description that defines all the relevant details, such as the driver to be used (e.g., Docker) and the application-specific data. You have a choice between HashiCorp's own HCL format and JSON, which is, however, less easy to read and write.

At the end, you pass the job description directly into Nomad, which now takes care of processing the corresponding jobs in the cluster by starting any required containers.
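As a rough illustration of what such a job description contains, the following sketch assembles one in the JSON form from Python. The field names follow the JSON job format as far as it is known to the author of this sketch, but should be checked against the Nomad documentation for the version in use; the job ID, group and task names, image, and resource figures are all hypothetical.

```python
import json


def nomad_job(job_id, image, count=1, datacenters=("dc1",)):
    """Build a minimal service job that runs one Docker task per instance."""
    return {
        "Job": {
            "ID": job_id,
            "Name": job_id,
            "Type": "service",
            "Datacenters": list(datacenters),
            "TaskGroups": [
                {
                    "Name": "cache",
                    "Count": count,  # how many instances of the group to run
                    "Tasks": [
                        {
                            "Name": "redis",
                            "Driver": "docker",  # the driver that starts the task
                            "Config": {"image": image},
                            "Resources": {"CPU": 500, "MemoryMB": 256},
                        }
                    ],
                }
            ],
        }
    }


# Hypothetical example: two instances of a Redis container.
job = nomad_job("example", "redis:7", count=2)
print(json.dumps(job, indent=2))
```

The description says what should run and how often, but not where; placement is left to the scheduler.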

Things become trickier when you roll out different services within the cluster that need to access each other. The problem is not new in itself: For example, if you want to run one database and many web server setups that access the same database in your cluster, the web servers need to know how to reach the database. However, you cannot configure this statically, because the decision as to where the database runs is only made when Nomad processes the job.

In this case, Nomad allows you to join forces with Consul: The finished job creates a corresponding service definition in Consul, which you then reference in the web server application.
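On the consuming side, a web server (or a small helper next to it) could turn the answer of a Consul catalog lookup into a list of database endpoints, roughly as sketched below. The sample data imitates the shape of a catalog response and is entirely made up; the function name is hypothetical, and no request is actually sent to Consul here.

```python
def service_endpoints(catalog_entries):
    """Extract (host, port) pairs from Consul-style catalog entries.

    If a service did not register an address of its own, fall back to
    the address of the node it runs on.
    """
    endpoints = []
    for entry in catalog_entries:
        host = entry.get("ServiceAddress") or entry["Address"]
        endpoints.append((host, entry["ServicePort"]))
    return endpoints


# Made-up sample in the shape of a catalog lookup for a database service.
sample_response = [
    {"Node": "node-1", "Address": "10.0.0.11", "ServiceAddress": "", "ServicePort": 5432},
    {"Node": "node-2", "Address": "10.0.0.12", "ServiceAddress": "10.0.1.7", "ServicePort": 5432},
]

endpoints = service_endpoints(sample_response)
print(endpoints)
```

Because the lookup happens at run time, the web servers keep working even if the scheduler places the database on a different node next time.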

Drivers and Web UI

Nomad defines a driver as any interface through which it starts its jobs. It has a driver for Docker, one that connects LXC, and a third one that knows how to use Qemu and KVM. As you will quickly notice, Nomad really only sees itself as a scheduling tool, unlike Kubernetes and Docker Swarm. For its applications, it relies 100 percent on the functions offered by the drivers. Nomad does not offer its own network or storage functions, all of which come from the docked partner (e.g., Docker).

As you would expect, Nomad offers a RESTful API into which you can dump jobs directly. The API also forms the back end for a graphical user interface, which is included in Nomad's open source version. Additionally, Nomad understands how to deal with regions out of the box, so there's nothing to prevent multisite solutions.
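Submitting a job over the API could look roughly like the following sketch, which only prepares the HTTP request and does not send it. It assumes a local agent on Nomad's default HTTP port 4646 and the `/v1/jobs` endpoint; the address constant, the function name, and the tiny payload are hypothetical, and a real setup would probably also need an ACL token and TLS.

```python
import json
import urllib.request

# Assumption: a Nomad agent listening locally on the default HTTP port.
NOMAD_ADDR = "http://127.0.0.1:4646"


def build_submit_request(job_payload, addr=NOMAD_ADDR):
    """Prepare (but do not send) an HTTP request that registers a job."""
    body = json.dumps(job_payload).encode("utf-8")
    return urllib.request.Request(
        url=f"{addr}/v1/jobs",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical, heavily shortened job payload.
request = build_submit_request({"Job": {"ID": "example", "Name": "example"}})
print(request.get_method(), request.full_url)

# Sending would then be a matter of:
#     with urllib.request.urlopen(request) as response:
#         print(response.read())
```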

When it comes to scalability, Nomad doesn't need to shy away from comparisons with big competitors: According to the developers, Nomad setups with more than 10,000 nodes are in live operation today, and they work reliably. Given that Nomad implies a significantly lower overhead than Kubernetes and Docker Swarm, this statement is credible, even without having tested it myself.

Nomad is not only available as open source. HashiCorp also offers Pro and Premium versions of the software, which are explicitly aimed at customers in the enterprise environment. In addition to the functions of the open source variant, the Pro version offers namespaces that allow a Nomad cluster to be split logically. Autopilot, which takes care of rolling upgrades in the open source version, is available in an advanced version in Pro; it updates all the servers on the fly without downtime. Silver Support (nine hours a day/five days a week with a corresponding service-level agreement (SLA)) is also included.

If you choose the Premium version, you receive additional resource quotas and a policy framework based on Sentinel, along with Gold Support (24/7 with an SLA). The HashiCorp website does not advertise any prices; however, if you request a Pro or Premium demo, price information is delivered.

All told, you can say that Nomad is a light-footed Kubernetes alternative that is completely sufficient for the use cases of many companies, so if you want to run microapplications in containers, you could do worse than taking a good look at Nomad in advance.

Kontena

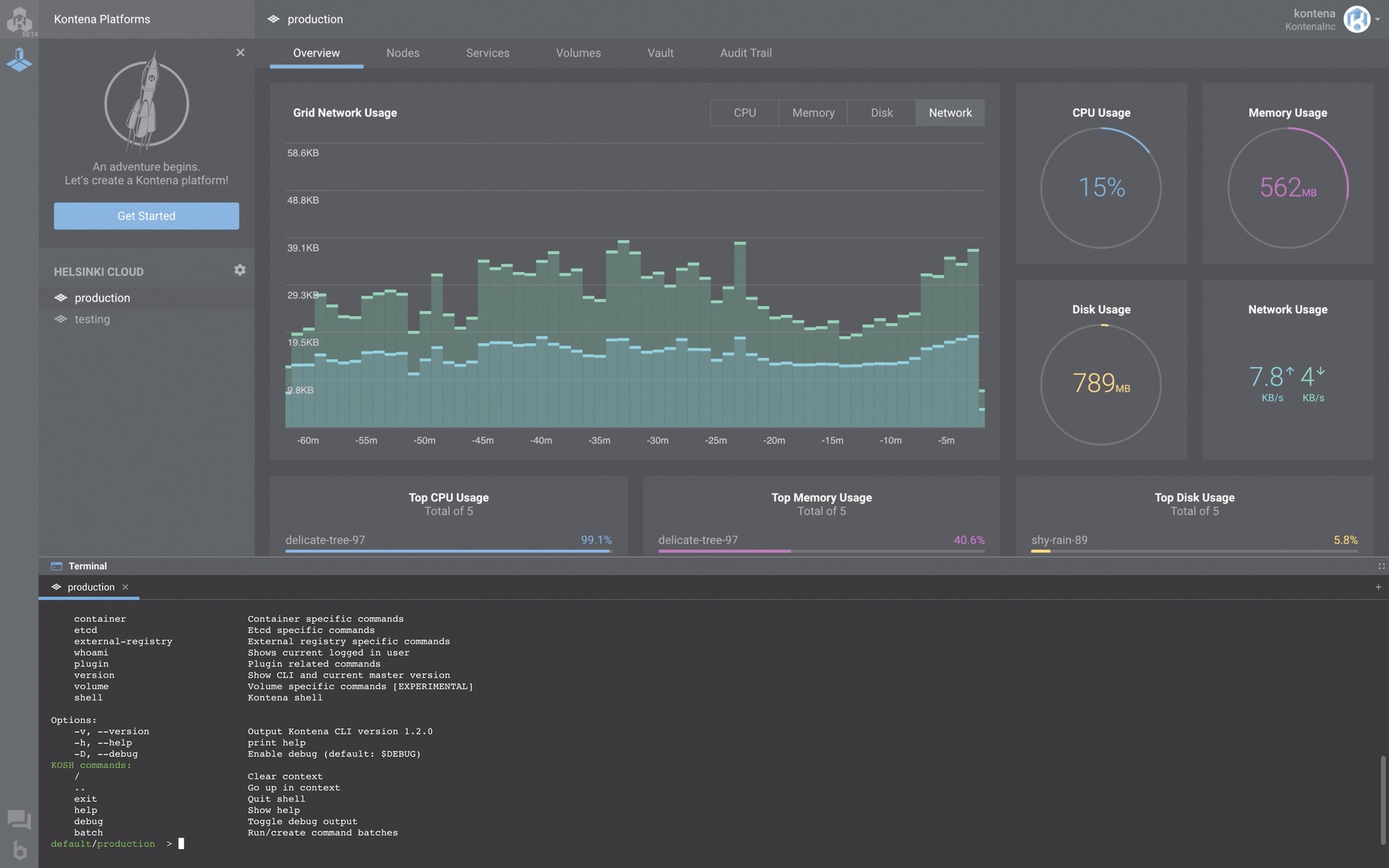

Whereas Nomad relies on homeopathic dosages, Kontena (Figure 4) lays it on thick without apology. The company from New York and Helsinki presents its platform as a complete alternative to Kubernetes and also claims an equal feature set. Architecturally, Kontena [4] is similar to Kubernetes in many ways: The Kontena node, which can be bare metal or VMs in a cloud environment, runs the Kontena agent, which is controlled by a central Kontena master instance.

Kontena puts several layers over the target nodes: First, it creates a Weave-based overlay network [5], allowing all parts of the setup to communicate across hosts, data centers, and networks.

Additionally, Kontena includes a DNS-based service discovery solution, which is part of the standard repertoire of container solutions and does the job already described in detail for Nomad and Consul: letting services find each other.

One core component of the software is the Kontena orchestrator, which takes care of distributing different containers to the nodes, in single configuration or as a team, and connecting them to each other accordingly. The Kontena workload model differs very little from that of Kubernetes, Docker Swarm, or most other container solutions.

The number of drivers that can be used to control external products is particularly impressive with Kontena. For example, Kontena has a connection for external storage that supports both physical storage in your own data center and cloud storage services from major cloud providers.

The Weave solution used for the virtual network between containers offers features such as multicast support and the ability to integrate VMs directly with clouds through a peer-to-peer feature. Kontena sees itself as a solution for hybrid environments comprising on-premises services and cloud offerings.

Attention to Detail

The many small functions and the various gimmicks the developers have built into Kontena are great fun. If you need certificates for your containers, for example, you will be pleased to hear about the built-in connection to Let's Encrypt, which makes child's play of issuing certificates. A complete system for role-based access control (RBAC) makes it possible to control access to accounts at a granular level.

OpenVPN is on board to provide customers with a secure connection to the internal network. Load balancers, which are included with almost all container orchestrators, can be flexibly configured in Kontena (e.g., as SSL terminators), and they also enable rolling updates in combination with various other services.

The possibilities to integrate your applications into accounts in the form of containers are also extensive. Anyone using Docker Compose has an interface in Kontena to which Compose can dock directly. Application definitions can also be imported manually. Once an application is available in Kontena, the environment automatically scales it horizontally as required on the basis of various parameters.

Stacks based on running applications are also possible: These are ready-made, pre-packaged, and reusable collections of services (i.e., a kind of template). For example, anyone who has successfully assembled a web server setup in Kontena from individual parts can make a stack out of it and reproduce the setup as often as desired.

Good Connection to the Outside

The connection to external services is also exemplary: In addition to APIs, which are available as a matter of course, Kontena integrates various monitoring tools, as well as classic logging and metrics solutions such as Fluentd or StatsD. Anyone who needs an audit trail for compliance reasons will also receive it free of charge in Kontena. Your own image registry, in combination with a very extensive web user interface (UI), completes the offer.

Kontena itself is free; however, you can purchase various support packages from the manufacturer, some of which also include deployment on your own infrastructure, as well as training and business support. Details can be found on the website [4].

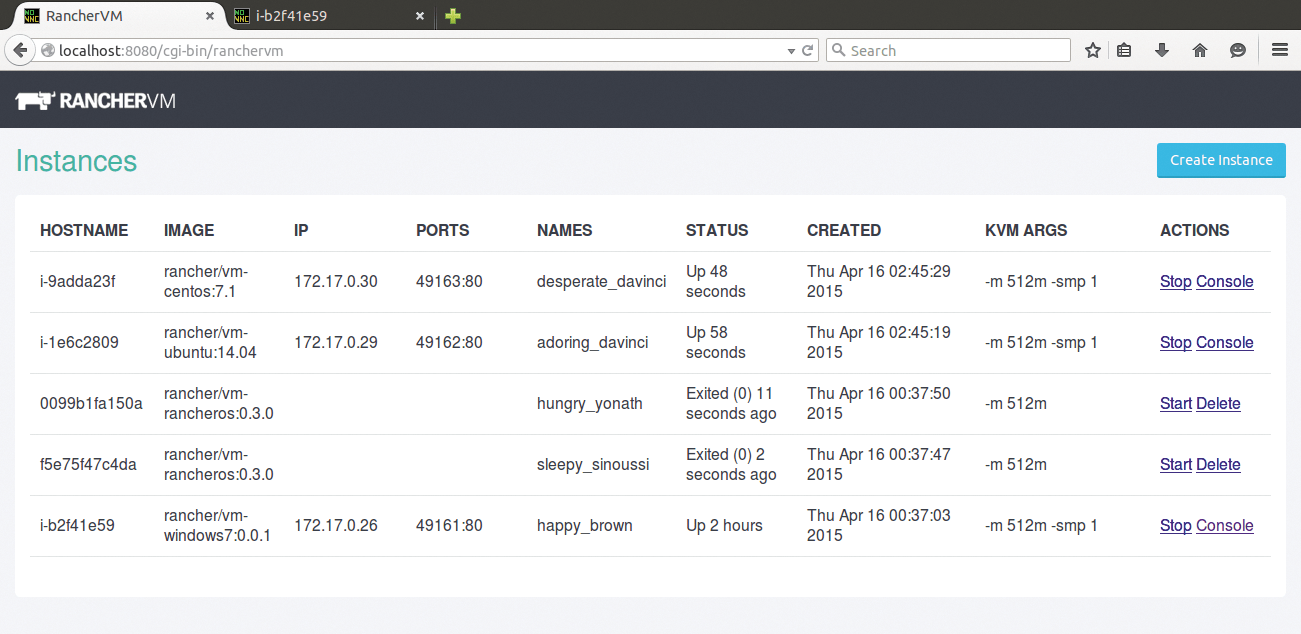

Rancher

Rancher [6] is not, strictly speaking, an alternative to Kubernetes, because it contains a complete Kubernetes distribution. According to the manufacturer, however, the product (Figure 5) expands Kubernetes at three important points: It is designed to enable efficient and easy control of different Kubernetes installations on different platforms, it adds a management component for controlling the workload of applications, and it provides enterprise support for Kubernetes.

The management layer contains a central control plane from which several Kubernetes clusters can be managed after the admin has rolled them out directly with Rancher. Rancher starts complete Kubernetes instances in various public clouds (e.g., AWS, Azure, Google Cloud Platform) at the click of a mouse.

Because Rancher also has its own rights management, user management can be reliably and consistently implemented across all Kubernetes instances. Additionally, various management functions exist for monitoring, alerting, and trending (MAT) of various Kubernetes clusters.

Application management enables users to roll out their micro-architecture applications centrally from Rancher to different clusters, and admins can create user projects that include different namespaces within the Kubernetes clusters. The package also includes comprehensive continuous integration and continuous delivery pipelines, enabling the development of Rancher-based applications.

Support is almost self-explanatory: Rancher itself is open source, but the manufacturer offers commercial support for the solution. As is more or less usual nowadays, you cannot find prices on the Rancher website; you have to contact the software manufacturer.

Rancher provides more details about the scope of support: 24/7 support is available, and if you need help with cluster setup, you can get it on request. Companies thinking about introducing Kubernetes should at least get to know Rancher – especially if the goal is to have many small Kubernetes-based setups spread across multiple locations or platforms.

Azk

The last candidate in this overview is Azk. Unlike the tools presented so far, it is aimed exclusively at developers. Azk does not seek to turn the big container wheel; instead, it looks to allow developers to create a container-based working development environment in the shortest possible time using local resources. As strange as this sounds, in many companies, admins operate huge Kubernetes platforms just to give in-house developers a working development platform. In these cases, Azk is a very welcome alternative [7].

Azk works in a very simple way: In a template file with JavaScript syntax, a developer defines the desired environment. Under the hood, the program uses Docker, and on its website [8], Azk offers a large number of preconfigured Docker images aimed in particular at developers of web applications. The developer then references the images in azkfile.js. All parameters required for the operation of the containers are also specified in the file.

At the end of the day, you just call Azk with the appropriate template; after the program has finished its work, the development environment is ready. If you need the same environment again some time later, you simply call Azk again and reach your destination in a very short time.

Achieve Your Aims Quickly

Azk's functionality is not comparable to that of the large container orchestrators, and that is intentional: Its developers wanted to avoid exactly that kind of complexity. Instead, Azk is firmly rooted in development. Once you have familiarized yourself with the syntax of the azkfile.js manifest file, you will quickly see results without running a complete and huge Kubernetes cluster with all its components.