How to back up in the cloud

Rescue Approach

Backups in conventional setups are clear-cut in most cases. If you order an all-encompassing, no-worries package from a service provider, you can naturally assume that the provider will also take care of backups. The provider then has the task of backing up all user data such that it is quickly available again in the event of data loss. Some technical refinements are required, such as incremental rolling backups for cases in which a specific database state needs to be restored. With this model, the customer only needs to trigger a recovery action when necessary.

This principle no longer works in clouds, because the classical supplier-customer relationship often no longer exists. From the customer's perspective, the provider only supplies the platform; the website running in the cloud may have been programmed by an external company, and you are actually responsible for running it yourself. In the early years of the cloud hype, many companies learned the hard way that Ops also means taking care of backups, which raises the question: How can components running in the cloud be backed up as efficiently as possible?

Backing up is also challenging from the platform provider's point of view, because the provider has to prepare for more than just the failure of individual components. Backups are as much about protection against notorious fat-fingering (i.e., the accidental deletion of data) as about classic disaster recovery: How can a new data center be restored as quickly as possible if a comet hits the old one?

To begin, it helps to understand how the provider can ensure that backups are created efficiently and reliably, so that a fast restore is possible.

The answer is a counter-question: What has to be in a backup for the provider to be able to restore the data in as short a time as possible? Whereas every single file used to end up in some kind of backup, today it makes sense to differentiate carefully. In cloud setups in particular, automation is usually a fundamental part of the strategy, which brings some new aspects to backups.

Automation is Key

When you plan and build a cloud setup, you cannot afford to do without automation. Smaller conventional setups might still be manageable by hand, but in huge cloud setups comprising many servers and extensive infrastructure, manual administration is obviously useless. Even a setup that starts very small will need automation as soon as it scales horizontally, which is exactly the purpose of clouds. In short, anyone who builds a cloud will want to automate right from the start.

A high degree of automation also means that individual components of the setup can be restored "from the can" in most cases. If the computer hardware dies or a hard disk gives up the ghost, the operating system can be restored from the existing automation without any problems after the defective components have been replaced. There is no need to back up individual systems in such setups.

Keeping the Boot Infrastructure Up and Running

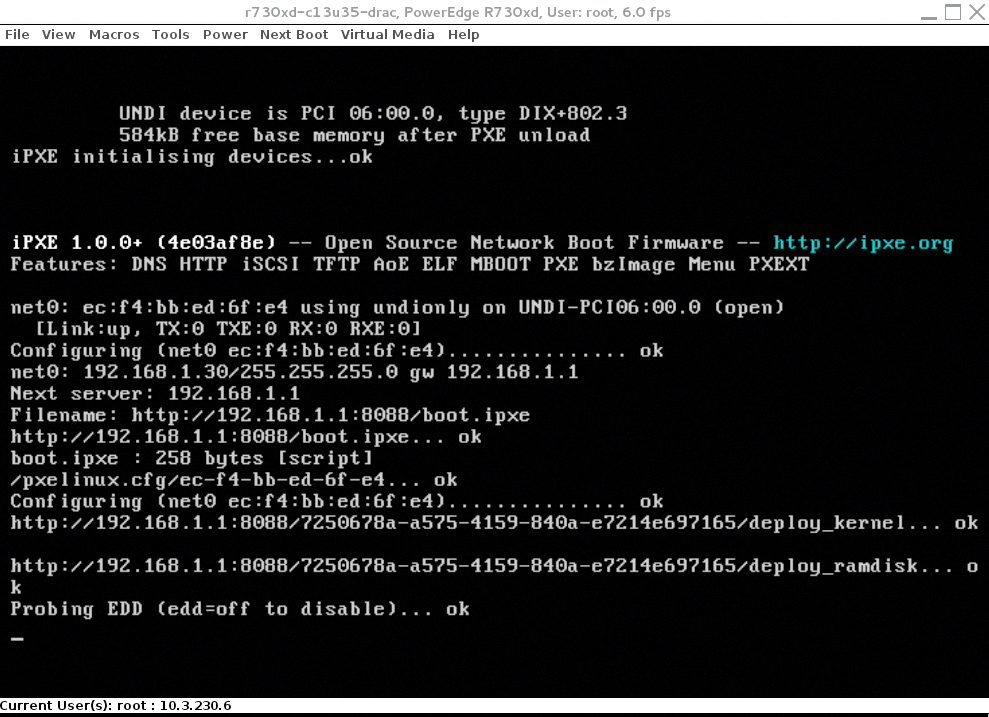

For the process to work as described, several aspects of the operational strategy must be in place. First, the boot infrastructure needs to be available; it offers services such as FTP, NTP, DHCP, and TFTP (Figure 1). New systems can only be installed if those services do their job. Within a data center, the boot infrastructure (usually a single server is sufficient) should ideally be redundant, if necessary with classical tools like Pacemaker.

If a provider wants to achieve geo-redundancy, it does not hurt to operate at least the boot infrastructure as a hot standby at a second data center, because if worst comes to worst, a functioning boot infrastructure is the nucleus of a new setup.

Therefore, the boot infrastructure is one of the few parts of the underlay that require regular backups, stored at a different location. Note that the boot infrastructure itself should also be automated to the greatest extent possible: NTP, TFTP, DHCP, and so on can easily be rolled out by Ansible to localhost.

If the required Ansible roles are written in-house rather than pulled from somewhere on the web, they are mandatory parts of the underlay backup. Even if you use ready-made roles from the web, be sure to back up the Ansible playbooks and the configuration files of the individual services, because these are specific to the respective installation. A Git repository is the obvious choice for all of this; ideally, it should be synchronized to another location outside the setup.
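As a complement to the Git repository, a timestamped archive of the boot infrastructure's playbooks and service configurations is easy to ship to another site. The following is a minimal sketch using only Python's standard library; the paths in `DEFAULT_PATHS` and the function name `archive_boot_config` are hypothetical and need to be adapted to wherever your playbooks and DHCP, NTP, and TFTP configurations actually live.

```python
import tarfile
import time
from pathlib import Path

# Hypothetical paths: adjust to your own layout of playbooks and service configs.
DEFAULT_PATHS = [
    "/etc/ansible",   # locally written playbooks and roles
    "/etc/dhcp",      # DHCP server configuration
    "/etc/chrony",    # NTP configuration (chrony, Debian layout assumed)
    "/srv/tftp",      # TFTP boot files
]

def archive_boot_config(paths, target_dir):
    """Pack the given paths into a timestamped .tar.gz inside target_dir.

    Paths that do not exist are skipped. Returns the path of the archive.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive = target / f"boot-infra-{stamp}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        for p in map(Path, paths):
            if p.exists():
                # Store paths relative to / so the archive unpacks cleanly anywhere.
                tar.add(p, arcname=str(p).lstrip("/"))
    return archive

if __name__ == "__main__":
    # Copy the resulting file to a second location, e.g., the standby data center.
    print(archive_boot_config(DEFAULT_PATHS, "/var/backups/boot-infra"))
```

The archive is only as useful as its off-site copy, so the output directory should be synchronized to the second data center just like the Git repository.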

If the boot infrastructure can be restored quickly in the event of a disaster, a functional platform can be bootstrapped from it in no time, and operations can resume. However, a setup raised this way at another location would only be the bare bones; the data would still be missing.

Persistent Data

As is often the case in a cloud context, it is essential to distinguish between two types of data. On the one hand, the cloud setup contains the metadata of the cloud itself, including the full set of information about users, virtual networks, virtual machines (VMs), and comparable details. On the other hand, there is payload data, that is, data that customers store in their virtual area of the cloud. Many backup strategies are available, but not all of them make sense.

Metadata is easy to back up with conventional means. Most cloud solutions store their own metadata in databases in the background anyway, and backing up MySQL, the default database in OpenStack out of the box, is a long-solved problem.

If you regularly back up the MySQL databases of the individual services, only a few special cases in OpenStack need separate treatment. Some software-defined networking (SDN) solutions, for example, use their own databases. Another occasionally forgotten topic is the operating system images in OpenStack Glance: If they do not reside on central storage, or if they would have to be retrieved from the web again, you should back them up as well.
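Backing up the service databases one by one keeps restores granular: A broken Neutron database can be restored without touching Nova. The sketch below only builds the `mysqldump` command lines; the database names in `SERVICE_DATABASES` are an assumption based on common OpenStack deployments, so check `SHOW DATABASES;` on your own controller and append any SDN databases. Credentials are assumed to come from a client option file such as `~/.my.cnf`.

```python
import shlex
from datetime import date

# Assumed database names; the real list depends on which services you run.
SERVICE_DATABASES = ["keystone", "glance", "nova", "nova_api", "neutron", "cinder"]

def dump_commands(databases, backup_dir, day=None):
    """Build one mysqldump command (as an argument list) per service database.

    --single-transaction dumps InnoDB tables from a consistent snapshot
    without locking them for the duration of the dump.
    """
    day = day or date.today().isoformat()
    commands = []
    for db in databases:
        commands.append([
            "mysqldump",
            "--single-transaction",
            "--databases", db,
            f"--result-file={backup_dir}/{db}-{day}.sql",
        ])
    return commands

if __name__ == "__main__":
    for cmd in dump_commands(SERVICE_DATABASES, "/var/backups/mysql"):
        # Printed rather than executed; subprocess.run(cmd, check=True) would run it.
        print(shlex.join(cmd))
```

Because `--databases` is used, each dump also contains the `CREATE DATABASE` statement, so a single file is enough to recreate one service's database on a fresh server.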

If you use Ceilometer, don't forget its database when backing up; otherwise, the historical records for resource accounting are gone, and in the worst case, that could be not only a technical problem but also, and above all, a legal one.

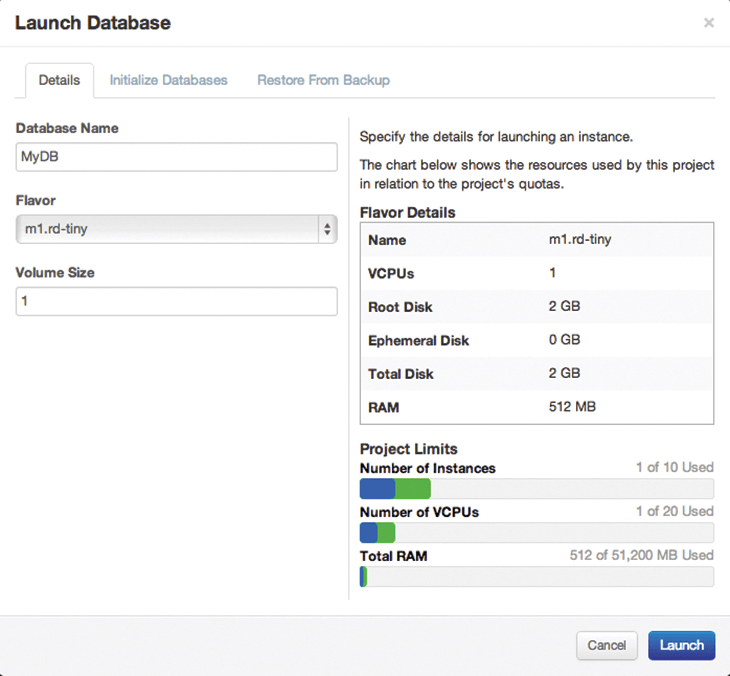

Cloud services that create backups for customers themselves also deserve special attention. This option is particularly common for managed services such as Database as a Service (DBaaS). If you implement DBaaS in OpenStack with Trove, you can have your databases backed up automatically at regular intervals (Figure 2). Providers have a choice: Either communicate clearly to customers that they are responsible for storing these backups permanently themselves, or include the backups of those services in the provider's own backups.

Central Storage

Payload data is a very different matter. For this data, many companies rely on central storage solutions such as Ceph. Although Ceph makes it possible to build huge, scalable storage quickly and easily, it also leaves you with a juggernaut when it comes to backups. Anyone coming from the old world of conventional setups often wonders how to include this kind of storage in a backup, given that large Ceph setups have several petabytes of capacity.

The answer is very simple: Either install a second storage solution of the same size next door and mirror the master regularly, or do not protect payload data at all, or protect it only selectively for a surcharge. At first glance, a 5PB Ceph instance needs another 5PB cluster for the backup. If in doubt, you can use a trick based on erasure coding instead: With 5PB of gross capacity and double redundancy (three replicas) enabled in Ceph, only about 1.7PB are effectively available.

In a backup, replication is theoretically not necessary at all, so you could reduce the backup capacity to about 2PB and enable erasure coding, which works similarly to RAID 5. At the end of the day, a second storage solution is still necessary; although it does not need 5PB of capacity, it must be able to hold a large part of the data.
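The capacity figures follow from two overhead factors: A replicated pool stores every object once per replica, whereas an erasure-coded pool with `k` data chunks and `m` coding chunks needs `(k + m) / k` times the net capacity. The short calculation below reproduces the 5PB example; the profile `k=5, m=1` is an assumption chosen to mirror the RAID 5 comparison (a single coding chunk tolerates the loss of only one chunk), and it ignores the free-space headroom a real Ceph cluster needs.

```python
def usable_capacity(raw_pb, replicas):
    """Net capacity of a replicated pool: each object is stored `replicas` times."""
    return raw_pb / replicas

def raw_for_erasure_coding(net_pb, k, m):
    """Raw capacity an erasure-coded pool needs to hold net_pb of data.

    Each object is split into k data chunks plus m coding chunks,
    so the storage overhead factor is (k + m) / k.
    """
    return net_pb * (k + m) / k

net = usable_capacity(5.0, replicas=3)               # "double redundancy": three copies
backup_raw = raw_for_erasure_coding(net, k=5, m=1)   # single parity, comparable to RAID 5
print(f"net data: {net:.2f} PB, backup cluster raw: {backup_raw:.2f} PB")
# → net data: 1.67 PB, backup cluster raw: 2.00 PB
```

A profile with more coding chunks, such as `k=4, m=2`, would raise the backup cluster's raw requirement to 2.5PB in exchange for surviving two lost chunks.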

Provider Backups?

From the provider's point of view, the approach taken by large companies like Amazon and Microsoft (Azure) is far more elegant: They see backups as the customer's responsibility and, as usual, warn that customers should not rely on anything in the cloud. If you book services on Amazon Web Services (AWS), you know that you are responsible for backing up your own data. In case of doubt, the data could simply be gone, and Amazon will not assume any responsibility, even if an error on its side contributed to the data loss.

Whether this approach is sustainable depends on several factors. Amazon, of course, is big enough to cope with the loss of large customers without any problems, even if such a loss is naturally not desirable. The structure of your own clientele, however, plays an important role.

If, as a provider, you want to leave backups to your cloud customers, you need to communicate this loudly and clearly at every opportunity. A paragraph buried deep in the terms and conditions of your platform is unlikely to help, because for many customers, backups by the provider are so normal that they would not even dream of them not happening. Customers must understand that taking responsibility for their own backups is part of migrating to the cloud.

The Customer's Point of View

The situation is different from the customer's point of view, even if the fundamental questions are not so different from those the platform admins face. One thing is sure: The degree of automation should be as high as possible, not only in the cloud underlay, but also where customers set up their own virtual worlds. The requirements here are even tougher, because classical automation is joined by orchestration.

Orchestration sits one layer below classical automation: It takes advantage of the fact that the virtual hardware in a cloud can be controlled through the cloud environment's API. It is easy to imagine how long it would take to click together a complete virtual environment in AWS or OpenStack by hand.

Admins start at square one, first creating a virtual network that connects over a router to the provider's network to gain Internet access. Next come the individual VMs of the platform, which also need to be set up and configured after they have booted successfully.

In a virtual environment with hundreds of VMs, this process would take forever. Orchestration solves the problem: The admin defines the desired state of the virtual environment in a template language, and when the template is executed, the cloud creates all the resources described in it in the correct order.
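"In the correct order" essentially means a topological sort over the dependencies between resources. The following sketch illustrates only that idea with Python's standard `graphlib` module (Python 3.9 or later); the `template` dictionary is a hypothetical, heavily simplified stand-in for a real Heat or CloudFormation template, and `creation_order` is an illustrative name, not part of any orchestration tool.

```python
from graphlib import TopologicalSorter

# Hypothetical, simplified "template": each resource lists the resources it
# depends on. Real templates carry far more detail; only the dependencies matter here.
template = {
    "network": [],
    "subnet": ["network"],
    "router": ["subnet"],
    "db-vm": ["subnet"],
    "web-vm-1": ["subnet", "db-vm"],
    "web-vm-2": ["subnet", "db-vm"],
    "load-balancer": ["web-vm-1", "web-vm-2", "router"],
}

def creation_order(resources):
    """Return an order in which every resource comes after all of its dependencies.

    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(resources).static_order())

if __name__ == "__main__":
    for name in creation_order(template):
        print("create", name)  # a real orchestrator would call the cloud API here
```

`TopologicalSorter` also offers `get_ready()` and `done()`, which hand out every resource whose dependencies are satisfied; an orchestrator can use that pattern to create independent resources, such as the two web VMs, in parallel.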

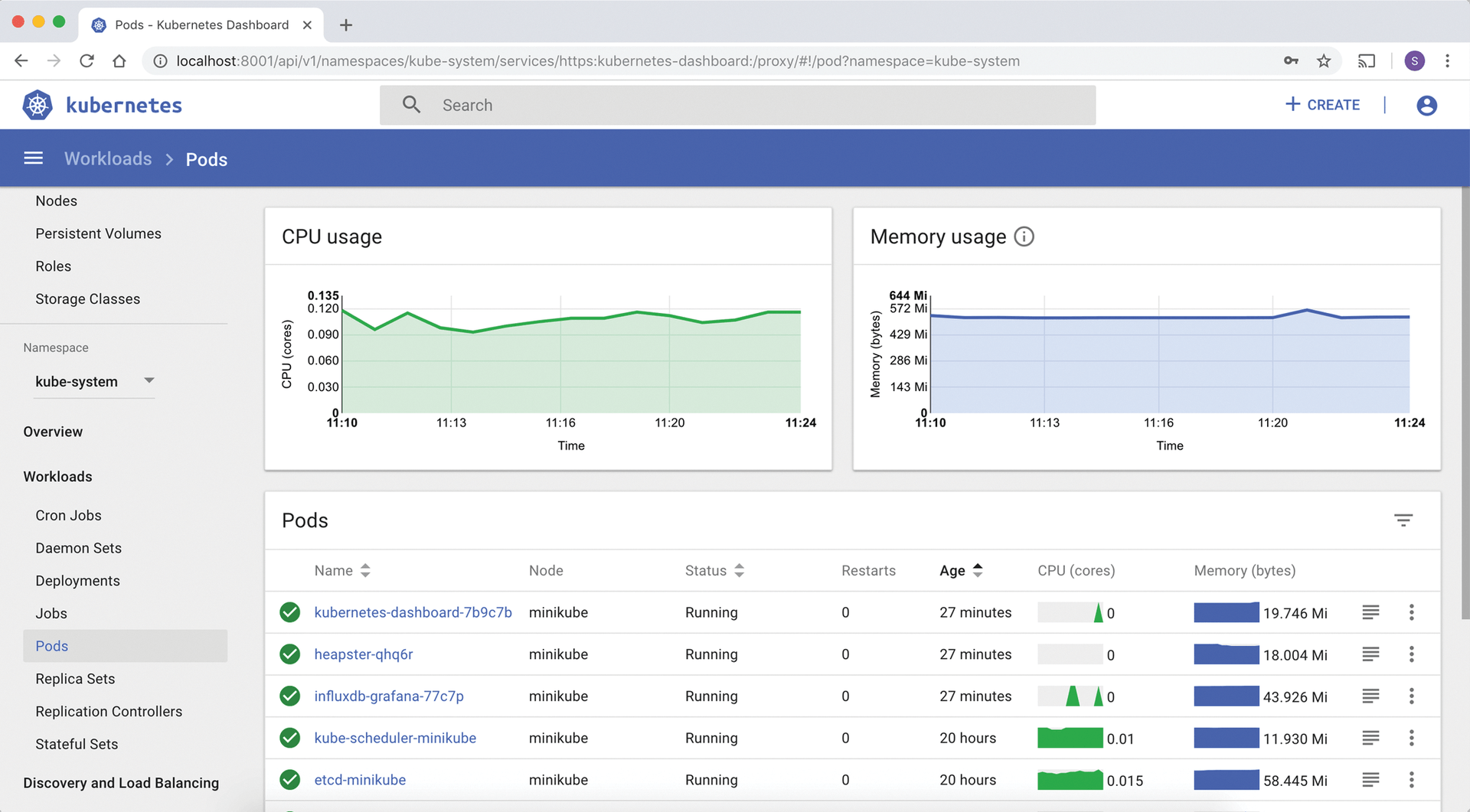

Users have even more automation options. Rather than building a specially prepared operating system (OS) image for every role, including one for a self-managed load balancer, they can rely on the platform's own services from the outset: Specific services such as load balancers (Load Balancing as a Service, LBaaS), databases, and other servers can be provided by the cloud platform itself (Figure 3). Basically, the higher the percentage of a virtual environment that can be reproduced from templates, the easier the backup becomes.

Keep in mind that nobody wants to back up, but everyone wants to restore. Admins who use the native features of a platform will typically find the restore process far more convenient than with DIY solutions. For restores, it is usually fine to start a new instance via the respective service and point to an existing backup – all done.

Admins who consistently use their cloud platform's LBaaS are pleased to see new instances automatically find their way into the load balancer after starting up. The less work that restoring the individual instances takes, the more fun admins have.

Identify Persistent Data

The be-all and end-all, of course, is identifying the data that needs to be backed up in the first place. If your setup is built in an exemplary way and is genuinely cloud-ready, you will soon be done. In the best case, the data comprises only the contents of the database that serves as the back end for your application. Although this is something of an ideal world, it is a strategy worth considering.

If you are using AWS or OpenStack clouds, you can use your own images. Ideally, you build them with a continuous integration and continuous delivery (CI/CD) system such as Jenkins that runs independently of the virtual environment, so the images can be rebuilt quickly if something goes wrong. Alternatively, you can use the standard images provided by distributors, although it is advisable to have a central automation host from which new VMs can be provisioned quickly.

A setup of this kind sources as many of the required services from the cloud as possible. Running your own VMs for databases and the like is a thing of the past; users only need to make sure they use the DBaaS backup function and regularly copy the backups to a safe place. The recommended approach is to run the respective cloud's command-line tools on an external system and trigger them with system timers (e.g., to download the backups). At best, the application itself comes as a container or can be checked out from Git and quickly put into operation.
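A job of this kind, started by a systemd timer or cron on a machine outside the cloud, can be sketched as follows. The sketch assumes that the DBaaS writes its backups to an S3 bucket and that the AWS CLI is installed and configured; the bucket name, prefix, local directory, and the 26-hour threshold are hypothetical placeholders. It also assumes that file modification times roughly reflect when a backup arrived. The script exits with an error if no fresh backup shows up, so a silently failing job becomes visible in monitoring.

```python
import subprocess
import sys
import time
from pathlib import Path

# Hypothetical names: replace with the bucket/prefix your DBaaS backups land in.
BUCKET = "example-dbaas-backups"
PREFIX = "nightly"
LOCAL_DIR = Path("/srv/offsite-backups/db")
MAX_AGE_SECONDS = 26 * 3600  # assumed: daily backups plus some slack

def sync_command(bucket, prefix, dest):
    """AWS CLI call that downloads backup objects not yet present locally."""
    return ["aws", "s3", "sync", f"s3://{bucket}/{prefix}", str(dest)]

def newest_backup_age(directory, now=None):
    """Age in seconds of the most recently modified file, or None if there is none."""
    now = time.time() if now is None else now
    mtimes = [p.stat().st_mtime for p in Path(directory).rglob("*") if p.is_file()]
    return now - max(mtimes) if mtimes else None

if __name__ == "__main__":
    LOCAL_DIR.mkdir(parents=True, exist_ok=True)
    subprocess.run(sync_command(BUCKET, PREFIX, LOCAL_DIR), check=True)
    age = newest_backup_age(LOCAL_DIR)
    # Non-zero exit status lets the timer unit or cron mail report the failure.
    if age is None or age > MAX_AGE_SECONDS:
        sys.exit("no fresh database backup found")
```

Other clouds provide equivalent command-line tools; only `sync_command` would need to change, while the freshness check stays the same.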

If you build a setup that is cloud-ready in this way, the backup requirements are dramatically lower than in conventional setups, because the volume of setup-specific data is far smaller.

Reality Is More Complex

The scenario described here is, of course, the ideal for applications in the cloud and, in practice, is only realistic for people who tailor a virtual environment to fit a cloud. Anyone who migrates a conventional setup to the cloud without shaking off traditional standards in the process will naturally also have to deal with conventional backup strategies.

Still, a few tricks and tips help in such scenarios. Even if your own application is not cloud-ready, it makes sense to strictly separate user data from generic data. If you move a database from a physical system to a VM, always make sure that the folder with the database files is on a separate volume; then, you only need to include that volume in the backup instead of the whole VM. If something goes wrong, the volume content can be recovered quickly (e.g., from a snapshot), without any laborious manual recovery process.
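Whether the database directory really sits on its own volume can be verified from inside the VM. This minimal sketch walks up from the data directory to the nearest mount point and compares it with the mount point of `/`; `/var/lib/mysql` is assumed as the usual MySQL/MariaDB data directory, and the helper names are illustrative. Note that `os.path.ismount` compares device numbers, so a bind mount from the same filesystem would not be detected as a separate volume.

```python
import os

def mount_point(path):
    """Walk up from `path` until reaching a directory that is a mount point."""
    path = os.path.realpath(path)
    while not os.path.ismount(path):
        parent = os.path.dirname(path)
        if parent == path:  # reached the filesystem root
            break
        path = parent
    return path

def on_separate_volume(data_dir):
    """True if data_dir lives on a filesystem other than the root filesystem."""
    return mount_point(data_dir) != mount_point("/")

if __name__ == "__main__":
    # Assumed default data directory; adjust for your database.
    data_dir = "/var/lib/mysql"
    status = "separate volume" if on_separate_volume(data_dir) else "ON ROOT FILESYSTEM"
    print(data_dir, "->", mount_point(data_dir), status)
```

If the check reports the root filesystem, the data directory should be moved to a dedicated volume before relying on volume snapshots as the backup.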

However, admins should pay special attention to the backup solutions they use in such setups. If you have any kind of S3-compatible storage available, it is a good idea to use backup software that supports it (Figure 4), because the backup application can then create local backups directly, which enables a fast and uncomplicated restore in case of problems.

At the same time, the same software can create a backup (even an encrypted one) at another location, such as Amazon's own S3 service. This kind of backup gives you security even if the original data center were to burn to the ground. Amazon itself provides instructions for this type of setup [1].

Containers: Not a Special Case

Most of the examples thus far relate to classic full virtualization. However, this does not mean that the rules and principles described here are any less applicable to containers. In fact, they apply even more, because in my experience, cloud migrations that simply convert conventional applications into containers are not that common. Instead, the application is normally redesigned and developed from scratch as part of the migration to the cloud.

Accordingly, for containers, it is always a good idea to include only the actual user data, such as the content of a database, in your own backups (Figure 5). Data that can be restored from standard sources in an emergency, such as container images, does not belong in the container backup; in fact, it complicates the recovery of the data rather than making it easier.
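For Docker containers, the persistent data is usually found in the mounts listed by `docker inspect`. The sketch below reads that JSON output and returns the host paths of named volumes and bind mounts, skipping tmpfs mounts and the image's own filesystem, which are either volatile or reproducible from the image. Treating every bind mount as user data is an assumption: Bind mounts sometimes carry configuration that already lives in Git, so review the resulting list before adding it to a backup job. The function name `backup_sources` is illustrative.

```python
import json
import sys

def backup_sources(inspect_json):
    """Return host paths of a container's mounts that hold persistent data.

    Expects the JSON printed by `docker inspect <container>` (a list with one
    object per container). Named volumes and bind mounts are kept; tmpfs
    mounts are skipped because their contents do not survive a restart.
    """
    sources = []
    for container in json.loads(inspect_json):
        for mount in container.get("Mounts", []):
            if mount.get("Type") in ("volume", "bind"):
                sources.append(mount["Source"])
    return sources

if __name__ == "__main__":
    # Usage: docker inspect my-db | python3 backup_sources.py
    print("\n".join(backup_sources(sys.stdin.read())))
```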

Conclusions

Backups in clouds differ from those in conventional setups. From the provider's perspective, automation plays a major role. If the automation, in the form of a boot infrastructure, can be restored quickly, bootstrapping the new platform is also fast, and only the specific configuration files of the individual cloud services and their metadata need to be included in the backup. Everything else is generic and can be reproduced through automation whenever needed, as often as desired.

On the other hand, the cloud is less comfortable for customers. Whereas in the past the provider would take care of backups, do-it-yourself is now the order of the day, unless you make a special agreement with the provider for this purpose. Large public cloud providers in particular usually leave backups to the customer, so customers ultimately have to rely on their own initiative.

For large parts of a setup, the same applies as for the underlay: Automation, orchestration, and CI/CD are the keys to success. In the end, only the genuinely persistent data of a virtual environment should be backed up (e.g., customer data from a database).

All virtual instances of the setup, infrastructure, and software required in the environment must be reproducible at all times. If you take this advice to heart, you will usually have very small backup volumes, without spoiling your fun in the cloud.

Clearly, backup habits from the "back up everything" school have no place in the cloud. Backups of whole VMs or complete containers eat up large amounts of disk space by repeatedly saving the same data, and they cannot be restored any faster than backups made and restored with cloud tools. Backing up everything is therefore simply pointless.

Consistent use of cloud tools also makes life easier in other situations. Database updates, for example, are far easier if the database software and the data records are kept separate: You create a new DB instance with updated software and simply import the existing dataset, and you're done.