Accelerate web applications with Varnish Cache

Horsepower

Successful web applications grow organically and eventually reach the point where a speed bottleneck occurs in the system. Websites that are too slow usually have high bounce rates. Sometimes they also suffer unintentional denial-of-service attacks from normal visitors who repeatedly click Refresh or Reload when load times are too long.

Because the professional environment often dictates the programming language and the framework used, whether the bottleneck happens sooner or later is out of the developer's hands. (See the "Classic" boxout.) However, with the programmable caching HTTP reverse proxy Varnish Cache, you can speed up the delivery of web pages with performance that is usually limited only by the speed of the network itself.

If a website becomes too slow and the bottleneck is the web application server that generates the HTML code, the usual solution is more or faster hardware. If the web application runs on a hosting service like Amazon Web Services or Heroku, you can solve the problem through liberal use of your credit card. Once the latter is exhausted, or if the cloud is not an option or is against your problem-solving principles, the search for better solutions begins.

Whodunit?

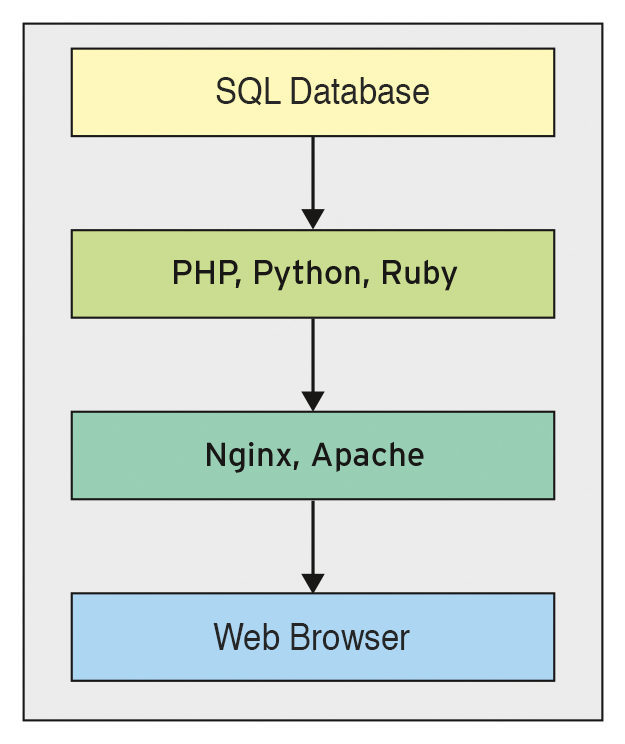

To understand a performance problem, you first need to trace the chain from the web browser's request to the database record. Web applications usually store data in an SQL database. The web application, often written in PHP, Python, or Ruby, fetches this data and turns it into an HTML page, which a web server (Apache or Nginx) delivers to the web browser. The whole chain up to and including the web server is also referred to as the back end (Figure 1).

Start by optimizing the database server and, if it is an SQL system, the SQL queries. Once you have exhausted this path, you can devote your attention to caching in the application, often in the form of fragment caching. In this approach, the web application stores parts of the HTML code it generates in a fast key-value store such as Redis [1] and retrieves them from there on later requests.

You can also work with HTTP caching [2]. The web application tells the web browser that the HTML page or image it has just delivered is valid for a certain period of time, and the web server passes on this information unchanged. Alternatively, you can use an ETag [3]: On repeat visits, the browser asks the web server whether its locally stored copy is still current.

Quick Broker

If you work with these techniques and keep running into these problems, you will eventually come across Varnish HTTP Cache [4], a reverse proxy that resides between the web server and the Internet. This sounds unspectacular at first; after all, an Nginx or Apache web server can also be configured as a reverse proxy. However, Varnish is far more flexible, because it can be programmed and configured with the high-performance Varnish Configuration Language (VCL) [5].

Varnish primarily targets websites with high traffic volumes. Of course, the reverse proxy will also work for a small website, but the initial overhead will probably not pay off. The goal of Varnish is to field as many requests as possible autonomously. Only if it can't make any progress does it access the web server or the web application server behind it.
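As a rough sketch of this division of labor, a minimal VCL file only has to name the back end; Varnish's built-in logic then answers from the cache wherever it can. The back-end address 127.0.0.1:8080 and the X-Cache header name are assumptions chosen for illustration, and the syntax assumes Varnish 4.0 or later.

```vcl
vcl 4.0;

# Assumed back end: a web server listening locally on port 8080
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_deliver {
    # obj.hits counts how often this object was served from the cache
    if (obj.hits > 0) {
        set resp.http.X-Cache = "HIT";
    } else {
        set resp.http.X-Cache = "MISS";
    }
}
```

With a header like this in place, a look at the response headers in the browser or with curl shows whether a request ever reached the back end.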

Varnish cannot do anything that the other elements in the chain (i.e., the web server and the web application server) cannot do. In fact, in direct comparison with the application server, Varnish does very few things, but it does them well, which here means very quickly. It also works well as part of a cluster or a globally distributed setup.

Because Varnish uses fewer resources and works faster, the proxy accelerates the entire system. For this reason, many content delivery network providers, which seek to deliver their data as quickly as possible across continents, are probably already working with Varnish. A few VCL examples show how this works and where Varnish can play out its speed.

Redirect from HTTP to HTTPS

Because data transmission via HTTPS is the standard in the age of HTTP/2, the website needs a redirect from HTTP to HTTPS (Listing 1). The requesting web browser then receives a 301 (Moved Permanently) response that points to the new HTTPS URL.

Listing 1: Redirect from HTTP to HTTPS

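    sub vcl_recv {
        # Assumes a TLS terminator in front of Varnish sets X-Forwarded-Proto
        if (req.http.host ~ "example.com" &&
            req.http.X-Forwarded-Proto !~ "(?i)https") {
            # 750 is an arbitrary internal status code, handled in vcl_synth
            return (synth(750, "Moved Permanently"));
        }
    }

    sub vcl_synth {
        if (resp.status == 750) {
            # Turn the internal status into a real 301 redirect
            set resp.status = 301;
            set resp.http.Location = "https://" + req.http.host + req.url;
            return (deliver);
        }
    }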

Caching Time-to-Live

Caching content and delivering it later to identical requests is Varnish's bread and butter. If you want the reverse proxy to serve a web page persistently for another five minutes after the first request, the simple VCL code snippet

    sub vcl_backend_response {
        set beresp.ttl = 5m;
    }

will accomplish this time-to-live (TTL) configuration.
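TTLs do not have to be uniform. The following sketch keeps static assets in the cache longer than HTML pages; the list of file extensions and the one-hour lifetime are illustrative assumptions, not recommendations.

```vcl
sub vcl_backend_response {
    if (bereq.url ~ "\.(css|js|png|jpg|gif|svg)$") {
        # Static assets change rarely, so keep them for an hour
        set beresp.ttl = 1h;
    } else {
        # Everything else is cached for five minutes
        set beresp.ttl = 5m;
    }
}
```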

No Cache for Logged-In Users

If a website displays customized content for a logged-in user, Varnish must not cache this page. In these cases, it can react to certain cookies set by the web application. In the example in Listing 2, the cookie is logged_in and the associated domain is example.com.

Listing 2: Cookie for Registered Users

    sub vcl_recv {
        # Pass requests from logged-in users straight to the back end
        if (req.http.host ~ "example.com" &&
            req.http.Cookie ~ "logged_in") {
            return (pass);
        }

        # Strip cookies from all other requests so they can be cached
        unset req.http.Cookie;
    }

Availability Without a Back End

If the back end becomes very slow or fails completely (e.g., because of a software update or a denial-of-service attack), Varnish should still deliver the most recently cached version of the website.

In the configuration shown in Listing 3, Varnish checks every three seconds to see whether the back end, 127.0.0.1:8080, is still alive and whether it responds in less than 50ms. The back end counts as healthy as long as at least 8 of the last 10 probes succeed. If this is not the case, Varnish will continue to deliver the old cached content for a maximum of 12 hours.

Listing 3: Heart Rate Monitor

    backend default {
        .host = "127.0.0.1";
        .port = "8080";
        .probe = {
            .url = "/";
            .timeout = 50ms;
            .interval = 3s;
            # Healthy if at least 8 of the last 10 probes succeed
            .window = 10;
            .threshold = 8;
        }
    }

    sub vcl_backend_response {
        # Keep objects for up to 12 hours past their TTL
        set beresp.grace = 12h;
    }
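A long grace period can also mean that visitors see stale pages while the back end is perfectly fine. One common pattern caps grace for healthy back ends, so the full 12 hours from Listing 3 apply only when the probe reports a failure. The sketch below assumes Varnish 6.0 or later (where req.grace is available) and the bundled std module; the 10-second cap is an illustrative value.

```vcl
import std;

sub vcl_recv {
    # While the probe reports the back end as healthy,
    # serve stale content for at most 10 seconds
    if (std.healthy(req.backend_hint)) {
        set req.grace = 10s;
    }
}
```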

Changes on the Fly

The reverse proxy does not just deliver web pages and other resources unchanged; it can also modify them in passing. The software dynamically changes or deletes cookies and adjusts HTTP headers.
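A minimal sketch of such modifications might look like the following. The file extensions, the headers removed, and the X-Served-By header are assumptions for illustration only.

```vcl
sub vcl_backend_response {
    # Static assets do not need cookies; without Set-Cookie
    # the response becomes cacheable
    if (bereq.url ~ "\.(css|js|png|jpg|gif|svg)$") {
        unset beresp.http.Set-Cookie;
    }
}

sub vcl_deliver {
    # Remove headers that reveal details about the back end
    unset resp.http.X-Powered-By;
    unset resp.http.Server;

    # Add a header of your own on the way out (hypothetical name)
    set resp.http.X-Served-By = "varnish";
}
```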

Varnish also temporarily stores parts of the website and loads other parts as needed from the back end. In an online store, for example, the software dynamically retrieves the navigation with the user data and the shopping cart from the back end and reads the representation of the current product from the cache. Varnish then injects the dynamic part into the static part.
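The mechanism Varnish offers for this kind of assembly is Edge Side Includes (ESI). In the sketch below, the URL patterns /products/ and /esi/cart are hypothetical; the product page delivered by the back end is assumed to contain a tag such as <esi:include src="/esi/cart"/> at the spot where the dynamic part belongs.

```vcl
sub vcl_backend_response {
    # Hypothetical URL scheme: product pages contain <esi:include> tags
    if (bereq.url ~ "^/products/") {
        set beresp.do_esi = true;   # parse the page for ESI tags
        set beresp.ttl = 5m;        # cache the static product frame
    }

    # Hypothetical fragment with per-user data: never cache it
    if (bereq.url ~ "^/esi/cart") {
        set beresp.uncacheable = true;
    }
}
```

On delivery, Varnish fetches the fragment for each request and inserts it into the cached page, so only the small dynamic part hits the back end.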

Compression

Much of the speed gained with Varnish is lost if text files are transferred uncompressed. Varnish supports Gzip by default but not, as yet, the more modern Brotli [6]. The reasoning is that compression belongs on the web server; after all, Varnish is only a cache. You can still use optimum Brotli compression if the web server (Nginx or Apache) compresses the files and Varnish caches them as is.
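If the back end delivers text uncompressed, Varnish can be told to apply Gzip before storing the object. This is only a sketch; the list of content types is an illustrative assumption and should be adapted to the site.

```vcl
sub vcl_backend_response {
    # Compress text-like responses that arrive uncompressed
    if (beresp.http.Content-Type ~ "text/|application/(javascript|json)" &&
        !beresp.http.Content-Encoding) {
        set beresp.do_gzip = true;
    }
}
```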

If you are looking for further optimizations, you can also compress static files for the web server offline. This setup works for Gzip with Zopfli [7] and for Brotli at compression level 10, but both are highly CPU-intensive and take a long time. They are therefore practical only for offline optimization of the content.

Conclusions

Whereas Nginx and Apache are family sedans that fit the entire family and even the dog and are relatively cheap to maintain, Varnish is a race car. No matter how much you dress up your sedan, it can't compete on the race track.